visionOS Pathway

Start your path

Pathways are simple and easy-to-navigate collections of the videos, documentation, and resources you'll need to start building great apps and games.

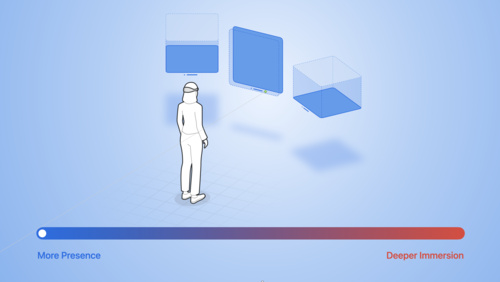

Apple Vision Pro offers an infinite canvas to explore, experiment, and play, giving you the freedom to completely rethink your experience for spatial computing. People can interact with your app while staying connected to their surroundings, or immerse themselves completely in a world of your creation. Best of all, familiar frameworks like SwiftUI, UIKit, RealityKit, and ARKit can help you build apps for visionOS — no matter your prior experience developing for Apple platforms.

What you’ll learn

- How to plan and create a visionOS app for Apple Vision Pro

- Where to download Apple design tools and resources

- How to benefit from the Human Interface Guidelines

New to Apple?

Get started as an Apple developer

Have a visionOS question?

Check out the Apple Developer Forums

Learn the building blocks of spatial computing


Discover the fundamentals that make up spatial computing, like windows, volumes, and spaces. Then, find out how familiar frameworks can help you use these elements to build engaging and immersive experiences.

- Adding 3D content to your app

- Creating your first visionOS app

- Creating fully immersive experiences in your app

Learn more

Get started with building apps for spatial computing

Develop your first immersive app

Create accessible spatial experiences

Meet SwiftUI for spatial computing

Meet UIKit for spatial computing

Design for visionOS


Find out how you can design great apps, games, and experiences for spatial computing. Discover brand-new inputs and components. Dive into depth and scale. Add moments of immersion. Create spatial audio soundscapes. Find opportunities for collaboration and connection. And help people stay grounded to their surroundings while they explore entirely new worlds. Whether this is your first time designing spatial experiences or you’ve been building fully immersive apps for years, learn how you can create magical hero moments, enchanting soundscapes, human-centric UI, and more — all with visionOS.

Principles of spatial design

Design for spatial user interfaces

Design for spatial input

Design spatial SharePlay experiences

Explore immersive sound design

Design considerations for vision and motion


Create for visionOS


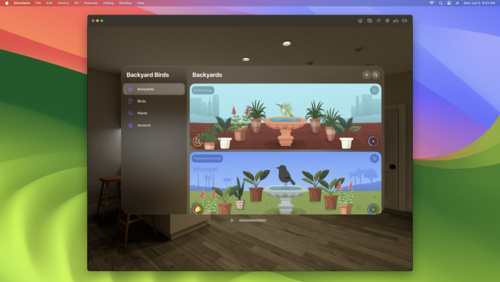

When you build apps for visionOS, you can mix and match windows, volumes, and spaces at any time in your app to create the right moments for your content.

Note: People can also run your existing iPadOS or iOS app as a compatible app in visionOS. Your app appears as a single, scalable window in the person’s surroundings.

Get the proper tools

Start the software development process by downloading Xcode — Apple’s integrated development environment. Xcode offers a complete set of tools to develop software, including project management support, code editors, visual editors for your UI, debugging tools, simulators for different devices, tools for assessing performance, and much more. Xcode also includes a complete set of system code modules — called frameworks — for developing your software.

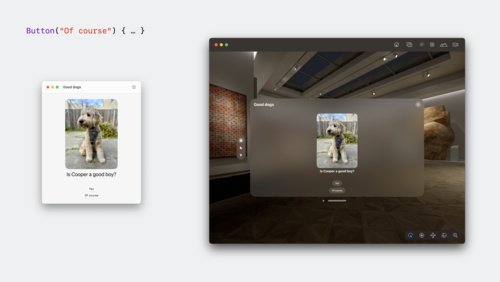

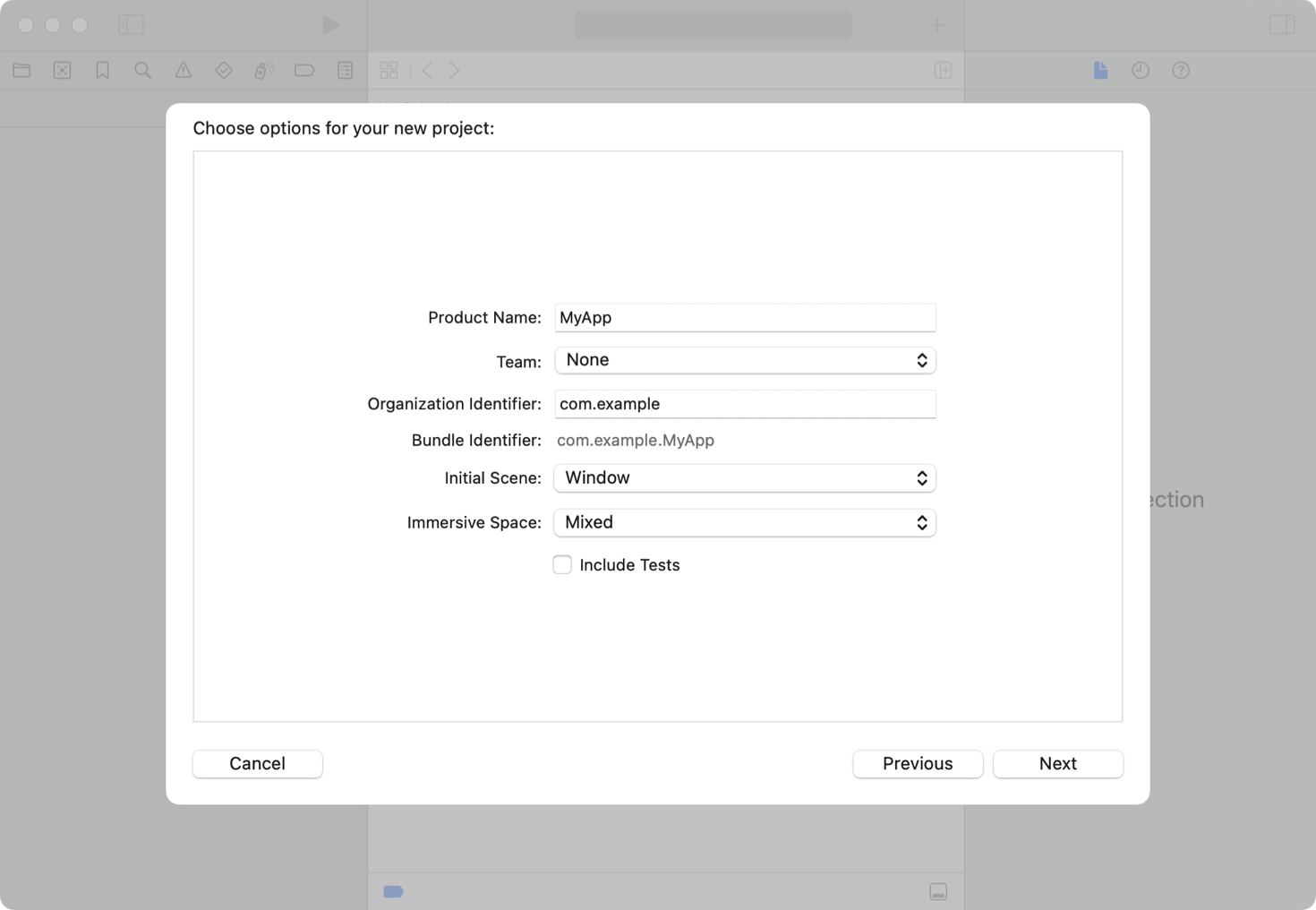

To create a new project in Xcode, choose File > New > Project and follow the prompts to create a visionOS app. All new projects use SwiftUI, which offers a modern declarative programming model to create your app’s core functionality.

SwiftUI works seamlessly with Apple’s data management technologies to support the creation of your content. The Swift standard library and Foundation framework provide structural types such as arrays and dictionaries, and value types for strings, numbers, dates, and other common data values. For any custom types you define, adopt Swift’s Codable support to persist those types to disk. If your app manages larger amounts of structured data, SwiftData, Core Data, and CloudKit offer object-oriented models to manage and persist your data.
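As a minimal sketch of the Codable approach described above, the following persists a custom type to disk as JSON and reads it back. The `Note` type, its fields, and the helper function names are hypothetical and chosen only for illustration.

```swift
import Foundation

// Hypothetical custom type; Codable conformance is synthesized by the compiler.
struct Note: Codable, Equatable {
    var title: String
    var wordCount: Int
}

// Encodes the notes as JSON and writes them atomically to the given file URL.
func save(_ notes: [Note], to url: URL) throws {
    let data = try JSONEncoder().encode(notes)
    try data.write(to: url, options: .atomic)
}

// Reads the file at the given URL and decodes it back into notes.
func loadNotes(from url: URL) throws -> [Note] {
    let data = try Data(contentsOf: url)
    return try JSONDecoder().decode([Note].self, from: data)
}
```

For larger or more structured data sets, the frameworks named above are likely a better fit than hand-rolled JSON files.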

You can also use Unity’s robust, familiar authoring tools to create new apps and games. Get access to all the benefits of visionOS, like passthrough and Dynamically Foveated Rendering, in addition to familiar Unity features like AR Foundation.

Add a new dimension to your interface

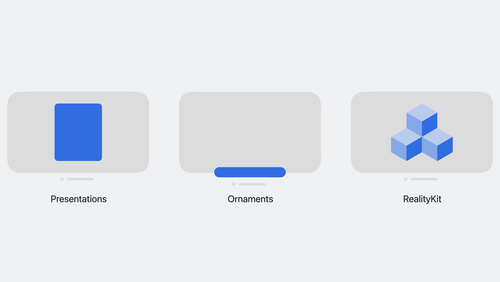

When building your app, start with a window and add elements as appropriate to help immerse people in your content. Add a volume to showcase 3D content, or increase the level of immersion using a Full Space. The mixed style configures the space to display passthrough, but you can apply the progressive or full style to increase immersion and minimize distractions.
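As a rough sketch of how a window, a volume, and a Full Space might sit side by side in one SwiftUI app, consider the following. The scene identifiers, the `Globe` asset name, and the view names are hypothetical placeholders, and the sizes are arbitrary.

```swift
import SwiftUI
import RealityKit

@main
struct SpatialSketchApp: App {
    // Tracks the current immersion style; the space starts in the mixed style.
    @State private var immersionStyle: ImmersionStyle = .mixed

    var body: some Scene {
        // A regular window: the starting point for the app.
        WindowGroup(id: "main") {
            MainView()
        }

        // A volume for showcasing 3D content.
        WindowGroup(id: "globe") {
            Model3D(named: "Globe") // hypothetical asset in the app bundle
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.6, height: 0.6, depth: 0.6, in: .meters)

        // A Full Space that can use the mixed, progressive, or full style.
        ImmersiveSpace(id: "space") {
            RealityView { content in
                // Placeholder entity; real content would be added here.
                content.add(Entity())
            }
        }
        .immersionStyle(selection: $immersionStyle, in: .mixed, .progressive, .full)
    }
}

struct MainView: View {
    @Environment(\.openWindow) private var openWindow
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace

    var body: some View {
        VStack {
            Button("Show Globe") { openWindow(id: "globe") }
            Button("Enter Space") {
                // Opening a space is asynchronous, so run it in a task.
                Task { await openImmersiveSpace(id: "space") }
            }
        }
        .padding()
    }
}
```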

- Add depth to your windows: Apply depth-based offsets to views to emphasize parts of your window, or to indicate a change in modality. Incorporate 3D objects directly into your view layouts to place them side by side with your 2D views.

- Add hover effects to custom views: Highlight custom elements when someone looks at them using hover effects. Customize the behavior of your hover effects to achieve the look you want.

- Implement menus and toolbars using ornaments: Place frequently used tools and commands on the outside edge of your windows using ornaments.
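The three techniques in this list can be sketched in a single SwiftUI view. This is an illustrative example only: the view name, labels, and offset value are assumptions, and the right hover effect for a custom element depends on its shape and role.

```swift
import SwiftUI

struct CanvasView: View {
    var body: some View {
        VStack(spacing: 24) {
            // Depth: push the title toward the viewer along the z-axis.
            Text("Featured")
                .font(.largeTitle)
                .offset(z: 20)

            // Hover effect: highlight a custom element when someone looks at it.
            Label("Custom element", systemImage: "star")
                .padding()
                .background(.regularMaterial, in: Capsule())
                .hoverEffect(.highlight)
        }
        .padding()
        // Ornament: place frequently used commands along the bottom edge of the window.
        .ornament(attachmentAnchor: .scene(.bottom)) {
            HStack {
                Button("Undo") { }
                Button("Redo") { }
            }
            .padding()
            .glassBackgroundEffect()
        }
    }
}
```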

RealityKit plays an important role in visionOS apps, and you use it to manage the creation and animation of 3D objects in your apps. Create RealityKit content programmatically, or use Reality Composer Pro to build entire scenes that contain all the objects, animations, sounds, and visual effects you need. Include those scenes in your windows, volumes, or spaces using a RealityView. In addition, take advantage of other 3D features in your apps:

- Adopt MaterialX shaders for dynamic effects: MaterialX is an open standard supported by leading film, visual effects, entertainment, and gaming companies. Use existing tools to create MaterialX shaders, and integrate them into your RealityKit scenes using Reality Composer Pro.

- Store 3D content in USDZ files: Build complex 3D objects and meshes using your favorite tools and store them as USDZ assets in your project. Make nondestructive changes to your assets in Reality Composer Pro and combine them into larger scenes.

- Create previews of your 3D content in Xcode: Preview SwiftUI views with 3D content directly from your project window. Specify multiple camera positions for your Xcode previews to see your content from different angles.
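To make the RealityView workflow concrete, here is a minimal sketch that creates RealityKit content programmatically and previews it in Xcode. The view name, sphere size, and color are arbitrary choices; a scene built in Reality Composer Pro would instead be loaded from the project's content bundle.

```swift
import SwiftUI
import RealityKit

struct SphereView: View {
    var body: some View {
        RealityView { content in
            // Build a simple 10 cm sphere in code rather than loading an asset.
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            content.add(sphere)
        }
    }
}

// Renders the view with its 3D content in the Xcode preview canvas.
#Preview {
    SphereView()
}
```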

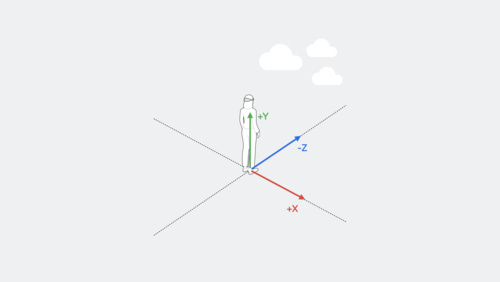

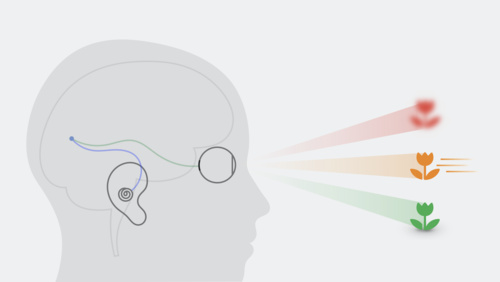

Devise straightforward interactions

In visionOS, people interact with apps primarily using their eyes and hands. With an indirect gesture, a person looks at an object, and then selects it by tapping a finger to their thumb. With a direct gesture, the person’s finger interacts with the object in 3D space. When handling input in your app:

- Adopt the standard system gestures: Rely on tap, swipe, drag, touch and hold, double-tap, zoom, and rotate gestures for the majority of interactions with your app. SwiftUI and UIKit provide built-in support for handling these gestures across platforms.

- Add support for external game controllers: Game controllers offer an alternative form of input to your app. The system automatically directs input from connected wireless keyboards, trackpads, and accessibility hardware to your app’s event-handler code. For game controllers, add support explicitly using the Game Controller framework.

- Create custom gestures with ARKit: The system uses ARKit to facilitate interactions with the person’s surroundings. When your app moves to a Full Space, you can request permission to retrieve the position of the person’s hands and fingers and use that information to create custom gestures.
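As one possible illustration of adopting a standard system gesture on 3D content, the sketch below makes a RealityKit entity respond to a tap, whether it arrives as an indirect (look and pinch) or direct gesture. The view name and the scale factor are assumptions made for the example.

```swift
import SwiftUI
import RealityKit

struct TappableBoxView: View {
    var body: some View {
        RealityView { content in
            let box = ModelEntity(
                mesh: .generateBox(size: 0.2),
                materials: [SimpleMaterial(color: .orange, isMetallic: false)]
            )
            // An entity needs an input target and collision shapes to receive gestures.
            box.components.set(InputTargetComponent())
            box.generateCollisionShapes(recursive: false)
            content.add(box)
        }
        .gesture(
            SpatialTapGesture()
                .targetedToAnyEntity()
                .onEnded { value in
                    // Grow the tapped entity slightly as visible feedback.
                    value.entity.scale *= 1.2
                }
        )
    }
}
```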

In a Full Space, ARKit provides additional services to support content-related interactions. Detect surfaces and known images in the person’s surroundings and attach anchors to them. Obtain a mesh of the surroundings and add it to your RealityKit scene to enable interactions between your app’s content and real-world objects. Determine the position and orientation of Apple Vision Pro relative to its surroundings and add world anchors to place content.
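The surface detection described above might look roughly like the following sketch. It assumes the app is already in a Full Space and that the person grants the required world-sensing permission; the `PlaneTracker` class name and the log messages are hypothetical.

```swift
import ARKit

final class PlaneTracker {
    private let session = ARKitSession()
    private let planes = PlaneDetectionProvider(alignments: [.horizontal, .vertical])

    // Starts plane detection and logs anchors as ARKit reports them.
    func run() async {
        // Plane detection isn't available everywhere (for example, in Simulator).
        guard PlaneDetectionProvider.isSupported else { return }

        do {
            try await session.run([planes])
        } catch {
            print("ARKit session failed to start: \(error)")
            return
        }

        for await update in planes.anchorUpdates {
            if update.event == .removed {
                print("Plane \(update.anchor.id) removed")
            } else {
                // Added or updated: a real app would create or move content here.
                print("Plane \(update.anchor.id): \(update.anchor.classification)")
            }
        }
    }
}
```

A caller would typically create one tracker when the Full Space opens and call `run()` from a task tied to that space's lifetime.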

Create next-level audio and video

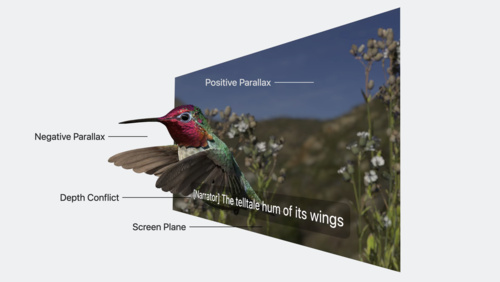

Apple Vision Pro supports stereoscopic video to help movies and other entertainment leap off the screen and into the person’s surroundings. Spatial Audio is the default experience in visionOS, so think about how you want to take advantage of that experience.

- Update video assets for 3D: Take movie night to the next level by playing 3D movies in an immersive 3D environment. The QuickTime file format supports the inclusion of content that appears to jump right off the screen. Play your movies using AVKit and AVFoundation. Include atoms for stereoscopic content in your movie files.

- Incorporate support for Spatial Audio: Build your app’s music player using AVFAudio, which contains the audio-specific types from the AVFoundation framework. Take your audio into another dimension using PHASE, which supports the creation of complex, dynamic Spatial Audio experiences in your games and apps.

- Stream live or recorded content: Learn how to create streamed content and deploy it to your server using HTTP Live Streaming. Play back that streamed content from your app using AVFoundation.
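For the streaming case, a minimal playback sketch with AVKit and AVFoundation could look like this. The stream URL is a placeholder, not a real endpoint, and the view name is hypothetical; an app that wants the full system playback experience may prefer a different player presentation.

```swift
import SwiftUI
import AVKit

struct StreamPlayerView: View {
    @State private var player: AVPlayer?

    var body: some View {
        VideoPlayer(player: player)
            .onAppear {
                // Placeholder HTTP Live Streaming playlist URL.
                guard let url = URL(string: "https://example.com/stream/master.m3u8") else { return }
                let newPlayer = AVPlayer(url: url)
                player = newPlayer
                newPlayer.play()
            }
            .onDisappear {
                // Stop playback when the view goes away.
                player?.pause()
            }
    }
}
```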

Embrace inclusion

Creating an inclusive app ensures that everyone can access your content. Apple technologies support inclusivity in many different ways. Make sure to support these technologies throughout your app. Find out more about ensuring inclusion across all platforms in the HIG.

- Update accessibility labels and navigation: Apple builds accessibility support right into its technologies, but screen readers and other accessibility features rely on the information you provide to create the accessible experience. Review accessibility labels and other descriptions to make sure they provide helpful information, and make sure focus-based navigation is simple and intuitive. See Accessibility.

- Support alternative ways to access features: Give people alternative ways to select and act on your content, such as menu commands or game controllers. Add accessibility components to RealityKit entities so people can navigate and select them using assistive technologies.

- Add VoiceOver announcements: When VoiceOver is active in visionOS, people navigate their apps using hand gestures. If they enable Direct Gesture mode to interact with your app instead, announcements make sure they can still follow interactions with your content.

- Include captions for audio content: Captions are a necessity for some, but are practical for everyone in certain situations. For example, they’re useful to someone watching a video in a noisy environment. Include captions not just for text and dialogue, but also for music and sound effects in your app. Make sure captions you present in a custom video engine adopt the system appearance.

- Consider the impacts of vision and motion: Motion effects can be jarring, even for people who aren’t sensitive to motion. Limit the use of effects that incorporate rapid movement, bouncing or wave-like motion, zooming animations, multi-axis movement, spinning, or rotations. When the system accessibility settings indicate reduced motion is preferred, provide suitable alternatives. See Human Interface Guidelines > Motion.

For additional information about making apps accessible in visionOS, see Improving accessibility support in your visionOS app.
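Several of the points above can be sketched together: an accessibility component on a RealityKit entity, an accessibility label on an icon-only control, a VoiceOver announcement, and a reduced-motion alternative. The view name, labels, and messages below are illustrative assumptions.

```swift
import SwiftUI
import RealityKit
import Accessibility

struct CloudView: View {
    @Environment(\.accessibilityReduceMotion) private var reduceMotion
    @State private var isRaining = false

    var body: some View {
        VStack {
            RealityView { content in
                let cloud = ModelEntity(
                    mesh: .generateSphere(radius: 0.1),
                    materials: [SimpleMaterial(color: .white, isMetallic: false)]
                )
                // Describe the entity so assistive technologies can find and select it.
                var accessibility = AccessibilityComponent()
                accessibility.isAccessibilityElement = true
                accessibility.label = "Cloud"
                accessibility.traits = [.button]
                cloud.components.set(accessibility)
                content.add(cloud)
            }

            Button {
                // Skip the animation when the person prefers reduced motion.
                if reduceMotion {
                    isRaining.toggle()
                } else {
                    withAnimation { isRaining.toggle() }
                }
                // Announce the change so VoiceOver users can follow the interaction.
                let message: String = isRaining ? "Rain started" : "Rain stopped"
                AccessibilityNotification.Announcement(message).post()
            } label: {
                Image(systemName: "cloud.rain")
            }
            // Icon-only controls need an explicit label for screen readers.
            .accessibilityLabel("Toggle rain")
        }
    }
}
```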

Test and tune

There are multiple ways you can test your app during development and make sure it runs well on Apple Vision Pro.

- Test and debug your app thoroughly: During development, debug problems as they arise using the built-in Xcode debugger. Build automated test suites using XCTest and run them during every build to validate that new code works as expected. Run those tests under different system loads to determine how your app behaves.

- Be mindful of how much work you do: Make sure the work your app performs offers a tangible benefit. Optimize algorithms to minimize your app’s consumption of CPU and GPU resources. Identify bottlenecks and other performance issues in your code using the Instruments app that comes with Xcode. See Creating a performance plan for your visionOS app.

- Adopt a continuous integration (CI) workflow: Adopt a CI mindset by making sure every commit maintains the quality and stability of your code base. Run performance-related tests as part of your test suite. Use the continuous integration system of Xcode Cloud to automate builds, test cycles, and the distribution of your apps to your QA teams.
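As a small sketch of an automated test suite that also covers performance, the XCTest case below checks a piece of app logic and measures its running time. The `clampedImmersion` function is a hypothetical stand-in for real app code.

```swift
import XCTest

// Hypothetical app logic under test: keeps an immersion amount within 0...1.
func clampedImmersion(_ value: Double) -> Double {
    min(max(value, 0), 1)
}

final class ImmersionTests: XCTestCase {
    // Functional test: values outside the range are clamped, others pass through.
    func testClampsOutOfRangeValues() {
        XCTAssertEqual(clampedImmersion(-0.5), 0)
        XCTAssertEqual(clampedImmersion(1.5), 1)
        XCTAssertEqual(clampedImmersion(0.25), 0.25)
    }

    // Performance test: XCTest runs the block several times and records timings.
    func testClampingPerformance() {
        measure {
            for step in 0..<10_000 {
                _ = clampedImmersion(Double(step) / 5_000)
            }
        }
    }
}
```

Tests like these can run locally in Xcode and as part of an Xcode Cloud workflow.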

Dive deeper into SwiftUI and RealityKit

For more on SwiftUI and RealityKit, explore a dedicated series of sessions focusing on SwiftUI scene types to help you build great experiences across windows, volumes, and spaces. Get to know the Model3D API, learn how you can add depth and dimension to your app, and find out how to render 3D content with RealityView. We’ll also help you get ready to launch into ImmersiveSpace, a new SwiftUI scene type that lets you make great immersive experiences for visionOS. Learn best practices for managing your scene types, increasing immersion, and building an "out of this world" experience.

Elevate your windowed app for spatial computing

Take SwiftUI to the next dimension

Go beyond the window with SwiftUI


From there, learn how you can bring engaging and immersive content to your app with RealityKit. Get started with RealityKit entities, components, and systems, and find out how to add 3D models and effects to your project. We’ll show you how you can embed your content into an entity hierarchy, blend virtual content and the real world using anchors, bring particle effects into your apps, add video content, and create more immersive experiences with portals.


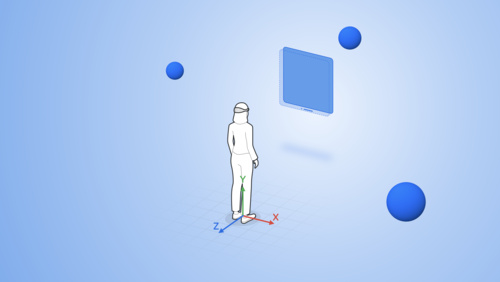

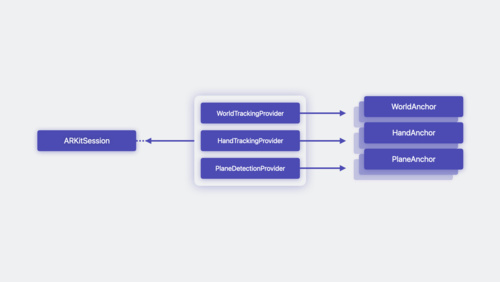

Rediscover ARKit

visionOS uses ARKit algorithms to handle features like persistence, world mapping, segmentation, matting, and environment lighting. These algorithms are always running, allowing apps and games to automatically benefit from ARKit while in the Shared Space. Once your app opens a dedicated Full Space, it can take advantage of ARKit APIs and blend virtual content with the real world.

We’ll share how this framework has been completely reimagined to let you build interactive experiences — all while preserving privacy. Discover how you can make 3D content that interacts with someone’s room — whether you want to bounce a virtual ball off the floor or throw virtual paint on a wall. Explore the latest updates to the ARKit API and follow along as we demonstrate how to take advantage of hand tracking and scene geometry in your apps.


Explore more developer tools for visionOS


Apple offers a comprehensive suite of tools to help you build great apps, games, and experiences for visionOS. You’ve already explored Xcode above. Now find out how to take advantage of Reality Composer Pro in your 3D development workflow, and discover how you’ll be able to use Unity’s authoring tools to create great experiences for spatial computing.

Meet Reality Composer Pro

Discover a new way to preview and prepare 3D content for your visionOS apps. Available later this month, Reality Composer Pro leverages the power of Universal Scene Description (USD) to help you compose, edit, and preview assets, such as 3D models, materials, and sounds. We’ll show you how to take advantage of this tool to create immersive content for your apps, add materials to objects, and bring your Reality Composer Pro content to life in Xcode. We’ll also take you through the latest updates to USD on Apple platforms.

Meet Reality Composer Pro

Explore materials in Reality Composer Pro

Work with Reality Composer Pro content in Xcode

Explore the USD ecosystem


Get started with Unity

Learn how you can build visionOS experiences directly in Unity. Discover how Unity developers can use their existing 3D scenes and assets to build an app or game for visionOS. Thanks to deep integration between Unity and Apple frameworks, you can create an experience anywhere you can use RealityKit, whether you’re building 3D content for a window, volume, or the Shared Space. You also get all the benefits of building for Apple platforms, including access to native inputs, passthrough, and more. We’ll also show you how you can use Unity to create fully immersive experiences.

Learn about TestFlight and App Store Connect

App Store Connect will provide the tools you need to manage, test, and deploy your visionOS apps on the App Store. We’ll share basics and best practices for deploying your first spatial computing app, adding support for visionOS to an existing app, and managing compatibility. We’ll also show you how TestFlight for visionOS lets you test your apps and collect valuable feedback as you iterate.

Dive deeper into creating for visionOS


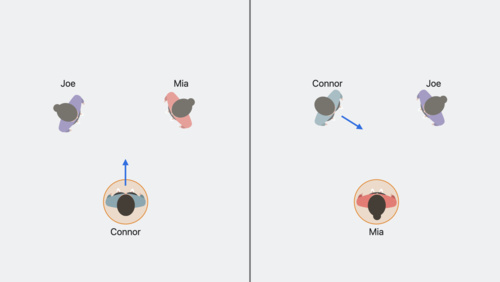

Build for collaboration, sharing, and productivity

Sharing and collaboration make up a central part of visionOS by offering experiences in apps and games that make people feel present as if they were in the same space. By default, people can share any app window with others on a FaceTime call, just like they can on Mac. But when you adopt the GroupActivities framework, you can create next-generation collaborative experiences.

Get started designing and building for SharePlay on Apple Vision Pro by learning about the types of shared activities you can create in your app. Discover how you can establish shared context between participants in your experiences and find out how you can support even more meaningful interactions in your app by supporting spatial Personas.
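To give a sense of what adopting the GroupActivities framework involves, here is a minimal sketch that defines a shared activity, offers to start it, and joins incoming sessions. The `DrawTogether` activity, its title, and the function names are hypothetical; support for spatial Personas requires additional configuration that this sketch leaves out.

```swift
import GroupActivities

// Hypothetical shared activity that participants on a FaceTime call can join.
struct DrawTogether: GroupActivity {
    var metadata: GroupActivityMetadata {
        var metadata = GroupActivityMetadata()
        metadata.title = "Draw Together"
        metadata.type = .generic
        return metadata
    }
}

// Asks the system whether to start sharing, then activates the activity.
func startSharing() async {
    let activity = DrawTogether()
    switch await activity.prepareForActivation() {
    case .activationPreferred:
        _ = try? await activity.activate()
    default:
        // Sharing is disabled or the person cancelled; continue locally.
        break
    }
}

// Waits for sessions started by any participant and joins each one.
func observeSessions() async {
    for await session in DrawTogether.sessions() {
        // A real app would set up messaging and shared state before joining.
        session.join()
    }
}
```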

Build games and media experiences

Discover how you can create truly immersive moments in your games and media experiences with visionOS. Games and media can take advantage of the full spectrum of immersion to tell incredible stories and connect with people in a new way. We’ll show you the pathways available for you to get started with game and narrative development for visionOS. Learn ways to render 3D content effectively with RealityKit, explore design considerations for vision and motion, and find out how you can create fully immersive experiences that transport people to a new world with Metal or Unity.

Build great games for spatial computing

Explore rendering for spatial computing

Design considerations for vision and motion

Discover Metal for immersive apps

Sound can also dramatically enhance the experience of your visionOS apps and games — whether you add an effect to a button press or create an entirely immersive soundscape. Learn how Apple designers select sounds and build soundscapes to create textural, immersive experiences in windows, volumes, and spaces. We’ll share how you can enrich basic interactions in your app with sound when you place audio cues spatially, vary repetitive sounds, and build moments of sonic delight into your app.

If your app or game features media content, we’ve got a series of sessions designed to help you update your video pipeline and build a great playback experience for visionOS. Learn how you can expand your delivery pipeline to support 3D content, and get tips and techniques for spatial media streaming in your app. We’ll also show you how to create engaging and immersive playback experiences with the frameworks and APIs that power video playback for visionOS.


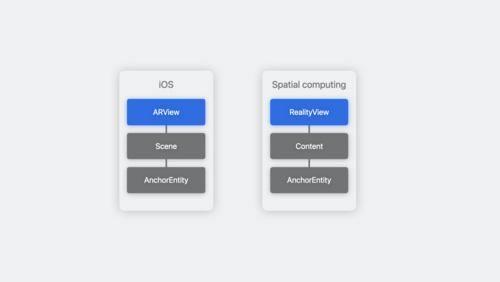

Run your iPad and iPhone apps in visionOS

Discover how you can run your existing iPadOS and iOS apps in visionOS. Explore how iPadOS and iOS apps operate on this platform, learn about framework dependencies, and find out about the Designed for iPad app interaction. When you’re ready to take your existing apps to the next level, we’ll show you how to optimize your iPad and iPhone app experience for the Shared Space and help you improve your visual treatment.

Run your iPad and iPhone apps in the Shared Space

Enhance your iPad and iPhone apps for the Shared Space


Go further


Once you have an app up and running, look for additional ways to improve the experience.

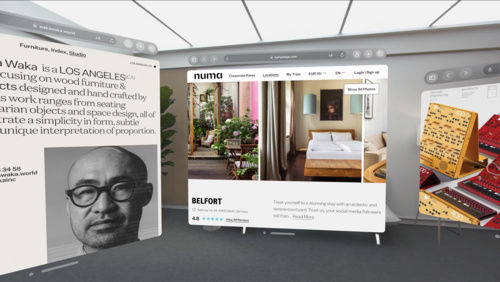

Build web experiences

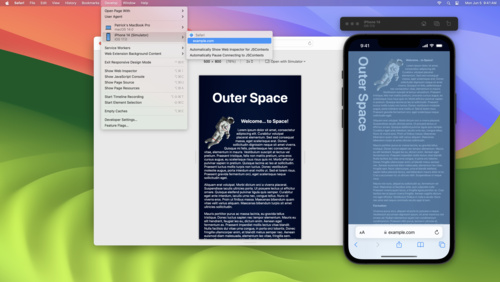

Discover the web for visionOS and learn how people can experience your web content in a whole new way. Explore the input model for this platform and learn how you can optimize your website for spatial computing. We’ll also share how emerging standards are helping shape 3D experiences for the web, dig into the latest updates to Safari extensions, and help you use the developer features in Safari to prototype and test your experiences for Apple Vision Pro.

Meet Safari for spatial computing

What’s new in Safari extensions

Rediscover Safari developer features

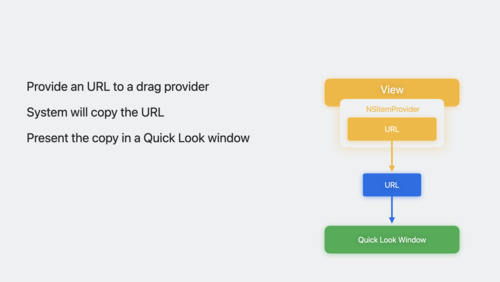

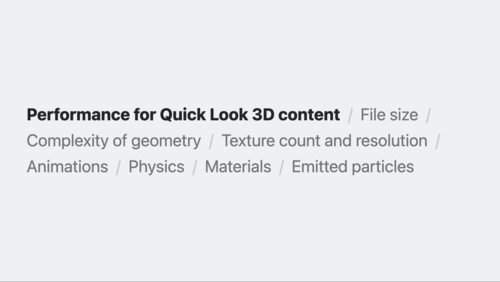

Whether you use Quick Look on the web or in your app, learn how you can add powerful previews for 3D content, spatial images and videos, and much more. We’ll share the different ways that the system presents these experiences, demonstrate how someone can drag and drop this content to create a new window in the Shared Space, and explore how you can access Quick Look directly within an app. We’ll also go over best practices when creating 3D content for Quick Look in visionOS, including important considerations for 3D quality and performance.