Retired Document

Important: This document may not represent best practices for current development. Links to downloads and other resources may no longer be valid.

Events (iOS)

Events in iOS represent fingers touching views of an application or the user shaking the device. One or more fingers touch down on one or more views, perhaps move around, and then lift from the view or views. As this is happening, iPhone’s Multi-Touch system registers these touches as events and sends them to the currently active application for processing. This range of possible touch behaviors, from the moment the first finger touches down on a view until the last finger lifts from that view, defines a multitouch sequence. Applications (and framework objects) analyze multitouch sequences, usually to determine whether they are gestures such as pinches and swipes.

The operating system may also generate motion events when users shake the device. These are delivered as discrete events to an application.

Objects Represent Fingers Touching a View

Fingers touching a view are represented by UITouch objects. Touch objects include information such as the view the finger is touching, the location of the finger in the view, a timestamp, and a phase. A touch object goes through several phases during a multitouch sequence, in this order:

UITouchPhaseBegan: The finger touched down on a view.

UITouchPhaseMoved: The finger moved on that view or an adjacent view.

UITouchPhaseEnded: The finger lifted from a view.

A touch object can also be in the Stationary phase (UITouchPhaseStationary) or be cancelled (UITouchPhaseCancelled). Touch objects persist through a multitouch sequence, but their state changes as the sequence progresses. An application packages touch objects in UIEvent objects when it delivers them to the view for handling.
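As a rough illustration of the information a touch object carries, the sketch below logs the view, location, timestamp, and phase of a single touch. The function name LogTouch is hypothetical and not part of UIKit; the properties and constants it reads are the standard UITouch ones.

```objc
#import <UIKit/UIKit.h>

// Hypothetical helper (not a UIKit API): logs what a UITouch knows about itself.
static void LogTouch(UITouch *touch) {
    UIView *view = touch.view;                       // view the finger is touching
    CGPoint location = [touch locationInView:view];  // location in that view's coordinates
    NSTimeInterval time = touch.timestamp;           // time the touch last changed

    NSString *phaseName = nil;
    switch (touch.phase) {
        case UITouchPhaseBegan:      phaseName = @"Began";      break;
        case UITouchPhaseMoved:      phaseName = @"Moved";      break;
        case UITouchPhaseStationary: phaseName = @"Stationary"; break;
        case UITouchPhaseEnded:      phaseName = @"Ended";      break;
        case UITouchPhaseCancelled:  phaseName = @"Cancelled";  break;
        default:                     phaseName = @"Other";      break;
    }

    NSLog(@"%@ at %@ in %@ (t=%.3f)",
          phaseName, NSStringFromCGPoint(location), view, time);
}
```

Because the same UITouch instance is reused for the whole sequence, calling a helper like this from successive event-handling methods should show the phase and location changing on one object rather than on a series of new ones.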

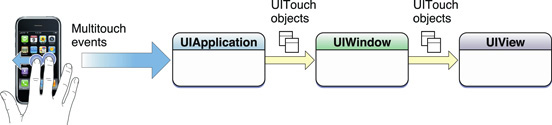

The Delivery of Touch Objects Follows a Defined Path

In the main event loop, the application object gets (raw) touch events in its event queue, packages them as UITouch objects in UIEvent objects, and sends them to the window of the view in which the touch occurred. The window object, in turn, sends these objects to this view, which is known as the hit-test view. If this view cannot handle the touch event (usually because it hasn’t implemented the requisite event-handling methods), the event travels up the responder chain until it is either handled or discarded.
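One way to see the path an unhandled event would take is to walk the responder chain from a view upward. The sketch below is only a debugging aid; the function name LogResponderChain is hypothetical, and it relies on nothing beyond the nextResponder method of UIResponder.

```objc
#import <UIKit/UIKit.h>

// Hypothetical debugging helper: prints each responder an unhandled event
// could reach, starting at the given view (for example, the hit-test view).
static void LogResponderChain(UIView *startView) {
    UIResponder *responder = startView;
    while (responder != nil) {
        NSLog(@"%@", NSStringFromClass([responder class]));
        responder = [responder nextResponder];  // nil once the chain ends
    }
}
```

Called with a view deep in a hierarchy, this would typically print the view, its superviews, any view controllers managing them, the window, and finally the application object, though the exact chain depends on how the interface is built.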

To Handle Events You Must Override Four Methods

Responder objects, which include custom views and view controllers, handle events by implementing four methods declared by the UIResponder class:

touchesBegan:withEvent: is called for touch objects in the Began phase.

touchesMoved:withEvent: is called for touch objects in the Moved phase.

touchesEnded:withEvent: is called for touch objects in the Ended phase.

touchesCancelled:withEvent: is called when some external event (for example, an incoming phone call) causes the operating system to cancel touch objects in a multitouch sequence.

The first argument of each method is a set of touch objects in the given phase. The second argument is a UIEvent object that tracks all touch objects in the current multitouch sequence.
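A minimal sketch of a custom view that overrides all four methods might look like the following. The class name TouchLoggingView is hypothetical, and the method signatures use the annotated form found in recent SDK headers; older SDKs declare the first parameter as a plain NSSet.

```objc
#import <UIKit/UIKit.h>

// Hypothetical custom view that logs every phase of a multitouch sequence.
@interface TouchLoggingView : UIView
@end

@implementation TouchLoggingView

- (void)touchesBegan:(NSSet<UITouch *> *)touches withEvent:(nullable UIEvent *)event {
    // `touches` holds only the touches that just began;
    // [event allTouches] holds every touch in the current sequence.
    NSLog(@"began: %lu of %lu",
          (unsigned long)touches.count,
          (unsigned long)[event allTouches].count);
}

- (void)touchesMoved:(NSSet<UITouch *> *)touches withEvent:(nullable UIEvent *)event {
    UITouch *touch = [touches anyObject];
    NSLog(@"moved to %@", NSStringFromCGPoint([touch locationInView:self]));
}

- (void)touchesEnded:(NSSet<UITouch *> *)touches withEvent:(nullable UIEvent *)event {
    NSLog(@"ended: %lu", (unsigned long)touches.count);
}

- (void)touchesCancelled:(NSSet<UITouch *> *)touches withEvent:(nullable UIEvent *)event {
    // Discard any state built up during the sequence here.
    NSLog(@"cancelled: %lu", (unsigned long)touches.count);
}

@end
```

Implementing touchesCancelled:withEvent: alongside the other three gives the view a place to reset any partial gesture state when the system interrupts the sequence.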

By default, a view is able to receive multitouch events. Instances of some UIKit view classes, however, cannot receive multitouch events because their userInteractionEnabled property is set to NO. If you are subclassing these classes, and want to receive events, be sure to set this property to YES. Although custom views and view controllers should implement all four methods, subclasses of UIKit view classes need only implement the method corresponding to the phase or phases of interest; in this case, however, they must be sure to call the superclass implementation first.
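UIImageView is one UIKit class whose userInteractionEnabled property defaults to NO. The sketch below, using the hypothetical subclass name TappableImageView, enables interaction and overrides only the method for the phase of interest, calling the superclass implementation first as described above.

```objc
#import <UIKit/UIKit.h>

// Hypothetical UIImageView subclass that reacts when a finger lifts.
@interface TappableImageView : UIImageView
@end

@implementation TappableImageView

- (instancetype)initWithFrame:(CGRect)frame {
    self = [super initWithFrame:frame];
    if (self) {
        // UIImageView ignores touches unless this is set to YES.
        // Instances created another way (for example, from a nib or with
        // initWithImage:) need the property set separately.
        self.userInteractionEnabled = YES;
    }
    return self;
}

- (void)touchesEnded:(NSSet<UITouch *> *)touches withEvent:(nullable UIEvent *)event {
    // Call the superclass implementation first, then do the custom work.
    [super touchesEnded:touches withEvent:event];
    NSLog(@"image view touch ended");
}

@end
```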

Handling Motion Events

Through a device’s accelerometer, the operating system detects specific motions and sends these as events to the active application. (Currently the only supported motion is shaking the device.) The first responder receives these motion events (as UIEvent objects) first; if it cannot handle them, they travel up the responder chain. The system tells an application only when the motion starts and when it stops; the corresponding UIResponder methods are motionBegan:withEvent: and motionEnded:withEvent:. The only information passed to the handling responder object is the data encapsulated by the event object: event type (in this case, UIEventTypeMotion), event subtype (in this case, UIEventSubtypeMotionShake), and a timestamp.
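Because motion events go to the first responder, an object that wants them generally has to become first responder. The following is a minimal sketch under that assumption; the class name ShakeViewController is hypothetical, and making the controller first responder in viewDidAppear: is one common arrangement rather than the only one.

```objc
#import <UIKit/UIKit.h>

// Hypothetical view controller that responds to a shake.
@interface ShakeViewController : UIViewController
@end

@implementation ShakeViewController

// A responder must opt in before it can become first responder.
- (BOOL)canBecomeFirstResponder {
    return YES;
}

- (void)viewDidAppear:(BOOL)animated {
    [super viewDidAppear:animated];
    [self becomeFirstResponder];
}

- (void)motionEnded:(UIEventSubtype)motion withEvent:(nullable UIEvent *)event {
    if (motion == UIEventSubtypeMotionShake) {
        // The event carries only its type, subtype, and timestamp.
        NSLog(@"shake ended at t=%.3f", event.timestamp);
    } else {
        // Let other motions continue up the responder chain.
        [super motionEnded:motion withEvent:event];
    }
}

@end
```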

Copyright © 2018 Apple Inc. All Rights Reserved. Updated: 2018-04-06