Retired Document

Important: This document does not represent best practices for current development. You should convert your app to use AVFoundation instead. See Transitioning QTKit Code to AV Foundation.

Building a Simple QTKit Recorder Application

In this chapter, you build a simple QTKit recorder application. When completed, your QTKit recorder will allow you to capture a video stream and record the output of that stream to a QuickTime movie. Implementing the recorder takes surprisingly few lines of Objective-C code.

Your recorder application will have simple Start and Stop buttons that control recording. When recording stops, the captured file is displayed in QuickTime Player. For this project, you need an iSight camera, either built-in or plugged into your Mac.

In building your QTKit recorder application as described in this tutorial, you work essentially with the following three QTKit capture objects:

QTCaptureSession. The primary interface for capturing media streams.

QTCaptureMovieFileOutput. The output destination for a QTCaptureSession object that writes captured media to QuickTime movie files.

QTCaptureDeviceInput. The input source for media devices, such as cameras and microphones.

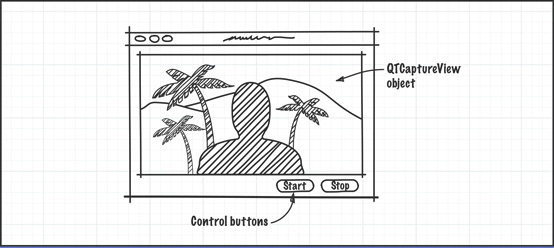

Prototype the Recorder

Rather than simply jumping into Interface Builder and constructing your prototype there, visualize the elements first in a rough design sketch, thinking of the design elements you want to incorporate into the application.

In this prototype, you use three simple Cocoa objects: a QuickTime capture view, and two control buttons to start and stop the actual recording process. These are the building blocks for your application. After you’ve sketched them out, you can begin to think of how to hook them up in Interface Builder and what code you need in your Xcode project to make this happen.

Create the Project Using Xcode 3.2

Follow the same steps you performed when you created and built the MyMediaPlayer application, described in Creating a Simple QTKit Media Player Application.

To create the project:

Launch Xcode 3.2 and choose File > New Project.

When the new project window appears, select Cocoa Application and click Choose.

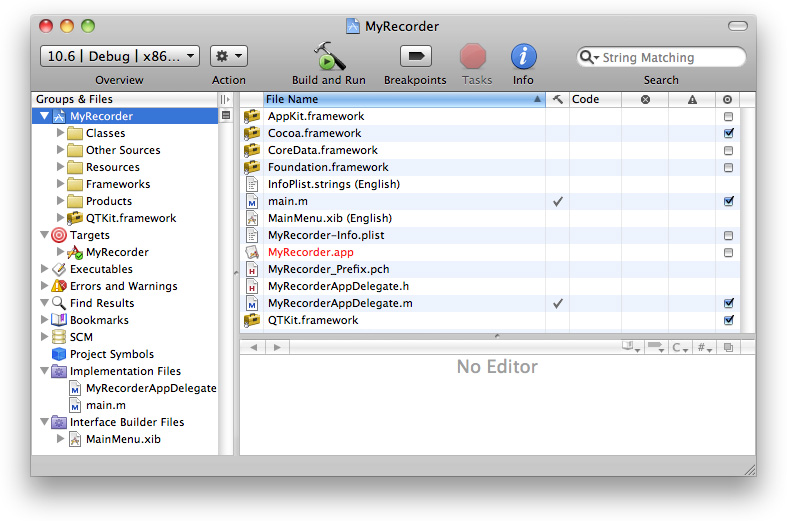

Name the project MyRecorder and navigate to the location where you want the Xcode application to create the project folder. The Xcode project window appears, as shown here.

Add the QTKit framework to your MyRecorder project.

From the Action menu in your Xcode project, choose Add > Add to Existing Frameworks.

In the /System/Library/Frameworks directory, select QTKit.framework.

Click the Target “MyRecorder” Info panel and verify that you have linked to QTKit.framework and that the Type Required is selected.

This completes the first sequence of steps in your project. In the next sequence, you define actions and outlets in Xcode before working with Interface Builder.

Name the Project Files and Import the QTKit Headers

Now that you’ve already prototyped your QTKit recorder application, at least in rough form with a clearly defined data model, you can determine which actions and outlets need to be implemented. In this case, you have a QTCaptureView object, which is a subclass of NSView, and two simple buttons to start and stop the recording of your captured media content.

Choose File > New File. In the panel, scroll down and select Cocoa > Objective-C class, which includes the <Cocoa/Cocoa.h> header files.

Name your implementation file MyRecorderController.m. Check the item in the field below to name your declaration file MyRecorderController.h.

In your MyRecorderController.h file, add the necessary QTKit import statement.

#import <QTKit/QTKit.h>

Determine the Actions and Outlets You Want

Now begin adding outlets and actions.

In your MyRecorderController.h file, add the instance variable mCaptureView, which points to the QTCaptureView object as an outlet.

IBOutlet QTCaptureView *mCaptureView;

Define two actions to start and stop recording.

- (IBAction)startRecording:(id)sender;

- (IBAction)stopRecording:(id)sender;

At this point, the code in your MyRecorderController.h file should look like this:

#import <Cocoa/Cocoa.h>

#import <QTKit/QTKit.h>

@interface MyRecorderController : NSObject {

IBOutlet QTCaptureView *mCaptureView;

}

- (IBAction)startRecording:(id)sender;

- (IBAction)stopRecording:(id)sender;

@end

Open the MyRecorderController.m file and add the following actions, along with the requisite braces.

- (IBAction)startRecording:(id)sender

{}

- (IBAction)stopRecording:(id)sender

{}

Save both your MyRecorderController.h and MyRecorderController.m files. Otherwise, when you go to work in Interface Builder, the application will not know about the MyRecorderController class.

This completes the second stage of your project. Now use Interface Builder 3.2 to construct the user interface for your project.

Create the User Interface

Now you construct and implement the various user interface elements in your project.

Launch Interface Builder 3.2.

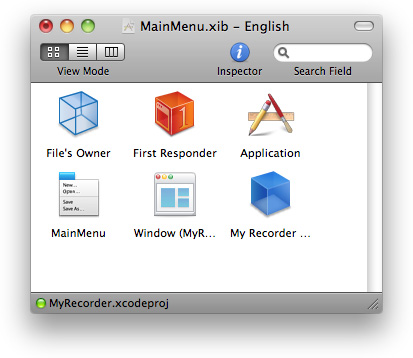

In your Xcode project window, open the

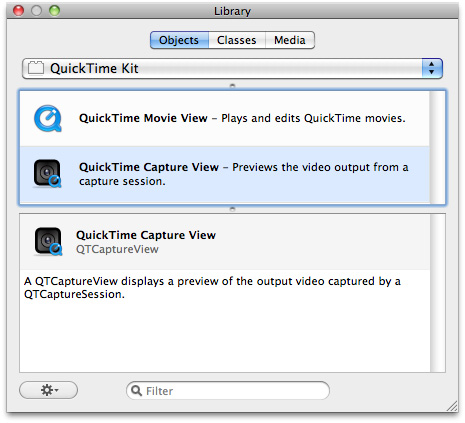

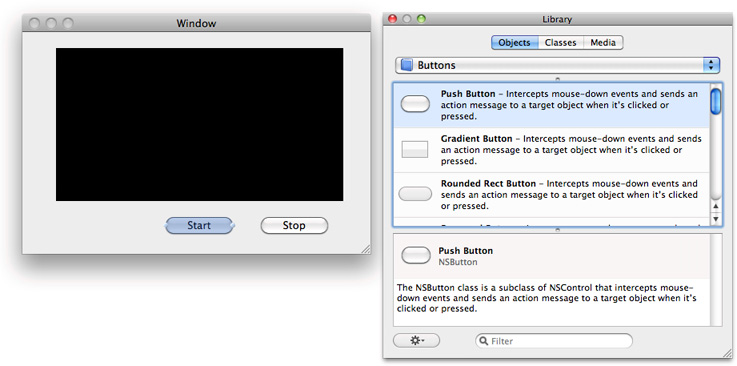

MainMenu.xibfile .In Interface Builder 3.2, find the library of objects.

Set up the preview window.

Scroll down until you find the QuickTime Capture View object.

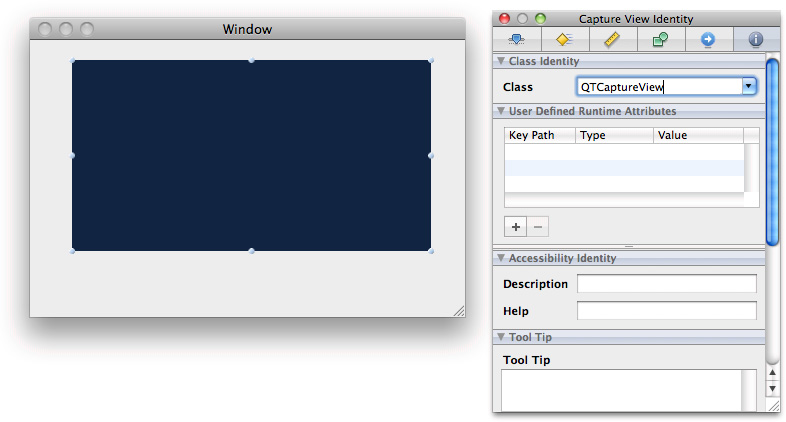

The QTCaptureView object provides you with an instance of a view subclass to display a preview of the video output that is captured by a capture session.

Drag the QTCaptureView object into your window and resize the object to fit the window, allowing room for the two Start and Stop buttons in your QTKit recorder.

Click the object in the window.

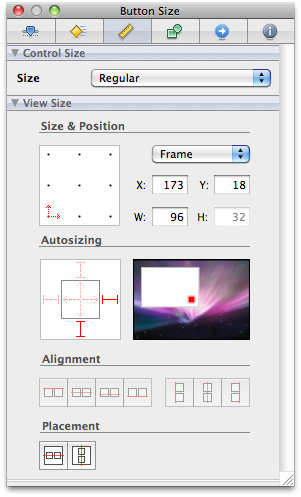

Set the autosizing for the object in the Capture View Size panel, as illustrated below.

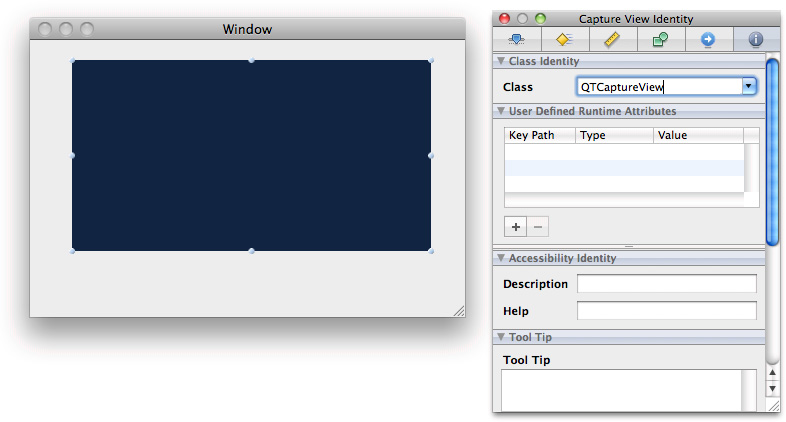

Define the window attributes of your MyRecorder Window.

Verify the controls, appearance, behavior, and memory items are checked, as shown in the illustration.

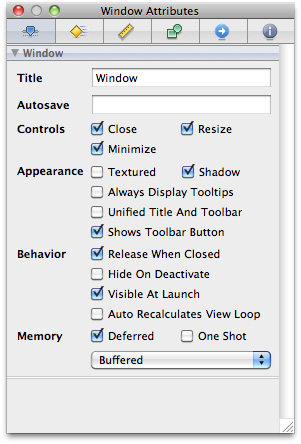

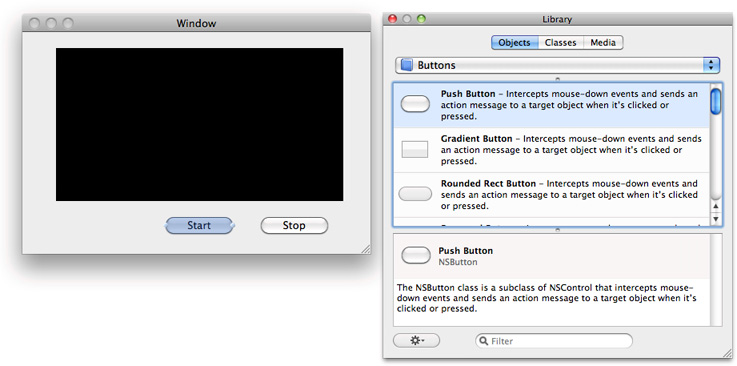

Create the Start and Stop buttons for the MyRecorder Window.

In the Library, select the Push Button control and drag it to the Window.

Enter the text Start, then duplicate the button and label the copy Stop.

Set up the autosizing for your buttons (as shown in the illustration) by selecting the button and clicking the Button Size Inspector.

Add a controller, and name it.

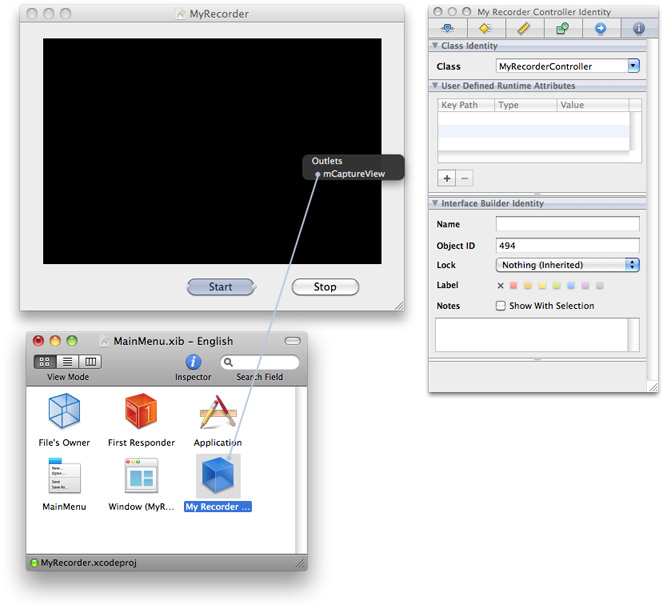

In the Library, scroll down and select the blue cube control, which is an object (NSObject) you can instantiate as your controller.

Drag the object into your MainMenu.xib window.

Select the object and enter its name as My Recorder Controller. Then click the information icon in the Inspector.

When you click the Class Identity field, enter the first few letters of the class name into the text field. Interface Builder autocompletes it for you.

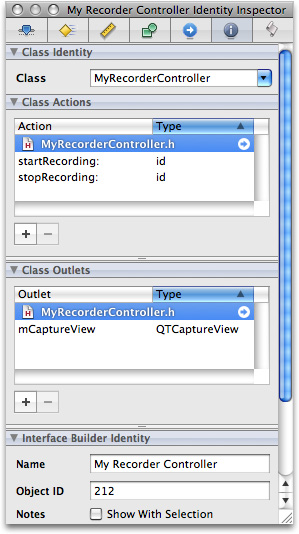

The MyRecorderController object appears. If you have saved both your declaration and implementation files as specified in Determine the Actions and Outlets You Want, Interface Builder automatically updates the MyRecorderController class specified in your Xcode implementation file. To confirm that the update has occurred, press Return. If the identity field is not automatically updated, you may need to specify manually that it is a MyRecorderController object.

Set Up a Preview of the Captured Video Output

In the next phase of your project, you continue working with Interface Builder and Xcode to construct and implement the various elements in your project. Because Interface Builder reads the outlets and actions you declared in your Xcode header file, you can make these connections without writing additional code.

In Interface Builder, hook up the MyRecorderController object to the QTCaptureView object.

Control-drag from the MyRecorderController object in your nib file to the QTCaptureView object.

A transparent panel appears, displaying the IBOutlet instance variable, mCaptureView, that you’ve specified in your declaration file.

Click the Interface Builder outlet mCaptureView to wire up the two objects.

Wire the Start and Stop Buttons

Now you’re ready to wire up your Start and Stop push buttons in your MainMenu.xib window.

Control-drag each of the Start and Stop buttons from the window to the MyRecorderController object.

Click the startRecording: method in the transparent Received Actions panel to connect the Start button.

Likewise, click the stopRecording: method in the Received Actions panel to connect the Stop button.

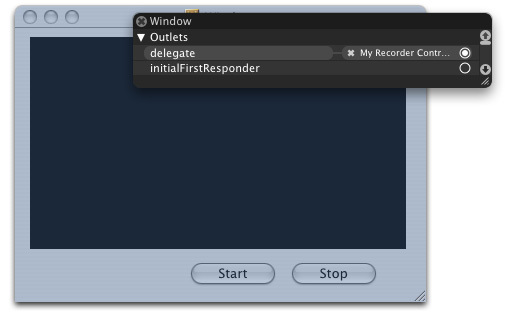

Now hook up the window and the MyRecorderController object as a delegate.

Control-drag a connection from the window to the MyRecorderController object and click the delegate outlet, connecting the two objects.

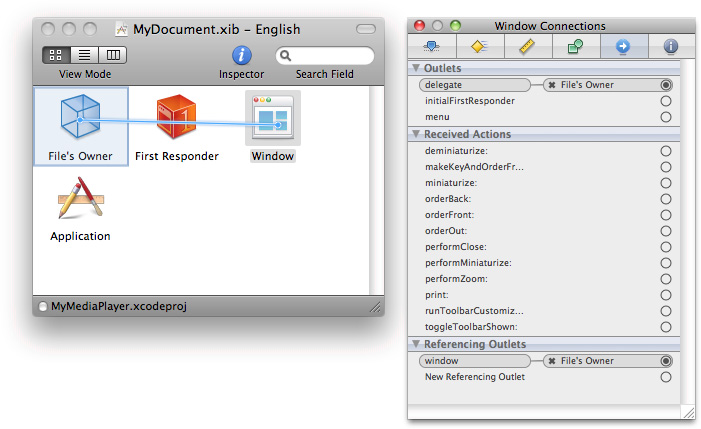

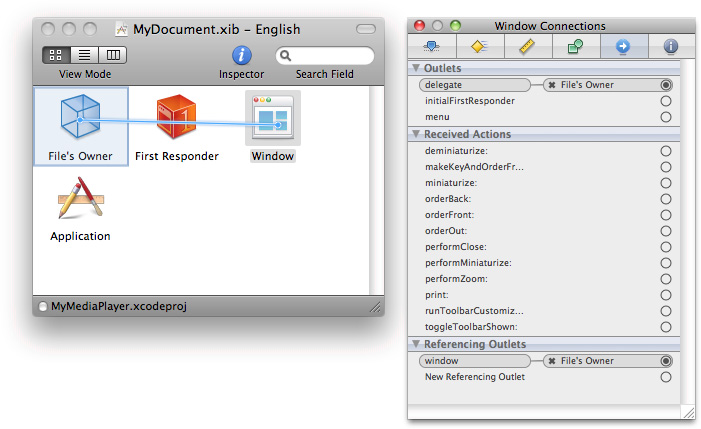

To verify that you’ve correctly wired up your window object to your delegate object, select the Window and click the Window Connections Inspector icon.

Verify that you’ve correctly wired up your outlets and received actions.

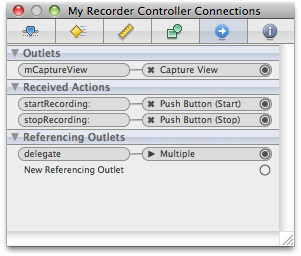

Select the My Recorder Controller object and click the My Recorder Controller Connections icon.

Check the My Recorder Controller Identity Inspector panel to confirm the class actions and class outlets.

Click the MainMenu.xib file to verify that Interface Builder and Xcode have worked together to synchronize the actions and outlets you’ve specified.

A small green light appears at the bottom-left corner of the MainMenu.xib file next to MyRecorder.xcodeproj to confirm this synchronization.

Save your nib file.

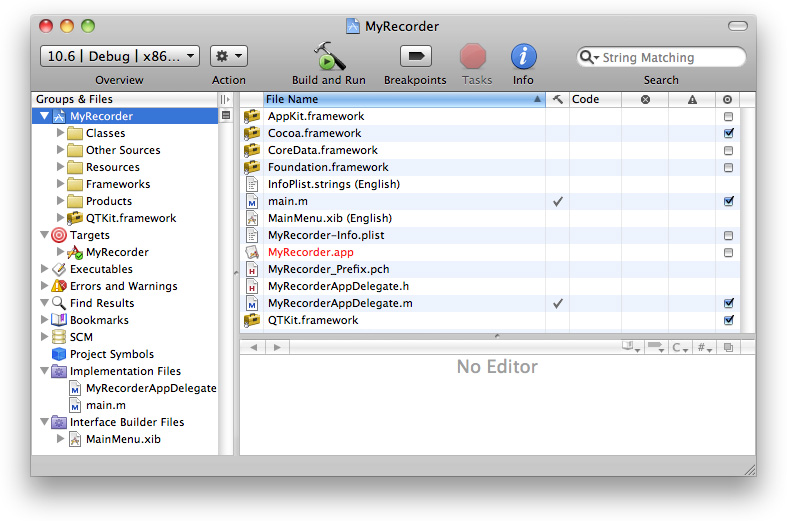

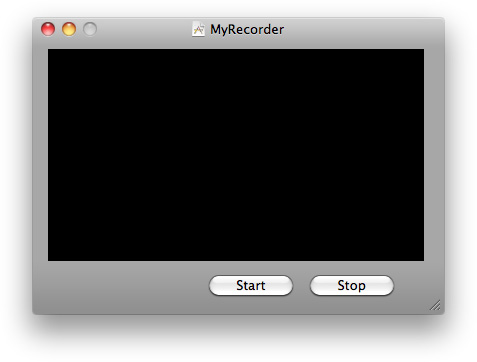

Verify that your QTKit MyRecorder application appears as shown in the illustration below.

You’ve now completed your work in Interface Builder 3.2. In this next sequence of steps, you return to your Xcode project and add a few lines of code in both your declaration and implementation files to build and compile the application.

Complete the Implementation of the MyRecorderController Class

To complete the implementation of the MyRecorderController class, you need to define the instance variables that point to the capture session, as well as to the input and output objects. In your Xcode project, you add the instance variables to the interface declaration.

Update your MyRecorderController.h declaration file:

@interface MyRecorderController : NSObject {

QTCaptureSession *mCaptureSession;

QTCaptureMovieFileOutput *mCaptureMovieFileOutput;

QTCaptureDeviceInput *mCaptureDeviceInput;

The complete code for your declaration file should look like this:

#import <Cocoa/Cocoa.h>

#import <QTKit/QTKit.h>

@interface MyRecorderController : NSObject {

IBOutlet QTCaptureView *mCaptureView;

QTCaptureSession *mCaptureSession;

QTCaptureMovieFileOutput *mCaptureMovieFileOutput;

QTCaptureDeviceInput *mCaptureDeviceInput;

}

- (IBAction)startRecording:(id)sender;

- (IBAction)stopRecording:(id)sender;

@end

In the remaining steps, you modify the MyRecorderController.m implementation file. These steps are arranged in logical, though not necessarily rigid, order.

Create the capture session.

- (void)awakeFromNib

{

mCaptureSession = [[QTCaptureSession alloc] init];

Connect inputs and outputs to the session.

BOOL success = NO;

NSError *error = nil;

Find the device and create the device input. Then add it to the session.

QTCaptureDevice *device = [QTCaptureDevice defaultInputDeviceWithMediaType:QTMediaTypeVideo];

if (device) {

success = [device open:&error];

if (!success) {

// Handle error

}

mCaptureDeviceInput = [[QTCaptureDeviceInput alloc] initWithDevice:device];

success = [mCaptureSession addInput:mCaptureDeviceInput error:&error];

if (!success) {

// Handle error

}

Create the movie file output and add it to the session.

mCaptureMovieFileOutput = [[QTCaptureMovieFileOutput alloc] init];

success = [mCaptureSession addOutput:mCaptureMovieFileOutput error:&error];

if (!success) {

// Handle error

}

[mCaptureMovieFileOutput setDelegate:self];

Specify the compression options by identifier: one identifier that sets the size for video and one that sets the quality for audio.

NSEnumerator *connectionEnumerator = [[mCaptureMovieFileOutput connections] objectEnumerator];

QTCaptureConnection *connection;

while ((connection = [connectionEnumerator nextObject])) {

NSString *mediaType = [connection mediaType];

QTCompressionOptions *compressionOptions = nil;

if ([mediaType isEqualToString:QTMediaTypeVideo]) {

compressionOptions = [QTCompressionOptions compressionOptionsWithIdentifier:@"QTCompressionOptions240SizeH264Video"];

} else if ([mediaType isEqualToString:QTMediaTypeSound]) {

compressionOptions = [QTCompressionOptions compressionOptionsWithIdentifier:@"QTCompressionOptionsHighQualityAACAudio"];

}

[mCaptureMovieFileOutput setCompressionOptions:compressionOptions forConnection:connection];

}

Associate the capture view in the user interface with the session.

[mCaptureView setCaptureSession:mCaptureSession];

Start the capture session running.

[mCaptureSession startRunning];

}

}
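The "Handle error" branches in awakeFromNib are left for you to fill in. As a minimal sketch, and not part of the original MyRecorder sample, a small helper could log the failure and show the standard Cocoa error alert. The category name ErrorHandling and the method handleCaptureError:context: are hypothetical names introduced here for illustration; NSLog and NSApplication's presentError: are standard Cocoa calls.

```objc
#import <Cocoa/Cocoa.h>
#import "MyRecorderController.h"

// Hypothetical category (an assumption, not part of the original sample).
@interface MyRecorderController (ErrorHandling)
- (void)handleCaptureError:(NSError *)error context:(NSString *)context;
@end

@implementation MyRecorderController (ErrorHandling)

- (void)handleCaptureError:(NSError *)error context:(NSString *)context
{
    // Log which step failed and why, so the failure can be diagnosed later.
    NSLog(@"%@ failed: %@", context, [error localizedDescription]);

    // Show the standard application-modal error alert when an error object exists.
    if (error != nil) {
        [NSApp presentError:error];
    }
}

@end
```

Under these assumptions, an error branch might call [self handleCaptureError:error context:@"Opening the capture device"]; and then return, so that the rest of the setup does not run against a device that failed to open.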

Handle the closing of the window: stop the capture session, and then close your input device.

- (void)windowWillClose:(NSNotification *)notification

{

[mCaptureSession stopRunning];

[[mCaptureDeviceInput device] close];

}

Deallocate memory.

- (void)dealloc

{

[mCaptureSession release];

[mCaptureDeviceInput release];

[mCaptureMovieFileOutput release];

[super dealloc];

}

Implement start and stop actions, and specify the output destination for your recorded media, in this case a QuickTime movie (.mov) in your /Users/Shared folder.

- (IBAction)startRecording:(id)sender

{

[mCaptureMovieFileOutput recordToOutputFileURL:[NSURL fileURLWithPath:@"/Users/Shared/My Recorded Movie.mov"]];

}

- (IBAction)stopRecording:(id)sender

{

[mCaptureMovieFileOutput recordToOutputFileURL:nil];

}
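Because the output path in startRecording: is fixed, every recording is directed at the same file. If you would rather keep each take, one option is to build a timestamped file name. The following is a sketch only; the function name MyRecorderTimestampedMovieURL and the date format are assumptions, not part of the original sample. It uses manual reference counting to match the rest of this chapter.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of the original sample). Returns a file URL
// in /Users/Shared whose name has the form
// "My Recorded Movie <date> <time>.mov".
static NSURL *MyRecorderTimestampedMovieURL(void)
{
    // Manual reference counting: the formatter is autoreleased.
    NSDateFormatter *formatter = [[[NSDateFormatter alloc] init] autorelease];

    // Periods instead of colons keep the name friendly to the Finder.
    [formatter setDateFormat:@"yyyy-MM-dd HH.mm.ss"];

    NSString *stamp = [formatter stringFromDate:[NSDate date]];
    NSString *name = [NSString stringWithFormat:@"My Recorded Movie %@.mov", stamp];
    NSString *path = [@"/Users/Shared" stringByAppendingPathComponent:name];

    return [NSURL fileURLWithPath:path];
}
```

With this helper in MyRecorderController.m, startRecording: could pass MyRecorderTimestampedMovieURL() to recordToOutputFileURL: in place of the fixed path.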

Finish recording and then open your recording as a QuickTime movie.

- (void)captureOutput:(QTCaptureFileOutput *)captureOutput didFinishRecordingToOutputFileAtURL:(NSURL *)outputFileURL forConnections:(NSArray *)connections dueToError:(NSError *)error

{

[[NSWorkspace sharedWorkspace] openURL:outputFileURL];

}
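The delegate method above opens the movie without looking at its error parameter. A more defensive variant, sketched here as an assumption rather than as the sample's own behavior, opens the file only when no error is reported. QTKit may report an error in some cases where the file is still usable; this sketch deliberately ignores that possibility and treats any error as a failure.

```objc
// Variant of the delegate method shown above; it would replace that method
// inside the MyRecorderController implementation. The error check is an
// addition for illustration, not part of the original sample.
- (void)captureOutput:(QTCaptureFileOutput *)captureOutput
        didFinishRecordingToOutputFileAtURL:(NSURL *)outputFileURL
        forConnections:(NSArray *)connections
        dueToError:(NSError *)error
{
    if (error != nil) {
        // Report the problem instead of opening a possibly incomplete movie.
        NSLog(@"Recording to %@ ended with an error: %@",
              outputFileURL, [error localizedDescription]);
        return;
    }

    // No error was reported, so open the movie in its default application.
    [[NSWorkspace sharedWorkspace] openURL:outputFileURL];
}
```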

Build and Compile Your Recorder Application

After you’ve saved your project, click Build and Go. After compiling, click the Start button to record, and the Stop button to stop recording. The output of your captured session is saved as a QuickTime movie in the path you specified in this code sample.

Using a simple iSight camera, you now have the capability of capturing and recording media at a specific size and audio quality, and then outputting your recording to a QuickTime movie.

Summary

In this chapter, you built a recorder application using three capture objects: QTCaptureSession, QTCaptureMovieFileOutput, and QTCaptureDeviceInput. These were the essential building blocks for your project. You learned how to:

Prototype and design the data model and controllers for your project in a rough sketch before constructing the actual user interface.

Define two Interface Builder actions, connected to buttons, to start and stop recording.

Create the user interface using the QTCaptureView plug-in from the Interface Builder library of controls.

Wire up the control buttons for your user interface.

Specify compression options with an identifier for the size of your recorded video and for the quality of your audio.

Build and run the recorder application, using the iSight camera as your input device.

Copyright © 2016 Apple Inc. All Rights Reserved. Updated: 2016-08-26