Sessions

-

Create accessible spatial experiences

8:00 a.m.

Learn how you can make spatial computing apps that work well for everyone. Like all Apple platforms, visionOS is designed for accessibility: We’ll share how we’ve reimagined assistive technologies like VoiceOver and Pointer Control and designed features like Dwell Control to help people interact in the way that works best for them. Learn best practices for vision, motor, cognitive, and hearing accessibility and help everyone enjoy immersive experiences for visionOS.

-

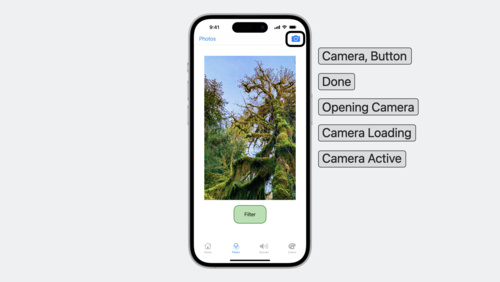

Perform accessibility audits for your app

8:00 a.m.

Discover how you can test your app for accessibility with every build. Learn how to perform automated audits for accessibility using XCTest and find out how to interpret the results. We’ll also share enhancements to the accessibility API that can help you improve UI test coverage.

-

Unlock the power of grammatical agreement

8:00 a.m.

Discover how you can use automatic grammatical agreement in your apps and games to create inclusive and more natural-sounding expressions. We’ll share best practices for working with Foundation, showcase examples in multiple languages, and demonstrate how to use these APIs to enhance the user experience for your apps. For an introduction to automatic grammatical agreement, watch “What’s new in Foundation” from WWDC21.

Labs

-

Accessibility design lab

Tuesday @ 9:00 - 10:00 a.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

-

Accessibility design lab

Tuesday @ 1:00 - 2:00 p.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

-

Accessibility design lab

Tuesday @ 5:00 - 6:00 p.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

Activities

-

Meet the presenters: Create accessible spatial experiences

Tuesday @ 10:00 - 11:00 a.m.

Meet the presenters of “Create accessible spatial experiences” and join a text-based watch party followed by a short Q&A. The watch party begins 5 minutes after the start of this activity, so don’t be late!

-

Meet the presenter: Perform accessibility audits for your app

Tuesday @ 11:00 a.m. - 12:00 p.m.

Meet the presenter of “Perform accessibility audits for your app” and join a text-based watch party followed by a short Q&A. The watch party begins 5 minutes after the start of this activity, so don’t be late!

-

Community panel: What has the iPhone meant to people with disabilities?

Tuesday @ 1:00 - 2:00 p.m.

Join members of the Apple Accessibility team for a one-hour text-based conversation about how they use assistive technologies in their day-to-day lives.

Sessions

-

Build accessible apps with SwiftUI and UIKit

8:00 a.m.

Discover how advancements in UI frameworks make it easier to build rich, accessible experiences. Find out how technologies like VoiceOver can better interact with your app’s interface through accessibility traits and actions. We’ll share the latest updates to SwiftUI that help you refine your accessibility experience and show you how to keep accessibility information up to date in your UIKit apps.

Labs

-

Accessibility design lab

Wednesday @ 9:00 - 10:00 a.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

-

Accessibility lab

Wednesday @ 10:00 a.m. - 12:00 p.m.

Request an appointment with an Apple engineer for guidance and conversation about accessibility, including VoiceOver, visual accessibility, assistive devices, and more.

-

Accessibility design lab

Wednesday @ 1:00 - 2:00 p.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

-

Accessibility design lab

Wednesday @ 5:00 - 6:00 p.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

Activities

-

Meet the presenter: Build accessible apps with SwiftUI and UIKit

Wednesday @ 1:00 - 2:00 p.m.

Meet the presenter of “Build accessible apps with SwiftUI and UIKit” and join a text-based watch party followed by a short Q&A. The watch party begins 5 minutes after the start of this activity, so don’t be late!

-

Q&A: Internationalization and localization

Wednesday @ 3:00 - 5:00 p.m.

Ask Apple engineers about internationalization and localization during this two-hour text-based Q&A. Stop in to request guidance on a code-level question, ask for clarification, or learn from others.

-

Q&A: Accessibility

Wednesday @ 3:00 - 5:00 p.m.

Ask Apple engineers about accessibility in SwiftUI, UIKit, and AppKit during this two-hour text-based Q&A. Stop in to request guidance on a code-level question, ask for clarification, or learn from others.

Sessions

-

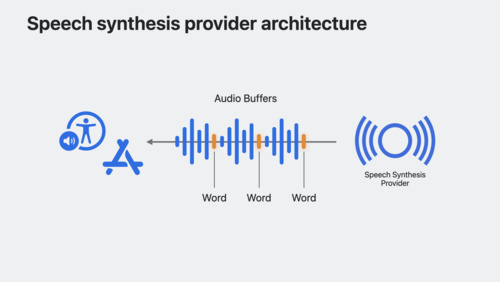

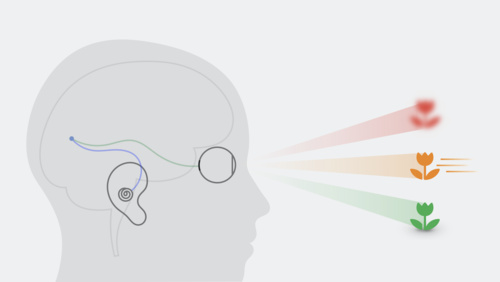

Extend Speech Synthesis with personal and custom voices

8:00 a.m.

Bring the latest advancements in Speech Synthesis to your apps. Learn how you can integrate your custom speech synthesizer and voices into iOS and macOS. We’ll show you how SSML is used to generate expressive speech synthesis, and explore how Personal Voice can enable your augmentative and alternative communication (AAC) app to speak on a person’s behalf in an authentic way.

Labs

-

Accessibility design lab

Thursday @ 9:00 - 10:00 a.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

-

Accessibility design lab

Thursday @ 1:00 - 2:00 p.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

-

Internationalization and localization lab

Thursday @ 3:00 - 5:00 p.m.

Make your app a great global citizen. Request an appointment with an Apple engineer for guidance and conversation about internationalization and localization.

-

Accessibility design lab

Thursday @ 5:00 - 6:00 p.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

Activities

-

Q&A: Accessibility

Thursday @ 10:00 a.m. - 12:00 p.m.

Ask Apple engineers about accessibility in SwiftUI, UIKit, and AppKit during this two-hour text-based Q&A. Stop in to request guidance on a code-level question, ask for clarification, or learn from others.

-

Meet the presenter: Extend Speech Synthesis with personal and custom voices

Thursday @ 2:00 - 3:00 p.m.

Meet the presenter of “Extend Speech Synthesis with personal and custom voices” and join a text-based watch party followed by a short Q&A. The watch party begins 5 minutes after the start of this activity, so don’t be late!

Sessions

-

Design considerations for vision and motion

8:00 a.m.

Learn how to design engaging immersive experiences for visionOS that respect the limitations of human vision and motion perception. We’ll show you how you can use depth cues, contrast, focus, and motion to keep people comfortable as they enjoy your apps and games.

-

Meet Assistive Access

8:00 a.m.

Learn how Assistive Access can help people with cognitive disabilities more easily use iPhone and iPad. Discover the design principles that guide Assistive Access and find out how the system experience adapts to lighten cognitive load. We’ll show you how Assistive Access works and what you can do to support this experience in your app.

Labs

-

Accessibility design lab

Friday @ 9:00 - 10:00 a.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

-

Accessibility design lab

Friday @ 1:00 - 2:00 p.m.

Learn how you can make your apps accessible and simple to use for everyone. Attend an accessibility design lab to better understand how people who use Apple’s accessibility features will navigate your products. Come prepared with a working prototype, development build, or your released app. Appointments are 30 minutes long and are limited to one per person per lab for the duration of the conference. If you do not receive an appointment, you can submit a request again on another day.

-

Accessibility lab

Friday @ 1:00 - 4:00 p.m.

Request an appointment with an Apple engineer for guidance and conversation about accessibility, including VoiceOver, visual accessibility, assistive devices, and more.

Activities

-

Meet the presenter: Meet Assistive Access

Friday @ 10:00 - 11:00 a.m.

Meet the presenter of “Meet Assistive Access” and join a text-based watch party followed by a short Q&A. The watch party begins 5 minutes after the start of this activity, so don’t be late!