Event Architecture

The path taken by an event to the object in a Cocoa application that finally handles it can be a complicated one. This chapter traces the possible paths of events of various types and describes the mechanisms and architectural designs for handling events in the Application Kit.

For further background, About OS X App Design in Mac App Programming Guide is recommended reading.

How an Event Enters a Cocoa Application

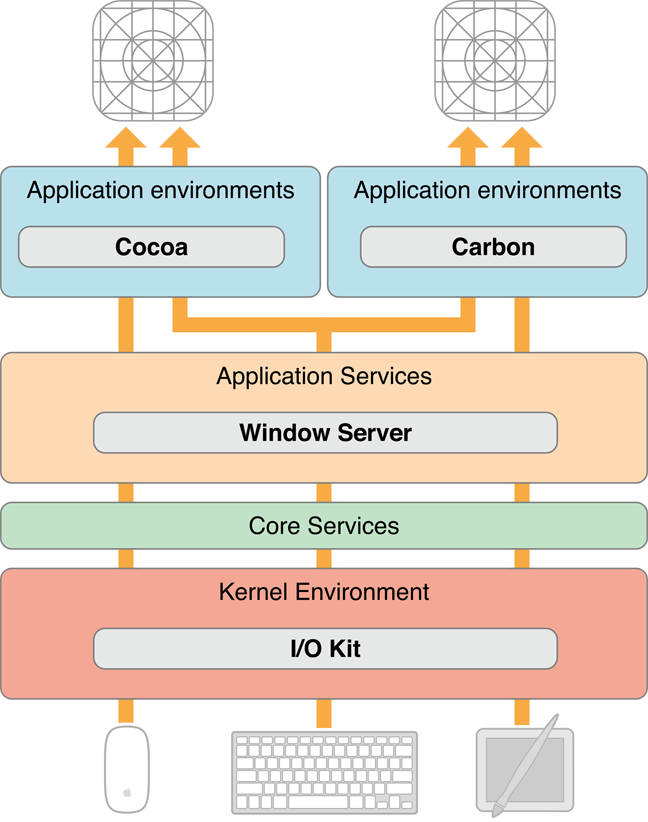

An event is a low-level record of a user action that is usually routed to the application in which the action occurred. A typical event in OS X originates when the user manipulates an input device attached to a computer system, such as a keyboard, mouse, or tablet stylus. When the user presses a key or clicks a button or moves a stylus, the device detects the action and initiates a transfer of data to the device driver associated with it. Through the I/O Kit, the device driver creates a low-level event, puts it in the window server's event queue, and notifies the window server. The window server dispatches the event to the appropriate run-loop port of the target process. From there the event is forwarded to the event-handling mechanism appropriate to the application environment. Figure 1-1 depicts this event-delivery system.

Before it dispatches an event to an application, the window server processes it in various ways; it time-stamps it, annotates it with the associated window and process port, and possibly performs other tasks as well. As an example, consider what happens when a user presses a key. The device driver translates the raw scan code into a virtual key code which it then passes off (along with other information about the key-press) to the window server in an event record. The window server has a translation facility that converts the virtual key code into a Unicode character.

In OS X, events are delivered as an asynchronous stream. This event stream proceeds “upward” (in an architectural sense) through the various levels of the system—the hardware to the window server to the Event Manager—until each event reaches its final destination: an application. As it passes through each subsystem, an event may change structure but it still identifies a specific user action.

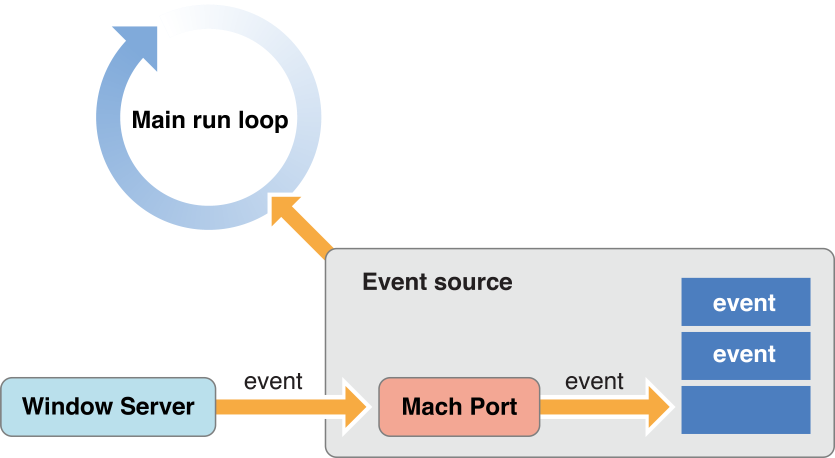

Every application has a mechanism specific to its environment for receiving events from the window server. For a Cocoa application, that mechanism is called the main event loop. A run loop, which in Cocoa is an NSRunLoop object, enables a process to receive input from various sources. By default, every thread in OS X has its own run loop, and the run loop of the main thread of a Cocoa application is called the main event loop. What especially distinguishes the main event loop is an input source called the event source, which is constructed when the global NSApplication object (NSApp) is initialized. The event source consists of a port for receiving events from the window server and a FIFO queue—the event queue—for holding those events until the application can process them, as shown in Figure 1-2.

A Cocoa application is event driven: It fetches an event from the queue, dispatches it to an appropriate object, and, after the event is handled, fetches the next event. With some exceptions (such as modal event loops) an application continues in this pattern until the user quits it. The following section, Event Dispatch, describes how an application fetches and dispatches events.

Events delivered via the event source are not the only kinds of events that enter Cocoa applications. An application can also respond to Apple events, high-level interprocess events typically sent by other processes such as the Finder and Launch Services. For example, when users double-click an application icon to open the application or double-click a document to open the document, an Apple event is sent to the target application. An application also fetches Apple events from the queue but it does not convert them into NSEvent objects. Instead an Apple event is handled directly by an event handler. When an application launches, it automatically registers several event handlers for this purpose. For more on Apple events and event handlers, see Apple Events Programming Guide.

Event Dispatch

In the main event loop, the application object (NSApp) continuously gets the next (topmost) event in the event queue, converts it to an NSEvent object, and dispatches it toward its final destination. It performs this fetching of events by invoking the nextEventMatchingMask:untilDate:inMode:dequeue: method in a closed loop. When there are no events in the event queue, this method blocks, resuming only when there are more events to process.
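The fetch-and-dispatch cycle can be approximated with the sketch below. DrainPendingEvents is a hypothetical helper, not an Application Kit function. It passes a date in the past so that the fetch returns nil when the queue is empty, whereas the real main event loop blocks until an event arrives. NSEventMaskAny is the macOS 10.12 SDK spelling; earlier SDKs use NSAnyEventMask.

```objc
#import <Cocoa/Cocoa.h>

// Hypothetical helper: dispatches the events that are already queued, then returns
// the number it handled. The loop inside -[NSApplication run] does the same
// fetch/dispatch pair but waits (blocks) for the next event instead of returning.
static NSUInteger DrainPendingEvents(NSApplication *app) {
    NSUInteger handled = 0;
    for (;;) {
        NSEvent *event = [app nextEventMatchingMask:NSEventMaskAny
                                          untilDate:[NSDate distantPast] // do not block
                                             inMode:NSDefaultRunLoopMode
                                            dequeue:YES];
        if (event == nil) {
            break; // queue is empty
        }
        [app sendEvent:event]; // first stage of dispatch
        handled++;
    }
    return handled;
}
```

Passing [NSDate distantFuture] instead would make the fetch block, which is closer to what the main event loop does.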

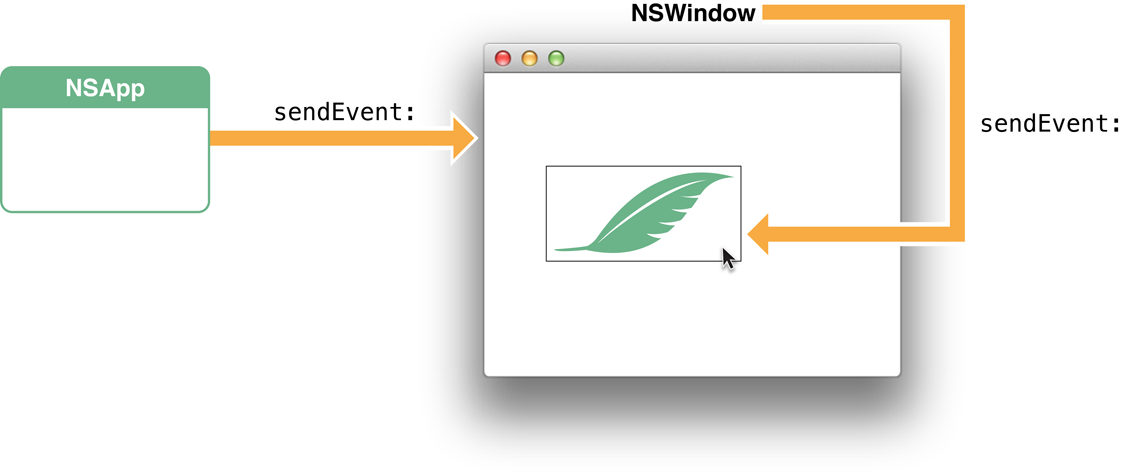

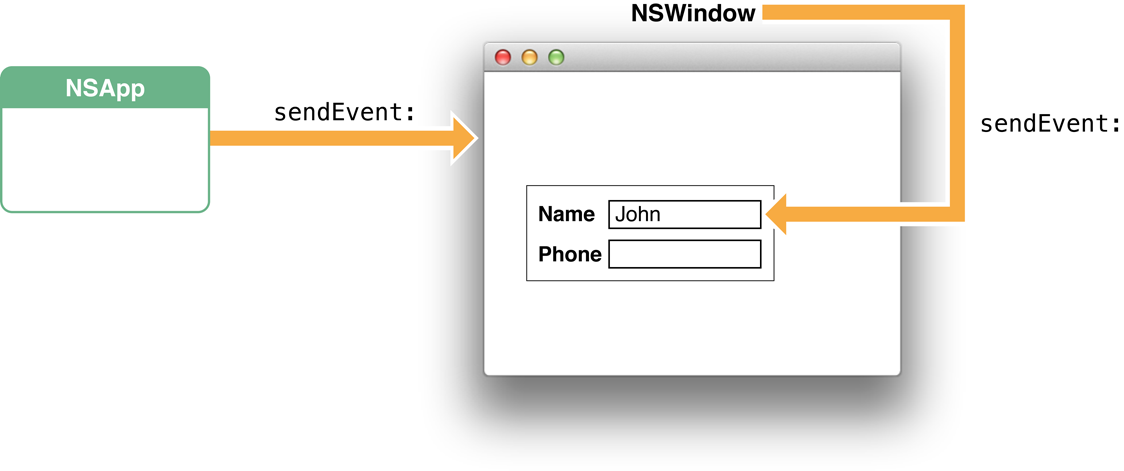

After fetching and converting an event, NSApp performs the first stage of event dispatching in the sendEvent: method. In most cases NSApp merely forwards the event to the window in which the user action occurred by invoking the sendEvent: method of that NSWindow object. The window object then dispatches most events to the NSView object associated with the user action in an NSResponder message such as mouseDown: or keyDown:. An event message includes as its sole argument an NSEvent object describing the event.

The object receiving an event message differs slightly by type of event. For mouse and tablet events, the NSWindow object dispatches the event to the view over which the user pressed the mouse or stylus button. It dispatches most key events to the first responder of the key window. Figure 1-3 and Figure 1-4 illustrate these different general delivery paths. The destination view may decide not to handle the event, instead passing it up the responder chain (see The Responder Chain).

In the preceding paragraph you might have noticed the use of qualifiers such as “in most cases” and “usually.” The delivery of an event (and especially a key event) in Cocoa can take many different paths depending on the particular kind of event. Some events, many of which are defined by the Application Kit (type NSAppKitDefined), have to do with actions controlled by a window or the application object itself. Examples of these events are those related to activating, deactivating, hiding, and showing the application. NSApp filters out these events early in its dispatch routine and handles them itself.

The following sections describe the different paths of the events that can reach your view objects. For detailed information on these event types, read Event Objects and Types.

The Path of Mouse and Tablet Events

As noted above, an NSWindow object in its sendEvent: method forwards mouse events to the view over which the user action involving the mouse occurred. It identifies the view to receive the event by invoking the NSView method hitTest:, which returns the lowest descendant that contains the cursor location of the event (this is usually the topmost view displayed). The window object forwards the mouse event to this view by sending it a mouse-related NSResponder message specific to its exact type, such as mouseDown:, mouseDragged:, or rightMouseUp:. On (left) mouse-down events, the window object also asks the receiving view whether it is willing to become first responder for subsequent key events and action messages.

A view object can receive mouse events of three general types: mouse clicks, mouse drags, and mouse movements. Mouse-click events are further categorized—as specific NSEventType constants and NSResponder methods—by mouse button (left, right, or other) and direction of click (up or down). Mouse-dragged and mouse-up events are typically sent to the same view that received the most recent mouse-down event. Mouse-moved events are sent to the first responder. Mouse-down, mouse-dragged, mouse-up, and mouse-moved events can occur only in certain situations relative to other mouse events:

Each mouse-up event must be preceded by a mouse-down event.

Mouse-dragged events occur only between a mouse-down event and a mouse-up event.

Mouse-moved events do not occur between a mouse-down and a mouse-up event.

Mouse-down events are sent when a user presses the mouse button while the cursor is over a view object. If the window containing the view is not the key window, the window becomes the key window and discards the mouse-down event. However, a view can circumvent this default behavior by overriding the acceptsFirstMouse: method of NSView to return YES.
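A minimal sketch of that override follows. ClickThroughView is a hypothetical class name chosen for illustration.

```objc
#import <Cocoa/Cocoa.h>

// Hypothetical view that wants the click that activates its window.
@interface ClickThroughView : NSView
@end

@implementation ClickThroughView

// Returning YES asks the window to deliver the activating mouse-down event
// to this view instead of discarding it.
- (BOOL)acceptsFirstMouse:(NSEvent *)event {
    return YES;
}

@end
```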

Views automatically receive mouse-clicked and mouse-dragged events, but because mouse-moved events occur so often and can bog down the event queue, a view object must explicitly request its window to watch for them using the NSWindow method setAcceptsMouseMovedEvents:. Tracking rectangles, described in Other Event Dispatching, are a less expensive way of following the mouse’s location.

In its implementation of an NSResponder mouse-event method, a subclass of NSView can interpret a mouse event as a cue to perform a certain action, such as sending a target-action message, selecting a graphic element, redrawing itself at a different location, and so on. Each event method includes as its sole parameter an NSEvent object from which the view can obtain information about the event. For example, the view can use the locationInWindow method to locate the mouse cursor’s hot spot in the coordinate system of the receiver’s window. To convert it to the view’s coordinate system, use convertPoint:fromView: with a nil view argument. From here, you can use mouse:inRect: to determine whether the click occurred in an interesting area.
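The three calls named above could be combined as in the following sketch. HotSpotView, its hotSpot rectangle, and the containsWindowPoint: helper are assumptions made for the example, not part of the Application Kit.

```objc
#import <Cocoa/Cocoa.h>

// Hypothetical view that reacts only to clicks inside one rectangle.
@interface HotSpotView : NSView
@property (nonatomic) NSRect hotSpot;        // in the view's own coordinates
@property (nonatomic) BOOL hotSpotClicked;
- (BOOL)containsWindowPoint:(NSPoint)windowPoint;
@end

@implementation HotSpotView

// Converts a window-coordinate point to view coordinates and tests it
// against the hot spot.
- (BOOL)containsWindowPoint:(NSPoint)windowPoint {
    NSPoint local = [self convertPoint:windowPoint fromView:nil];
    return [self mouse:local inRect:self.hotSpot];
}

- (void)mouseDown:(NSEvent *)event {
    if ([self containsWindowPoint:event.locationInWindow]) {
        self.hotSpotClicked = YES;
        [self setNeedsDisplay:YES];
    } else {
        // Not interested: let the event continue up the responder chain.
        [super mouseDown:event];
    }
}

@end
```

Calling super for clicks outside the rectangle keeps the default behavior of passing an unhandled event to the next responder.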

Tablet events take a path to delivery to a view that is similar to that for mouse events. The NSWindow object representing the window in which the tablet event occurred forwards the event to the view under the cursor. However, there are two kinds of tablet events, proximity events and pointer events. The former are generally native tablet events (of type NSTabletProximity) generated when the stylus moves into and out of proximity to the tablet. Tablet pointer events occur between proximity-entering and proximity-leaving tablet events and indicate such things as stylus direction, pressure, and button click. Pointer events are generally subtypes of mouse events. Refer to Handling Tablet Events for more information.

For the paths taken by mouse-tracking and cursor-update events, see Other Event Dispatching.

The Path of Key Events

Processing keyboard input is by far the most complex part of event dispatch. The Application Kit goes to great lengths to ease this process for you, and in fact handling the key events that get to your custom objects is fairly straightforward. However, a lot happens to those events on their way from the hardware to the responder chain. Of particular interest are the types of key events that arrive in a Cocoa application as NSEvent objects and the order and way these types of events are handled.

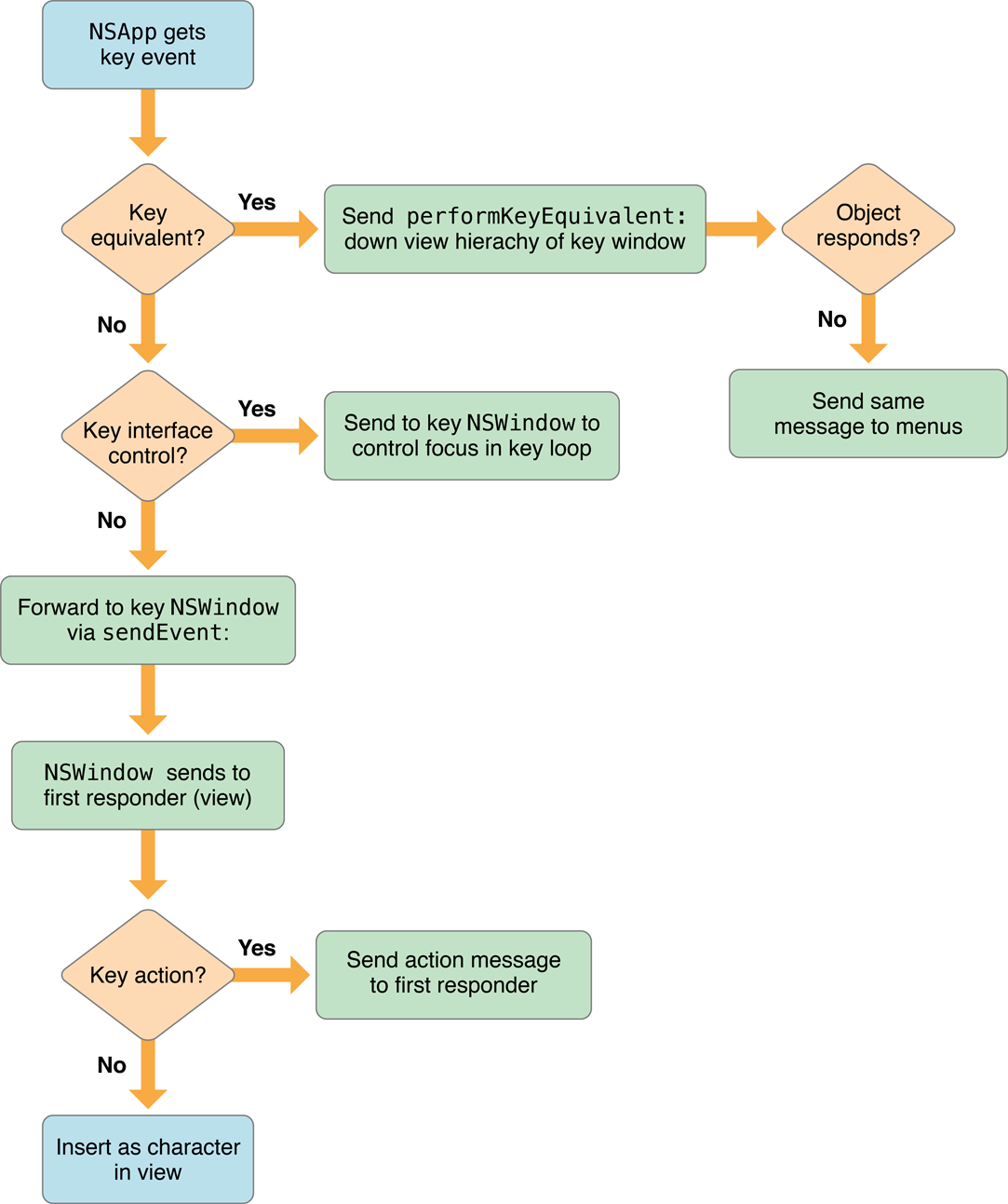

A Cocoa application evaluates each key event to determine what kind of key event it is and then handles it in an appropriate way. The path a key event can take before it is handled can be quite long. Figure 1-5 shows these potential paths.

The following list describes in detail the possible paths for key events, in the order in which an application evaluates each key event:

Key equivalents. A key equivalent is a key or key combination (usually a key modified by the Command key) that is bound typically to some menu item or control object in the application. Pressing the key combination simulates the action of clicking the control or choosing the menu item.

The application object handles key equivalents by going down the view hierarchy in the key window, sending each object a performKeyEquivalent: message until an object returns YES. If the message isn’t handled by an object in the view hierarchy, NSApp then sends it to the menus in the menu bar. Some Cocoa classes, such as NSButton, NSMenu, NSMatrix, and NSSavePanel, provide default implementations.

For more information, see Handling Key Equivalents.

Keyboard interface control. A keyboard interface control event manipulates the input focus among objects in a user interface. In the key window, NSWindow interprets certain keys as commands to move control to a different interface object, to simulate a mouse click on it, and so on. For example, pressing the Tab key moves input focus to the next object; Shift-Tab reverses the direction; pressing the space bar simulates a click on a button. The order of interface objects controlled through this mechanism is specified by a key view loop. You can set up the key view loop in Interface Builder and you can manipulate the key view loop programmatically through the setNextKeyView: and nextKeyView methods of NSView.

For more information, see Keyboard Interface Control.

Keyboard action. Unlike the action messages that controls send to their targets (see Action Messages), keyboard actions are commands (represented by methods defined by the NSResponder class) that are per-view functional interpretations of physical keystrokes (as identified by the constant returned by the characters method of NSEvent). In other words, keyboard actions are bound to physical keys through the key bindings mechanism described in Text System Defaults and Key Bindings. For example, pageDown:, moveToBeginningOfLine:, and capitalizeWord: are methods invoked by keyboard actions when the bound key is pressed. Such actions are sent to the first responder, and the methods handling these actions can be implemented in that view or in a superview further up the responder chain.

See Overriding the keyDown: Method for more information about the handling of keyboard actions.

Character (or characters) for insertion as text.

If the application object processes a key event and it turns out not to be a key equivalent or a keyboard interface control event, it then sends the event to the key window in a sendEvent: message. The window object invokes the keyDown: method in the first responder, from which the key event travels up the responder chain until it is handled. At this point, the key event can be one or more Unicode characters to be inserted into a view’s displayed text, a key or key combination to be specially interpreted, or a keyboard-action event.

See Handling Key Events for more information about how key events are dispatched and handled.
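For the first path in the list, key equivalents, a view can take part by overriding performKeyEquivalent:. The sketch below is one way to do that. ShortcutView, its didRefresh flag, and the choice of Command-R are assumptions made for illustration, and the modifier constants use the macOS 10.12 SDK names.

```objc
#import <Cocoa/Cocoa.h>

// Hypothetical view that claims Command-R as its own key equivalent.
@interface ShortcutView : NSView
@property (nonatomic) BOOL didRefresh;
@end

@implementation ShortcutView

- (BOOL)performKeyEquivalent:(NSEvent *)event {
    // Ignore device-dependent bits so that only the logical modifiers are compared.
    NSEventModifierFlags flags =
        event.modifierFlags & NSEventModifierFlagDeviceIndependentFlagsMask;
    if (flags == NSEventModifierFlagCommand &&
        [event.charactersIgnoringModifiers isEqualToString:@"r"]) {
        self.didRefresh = YES;
        return YES; // handled: the search for a handler stops here
    }
    // Not ours: let subviews (and, later, the menus) have a chance.
    return [super performKeyEquivalent:event];
}

@end
```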

Other Event Dispatching

An NSWindow object monitors tracking-rectangle events and dispatches these events directly to the owning object in mouseEntered: and mouseExited: messages. The owner is specified in the third parameter of the NSTrackingArea method initWithRect:options:owner:userInfo: and in the second parameter of the NSView method addTrackingRect:owner:userData:assumeInside:. Using Tracking-Area Objects describes how to set up tracking rectangles and handle the related events.
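A tracking area whose owner is the view itself might be set up as in this sketch. HoverView and its hovering and hoverArea properties are hypothetical, and rebuilding the area in updateTrackingAreas is one common arrangement rather than the only one.

```objc
#import <Cocoa/Cocoa.h>

// Hypothetical view that records whether the mouse is inside its bounds.
@interface HoverView : NSView
@property (nonatomic) BOOL hovering;
@property (nonatomic, strong) NSTrackingArea *hoverArea;
@end

@implementation HoverView

// Rebuild the area whenever the Application Kit asks, so that it keeps
// matching the current bounds.
- (void)updateTrackingAreas {
    [super updateTrackingAreas];
    if (self.hoverArea != nil) {
        [self removeTrackingArea:self.hoverArea];
    }
    self.hoverArea = [[NSTrackingArea alloc]
        initWithRect:self.bounds
             options:(NSTrackingMouseEnteredAndExited | NSTrackingActiveInKeyWindow)
               owner:self   // receives mouseEntered: and mouseExited:
            userInfo:nil];
    [self addTrackingArea:self.hoverArea];
}

- (void)mouseEntered:(NSEvent *)event {
    self.hovering = YES;
}

- (void)mouseExited:(NSEvent *)event {
    self.hovering = NO;
}

@end
```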

Periodic events (type NSPeriodic) are generated by the application at a specified frequency and placed in the event queue. However, unlike most other types of events, periodic events aren’t dispatched using the sendEvent: mechanism of NSApplication and NSWindow. Instead the object registering for the periodic events typically retrieves them in a modal event loop using the nextEventMatchingMask:untilDate:inMode:dequeue: method. See Other Types of Events for more information about periodic events.

Action Messages

The discussion so far has focused on event messages: messages arising from a device-related event such as a mouse click or a key-press. The Application Kit sends an event message of the appropriate form (for example, mouseDown: or keyDown:) to an NSResponder object for handling.

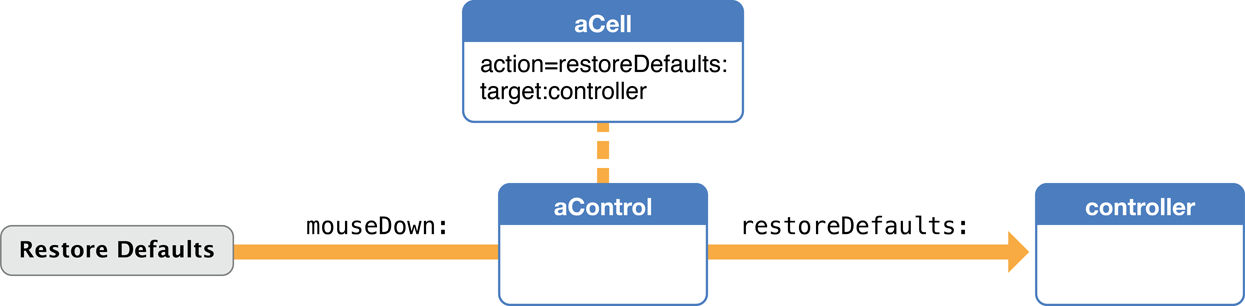

But NSResponder objects are also expected to handle another kind of message: action messages. Actions are commands that objects, usually NSControl or NSMenu objects, give to the application object to dispatch as messages to a particular target or to any target that’s willing to respond to them. The methods invoked by action messages have a specific signature: a single parameter holding a reference to the object initiating the action message; by convention, the name of this parameter is sender. For example,

- (void)moveToEndOfLine:(id)sender; // from NSResponder.h

Event and action methods are dispatched in different ways, by different methods. Nearly all events enter an application from the window server and are dispatched automatically by the sendEvent: method of NSApplication. Action messages, on the other hand, are dispatched by the sendAction:to:from: method of the global application object (NSApp) to their proper destinations.

As illustrated in Figure 1-6, action messages are generally sent as a secondary effect of an event message. When a user clicks a control object such as a button, two event messages (mouseDown: and mouseUp:) are sent as a result. The control and its associated cell handle the mouseUp: message (in part) by sending the application object a sendAction:to:from: message. The first argument is the selector identifying the action method to invoke. The second is the intended recipient of the message, called the target, which can be nil. The final argument is usually the object invoking sendAction:to:from:, thus indicating which object initiated the action message. The target of an action message can send messages back to sender to get further information. A similar sequence occurs for menus and menu items. For more on the architecture of controls and cells (and menus and menu items) see The Core App Design in Mac App Programming Guide.

The target of an action message is handled by the Application Kit in a special way. If the intended target isn’t nil, the action is simply sent directly to that object; this is called a targeted action message. In the case of an untargeted action message (that is, the target parameter is nil), sendAction:to:from: searches up the full responder chain (starting with the first responder) for an object that implements the action method specified. If it finds one, it sends the message to that object with the initiator of the action message as the sole argument. The receiver of the action message can then query the sender for additional information. You can find the recipient of an untargeted action message without actually sending the message using targetForAction:.
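The difference between looking up and sending an untargeted action can be sketched as follows. ReportDelegate, showReport:, and SendShowReport are hypothetical names. The example assumes that the application object already exists and that no window's responder chain handles the action, so the search falls through to the application object's delegate.

```objc
#import <Cocoa/Cocoa.h>

// Hypothetical application delegate that implements one action method.
@interface ReportDelegate : NSObject <NSApplicationDelegate>
@property (nonatomic, strong) id lastSender;
- (void)showReport:(id)sender;
@end

@implementation ReportDelegate

- (void)showReport:(id)sender {
    self.lastSender = sender; // the receiver can query the sender for details
}

@end

// Sends showReport: with a nil target, but only if some object would receive it.
static BOOL SendShowReport(id sender) {
    // targetForAction: performs the search without sending anything.
    id receiver = [NSApp targetForAction:@selector(showReport:)];
    if (receiver == nil) {
        return NO;
    }
    // A nil target makes this an untargeted action message.
    return [NSApp sendAction:@selector(showReport:) to:nil from:sender];
}
```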

Event messages form a well-known set, so NSResponder provides declarations and default implementations for all of them. Most action messages, however, are defined by custom classes and can’t be predicted. Even so, NSResponder does declare a number of keyboard action methods, such as pageDown:, moveToBeginningOfDocument:, and cancelOperation:. These action methods are typically bound to specific keys using the key-bindings mechanism and are meant to perform cursor movement, text operations, and similar operations.

A more general mechanism of action-message dispatch is provided by the NSResponder method tryToPerform:with:. This method checks the receiver to see if it responds to the selector provided, if so invoking the message. If not, it sends tryToPerform:with: to its next responder. NSWindow and NSApplication override this method to include their delegates, but they don’t link individual responder chains in the way that the sendAction:to:from: method does. Similar to tryToPerform:with: is doCommandBySelector:, which takes a method selector and tries to find a responder that implements it. If none is found, the method causes the hardware to beep.
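The hand-off from one responder to the next can be seen with two views and no window at all, as in this sketch. StatusContainerView, showStatus:, and ReportStatusFrom are hypothetical names introduced for the example.

```objc
#import <Cocoa/Cocoa.h>

// Hypothetical container that knows how to display a status string.
@interface StatusContainerView : NSView
@property (nonatomic, copy) NSString *status;
- (void)showStatus:(id)text;
@end

@implementation StatusContainerView

- (void)showStatus:(id)text {
    self.status = [text description];
}

@end

// Starts at `origin` and walks next responders until one responds to showStatus:.
// Returns NO if no responder along the way implements the method.
static BOOL ReportStatusFrom(NSView *origin, NSString *text) {
    return [origin tryToPerform:@selector(showStatus:) with:text];
}
```

If a plain NSView is a subview of a StatusContainerView, calling ReportStatusFrom on the subview reaches the container, because the subview does not respond to showStatus: and forwards the attempt to its next responder.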

Responders

A responder is an object that can receive events, either directly or through the responder chain, by virtue of its inheritance from the NSResponder class. NSApplication, NSWindow, NSDrawer, NSWindowController, NSView and the many descendants of these classes in the Application Kit inherit from NSResponder. This class defines the programmatic interface for the reception of event messages and many action messages. It also defines the general structure of responder behavior. Within the responder chain there is a first responder and a sequence of next responders.

For more on the responder chain, see The Responder Chain.

First Responders

A first responder is typically a user-interface object that the user selects or activates with the mouse or keyboard. It is usually the first object in a responder chain to receive an event or action message. An NSWindow object’s first responder is initially itself; however, you can set, programmatically and in Interface Builder, the object that is made first responder when the window is first placed on-screen.

When an NSWindow object receives a mouse-down event, it automatically tries to make the NSView object under the event the first responder. It does so by asking the view whether it wants to become first responder, using the acceptsFirstResponder method defined by this class. This method returns NO by default; responder subclasses that need to be first responder must override it to return YES. The acceptsFirstResponder method is also invoked when the user changes the first responder through the keyboard interface control feature.
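In code, opting in is a one-method override. CanvasView is a hypothetical class name.

```objc
#import <Cocoa/Cocoa.h>

// Hypothetical view that is willing to receive key events and action messages.
@interface CanvasView : NSView
@end

@implementation CanvasView

// The inherited implementation returns NO, so a custom view must opt in.
- (BOOL)acceptsFirstResponder {
    return YES;
}

@end
```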

You can programmatically change the first responder by sending makeFirstResponder: to an NSWindow object. This message initiates a kind of protocol in which one object loses its first responder status and another gains it. See Setting the First Responder for further information.

An NSPanel object presents a variation of first-responder behavior that permits panels to present a user interface that doesn’t take away key focus from the main window. If the panel object representing an inactive window and returning YES from becomesKeyOnlyIfNeeded receives a mouse-down event, it attempts to make the view object under the mouse pointer the first responder, but only if that object returns YES in acceptsFirstResponder and needsPanelToBecomeKey.

Mouse-moved events (type NSMouseMoved) are always sent to the first responder, not to the view under the mouse.

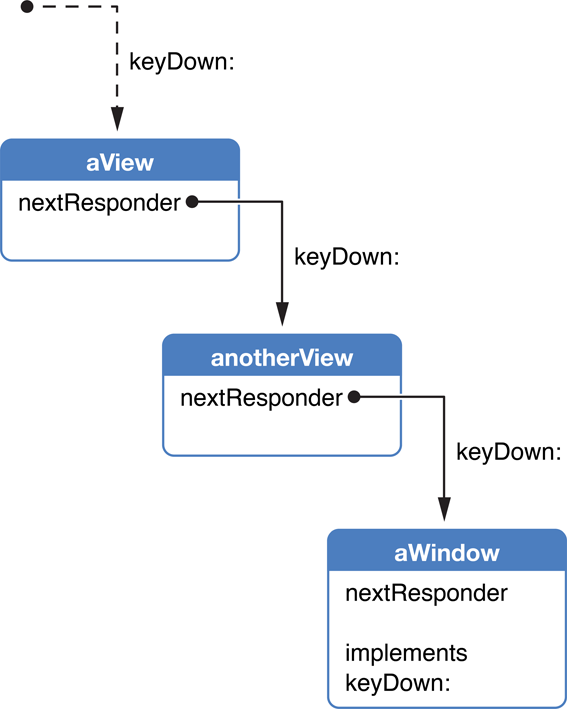

Next Responders

Every responder object has a built-in capability for getting the next responder up the responder chain. The nextResponder method, which returns this object, is the essential mechanism of the responder chain. Figure 1-7 shows the sequence of next responders.

A view’s next responder is always its superview—most of the responder chain, in fact, comprises the views from a window’s first responder up to its content view. When you create a window or add subviews to existing views, either programmatically or in Interface Builder, the Application Kit automatically hooks up the next responders in the responder chain. The addSubview: method of NSView automatically sets the receiver as the new subview’s superview. If you interpose a different responder between views, be sure to verify and potentially fix the responder chain after adding or removing views from the view hierarchy.

The Responder Chain

The responder chain is a linked series of responder objects to which an event or action message is applied. When a given responder object doesn’t handle a particular message, the object passes the message to its successor in the chain (that is, its next responder). This allows responder objects to delegate responsibility for handling the message to other, typically higher-level objects. The Application Kit automatically constructs the responder chain as described below, but you can insert custom objects into parts of it using the NSResponder method setNextResponder: and you can examine it (or traverse it) with nextResponder.
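Both operations, traversing the chain and inserting into it, are sketched below. ResponderChainFrom and InsertResponderAfter are hypothetical helpers written for this example.

```objc
#import <Cocoa/Cocoa.h>

// Collects `start` and every next responder after it, in order.
static NSArray<NSResponder *> *ResponderChainFrom(NSResponder *start) {
    NSMutableArray<NSResponder *> *chain = [NSMutableArray array];
    for (NSResponder *r = start; r != nil; r = r.nextResponder) {
        [chain addObject:r];
    }
    return chain;
}

// Splices `extra` into the chain directly after `existing`.
static void InsertResponderAfter(NSResponder *extra, NSResponder *existing) {
    extra.nextResponder = existing.nextResponder; // keep the rest of the chain
    existing.nextResponder = extra;
}
```

Because the Application Kit maintains the links between views when the view hierarchy changes, a responder spliced in this way may need to be reinserted after views are added or removed.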

An application can contain any number of responder chains, but only one is active at any given time. The responder chain is different for event messages and action messages, as described in the following sections.

Responder Chain for Event Messages

Nearly all event messages apply to a single window’s responder chain—the window in which the associated user event occurred. The default responder chain for event messages begins with the view that the NSWindow object first delivers the message to. The default responder chain for a key event message begins with the first responder in a window; the default responder chain for a mouse or tablet event begins with the view on which the user event occurred. From there the event, if not handled, proceeds up the view hierarchy to the NSWindow object representing the window itself. The first responder is typically the “selected” view object within the window, and its next responder is its containing view (also called its superview), and so on up to the NSWindow object. If an NSWindowController object is managing the window, it becomes the final next responder. You can insert other responders between NSView objects and even above the NSWindow object near the top of the chain. These inserted responders receive both event and action messages. If no object is found to handle the event, the last responder in the chain invokes noResponderFor:, which for a key-down event simply beeps. Event-handling objects (subclasses of NSWindow and NSView) can override this method to perform additional steps as needed.

Responder Chain for Action Messages

For action messages, the Application Kit constructs a more elaborate responder chain that varies according to two factors:

Whether the application is based on the document architecture and, if it isn't, whether it uses NSWindowController objects for its windows

Whether the application is currently displaying a key window as well as a main window

Action messages have a more elaborate responder chain than do event messages because actions require a more flexible runtime mechanism for determining their targets. They are not restricted to a single window, as are event messages.

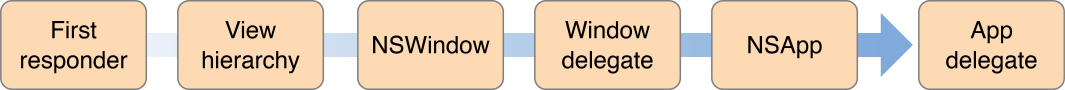

The simplest case is an active non-document-based window that has no associated panel or secondary window displayed—in other words, a main window that is also the key window. In this case, the responder chain is the following:

The main window’s first responder and the successive responder objects up the view hierarchy

The main window itself

The main window’s delegate (which need not inherit from NSResponder)

The application object, NSApp

The application object’s delegate (which need not inherit from NSResponder)

This chain is shown graphically in Figure 1-8.

As this sequence indicates, the NSWindow object and the NSApplication object give their delegates a chance to handle action messages as though they were responders, even though a delegate isn’t formally in the responder chain (that is, a nextResponder message to a window or application object doesn’t return the delegate).

When an application is displaying both a main window and a key window, the responder chains of both windows can be involved in an action message. As explained in Window Layering and Types of Windows, the main window is the frontmost document or application window. Often main windows also have key status, meaning they are the current focus of user input. But a main window can have a secondary window or panel associated with it, such as the Find panel or an Info window showing details of a selection in the document window. When this secondary window is the focus of user input, then it is the key window.

When an application has a main window and a separate key window displayed, the responder chain of the key window gets first crack at action messages, and the responder chain of the main window follows. The full responder chain comprises these responders and delegates:

The key window’s first responder and the successive responder objects up the view hierarchy

The key window itself

The key window’s delegate (which need not inherit from NSResponder)

The main window’s first responder and the successive responder objects up the view hierarchy

The main window itself

The main window’s delegate (which need not inherit from NSResponder)

The application object, NSApp

The application object’s delegate (which need not inherit from NSResponder)

As you can see, the responder chains for the key window and the main window are identical with the global application object and its delegate being the responders at the end of the main window's responder chain. This design is true for the responder chains of the other kinds of applications: those based on the document architecture and those that use an NSWindowController object for window management. In the latter case, the default main-window responder chain consists of the following responders and delegates:

The main window’s first responder and the successive responder objects up the view hierarchy

The main window itself

The window's NSWindowController object (which inherits from NSResponder)

The main window’s delegate

The application object, NSApp

The application object's delegate

Figure 1-9 shows the responder chain of a non-document-based application that uses an NSWindowController object.


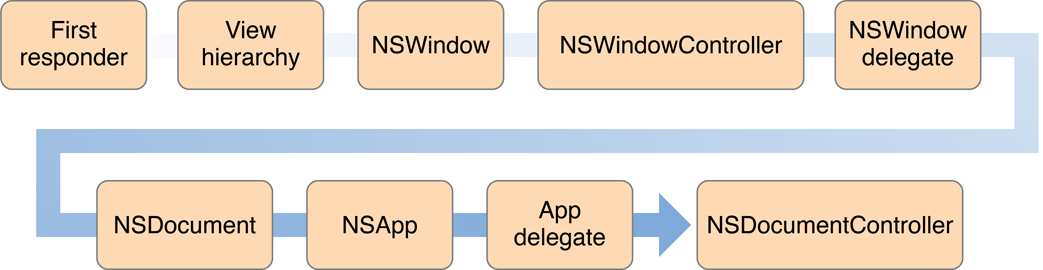

For document-based applications, the default responder chain for the main window consists of the following responders and delegates:

The main window’s first responder and the successive responder objects up the view hierarchy

The main window itself

The window's NSWindowController object (which inherits from NSResponder)

The main window’s delegate

The NSDocument object (if different from the main window’s delegate)

The application object, NSApp

The application object's delegate

The application's document controller (an NSDocumentController object, which does not inherit from NSResponder)

Figure 1-10 shows the responder chain of a document-based application.

Other Uses

The responder chain is used by three other mechanisms in the Application Kit:

Automatic menu item and toolbar item enabling: In automatically enabling and disabling a menu item with a nil target, an NSMenu searches different responder chains depending on whether the menu object represents the application menu or a context menu. For the application menu, NSMenu consults the full responder chain (that is, first the key window, then the main window) to find an object that implements the menu item’s action method and (if it implements it) returns YES from validateMenuItem:. For a context menu, the search is restricted to the responder chain of the window in which the context menu was displayed, starting with the associated view.

Enabling and disabling of toolbar items makes use of the responder chain in a fashion identical to that of menu items. In this case, the key validation method is validateToolbarItem:.

For more on automatic menu-item enabling, see Enabling Menu Items; for more on validation of toolbar items, see Validating Toolbar Items.

Services eligibility: Similarly, the Services facility passes validRequestorForSendType:returnType: messages along the full responder chain to check for objects that are eligible for services offered by other applications.

For further information, see Services Implementation Guide.

Error presentation: The Application Kit uses a modified version of the responder chain for error handling and error presentation, centered upon the NSResponder methods presentError:modalForWindow:delegate:didPresentSelector:contextInfo: and presentError:.

For more information on the error-responder chain, see Error Handling Programming Guide.
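For the first of these mechanisms, a responder that implements a menu item's action can also decide when the item is enabled. The sketch below assumes a hypothetical SelectionView with a hasSelection flag and a deleteSelection: action. It omits any protocol declaration because the way validateMenuItem: is declared differs between SDK versions.

```objc
#import <Cocoa/Cocoa.h>

// Hypothetical view whose Delete menu item is enabled only while something is selected.
@interface SelectionView : NSView
@property (nonatomic) BOOL hasSelection;
- (IBAction)deleteSelection:(id)sender;
- (BOOL)validateMenuItem:(NSMenuItem *)menuItem;
@end

@implementation SelectionView

- (IBAction)deleteSelection:(id)sender {
    self.hasSelection = NO; // stand-in for removing the selected content
}

// Consulted during automatic enabling for items whose action this object implements.
- (BOOL)validateMenuItem:(NSMenuItem *)menuItem {
    if (menuItem.action == @selector(deleteSelection:)) {
        return self.hasSelection;
    }
    return YES; // other actions implemented here stay enabled
}

@end
```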

Copyright © 2016 Apple Inc. All Rights Reserved. Updated: 2016-09-13