
Meet Apple Spatial Audio Format and APAC

Discover how Apple Spatial Audio Format (ASAF) brings together Higher Order Ambisonics and object-based audio to create spatial precision that complements Apple Immersive Video. This session also introduces APAC, a highly efficient codec designed to play back high-resolution ASAF mixes at low bitrates while maintaining immersive detail and clarity.

This session was originally presented as part of the Meet with Apple activity “Create immersive media experiences for visionOS - Day 2.” Watch the full video for more insights and related sessions.


Good morning. I'm Deep, and I'm the lead immersive audio architect at Apple. This year, our team publicly released new end-to-end immersive audio technology involving a new audio format, the Apple Spatial Audio Format, or ASAF, and a new codec called APAC.

Today, I'm excited to give you an overview of this new format and the new codec.

I'll also dive a little into the workflow and the tooling used to create content in this new format.

Let's explore the format a little bit.

The format supports content that combines Higher Order Ambisonics (HOA), objects, and metadata. HOA microphone arrays allow accurate capture of 3D audio, and objects allow creatives to mold the sound field with infinite spatial resolution. Furthermore, the format allows very rich combinations of these to provide the high spatial resolution required to match human acuity. Most of the content on the Vision Pro, for example, is made up of fifth-order Ambisonics along with many objects. That's unprecedented in the industry in terms of spatial resolution. Finally, the format uses a metadata-driven renderer that generates acoustic cues on the fly during playback. It adapts to changes in the position and orientation of the object, the listener, or both, presenting an amazing level of acoustic detail to the listener. None of these capabilities is available in existing spatial audio formats.
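For a sense of scale: a full-sphere Ambisonic signal of order N uses (N + 1)² channels. This is the standard relationship for 3D Ambisonics; the session itself doesn't spell it out. The short sketch below just tabulates it, so fifth order works out to 36 channels and seventh order to 64.

```python
def hoa_channel_count(order: int) -> int:
    """Channels needed for a full-sphere (3D) Ambisonic signal of the given order: (N + 1) ** 2."""
    if order < 0:
        raise ValueError("Ambisonic order must be non-negative")
    return (order + 1) ** 2


for order in (1, 3, 5, 7):
    print(f"order {order}: {hoa_channel_count(order)} channels")
```

Add the 15 objects mentioned later in the session to a fifth-order bed, and you get 36 + 15 = 51 signals to transport.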

All of these are designed to meet the incredibly high bar for creating realistic, immersive audio on a device like the Vision Pro. Let's discuss why that is. As with any audiovisual content, there is a need to create an acoustic scene that matches the visuals. Here, however, even slight deviations and incongruences are easily discernible. In essence, the requirement is to teleport the audience into that scene and make them feel naturally present in that new soundscape. This is not an easy task.

This is very different from the audio system in a theater like this one. A movie played on this screen, for example, would be at a significant distance from the viewer. Incongruences in the precise position and distance of audio relative to the corresponding video are difficult to discern, and at this distance the visual cues often override incorrect positions of audio sources anyway. Also, here the viewer is never present in the scene; the viewer is always aware of their immediate surroundings. And finally, consider that in a theater like this, sounds always emanate from loudspeakers, which are, of course, outside the listener. These sounds are therefore externalized by definition. All existing spatial audio formats were primarily designed to be played this way, that is, over loudspeakers, where externalization is guaranteed.

But when listening over headphones, externalization is not guaranteed without significant accommodations in the sound design, accurate transport through a specially designed codec, and adaptive rendering. Without them, the audio experience tends to be mostly internalized, or inside the head. The new format, ASAF, on the other hand, has been designed primarily for headphone playback. We've added a level of detail and accuracy that produces the natural and convincingly externalized experience you've all heard in Apple Immersive Video (AIV) content on the Vision Pro by now.

But before I go too much further, let's quickly formalize what I mean by immersive audio.

I've used the terms natural and externalized, and these are the two principal dimensions that lead to an immersive audio experience.

There's no doubt that the sense of immersion is most pervasive when the spatial experience is completely natural and convincingly externalized.

But let's break that down some more. First, naturalness. What is it really? It's essentially matching the audio experience to our internal and often subconscious expectation of what the audio experience should be. There's almost an internal plausibility metric that dictates that sense of naturalness and externalization.

This matching is achieved when key acoustic cues in the rendered audio are accurate and non-conflicting. This is fairly intuitive and is backed up by recent research.

To summarize, in order to get compelling immersive experiences, acoustic cues need to be presented accurately. For now, let's take one very important cue, early reflections, in a common real-world scenario and consider the challenge of rendering it accurately.

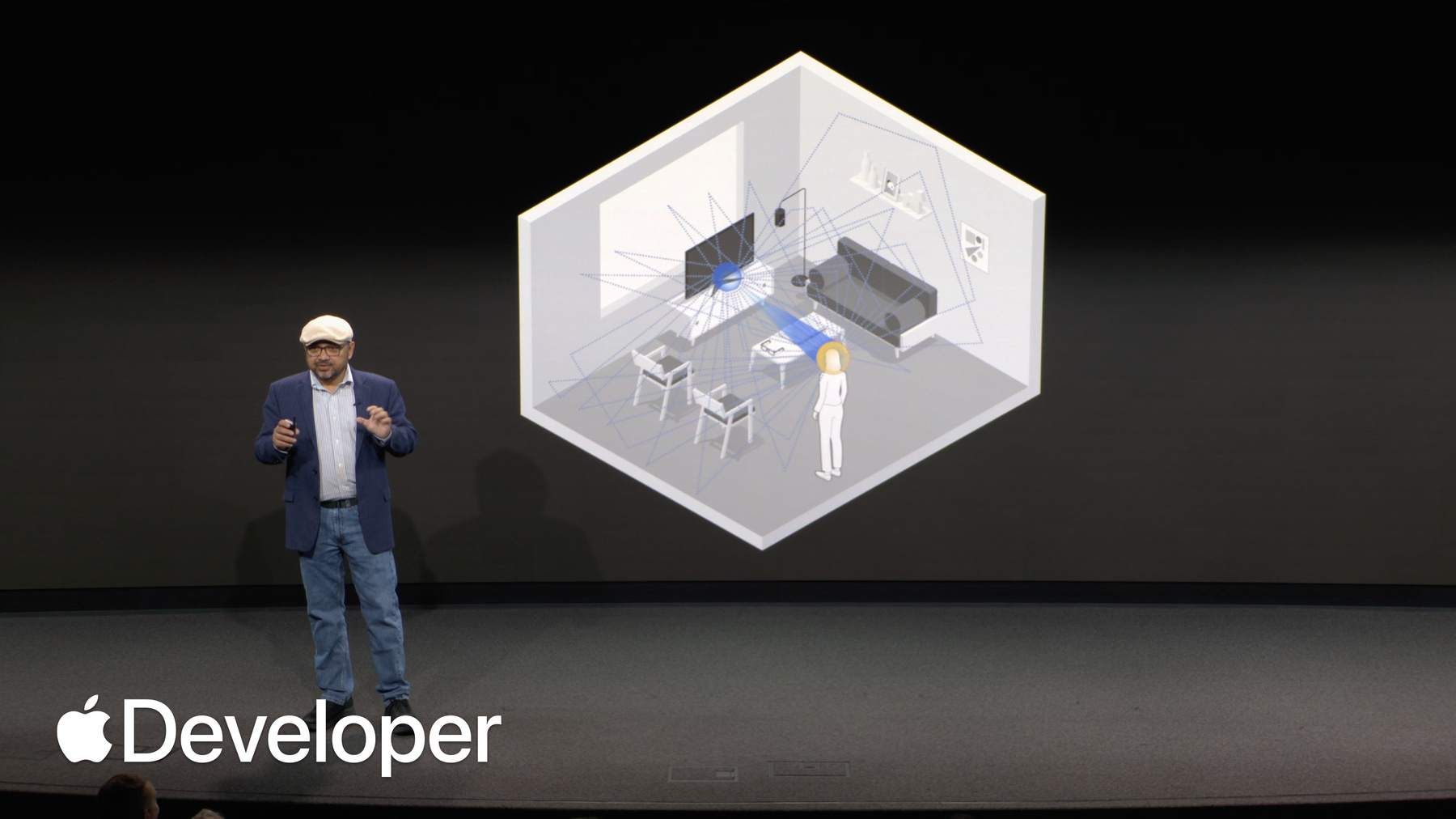

I'll start with a panel, of sorts, that's present in some form in all spatial audio content creation tools. It depicts a listener in a room as seen from above. I'll visualize this in 2D for now.

The walls of the room are outlined here in white, and there's some furniture, such as a couch and a table. The listener, labeled L, is standing in the center of the room, with a directional arrow indicating their orientation, and an audio object, labeled O in blue, is about to make a sound. The directional arrow on the object indicates its orientation.

The direct sound from the object travels to the listener's left and right ears, and if the listener rotates their head, the angles of the direct sound change. Apple already provides best-in-class adaptive rendering of this effect by tracking head rotation as well as modeling individual head shapes. We've been doing that for a number of years, but the direct sound is just one factor.

In reality, people don't just listen to the direct path. A listener hears the sound as a combination of the direct path and the reflections from surfaces in the local vicinity, and this is a lot more difficult both to represent accurately in the mix and to render. Consider the reflection from the closest wall. It's useful to think of it as a virtual object that reflects the sound from the original source to the listener's ears. However, notice what happens if the listener rotates their head: the position of the virtual object relative to the listener changes.

If that change is not accounted for, an incorrect acoustic cue is presented to the listener. Even though it might look subtle in isolation, it contributes to an overall inaccuracy that will, at best, reduce the immersion effect for the listener, that is, reduce naturalness and externalization, essentially reminding the listener that they're not really part of the scene.

And remember, unless the virtual soundscape is in free space, it's not just one virtual object that you have to account for. Reflections come from many different reflective points.

What I showed illustrates that these reflections change as a function of listener orientation. As such, they cannot be baked into the content as a constant. Baking in these effects, however, is the norm in existing spatial audio formats.
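The session doesn't say how the ASAF renderer computes reflections. Purely as an illustration, the textbook image-source method treats a first-order wall reflection as a mirror image of the source behind the wall. That image is fixed in the room, but its angle relative to where the listener is facing shifts one-for-one with head yaw, which is why a baked-in reflection goes stale as soon as the listener turns. The coordinates, wall position, and function names below are hypothetical.

```python
import math


def mirror_across_wall_x(source: tuple[float, float], wall_x: float) -> tuple[float, float]:
    """Image-source position for a flat wall along the line x = wall_x (2D, seen from above)."""
    sx, sy = source
    return (2.0 * wall_x - sx, sy)


def head_relative_azimuth_deg(listener: tuple[float, float], head_yaw_deg: float,
                              point: tuple[float, float]) -> float:
    """Azimuth of `point` relative to the listener's facing direction, in degrees.

    World angles are measured counterclockwise from the +x axis; the result is wrapped to [-180, 180).
    """
    dx = point[0] - listener[0]
    dy = point[1] - listener[1]
    world_az = math.degrees(math.atan2(dy, dx))
    return (world_az - head_yaw_deg + 180.0) % 360.0 - 180.0


listener = (0.0, 0.0)
source = (2.0, 1.0)
image = mirror_across_wall_x(source, wall_x=4.0)  # virtual object behind the wall
for yaw in (90.0, 45.0, 0.0):
    az = head_relative_azimuth_deg(listener, yaw, image)
    print(f"head yaw {yaw:5.1f} deg -> reflection arrives at {az:6.1f} deg")
```

The image position never changes in this loop; only the head-relative angle does, so a renderer has to recompute it for every new head orientation.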

The reflections also change if the object moves, or if both listener and object move. And this example is in only two dimensions, while the real effect is three-dimensional: reflections emanate not just from the walls and the furniture but also from the ceiling and the floor. If the mixer stored the effect in, say, a 7.1-channel bed, the contributions of the floor and ceiling would be seriously underrepresented.
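Extending the same illustrative image-source idea to a rectangular room in three dimensions shows why height matters. With the source and listener at the same ear height, the four wall images stay at 0° elevation, while the floor and ceiling images arrive from steep angles below and above. A conventional 7.1 layout places all of its main loudspeakers around ear level, so it has no channels at those elevations. The room dimensions, positions, and function names are made up for this sketch.

```python
import math

# Surfaces perpendicular to each axis: (surface at 0, surface at the room dimension).
SURFACES = {0: ("left wall", "right wall"), 1: ("front wall", "back wall"), 2: ("floor", "ceiling")}


def first_order_images(source: tuple[float, float, float],
                       room_dims: tuple[float, float, float]) -> dict[str, tuple[float, ...]]:
    """First-order image sources for a shoebox room spanning [0, room_dims[i]] on each axis."""
    images = {}
    for axis, (low_name, high_name) in SURFACES.items():
        low = list(source)
        low[axis] = -source[axis]                          # mirror across the plane at 0
        high = list(source)
        high[axis] = 2.0 * room_dims[axis] - source[axis]  # mirror across the far plane
        images[low_name] = tuple(low)
        images[high_name] = tuple(high)
    return images


def elevation_deg(listener: tuple[float, ...], point: tuple[float, ...]) -> float:
    """Elevation of `point` as seen from `listener`, in degrees (z is up)."""
    dx, dy, dz = (p - q for p, q in zip(point, listener))
    return math.degrees(math.atan2(dz, math.hypot(dx, dy)))


room = (6.0, 5.0, 3.0)       # width, depth, height in metres (hypothetical)
listener = (3.0, 2.5, 1.7)   # ears at 1.7 m
source = (4.5, 3.5, 1.7)     # source at the same height
for name, image in first_order_images(source, room).items():
    print(f"{name:>11}: elevation {elevation_deg(listener, image):6.1f} deg")
```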

It's not just the reflections, however. There are many other acoustic cues that the listener subconsciously expects to match. For each object, there are distance cues, as well as the radiation pattern and orientation of each sound source, all of which change when the position of the object, the listener, or both changes over time.
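Distance cues are a good example of something a renderer can compute from object metadata at playback time. The session doesn't describe ASAF's distance model, so the sketch below uses only two textbook cues: inverse-distance level falloff (about 6 dB quieter per doubling of distance) and propagation delay, with an assumed speed of sound of 343 m/s. Real renderers also rely on cues such as the direct-to-reverberant ratio and air absorption, which are omitted here.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees C


def distance_cues(distance_m: float, ref_distance_m: float = 1.0) -> tuple[float, float]:
    """Return (level change in dB relative to ref_distance_m, propagation delay in ms)."""
    if distance_m <= 0 or ref_distance_m <= 0:
        raise ValueError("distances must be positive")
    level_db = -20.0 * math.log10(distance_m / ref_distance_m)  # inverse-distance (1/r) law
    delay_ms = 1000.0 * distance_m / SPEED_OF_SOUND_M_S
    return level_db, delay_ms


for d in (1.0, 2.0, 4.0, 8.0):
    level_db, delay_ms = distance_cues(d)
    print(f"{d:4.1f} m: {level_db:6.1f} dB, {delay_ms:5.1f} ms")
```

Because both values depend on the live distance between object and listener, they change whenever either one moves, which is the kind of cue that a single baked-in mix can't track.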

It would be almost impossible to account for all of these precisely by hand, for hundreds of objects, when creating an immersive audio mix. And even if you do manage to bake in specific effects during content creation, you're at best accounting for only one possible listener position and orientation; the acoustics cannot adapt. This leads to unnaturalness and reduced externalization of the audio, which breaks immersion for the audience.

Since no existing format supported this level of accuracy and adaptation, or the required high spatial resolution, we built ASAF.

To summarize, the new format allows metadata-driven, real-time rendering of critical acoustic cues that adapt to the listener's position and orientation. For object-based audio, these acoustic cues are not baked in.

Unlike other formats where the effects are manually generated and baked in during content creation, the effects in ASAF are computationally generated during playback.
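To make "metadata-driven" concrete: instead of storing a precomputed result, the mix stores parameters, such as an object's position over time, and the renderer evaluates them during playback. The keyframe structure and linear interpolation below are a hypothetical minimal sketch and are not the ASAF metadata schema. A renderer would combine the interpolated position with the current listener pose to derive cues like the reflections and distance effects discussed above.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class PositionKeyframe:
    """Hypothetical keyframe: object position in metres at a time in seconds (not the ASAF schema)."""
    time_s: float
    position: tuple[float, float, float]


def position_at(keyframes: list[PositionKeyframe], t: float) -> tuple[float, ...]:
    """Linearly interpolate an object's position at playback time t.

    Keyframes must be sorted by time; before the first or after the last keyframe,
    the position is held constant.
    """
    if not keyframes:
        raise ValueError("need at least one keyframe")
    times = [k.time_s for k in keyframes]
    i = bisect_right(times, t)
    if i == 0:
        return keyframes[0].position
    if i == len(keyframes):
        return keyframes[-1].position
    k0, k1 = keyframes[i - 1], keyframes[i]  # k0.time_s <= t < k1.time_s
    a = (t - k0.time_s) / (k1.time_s - k0.time_s)
    return tuple(p0 + a * (p1 - p0) for p0, p1 in zip(k0.position, k1.position))


path = [PositionKeyframe(0.0, (0.0, 1.0, 0.0)), PositionKeyframe(2.0, (2.0, 1.0, 0.0))]
print(position_at(path, 0.5), position_at(path, 1.5))
```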

And all of this is powered by a new spatial renderer built into all Apple platforms. This means that the renderer used during content creation, say on macOS, maintains artistic integrity on the Vision Pro. These capabilities are a key part of why this format can help you tell such realistic stories, and when it's done correctly, the listener is immersed in the scene, dissociated from both the awareness of the device and their immediate surroundings.

Apple has already been using this in production for all of its Apple Immersive Video content. However, the format is only one part of the technology. Most of the content shown here actually delivers fifth-order Ambisonics simultaneously with 15 objects and metadata to the end device. That's an industry-leading 51 LPCM signals.

To transport this high resolution Spatial Audio, Apple developed a brand new spatial codec.

It's called the Apple Positional Audio Codec, or APAC, and the primary motivation for developing it was to deliver high-resolution ASAF content. APAC does this with very high efficiency, that is, while keeping the bitrate low. And it isn't just for visionOS: APAC is available on all Apple platforms except watchOS.

Take that content configuration, fifth-order Ambisonics plus 15 objects at 32 bits per sample: that's an 81-megabit-per-second payload. With APAC, we can encode it at 1 megabit per second with excellent quality. That's roughly an 80-to-1 compression ratio, or about 20 kbps per channel. The total bitrate can go as low as 64 kbps while still providing head-tracked spatial audio experiences. For comparison, the bitrate for transparent stereo music is 256 kbps, or 128 kbps per channel.
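The arithmetic behind these figures can be checked with a few lines. The session doesn't state the sample rate, so 48 kHz below is an assumption; with it, 51 signals at 32 bits come to roughly 78 Mbit/s raw, which puts the 1 Mbit/s APAC stream in the same high-70s-to-80s-to-1 range and at about 20 kbit/s per signal. A different sample rate would shift the raw figure somewhat.

```python
def raw_pcm_bitrate_bps(num_signals: int, bits_per_sample: int, sample_rate_hz: int) -> int:
    """Uncompressed LPCM bitrate: signals x bits per sample x samples per second."""
    return num_signals * bits_per_sample * sample_rate_hz


HOA_ORDER = 5
NUM_OBJECTS = 15
BITS_PER_SAMPLE = 32
SAMPLE_RATE_HZ = 48_000        # assumption: the session does not state the sample rate
CODED_BITRATE_BPS = 1_000_000  # the 1 Mbit/s APAC figure quoted in the session

num_signals = (HOA_ORDER + 1) ** 2 + NUM_OBJECTS  # 36 HOA channels + 15 objects
raw_bps = raw_pcm_bitrate_bps(num_signals, BITS_PER_SAMPLE, SAMPLE_RATE_HZ)
compression_ratio = raw_bps / CODED_BITRATE_BPS
per_signal_kbps = CODED_BITRATE_BPS / num_signals / 1000

print(f"{num_signals} signals, raw {raw_bps / 1e6:.1f} Mbit/s")
print(f"about {compression_ratio:.0f}:1, about {per_signal_kbps:.1f} kbit/s per signal")
```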

So how can you take advantage of the new format and the new codec? Well, let's go over the end to end workflow.

To start, let's go over the tools to actually create ASAF content. Currently there are two. The first is the just-released set of Apple AAX plug-ins for Pro Tools, called the ASAF Production Suite. You can download the suite for free from Apple's developer portal: go to Download and search for Spatial Audio, or scan this QR code if you like.

And then there is Blackmagic's Fairlight in DaVinci Resolve Studio. Both of these allow the creation of up to seventh-order Ambisonics, in addition to hundreds of ASAF objects and metadata.

Both of these tools provide a 3D panner that positions audio objects in 3D space at any distance, and a video player, synced with the panner, that overlays the objects on the video. They also let you describe the type of acoustic environment the objects are located in.

They let you provide a radiation pattern, as well as a look direction, for each object.

You can give an object a width and a height.

You can convert the object to HOA via room simulation if desired. Room simulation for HOA gives it the accurate reverb that, as we discussed, is critical for externalization. HOA signals can be manipulated in various ways as well. You can tag objects as music and effects, dialog, or interactive elements, and finally save the entire mix to a Broadcast Wave File or, optionally, to an APAC-encoded MP4 file.
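The session doesn't specify how ASAF parameterizes radiation patterns. As a hedged illustration, one common first-order family of directivity patterns is g(θ) = α + (1 − α)·cos θ, where θ is the angle between the object's look direction and the direction toward the listener: α = 1 is omnidirectional, 0.5 is a cardioid, and 0 is a figure-of-eight. The function name and the α parameter are assumptions made for this sketch.

```python
import math

Vec3 = tuple[float, float, float]


def first_order_directivity_gain(look_dir: Vec3, source_pos: Vec3, listener_pos: Vec3,
                                 alpha: float = 0.5) -> float:
    """Linear gain of the pattern alpha + (1 - alpha) * cos(theta).

    theta is the angle between the object's look direction and the direction from the
    object to the listener. alpha = 1 is omni, 0.5 cardioid, 0 figure-of-eight.
    """
    to_listener = [l - s for l, s in zip(listener_pos, source_pos)]
    norm_t = math.sqrt(sum(c * c for c in to_listener))
    norm_l = math.sqrt(sum(c * c for c in look_dir))
    if norm_t == 0 or norm_l == 0:
        raise ValueError("look direction and object-to-listener vector must be non-zero")
    cos_theta = sum(a * b for a, b in zip(look_dir, to_listener)) / (norm_t * norm_l)
    return alpha + (1.0 - alpha) * cos_theta


source = (0.0, 0.0, 0.0)
listener = (0.0, 2.0, 0.0)
for look in ((0.0, 1.0, 0.0), (1.0, 0.0, 0.0), (0.0, -1.0, 0.0)):  # toward, sideways, away
    print(look, first_order_directivity_gain(look, source, listener))
```

Because a reflection leaves the object in a different direction than the direct sound, the same pattern would in principle be evaluated separately toward each reflection path, and all of them change when the object turns.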

Later today, the Blackmagic team will talk about Fairlight, the professional audio production software that supports ASAF and is completely integrated with Apple Immersive Video. The Apple team will also briefly discuss the Apple Pro Tools plugins for creating immersive audio.

Now I'll go through the end-to-end content creation and distribution process for ASAF. Content is created by the creative mixer, who brings all kinds of microphone recordings and stems into the tool.

This produces a set of PCM signals representing a combination of objects, Ambisonics, and channels, as well as a set of time-varying metadata such as position, direction, and room acoustics.

The creative mixer listens to the mix by rendering the PCM signals and the metadata. Once the mixer is done, the result comprises the PCM signals, the metadata, and the renderer. Because the renderer is assumed to be present on the playback device, the audio format itself is really made up of the PCM signals and the metadata. These are saved in a Broadcast Wave File, then encoded using APAC and subsequently converted using HLS tools to a format that's suitable for streaming.

On the playback device, the resulting fragmented MP4 is decoded into PCM signals and metadata, which are ingested by the adaptive renderer. The renderer also receives the position and orientation of the listener.

This then allows the immersive audio experience to be rendered.
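The session doesn't describe the renderer's internals. One standard way to apply head tracking to an Ambisonic bed is to counter-rotate the sound field by the listener's orientation before decoding. The sketch shows only the simplest case: a yaw rotation of a single first-order frame in ACN channel order (W, Y, Z, X) with SN3D normalization. A fifth-order bed would need the larger per-order rotation matrices, and the function name here is hypothetical.

```python
import math


def rotate_foa_yaw(frame: tuple[float, float, float, float],
                   head_yaw_rad: float) -> tuple[float, float, float, float]:
    """Counter-rotate one first-order Ambisonics frame for a head yaw of head_yaw_rad.

    Frame layout is ACN order (W, Y, Z, X), SN3D; x points forward, y to the left, and
    positive yaw is a turn to the left. W (omni) and Z (vertical) are unaffected by yaw.
    """
    w, y, z, x = frame
    c, s = math.cos(head_yaw_rad), math.sin(head_yaw_rad)
    return (w, y * c - x * s, z, x * c + y * s)


# A source straight ahead (X = 1, Y = 0). After the listener turns 90 degrees to the left,
# it should sit on their right, which in this convention means Y close to -1 and X close to 0.
ahead = (1.0, 0.0, 0.0, 1.0)
print(rotate_foa_yaw(ahead, math.radians(90)))
```

The rotation keeps the scene anchored to the world rather than to the head, which is what makes the sound stay put when the listener turns.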

To summarize, these tools give the creative artist the ability to create both live and post-produced ASAF content using objects, Ambisonics, channels, or a combination of all of these. Ambisonics provides a tremendous capacity for live capture of soundscapes using microphone arrays, and artists can also mix objects into Ambisonics. Discrete objects can be described using a comprehensive set of metadata.
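At its simplest, placing a dry object inside an Ambisonic bed means panning it with the spherical-harmonic gains for its direction and adding the result to the bed's channels. The tools described earlier can also apply room simulation when converting an object to HOA, and this sketch leaves that out. It shows only plain first-order encoding (ACN order W, Y, Z, X; SN3D) with hypothetical function names, not the ASAF tools' actual processing.

```python
import math


def encode_object_foa(samples: list[float], azimuth_rad: float, elevation_rad: float,
                      gain: float = 1.0) -> list[list[float]]:
    """Pan a mono object into first-order Ambisonics channels (ACN: W, Y, Z, X; SN3D).

    Azimuth is counterclockwise from straight ahead (+x), so +90 degrees is to the left.
    """
    ce = math.cos(elevation_rad)
    coeffs = (
        1.0,                         # W: omnidirectional
        math.sin(azimuth_rad) * ce,  # Y: left/right
        math.sin(elevation_rad),     # Z: up/down
        math.cos(azimuth_rad) * ce,  # X: front/back
    )
    return [[gain * c * s for s in samples] for c in coeffs]


def mix_into_bed(bed: list[list[float]], encoded: list[list[float]]) -> list[list[float]]:
    """Sum an encoded object into an FOA bed of the same shape, channel by channel."""
    return [[b + e for b, e in zip(bed_ch, enc_ch)] for bed_ch, enc_ch in zip(bed, encoded)]


bed = [[0.0] * 4 for _ in range(4)]  # 4 channels x 4 samples of silence
voice = encode_object_foa([1.0, 0.5, 0.0, -0.5], math.radians(90), 0.0)  # hard left
bird = encode_object_foa([0.2, 0.2, 0.2, 0.2], 0.0, math.radians(45))   # ahead and raised
bed = mix_into_bed(mix_into_bed(bed, voice), bird)
print(bed)
```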

Multiple alternative audio experiences can be created and delivered in the same APAC bitstream, allowing the audience to select and personalize their experience. And the renderer produces key acoustic cues accurately and adaptively, directly to headphones, without the need for intermediate virtualization to loudspeakers.

And all of this, especially the ability to efficiently transport spatial audio with industry leading resolutions, is facilitated by the new codec APAC.

All right. That brings me to the end of my presentation. Remember, audio is at least 50% of the experience. I hope you're encouraged to dive in and get immersed, that is, to dive into creating compelling soundscapes with ASAF and APAC. We're not quite done with audio just yet. Later today, Tim iMac will talk in more depth about post-production, and both the Blackmagic team and Apple team members will discuss ASAF tooling. With that, I'll hand it back to Elliot.

Thank you.
