What's New in Machine Learning

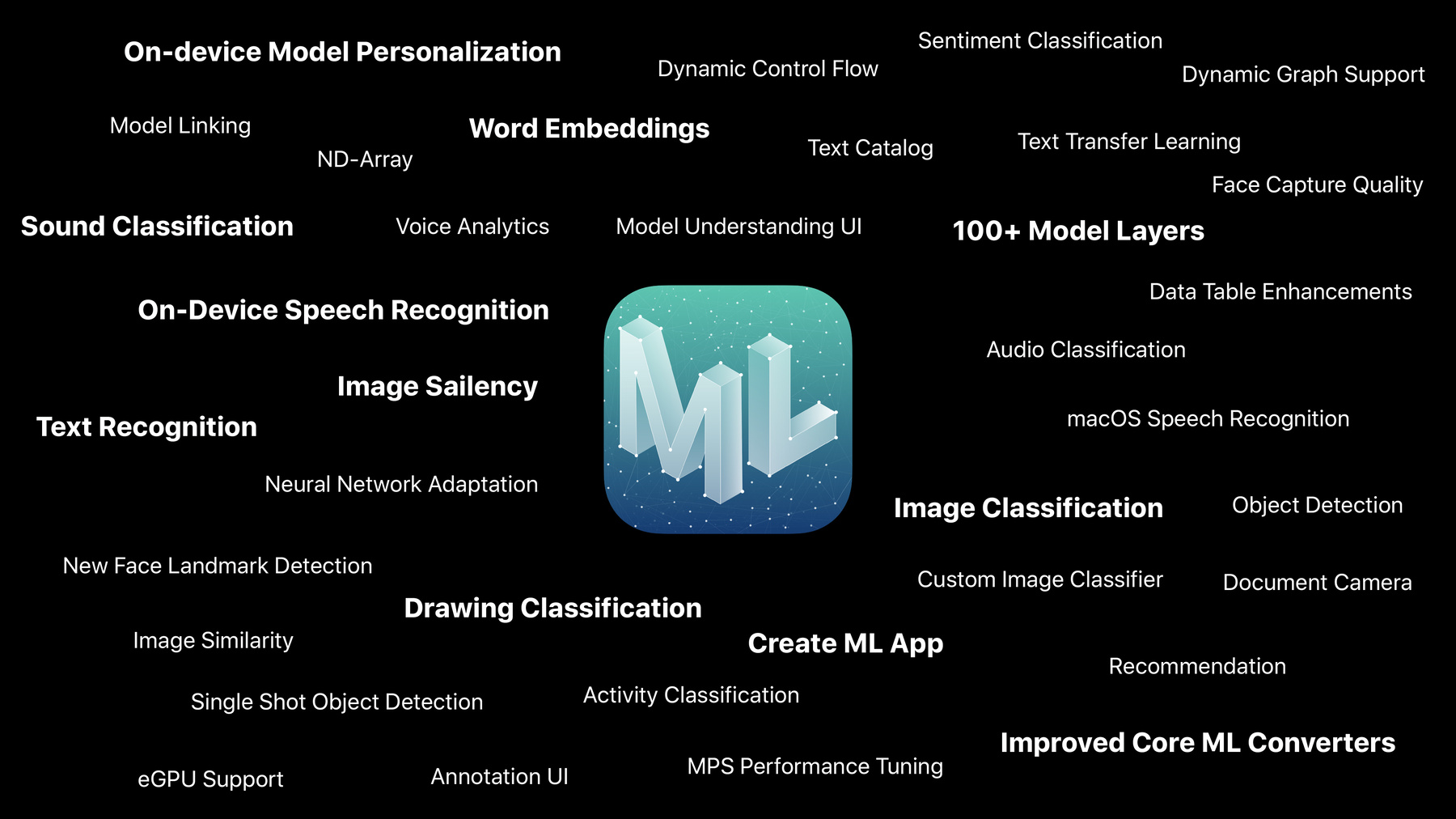

Core ML 3 has been greatly expanded to enable even more amazing, on-device machine learning capabilities in your app. Learn about the new Create ML app which makes it easy to build Core ML models for many tasks. Get an overview of model personalization; exciting updates in Vision, Natural Language, Sound, and Speech; and added support for cutting-edge model types.

Related Videos

WWDC19

- Designing Great ML Experiences

- Advances in Natural Language Framework

- Advances in Speech Recognition

- Building Activity Classification Models in Create ML

- Creating Great Apps Using Core ML and ARKit

- Designing for Privacy

- Introducing the Create ML App

- Metal for Machine Learning

- Text Recognition in Vision Framework

- Training Sound Classification Models in Create ML

- Understanding Images in Vision Framework

Hello. Welcome everyone. My name is Gaurav and today we're going to talk about What's New in Machine Learning.

Machine Learning is used by thousands of apps.

The apps that you are making are amazing. They're touching every aspect of a user's life. In hospitals, doctors are using apps such as Butterfly iQ to do medical diagnostics in real time. In sports, coaches are using apps such as HomeCourt to train their players. In creativity, apps such as Pixelmator Pro are helping other users to augment their creativity using ML.

These are just a few examples, and we would like all of you to make these kinds of apps too. So now the question is, what's new? Let me begin by saying: a lot. We have so much material that we can hardly cover it in one session or two. We need ten sessions to cover it all.

We also have a session on the intersection of design and machine learning.

We also have Daily Labs where you can meet and discuss your ideas with Apple Machine Learning engineers.

All of us are here to remove any roadblocks that you might be facing while integrating Machine Learning in your app.

In all of these sessions, you will see that we follow simple principles.

We want Machine Learning to be as easy to use as possible. We are laser focused on doing that. We want to make it flexible so you can use it for a variety of tasks, and we want to make it powerful so you can run state-of-the-art Machine Learning models on your devices. It is truly Machine Learning for everyone, whether you are a researcher or someone new to Machine Learning.

With the Create ML app you can make state-of-the-art, task-focused ML models.

The next big pillar of our offering is Domain APIs.

Domain APIs allow you to leverage Apple's built-in intelligence and models. So you don't have to worry about collecting the data and building the model. Simply call the API and be done.

This year we are significantly expanding our Domain APIs. We have Domain APIs in Vision, Text, Speech and Sound. Now let's take a sneak peek at some of these APIs. Let's start with Vision.

Vision allows you to reason about the content of the image.

One of the new features this year is Image Saliency.

Image Saliency can help you identify the most relevant region in your image. In this case, the region around the person.

You can use it to generate thumbnails, crop images, generate memories, guide the camera, et cetera.

Another big feature we are introducing this year is Text Recognition. You can now take a picture of a document, perform perspective correction and lighting correction, and recognize the text on the device. This is huge, but that's not all. We also have a built-in image classifier, a human detector, and a pet detector, and we are going to cover them in detail in our two Vision sessions.

The next domain is Natural Language. Just like you use Vision to reason about images, you can use Natural Language to reason about text. New this year is built-in Sentiment Analysis. You can use it to analyze the sentiment of text in real time, on the device, in a privacy-friendly way. So, for example, if somebody types something like "I was so excited about the season finale," that's a positive sentiment, but "it was a bit disappointing at the end" is a negative sentiment. So you can provide this kind of feedback in real time.

For the first time we are also exposing built-in Word Embeddings. Word Embeddings allow you to find semantically similar words. So, for example, the word thunderstorm is very close to cloudy but very far away from shoes and boots. One of the big use cases of Word Embeddings is semantic search, and we are going to give you an example soon. Natural Language will be discussed in detail in the Advances in Natural Language Framework session.

The third way users interact with your app is through speech and sound. We now have on-device speech support, so you can transcribe speech on the device and no longer have to rely on a network connection. We also have a new Voice Analytics API that can tell you not only what is spoken but how it is spoken. So you can differentiate between a normal voice and a high, jittery voice.

We also have a brand-new Sound Analysis Framework that we will discuss in the Create ML session. There's a lot more in each of these domains, and another thing you can do is combine these domains almost seamlessly. Let me show you an example. Let's say you want to build a feature that does Semantic Search on Images. That's a very complex feature. If a user searches for thunderstorm, you want to provide results not only for thunderstorm but also for sky and cloudy. Now you can combine Vision with Natural Language to implement this feature in very few lines of code.

This is how you would do it. You run your own Image Classifier on the images, or you can use the built-in Image Classifier, to generate tags. When the user types a word such as thunderstorm, you can use Word Embeddings to generate similar words and find the images that match these tags.

And that's not all. You can also combine the custom models that you made using Create ML with Domain APIs, and we are going to show an example of that in our Creating Great Apps Using Core ML and ARKit session.

So to summarize, Domain APIs allow you to leverage Apple's built-in intelligence and models through an API, so you don't have to collect the data and make the models. And this year we have a significant expansion in our Vision, Natural Language, and Speech and Sound APIs.
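The semantic image search flow described above (tag the images, expand the query with similar words, match tags) can be sketched in a few lines. This is a minimal, framework-agnostic Python illustration, not the Vision or Natural Language API: the three-dimensional vectors in `TOY_EMBEDDINGS`, the tags in `PHOTO_TAGS`, and the helper names are all made up for the example. A real app would take its tags from an image classifier and its word distances from the system word embeddings.

```python
import math

# Hypothetical toy word vectors; real embeddings have far more dimensions.
TOY_EMBEDDINGS = {
    "thunderstorm": (0.9, 0.8, 0.1),
    "cloudy": (0.8, 0.9, 0.2),
    "sky": (0.7, 0.7, 0.3),
    "shoes": (0.1, 0.2, 0.9),
    "boots": (0.2, 0.1, 0.9),
}

# Hypothetical classifier output: photo name -> set of tags.
PHOTO_TAGS = {
    "IMG_001.jpg": {"thunderstorm"},
    "IMG_002.jpg": {"sky", "cloudy"},
    "IMG_003.jpg": {"boots"},
}


def cosine_distance(a, b):
    """Return 1 - cosine similarity, so smaller means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def similar_words(query, max_count=3):
    """Return the query word followed by its nearest neighbors."""
    query_vector = TOY_EMBEDDINGS[query]
    others = sorted(
        (word for word in TOY_EMBEDDINGS if word != query),
        key=lambda word: cosine_distance(query_vector, TOY_EMBEDDINGS[word]),
    )
    return [query] + others[: max_count - 1]


def semantic_search(query, max_count=3):
    """Return photos whose tags overlap the expanded set of query terms."""
    terms = set(similar_words(query, max_count))
    return sorted(photo for photo, tags in PHOTO_TAGS.items() if tags & terms)
```

With these toy values, searching for `thunderstorm` expands to `thunderstorm`, `cloudy`, and `sky`, so both weather photos match while the boots photo does not.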

Now let's talk about Core ML 3, the third big pillar of our offering.

Core ML is now supported across all our platforms. All the work is done on the device, so the user's privacy is maintained. Core ML is hardware accelerated, so you can use it for real-time Machine Learning; you don't need a server, and it is always available. Core ML has always supported a wide variety of Machine Learning models, ranging from classical generalized linear models, tree ensembles, and support vector machines to neural networks such as Convolutional Neural Networks and Recurrent Neural Networks.

New in Core ML is model flexibility and model personalization. So let's take a look at both of them.

Core ML has expanded support for Deep Neural Networks, and we have added support for more than 100 Neural Network layers.

This means that you can bring the most cutting-edge Machine Learning models into your app, such as ELMo, BERT, and WaveNet. What does that mean? Let's say you have an app and you want to integrate a state-of-the-art Question and Answer system, so that a user can ask a question such as "How many sessions will there be at WWDC this year?" You can do it by using a BERT model.

The model can analyze the statement and give back the result: over 100 is the answer. Besides Natural Language, you can also run the latest advances in Vision, such as Instance Segmentation, as well as the latest advances in Audio Generation.

And to take full advantage of this expanded support in Core ML, we are updating our converters. We will have a brand-new TensorFlow to Core ML converter, and an ONNX to Core ML converter will be coming soon. We are also updating our Model Gallery and bringing some of these research models into it so you can start using them immediately.

So Core ML 3, with this new model representation, updated converters, and Model Gallery, can help you bring cutting-edge ML research into your app.

Now a big feature: Model Personalization. Let me explain what it is. Up to now you have used Create ML to build models and Core ML to deploy them, but new in Core ML 3 is On-Device Model Personalization. You can fine-tune the model on the device. And we use this kind of technology in Face ID and setting Watch Face.

So this is how it happens. Today you have data, you make an ML model, you ship it in your app, and all of your users download the same model. And this is great because users don't have to upload their photos. You can take photos directly, run them through the model on the device, and get an inference such as dog.

But what if you are trying to deal with a concept that is unique to each user? Say, the concept of My Dog. You might want users to find pictures of their own dog in the photo library and not all the dog photos they've taken. And each user's dog looks different. For example, someone may have a Golden Retriever, someone may have this Bulldog, and this is my crazy dog.

So, how do we do that? What we want for user 1 is an image classifier that takes this dog and says it's their dog.

For user 2, that's the English Bulldog, and this is the third dog.

So in order to do that, one approach would be a server-based approach. You may ask each of your users to upload their photos to the cloud. The server generates a model for each user and then sends it back. Unfortunately, this approach has privacy concerns. Your users may not be very happy uploading their photos to your server, and you may also not be okay taking their photos.

You have to set up the server, so there is some cost involved. Let us say you have a million users, which we sincerely hope you do. You have to make a million such models and keep track of them over time.

With Core ML 3 you can do model personalization on the device. If you have training data on the device, you can simply use it to fine-tune the model on the device. Previously you would take an image and do the inference; now you can also take labeled data, such as feedback from the user, use it as training data, and fine-tune the model on the device.

You have a personalized model for each user.
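To make the idea of fine-tuning on the device a little more concrete, here is a toy Python sketch. It is not Core ML's update API. It assumes a frozen feature extractor that already turns each photo into a small feature vector, and it updates only a final logistic layer using a handful of labeled examples from one user. The weights, feature values, and function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Probability that the features belong to the positive class."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def fine_tune(weights, bias, examples, learning_rate=0.5, epochs=200):
    """Run plain SGD on the logistic loss over (features, label) pairs."""
    weights = list(weights)  # copy so the shipped weights stay untouched
    for _ in range(epochs):
        for features, label in examples:
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical shipped layer: it knows nothing about "my dog" yet.
shipped_weights, shipped_bias = [0.0, 0.0], 0.0

# Hypothetical labeled feedback from one user (1 = my dog, 0 = another dog).
user_examples = [
    ([1.0, 0.1], 1),
    ([0.9, 0.2], 1),
    ([0.1, 1.0], 0),
    ([0.2, 0.9], 0),
]

personal_weights, personal_bias = fine_tune(
    shipped_weights, shipped_bias, user_examples
)
```

Before the update the shipped layer scores every photo at 0.5. After the update, `predict(personal_weights, personal_bias, [1.0, 0.1])` is close to 1 and `predict(personal_weights, personal_bias, [0.1, 1.0])` is close to 0, and none of the user's examples ever leaves the device.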

We respect the user's privacy, and you don't need to set up a server. So Core ML 3 supports On-Device Personalization for Neural Networks. We're also supporting Nearest Neighbor, and you can do the update in the background at night.
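A nearest neighbor model is a natural fit for personalization because updating it only means storing new labeled examples. The Python sketch below illustrates that idea in general terms. It is not the Core ML implementation, and the class name, feature vectors, and labels are invented for the example.

```python
import math
from collections import Counter


class NearestNeighborClassifier:
    """Tiny k-nearest-neighbor classifier over fixed-length feature vectors."""

    def __init__(self, k=3):
        self.k = k
        self.examples = []  # list of (feature tuple, label) pairs

    def update(self, features, label):
        """'Training' is just remembering the new labeled example."""
        self.examples.append((tuple(features), label))

    def predict(self, features):
        """Return the majority label among the k closest stored examples."""
        if not self.examples:
            return None
        point = tuple(features)
        nearest = sorted(
            self.examples, key=lambda example: math.dist(example[0], point)
        )[: self.k]
        votes = Counter(label for _, label in nearest)
        return votes.most_common(1)[0][0]


# Hypothetical feature vectors, e.g. produced by a frozen image model.
classifier = NearestNeighborClassifier(k=3)
for features in [(1.0, 0.1), (0.9, 0.2), (0.95, 0.15)]:
    classifier.update(features, "my dog")
for features in [(0.1, 1.0), (0.2, 0.9), (0.15, 0.95)]:
    classifier.update(features, "other dog")
```

Here `classifier.predict((0.92, 0.12))` returns `"my dog"`, since the three closest stored examples all carry that label. Each new piece of user feedback is one more `update` call, which is cheap enough to run as background work.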

So to summarize and end our session: we saw Create ML, a brand-new app to build ML models; we have a significant expansion in our Domain APIs; and Core ML is much more flexible and now supports On-Device Personalization. You can find more information on our developer website under Session 209, and we hope you are as excited about these technologies as we are. I look forward to seeing you in the labs. Thank you. [ Applause ]
