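Introducing Photo Segmentation Mattes

Photos captured in Portrait mode on iOS 12 contain an embedded person segmentation matte that makes it easy to create creative visual effects such as background replacement. iOS 13 uses on-device machine learning to support new segmentation mattes for any captured photo. Learn how to use the new semantic segmentation mattes, which isolate a person's hair, skin, and teeth, from both AVCapture and Core Image. Using the mattes individually or combining them all lets your app offer a range of photo-editing controls.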

WWDC19


Hi. I'm Jacob. I'm here to tell you about Semantic Segmentation Mattes. First, I'm going to go through what these new types of mattes are. Then David is going to talk you through how to leverage Core Image to work with them.

Remember that in iOS 12 we introduced the Portrait Effects Matte? This was a matte designed explicitly to provide effects for portraits.

We use it internally to render beautiful-looking Portrait mode photos and Portrait Lighting photos. Taking a closer look at the Portrait Effects Matte, you can see how clearly it delineates the foreground subject from the background. It is represented here as a black-and-white matte, with values of 1 indicating foreground and values of 0 indicating background.

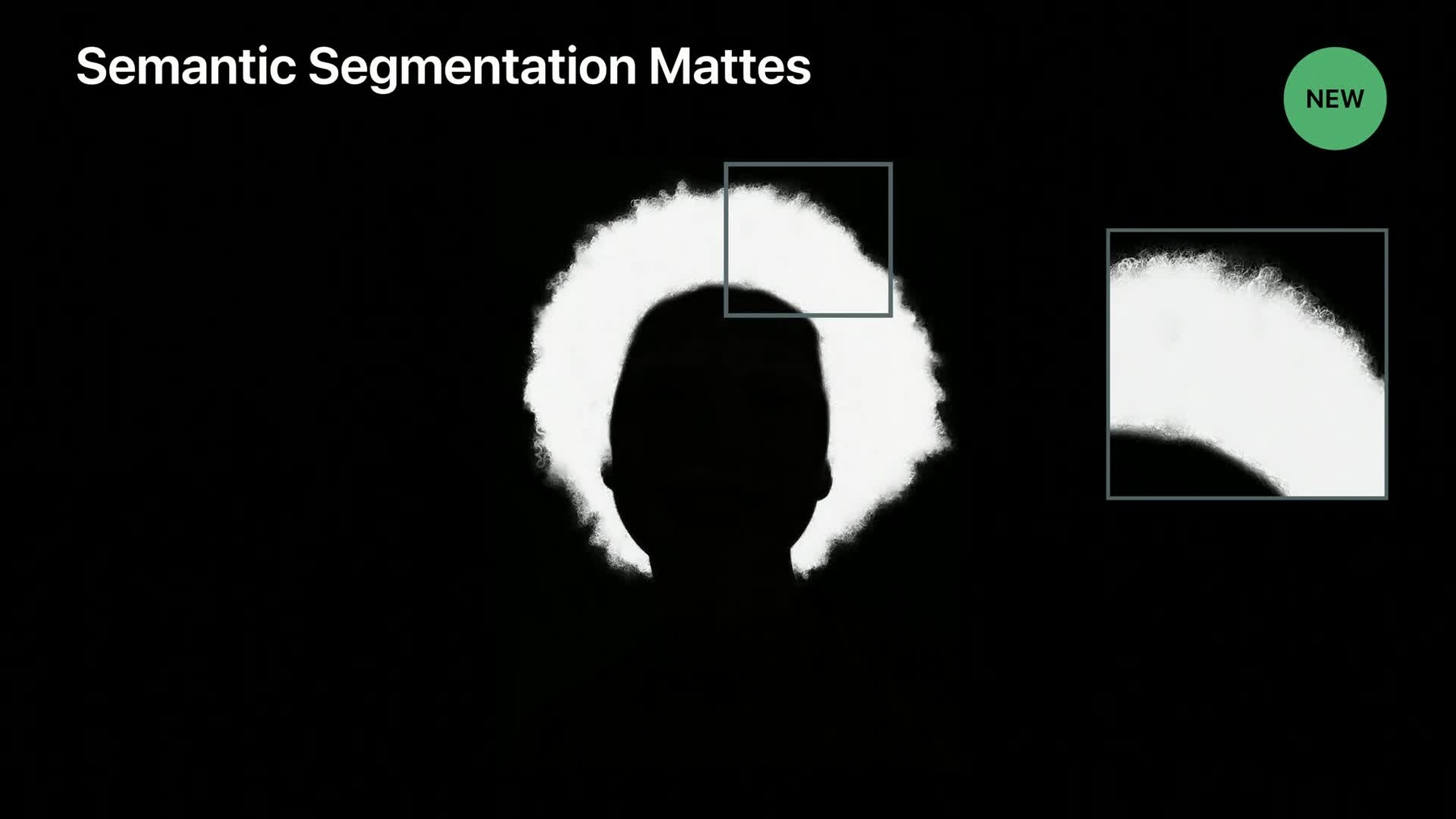

In iOS 13, we are taking this a step further with Semantic Segmentation Mattes.

We're introducing mattes for hair, skin, and teeth.

Taking a closer look at the hair matte, for instance, you can see how cleanly it separates the hair region from the non-hair regions. We get great hair detail against the background, and great separation between the hair and everything else. The same goes for the skin regions, where we now have alpha values indicating how much of a pixel is of type skin. An alpha value of 0.7, for instance, indicates that a pixel is 70% skin.

We hope these three new types of mattes will give you the creative freedom to render some cool effects and beautiful-looking photos.

A few things to note. The mattes are half the size of the original image. That means half in each dimension, and so a quarter of the resolution.
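As a quick worked example of that arithmetic, here is a small sketch. The 4032 x 3024 main image size is a hypothetical value chosen only for illustration; it is not taken from the talk.

```swift
// Hypothetical main image size, used only to illustrate the arithmetic.
let imageWidth = 4032
let imageHeight = 3024

// Half size in each dimension.
let matteWidth = imageWidth / 2
let matteHeight = imageHeight / 2

// Halving both dimensions leaves one quarter of the pixels.
let pixelRatio = Double(matteWidth * matteHeight) / Double(imageWidth * imageHeight)

print(matteWidth, matteHeight, pixelRatio) // 2016 1512 0.25
```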

Another thing to remember is that these segmentation mattes can overlap. This is particularly true for the Portrait Effects Matte and the Skin Matte, which inherently overlap.

These mattes do not come for free. We heavily leverage the Apple Neural Engine for machine learning, along with spectral graph theory. Looking a bit under the hood, we take the original-size image and feed it through the Apple Neural Engine. Then, together with the original-size image, we render these high-resolution, high-quality, highly consistent segmentation mattes. They are then ready to be embedded into the HEIF or JPEG files as you know them, together with the original-size image and the depth data you know from iOS 11.

There are two distinct ways to get these new types of mattes. One is that they're embedded in all Portrait mode captures, so you can grab them from those files. Or, even better, you can write your own capture app and opt into these mattes on capture. If you have files with the segmentation mattes in them, you can work with them through Core Image and Image I/O. David is going to talk more about that. But first, I'm going to talk you through how to capture with the AVFoundation API.

There are four phases we're going to go through here that relate to this extension of the API. The first is when we set up the AVCapturePhotoOutput. The second is when the capture request is initiated, at any point in the lifecycle of your app. Then there are two of the callbacks: one when the settings are resolved for your capture, and the final one when the photo did finish processing. For full details on this, please refer to Brad's 2017 talk on this exact topic.

Let's go through how to set up the AVCapturePhotoOutput. This usually happens when you're configuring your session. At this point you've already called beginConfiguration on the session, set your preset, added your device inputs, and added your AVCapturePhotoOutput. This is when you tell the API which superset of segmentation mattes you're going to ask for at any point in the lifecycle of your app.
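The setup step might be sketched as follows. This is a minimal sketch rather than the code from the talk: the function name, the error enum, and the choice of the default video camera are assumptions made for illustration, and enabling depth and Portrait Effects Matte delivery alongside the mattes simply mirrors common practice.

```swift
import AVFoundation

// Hypothetical error type for this sketch.
enum CaptureSetupError: Error {
    case noCamera, cannotAddInput, cannotAddOutput
}

// Hypothetical helper: configures `session` and returns a photo output
// that has opted into every segmentation matte type it supports.
func makeConfiguredPhotoOutput(for session: AVCaptureSession) throws -> AVCapturePhotoOutput {
    session.beginConfiguration()
    defer { session.commitConfiguration() }
    session.sessionPreset = .photo

    // Assumption: the default video camera is good enough for the example.
    guard let camera = AVCaptureDevice.default(for: .video) else {
        throw CaptureSetupError.noCamera
    }
    let input = try AVCaptureDeviceInput(device: camera)
    guard session.canAddInput(input) else { throw CaptureSetupError.cannotAddInput }
    session.addInput(input)

    let photoOutput = AVCapturePhotoOutput()
    guard session.canAddOutput(photoOutput) else { throw CaptureSetupError.cannotAddOutput }
    session.addOutput(photoOutput)

    // Query support only after the output is attached to the session.
    photoOutput.isDepthDataDeliveryEnabled = photoOutput.isDepthDataDeliverySupported
    photoOutput.isPortraitEffectsMatteDeliveryEnabled =
        photoOutput.isPortraitEffectsMatteDeliverySupported

    // The superset of mattes the app may ask for in any later capture.
    photoOutput.enabledSemanticSegmentationMatteTypes =
        photoOutput.availableSemanticSegmentationMatteTypes

    return photoOutput
}
```

On hardware that cannot produce the mattes, `availableSemanticSegmentationMatteTypes` is expected to be empty, so the assignment above would enable nothing.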

When you actually want to initiate your capture request, you need to specify the AVCapturePhotoSettings.

This is where you tell the API what you really want in this particular capture. Here again, you can specify all the types that you already enabled, or you can specify a subset, say hair or skin.

Now you initiate your capture request. You give it the AVCapturePhotoSettings and the delegate where you want to receive your callbacks.
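A per-capture request along these lines could look like the sketch below. The function name and the choice of hair and skin as the subset are assumptions for illustration; filtering against the output's enabled types is a defensive step added here, not something the talk prescribes.

```swift
import AVFoundation

// Hypothetical helper: requests a capture with a subset of the mattes
// that were enabled on the photo output during session setup.
func captureHairAndSkin(with photoOutput: AVCapturePhotoOutput,
                        delegate: AVCapturePhotoCaptureDelegate) {
    let settings = AVCapturePhotoSettings()

    // Keep only the types the output has actually been configured for.
    let wanted: [AVSemanticSegmentationMatte.MatteType] = [.hair, .skin]
    settings.enabledSemanticSegmentationMatteTypes =
        photoOutput.enabledSemanticSegmentationMatteTypes.filter { wanted.contains($0) }

    // Match the depth and portrait matte options chosen on the output.
    settings.isDepthDataDeliveryEnabled = photoOutput.isDepthDataDeliveryEnabled
    settings.isPortraitEffectsMatteDeliveryEnabled =
        photoOutput.isPortraitEffectsMatteDeliveryEnabled

    photoOutput.capturePhoto(with: settings, delegate: delegate)
}
```

The caller should keep its own strong reference to the delegate until the capture completes, as the AVCam sample does.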

Time passes, and soon after you get a willBeginCaptureFor callback. This is when the API tells you: you may have asked for something, but this is what you're actually going to get. This is important for the Portrait Effects Matte and the Semantic Segmentation Mattes, because if there are no people in the scene, you will not get a matte. So you need to check the dimensions of the Semantic Segmentation Mattes. They'll be zero in that case.

More time passes, and the photo did finish processing. This is when you get your AVSemanticSegmentationMatte back. That's the variable matte in this case. This new class has the same kinds of methods and properties you know from the Portrait Effects Matte. That means you can rotate it according to Exif data, you can get your CVPixelBuffer reference, or you can get a dictionary representation for easy file I/O. For a full walkthrough of the lifecycle of these captures, please refer to the AVCam sample app. It has been updated with the Semantic Segmentation Mattes and will take you through all these steps. I'm going to hand it over to David, who is going to talk about Core Image.
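The two callbacks described here might be handled as in the following sketch. The class name and the fixed list of requested types are assumptions for illustration; the orientation handling follows the pattern used in the AVCam sample.

```swift
import AVFoundation
import ImageIO

// Hypothetical delegate for this sketch.
final class MatteCaptureDelegate: NSObject, AVCapturePhotoCaptureDelegate {
    // Assumption: these are the types that were requested in the settings.
    let requestedTypes: [AVSemanticSegmentationMatte.MatteType] = [.hair, .skin, .teeth]

    func photoOutput(_ output: AVCapturePhotoOutput,
                     willBeginCaptureFor resolvedSettings: AVCaptureResolvedPhotoSettings) {
        for type in requestedTypes {
            // Zero dimensions mean no matte of this type will be delivered.
            let size = resolvedSettings.dimensions(for: type)
            if size.width == 0 || size.height == 0 {
                print("No \(type.rawValue) matte for this capture")
            }
        }
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingPhoto photo: AVCapturePhoto,
                     error: Error?) {
        guard error == nil else { return }

        for type in requestedTypes {
            guard var matte = photo.semanticSegmentationMatte(for: type) else { continue }

            // Rotate the matte to match the photo's Exif orientation.
            if let raw = photo.metadata[kCGImagePropertyOrientation as String] as? UInt32,
               let orientation = CGImagePropertyOrientation(rawValue: raw) {
                matte = matte.applyingExifOrientation(orientation)
            }

            // The matte pixels are available as a CVPixelBuffer.
            let buffer: CVPixelBuffer = matte.mattingImage
            print(type.rawValue, CVPixelBufferGetWidth(buffer), CVPixelBufferGetHeight(buffer))
        }
    }
}
```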

All right. Thank you very much. Now that we've learned how to capture images with Semantic Segmentation Mattes, we get to learn how we can leverage Core Image to apply some fun effects. I'm going to have a demo next, but I should warn you that there are clowns in this image. So if you have coulrophobia, an irrational fear of clowns, avert your eyes. All right. Here we have an image that was captured in Portrait mode on a device.

And what we can see in this application is that we can now very easily see all the different Semantic Segmentation Mattes that are present in this file.

We can use the traditional Portrait Effects Matte, or we can see the Skin Matte, the Hair Matte, or the Teeth Matte. It's also possible to use Core Image to combine these mattes into other mattes, such as this one, which I've synthesized by using logical operations to create a matte of just the eyes and mouth.
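The talk does not show how the eyes-and-mouth matte was built, so the following is only a sketch of how logical operations on mattes could be expressed with built-in Core Image filters. It is an assumption, not the demo's actual recipe: minimum compositing stands in for AND, maximum compositing for OR, and color inversion for NOT. The helper names are hypothetical, and the inputs are assumed to be opaque grayscale mattes of the same size.

```swift
import CoreImage
import CoreImage.CIFilterBuiltins

// OR: a pixel is covered if either matte covers it.
func matteUnion(_ a: CIImage, _ b: CIImage) -> CIImage? {
    let filter = CIFilter.maximumCompositing()
    filter.inputImage = a
    filter.backgroundImage = b
    return filter.outputImage
}

// AND: a pixel is covered only if both mattes cover it.
func matteIntersection(_ a: CIImage, _ b: CIImage) -> CIImage? {
    let filter = CIFilter.minimumCompositing()
    filter.inputImage = a
    filter.backgroundImage = b
    return filter.outputImage
}

// NOT: swaps covered and uncovered regions of an opaque matte.
func matteInverted(_ matte: CIImage) -> CIImage? {
    let filter = CIFilter.colorInvert()
    filter.inputImage = matte
    return filter.outputImage
}

// Example combination: everything that is neither skin nor hair.
func neitherSkinNorHair(skin: CIImage, hair: CIImage) -> CIImage? {
    guard let either = matteUnion(skin, hair) else { return nil }
    return matteInverted(either)
}
```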

If we go back to the main image, however, we see this is a picture of me in Apple Park. One of the great things you could do with Portrait Effects Mattes is add a background very easily. As you can see here, we can put me in a circus tent. And while that really does look like a circus tent, I don't look like I fit in. So now we can use some fun effects. For example, we can make it look like I've got some clown makeup on.

Or, if we want to go a little further, we can give me some green hair. And lastly, we can use some of these other mattes to give me some makeup.

So, that's what I'd like to talk to you about today, is how we can do these kinds of fun effects in your application.

All right. So, most of the clown references are gone now so it's safe to look back.

All right. We're going to be talking about three things today: how you create matte images using Core Image, how you can apply filters to those images, and lastly, how you can save them into files.

First, let's talk about creating matte images using Core Image. There are two ways. One is that you can get the mattes by using the AVCapturePhoto APIs and then create a CIImage from that. The code for this is very simple. We use the semanticSegmentationMatte API and specify that we want the hair, the skin, or the teeth. That returns an AVSemanticSegmentationMatte object, and from that it's trivial to create a CIImage: we can just instantiate a CIImage from that object.
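A minimal sketch of this first route, assuming you already hold an `AVCapturePhoto` from the capture delegate. The function name is hypothetical.

```swift
import AVFoundation
import CoreImage

// Hypothetical helper: returns the requested matte as a CIImage,
// or nil if the photo carries no matte of that type.
func matteImage(from photo: AVCapturePhoto,
                type: AVSemanticSegmentationMatte.MatteType) -> CIImage? {
    guard let matte = photo.semanticSegmentationMatte(for: type) else {
        return nil
    }
    // Core Image can wrap the matte object directly.
    return CIImage(semanticSegmentationMatte: matte)
}
```

For example, `matteImage(from: photo, type: .hair)` would yield the hair matte when one was delivered.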

The other common way you're going to want to create matte images is by loading them from a HEIF or JPEG file. These files have a main image, which you're familiar with: a typical RGB image. But they also have auxiliary images, such as the Portrait Effects Matte, as well as the new mattes we're talking about: the Skin, Hair, and Teeth Segmentation Mattes.

The code for this is very simple. The traditional code to create a CIImage from a HEIF file is just to say CIImage and specify a URL.

To create these auxiliary images, all you do is make the same call and provide an options dictionary specifying which matte image you want returned. So we can specify auxiliarySemanticSegmentationHairMatte, or, if we want, we can get the mattes for the other semantic segmentations.
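Loading from a file might look like the sketch below. The helper names and the file path are hypothetical; the option keys are the `CIImageOption` constants for the auxiliary mattes.

```swift
import CoreImage

// Hypothetical helper: the main RGB image.
func loadMainImage(at url: URL) -> CIImage? {
    return CIImage(contentsOf: url)
}

// Hypothetical helper: one auxiliary matte, selected by its option key.
// Returns nil when the file does not contain that matte.
func loadMatte(at url: URL, option: CIImageOption) -> CIImage? {
    return CIImage(contentsOf: url, options: [option: true])
}

// Usage, with a hypothetical path.
let url = URL(fileURLWithPath: "portrait.heic")
let base = loadMainImage(at: url)
let hair = loadMatte(at: url, option: .auxiliarySemanticSegmentationHairMatte)
let skin = loadMatte(at: url, option: .auxiliarySemanticSegmentationSkinMatte)
let teeth = loadMatte(at: url, option: .auxiliarySemanticSegmentationTeethMatte)
let portrait = loadMatte(at: url, option: .auxiliaryPortraitEffectsMatte)
```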

So, very simple. Just a couple of lines of code.

The next thing we want to do is talk about how you can apply effects to these images.

So, I showed a bunch of effects. I'm going to talk about one in a little bit of detail. What we're going to do is we're going to start with a base RGB image. And then, we're going to apply some effect to that. Let's say we want to do the washed-out clown white makeup. So, I'm going to apply some adjustments to that. Those adjustments, however, apply to the entire image so we want those to be limited to just the skin area.

So, we're going to use the Skin Matte and then, we're going to combine these three images to produce the result we want.

Let me walk you through the code for it because it's actually quite simple.

But first, I want to talk about the top feature request we've had for Core Image, which is to make it easier for people to discover and use the 200-plus built-in filters we have. That is the new header called Core Image CIFilterBuiltins. It allows you to use all of the built-in filters without having to remember the names of the filters or the names of the inputs.

So, it's really great.

So, let me show you some code that will use this new header.

The first thing we're going to do is create the base image. We just create a CIImage with the contents of the URL, and that produces the traditional RGB image.

Now we're going to start applying some effects. The first thing I want to do is convert it to grayscale, and for that I'm going to use a filter called maximumComponent.

And I'm going to give that filter an input image of the base image.

And then, I'm going to ask for that filter's output.

And that produces the image that looks grayscale like this.

This doesn't look quite bright enough to look like clown makeup, so we're going to apply an additional filter. We're going to use the gamma adjustment filter.

And the input to this will be the previous filter's output. And then, we're going to specify the power for the gamma function and ask for the output image.

And you'll notice it's now very easy to specify the power for the gamma filter. It's a Float rather than having to remember to use NSNumber. So, that's the first part of our effect.
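These two filter steps could be written with the CIFilterBuiltins API roughly as follows. The function name and the default power of 0.5 are assumptions; the talk does not state the value used. A power below 1 brightens mid-tones.

```swift
import CoreImage
import CoreImage.CIFilterBuiltins

// Hypothetical helper: grayscale conversion followed by a brightening gamma.
func washedOutMakeup(from base: CIImage, power: Float = 0.5) -> CIImage? {
    // Step 1: grayscale from the maximum of the color components.
    let grayscale = CIFilter.maximumComponent()
    grayscale.inputImage = base

    // Step 2: gamma adjustment; `power` is a plain Float, no NSNumber needed.
    let gamma = CIFilter.gammaAdjust()
    gamma.inputImage = grayscale.outputImage
    gamma.power = power

    return gamma.outputImage
}
```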

The next thing we want to do is get the Skin Segmentation Matte. Again, as I described earlier, we start with the URL and specify that we want the Skin Matte. However, when we get this image, notice that it's smaller than the other image. As we mentioned before, these are half size by default.

So we need to scale it up to match the main image size. We create a CGAffineTransform that scales from the matte's size to the base image's size, and then we apply that transform to the matte image. That produces a new image which, as you'd expect, matches the correct size.
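A sketch of the scaling step, assuming both images have finite, non-empty extents. The function name is hypothetical.

```swift
import CoreImage

// Hypothetical helper: scales `matte` so its extent matches `base`.
func scaled(_ matte: CIImage, toMatch base: CIImage) -> CIImage {
    let matteSize = matte.extent.size
    let baseSize = base.extent.size

    // Avoid dividing by zero for an empty matte.
    guard matteSize.width > 0, matteSize.height > 0 else { return matte }

    let transform = CGAffineTransform(scaleX: baseSize.width / matteSize.width,
                                      y: baseSize.height / matteSize.height)
    return matte.transformed(by: transform)
}
```

With a half-size matte, both scale factors come out to 2.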

The next step we're going to do is start combining these two.

And we're going to use the blendWithMask filter. And this is great and we use this throughout the sample I just showed.

We're going to specify the background image to be the base RGB image which looks like this.

Next, we specify the input image, which is the foreground image: the one that has the white makeup applied. And lastly, we specify a mask image, which is the scaled matte image I showed previously.

Given these three inputs, you can ask the blend filter for its output and the result looks like this.
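The blend step might be sketched like this. The function and parameter names are hypothetical; the three inputs correspond to the base RGB image, the adjusted image, and the scaled skin matte described above.

```swift
import CoreImage
import CoreImage.CIFilterBuiltins

// Hypothetical helper: shows `foreground` where the mask is white
// and `background` where the mask is black.
func blend(foreground: CIImage, over background: CIImage, mask: CIImage) -> CIImage? {
    let filter = CIFilter.blendWithMask()
    filter.inputImage = foreground       // image with the makeup effect
    filter.backgroundImage = background  // untouched base RGB image
    filter.maskImage = mask              // skin matte, scaled to full size
    return filter.outputImage
}
```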

Now, as you can see, this is just the starting point. You can combine all sorts of interesting effects to produce great results in your application.

Once you're done applying these effects, you want to save them.

Most typically, you want to save them as a HEIF or JPEG file, both of which support saving auxiliary images as well.

So, in addition to the main image, you can also store the Semantic Segmentation Mattes so that either your application or other applications can apply additional effects.

The code for this is very simple. You use the Core Image API writeHEIFRepresentation.

And typically, you specify the main image, the URL that you want to save it to, and then the pixel format that you want it to be saved as, and the colorSpace you want it to be saved as.

And what I want to highlight today is another set of options that you can provide when you're saving the image.

For example, you can specify the key semanticSegmentationSkinMatteImage and provide the skinImage, and do the same for the hairImage and the teethImage. All four of these images, the main image plus the three mattes, will be saved into the resulting HEIF or JPEG file.
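Saving the main image together with matte images might look like the sketch below. The function name, the RGBA8 pixel format, and the color space fallback are assumptions chosen for illustration.

```swift
import CoreImage

// Hypothetical helper: writes `image` as HEIF and embeds any mattes provided.
func saveHEIF(_ image: CIImage,
              skin: CIImage?,
              hair: CIImage?,
              teeth: CIImage?,
              to url: URL,
              context: CIContext = CIContext()) throws {
    var options: [CIImageRepresentationOption: Any] = [:]
    if let skin = skin { options[.semanticSegmentationSkinMatteImage] = skin }
    if let hair = hair { options[.semanticSegmentationHairMatteImage] = hair }
    if let teeth = teeth { options[.semanticSegmentationTeethMatteImage] = teeth }

    // Assumption: keep the image's own color space, else fall back to device RGB.
    let colorSpace = image.colorSpace ?? CGColorSpaceCreateDeviceRGB()

    try context.writeHEIFRepresentation(of: image,
                                        to: url,
                                        format: .RGBA8,
                                        colorSpace: colorSpace,
                                        options: options)
}
```

Passing the mattes at their original half size, rather than the scaled-up copies used for blending, keeps the saved file consistent with what the capture pipeline produces.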

Now, there's an alternate way of getting this result: if you want, you can save the main image and specify the segmentation mattes as AVSemanticSegmentationMatte objects. Again, the API is very simple. You specify the URL, the primary image, the pixel format, and the color space.

In this case, if you want these objects to be saved in the file, you just use the avSemanticSegmentationMattes key and provide an array of mattes.
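The same save call with matte objects instead of images might be sketched as follows. As before, the function name, pixel format, and color space fallback are assumptions.

```swift
import AVFoundation
import CoreImage

// Hypothetical helper: writes `image` as HEIF and embeds the given
// AVSemanticSegmentationMatte objects as auxiliary images.
func saveHEIF(_ image: CIImage,
              mattes: [AVSemanticSegmentationMatte],
              to url: URL,
              context: CIContext = CIContext()) throws {
    let options: [CIImageRepresentationOption: Any] = [
        .avSemanticSegmentationMattes: mattes
    ]

    // Assumption: keep the image's own color space, else fall back to device RGB.
    let colorSpace = image.colorSpace ?? CGColorSpaceCreateDeviceRGB()

    try context.writeHEIFRepresentation(of: image,
                                        to: url,
                                        format: .RGBA8,
                                        colorSpace: colorSpace,
                                        options: options)
}
```

This variant is convenient when the mattes come straight from an `AVCapturePhoto` and have not been modified in Core Image.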

So, that's what you can do using Core Image with these mattes. What I talked about today is how to create images for mattes, how to apply filters, and how to save them. I will, however, mention that the sample app I showed you has been written as a Photos app plug-in. If you want to learn how to do that in your application, so that you can save these images not just to HEIF files but also into the user's Photo library, I recommend you consult these earlier presentations, especially the "Introduction to the Photos Frameworks" from WWDC 2014. All right. Thank you all very much. I really look forward to seeing what you do with these great features. Thanks.

[ Applause ]
