
Building AR Experiences with Reality Composer

Reality Composer is a tool that lets anyone quickly prototype and build AR scenes ready to integrate into apps or experience with AR Quick Look. Walk through the powerful and intuitive capabilities of Reality Composer and discover hundreds of ready-to-use virtual objects in its built-in AR library. See how easy it is to build animations and interactions to enrich your 3D content, and get details about integrating Reality files right into your apps.


Good morning.

Great to see you. I hope you're all having an awesome WWDC.

We're super excited to be here today to chat with you about Building AR Experiences with Reality Composer.

I'm Michelle, and with me are my colleagues, Pau and Abhi. Today we're going to cover what Reality Composer is and how it can help you create an AR app. After this session, you'll know what's provided with Reality Composer, how to set up your experiences in it, and how to take what you've created in Composer into your app in Xcode. So, first, what is Reality Composer? As you've heard earlier this week, Reality Composer is a tool, an app, that helps iOS developers get started working with 3D and AR.

Some of you have already begun and it's been really, really amazing to see what you've all been making, posting, and tagging with Reality Composer. So, please keep doing it. If you're not familiar with 3D, the first time you ever open a 3D app can be pretty daunting and it can be kind of time consuming to even get started in AR.

But with Reality Composer, it's really easy to previsualize and lay out your experiences using the built-in content library. Or, if you prefer, you can use your own USDZs. If you're not familiar with it, USDZ is a 3D file format that Reality Composer supports.

Once you've got the experience laid out, you can add simple interactions and animations with the behavior system before you even commit to writing any code. So, it really speeds up the iteration time on the experience itself.

And then finally, everything that you've made in Reality Composer you can take directly into your app in Xcode. And so much about building for augmented reality is in seeing how the experience feels in physical space, looking at it on the intended device and seeing how your audience might see that experience.

So, Reality Composer is available on macOS, iPhone, and iPad. With Edit on iOS, you can develop on your regular dev machine and then continue working on the exact same project on iPad or iPhone.

Alternatively, you can start the project on your iOS device and take it the other way. So, today I'm going to take you through building scenes. Pau will cover adding behaviors and using physics. And then Abhi's going to take you through building an app. So, for building scenes, first, what's a scene? As you may have heard in the introduction to RealityKit and Reality Composer talk, a Reality Composer scene is essentially a collection of entities, and it contains an anchor, objects, behaviors, and a physics world.

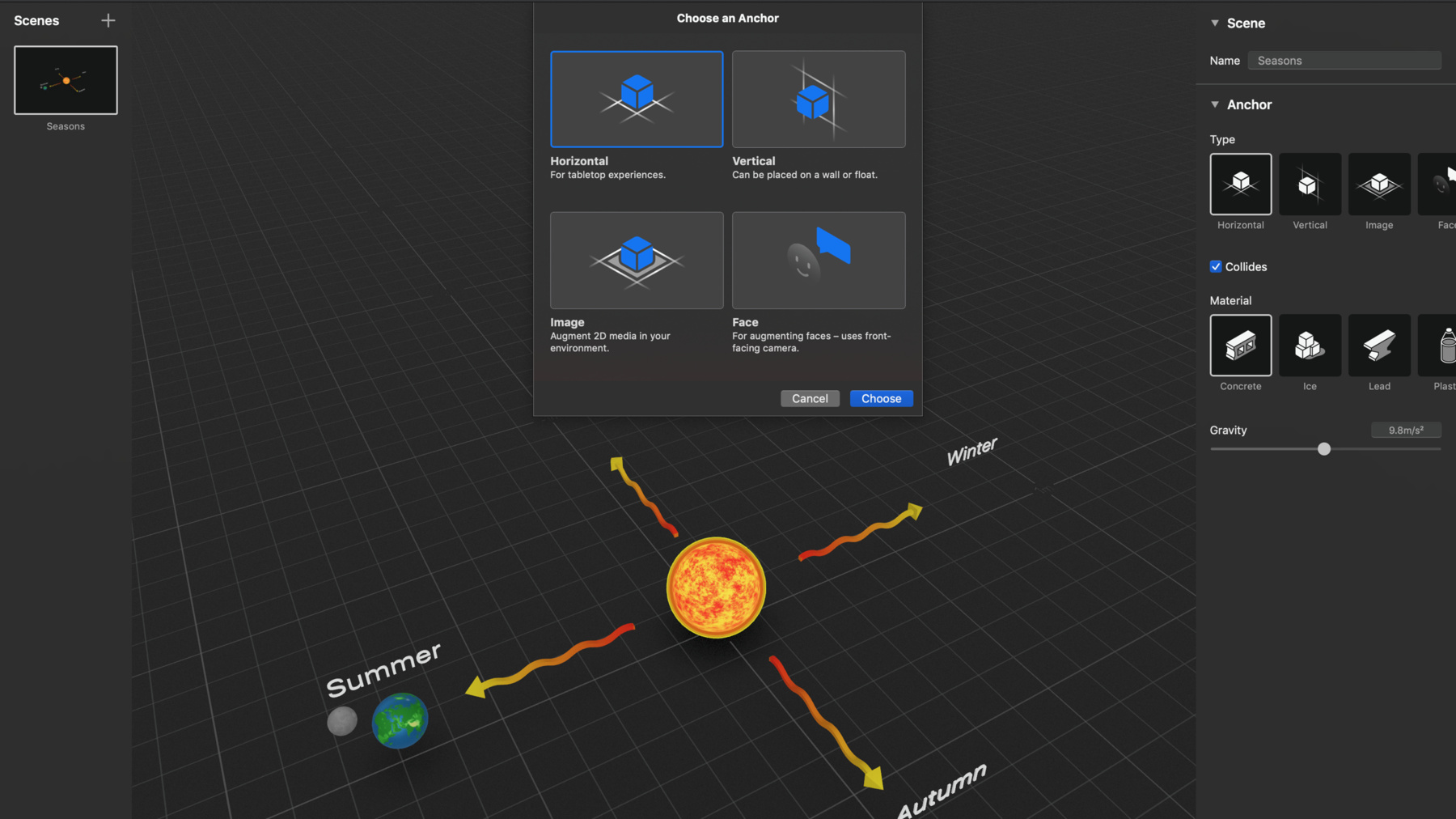

Every scene has exactly one anchor, and whenever you start a project or a new scene, you'll be asked what kind of experience you're building. If you're building something for a room, a stage, or a tabletop, choose the horizontal anchor.

If you want it to be on a wall, pick vertical.

If you're augmenting a book or some packaging, a brochure, or a movie poster, choose the image anchor.

And for selfie effects like glasses or hats, choose the face anchor.
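To make the one-anchor-per-scene rule and the anchor choices above concrete, here is a small Swift sketch. The types `AnchorKind`, `ExperienceKind`, and `SceneSketch` are hypothetical illustrations, not Reality Composer or RealityKit API; they only encode the mapping described in the talk.

```swift
// Hypothetical types for illustration only; not Reality Composer's API.
enum AnchorKind: String {
    case horizontal, vertical, image, face
}

enum ExperienceKind {
    case room, stage, tabletop                    // horizontal surfaces
    case wall                                     // vertical surfaces
    case book, packaging, brochure, moviePoster   // printed images
    case selfieEffect                             // glasses, hats, and so on
}

/// Maps the kind of experience to the anchor suggested in the session.
func suggestedAnchor(for kind: ExperienceKind) -> AnchorKind {
    switch kind {
    case .room, .stage, .tabletop: return .horizontal
    case .wall: return .vertical
    case .book, .packaging, .brochure, .moviePoster: return .image
    case .selfieEffect: return .face
    }
}

/// A scene has exactly one anchor plus any number of objects.
struct SceneSketch {
    let name: String
    let anchor: AnchorKind
    var objectNames: [String]
}

let seasons = SceneSketch(name: "Seasons",
                          anchor: suggestedAnchor(for: .tabletop),
                          objectNames: ["Sun", "Earth", "Moon"])
print(seasons.anchor) // horizontal
```

Making `anchor` a single non-optional `let` is one way to encode "exactly one anchor" in the type itself.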

Projects can have multiple scenes but I think you'll find when you're first starting out, one or two scenes is usually sufficient. Okay, so in this case, let's assume that you've picked the horizontal anchor. And so here we are.

The content is our focus in Reality Composer, so it's laid out in the main viewport, or workspace, exactly as you would see it in AR. The pane on the right shows the properties of whatever you've selected, along with options for configuring that entity, whether that's an object, like this text selected here (you can tell it's selected because of the ring), or the scene itself. I could show you more slides, but why don't we check out Composer together on iPad. Oh, I want you to remember this scene, though, because we're going to build up this little tutorial together over the course of the session. It's a Seasons and Solar System lesson that we'll build up in AR.

Okay, so for our demo, I'm going to show you three quite different Reality Composer projects today. The first is a little primer. It shows five really basic examples of things you could create in Reality Composer in probably just a couple of minutes. The second is a much richer, more complex experience, a full experience of what you could create in Reality Composer alone, without writing any code at all. So, this is great if you're not a developer, or if you're just starting out and want to try out the experience before you fully commit to it in your app. And finally, we're going to build together the Seasons scene that we just saw. Okay, so here is the primer in Reality Composer. I'm going to take us into AR, because that's how we intended it to be seen.

Okay, so these are the five examples I mentioned, and let's press play so that we can see the interactions. Oh, hi everyone. As soon as we started, the spiral started spinning. In the second example, if I get close, I can make this chess piece jiggle. I can tap this one for physics and audio. It's kind of a fun sound. Someone asked: if I'm an animator and I have my own models and animations, can I bring them into Reality Composer? Yes, you can, and you can trigger them on user interactions like a tap. And if you're not an animator, you can use prebuilt emphasis animations on any object in the scene. Here we've applied them to some pretty basic shapes, but you can apply them to this dancer as well. So, that's the scene in AR.

Next, I'm going to show you this richer, more complex experience. Oh, and you're probably wondering how I'm moving the content around and moving the camera. For panning, all I'm doing is moving two fingers across the screen like this, so I can see the content exactly as I want.

If you were watching State of the Union on Tuesday, this island might look familiar to you. And we wanted to build up the experience a bit more. So, if you imagine you're making, like, a museum installation or a tourism center experience, that's something that we kind of envisioned here.

So, let's look at this here in AR and I'm going to press play to start.

So, you should hear some waves. And now we're being prompted to tap on the helicopter, so let's do that.

You can see we were also asked to tap a location to learn more. So, I'm going to tap on these markers now, and you can see I'm getting more information about each location as I tap, while the other ones fade out.

And so I'm just going to take apart what we did to build up this scene.

So, there's actually not much custom content in this scene. There are only two artist-created custom USDZs, the island and the helicopter. You can see here that we imported them, and you can import more if you want. The rest is built in, from the content library that comes with Reality Composer. You can see we have a lot of stuff here: text, basic shapes. And that's mostly what you're seeing in this scene. These markers are just basic shapes; we grouped two of them to create each marker.

And we have text here.

Another question we get asked a lot is: I work mostly in 2D, and I have a lot of photos and company graphics. Can I use them in Reality Composer? And the answer is yes, of course you can! You can bring them into the experience using the Picture Frame, which is in the content library that we just saw.

Finally, you're probably wondering how we created those little interactions. If we go to Behaviors by clicking the Behaviors button in the top right, you can see the behaviors we used to create the flow of that sequence and those interactions. We're billboarding things: you might've noticed that as I moved around the scene, those items turned to look at me. We're using Look At actions here to make everything face the camera. At the beginning, you saw just the island, and everything else was hidden. So, you can see that we hid everything else and then showed the items to you in sequence: we waited for 0.2 seconds, and then first we showed the helicopter text.

Then we showed the helicopter itself and its "tap to start" prompt. And then we faded the markers in from below; that's what this Move From Below is doing, and I can preview it for you now just by pressing play on the card.

I'm going to show you also a little secret.

You might've noticed these birds; let me look at them here. We asked them to orbit the island as a group.

And they're not really birds at all. They're just primitives, basic shapes that we've put two move cards on to flap their wings. So, instead of investing time in creating birds, we took a couple of shapes and put two action cards on them to make an animation. If you've tried to animate something in 3D before, you know how time-consuming even something this simple can be, and this takes just a couple of seconds.

So, that's an example of a full experience that you can make in Reality Composer without any code at all. And the really great part is that once you have this Reality file, you can share it with anyone to preview in AR Quick Look, and the experience will be exactly as you built it in Composer. So, that's the second project.

So, finally let's build up the experience of the Seasons lesson together. So, I'm going to add three celestial bodies, the Sun, the Moon, and the Earth. So for this I'll just block in the scene with some placeholder objects to start us off.

So, here's the Sun. I've brought it in.

And now I'm going to duplicate it to create the Earth and I'm just using pinch here to zoom us out a little bit, to move the camera. So, let's grab this arrow to move the Earth over. And then next I need also a Moon.

So, let's duplicate this guy one more time.

Bring it over to about here. And then I can kind of grab anywhere in this ring to move it up in this plane. So let's move it over. Okay, great. And now I want to scale the Moon down a little bit. So I'm just going to grab the ring and move inwards to scale it.

There we go.

Move it a little closer.

Okay, so I have these three celestial bodies. Let's scale the Sun up. So I'll grab the ring again. Just grab it to scale.

Scale the Sun. So we've got our three items there.

Next I'm going to add some text. So I go to the content library again. Let's grab the text. And it's a little bit below where I want it to be, so I'm just going to grab this green arrow and drag it up.

And you can see it pop up to the ground plane. And it's doing that because I have snapping turned on. So, if you look in the left corner, we have this little magnet, and I can turn snapping off if I want to move stuff freely, but actually I just want to pull this blue arrow to bring it forward. And I want it to be about half a meter or so from the Sun. And I'm going to open the pane that we saw on the right to change up the text. So, let's just make it say autumn. It's super easy to just add these elements in Composer. So, finally, I think that's pretty much where I want it to be. So, all I'm doing to move the camera around is using my finger and touching the screen.

Next I'm going to add an arrow. It's not pointing in the direction I want, so I need to rotate it. I'm going to move the camera so I can see the green ring, which is sitting on the ground plane. This ring rotates with the camera as I move, so I'm always working in the plane that I want to be working in. If I don't want to move the camera to do that, I can just tap on the arrow, and that will lock onto the corresponding ring. You can see the ring got a little fatter there. So I'm just going to rotate the arrow, and it's snapping to 15-degree increments, again because I have snapping turned on. If I didn't want it to snap, I could move it freely. So here I'm dragging it, and it's always moving in the horizontal plane of this green ring.
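Rotation snapping like the 15-degree increments above can be sketched as rounding to the nearest multiple of the increment. The function name and the round-half-away-from-zero rule are assumptions for illustration; the session doesn't describe Reality Composer's exact snapping logic.

```swift
/// Returns the rotation (in degrees) applied after a drag (hypothetical helper).
/// With snapping on, the angle rounds to the nearest multiple of `increment`;
/// halfway cases round away from zero, Swift's default `rounded()` rule.
func snappedRotation(_ degrees: Double, snapping: Bool, increment: Double = 15) -> Double {
    guard snapping else { return degrees }
    return (degrees / increment).rounded() * increment
}

print(snappedRotation(37, snapping: true))   // 30.0
print(snappedRotation(38, snapping: true))   // 45.0
print(snappedRotation(37, snapping: false))  // 37.0
```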

But I actually do want it to snap between the Sun sphere and the autumn text, so I'm going to turn snapping on. You can see it snapping into place. It's just super useful, super easy.

Then I want to modify this arrow a little bit. So, I can go ahead and click the icon next to snapping to modify it. I'm just going to zoom this in a little so we can see better. And I can grab this handle. Drag it.

I'm also just going to make this just a touch smaller, a little flatter.

Okay. And then I'm going to move it just a little bit more. Okay, so now everything is kind of blocked out, and we did that in just a couple minutes.

I actually want to replace these celestial bodies because they're all pretty indistinct right now and I want to import some custom USDZs.

And since I already have them placed exactly where I want, I'm going to do a replace. So I just tap on this object. I get this edit menu. Hit replace. And I'm going to import the Sun, and it'll just replace. And you can see it's the same scale and it's exactly in the right position that I wanted.

I can do the same with the Earth. So let's do that really quick. There it is. And the Moon, I'm just going to customize a little bit here with, let's see, the matte material. I can make the Moon any color I want, but I think I'm just going to pick grey for the sake of safety. And if I want to see any of these items a little better, I can just double tap on them to frame the object. It's a really easy way to get around the scene.

Or if I want to pull back and see the whole scene, I can double tap anywhere on the grid. Those shortcuts are here too: Frame Scene and Frame Selected.

Okay, so we're just about ready, I think, to add behaviors. I could add all of the other elements, but for the sake of time we won't do all of that now. There are a few tips I want to leave you with before we finish this part of the demo. Remember that replace you saw? If we had already applied behaviors to an object, say some animations on the Sun, those behaviors would stay when I replaced it. So, it's a huge time saver if you're swapping in new versions of content. It's really powerful.

And then also we mentioned having multiple scenes. You can access them here with the Scene Selector in the top left. And so if you wanted to add any more scenes, you just add them here.

If you're working with these entities in Xcode, you're going to want to name them; you'll see more about this later in the session. You can do that when you open the pane: there's a Configure panel, and you can name the entity there. I'm going to name this one Sun. Finally, if I did something I didn't want, let's say I moved the Earth by mistake, I can undo that action. I can also long press on undo and redo, and it'll tell me which action I'm about to undo.

Okay. Finally, some folks have asked what's the easiest way to share their Reality Composer projects. You can AirDrop them between your devices, or, as we talked about earlier, you can use Edit on iOS to go easily between your development machine and your iOS devices.

Okay.

So, that's our scene now, ready for Behaviors.

So, you just saw how to build scenes in Reality Composer.

So, we took you through how to lay stuff out quickly using the content library. We also showed you how to bring in your own custom USDZs. And now we're going to move on to adding interactions and behaviors. So, to take you through all that, let's welcome my colleague, Pau.

Thank you, Michelle.

So, now that we know how to bring content to the scene, it's time to bring all this content to life.

So, you might want to play some music when the user taps on an object, or maybe show some text when the user gets close to an object.

Or maybe you just want to play some USD animation.

And so in order to do that and more, we need to take advantage of Behaviors in Reality Composer.

So, let's get started.

As we've seen before, a scene in Reality Composer contains an anchor, objects, behaviors, and the physics world.

Behaviors are always scene-scoped. That means that they can only target objects within the same scene.

So, let's take a closer look at what the behavior is.

A behavior is composed of two items: the trigger and the action sequence.

The trigger, as the name suggests, is simply the criteria for the action sequence to begin.

So, let's say we have a scene with a model of a record player, and when the user taps on it, we want to play some music and spin the record player at the same time.

Well, in order to do that, you just need to create a new behavior, use a tap trigger, and then create an action sequence composed of a music action and a spin action.
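The trigger-plus-action-sequence structure can be written down as plain Swift data. This is a hypothetical model, not Reality Composer's file format or RealityKit's API; the file name `song.mp3` and the five-second spin are placeholders. Each step of the sequence is an array, so the music and spin actions can share one step and play together (groups are covered below).

```swift
// Hypothetical model of a behavior: a trigger plus an action sequence.
enum Trigger: Equatable {
    case sceneStart
    case tap(target: String)
    case proximity(target: String, distance: Double)
    case collide(String, String)
    case notification(name: String)
}

enum Action: Equatable {
    case playMusic(file: String)
    case spin(target: String, duration: Double)
}

struct Behavior {
    var name: String
    var trigger: Trigger
    /// Steps run one after another; the actions inside a step run together.
    var sequence: [[Action]]
}

// The record player example: tapping plays music and spins the model at once.
let playRecord = Behavior(
    name: "Play Record",
    trigger: .tap(target: "RecordPlayer"),
    sequence: [[.playMusic(file: "song.mp3"),
                .spin(target: "RecordPlayer", duration: 5)]]
)
```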

So, the first thing when creating a new behavior is to pick what trigger will begin the action sequence.

Reality Composer comes with five different triggers that you can use. With the start trigger, the action sequence will begin as soon as the scene is loaded. With the tap trigger, the action sequence will begin once the user taps on an object that you define.

With a proximity trigger, you define a threshold distance from the object to the camera.

Once the camera is close enough to the object, the action sequence will begin.

With the collision trigger, you define two objects, and once those two objects collide, the action sequence will begin.

And the last trigger is the notification trigger.

This trigger is what allows you to begin action sequences programmatically from your app and we'll take a look at this trigger in the last section of this talk.
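Of these triggers, proximity is the easiest to reason about numerically: compare the camera-to-object distance with the threshold. The sketch below assumes the trigger fires once, on the frame the camera first comes within range, and stays quiet while the camera remains inside; that edge-triggered behavior and the `ProximityTrigger` type itself are assumptions, not documented Reality Composer semantics.

```swift
struct Vec3 { var x, y, z: Double }

func distance(_ a: Vec3, _ b: Vec3) -> Double {
    let dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z
    return (dx * dx + dy * dy + dz * dz).squareRoot()
}

/// Hypothetical proximity trigger: fires when the camera enters range.
struct ProximityTrigger {
    let objectPosition: Vec3
    let threshold: Double        // meters, as configured on the trigger
    private var wasInside = false

    init(objectPosition: Vec3, threshold: Double) {
        self.objectPosition = objectPosition
        self.threshold = threshold
    }

    /// Call once per frame; returns true only on the frame the camera enters range.
    mutating func update(cameraPosition: Vec3) -> Bool {
        let inside = distance(cameraPosition, objectPosition) <= threshold
        defer { wasInside = inside }   // remember state for the next frame
        return inside && !wasInside
    }
}

var trigger = ProximityTrigger(objectPosition: Vec3(x: 0, y: 0, z: 0), threshold: 0.5)
// Camera positions over four frames: far away, entering, still inside, leaving.
let cameraPath = [Vec3(x: 2, y: 0, z: 0), Vec3(x: 0.3, y: 0, z: 0),
                  Vec3(x: 0.2, y: 0, z: 0), Vec3(x: 1, y: 0, z: 0)]
let fired = cameraPath.map { trigger.update(cameraPosition: $0) }
print(fired) // [false, true, false, false]
```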

So, now let's move into action sequences.

There are three main ways that you can modify the execution of action sequences.

Those are groups, loops, and exclusive action sequences.

So, let's start with groups.

By default in Reality Composer, the actions in an action sequence play sequentially, one after the other. But you can group multiple actions if you want to play them in parallel. So, in this example, actions two and three play together right after action one finishes, and action four begins only once all the actions in the group are over.
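The timing rule for groups (actions inside a group start together, and the next step waits for the whole group to finish) can be sketched as a small scheduler. `TimedAction` and `schedule` are hypothetical names, and the durations are made up to fit the four-action example.

```swift
/// A hypothetical action with a display name and a duration in seconds.
struct TimedAction {
    let name: String
    let duration: Double
}

/// Computes each action's start time. Every element of `steps` is a group:
/// its actions start together, and the next step begins only when the
/// longest action in the current group has finished.
func schedule(_ steps: [[TimedAction]]) -> [(name: String, start: Double)] {
    var clock = 0.0
    var starts: [(name: String, start: Double)] = []
    for group in steps {
        for action in group {
            starts.append((name: action.name, start: clock))
        }
        clock += group.map { $0.duration }.max() ?? 0
    }
    return starts
}

let plan = schedule([
    [TimedAction(name: "Action 1", duration: 1)],
    [TimedAction(name: "Action 2", duration: 2), TimedAction(name: "Action 3", duration: 3)],
    [TimedAction(name: "Action 4", duration: 1)],
])
for entry in plan { print(entry.name, entry.start) }
// Action 1 0.0
// Action 2 1.0
// Action 3 1.0
// Action 4 4.0
```

Action 4 starts at 4 seconds because it waits for the longer, three-second action in the group, not the shorter one.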

Action sequences can also be looped.

So, let's say you want some music in your scene that loops forever. You just need to create a behavior with a music action in its action sequence and mark that action sequence as looped.

And the last part is exclusive action sequences. In Reality Composer, you can mark an action sequence as exclusive. Only one exclusive action sequence plays at any one time: once an exclusive action sequence starts, any other running exclusive action sequence stops.

Nonexclusive action sequences play alongside other exclusive and nonexclusive action sequences. And that's pretty much all you need to know about action sequences. So now let's take a look at some of the actions available to you in Reality Composer.
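The exclusivity rule can be expressed as a tiny state machine. In this hypothetical `SequencePlayer`, starting an exclusive sequence removes any other running exclusive sequence, while nonexclusive sequences are left alone. What "stopping" does to an animation that is mid-flight is not modeled.

```swift
/// Hypothetical player enforcing the exclusivity rule described above.
struct SequencePlayer {
    var running: [(name: String, exclusive: Bool)] = []

    mutating func start(_ name: String, exclusive: Bool) {
        if exclusive {
            // Stop every other exclusive sequence that is still running.
            running.removeAll { $0.exclusive }
        }
        running.append((name: name, exclusive: exclusive))
    }

    var runningNames: [String] { running.map { $0.name } }
}

var player = SequencePlayer()
player.start("Ambient music", exclusive: false)
player.start("Intro", exclusive: true)
player.start("Tour", exclusive: true)   // stops "Intro", keeps the music
print(player.runningNames) // ["Ambient music", "Tour"]
```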

With the visibility actions, you can bring objects in and out of your scene using animations.

We support multiple types of animations, and you can use basic ones like fade in and fade out.

But you can also use more playful animations like scale up and then scale down.

Reality Composer also supports animating objects in place with the Emphasis action. Similar to the Show and Hide actions, you can animate the object with different motion types and animation styles.

So, you can use a basic pop, but you can also use a more playful pop animation. You can also spin your object or orbit it around other objects, and if you're using a USD asset in your file, you can play its transform and skeletal animations.

Now, with the move actions, you can translate, scale, and rotate an object in the scene. With Move By, the movement is relative to where the object is right now, like in this example, where the object always goes two units forward and one to the left. And with Move To, you move an object to a specified location in the scene.

Like here, where the object is going back to the origin of the scene.
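The difference between Move By and Move To comes down to relative versus absolute positions. This sketch assumes "forward" is -z and "left" is -x, as seen from a camera in RealityKit's right-handed, y-up frame; the `MoveAction` type is hypothetical.

```swift
struct Vec3: Equatable {
    var x, y, z: Double
    static func + (a: Vec3, b: Vec3) -> Vec3 {
        Vec3(x: a.x + b.x, y: a.y + b.y, z: a.z + b.z)
    }
}

enum MoveAction {
    case moveBy(Vec3)   // offset relative to the current position
    case moveTo(Vec3)   // absolute position in scene coordinates

    func apply(to position: Vec3) -> Vec3 {
        switch self {
        case .moveBy(let offset): return position + offset
        case .moveTo(let target): return target
        }
    }
}

// Two units forward (-z) and one to the left (-x), under the stated assumption.
let forwardAndLeft = MoveAction.moveBy(Vec3(x: -1, y: 0, z: -2))
let start = Vec3(x: 0, y: 0, z: 0)
let afterOne = forwardAndLeft.apply(to: start)      // (-1, 0, -2)
let afterTwo = forwardAndLeft.apply(to: afterOne)   // (-2, 0, -4): relative moves accumulate
let backHome = MoveAction.moveTo(Vec3(x: 0, y: 0, z: 0)).apply(to: afterTwo) // always the origin
```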

With the Look At, you can make an object always face the camera. Like in this example, where the horse will always face the user's device.
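One common way to implement a look-at (billboard) effect for objects standing on a horizontal plane is to rotate them only about the vertical axis. The sketch assumes a y-up frame in which an unrotated object faces +z and a positive yaw turns +z toward +x; whether Reality Composer's Look At also tilts objects up and down is not covered here.

```swift
import Foundation

/// Yaw angle (radians, about the vertical y-axis) that turns an object to face
/// the camera, ignoring any height difference between them.
func lookAtYaw(object: (x: Double, z: Double), camera: (x: Double, z: Double)) -> Double {
    atan2(camera.x - object.x, camera.z - object.z)
}

let horse = (x: 0.0, z: 0.0)
print(lookAtYaw(object: horse, camera: (x: 0.0, z: 2.0))) // 0.0: camera straight ahead
print(lookAtYaw(object: horse, camera: (x: 2.0, z: 0.0))) // about 1.5708 (90 degrees)
```

Recomputing this every frame from the camera's current position is what keeps the object turned toward the viewer as they walk around it.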

Now that we have all the animation actions, it's time to bring some sound to your experience. In Reality Composer, there are three actions that you can use to bring audio to your scene.

With Play Sound, the audio originates from the object itself, like here, where the horse is making a horse sound.

With Play Ambient, the sound will be scene-oriented. And with Play Music, the audio will play without any spatial transformation. So now let's do a quick demo and see how to bring some behaviors to the scene Michelle was building before.

Here we have a more complete scene compared to what Michelle was building. So, we have the Moon, the Earth, the Sun, and we have some text and some sunrays showing the different seasons.

So, let's bring up the Behaviors panel by clicking the Behaviors button in the top right corner. As you can see here, there are already some behaviors. Let's hit play and see what's going on in this scene.

So, you see here, there's no movement for the Moon, the Earth, or the Sun.

But the different rays and season labels are appearing in sequential order. So, let's go ahead and create a new behavior to animate the Moon, Earth, and Sun objects. For that, let's start by adding a new behavior.

We hit plus, and here we can see all the available presets that we can pick from. But for this demo, we're going to start with a custom behavior. The first thing is to pick which trigger we want to use to begin the action sequence. Because we want to start animating these objects when the scene is loaded, we can bring up the library and choose Scene Start.

Great. Now it's time to start with the action sequence. For this action sequence, we want the Earth to orbit around the Sun, and then the Moon to orbit around the Earth. And at the same time, we want to spin the Earth and the Sun.

So let's start with the orbits.

So, we can bring up the action library and select Orbit as the first action. The first thing when creating a new action is to define the targets of the action. In this case, the affected object is going to be the Earth, and the center is going to be the Sun.

Great. We also have properties like duration, revolutions, align to path, and orbit direction. For the direction, we want to use counterclockwise.

Now we can easily preview this action just by pressing the play button on the action card.

That was a bit fast. So, let's change the duration to something more suited to this scene. Let's start with 20 seconds. Change the duration, try again, and yeah, I think that duration looks right.

Now, let's bring the second orbit.

So, we can bring up the library again and select Orbit.

Now, the affected object is going to be the Moon and the center will be the Earth.

The duration, we want it to be the same as before.

And for the revolutions, because we are not trying to be physically accurate, we just want to have something that looks nice in the scene, we're going to use four. I think four is a good number. And now because the Moon is tidally locked with the Earth, we want the Moon to be aligned to the orbit. So, we have to select align to path.
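The two orbit settings can be checked with a bit of arithmetic: with the same 20-second duration, one revolution for the Earth and four for the Moon means the Moon sweeps four times the angle. The sketch works in a 2D ground-plane coordinate system (u, v) where counterclockwise means increasing angle; the `Orbit` type and the radii are hypothetical, and the Moon's position is the Earth's offset plus the Moon's own offset around the Earth.

```swift
import Foundation

/// Hypothetical model of an orbit action's settings.
struct Orbit {
    let radius: Double        // distance from the center object (hypothetical)
    let duration: Double      // seconds for the whole action
    let revolutions: Double   // full turns completed within `duration`

    /// Orbit angle in radians after `t` seconds, clamped to the action's duration.
    func angle(at t: Double) -> Double {
        let progress = min(max(t / duration, 0), 1)
        return 2 * Double.pi * revolutions * progress
    }

    /// Offset from the center; counterclockwise means the angle increases.
    func offset(at t: Double) -> (u: Double, v: Double) {
        let a = angle(at: t)
        return (u: radius * cos(a), v: radius * sin(a))
    }

    /// With "align to path", the body faces along the tangent of its orbit,
    /// which for a counterclockwise circle is the orbit angle plus 90 degrees.
    func alignedHeading(at t: Double) -> Double {
        angle(at: t) + Double.pi / 2
    }
}

let earthAroundSun = Orbit(radius: 0.5, duration: 20, revolutions: 1)
let moonAroundEarth = Orbit(radius: 0.1, duration: 20, revolutions: 4)

/// Moon position relative to the Sun: the Earth's offset plus the Moon's offset.
func moonPosition(at t: Double) -> (u: Double, v: Double) {
    let earth = earthAroundSun.offset(at: t)
    let moon = moonAroundEarth.offset(at: t)
    return (u: earth.u + moon.u, v: earth.v + moon.v)
}

// After 5 s the Earth has done a quarter turn and the Moon a full one.
print(earthAroundSun.angle(at: 5), moonAroundEarth.angle(at: 5))
```

Because the aligned heading advances exactly as fast as the orbit angle, an aligned Moon turns once per revolution, which is why align to path gives the tidally locked look.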

And the direction will also be counterclockwise.

So, let's give that a try and yeah, this animation looks right.

So now that we have the orbits out of our way, let's begin with the spin actions.

So for that, we bring in a spin action, and let's start with the Sun.

Same duration as before, so 20 seconds. And for iterations, I think four will be a good number.

And the same direction, so counterclockwise.

If we hit play, we see that that's a good speed for the spin.

Right. So, now, let's bring the last action in the sequence which is the spin for the Earth.

So, we create another spin. We select the Earth.

Same duration.

And because we want to have a higher number of iterations compared to the Sun, let's use 10 for this example.
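The spin settings translate directly into angular speeds: iterations × 360° / duration. With both spins lasting 20 seconds, the Sun's 4 iterations give 72° per second and the Earth's 10 iterations give 180° per second, so the Earth spins 2.5 times as fast. `degreesPerSecond` is a hypothetical helper for this calculation.

```swift
/// Angular speed implied by a spin action: full turns times 360 degrees,
/// spread over the action's duration in seconds.
func degreesPerSecond(iterations: Double, duration: Double) -> Double {
    360 * iterations / duration
}

let sunSpeed = degreesPerSecond(iterations: 4, duration: 20)     // 72.0
let earthSpeed = degreesPerSecond(iterations: 10, duration: 20)  // 180.0
print(earthSpeed / sunSpeed)                                      // 2.5
```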

And the direction, again, counterclockwise.

Let's give that a try and yeah, that looks right.

So now our action sequence is completely laid out. Let's try to play the whole action sequence, which we can do by pressing the play button in the Action Sequence header.

And what we see here is that only the Earth is moving. And that's because the action sequence is playing all the actions sequentially, one after the other.

What we want here, though, is for all these actions to run in parallel. For that, we can use drag and drop to drag the different cards on top of each other. Just by doing that, we create a group with the four actions.
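The total durations explain why only the Earth moved before grouping. Played sequentially, the four 20-second actions take 80 seconds, and only the first (the Earth's orbit) runs during the first 20; grouped, they all run in the same 20-second window. The `totalDuration` helper is a hypothetical illustration.

```swift
/// Total running time of an action sequence. Steps run one after another;
/// a grouped step lasts as long as its longest action.
func totalDuration(_ steps: [[Double]]) -> Double {
    steps.reduce(0) { total, group in total + (group.max() ?? 0) }
}

// Two orbits and two spins, 20 seconds each.
let actionDurations: [Double] = [20, 20, 20, 20]
let sequential = totalDuration(actionDurations.map { [$0] })  // 80.0: one at a time
let grouped = totalDuration([actionDurations])                 // 20.0: all together
```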

And now let's go ahead and try it. Thanks. So, let's hit play and see how all the behaviors play together. Now all the orbit and spin actions play at the same time as the text, and the rays appear in the scene as the Earth passes by.

Okay. Let's go back to the slides. So, that's how easy it is to bring interactions and behaviors to your scenes in Reality Composer.

Now, let's move on to physics. In order to create amazing, immersive AR experiences, you need your objects to fit right into the real world. You need your objects to move like real objects. And for that, we need to take advantage of the powerful physics engine available to you in Reality Composer.

So, let's take a look at it. This is an example of a scene that you can build in Reality Composer using physics.

As you can see here, the sphere falls because of gravity and collides with the pins. And right at the end, once it bounces into the green box, we show this winner text.

So, let's see how we can build this kind of scene. There are three different ways that you can influence the physics simulation in your scene.

Materials define how your objects interact with the scene and with other objects.

With the force action and gravity, you determine how forces play into your world. And with the collision trigger and collision shapes, you define how the collision mechanics work in your physics simulation.

So, let's start with materials. In Reality Composer, there are six different materials that you can use.

You define materials for the scene and for the objects.

So, let's say you use ice as the material of your object. This will make your object slide across the scene. If you use rubber instead, your object will be much more bouncy. Now, let's move on to forces.

Every scene has gravity enabled by default, with the value set to Earth's gravity, but you can change that to any value you want. With the force action, you can give your object an initial impulse: you define a direction and how much velocity you want to give the object. With that, you can build behaviors like this one, where a force is added once the user taps on the object.
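To get a feel for how a force action's initial velocity interacts with scene gravity, this sketch integrates a launched body with semi-implicit Euler steps until it returns to the ground. It ignores drag, friction, and collisions, assumes meters and seconds, and uses 9.81 m/s² (Earth) and 1.62 m/s² (the Moon) as example gravity values. It is not RealityKit's physics solver; `launch` is a hypothetical helper.

```swift
/// Integrates a body launched from ground level with velocity (vx, vy) under
/// constant downward gravity, using semi-implicit Euler steps, and returns the
/// time and horizontal distance until it comes back down to y = 0.
func launch(vx: Double, vy: Double, gravity: Double = 9.81, dt: Double = 0.001)
    -> (time: Double, distance: Double) {
    var x = 0.0, y = 0.0, velocityY = vy, t = 0.0
    repeat {
        velocityY -= gravity * dt   // gravity changes velocity first...
        x += vx * dt                // ...then positions advance with it
        y += velocityY * dt
        t += dt
    } while y > 0
    return (time: t, distance: x)
}

let onEarth = launch(vx: 1, vy: 2)                 // about 0.41 s in the air
let onMoon = launch(vx: 1, vy: 2, gravity: 1.62)   // about 2.47 s in the air
```

For a vertical launch speed v and gravity g, the flight time should approach 2v/g, which is a quick sanity check on both the integration and any gravity value you set for a scene.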

And now that your objects have materials and can be affected by forces, it's time to make them collide with each other.

So, by default in Reality Composer, objects don't participate in the physics simulation. If you want your objects to collide with other objects, you have to set the collide option. If you want your objects to be moved by the physics simulation, you need to set the simulate option.

Here you can see how the collide and simulate options affect the objects in the scene.

The collision mechanics are determined by the collision shapes of the objects in a scene.

In Reality Composer, there are three different shapes that you can use: box, sphere, and capsule.

Those shapes determine how your object moves in the scene and how it collides with other objects and the anchor.

And with the collide trigger, you can build behaviors like this one, where we have a collide trigger on the sphere and the box. That way, we can detect when the user wins this game and show the winner text.
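The collide trigger in this example fires when the sphere touches the box. A standard sphere-versus-box overlap test clamps the sphere's center onto the box to find the closest point and compares that distance with the radius. The sketch assumes an axis-aligned (unrotated) box and hypothetical sizes; it illustrates the geometry, not Reality Composer's collision code.

```swift
struct Vec3 { var x, y, z: Double }

struct Sphere { var center: Vec3; var radius: Double }

/// An axis-aligned box described by its center and half-extents.
struct Box { var center: Vec3; var halfExtents: Vec3 }

/// True when the sphere touches or overlaps the box.
func intersects(_ s: Sphere, _ b: Box) -> Bool {
    func clamp(_ v: Double, _ lo: Double, _ hi: Double) -> Double { min(max(v, lo), hi) }
    // Closest point on the box to the sphere's center.
    let px = clamp(s.center.x, b.center.x - b.halfExtents.x, b.center.x + b.halfExtents.x)
    let py = clamp(s.center.y, b.center.y - b.halfExtents.y, b.center.y + b.halfExtents.y)
    let pz = clamp(s.center.z, b.center.z - b.halfExtents.z, b.center.z + b.halfExtents.z)
    let dx = s.center.x - px, dy = s.center.y - py, dz = s.center.z - pz
    return dx * dx + dy * dy + dz * dz <= s.radius * s.radius
}

// Hypothetical sizes in meters: a 20 cm wide, 10 cm tall goal box.
let goal = Box(center: Vec3(x: 0, y: 0.05, z: 0), halfExtents: Vec3(x: 0.1, y: 0.05, z: 0.1))
let ballAbove = Sphere(center: Vec3(x: 0, y: 0.3, z: 0), radius: 0.05)
let ballTouching = Sphere(center: Vec3(x: 0, y: 0.12, z: 0), radius: 0.05)
print(intersects(ballAbove, goal), intersects(ballTouching, goal)) // false true
```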

And that's pretty much all we have to say about physics in Reality Composer.

So now I would like to hand it over to my colleague, Abhi, to talk you through how to build apps using Reality Composer. Abhi?

Hi everyone. My name is Abhi, and I'm super excited to be here today. Earlier in the session, we saw how with Reality Composer we can create amazing 3D experiences and then bring them to life with behaviors. Now, let's see how we can take those AR experiences and integrate them into our app. There are three different ways to get started.

The first is to create a new project from the RealityKit AR or Game template right in Xcode.

The second is to create a new project from Reality Composer.

And the third is to export a Reality file from Reality Composer and then include it inside your app.

To get started, let's talk about the two essential files: the Reality Composer project and the Reality file. We'll begin with the Reality Composer project. This is the project file for Reality Composer.

It's included automatically inside of RealityKit AR and Game templates.

It's previewable right inside of Xcode; we have Xcode integration.

And Xcode automatically exports your Reality Composer project as a Reality file during the build step. So, here we can see our Reality Composer project. In Xcode 11, we're able to preview it, and we're able to preview each of our scenes as well. In the upper right-hand corner, there's an Open in Reality Composer button, which launches Reality Composer right from Xcode. As I mentioned, if your Xcode project contains a Reality Composer project, we automatically generate a Reality file for you during the build step. It's included inside your application just like any other resource, and you can access it exactly the same way.

So, what is a Reality file? It's the central object your application uses to work with your AR experience. It contains all the data required for rendering and simulation, and it's optimized for RealityKit.

Like I said earlier, it's exported from Reality Composer or it's automatically exported by Xcode during the build step of your project. You can use a Reality file either by referencing it directly in your application or previewing it in AR Quick Look, and we encourage you guys to go check out the Advances in AR Quick Look session tomorrow to learn more about that.

Diving a little deeper, what's in a Reality file? Because it's exported from Reality Composer, it contains each of the scenes that you've created in Reality Composer (for example, we have two scenes here), and it also contains every object that you've created in the project. A scene in a Reality file is an anchor, specifically an anchor entity. So, it contains, for example, the horizontal anchor information that you set up in Reality Composer.

So, we thought one of the biggest difficulties in working with Reality files was having to access things by string. And because we have both the Reality Composer project and Xcode, we wanted to introduce a way to make this very easy, almost like working with a regular object. So we decided to do that with code generation in Xcode.

What code generation does is analyze the structure of your Reality Composer project and generate a real object that you can use inside your application. For example, here we have our Solar System Reality Composer project, and we automatically generate a SolarSystem object that you can use in your app.

So, the generated code gives you an application-specific API for your scenes, for the named entities Michelle mentioned earlier, for Notify actions, and for Notification Triggers. We'll get to those last two items later in the session. The first step in getting started with code generation is to give a name, right in Reality Composer, to everything you care about and want to access in code. You can do that here in the Settings pane under Configure. For example, here we've given the Sun, the Sun USDZ that we imported, the name Sun, and we'll use this name to access it in code.

So, how does our Reality Composer project get converted into an object we can use? At the top level, our Reality Composer project becomes, for example, SolarSystem.swift.

Each scene becomes an entity that has anchoring. This is really important, because it means that when you load a scene, you can add it right into your scene's anchors.

Additionally, any objects you set up get converted into entities. These are real RealityKit entities, and you can work with them exactly the same way as any other entity.

We also provide two different ways to load your scene: one synchronous and one asynchronous. Taking a look at loading synchronously, here we have our solar system example again, and we'd like to load the Seasons Chapter scene. We can do that with a single line of code: SolarSystem.loadSeasonsChapter(). We get back an anchor that we can then add into our view. It's super simple.

Next, if we want to load the Seasons Chapter, or any scene, asynchronously, we can use, for example, SolarSystem.loadSeasonsChapterAsync, and we'll get a result object. We can handle the success case, where we get the anchor, or the failure case, where we display the error to the user. And if we want to access the named entities in our scene, we can do so as easily as calling, for example, seasonsChapter.sun, .earth, and .moon. This gives us access to the entities we named in Reality Composer. It's just like working with any other object.
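As a rough sketch of handling that result object, the helper below unwraps the Result that a generated asynchronous load method hands to its completion handler. It assumes, as in the generated code we've seen, that the completion receives a Swift Result whose success value is an Entity conforming to HasAnchoring. The helper name loadedAnchor(from:reportError:) is hypothetical, not a RealityKit API.

```swift
import RealityKit

/// Hypothetical helper: unwraps the Result passed to a generated
/// `load…Async` completion handler. On success it returns the scene anchor;
/// on failure it forwards the error to `reportError` (for example, to show
/// an alert to the user) and returns nil.
func loadedAnchor<T>(from result: Result<T, Error>,
                     reportError: (Error) -> Void) -> T? where T: Entity, T: HasAnchoring {
    switch result {
    case .success(let anchor):
        return anchor
    case .failure(let error):
        reportError(error)
        return nil
    }
}
```

In an app, the call might look like SolarSystem.loadSeasonsChapterAsync { result in if let anchor = loadedAnchor(from: result, reportError: { print($0) }) { arView.scene.anchors.append(anchor) } }, where SolarSystem, loadSeasonsChapterAsync, and arView stand in for your own project's names.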

So, putting it all together with code generation in Xcode and Reality Composer, we can load our AR experience in our application with just two lines of code.

The first is loading our chapter or loading our scene, and the second is adding it right into our view. It's super easy.

So, that's code generation in Xcode. We also provide a way to access your Reality file directly, for example, when you're downloading content from a server and want to display it in your application. For that, we have RealityKit APIs.

To load a Reality file synchronously, you first get its URL, just like any other resource in your application bundle. Next, you call Entity.loadAnchor, passing the URL of the resource and the name of the scene you'd like to load, and this gives you back an anchor that you can use. Optionally, you can also use Entity.load, which loads the entity tree without the anchor information.
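Here's a minimal sketch of that synchronous path, assuming the Reality file ships in the app bundle with a .reality extension. The helper loadSceneAnchor(named:fromRealityFile:in:) and the RealityFileError type are hypothetical; Entity.loadAnchor(contentsOf:withName:) is the RealityKit call described above.

```swift
import Foundation
import RealityKit

/// Hypothetical error thrown when the Reality file isn't in the bundle.
enum RealityFileError: Error {
    case missingResource(String)
}

/// Synchronously loads one scene from a Reality file bundled with the app.
/// Entity.loadAnchor(contentsOf:withName:) returns the scene as an
/// AnchorEntity that keeps the anchoring set up in Reality Composer.
@MainActor
func loadSceneAnchor(named sceneName: String,
                     fromRealityFile fileName: String,
                     in bundle: Bundle = .main) throws -> AnchorEntity {
    // Look the file up like any other bundled resource.
    guard let url = bundle.url(forResource: fileName, withExtension: "reality") else {
        throw RealityFileError.missingResource(fileName)
    }
    return try Entity.loadAnchor(contentsOf: url, withName: sceneName)
}
```

For example, try loadSceneAnchor(named: "Seasons Chapter", fromRealityFile: "SolarSystem"), where both names are placeholders for your own scene and file. Swapping in Entity.load(contentsOf:withName:) would return the entity tree without the anchor information.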

There's also a way to load your Reality file asynchronously. The first step is exactly the same: just get the URL of the resource.

Then use Entity.loadAnchorAsync, passing in the URL and the name of the scene you'd like to load. This gives you a load request. Using the new Combine framework, we can receive the completion and then receive the value, and the value contains the anchor we'd like to load, so you can put it right into your AR view's scene. To access entities, you can call findEntity(named:) on the anchor, passing the name of the object you'd like to find, for example, Sun, Earth, and Moon.
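The asynchronous version can be sketched with Combine as below. RealityFileLoader and its method are hypothetical names; the class exists only to keep the AnyCancellable returned by sink alive until the load finishes, since releasing it cancels the subscription and the value would never arrive. The sketch assumes the load request delivers its result on the main thread, as RealityKit sample code that updates the scene from sink commonly does.

```swift
import Combine
import Foundation
import RealityKit

/// Hypothetical loader that holds on to Combine subscriptions so in-flight
/// loads aren't cancelled when the caller's scope ends.
@MainActor
final class RealityFileLoader {
    private var cancellables = Set<AnyCancellable>()

    /// Asynchronously loads the scene named `sceneName` from the Reality file
    /// at `url` and reports the anchor, or the load error, to `completion`.
    func loadSceneAnchor(from url: URL,
                         sceneName: String,
                         completion: @escaping (Result<AnchorEntity, Error>) -> Void) {
        Entity.loadAnchorAsync(contentsOf: url, withName: sceneName)
            .sink(receiveCompletion: { loadCompletion in
                // Only failures need forwarding; success arrives via receiveValue.
                if case .failure(let error) = loadCompletion {
                    completion(.failure(error))
                }
            }, receiveValue: { anchor in
                completion(.success(anchor))
            })
            .store(in: &cancellables)
    }
}
```

Once the anchor arrives, arView.scene.anchors.append(anchor) adds it to the view, and anchor.findEntity(named: "Sun") returns the entity named Sun in Reality Composer, or nil if there is none. A longer-lived loader would also want to prune finished subscriptions from the set.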

So, that's how easy it is to bring your AR experiences into your application with code generation in Xcode and with RealityKit. Next, let's talk about how our application can interact with our AR content. We can create amazing experiences right inside Reality Composer, but how do we actually work with them from code? As Pau mentioned earlier, we have a great set of built-in actions already, but there might be actions that you want to define yourself. To do that, we've created the Notify action. This is an action that you set up in Reality Composer.

It's called alongside your other actions, in exactly the order you set up. For example, if it's in a sequence, it's called at its place in that sequence.

It exposes a settable closure in your application code, so you can define the implementation of this action.

And just like accessing an entity through code generation, the Notify action is also accessible through your object.

So, in Reality Composer, we can set up a Notify action here and give it an identifier. This is the identifier we'll use in code to reference our Notify action and provide a custom implementation. In the structure of our generated object, the actions live alongside your entities inside the scene object.

So here we have an Actions class and an actions property.

Inside the Actions class, you'll see Notify actions named after the identifiers you've given them. So you can access them as simply as loadedAnchor.actions followed by the name of the action you care about. We also generate an allActions array, and you can do great things with it, just like any other collection in Swift: you can filter over it, you can iterate over it. Anything you can do with an array in Swift, you can do with this allActions property.

To actually set the implementation of a Notify action, you first access it through your loaded anchor, for example, seasonsChapter.actions.displayEarthDetails, and then provide the custom closure through onAction. The entity passed into this closure is the target entity you set up in Reality Composer. On the other side, to kick off action sequences from code: we already have a couple of great built-in triggers, such as Scene Start and Proximity, but there might be times you want to provide custom triggers, for example, selecting a segment of a segmented control or tapping a button. For that, we're providing the Notification Trigger.

So Notification Trigger is set up in Reality Composer.

It starts an action sequence or multiple sequences that have the same identifier.

It's posted from the runtime of your application.

And it's accessible by name in your code, just like Notify actions and your entities. You can set up a Notification Trigger right inside your behavior; here we've given it the identifier show gold star, and we'll use this name in code. Just like actions, it lives right alongside your entities in the generated code, inside a new Notifications class.

Inside Notifications, just like with Notify actions, you'll find Notification Triggers named after the identifiers you've given them in Reality Composer. And just like with Notify actions, there's an array you can filter or iterate over as well, called allNotifications.

You can post a Notification Trigger by calling its post method, and this fires the action sequence or sequences with the same identifier.

You can also pass in an optional set of overrides. This is really powerful for cases where you want to copy an entity and dynamically run an action sequence on only the new entity.

The overrides take as a key the name of the original target of the action in Reality Composer, and as the value the new target you pass in. So when the action sequence runs, it targets only the new entity. So, let's pull this all together and check it out in Xcode. Here I have my application. Over the course of the session, Pau and Michelle have built up the Seasons chapter of our Solar System lesson, and here I want to implement a second chapter based on the sizes of different celestial bodies. So here we have Xcode 11 with just our base application, and we've already included our Reality Composer project. Again, you can create a new project from the AR or Game template, or you can drag a Reality Composer project right into Xcode. We can preview the project right in Xcode here and go ahead and open it in Reality Composer.

Let's take a quick tour of this and the application before writing some code.

So, in our chapter we've set up a couple of entities and we've given our scene a name and we've given different entities that we care about a name. For example this gold star is called special star. This is the name we'll use in our code. Additionally, we've set up a couple of behaviors. So for example, for the Moon, we want to know when the Moon is tapped so we can store some application state, and also hide all the other text labels and only display the Moon's labels. And all of these actions happen at the same time because they're grouped.

Additionally, we have a notify action and we'll use this to know when the Moon is tapped inside of our application.

We've implemented the same action sequence for the Earth and the Sun as well.

We also have a Look At Camera action set up to run when the scene starts, so each of our text labels faces the camera no matter where we're looking. And we have a Hide on Start action sequence, which hides all the entities we want to show only at specific times, for example, the chapter-completed text and the text labels. Additionally, we have some action sequences that will be triggered from code, which we'll get to later in this demo. They scale the celestial bodies to their relative sizes, scale them back to the same size, display the chapter-completed text, begin the Earth orbit, and hide all the other celestial bodies.

And we have a cool little animation fired from a notification trigger that will show the gold star, spin it, and give it a bounce.

So let's jump back to our Xcode application now and do a quick tour. This application is pretty straightforward. It's a single view application. At the top level, we have an AR view; a segmented control to switch between the size and detail views; a Complete Lesson button, so the student can say they're done with the lesson and view the end of the chapter; and a detail view that shows more details as we tap on the Moon, the Earth, and the Sun. Additionally, we have some view models that drive that detail view, so it just shows some text, and some basic application logic to kick off our loading. Let's look at loading first.

So down here, the first thing you'll notice is we're using code generation in this project. So our Reality Composer project is automatically converted into an object that we can use. It's a real type, so we can use it right here for the size chapter property.

So for example, SolarSystemLesson.SizeChapter is a real type that we can use. We can go ahead and load our scene, so let's walk through this code as well. We load the size chapter asynchronously, then give the loaded anchor collision shapes so that tap triggers work, and then add it into our scene.
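A sketch of that load step follows. install(_:in:) is a hypothetical helper; generateCollisionShapes(recursive:) is the RealityKit call that creates collision shapes for the entities in a hierarchy, which the demo relies on so that tap triggers work.

```swift
import RealityKit

/// Hypothetical helper mirroring the demo's load handler: generate collision
/// shapes for the loaded scene's entities (recursively) so Reality Composer
/// tap triggers can detect touches, then add the scene anchor to the AR view.
@MainActor
func install<T>(_ sceneAnchor: T, in arView: ARView) where T: Entity, T: HasAnchoring {
    sceneAnchor.generateCollisionShapes(recursive: true)
    arView.scene.anchors.append(sceneAnchor)
}
```

Because the generated scene types are entities with anchoring, the anchor handed back by a generated async load can be passed straight in.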

Because we'll be using the size chapter later, we're storing it in the size chapter property and we're setting up notify actions, which we'll get to later. Right now it's not hooked up.

So let's go ahead and run this. We'll see that with just a few lines of code, we're bringing in our entire AR experience, and it should work exactly the same way it did in Reality Composer. So here we go. We have our scene and we see the content. For example, chapter completed is hidden, and when we tap on each of our celestial bodies, we see text automatically appear and face the camera no matter where we're looking, and the same with the Sun. So, that looks pretty great. With a few lines of code, we brought our entire Reality Composer project into Xcode. It's super simple. What I want to do next is actually fill in this detail view. You'll notice that when I tapped the Sun, for example, the detail view didn't actually get filled in. So let's jump back to Xcode and fill in the Notify actions.

Because our Notify actions are generated automatically, we also get an allActions array that we can filter over. We're going to filter it to get only the actions we care about, the ones that begin with display. So, let's walk through this code.

Because we set up Notify actions in our size chapter, we can jump back to Reality Composer to look at them. For each of the Moon, the Earth, and the Sun, right here, we have display Moon details, display Earth details, and display Sun details, and we can access these in code. We want to filter our Notify actions for only the ones that begin with display, because we want to set the same closure for each of them.

So, jumping back to Xcode, that's what we're doing here. We take sizeChapter.actions.allActions and filter it, getting the identifier from each Notify action object and checking that it starts with display.

Next, we iterate over all of the display actions and set the same closure for each using the onAction property.
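The filter-and-assign step can be sketched generically. NotifyActionLike is a hypothetical protocol introduced so the helper compiles on its own; it assumes the generated Notify action class exposes a String identifier and a settable onAction closure of type ((Entity?) -> Void)?, in which case an empty extension would conform it.

```swift
import RealityKit

/// Hypothetical protocol describing the parts of a generated Notify action
/// this helper needs. Assumption: the generated class has a String
/// `identifier` and a settable `onAction` closure receiving the target entity.
protocol NotifyActionLike: AnyObject {
    var identifier: String { get }
    var onAction: ((Entity?) -> Void)? { get set }
}

/// Filters `actions` to those whose identifier starts with `prefix`, assigns
/// the same `handler` to each, and returns the matching actions.
@discardableResult
func setHandler<A: NotifyActionLike>(_ handler: @escaping (Entity?) -> Void,
                                     forActionsWithPrefix prefix: String,
                                     in actions: [A]) -> [A] {
    let matching = actions.filter { $0.identifier.hasPrefix(prefix) }
    for action in matching {
        action.onAction = handler
    }
    return matching
}
```

With a hypothetical conformance such as extension SolarSystemLesson.NotifyAction: NotifyActionLike {}, the demo's loop would become setHandler(handler, forActionsWithPrefix: "display", in: sizeChapter.actions.allActions). Note that hasPrefix is case-sensitive, so the prefix must match the identifiers exactly as typed in Reality Composer.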

And we're taking the target of our notify action, in this case for the Moon it's the Moon, for the Sun it's the Sun, and for the Earth it's the Earth, and using that entity to display different view models.

So let's run this application now. What we'd like to see is a different view model appear when we tap on each of our celestial bodies.

There we go.

So we have our content, and now when we tap on the Moon, because we've set up a Notify action and we're handling it, we see the Moon's details right at the bottom here. When we tap on the Earth, we see the Earth's details, and when we tap on the Sun, we see the Sun's details. That looks pretty great.

We're also keeping some state. Once we've tapped on all three of the Earth, the Sun, and the Moon, meaning three different actions were called, we display a Complete Lesson button up here in the right-hand corner.
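That state tracking is ordinary app logic rather than RealityKit API. A small, hypothetical LessonProgress type like the one below could record which bodies' detail actions have fired and report the moment all of them have been seen; the type and its method names are illustrative only.

```swift
/// Hypothetical app-side state: records which celestial bodies' detail
/// actions have fired and reports when all required bodies have been seen,
/// which is when the app would reveal its Complete Lesson button.
struct LessonProgress {
    let required: Set<String>
    private(set) var viewed: Set<String> = []

    init(required: Set<String>) {
        self.required = required
    }

    /// True once every required body has been viewed at least once.
    var isComplete: Bool { required.isSubset(of: viewed) }

    /// Records a view; returns true only on the call that completes the lesson,
    /// so repeated taps don't re-trigger the button reveal.
    mutating func markViewed(_ name: String) -> Bool {
        let wasComplete = isComplete
        viewed.insert(name)
        return !wasComplete && isComplete
    }
}
```

Inside the shared onAction closure, calling progress.markViewed(target.name) and showing the button when it returns true would match the behavior shown in the demo.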

What we want to do now is use Notification Triggers to fire off the scale to relative sizes and scale to same size action sequences that we set up in Reality Composer, using the segmented control at the top and the button in the upper right-hand corner.

We can do this in a segmented control value-changed method. This is an Interface Builder action that we've set up and hooked up in Interface Builder itself.

And using our Notification Triggers, we can fire the scale to same size action sequence, which we set up in Reality Composer down here, and which scales each of our celestial bodies back to their original scale of 100%.

And when the size segment is selected, we want to scale our Sun to its size relative to the Earth.

To do that, we can fire our scale to relative sizes action sequence.

And finally, when the Complete Lesson button is tapped (this is another Interface Builder action that we've set up and hooked up), we'll fire the chapter completed Notification Trigger.

Because three different action sequences are hooked up to this Notification Trigger in Reality Composer, posting it fires all three of them. We'll fade out the existing content.

We'll then begin an orbit of our little Earth and little Moon. And we'll show the chapter completed section. So, let's run this now and see our application.

All right, so here we have our content. We can tap on the Moon, and everything works exactly as we set it up in Reality Composer. Now, when we tap the size segment while looking at the Earth and the Sun, we get a really good sense of just how big the Sun is compared to the Earth. The Earth is pretty tiny. So, that looks great. Let's size it back and complete our lesson. I'm going to tap the Complete Lesson button at the top right-hand corner.

And we'll see our content fade out, and we see chapter completed along with an orbit starting for our tiny Earth and Moon. That looks pretty great, and we can see how, with Notification Triggers and Notify actions, our application can work with our AR content. There's one last thing I want to do. We discussed posting with overrides, and I want to show you how we can use that to display dynamic content based on application state.

So because this is a lesson application, we want to display gold stars based on how well a student does.

For this session, I think we all did pretty great, so I'm just going to give us three stars. Let's see how we can set this up. This is a method we call from our display-details handler up above, where we're keeping state. This looks like a lot of code that I just pasted in, but let's walk through it.

So the first thing we're doing is accessing our special star entity that we set up in Reality Composer right here. We've given it the name special star, so we're now accessing it right in Xcode.

Next, we're triggering the show gold star action sequence right in code, so that will display the first gold star. But we want to display three.

So, we can do that by dispatching after a delay, two and a half seconds for this demo, and cloning the star.

Then we're going to position it to the right and up a little bit.

We add it to our scene. Then, using post with overrides, we pass in the original target of the action sequence along with the new target of the action, in this case the right star. We want to do the same thing for the left star, so we clone the star again.

Positioning it to the left and down a little bit, adding it to our scene, and again, posting with overrides, taking the original name and passing in the left star this time as the target of our action sequence.
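The clone, position, and add steps can be sketched as a helper that also builds the overrides dictionary. cloneForTrigger(_:offset:) is hypothetical, and the sketch assumes the generated trigger's post method accepts a [String: Entity] dictionary keyed by the original target's Reality Composer name, as described earlier in the session. Entity.clone(recursive:) and addChild(_:) are RealityKit APIs.

```swift
import RealityKit

/// Hypothetical helper: clones `original` (with its children), offsets the
/// copy from the original's position in the parent's coordinate space, and
/// adds the copy under the same parent. Returns the copy plus an overrides
/// dictionary keyed by the original's name, or nil if `original` has no
/// parent to attach the copy to.
@MainActor
func cloneForTrigger(_ original: Entity,
                     offset: SIMD3<Float>) -> (clone: Entity, overrides: [String: Entity])? {
    guard let parent = original.parent else { return nil }
    let copy = original.clone(recursive: true)
    copy.position = original.position + offset
    parent.addChild(copy)
    // The key is the original target's name; the value is the new target.
    return (copy, [original.name: copy])
}
```

The delayed right-hand star might then be DispatchQueue.main.asyncAfter(deadline: .now() + 2.5) { if let result = cloneForTrigger(star, offset: [0.15, 0.05, 0]) { sizeChapter.notifications.showGoldStar.post(overrides: result.overrides) } }, with star, sizeChapter, showGoldStar, and the offset standing in for your own names and values.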

So, let's build and run this app now. What we'd like to see is three gold stars appear, even though we've set up only one in Reality Composer. This is driven entirely by application logic.

So here's our scene again. We can walk through the lesson.

I think I'm done now, so I'll tap Complete Lesson. And we see one gold star appear.

We see a second gold star appear. And there's our third star. We did a great job, so we got three stars here.

So, that's how easy it is to load your AR content into your application, work with it using Notify actions to provide your own custom implementations, and trigger action sequences that you set up in Reality Composer using Notification Triggers. So, let's review what we learned today. We learned that with Reality Composer, we can create amazing AR experiences using the built-in modular assets, for example the sphere or the cube, and then bring those assets to life with behaviors and simple interactions.

Finally, we can bring those amazing AR experiences into our applications using code generation and deep integration with Xcode. We have already seen some amazing stuff being created by you guys using Reality Composer. We encourage you guys to keep creating. We're super excited to see the amazing AR applications that you can create.

We have some sessions and labs available to you guys today and tomorrow, and we encourage you guys to bring questions, your projects, anything else, we're happy to help you. Have a great rest of your WWDC. Thank you. [ Applause ]
