-

Introducing Accelerate for Swift

Accelerate framework provides hundreds of computational functions that are highly optimized to the system architecture your device is running on. Learn how to access all of these powerful functions directly in Swift. Understand how the power of vector programming can deliver incredible performance to your iOS, macOS, tvOS, and watchOS apps.

리소스

관련 비디오

WWDC21

-

비디오 검색…

Hello. My name is Simon Gladman and I'm with the Vector and Numerics group. In this presentation, I'll be talking about two topics. First, our new Swift Overlay for Accelerate. And second, measuring Accelerate's performance using the Linpack benchmark. Before we dive into the Swift Overlay, let's recap exactly what the Accelerate framework is. The primary purpose of Accelerate is to provide thousands of low-level math primitives that run on a CPU and support image and signal processing, vector arithmetic, linear algebra, and machine learning. Most of these primitives are hand tuned to the microarchitecture of the processor. This means we get excellent performance and this performance translates directly into energy savings. So, if you're an app developer and you use the Accelerate framework, not only will your application run faster, but you'll also use less battery life.

We provide the primitives across all of Apple's platforms. This includes not only macOS and iOS but watchOS and tvOS as well.

This means your users are going to have an overall better experience.

Accelerate's libraries are immensely powerful but up until now, their interfaces weren't that friendly to Swift developers. We've looked at four libraries and created new Swift-friendly APIs to make using Acclerate in Swift projects really easy. The four libraries we focused on are vDSP that provides digital signal processing routines including arithmetic on large vectors, Fourier transforms, biquadratic filtering, and powerful type conversion. vForce that provides arithmetic and transcendental functions including trig and logarithmic routines. Quadrature, that's dedicated to the numerical integration of functions. And vImage, that provides a huge selection of image processing functions and integrates easily with core graphics and core video. Accelerate gets its performance benefits by using vectorization. To understand vectorization, let's first look at a simple calculation over the elements of an array using scalar code.

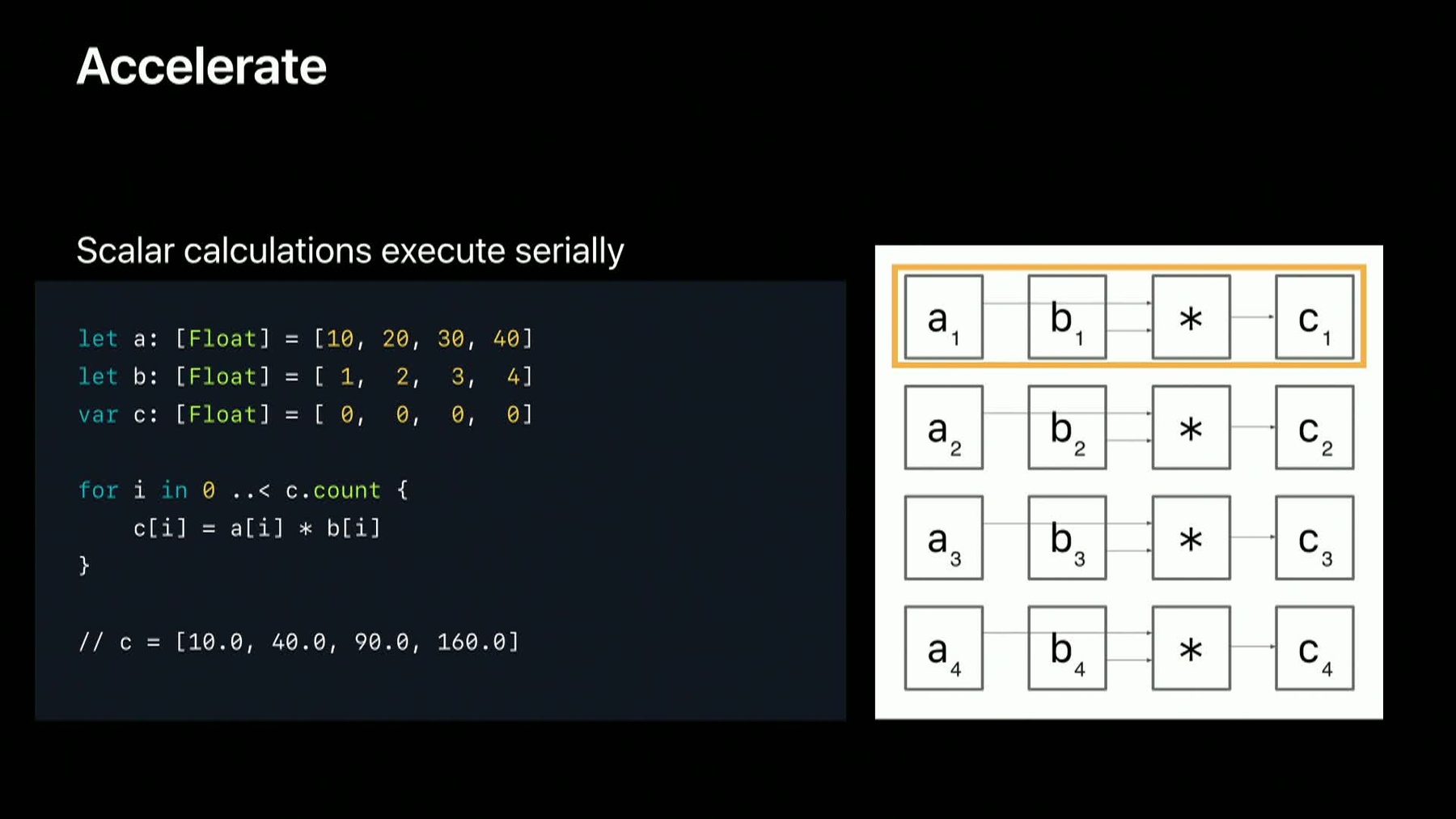

If, for example, you're writing code that multiplies each element of one array with the corresponding element in another, and you're using a four loop, each pair of elements are separately loaded, multiplied together, and the results stored.

So, after the first elements in A and B are multiplied together to calculate the first element in C, the second pair are processed. Then, the third. And, finally, the fourth. However, if you're processing the elements of an array using Accelerate, your calculation is performed on single instruction multiple data, or simD registers. These registers can perform the same instruction on multiple items of data by packing those multiple items into a single register. For example, a single 128-bit register can actually store four 32-bit floating point values. So, a vectorized multiply operation can simultaneously multiply four pairs of elements at a time.

This means that not only will the task be quicker, it will also be significantly more energy efficient.

The multiply function we just looked at part of Accelerate's digital signal processing library, vDSP. So, let's begin by looking at how the new Swift API simplifies using vDSP.

vDSP provides vectorized digital signal processing functions including Fourier transforms, biquadratic filtering, convolution, and correlation.

Furthermore, vDSP also provides some powerful, more general functions including element-wise arithmetic and type conversion.

So, even if you don't have an immediate need to, for example, compute the coherence of two signals, you may find that vDSP's general computation routines offer a solution to improve your app's performance.

Let's take a look at some basic arithmetic. An example could be given four arrays of single-precision values, you need to calculate the element-wise sum of two of the array's the element-wise difference in the other two, and multiply those results with each other. Using a four loop is a perfectly reasonable solution to this problem and calculates the expected results. Here's how you perform that calculation using vDSP's classic API.

Using vDSP is approximately three times faster than the four loop.

Here's the same computation using our new Swift API for vDSP. We're exposing the new Swift-friendly functions through our vDSP namespace and you can see the function and parameter names explain the operation. Because the new functions work with familiar types including arrays and array slices rather than pointers, you no longer need to explicitly pass the count. So, the entire function call is clearer and more concise.

Passing an initialized result array offers the best performance and you can obviously reuse that array in other operations for further performance benefits. However, we're also providing self-allocating functions. These make use of Swift's new ability to access an array's uninitialized buffer to return the result of a computation. Although not quite as fast as passing existing storage, it's still faster than the scalar approach and, in some cases, will simplify your code.

Another common task that vDSP can vectorize is type conversion. This example converts an array containing double precision values to 16-bit unsigned integer values rounding toward zero.

The scalar version uses map with explicit rounding. Again, this is a perfectly reasonable technique to use, but vDSP can vectorize this task to improve performance. In this example, vDSP is approximately four times faster than the previous scalar implementation.

The new Swift version of the vDSP function offers a clear interface. The function accepts a source array. The integer type you ought to convert each element to, and an enumeration to specify the rounding.

vDSP provides Fourier transforms for transforming one-dimensional and two-dimensional data between the time domain and the frequency domain. A forward Fourier transform of a signal decomposes it into its component sign waves. That's the frequency domain representation.

Conversely, an inverse transform of that frequency domain representation recreates the original signal and that's the time domain representation. Fourier transforms have many uses in both signal and image processing. For example, once an audio signal has been forward transformed, you can easily reduce or increase certain frequencies to equalize the audio.

The classic API is reasonably easy to follow if you're familiar with it. You begin by creating a setup object specifying the number of elements you want to transform and the direction. Then, after creating two arrays to receive results, you call the execute function. Once you're done, you need to remember to destroy the setup to free the resources allocated to it. The new API simplifies the instantiation of the setup object and the transform itself is a method with parameter names on the DFT instance. And now you don't need to worry about freeing the resources. We do that for you.

And much like the vDSP functions we've looked at, there's a self-allocating version of the transform function that creates and returns the result's arrays for you.

If you work with audio data, you may be familiar with biquadratic or biquad filtering. Biquad filters can be used to equalize audio to shape the frequency response, allowing you to, for example, remove either low or high frequencies.

vDSP's biquad feature operates on single and multichannel signals, and uses a set of individual filter objects called sections. The filters are cascaded; that is, they are set up in a sequence and the entire signal passes through each filter in turn.

The filters are defined by a series of coefficients that plug into the equation shown here. In this example, these values form a low pass filter; that is, a filter that reduces high frequencies. Here's the code using vDSP's classic API to create the biquad setup using the coefficients in the previous slide. And here's the code to apply that biquad filter to an array named signal, returning the result to an array named output. Let's look at the same functionality implemented with a new API.

As you can see, the new API vastly simplifies the creation of the biquad structure. You simply pass the coefficients to the biquad initializer and specify the number of channels and sections.

Applying the biquad filter to a signal is a single function call.

Now, let's look at the new API we've created for Accelerate's library for fast mathematical operations on large arrays, vForce.

vForce provides transcendental functions not included in vDSP. These include exponential, logarithmic, and trig operations.

A typical example of vForce would be to calculate the square root of each element in a large array. The scalar version of this code could use map.

vForce provides a vectorized function to calculate the square roots that in some situations can be up to 10 times faster than the scalar implementation.

The new Swift overlay offers an API that's consistent with the new vDSP functions and provides the performance and energy efficiency benefits of vectorization. And much like we've seen earlier, there's a self-allocating version that returns an array containing the square roots of each element in the supplied array.

Next, we'll take a look at the new API we've created for Quadrature. Quadrature is a historic term for determining the area under a curve. It provides an approximation of the definite integrative function over a finite or infinite interval. In this example, we'll use Quadrature to approximate the area of a semicircle, shown here in green, by integrating the functions shown.

Much like the Biquad code for vDSP, there's a fair amount of code required to use the existing Quadrature API. The first step is to define a structure that describes a function to integrate.

The second step is to define the integration options including the integration algorithm. Finally, with the function on options defined, you can perform the integration using the Quadrature integrate function.

The new API simplifies the code. One great advantage is that you can specify the integrand, that is, the function to be integrated, as a trading closure rather than as a C function pointer. This means you can easily pass values into the integrand.

Also note that integrators are now enumerations with associated values. So, there's no need to supply unnecessary points for interval or maximum intervals here. For example, you can pass the enumeration for the globally adaptive integrator specifying the points for interval and maximum intervals.

Now, let's look at the new API we've created for Accelerate's image processing library, vImage.

vImage is a library containing a rich collection of image processing tools. It's designed to work seamlessly with both core graphics and core video. It includes operations such as alpha blending, format conversions, histogram operations, convolution, geometry, and morphology.

Our new Swift API introduces lots of new features that makes using vImage in Swift easier and more concise. We've implemented flags as an option set. vImages throw Swift errors. And we've hidden some of the requirements for mutability and working with unmanaged types.

If you're working with core graphics images, there's a common workflow to get that image data into a vImage buffer.

First, you need to create a description of the CG images format.

Then, instantiate a vImage buffer. Initialize that buffer from the image. And finally, check for errors in a non-Swift way. And that's a lot of boilerplate code for a common operation.

The new API wraps up all of that code into a single throwable initializer.

However, since we're going to use a CG images format later, here's similar functionality implemented in two steps with a new API. We've added a new initializer to CG image format using a CG image, and an alternative buffer initializer that accepts a CG image and an explicit format description. Once you're finished working with a buffer, here's the classic vImage function to create a CG image from the buffer's contents.

And our new API simplifies that operation too with a new create CG image method that uses the format we've just generated from the image.

One important use case for vImage is converting between different domains and different formats. vImage's any-to-any convertors can convert between core video and core graphics, and convert between different core graphics formats.

For example, you might want to convert a CMYK core graphics image to RGB.

The existing API to create a convertor accepts the source and destination formats for the conversion and returns an unmanaged convertor.

You take the managed reference of the convertor and pass that to the function that does the conversion.

Our new API adds a new static make function to the existing convertor type that returns a convertor instance. The conversion is done with the convert method on the convertor instance.

Finally, let's look at working with core video image formats. In a typical example, you may want to create an image format description from a core video pixel buffer and calculate its channel count.

Here's the code required by the classic vImage API to create an image format description from a pixel buffer and get its channel count. The new API provides the same functionality in two lines of code. You create an instance of a core video image format from a pixel buffer using a new static make function. And simply access its channel count as a property.

That was a quick tour of a fraction of the new API. Let's now take a look at Linpack Benchmark and see just how much faster and more energy efficient Accelerate can be.

The Linpack Benchmark came out of the Linpack library which started as a set of routines for providing fast computational linear algebra. This was later subsumed by a library called LApack, which stands for Linear algebra package. LApack was developed to take advantage of these new things at the time called caches. LApack is comprised of many blocked algorithms. These algorit6thms are built on top of another library called BLAS, which stands for basic linear algebra subroutines. We'll talk more about BLAS later in this presentation. For now, keep in mind that the Linpack Benchmark runs on top of LApack, which runs on top of BLAS.

The Linpack Benchmark measures how quickly a platform can solve a general system of linear equations. It is comprised of two steps. The matrix factorization step, followed by the backsole step. By fixing the algorithm, we're able to see how well different platforms are at running the algorithm. This provides us with a method of comparing different platforms. The Linpack Benchmark has evolved over time. Originally, it solved a 100 by 100 system, and later a 1000 by 1000 system. The variant most often used today is the no holds barred variant, where the problem size can be as large as you want. This is the variant we will be running today. We are now going to compare Linpack performance on an iPhone 10S. At the top in orange, we're going to run an unoptimized Linpack. This Linpack Benchmark does not make use of the accelerate framework. It relies on software that is not tuned to the process that it is running on. Let's see what that looks like. We are now going to compare that with using the Accelerate framework; that is, we're going to run the same benchmark on the same platform, but using the Accelerate framework which is tuned to the platform.

We can see that by using the Accelerate framework, we are over 24 times faster. This will not only save time, but also energy, which improves battery life. We're now going to shift gears and take a look at the primary workhorse routine for the Linpack Benchmark called GEMM.

As I mentioned earlier, Linpack, which runs on LApack, is built on top of BLAS. Within BLAS is a routine called GEMM, which stands for general matrix multiplier. This routine is used to implement several other blocked routines in BLAS, which are used inside the blocked algorithms at LApack, most notably the matrix factorization and solver routines.

Because of this, GEMM is sometimes used as a proxy for performance. For this presentation, we are specifically going to look at the single-precision variant of GEMM.

Here, we're going to compare the performance of the Eigen library with that of Accelerate. Both the Eigen library and the Accelerate framework will run on top of an iPhone 10S. Both will be performing a single-precision matrix multiplier. Let's see how well Eigen does. Eigen tops out at about 51 gigaflops. Now, let's see how well Accelerate does. We can see that the Accelerate framework is almost two and a half times faster than Eigen on the same platform.

This is because the Accelerate framework is hand-tuned to the platform, allowing us to fully take advantage of what the platform can offer. So, if you're a developer, using Accelerate in your app will offer better performance. This performance translates into less energy, which means better battery life and an overall better experience for your users.

In summary, Accelerate provides functions for performing large-scale mathematical computations and image calculations that are fast and energy efficient. And now we've added a Swift-friendly API that makes Accelerate's libraries super easy to work with so your users will benefit from that performance and energy efficiency.

Please visit our site where we have samples, articles, and extensive reference material that covers the entire Accelerate framework. Thank you very much.

-