Edit and play back HDR video with AVFoundation

Find out how you can support HDR editing and playback in your macOS app, and how you can determine if a specific hardware configuration is eligible for HDR playback. We'll show you how to use AVMutableVideoComposition with the built-in compositor and easily edit HDR content, explain how you can use Core Image's built-in image filters to create your own AVMutableVideoComposition, and demonstrate how to create and use a custom compositor to enable HDR editing.

Hello and welcome to WWDC.

Hello, everyone. My name is Shu Gao. Today I'm going to talk about HDR video editing and playback using AVFoundation. Apple brought HDR playback to iOS a couple of years ago, and to macOS last year. This year, we're also adding HDR support in our frameworks to enable HDR video editing. In this session, I will show you how you can take advantage of that using AVFoundation. We will start with a very quick introduction to HDR, followed by a review of different video editing workflows. Then we'll talk about how to enable HDR in them. We will also discuss some finer controls you have when configuring HDR editing, mainly around color properties. At the end, we'll touch upon HDR playback.

Now, what's HDR? HDR stands for high dynamic range. It greatly extends the dynamic range of video above and beyond what standard dynamic range (SDR) video is capable of.

Because of this, you can produce more vibrant videos with brighter whites, deeper blacks, better contrast, and more detail in shadows and highlights.

To give you a quantitative idea, SDR video is limited to 100 candelas per square meter, or nits. HDR video can go 100 times brighter, all the way up to 10,000 nits.

HDR is often implicitly paired with Wide Color. The combination of Wide Color and high dynamic range can define a much larger color volume. In other words, it can produce colors you have never seen in any SDR video.
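To put a rough number on "much larger", the sketch below compares the areas of the BT.709 (SDR) and BT.2020 (Wide Color) primary triangles on the CIE 1931 xy chromaticity diagram, using the primaries published in those ITU-R recommendations. It is an illustration, not something from the session; xy area is not perceptually uniform and ignores the luminance axis, so it understates the full color-volume difference. The type and function names are hypothetical.

```swift
import Foundation

struct Chromaticity { let x: Double; let y: Double }

/// Area of the triangle spanned by three primaries (shoelace formula).
func gamutArea(_ r: Chromaticity, _ g: Chromaticity, _ b: Chromaticity) -> Double {
    abs(r.x * (g.y - b.y) + g.x * (b.y - r.y) + b.x * (r.y - g.y)) / 2
}

// ITU-R BT.709 primaries (SDR).
let bt709 = gamutArea(Chromaticity(x: 0.640, y: 0.330),
                      Chromaticity(x: 0.300, y: 0.600),
                      Chromaticity(x: 0.150, y: 0.060))
// ITU-R BT.2020 primaries (Wide Color).
let bt2020 = gamutArea(Chromaticity(x: 0.708, y: 0.292),
                       Chromaticity(x: 0.170, y: 0.797),
                       Chromaticity(x: 0.131, y: 0.046))

let ratio = bt2020 / bt709
print(String(format: "BT.2020 covers %.2fx the xy area of BT.709", ratio))
// prints "BT.2020 covers 1.89x the xy area of BT.709"
```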

To accommodate the larger luminance and color range, HDR is also typically associated with higher bit depths, with 10-bit common for distribution media.
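As a rough illustration of why bit depth matters, this sketch quantizes a normalized nonlinear signal to "narrow range" (video range) luma code values as defined in ITU-R BT.709 and BT.2100, the range used by formats such as kCVPixelFormatType_420YpCbCr10BiPlanarVideoRange. The helper name is hypothetical.

```swift
/// Maps a normalized signal E' in 0...1 to a narrow-range luma code value:
/// 16...235 at 8 bits, scaled by 2^(n-8) for higher bit depths.
func narrowRangeLumaCode(_ signal: Double, bitDepth: Int) -> Int {
    let clamped = min(max(signal, 0), 1)
    let scale = Double(1 << (bitDepth - 8))
    return Int(((219 * clamped + 16) * scale).rounded())
}

for depth in [8, 10] {
    let black = narrowRangeLumaCode(0, bitDepth: depth)
    let white = narrowRangeLumaCode(1, bitDepth: depth)
    print("\(depth)-bit: black=\(black) white=\(white) steps=\(white - black)")
}
// 8-bit: black=16 white=235 steps=219
// 10-bit: black=64 white=940 steps=876
```

The 10-bit encoding has four times as many steps between black and white, which is what lets HDR stretch its larger luminance and color range without visible banding.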

Transfer functions describe how scene-linear light is mapped to nonlinear code values in the video, and then back to display light.

HDR video uses either the HLG or the PQ transfer function. Apple supports both.
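The two transfer functions can be sketched in a few lines, using the constants published in ITU-R BT.2100. This is an illustration, not code from the session or an AVFoundation API: `pqToNits` and `nitsToPQ` implement the PQ EOTF and its inverse (absolute luminance, up to 10,000 nits), and `hlgOETF` implements the HLG OETF (relative scene light).

```swift
import Foundation

// SMPTE ST 2084 (PQ) constants, as listed in ITU-R BT.2100.
let m1 = 2610.0 / 16384.0
let m2 = 2523.0 / 4096.0 * 128.0
let c1 = 3424.0 / 4096.0
let c2 = 2413.0 / 4096.0 * 32.0
let c3 = 2392.0 / 4096.0 * 32.0

/// PQ EOTF: nonlinear code value E' in 0...1 -> display luminance in nits.
func pqToNits(_ signal: Double) -> Double {
    let p = pow(signal, 1.0 / m2)
    let y = pow(max(p - c1, 0) / (c2 - c3 * p), 1.0 / m1)
    return 10_000.0 * y
}

/// Inverse PQ EOTF: luminance in nits -> nonlinear code value E'.
func nitsToPQ(_ nits: Double) -> Double {
    let y = pow(nits / 10_000.0, m1)
    return pow((c1 + c2 * y) / (1 + c3 * y), m2)
}

// HLG OETF constants (ITU-R BT.2100).
let hlgA = 0.17883277
let hlgB = 1.0 - 4.0 * hlgA
let hlgC = 0.5 - hlgA * log(4.0 * hlgA)

/// HLG OETF: relative scene-linear light E in 0...1 -> nonlinear code value E'.
func hlgOETF(_ e: Double) -> Double {
    e <= 1.0 / 12.0 ? sqrt(3.0 * e) : hlgA * log(12.0 * e - hlgB) + hlgC
}

print(pqToNits(1.0))        // 10000.0, the PQ ceiling
print(nitsToPQ(100.0))      // about 0.508: SDR's 100 nits uses roughly half the PQ code range
print(hlgOETF(1.0 / 12.0))  // 0.5, where the square-root segment meets the log segment
```

PQ ties every code value to a fixed luminance, whereas HLG is relative to the display's peak brightness.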

To get a more detailed introduction to HDR, I recommend you check out the HDR Export session of this year's WWDC.

With this high-level concept of HDR, let's move on to review video editing workflows.

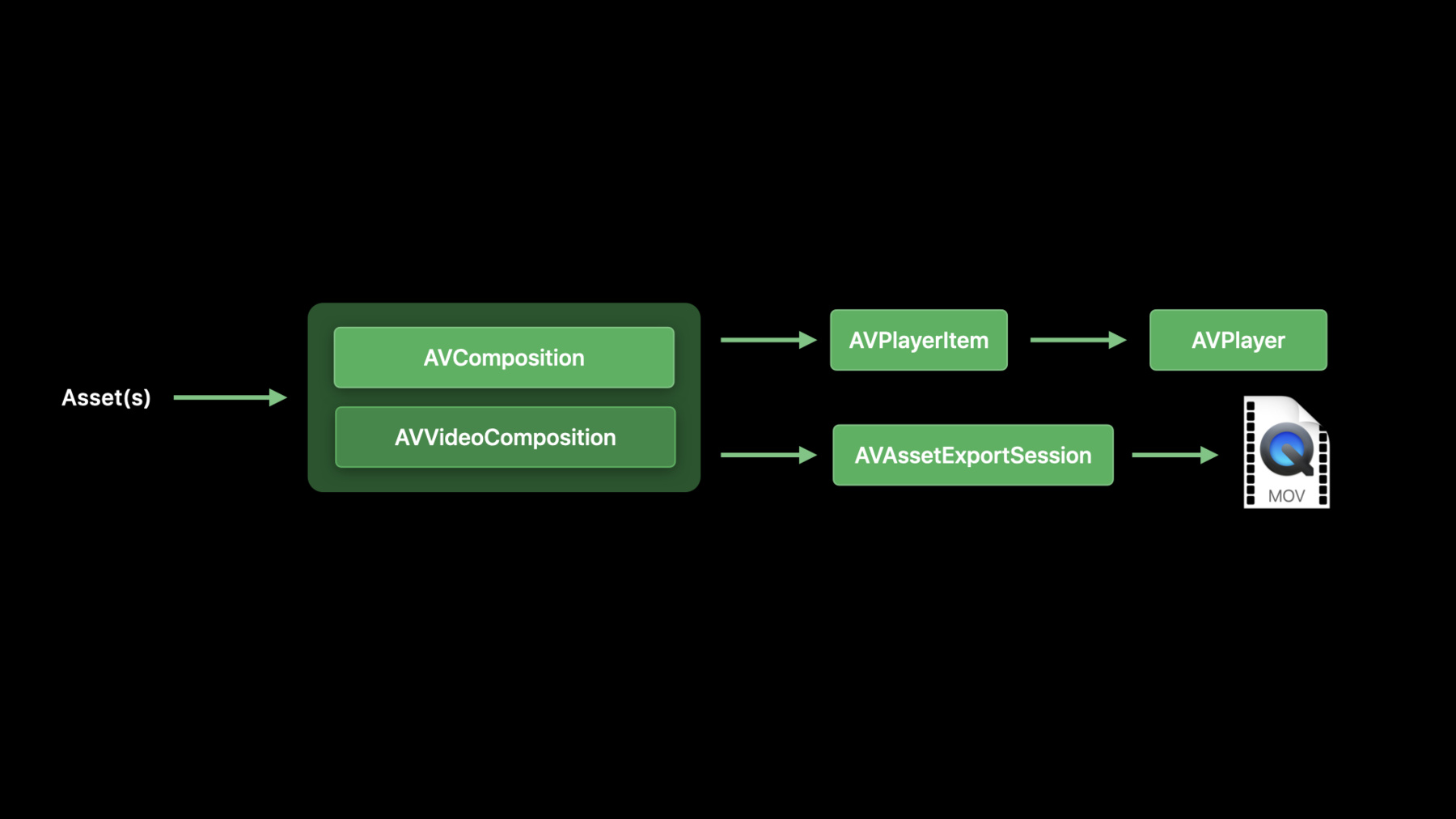

Before I get to the details, I would like to direct your attention to the previous WWDC sessions on video editing. If you're new to video editing, I highly recommend you watch the recordings of these talks. They will give you a basic understanding of the important classes we will discuss here. Here is a common workflow for video editing. You start with an asset, or a set of assets, then you build an AVComposition and an AVVideoComposition. These two objects together specify how to slice, splice, position and blend tracks in assets to achieve the artistic intent of your video editing. AVComposition specifies the temporal alignment of the source tracks. AVVideoComposition specifies the geometric transformation and blending of tracks at any given point in time.

We can use these two objects to construct an AVPlayerItem, and create an AVPlayer from it to play back the editing effects. This is the preview path of our workflow. We can also provide the AVComposition and AVVideoComposition to an AVAssetExportSession, which can write the editing results to a movie file.
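A minimal sketch of both paths, assuming you already have an AVComposition and an AVVideoComposition. The helper names are hypothetical, and the HEVC preset is only one possible choice; the HDR export session covers which presets and settings preserve HDR.

```swift
import AVFoundation

/// Preview path: attach the video composition to a player item and play it.
func makePreviewPlayer(composition: AVComposition,
                       videoComposition: AVVideoComposition) -> AVPlayer {
    let item = AVPlayerItem(asset: composition)
    item.videoComposition = videoComposition
    return AVPlayer(playerItem: item)
}

/// Export path: hand the same two objects to an export session.
func makeExportSession(composition: AVComposition,
                       videoComposition: AVVideoComposition,
                       outputURL: URL) -> AVAssetExportSession? {
    guard let session = AVAssetExportSession(asset: composition,
                                             presetName: AVAssetExportPresetHEVCHighestQuality) else {
        return nil
    }
    session.videoComposition = videoComposition
    session.outputURL = outputURL
    session.outputFileType = .mov
    return session
}
```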

Here is another workflow using a lower-level API. This is an export-only workflow; there's no preview path. We start with an asset and use an AVAssetReader to read the samples of the asset. The editing work is inserted in the AVAssetReaderVideoCompositionOutput object. This object contains an AVVideoComposition object that specifies the details of the editing intentions.

We can then extract the edited frames from the AVAssetReaderVideoCompositionOutput object and send them to an AVAssetWriter to write them out to a movie file.
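The reader side of this workflow can be sketched as follows. The function names are hypothetical, requesting 10-bit frames is an assumption about what your writer input accepts, and the writer itself (with HDR-capable output settings) is assumed to be configured and started already.

```swift
import AVFoundation

/// Reader output settings requesting 10-bit 4:2:0 frames so HDR sources are not
/// reduced to 8 bits (assumption: the writer input accepts this format).
func hdrReaderVideoSettings() -> [String: Any] {
    [kCVPixelBufferPixelFormatTypeKey as String: kCVPixelFormatType_420YpCbCr10BiPlanarVideoRange]
}

/// Pulls edited frames from an AVAssetReaderVideoCompositionOutput and appends them
/// to `writerInput`. Assumes the input's AVAssetWriter has already called
/// startWriting() and startSession(atSourceTime:). `completion` receives true
/// when the reader finished successfully.
func copyEditedFrames(from asset: AVAsset,
                      applying videoComposition: AVVideoComposition,
                      to writerInput: AVAssetWriterInput,
                      on queue: DispatchQueue,
                      completion: @escaping (Bool) -> Void) throws {
    let reader = try AVAssetReader(asset: asset)
    let output = AVAssetReaderVideoCompositionOutput(
        videoTracks: asset.tracks(withMediaType: .video),
        videoSettings: hdrReaderVideoSettings())
    output.videoComposition = videoComposition   // the editing is applied here
    guard reader.canAdd(output) else { throw CocoaError(.featureUnsupported) }
    reader.add(output)
    guard reader.startReading() else { throw reader.error ?? CocoaError(.fileReadUnknown) }

    writerInput.requestMediaDataWhenReady(on: queue) {
        while writerInput.isReadyForMoreMediaData {
            // A nil sample (reader done or failed) or a failed append ends the copy.
            guard let sample = output.copyNextSampleBuffer(), writerInput.append(sample) else {
                if reader.status == .reading { reader.cancelReading() }
                writerInput.markAsFinished()
                completion(reader.status == .completed)
                return
            }
        }
    }
}
```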

Now that we have reviewed the video editing workflows, let's look at how we can enable HDR in them. In each of the workflows we just reviewed, AVVideoComposition is at the center. Some of the workflows also need an AVComposition object, but it specifies only the temporal alignment of the tracks, so it is pretty much HDR agnostic.

The AVVideoComposition, on the other hand, has to do with blending and transformations, which must be HDR aware to support HDR editing.

When you preview your editing, you also want to make sure your system can play back HDR video. We will touch upon that toward the end of this session.

If you export your video composition, you want to make sure you select the right settings on your AVAssetExportSession or AVAssetWriter. There is a separate session this year that provides all the details you need to know on exporting HDR.

Now, assuming your display can show HDR video and your export settings support HDR, let's dig deep into AVVideoComposition and see what you need to do to enable HDR support there. There are three ways to construct an AVVideoComposition. We will discuss them one by one. The first one is to build it from the ground up using AVMutableVideoComposition, with the built-in compositor.

This is best suited if you want to blend multiple layers of video, and/or you want to apply frame level geometry transformations, like cropping, scaling, translation and rotation. The most common use case is for transition effects between two clips of video, like swipe, fade in, fade out, et cetera. This method is not good if you want to apply some filtering effects, like blurring, color tint or some fancy artistic effects. Let's look at a code snippet that builds AVVideoComposition in this way.

We start with an empty AVMutableVideoComposition and build it out. Note that the video composition instruction contains a complex hierarchy of parameters that instruct the compositor precisely how to blend or transform the layers.

You can also have an array of composition instructions, each covering a different segment of the timeline of your editing.

In our example here, there's only one element in the array. If you do have multiple video composition instructions, they must not overlap in time and must have no gaps in between. We will not expand on that in the interest of time.
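If you build several instructions, a quick sanity check for the no-overlap, no-gap rule can be sketched with CoreMedia's CMTimeRange. The function name is hypothetical, it only checks the two conditions stated here, and it does not replace AVFoundation's own validation.

```swift
import CoreMedia

/// Returns true when the instruction time ranges, taken in start order,
/// neither overlap nor leave a gap between neighbors.
func instructionRangesAreContiguous(_ ranges: [CMTimeRange]) -> Bool {
    let sorted = ranges.sorted { $0.start < $1.start }
    for (previous, next) in zip(sorted, sorted.dropFirst()) {
        // next.start < previous.end is an overlap; next.start > previous.end is a gap.
        if next.start != previous.end { return false }
    }
    return true
}
```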

We left the customVideoCompositorClass field unset. This tells the framework that we want to use the built-in compositor to carry out the composition instructions. Note that nothing here says anything about HDR. In fact, you may have a piece of code in your existing video editing app that looks just like this. Now, let's look at the code you need to add to make this AVVideoComposition HDR capable.
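Building the videoCompositionInstruction itself might look like this sketch of a fade-out, one of the transition effects mentioned earlier. It assumes a single video track and a composition at least one second long; the helper name and the 30 fps frame duration are illustrative choices. As in the session's 6:43 snippet, customVideoCompositorClass stays nil, so the built-in compositor carries out the instruction.

```swift
import AVFoundation

/// Hypothetical helper: fades `track` out over the last second of `duration`.
func makeFadeOutVideoComposition(track: AVAssetTrack,
                                 duration: CMTime,
                                 renderSize: CGSize) -> AVMutableVideoComposition {
    // Layer instruction: per-track opacity (and optionally transform) over time.
    let layerInstruction = AVMutableVideoCompositionLayerInstruction(assetTrack: track)
    let oneSecond = CMTime(value: 1, timescale: 1)
    let fadeRange = CMTimeRange(start: CMTimeSubtract(duration, oneSecond), duration: oneSecond)
    layerInstruction.setOpacityRamp(fromStartOpacity: 1.0, toEndOpacity: 0.0, timeRange: fadeRange)

    // One instruction covering the whole timeline.
    let instruction = AVMutableVideoCompositionInstruction()
    instruction.timeRange = CMTimeRange(start: .zero, duration: duration)
    instruction.layerInstructions = [layerInstruction]

    let videoComposition = AVMutableVideoComposition()
    videoComposition.instructions = [instruction]
    videoComposition.frameDuration = CMTime(value: 1, timescale: 30)
    videoComposition.renderSize = renderSize
    // customVideoCompositorClass is not set: the built-in compositor is used.
    return videoComposition
}
```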

Nothing. Apple has done the heavy lifting in the framework, especially in the built-in compositor, to do the right thing when the video frames are HDR. If you take your existing video editing app that uses the built-in compositor and, without modifying it, start to feed HDR video to it, chances are that you will get HDR video out, with the same composition instructions properly applied to the HDR sources.

Now, let's look at the second way of constructing an AVVideoComposition, which is to use the "applyingCIFiltersWithHandler" parameter in the constructor of AVMutableVideoComposition.

This is useful when you want to apply filtering effects and you are working on a single layer of video.

This method does not give you the ability to blend multiple layers. Let's look at the code.

You can see we are using a different constructor of the AVMutableVideoComposition class.

We pass in the asset we want to apply the composition on, and a handler that applies CIFilters to the frames of the first enabled video track of the asset.

Please note that only the first enabled video track of the asset gets filtered and comes out of the video composition.

When your handler is invoked, an AVAsynchronousCIImageFilteringRequest object is passed in.

You can use this request object to get the current frame of the input track as a CIImage object, suitable to send to a CIFilter to apply filtering effects.

Once you have a filtered output image, you can call "request.finish" to send it out.

This way of building the video composition frees you from setting up composition instructions and the other chores of constructing the AVVideoComposition object. It lets you focus on, and gives you full control over, how you want to filter the source images. In this example, we pass the sourceImage to the built-in "CIZoomBlur" CIFilter. You can create more complicated filtering effects by chaining multiple built-in CIFilters, or apply your own CIFilter built from your own CoreImage Metal kernel code.
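Chaining built-in filters can be sketched with CIImage's applyingFilter(_:parameters:). The function name, the choice of CIZoomBlur followed by CISepiaTone, and the parameter values are illustrative assumptions; the sketch assumes a finite-extent frame, which is what the filtering request provides.

```swift
import CoreImage

/// Hypothetical effect chain built only from built-in Core Image filters.
func applyEffectChain(to source: CIImage) -> CIImage {
    let center = CIVector(x: source.extent.midX, y: source.extent.midY)
    return source
        .applyingFilter("CIZoomBlur", parameters: [kCIInputCenterKey: center, "inputAmount": 10.0])
        .applyingFilter("CISepiaTone", parameters: [kCIInputIntensityKey: 0.5])
        .cropped(to: source.extent)   // keep the output frame the same size as the input
}
```

Inside the handler, the call would be `request.finish(with: applyEffectChain(to: request.sourceImage), context: nil)`.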

Now, how do we make this type of AVVideoComposition support HDR? Again, the answer is that mostly you don't need to do anything.

If your handler is calling into CoreImage's built-in CIFilters, all of them can handle HDR sources.

The only thing you need to worry about is if you are using your own CIFilter, built from your own Metal CoreImage kernel code, in your handler. In the Metal CoreImage kernel code, you need to be aware of the extended dynamic range that comes with HDR. Let's look at a couple of examples.

This is a piece of Metal CoreImage kernel code that is aware of the extended dynamic range. The intention of this filter is to highlight any pixel that exceeds the normal 0 to 1 range. If the intensity of any of the r, g, or b channels exceeds 1, we paint that pixel ultra-bright red. You may have noticed that we include the CoreImage header here, and the syntax is a bit different from your normal Metal code. This type of code is called a "Metal CoreImage kernel." If you want to learn more about it, you can go to this session in this year's WWDC.
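To use a kernel such as HDRHighlight from a filtering handler, you load it from a compiled Metal library. A minimal sketch, assuming the .metal file is built as a Core Image kernel (the -fcikernel Metal compiler option) into the app's default.metallib; adjust the resource name for your build. The HDRHighlightFilter class is hypothetical.

```swift
import CoreImage
import Foundation

/// Hypothetical CIFilter wrapper around the HDRHighlight Metal CoreImage kernel.
final class HDRHighlightFilter: CIFilter {
    @objc dynamic var inputImage: CIImage?

    // Loaded once; nil if the library or function cannot be found.
    private static let kernel: CIColorKernel? = {
        guard let url = Bundle.main.url(forResource: "default", withExtension: "metallib"),
              let data = try? Data(contentsOf: url) else { return nil }
        return try? CIColorKernel(functionName: "HDRHighlight", fromMetalLibraryData: data)
    }()

    override var outputImage: CIImage? {
        guard let input = inputImage, let kernel = HDRHighlightFilter.kernel else { return nil }
        // The kernel runs per pixel; with a floating-point working format,
        // values above 1.0 reach it intact.
        return kernel.apply(extent: input.extent, arguments: [input])
    }
}
```

In the handler, set `filter.inputImage = request.sourceImage` and pass `filter.outputImage` to `request.finish(with:context:)`.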

Here is an example of an existing SDR Metal CoreImage filter that may break down with HDR. This is a color inverter. In the SDR world, you assume the maximum intensity is 1 and subtract each channel's intensity from 1. With HDR frames, where intensities can exceed 1, the resulting intensity can become negative.
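A CPU model of the ColorInverter kernel makes the failure concrete, using SIMD4<Float> as an RGBA pixel. The `invert(_:peak:)` variant is one possible HDR-aware alternative, an assumption rather than the session's recommendation: it inverts relative to a caller-supplied peak value and clamps at zero.

```swift
/// CPU model of the SDR-only ColorInverter kernel.
func sdrInvert(_ p: SIMD4<Float>) -> SIMD4<Float> {
    SIMD4(1 - p.x, 1 - p.y, 1 - p.z, 1)
}

/// Hypothetical HDR-aware variant: invert relative to `peak`, never below zero.
func invert(_ p: SIMD4<Float>, peak: Float) -> SIMD4<Float> {
    SIMD4(max(peak - p.x, 0), max(peak - p.y, 0), max(peak - p.z, 0), 1)
}

let sdrWhite = SIMD4<Float>(1, 1, 1, 1)
let hdrHighlight = SIMD4<Float>(2.5, 1.25, 0.5, 1)   // extended-range values above 1.0

print(sdrInvert(sdrWhite))      // SIMD4<Float>(0.0, 0.0, 0.0, 1.0)
print(sdrInvert(hdrHighlight))  // SIMD4<Float>(-1.5, -0.25, 0.5, 1.0)
```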

Next, let's talk about the third way of creating an AVVideoComposition, which is the most flexible way, using a custom compositor. It allows you to blend multiple video layers, do frame-level geometry transformations on each layer, and it also gives you control to apply filtering effects to each layer.

With this greater flexibility, you need to do more yourself. First, you need to define a custom compositor class. The framework will use your class to create a custom compositor object, and maintain its lifecycle, to do the actual compositing work.

To construct the AVVideoComposition object, just like in the built-in compositor case, you start with an empty AVMutableVideoComposition, and you build it up.

The difference here is that you tell the framework about your custom compositor class by setting the customVideoCompositorClass field of the video composition.

What do you need to do to enable HDR on this type of AVVideoComposition? It's not free like in the previous two cases.

The first, obvious thing is that the actual work you do in your custom compositor needs to be HDR capable. Most HDR sources come in 10-bit pixel formats. Your custom compositor needs to be able to handle them.

When you are manipulating pixel values, you need to be aware of the extended dynamic range brought by HDR sources.

After you have done all that, there are two more groups of things you need to set on the custom compositor class to inform the framework that you are capable of doing HDR work. Number one, indicate that you support 10-bit or higher bit depth pixel formats for both input and output. Number two, indicate that your custom compositor can accept HDR source frames as its input. Let's look at the code.

This custom compositor is set up properly to support HDR. First, we set both sourcePixelBufferAttributes and requiredPixelBufferAttributesForRenderContext to include 10-bit pixel formats.

Fill in the formats that you actually support. The framework will make sure you get source frames in one of the listed pixel formats, and create the render context for your output frame in the format you can support. It will do format conversions if necessary.
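For example, a compositor that really handles both 10-bit 4:2:0 YCbCr variants and half-float RGBA could advertise all three, and check incoming buffers against the same list while debugging. The list and helper names are hypothetical; advertise only what your compositor actually processes.

```swift
import CoreVideo

// Hypothetical list of HDR-capable formats this compositor handles.
let hdrCapablePixelFormats: [OSType] = [
    kCVPixelFormatType_420YpCbCr10BiPlanarVideoRange,
    kCVPixelFormatType_420YpCbCr10BiPlanarFullRange,
    kCVPixelFormatType_64RGBAHalf,
]

// Usable for both sourcePixelBufferAttributes and requiredPixelBufferAttributesForRenderContext.
let hdrPixelBufferAttributes: [String: Any] = [
    kCVPixelBufferPixelFormatTypeKey as String: hdrCapablePixelFormats,
]

/// Debug check: does a frame handed to startRequest use one of the advertised formats?
func isAdvertisedFormat(_ buffer: CVPixelBuffer) -> Bool {
    hdrCapablePixelFormats.contains(CVPixelBufferGetPixelFormatType(buffer))
}
```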

Next is the supportsHDRSourceFrames field. This is a new field introduced for HDR. You must set it to true to welcome HDR source frames into your custom compositor. If you don't have this field, or set it to false, the framework will assume you cannot handle HDR frames and will convert them to standard dynamic range before sending them to you. So don't forget to set this field after all your hard work to actually handle HDR in your custom compositor.

Like I stated in the introduction, HDR video normally comes with Wide Color, so you also need to make sure your custom compositor can handle Wide Color. Actually, the framework assumes that if you are capable of handling HDR, you are also capable of handling Wide Color. Here I'm setting supportsWideColorSourceFrames to true explicitly.

The startRequest function is where you do the actual work of the composition. As mentioned before, the work you do needs to be aware of the extended dynamic range of HDR. Next, let's see a demo of an HDR-enabled custom compositor in action.
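A minimal startRequest can be sketched with Core Image doing the pixel work. This is a hypothetical pass-through compositor, not the session's sample: it takes the first source track, renders it into a buffer from the render context, and finishes the request. It assumes Core Image's default floating-point working format keeps values above 1.0, and it leaves color management to Core Image's defaults.

```swift
import AVFoundation
import CoreImage

/// Hypothetical pass-through compositor; filtering would go where the CIImage is created.
final class PassThroughHDRCompositor: NSObject, AVVideoCompositing {
    private let ciContext = CIContext()   // thread-safe, reused across requests

    private static let tenBit: [String: Any] = [
        kCVPixelBufferPixelFormatTypeKey as String: [kCVPixelFormatType_420YpCbCr10BiPlanarVideoRange]
    ]

    let sourcePixelBufferAttributes: [String: Any]? = PassThroughHDRCompositor.tenBit
    let requiredPixelBufferAttributesForRenderContext: [String: Any] = PassThroughHDRCompositor.tenBit
    let supportsHDRSourceFrames = true
    let supportsWideColorSourceFrames = true

    func renderContextChanged(_ newRenderContext: AVVideoCompositionRenderContext) {}

    func startRequest(_ request: AVAsynchronousVideoCompositionRequest) {
        guard let trackID = request.sourceTrackIDs.first?.int32Value,
              let source = request.sourceFrame(byTrackID: trackID),
              let output = request.renderContext.newPixelBuffer() else {
            request.finish(with: NSError(domain: "PassThroughHDRCompositor", code: -1, userInfo: nil))
            return
        }
        // Any per-pixel work applied to `image` must tolerate values outside 0...1.
        let image = CIImage(cvPixelBuffer: source)
        ciContext.render(image, to: output)
        request.finish(withComposedVideoFrame: output)
    }
}
```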

Depending on whether your streaming link is HDR or not, you may not be able to see this demo in HDR, but the video editing effect should still be evident. This is the live preview produced by a custom compositor using a custom CIFilter to add filtering effects.

It is an upgraded version of the HDR-highlighting Metal CoreImage kernel code we saw earlier. Here, instead of painting the HDR region a solid color, we use rolling stripes. The bright stripes you see are our proof that the source video is actually HDR.

If you download our sample code and run it on the new macOS, you will experience the high dynamic range of the ultra-bright red stripes.

So far, we have talked about different ways of constructing AVVideoComposition for your video editing, and how to enable HDR support on them.

Next, we will go further and talk about how you can control some aspects of the HDR behavior once you enable it, mainly the color properties of your video composition. If you do just the bare minimum to turn on HDR support and leave everything else at its default, what happens when different types of video come into your video composition? This table shows the output format of the video composition given different mixtures of inputs.

Apple supports two types of HDR video, categorized by their transfer functions, namely HLG and PQ.

So you have three possible types of input: HLG, PQ, and SDR. If you don't specify the composition color properties, the framework follows a policy that prefers HLG over PQ, and PQ over SDR. This table shows how the policy plays out for two video inputs; it extends easily to more inputs. To change this behavior, you can specify the color properties of your video composition in your AVVideoComposition object, namely the colorPrimaries, colorTransferFunction, and colorYCbCrMatrix fields.
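The default policy amounts to picking the "highest" input category. A tiny model of it, with hypothetical names (this is not an AVFoundation API, just a way to reason about what the table shows):

```swift
/// The three input categories, ordered by the default policy's preference.
enum DynamicRange: Int, Comparable {
    case sdr = 0, pq = 1, hlg = 2

    static func < (lhs: DynamicRange, rhs: DynamicRange) -> Bool { lhs.rawValue < rhs.rawValue }
}

/// Output category when no composition color properties are set:
/// HLG wins over PQ, and PQ wins over SDR. Works for any number of inputs.
func defaultOutput(for inputs: [DynamicRange]) -> DynamicRange? {
    inputs.max()
}

print(defaultOutput(for: [.sdr, .pq])!)   // pq
```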

If you set these three properties to values with the HLG transfer function, the output of your composition will always be HLG, regardless of your input video format. Likewise, you can set them to PQ or SDR values.

Under the hood, the framework converts the input source frames to the specified color properties before it sends them to the compositor, be it the built-in compositor or a custom compositor. Remember I mentioned earlier in this session that if you have an existing video editing app that uses the built-in compositor and you start feeding HDR sources to it, chances are that you will get HDR output without modifying your code. Well, I said "chances are" for a reason.

If your app does not explicitly specify these color properties in the AVVideoComposition, the default behavior of the built-in compositor will produce HDR video. If, however, your app is explicitly setting these properties to SDR color space, then the built-in compositor will respect that setting and convert the HDR sources to SDR before compositing.

There are scenarios in which this is actually what you want. For example, you want to attach your edited HDR video to an e-mail, and you know the recipient doesn't have a system capable of playing back HDR video. In this case, you want to produce an SDR version of your editing. Let's look at some typical values for these color properties.

Here is a table showing example values of the video composition color properties you can set. Note that if you want your composition to be in HLG, you must set the color transfer function to "HLG." If you want your composition to be in PQ, you must set the transfer function to "PQ." For either HLG or PQ, you normally want to set the colorPrimaries and YCbCrMatrix to ITU_R_2020, which are associated with Wide Color.
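The typical values can be captured in a small helper. The enum and function are hypothetical; the constants are AVFoundation's, with AVVideoTransferFunction_SMPTE_ST_2084_PQ being the PQ transfer function and AVVideoTransferFunction_ITU_R_2100_HLG the HLG one.

```swift
import AVFoundation

/// Hypothetical shorthand for the three composition color spaces.
enum CompositionColorSpace { case hlg, pq, sdr }

func applyColorProperties(_ space: CompositionColorSpace,
                          to videoComposition: AVMutableVideoComposition) {
    switch space {
    case .hlg, .pq:
        // Both HDR options use Wide Color (BT.2020) primaries and matrix.
        videoComposition.colorPrimaries = AVVideoColorPrimaries_ITU_R_2020
        videoComposition.colorYCbCrMatrix = AVVideoYCbCrMatrix_ITU_R_2020
        videoComposition.colorTransferFunction = space == .hlg
            ? AVVideoTransferFunction_ITU_R_2100_HLG
            : AVVideoTransferFunction_SMPTE_ST_2084_PQ
    case .sdr:
        videoComposition.colorPrimaries = AVVideoColorPrimaries_ITU_R_709_2
        videoComposition.colorYCbCrMatrix = AVVideoYCbCrMatrix_ITU_R_709_2
        videoComposition.colorTransferFunction = AVVideoTransferFunction_ITU_R_709_2
    }
}
```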

We have talked about video compositions. Now let's look at the playback path.

Like we reviewed earlier, to play back your editing, you build your pipeline like this. After you construct the AVPlayerItem from your AVComposition and AVVideoComposition, you create an AVPlayer to play that AVPlayerItem, just like you have been doing for any playback.

There's nothing special here. You would expect your HDR video composition to be displayed in HDR, right? This is not always the case. You have to make sure that you are on a system that is capable of playing back HDR. That's obvious, but how do you find out if you are on such a system? This is where the eligibleForHDRPlayback API comes to the rescue.

Instead of remembering which platform supports HDR playback, you can query this eligibleForHDRPlayback field on the AVPlayer class to get an answer.

Note this is a class var, so you don't need to have an AVPlayer instance to query. If the property is "true," it means, number one, your system is capable of consuming HDR video, and number two, there is at least one display, built in or external, that can display HDR video. I would like to point out here that HDR playback is not available on watchOS and macOS Catalyst.

Here is example code that sets the video composition color properties based on HDR playback eligibility. If the system is not capable of playing back HDR, we don't want to waste processing power composing in HDR, so we set the color properties to SDR.

Please note that this field describes playback only. A system that is not capable of playing back HDR may still be able to export HDR. You can still set your composition color properties to HDR for your export path. You can check out the "HDR Export" session of this year's WWDC for information on supported platforms for HDR export.
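One way to keep an SDR preview while still exporting HDR is to derive a separate video composition for export. A minimal sketch, assuming HLG is the export target you want; the function name is hypothetical, and mutableCopy() leaves the preview composition untouched.

```swift
import AVFoundation

/// Returns an HLG copy of a (possibly SDR) preview video composition for export.
func makeHLGExportComposition(from preview: AVVideoComposition) -> AVMutableVideoComposition {
    // AVVideoComposition's mutableCopy() returns an AVMutableVideoComposition.
    let export = preview.mutableCopy() as! AVMutableVideoComposition
    export.colorPrimaries = AVVideoColorPrimaries_ITU_R_2020
    export.colorTransferFunction = AVVideoTransferFunction_ITU_R_2100_HLG
    export.colorYCbCrMatrix = AVVideoYCbCrMatrix_ITU_R_2020
    return export
}
```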

Now, let's summarize what we have discussed today. First and foremost, HDR video editing is available. You have been on the consuming side of the HDR story; now you can help your users get onto the production side. You can enable them to express their creativity in HDR through HDR video editing. By reviewing video editing workflows, we saw that AVVideoComposition is at the center of video editing. The good news is that there is no additional work to enable HDR if you are using the built-in compositor, or if you are using applyingCIFiltersWithHandler with built-in CIFilters to create your AVVideoComposition.

If you are using a custom compositor, there is some additional work: indicating that you support 10-bit or higher pixel formats, and that you support HDR and Wide Color source frames, in addition to making the actual work you do HDR capable.

Then we talked about how you can control the composition color properties, and what the default behavior is if you leave them unset.

Last, we talked about the eligibleForHDRPlayback API you can use to check whether your system supports HDR playback. Thank you very much for participating in this session. We hope you have a great time at WWDC, and we look forward to your HDR-enabled video editing apps.

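6:43 - Create AVVideoComposition with the built-in compositor

```swift
// Create AVVideoComposition with the built-in compositor
import AVFoundation

// videoCompositionInstruction and assetSize are defined elsewhere in the app.
let videoComposition = AVMutableVideoComposition()
videoComposition.instructions = [videoCompositionInstruction]
videoComposition.frameDuration = CMTimeMake(value: 1, timescale: 30)
videoComposition.renderSize = assetSize
// customVideoCompositorClass is left unset, so the built-in compositor is used.
```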

9:55 - Create AVVideoComposition using “applyingCIFiltersWithHandler”

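```swift
// Create AVVideoComposition using "applyingCIFiltersWithHandler"
import AVFoundation
import CoreImage

// `asset` is the AVAsset being edited (defined elsewhere).
let videoComposition = AVMutableVideoComposition(asset: asset, applyingCIFiltersWithHandler: { request in
    // Filter the current frame of the first enabled video track with a built-in CIFilter.
    guard let ciFilter = CIFilter(name: "CIZoomBlur") else {
        request.finish(with: request.sourceImage, context: nil)   // pass the frame through unchanged
        return
    }
    ciFilter.setValue(request.sourceImage, forKey: kCIInputImageKey)
    request.finish(with: ciFilter.outputImage ?? request.sourceImage, context: nil)
})
```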

10:57 - First CIKernel

```metal
// HDRHighlight.metal
#include <metal_stdlib>
#include <CoreImage/CoreImage.h>
using namespace metal;

// Paints any pixel whose r, g, or b exceeds 1.0 (extended range) in ultra-bright red.
extern "C" float4 HDRHighlight(coreimage::sample_t s, coreimage::destination dest)
{
    if (s.r > 1.0 || s.g > 1.0 || s.b > 1.0)
        return float4(2.0, 0.0, 0.0, 1.0);
    else
        return s;
}
```

11:22 - Color Inverter CI Kernel

```metal
// ColorInverter.metal - not HDR ready
#include <metal_stdlib>
#include <CoreImage/CoreImage.h>
using namespace metal;

// Assumes intensities never exceed 1.0; with HDR input, 1.0 - s can go negative.
extern "C" float4 ColorInverter(coreimage::sample_t s, coreimage::destination dest)
{
    return float4(1.0 - s.r, 1.0 - s.g, 1.0 - s.b, 1.0);
}
```

12:23 - Custom compositor class

```swift
// Custom compositor class
class SampleCustomCompositor: NSObject, AVVideoCompositing {
    …
}

// Create AVVideoComposition with custom compositor class
let videoComposition = AVMutableVideoComposition()
videoComposition.instructions = [videoCompositionInstruction]
videoComposition.frameDuration = CMTimeMake(value: 1, timescale: 30)
videoComposition.renderSize = assetSize
videoComposition.customVideoCompositorClass = SampleCustomCompositor.self
```

13:58 - Setting custom compositor to support HDR

```swift
// Setting custom compositor to support HDR
class SampleCustomCompositor: NSObject, AVVideoCompositing {
    // Accept 10-bit source frames...
    var sourcePixelBufferAttributes: [String : Any]? = [
        kCVPixelBufferPixelFormatTypeKey as String: [kCVPixelFormatType_420YpCbCr10BiPlanarVideoRange]]
    // ...and render output frames in a 10-bit format.
    var requiredPixelBufferAttributesForRenderContext: [String : Any] = [
        kCVPixelBufferPixelFormatTypeKey as String: [kCVPixelFormatType_420YpCbCr10BiPlanarVideoRange]]
    // Without this, the framework converts HDR sources to SDR before calling startRequest.
    var supportsHDRSourceFrames = true
    var supportsWideColorSourceFrames = true

    func startRequest(_ request: AVAsynchronousVideoCompositionRequest) { ... }

    func renderContextChanged(_ newRenderContext: AVVideoCompositionRenderContext) { }
}
```

21:01 - AVPlayer API definition

```swift
// API definition
extension AVPlayer {
    @available(macOS 10.15, *)
    open class var eligibleForHDRPlayback: Bool { get }
}
```

21:41 - AVPlayer API definition 2

```swift
// API definition
extension AVPlayer {
    @available(macOS 10.15, *)
    open class var eligibleForHDRPlayback: Bool { get }
}

// Set video composition color properties based on HDR playback eligibility
if AVPlayer.eligibleForHDRPlayback {
    // HLG with Wide Color (BT.2020) primaries and matrix
    videoComposition.colorPrimaries = AVVideoColorPrimaries_ITU_R_2020
    videoComposition.colorTransferFunction = AVVideoTransferFunction_ITU_R_2100_HLG
    videoComposition.colorYCbCrMatrix = AVVideoYCbCrMatrix_ITU_R_2020
} else {
    // SDR (BT.709)
    videoComposition.colorPrimaries = AVVideoColorPrimaries_ITU_R_709_2
    videoComposition.colorTransferFunction = AVVideoTransferFunction_ITU_R_709_2
    videoComposition.colorYCbCrMatrix = AVVideoYCbCrMatrix_ITU_R_709_2
}
```
