
Author fragmented MPEG-4 content with AVAssetWriter

Transform your audio and video content into fragmented MPEG-4 files for a faster and smoother HLS streaming experience. Learn how to work with the fragmented MPEG-4 format, generate fragmented content from a movie, and set up AVAssetWriter to create fragments for HLS output.


Hello and welcome to WWDC.

Hello. My name is Takayuki Mizuno. I am a CoreMedia engineer at Apple. This session is about a new feature of AVFoundation for writing fragmented MP4 files for HLS. Here is a diagram that shows a typical workflow for HLS. There is a source material. This may be a video on demand material or live material. There is a part that encodes media data. There the video samples are encoded to, for example, HEVC, and the audio samples are encoded to, for example, AAC.

Then there is a segmenter here...

which segments media data in a specific format.

The segmenter also creates a playlist that lists those segments at the same time.

Finally, there is a web server that hosts the content. AVFoundation provides new features that are useful mainly for what the segmenter does.

AVFoundation has AVAssetWriter to allow media authoring applications to write media data to a new file of a specified container type such as MP4. We enhanced it to output the media data in fragmented MP4 format for HLS.

Apple HLS supports other formats such as MPEG-2 transport stream, ADTS and MPEG audio. But this new feature is specific to fragmented MP4. Here is an example of a use case.

My example is that the source is video on demand material.

AVFoundation has AVAssetReader, which is an object to read sample data from a media file.

You read sample data from a source movie, push the samples to AVAssetWriter and write the fragmented MP4 segment files.

Another example is that the source is live material.

AVFoundation has AVCaptureVideoDataOutput and AVCaptureAudioDataOutput, which are objects to provide you with captured sample data from a connected device.

You receive sample data from a device, push the samples to AVAssetWriter and write the fragmented MP4 segment files.

Fragmented MP4 is a streaming media format based on the ISO base media file format. Apple HTTP Live Streaming has supported fragmented MP4 since 2016. Here's a basic diagram of a regular MP4 file. It starts with a file type box that indicates which of the file formats this file conforms to.

There is a movie box that organizes the information about all the sample data, then there is a media data box that contains all the sample data. The movie box contains the information relevant to the samples as a whole, such as the audio and video codecs.

It also contains references to the sample data such as location of the sample data. The order of most of those boxes is arbitrary.

The file type box has to come first, but the other boxes can come in pretty much any order.

If you have used AVAssetWriter, you may know that AVAssetWriter has a movieFragmentInterval property. This specifies the frequency at which movie fragments will be written.

The resulting movie is what is called a fragmented movie. Here is a basic diagram of a fragmented movie file.

There are movie fragment boxes in this movie, each of which references the sample data held in the media data box that precedes it.

This format structure is useful, for example, for live capture since even if writing is unexpectedly interrupted by a crash or something, data that is partially written is still accessible and playable.

Please note that in this fragmented movie case, if writing finishes successfully, AVAssetWriter defragments the file as the last step. So, this ends up making a regular movie file.

Here is a basic diagram of a fragmented MP4 file. The file type box comes first, and the movie box comes after the file type box. Then there is a movie fragment box, which is followed by a media data box. After that, further pairs of a movie fragment box and a media data box follow. The boxes should appear in this order.

The major difference between a fragmented movie and fragmented MP4 is that the movie box of fragmented MP4 does not contain references to the sample data. It only contains the information relevant to the samples as a whole, and the order of the movie fragment box and the media data box is reversed.

Now I'll talk about how to use AVAssetWriter. This shows how to create an instance of AVAssetWriter. You do not provide an output URL, since AVAssetWriter does not write a file but outputs data. You just have to specify the output content type. Fragmented MP4 conforms to the MP4 family, so you should always specify a UTType created from AVFileType.mp4.

You create an AVAssetWriterInput. In this example, compressionSettings, which is a dictionary, is provided to encode the media samples. Alternatively, you can set the outputSettings to nil, which is called passthrough mode. In passthrough mode, AVAssetWriter just writes the samples as they are given. Then you add the input to the AVAssetWriter.

Here is how to configure AVAssetWriter. You have to specify outputFileTypeProfile.

You should specify Apple HLS or CMAF compliant profile to output the media data in fragmented MP4 format.

You specify preferredOutputSegmentInterval.

AVAssetWriter outputs the media data at that interval. This interval can be a rational time, but the target segment duration for HLS must be an integer number of seconds, so a whole-second value with no greater precision is the right choice here. You also specify initialSegmentStartTime...

which is the starting point of the interval.

Then you specify a delegate object that implements a designated protocol.

I will talk about the protocol later. After this, the same process as normal file writing continues.

You start writing, then append the samples using AVAssetWriterInput.

I'm not actually going to talk about this anymore in this session, but if you watch the WWDC session "Working with Media in AV Foundation" from 2011, you will see how to perform those steps. You can find the session video on Apple's Developer site.

Here is the protocol for the delegate methods. There are two methods. Both delegate methods deliver the fragmented media data as NSData.

They also deliver AVAssetSegmentType...

that indicates a type of the fragmented media data.

You should implement one of them. The difference is that the second one delivers an AVAssetSegmentReport object. That object contains the information about the fragmented media data. If you do not need such information, you should implement the first one.

AVAssetSegmentType has two types...

initialization and separable. Fragmented media data with type of initialization contains the file type box and the movie box. Fragmented media data with type of separable contains one movie fragment box and one media data box.

You will receive data with a type of separable successively.

You package the media data you received into what is called a segment and write the segment to files.
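As a rough illustration of this step, here is a minimal sketch of a delegate that writes each piece of fragmented media data to its own file. The class name `SegmentFileWriter`, the helper `segmentFileName`, and the file names `init.mp4` and `segment<N>.m4s` are hypothetical choices for this example, not names from the session or its sample code. The sketch also assumes that the video track report's duration is what you want to record for each segment.

```swift
import Foundation
#if canImport(AVFoundation)
import AVFoundation
#endif

/// Hypothetical naming scheme: "init.mp4" for the initialization segment,
/// "segment<N>.m4s" for each separable segment.
func segmentFileName(isInitialization: Bool, index: Int) -> String {
    isInitialization ? "init.mp4" : "segment\(index).m4s"
}

#if canImport(AVFoundation)
@available(macOS 11.0, iOS 14.0, tvOS 14.0, *)
final class SegmentFileWriter: NSObject, AVAssetWriterDelegate {
    private let directory: URL
    private var nextIndex = 1
    /// Video durations in seconds, one per separable segment, kept for a playlist.
    private(set) var segmentDurations: [Double] = []

    init(directory: URL) {
        self.directory = directory
        super.init()
    }

    func assetWriter(_ writer: AVAssetWriter,
                     didOutputSegmentData segmentData: Data,
                     segmentType: AVAssetSegmentType,
                     segmentReport: AVAssetSegmentReport?) {
        let name: String
        switch segmentType {
        case .initialization:
            // File type box plus movie box.
            name = segmentFileName(isInitialization: true, index: 0)
        case .separable:
            // One movie fragment box plus one media data box.
            name = segmentFileName(isInitialization: false, index: nextIndex)
            nextIndex += 1
            if let video = segmentReport?.trackReports.first(where: { $0.mediaType == .video }) {
                segmentDurations.append(video.duration.seconds)
            }
        @unknown default:
            return
        }
        do {
            try segmentData.write(to: directory.appendingPathComponent(name))
        } catch {
            print("Failed to write \(name): \(error)")
        }
    }
}
#endif
```

Writing one file per delivery is only one of the packaging options; the same data could instead be appended to a single file.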

The pair of the file type box and the movie box...

should be an initialization segment.

And a pair of a movie fragment box and a media data box...

can be one segment.

For HLS, a single file that contains all of those segments can be used for streaming.

Or each segment can be divided into multiple segment files.

Moreover, multiple pairs of movie fragment boxes and media data boxes can be one segment and stored in one segment file.

HLS uses Playlist. Playlist is the centerpiece of how the client finds out about the segments.

Here is an example of Playlist. There are several tags that indicate information about segments, such as URL and the duration. AVAssetWriter does not write the playlist. You should write the playlist. That is why one of the delegate methods delivers AVAssetSegmentReport. You can create the playlist and the I-frame playlist based on the information AVAssetSegmentReport provides.
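To make the idea concrete, the following is a small sketch that builds a media playlist string from a list of segment file names and durations. It is not the fmp4Writer sample code. The type `PlaylistSegment`, the function `makeMediaPlaylist`, and the file names are hypothetical, and in a real application the durations would presumably come from the AVAssetSegmentReport objects delivered to the delegate. The tags are standard HLS tags, but which ones your content needs depends on your use case.

```swift
import Foundation

/// One entry in the playlist (hypothetical helper type).
struct PlaylistSegment {
    let fileName: String
    let duration: Double  // seconds
}

/// Builds a minimal video-on-demand media playlist for fragmented MP4 segments.
func makeMediaPlaylist(initFileName: String, segments: [PlaylistSegment]) -> String {
    // The target duration is an integer that no segment duration may exceed.
    let longest = segments.map(\.duration).max() ?? 0
    let targetDuration = Int(longest.rounded(.up))

    var lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:7",
        "#EXT-X-TARGETDURATION:\(targetDuration)",
        "#EXT-X-PLAYLIST-TYPE:VOD",
        "#EXT-X-INDEPENDENT-SEGMENTS",
        // The initialization segment (file type box and movie box).
        "#EXT-X-MAP:URI=\"\(initFileName)\"",
    ]
    for segment in segments {
        lines.append(String(format: "#EXTINF:%.5f,", segment.duration))
        lines.append(segment.fileName)
    }
    lines.append("#EXT-X-ENDLIST")
    return lines.joined(separator: "\n") + "\n"
}

let playlist = makeMediaPlaylist(
    initFileName: "init.mp4",
    segments: [
        PlaylistSegment(fileName: "segment1.m4s", duration: 6.0),
        PlaylistSegment(fileName: "segment2.m4s", duration: 6.0),
    ]
)
print(playlist)
```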

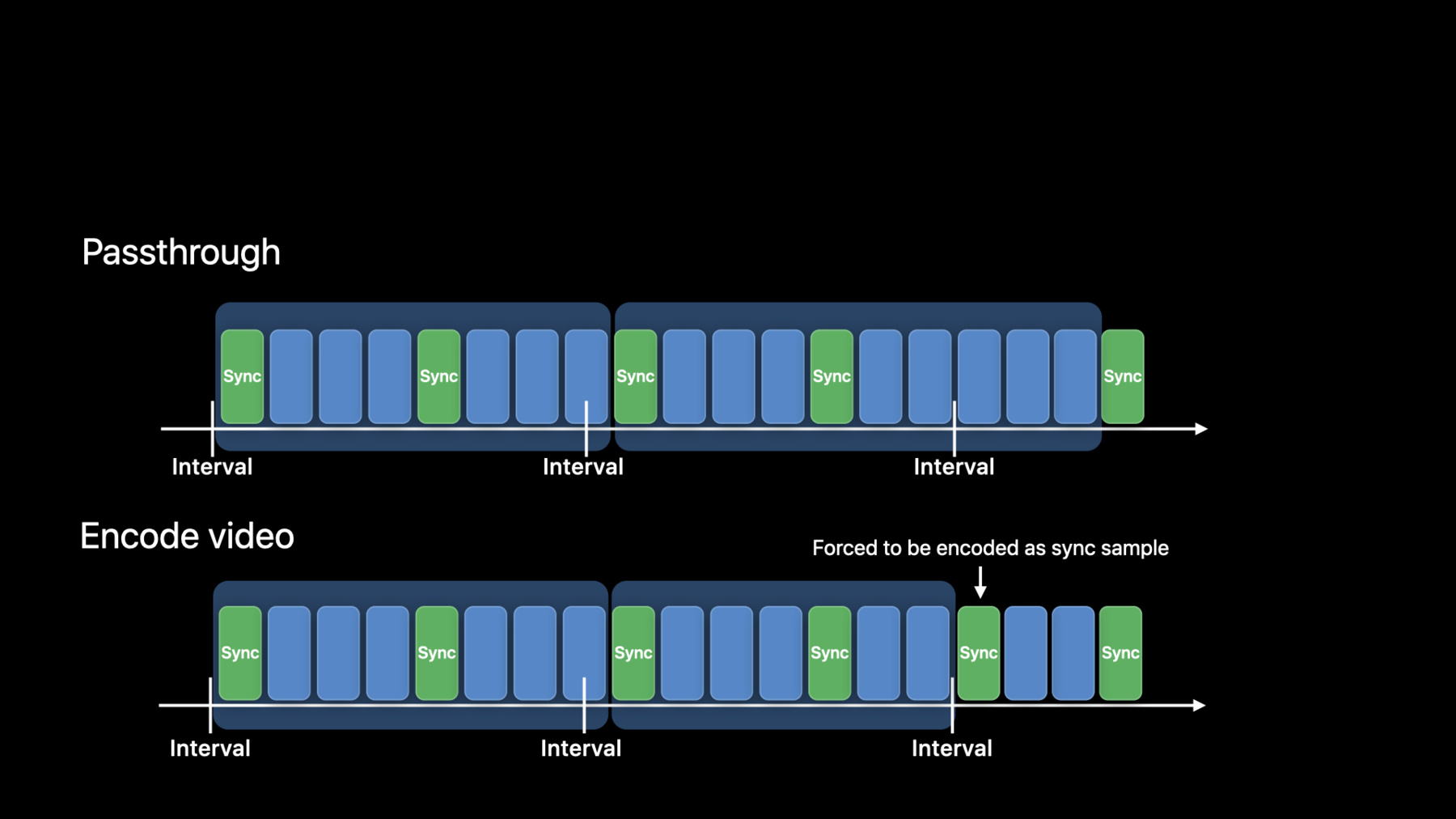

If you look at our sample code called fmp4Writer, you will see how to create the playlist. Also, the documentation about playlists can be found on the Developer streaming page.

In passthrough mode, which is a mode where samples are not encoded, AVAssetWriter outputs media data that includes all the samples just prior to the next sync sample after the preferred output segment interval has been reached or exceeded.

The sync sample here is a sample that does not depend on another sample. This is because CMAF requires that every segment starts with a sync sample, and Apple HLS also prefers this.
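The effect of this rule can be explored with a simplified model. The sketch below is not AVAssetWriter's implementation. It assumes the interval is measured from the start of the current segment, which the session does not state, and the function name `segmentStartTimes` is hypothetical. It takes the presentation times of the sync samples and returns where each segment would begin.

```swift
/// Simplified model of passthrough segmentation: a new segment begins at the
/// first sync sample whose time reaches or exceeds the current segment's
/// start plus the interval. (Assumption: the interval restarts at each boundary.)
func segmentStartTimes(syncSampleTimes: [Double],
                       interval: Double,
                       initialStart: Double = 0) -> [Double] {
    var starts = [initialStart]
    var nextTarget = initialStart + interval
    for time in syncSampleTimes.sorted() where time >= nextTarget {
        starts.append(time)
        nextTarget = time + interval
    }
    return starts
}

// Sync samples every 2 seconds line up with a 6-second interval.
let aligned = segmentStartTimes(syncSampleTimes: [0, 2, 4, 6, 8, 10, 12], interval: 6)
// Sync samples every 4 seconds do not, so each segment runs to 8 seconds.
let misaligned = segmentStartTimes(syncSampleTimes: [0, 4, 8, 12, 16, 20, 24], interval: 6)
print(aligned, misaligned)
```

In the second case every segment is two seconds longer than requested, which is the kind of mismatch that makes passthrough output unsuitable for HLS when sync samples fall far from the interval.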

This rule applies not only to video, but also to audio that has sample dependencies, such as USAC audio. Therefore, for passthrough, only one AVAssetWriterInput can be added. If sync samples are not laid out near the interval, the media data may be output with a considerably longer duration than what is specified. As a result, it will be unsuitable for HLS.

One solution is to encode video samples at the same time. As I mentioned earlier, if you specify output settings for compression in AVAssetWriterInput, samples will be encoded. In encoding mode, a video sample that just reaches or exceeds the preferred output segment interval will be forced to be encoded as a sync sample.

In other words, sync samples are automatically generated so that the fragmented media data will be output at or very close to the specified interval. If you encode both audio and video, one AVAssetWriterInput for each media can be added.

Earlier, I said that for passthrough, only one AVAssetWriterInput can be added. But you can deliver video and audio as separate streams using a master playlist. You can deliver not just one pair of audio and video streams, but also streams at various bit rates or in different languages.

You need to create multiple instances of AVAssetWriter to create multiple streams. Again, the documents about master playlist and recommendations for encoding video and audio for HLS can be found here at the Developer streaming page.

This is an advanced use case, but if you require different segmentation than preferredOutputSegmentInterval provides, you can do it on your own. For example, you may want to output media data not at a sync sample after an interval has been reached, but before the interval has been reached.

Here is the code I showed you earlier that set properties of AVAssetWriter. To signal custom segmentation, set preferredOutputSegmentInterval to CMTime indefinite. It is not necessary to set initialSegmentStartTime since there is no interval. Other settings are the same as the settings that are used for preferredOutputSegmentInterval. This is only for passthrough. You cannot encode with custom segmentation. So when you create AVAssetWriterInput...

you have to set nil to the output settings.

You call the flushSegment method to output media data.

flushSegment outputs media data that includes all samples appended since the previous call.

You must call flushSegment prior to a sync sample so that the next fragmented media data can start with the sync sample.

Otherwise, an error occurs indicating that fragmented media data started with a non-sync sample.

In this mode, you can mux audio and video. But if both audio and video have sample dependencies, it would be difficult to align both media evenly. In that case, you should consider packaging video and audio as separate streams.

AVAssetTrack now has a new property that indicates whether the audio track has sample dependencies.

You can check if a track has sample dependencies in advance using this property.

I mentioned earlier that you should specify Apple HLS or CMAF compliant profile to output media data in fragmented MP4 format.

CMAF is a set of constraints for how you construct fragmented MP4 segments.

It is a standard for using fragmented MP4 for streaming.

It is supported by multiple players, including Apple HLS. Some of the constraints are not required if you target just Apple HLS. But if you wanted a broader audience for your media library, then you could consider CMAF.

Now I will talk about how HLS deals with audio priming. This diagram represents audio waveform of AAC audio.

AAC audio codecs require data beyond the source PCM audio samples to correctly encode and decode audio samples due to the nature of the encoding algorithm. For this reason, the encoders add silence before the first true audio sample.

This is called priming. The most common priming for AAC is 2,112 audio samples, which is just about 48 milliseconds, presuming that the sample rate is 44,100 Hz.
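The arithmetic behind that figure is simply the number of priming samples divided by the sample rate. A minimal sketch, where the function name `primingMilliseconds` is made up for illustration and the 48 kHz case is added only for comparison:

```swift
import Foundation

/// Duration of encoder priming in milliseconds for a given sample rate.
func primingMilliseconds(frames: Int, sampleRate: Double) -> Double {
    Double(frames) / sampleRate * 1_000
}

let at44k = primingMilliseconds(frames: 2_112, sampleRate: 44_100)
let at48k = primingMilliseconds(frames: 2_112, sampleRate: 48_000)
// Prints "47.9 ms at 44.1 kHz, 44.0 ms at 48 kHz"
print(String(format: "%.1f ms at 44.1 kHz, %.1f ms at 48 kHz", at44k, at48k))
```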

When audio and video are written in separate files as separate streams, the media data are laid out like this...

presuming that both media start at time zero. As you may have noticed, if audio and video start at the same time...

the audio will be delayed by the priming so that the audio and the video will be slightly off.

CMAF adopts edit list box in audio track to compensate for this issue. The priming is edited out...

so that start time of audio and video match.

Historically, Apple HLS hasn't used the edit list, so if you specify the Apple HLS profile, the edit list box is not used, for compatibility with older players. Instead, the baseMediaDecodeTime of the audio media is shifted backward by the amount of priming.

However, the baseMediaDecodeTime cannot be earlier than zero since it is defined as an unsigned integer.

One solution is to shift both audio and video forward by the same time offset. This way, the baseMediaDecodeTime of the audio media can be shifted backward by the amount of priming.

Even if start time is not zero, in HLS, playback starts at the earliest presentation time of video sample...

so playback starts immediately without waiting for the time offset.

This is important, not only for exact video and audio sync, but also for an exact match of time stamps between audio variants with different priming durations. So we recommend that you shift media time by adding a certain amount of time to all samples if you specify the Apple HLS profile.

The initial segment start time should be shifted by the same time offset as well.
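The reason an offset is needed can be shown with plain integer arithmetic. The sketch below assumes the audio track's timescale equals its sample rate, so that one tick is one audio sample. The function name `shiftedAudioDecodeTime` is hypothetical, and this only models the constraint rather than anything AVAssetWriter does internally.

```swift
/// Returns the audio baseMediaDecodeTime, in timescale ticks, after shifting
/// it backward by the priming, or nil when the result would be negative and
/// so could not be stored in an unsigned field.
func shiftedAudioDecodeTime(offsetSeconds: Int,
                            primingFrames: Int,
                            timescale: Int) -> UInt64? {
    let ticks = offsetSeconds * timescale - primingFrames
    return ticks >= 0 ? UInt64(ticks) : nil
}

// Starting at time zero leaves no room for the backward shift.
let noOffset = shiftedAudioDecodeTime(offsetSeconds: 0, primingFrames: 2_112, timescale: 44_100)
// A ten-second offset leaves plenty of room: 441,000 - 2,112 = 438,888 ticks.
let tenSeconds = shiftedAudioDecodeTime(offsetSeconds: 10, primingFrames: 2_112, timescale: 44_100)
print(String(describing: noOffset), String(describing: tenSeconds))
```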

As I said earlier, the most common priming is less than one second, so a couple of seconds is enough for the time offset. But Media File Segmenter, which is Apple's segmenter tool, has a ten-second offset, so you could choose the same ten seconds. Our sample code shows how to add a time offset to all samples.

In this demo, I will use our sample command line application to create fragmented MP4 segment files and the playlist.

Then I'll play the resulting content using Safari.

This demo scene is set up as a local server in advance to host the content.

I entered the command line application in the terminal application.

This command line application reads media data from a premade source movie and encodes the video and the audio. This is the source movie. The length is just 30 seconds. This command line application uses six seconds as the preferred output segment interval. The segment files and the playlist will be created in this directory. Let's get started. The video frame rate of the source movie is 30 frames per second. You see that five segment files and a playlist were created. I open the playlist.

Each segment's duration is six seconds.

I enter localhost as the URL to play it using Safari.

Let's play it.

HLS has several requirements. It also has several recommendations to improve the experience for users. We recommend that you validate the segment files and playlist that you write using AVAssetWriter to make sure that those files meet the requirements and recommendations. The WWDC session "Validating HTTP Live Streams" from 2016 is a great source for this purpose. You can find the session video on Apple's Developer site.

AVAssetWriter now outputs media data in fragmented MP4 format for HLS. Thank you for joining the session. [speaking Japanese]


5:36 - Instantiate AVAssetWriter and input

    import AVFoundation
    import UniformTypeIdentifiers

    // Instantiate asset writer
    let assetWriter = AVAssetWriter(contentType: UTType(AVFileType.mp4.rawValue)!)

    // Add inputs
    let videoInput = AVAssetWriterInput(mediaType: .video, outputSettings: compressionSettings)
    assetWriter.add(videoInput)

6:28 - Configure AVAssetWriter

    assetWriter.outputFileTypeProfile = .mpeg4AppleHLS
    assetWriter.preferredOutputSegmentInterval = CMTime(seconds: 6.0, preferredTimescale: 1)
    assetWriter.initialSegmentStartTime = myInitialSegmentStartTime
    assetWriter.delegate = myDelegateObject

8:00 - Delegate methods

    optional func assetWriter(_ writer: AVAssetWriter,
                              didOutputSegmentData segmentData: Data,
                              segmentType: AVAssetSegmentType)

    optional func assetWriter(_ writer: AVAssetWriter,
                              didOutputSegmentData segmentData: Data,
                              segmentType: AVAssetSegmentType,
                              segmentReport: AVAssetSegmentReport?)

8:37 - AVAssetSegmentType

    public enum AVAssetSegmentType : Int {
        case initialization = 1
        case separable = 2
    }

13:45 - Custom segmentation

    // Set properties
    assetWriter.outputFileTypeProfile = .mpeg4AppleHLS
    assetWriter.preferredOutputSegmentInterval = .indefinite
    assetWriter.delegate = myDelegateObject

    // Passthrough
    let videoInput = AVAssetWriterInput(mediaType: .video, outputSettings: nil)

15:17 - Audio has dependencies

    extension AVAssetTrack {
        /* indicates whether this audio track has dependencies (e.g. kAudioFormatMPEGD_USAC) */
        open var hasAudioSampleDependencies: Bool { get }
    }
