
Build an Action Classifier with Create ML

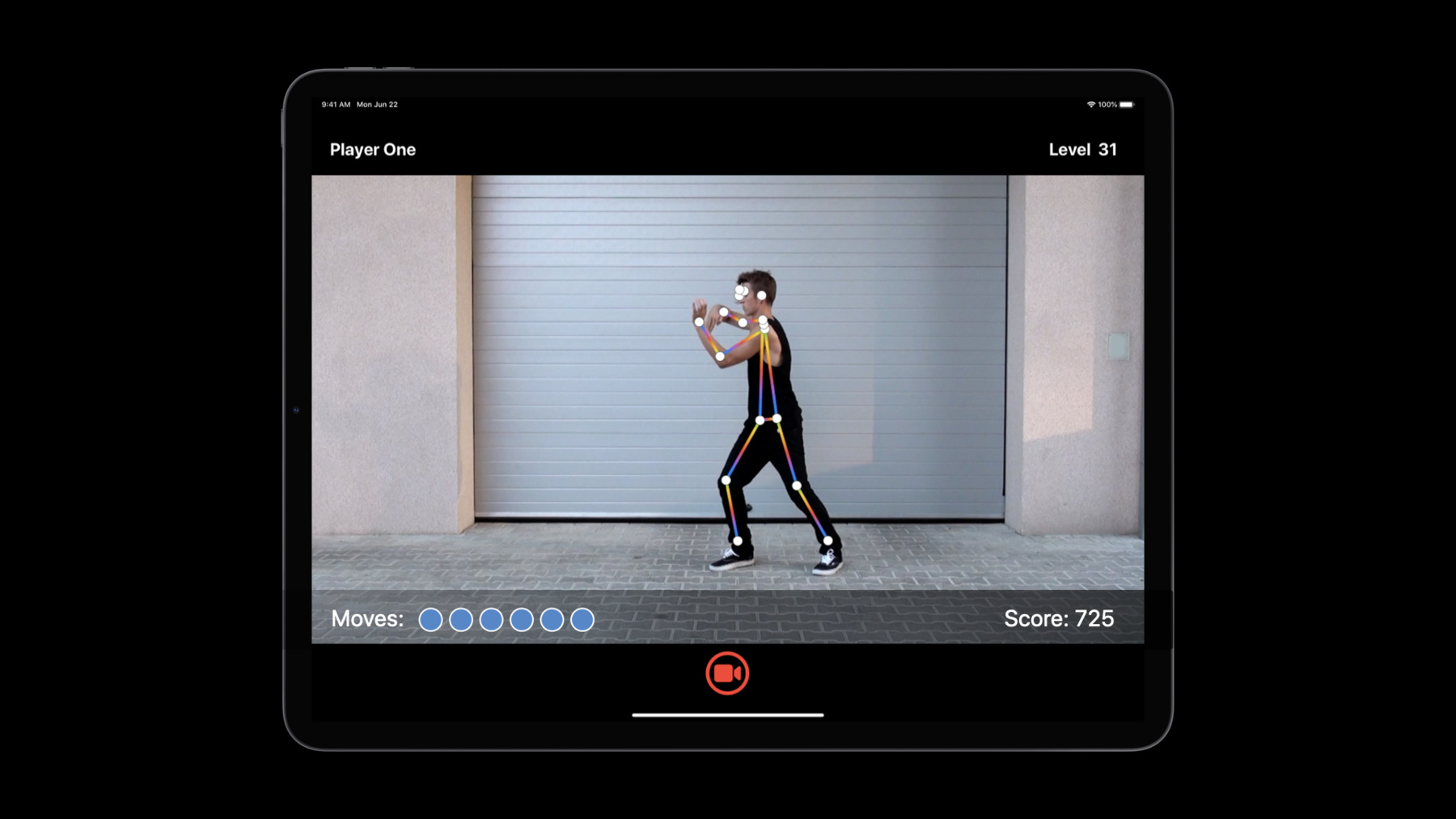

Discover how to build Action Classification models in Create ML. With a custom action classifier, your app can recognize and understand body movements in real-time from videos or through a camera. We'll show you how to use samples to easily train a Core ML model to identify human actions like jumping jacks, squats, and dance moves. Learn how this is powered by the Body Pose estimation features of the Vision Framework. Get inspired to create apps that can provide coaching for fitness routines, deliver feedback on athletic form, and more.

To get the most out of this session, you should be familiar with Create ML. For an overview, watch “Introducing the Create ML app.” You can also brush up on differences between Action Classification and sensor-based Activity Classification by watching “Building Activity Classification Models in Create ML.”

To learn more about the powerful technology that enables Action Classification features, be sure to check out “Detect Body and Hand Pose with Vision.” And you can see how we combined this classification capability together with other technologies to create our own sample application in “Explore the Action & Vision App.”


Hello and welcome to WWDC.

I am Yuxin, an engineer on the Create ML team. Today, my colleague, Alex, and I are very excited to introduce a new template: Action Classification in Create ML. Last year, we introduced Activity Classification, which allows you to create a classifier using motion data. But what if you wanted it to classify actions from videos? Cameras are ubiquitous today, and it's become so easy to record our own videos with our phones. Whether we are in a gym or at home, a self-guided workout could easily brighten our days.

Other activities, such as gymnastics, reveal the complexity of human body movements. Automatic understanding of these actions could be helpful for athletes' training and competition.

This year, we built Action Classification to learn from human body poses. Look at the sequence of this amazing dance. It would be interesting to recognize these moves and even build an entertainment app around it. So, what is Action Classification? First, it's a standard classification task that has the goal to assign a label of action from a list of your predefined classes.

This year, the model is powered by Vision's human body pose estimation. And therefore, it works best with human body actions but not with actions from animals or objects.

To recognize an action over time, a single image won't be enough. Instead, the prediction happens to a time window that consists of a sequence of frames.

For a camera or video stream, predictions could be made, window by window, continuously. For more details about poses from Vision, please check our session "Body and Hand Pose Estimation." Now, with Create ML, let's see how it works.

Assume we'd like to create a fitness classifier to recognize a person's workout routines, such as jumping jacks, lunges, squats, and a few others. First, I need to prepare some video clips for each class, then launch Create ML for training. And finally, save the Fitness Classifier model and build our fitness training app. Now, let's dive in to each step. My colleague, Alex, will first talk about data and training for Action Classification.

Thanks, Yuxin. Since I can't get to my gym class right now, an exercise app which can respond to how I do workouts seems like a good way to keep fit. My name is Alex, and I'm an engineer on the Create ML team. Today I'm going to show you how to capture action videos and train a new Action Classifier model using the Create ML app. Let's start by capturing the actions on video. We want our model to be able to tell when we're exercising and which workout we're doing. We need to collect videos of each of the five workouts: jumping jacks, squats, and three others. Each video should contain just one of the action types and should have just one human subject. After taking your video, if there's extra movement at the start or end of your video, you might want to trim these off in your photo app. We identify actions using the whole body, so make sure the camera can see the arms and legs throughout the range of motion. You might need to step back a bit.

Now, make a folder to hold each action type. The folder name is the label the model will predict for actions like this.
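The folder-per-label convention can be illustrated with a small sketch. It assumes a `train/<label>/<video>` layout like the one described here. The helper `label(forVideoAt:)` and the file paths are hypothetical and are not part of Create ML, which does this mapping for you.

```swift
import Foundation

/// Returns the class label implied by a video's parent folder name.
/// Hypothetical helper for illustration only.
func label(forVideoAt url: URL) -> String {
    url.deletingLastPathComponent().lastPathComponent
}

// Example paths (made up for this sketch).
let videos = [
    URL(fileURLWithPath: "/data/train/jacks/clip01.mov"),
    URL(fileURLWithPath: "/data/train/jacks/clip02.mov"),
    URL(fileURLWithPath: "/data/train/squats/clip01.mov"),
    URL(fileURLWithPath: "/data/train/other/resting.mov"),
]

// Count how many videos each label has, to check that classes are balanced.
var countsByLabel: [String: Int] = [:]
for video in videos {
    countsByLabel[label(forVideoAt: video), default: 0] += 1
}
```

A count per label like this is a quick way to spot a class that has far fewer examples than the others before training starts.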

If you collected some examples of resting, stretching, and sitting down, you could put all these together in a single folder called "other." We're now ready for training. But I want to take a moment to consider what to do if we have a different sort of data. Perhaps someone else prepared the video for us, or we downloaded it from the Internet. In that case, we have a montage. With a montage, a single video contains multiple different actions we need, as well as titles, establishing shots, and maybe people just resting.

Looking at this video in sequence, we see periods of no action mixed with specific actions we want to train for. We have two options here. We can use video editing software to trim or split videos into the actions we need, and then put them into folders like before, or... we can find the times in the video where the actions start and stop and record those in an annotation file in CSV or JSON format. Here's an example. You can find out more in the Create ML Documentation on developer.apple.com. Let's set that aside, though, and keep going with the exercise videos we already prepared.
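To make the annotation format concrete, here is a minimal sketch that decodes a JSON annotation using the key names from the session's example (`file_name`, `label`, `start_time`, `end_time`). The `ActionAnnotation` type and its `duration` property are assumptions made for this illustration. Create ML reads the file directly, so an app would not normally need this code.

```swift
import Foundation

/// One labeled segment of a montage video.
/// Hypothetical type; the coding keys mirror the session's JSON example.
struct ActionAnnotation: Codable {
    let fileName: String
    let label: String
    let startTime: Double
    let endTime: Double

    enum CodingKeys: String, CodingKey {
        case fileName = "file_name"
        case label
        case startTime = "start_time"
        case endTime = "end_time"
    }

    /// Length of the annotated segment in seconds.
    var duration: Double { endTime - startTime }
}

let json = Data("""
[
  { "file_name": "Montage1.mov", "label": "Squats", "start_time": 4.5, "end_time": 8 }
]
""".utf8)

// Decode the array of annotations; this throws if a key is missing or mistyped.
let annotations = try JSONDecoder().decode([ActionAnnotation].self, from: json)
```

Decoding the file yourself can be a handy sanity check, for example to confirm that every segment is at least as long as the action duration you plan to train with.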

On my Mac, I'm going to start up the Create ML application.

If you are using macOS Big Sur, it has a new template: Action Classification.

Let's create an Action Classification project for our exercise model.

We give it a suitable name.

And I'm going to add a description so I can recognize it later on.

Our new Action Classification project opens on the Model Source Settings page. This is where we prepare for training, by adding the data we captured and making some decisions about the training process. We're going to need to drag the folder of videos we already prepared into the training data source. Let's take a look at that now.

I've collected all the videos in a "train" folder, which has sub-folders named for each of the actions we want to train. Let's have a look in the "jacks" folder. It contains all of the videos of jumping jacks.

Let's drag that "train" folder into the training data well. The Create ML app checks the data's in the right format and tells us a little about it. It says we've recorded seven classes. That's the five exercises plus two negative classes: "other" and "none." It also says we recorded 362 items. Videos. We can dive into this using the data source view.

Here we can see that each action has around 50 videos.

That's what you should aim for when building your project.

Below the data inputs are some parameters we can set. There are two I want to talk about here: Action Duration and Augmentations. Action Duration is a really important parameter. It represents the length of the action we're trying to recognize. If we're working with a complex action, like a dance move, this needs to be long enough to capture the whole motion, which might be ten seconds.

But for short, repeating actions, like our exercise reps, we should set the window to around two seconds.

The length of the action, also known as prediction window, is actually measured in frames, so Create ML automatically calculates the number of frames, 60, based upon the frame rate and action duration.
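The relationship between action duration and window size is simple arithmetic. The sketch below assumes a frame rate of 30 frames per second, which is what a 60-frame window for a two-second action implies. The function name is hypothetical, and Create ML performs this calculation for you.

```swift
import Foundation

/// Number of frames in a prediction window, rounded to the nearest frame.
/// Illustrative helper, not a Create ML API.
func predictionWindowSize(frameRate: Double, actionDuration: Double) -> Int {
    Int((frameRate * actionDuration).rounded())
}

// A 2-second action at an assumed 30 frames per second.
let windowSize = predictionWindowSize(frameRate: 30, actionDuration: 2)

// A 10-second dance move at the same assumed frame rate.
let danceWindowSize = predictionWindowSize(frameRate: 30, actionDuration: 10)
```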

Augmentations are a way to boost your training data, without going out and recording more videos, by transforming the ones you already have in ways which represent real-world scenarios. If all of our videos were taken with a person facing to the left, then "horizontal flip" generates a mirror-image video that makes the model work better in both orientations. Let's turn it on.

You'll have noticed two other boxes you can add data to. These are Validation and Testing. If you have extra data set aside to test your model, you can add that now to the testing data well. Create ML will automatically perform tests on it when the model is done training.

Machine learning veterans might like to choose their own validation data, but, by default, Create ML will automatically use some of your training data for this. Let's hit the "train" button to begin making our model.

When your Mac is training an Action Classifier from videos, it does this in two parts. First, it does feature extraction, which takes the videos and works out the human poses. Once that's complete, it can train the model.

Feature extraction is a big task and can take a while, so let's dig a little deeper.

Using the power of the Vision API, we look at every frame of our video and encode the position of 18 landmarks on the body, including hands, legs, hips, eyes, et cetera. For each landmark, it records x and y coordinates, plus a confidence, and these form the feature that we use for training.

Let's go back and find out how it's getting on. The feature extraction is going to take about half an hour, and we don't want to wait, so I'm going to stop this training process and open a project with a model that we made earlier. This model's already finished training on the same data that we used before. I also added some validation data. You can see the final report in the training and evaluation tabs.
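The per-frame feature described above (18 landmarks, each with x, y, and a confidence) can be pictured as a 3 x 18 grid of numbers. The sketch below uses a hypothetical `Landmark` struct, and the row order (x, then y, then confidence) is an assumption for illustration rather than a documented Vision layout.

```swift
/// One body landmark: a position plus a confidence.
/// Stand-in type for this sketch; real landmarks come from Vision.
struct Landmark {
    var x: Double
    var y: Double
    var confidence: Double
}

/// Packs 18 landmarks into a 3 x 18 grid.
/// Row 0 holds x, row 1 holds y, row 2 holds confidence (assumed order).
func featureRows(for landmarks: [Landmark]) -> [[Double]] {
    precondition(landmarks.count == 18, "expected 18 landmarks per pose")
    return [
        landmarks.map { $0.x },
        landmarks.map { $0.y },
        landmarks.map { $0.confidence },
    ]
}

// Placeholder pose: every landmark at the frame center with full confidence.
let placeholderPose = Array(repeating: Landmark(x: 0.5, y: 0.5, confidence: 1.0), count: 18)
let rows = featureRows(for: placeholderPose)
```

With 54 numbers per frame, a video's features are far smaller than its pixels, which is why the model itself can stay compact even though extraction takes time.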

We can see how the model performance improved over time. It looks like 80 iterations was a reasonable choice. The line going up and to the right and then flattening out means that training has reached a stable state.

I can review the performance per class using the evaluation tab. This lets me check that the model will perform equally well across each kind of action we want to recognize. If we added validation or test data, you could see the results here.

But what I really want is to see the model in action. Let's go to the preview tab, where we can try the model out on new videos we haven't used for training. Here's one I recorded in my garden yesterday.

The video is processed to find the poses, and then the model classifies these into actions. Right now, it's classifying the whole video up front, but when you make your own app, you can choose to stream it if you're working with live videos or want a responsive experience. Let's press "play" to see what it's detected. Look out for the label on the video. You can see the classification change as the action in the video unfolds.

Let's see it one more time. You can see that pose skeleton superimposed over the video. We can turn that off.

Or we can just watch the pose skeleton exercising in the dark.

Below the video, we can see the timeline, which has divided the video into two-second windows. Remember when we chose that? And it shows the best prediction for each window underneath, as well as other predictions with lower probability.
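The way a timeline breaks into fixed-size windows can be sketched as follows. The function is hypothetical, the frame counts assume 30 frames per second, and dropping the trailing partial window is a choice made for this sketch. The session does not say how the preview handles leftover frames.

```swift
/// Splits a video of `frameCount` frames into consecutive, non-overlapping windows.
/// Trailing frames that do not fill a whole window are dropped in this sketch.
func windowRanges(frameCount: Int, windowSize: Int) -> [Range<Int>] {
    precondition(windowSize > 0, "window size must be positive")
    return stride(from: 0, to: frameCount - windowSize + 1, by: windowSize).map { start in
        start..<(start + windowSize)
    }
}

// A 5-second clip at an assumed 30 fps has 150 frames: two full 60-frame windows.
let timeline = windowRanges(frameCount: 150, windowSize: 60)
```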

I think we're ready to make a great app using this model. For this, I need to export the model from the project. And we can do this on the output tab. On the output tab, we can also see some facts about the model, including the size, which is an important consideration for assets in mobile apps, and you can find out what operating system versions this model is supported on. Let's save the model by dragging the icon into the Finder.

FitnessClassifier.mlmodel.

And now we can share it with Yuxin, who's going to show us how to build an awesome iOS fitness app.

Now we've got a classifier from Alex to drive our fitness training app. Let's first check out how to use the model to make a prediction. For example, we'd like to recognize jumping jacks either from a camera or a video file.

The model, however, takes poses, rather than a video, as the input. To extract poses, we use Vision's API: VNDetectHumanBodyPoseRequest.

If we are working with a video URL, VNVideoProcessor could handle the pose request for an entire video, and pose results for every frame can be obtained in a completion handler.

Alternatively, when working with a camera stream, we could use VNImageRequestHandler to perform the same pose request for each captured image.

Regardless of how we get poses from each frame, we need to aggregate them into a prediction window in a three-dimensional array as the model input.

This looks complicated, but if we use the convenient keypointsMultiArray API from Vision, we don't have to deal with the details. Since our fitness model's window size is 60, we could simply concatenate poses from 60 frames to make one prediction window.

With such a window prepared, it can be passed to our model as the input. And finally, the model output result includes a top predicted action name and a confidence score. Now let's take a tour of these steps in Xcode.

This is our fitness training app. Let's open it.

And this is the Fitness Classifier we've just trained. This Metadata page shows us relevant user and model information, such as the author, description, class label names, and even layer distributions.

The Predictions page shows us detailed model input and output information, such as the required input multi-array dimensions, as well as the names and types for the output. Now let's skip the other app logic and jump right to the model prediction.

In my predictor, I first initialize the Fitness Classifier model, as well as the Vision body pose request. It's better to do this only once.

Since the model takes a prediction window as the input, I also maintain a window buffer to save the last 60 poses from the past two seconds. Sixty is the model's prediction window size we have used for training.

When a frame is received from the camera, we need to extract the poses.

This involves calling Vision APIs and performing pose requests that we have already seen on the slides. Before we add the extracted poses to our window... remember that Action Classifier takes only a single person, so we need to implement some person-selection logic if Vision detects multiple people. Here in this app, I simply choose the most prominent person based on their size, and you may choose to do it in other ways. Now, let's add the pose to the window.
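One way to choose the most prominent person by size is to compare the bounding boxes of each person's landmarks. The sketch below is an assumption about how such logic might look, not the sample app's implementation. `DetectedPerson` is a stand-in type so the code runs without Vision, and coordinates are taken to be normalized to the 0...1 range.

```swift
/// A detected person reduced to the landmark positions we need.
/// Stand-in type; a real app would read these points from Vision observations.
struct DetectedPerson {
    let name: String
    let points: [(x: Double, y: Double)]

    /// Area of the bounding box around all landmarks, used as a proxy for prominence.
    var boundingBoxArea: Double {
        guard let minX = points.map({ $0.x }).min(),
              let maxX = points.map({ $0.x }).max(),
              let minY = points.map({ $0.y }).min(),
              let maxY = points.map({ $0.y }).max() else { return 0 }
        return (maxX - minX) * (maxY - minY)
    }
}

/// Picks the person whose landmarks cover the largest area, or nil if nobody was detected.
func mostProminentPerson(in people: [DetectedPerson]) -> DetectedPerson? {
    people.max { $0.boundingBoxArea < $1.boundingBoxArea }
}

// Two made-up people: one close to the camera, one far away.
let near = DetectedPerson(name: "near", points: [(x: 0.2, y: 0.1), (x: 0.8, y: 0.9)])
let far = DetectedPerson(name: "far", points: [(x: 0.45, y: 0.4), (x: 0.55, y: 0.6)])
let selected = mostProminentPerson(in: [far, near])
```

Selecting by distance from the frame center instead would only require swapping the comparison inside `mostProminentPerson(in:)`.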

And once the window is full, we can start making predictions. This app makes a prediction every half a second, but you can decide a different interval or trigger based on your own use cases. Now let's move on to make a model prediction.
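The window-and-interval behavior can be sketched with a small generic buffer. `PoseWindow` is a hypothetical type for illustration. It assumes 30 frames per second, so half a second corresponds to 15 frames, and it is generic over the pose type so that it runs without Vision.

```swift
/// Collects per-frame poses and reports when a full window is ready.
struct PoseWindow<Pose> {
    let windowSize: Int          // frames per prediction, e.g. 60
    let predictionInterval: Int  // frames to discard after each prediction, e.g. 15
    private(set) var poses: [Pose?] = []

    init(windowSize: Int, predictionInterval: Int) {
        self.windowSize = windowSize
        self.predictionInterval = predictionInterval
        poses.reserveCapacity(windowSize)
    }

    /// True once the buffer holds exactly one full window.
    var isReady: Bool { poses.count == windowSize }

    /// Adds the pose for one frame; pass nil when no person was detected.
    mutating func append(_ pose: Pose?) {
        if poses.count == windowSize { poses.removeFirst() }
        poses.append(pose)
    }

    /// Call after predicting: drops the oldest frames so the next prediction
    /// happens once `predictionInterval` new frames have arrived.
    mutating func advance() {
        poses.removeFirst(min(predictionInterval, poses.count))
    }
}

// Simulate 90 incoming frames, using the frame number as a stand-in pose.
var window = PoseWindow<Int>(windowSize: 60, predictionInterval: 15)
var predictionFrames: [Int] = []
for frame in 1...90 {
    window.append(frame)
    if window.isReady {
        predictionFrames.append(frame)  // a real app would run the model here
        window.advance()
    }
}
```

In this simulation the first prediction fires once 60 frames have arrived, and later ones follow every 15 frames, so consecutive windows overlap by 45 frames.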

To prepare the model input, I need to convert each pose from the window into a multi-array using Vision's convenient API, keypointsMultiArray. And if no person is detected, simply pad zeros.

Then we concatenate 60 of these multi-arrays into a single array, and that's our model input.
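The padding and concatenation steps can be shown with plain arrays. This is a sketch under stated assumptions: a real app would work with `MLMultiArray` values as in the session's code, while here each frame is a flat array of 3 x 18 `Float` values so the example runs without Core ML. The function name is hypothetical.

```swift
let landmarkCount = 18
let valuesPerLandmark = 3   // x, y, confidence
let frameLength = valuesPerLandmark * landmarkCount

/// Flattens a window of optional per-frame features into one model-input buffer,
/// substituting zeros for frames where no person was detected.
func makeModelInput(from frames: [[Float]?]) -> [Float] {
    let zeros = [Float](repeating: 0, count: frameLength)
    return frames.flatMap { frame -> [Float] in
        guard let frame = frame else { return zeros }
        precondition(frame.count == frameLength, "each frame needs 3 x 18 values")
        return frame
    }
}

// 59 frames with a detected pose and one frame with nobody in view.
var frames: [[Float]?] = Array(repeating: [Float](repeating: 0.5, count: frameLength), count: 59)
frames.append(nil)
let input = makeModelInput(from: frames)
```

Padding with zeros keeps the input shape fixed at 60 frames, so a briefly missing person does not force the app to throw the whole window away.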

Finally, it's just the one line of code to make a prediction.

The output includes a top-predicted label name and the confidences in a dictionary for all the classes. In the end, don't forget to reset the pose window so that once it is filled again, we're ready to make another prediction.
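If you only have the dictionary of confidences, the top label can be recovered by taking the entry with the largest value. The sketch below uses made-up numbers and a hypothetical helper. The generated model class already exposes the top label, so this is only to show how the two outputs relate.

```swift
/// Returns the label with the highest confidence, or nil for an empty dictionary.
func topPrediction(in probabilities: [String: Double]) -> (label: String, confidence: Double)? {
    guard let best = probabilities.max(by: { $0.value < $1.value }) else { return nil }
    return (label: best.key, confidence: best.value)
}

// Made-up confidences for illustration.
let probabilities = ["jumping jacks": 0.86, "squats": 0.09, "other": 0.05]
let top = topPrediction(in: probabilities)
```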

And that's everything about making predictions with an Action Classifier. Now let's try out this app and see how it works in action.

I feel some extra energy today and would like to challenge myself to a little workout. Let's open the app... and hit the "start" button to begin a workout. Now my poses are extracted from every incoming frame, and predictions are made continuously, as displayed at the bottom of the app. That's our Debugging View: confidence and the labels. Now, let me get started.

Wow. I finished a five-second challenge. As you may have seen, as soon as the model recognized my action, the timer started, and as soon as I stopped, the model recognized my action as the "other action" class and started waiting for the next challenge: lunges. I'm ready.

I finished lunges, but I am not in a hurry yet and would like to take a rest and drink some water.

Everything happens interactively... and I don't have to get back and operate a device, which is very convenient for home exercises.

Lastly, let's try squats.

Now all three challenges are finished, and here is the summary of my time spent for each. Wow. That's a lot of exercise for today.

Now we have covered how to train an Action Classifier and make predictions with it. Let's invite Alex back and wrap up this session with some best practices.

Thanks, Yuxin. I love the way this application waits for me to start my workout. I often need time to remember how an exercise is done and maybe which arm or leg is moved first. Building great features like that needs great data, so let's make sure we get the best performance out of the athletes, gamers and kids who bring our videos to life. Your model will train best if it's exposed to repetition and variety.

Earlier, I made sure I had around 50 videos for each exercise I wanted to classify, and you should, too, in your apps. Make sure you train with a variety of different people. They'll bring different styles, abilities and speeds, which your model needs to recognize. If you think your users will move around a lot, consider capturing the action from the sides and back as well as the front.

The model does need to understand exercises, but it also needs to understand when we're not exercising, or not moving at all. You could create two extra folders: one for walking around and stretching, and one for sitting or doing nothing quietly.

Let's consider how to capture great videos for the Action Classifier. Any motion from the camera person might be interpreted as the subject moving around, so let's keep the camera stable using a tripod or resting it somewhere solid.

The pose detector needs to clearly see the parts of the body, so if your outfit blends into the background, it won't work as well, and flowing clothing might conceal the movement you're trying to detect.

Now you know how to take the best videos for your Action Classifier. Yuxin, can you tell us how to get the most out of training in Create ML?

Sure, Alex. Once you have the data, take a moment to configure your training parameters. One key parameter is action duration, in seconds, or prediction window size, which is the number of frames in your time window. The length should match the action length in your videos, and try to keep all actions roughly the same duration.

Frame rate in your video affects the effective length of the prediction window... so it's important to keep the average frame rate consistent between your training and testing videos to get accurate results.
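A quick calculation shows why a frame-rate mismatch matters. The sketch below assumes a 60-frame window and compares two example frame rates. The function name and the rates are illustrative.

```swift
/// Real-world time, in seconds, covered by a fixed-size window at a given frame rate.
func effectiveDuration(windowSize: Int, frameRate: Double) -> Double {
    Double(windowSize) / frameRate
}

// The same 60-frame window spans different amounts of time at different frame rates.
let trainedAt30 = effectiveDuration(windowSize: 60, frameRate: 30)
let testedAt60 = effectiveDuration(windowSize: 60, frameRate: 60)
```

A model trained on windows that span two seconds would, at 60 frames per second, see only one second of motion per window, so the same exercise would look half as fast to it.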

When it comes to using the model in your applications, make sure to only select a single person. Your app may remind users to keep only one person in view when multiple people are detected, or you can implement your own selection logic to choose a person based on their size or location within the frame, and this can be achieved by using the coordinates from pose landmarks.

If you'd like to count the repetitions of your actions, you may need to prepare each training video to be just one repetition of a single action. And when you make predictions in your app, you should find a reliable trigger to start a prediction at the right time or implement some smoothing logic to properly update the counter.
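One possible form of smoothing is to count a repetition only after the target label has been predicted several times in a row. The sketch below is an assumption about how that might be done, not the approach used in the session. It also assumes that a different label appears between repetitions. `RepetitionCounter` and the label stream are made up for illustration.

```swift
/// Counts repetitions of `targetLabel` from a stream of per-window predictions.
/// A repetition is counted only after the label has been seen `requiredStreak`
/// times in a row, which filters out one-off misclassifications.
struct RepetitionCounter {
    let targetLabel: String
    let requiredStreak: Int
    private(set) var count = 0
    private var streak = 0

    init(targetLabel: String, requiredStreak: Int) {
        self.targetLabel = targetLabel
        self.requiredStreak = requiredStreak
    }

    mutating func update(with label: String) {
        if label == targetLabel {
            streak += 1
            // Count exactly once, at the moment the streak becomes long enough.
            if streak == requiredStreak { count += 1 }
        } else {
            streak = 0
        }
    }
}

var counter = RepetitionCounter(targetLabel: "squats", requiredStreak: 2)
let stream = ["other", "squats", "squats", "squats", "other",
              "squats", "other", "squats", "squats"]
for label in stream { counter.update(with: label) }
```

In this stream the isolated "squats" prediction in the middle is ignored, so only the two sustained runs are counted.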

Finally, you can use an Action Classifier to score or judge the quality of an action. For example, your app could use a prediction's confidence value to score the quality of an action compared to the example actions in your training videos.

So, that's Action Classification in Create ML. We can't wait to see the amazing apps you are going to create using it. Thanks for joining us today, and enjoy the rest of WWDC.


5:28 - Working with montage videos

```json
[
    {
        "file_name": "Montage1.mov",
        "label": "Squats",
        "start_time": 4.5,
        "end_time": 8
    }
]
```

14:05 - Getting poses

```swift
import Vision

let request = VNDetectHumanBodyPoseRequest()
```

14:10 - Getting poses from a video

```swift
import Vision

let videoURL = URL(fileURLWithPath: "your-video-file.MOV")
let startTime = CMTime.zero
let endTime = CMTime.indefinite

let request = VNDetectHumanBodyPoseRequest(completionHandler: { request, error in
    let poses = request.results as! [VNRecognizedPointsObservation]
})

let processor = VNVideoProcessor(url: videoURL)
try processor.add(request)
try processor.analyze(with: CMTimeRange(start: startTime, end: endTime))
```

14:26 - Getting poses from an image

```swift
import Vision

let request = VNDetectHumanBodyPoseRequest()

// Use either one from image URL, CVPixelBuffer, CMSampleBuffer, CGImage, CIImage, etc.
// in image request handler, based on the context.
let handler = VNImageRequestHandler(url: URL(fileURLWithPath: "your-image.jpg"))
try handler.perform([request])

let poses = request.results as! [VNRecognizedPointsObservation]
```

14:57 - Making a prediction

```swift
import Vision
import CoreML

// Assume pose1, pose2, ..., have been obtained from a video file or camera stream.
let pose1: VNRecognizedPointsObservation
let pose2: VNRecognizedPointsObservation
// ...

// Get a [1, 3, 18] dimension multi-array for each frame
let poseArray1 = try pose1.keypointsMultiArray()
let poseArray2 = try pose2.keypointsMultiArray()
// ...

// Get a [60, 3, 18] dimension prediction window from 60 frames
let modelInput = MLMultiArray(concatenating: [poseArray1, poseArray2], axis: 0, dataType: .float)
```

16:27 - Demo: Building the app in Xcode

```swift
import Foundation
import CoreML
import Vision

// Note: predictionWindowSize, predictionInterval, extractPoses(from:),
// selectMostProminentPerson(from:), zeroPaddedMultiArray(), and PredictionOutput
// are not shown in this excerpt.
@available(iOS 14.0, *)
class Predictor {
    /// Fitness classifier model.
    let fitnessClassifier = FitnessClassifier()

    /// Vision body pose request.
    let humanBodyPoseRequest = VNDetectHumanBodyPoseRequest()

    /// A rotation window to save the last 60 poses from past 2 seconds.
    var posesWindow: [VNRecognizedPointsObservation?] = []

    init() {
        posesWindow.reserveCapacity(predictionWindowSize)
    }

    /// Extracts poses from a frame.
    func processFrame(_ samplebuffer: CMSampleBuffer) throws -> [VNRecognizedPointsObservation] {
        // Perform Vision body pose request
        let framePoses = extractPoses(from: samplebuffer)

        // Select the most prominent person.
        let pose = try selectMostProminentPerson(from: framePoses)

        // Add the pose to window
        posesWindow.append(pose)

        return framePoses
    }

    // Make a prediction when window is full, periodically
    var isReadyToMakePrediction: Bool {
        posesWindow.count == predictionWindowSize
    }

    /// Make a model prediction on a window.
    func makePrediction() throws -> PredictionOutput {
        // Prepare model input: convert each pose to a multi-array, and concatenate multi-arrays.
        let poseMultiArrays: [MLMultiArray] = try posesWindow.map { person in
            guard let person = person else {
                // Pad 0s when no person detected.
                return zeroPaddedMultiArray()
            }
            return try person.keypointsMultiArray()
        }

        let modelInput = MLMultiArray(concatenating: poseMultiArrays, axis: 0, dataType: .float)

        // Perform prediction
        let predictions = try fitnessClassifier.prediction(poses: modelInput)

        // Reset poses window
        posesWindow.removeFirst(predictionInterval)

        return (
            label: predictions.label,
            confidence: predictions.labelProbabilities[predictions.label]!
        )
    }
}
```
