
Discover built-in sound classification in SoundAnalysis

Explore how you can use the Sound Analysis framework in your app to detect and classify discrete sounds from any audio source, including live sound from a microphone or a video or audio file, and identify precisely when each sound occurs. Learn how the built-in sound classifier makes it easy to identify over 300 different types of sounds without a custom trained model. These include human sounds, musical instruments, animals, and various objects.

For custom models, see how you can leverage the Audio Feature Print feature extractor to create smaller models with variable sound window control to better serve your app's purposes.

For more about Sound Classification and the Sound Analysis framework, watch “Training Sound Classification Models in Create ML” from WWDC19.


♪ ♪ Hi. Welcome to the Sound Analysis session at WWDC. My name is Jon Huang. I'm a researcher on the audio team. Today, my colleague, Kevin, and I will introduce enhancements to sound classification made available through the SoundAnalysis framework and CreateML.

In 2019, we made it possible to train sound classification models using CreateML. We showed it was easy to create sound classification models and to deploy them on Apple devices. When you use this framework, all of the computations are optimized for hardware acceleration and are done locally on device. This helps preserve your users' privacy because audio is never sent to the cloud. We leveraged the Sound Analysis framework to introduce the accessibility feature called Sound Recognition. This feature can notify the user when certain sounds, like alarms, pets, and other household sounds, are heard in the environment. This is just one application of sound classification. Let's see what else we can do with it.

The demo app is using my Mac's built-in microphone to listen to sounds in the environment. It's passing audio through a sound classifier and displaying the classification results in the UI. So as I'm chatting to you, speech is detected. Please sit back with me for a bit and see what happens. Make yourself comfortable. Let's start with some music. Hey Siri, play "Catch a Vibe" by Karun and Mombru.

Now playing "Catch a Vibe" by Karun and Mombru.

♪ ♪ ♪ Oh, oh, oh ♪

Notice the classifier is picking up both music and singing sounds.

♪ I don't know anyone ♪ ♪ Who feels so right ♪ ♪ Don't know anyone who makes me ♪ ♪ Catch a vibe, feel the frequency...♪

Now please join me for some tea.

♪ Feel all right, baby ♪ ♪ We could see ♪ ♪ If we could be right ♪ ♪ See if it's something about the way you smile ♪ ♪ Hold your breath and, baby, we could ♪ ♪ Catch a vibe, feel the frequency ♪ ♪ Catch a vibe, only you and me ♪ ♪ Feel all right, baby, we could see ♪ ♪ If we could be ♪ ♪ I've said, I've said ♪ ♪ It's part of me ♪ ♪ All the part you see ♪ ♪ You should be proud of...♪

This is good tea.

♪ You're my baby, gee ♪ ♪ You a cart, I see... ♪ ♪ How could this be? ♪ ♪ How could this be?... ♪

Hey, Siri. Stop.

Now it's reasonable to assume that I collected some data for each of these sound categories and trained a custom model using CreateML. Yes, I could have done that, but actually, the classifier used is built-in. New this year, we have a sound classifier built right into the Sound Analysis framework. It's never been easier to enable sound classification in your app. It's supported on all platforms.

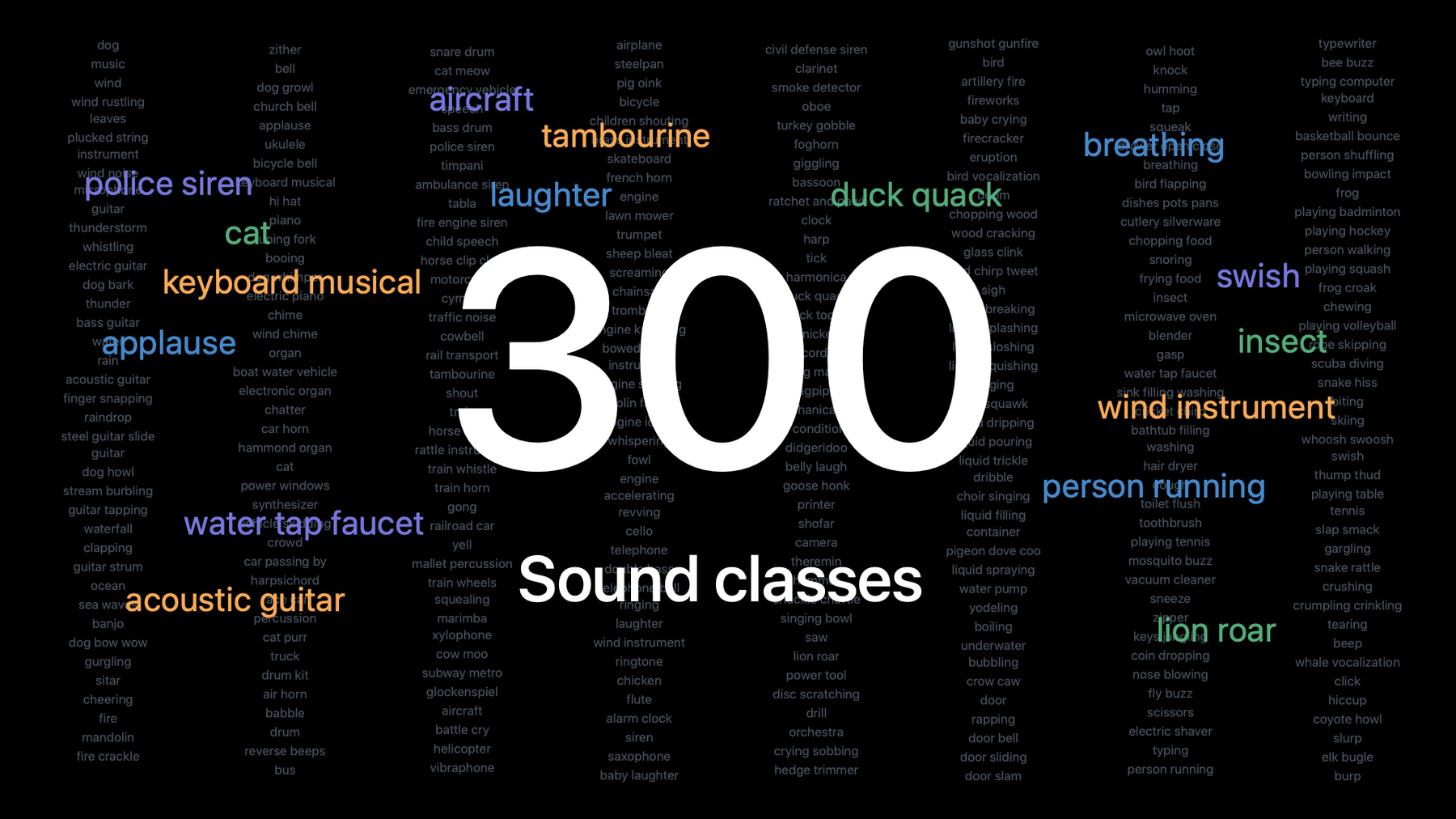

The built-in classifier is all about simplifying the developer experience. It removes the need for collecting and labeling massive amounts of data, specialized machine learning and audio expertise, and lots of compute power in order to develop a high-accuracy model. The less time you have to worry about these details, the more time you can spend enriching the user experience in your app. It only takes a few lines of code to enable sound classification. I'll show you what this classifier can do. There are over 300 categories for you to use. Let's take a closer look.

We can classify sounds of domestic animals, livestock animals, and wild animals.

For music, many instruments can be recognized: keyboard instruments, percussion, string instruments, wind instruments. We can detect various human sounds: group activities, respiratory sounds, vocalizations.

Then there are sounds of things like vehicles, alarms, tools, liquids, and so much more. These ready-to-use categories are available for you to try. I'm going to turn it over to Kevin to walk you through how to use this sound classifier.

Thanks, Jon. Hi, I'm Kevin, a software engineer on the Audio team. I'd like to show you how to use the new built-in sound classifier by taking a look at a small application I built. I was hoping Jon would show how well the classifier works with cowbell, but since it didn't fit in his demo, I came up with another idea. I have some media on my Mac that I collected while preparing for this session, and I'm pretty sure that I have some old footage containing cowbell. I'd love to show it to you, but first I have to find it. This means I'll have to find the right file, and I'll have to look inside to find just the right part. So how will I do this? I'll write a simple program that uses the built-in sound classifier to read a file and tell me whether a certain sound is inside. If the sound is found, the program can use the classifier to tell me the time at which it occurred.

I can then use Shortcuts, now available on macOS, to create a workflow that runs my program over lots of files. When my program finds a sound, the workflow can automatically use the reported detection time to extract a video clip containing the sound. This will work great for finding a cowbell clip.

So let's see it in action.

Here, I have a folder full of videos.

I'll select all the files and kick off my shortcut using the Quick Actions menu.

I'm asked to select the sound that I'd like to find, so from the list of options, I'll choose Cowbell.

Now, my shortcut is running, and after just a few moments, it finds the sound I'm looking for and shows it to me in a Finder window. Let's take a look. Looking good, Jon. Let's take a closer look at the shortcut that I used.

When my shortcut starts, it collects a list of all the sounds that it can recognize, and it asks me to pick one. Using my choice, it visits each of the files that I selected in Finder and looks for the sound inside. When my sound is detected, it extracts a few seconds from the video around the time that the sound occurred and shows me the resulting clip. Of these steps, two of them make use of the built-in classifier. These are the steps that I implemented in my own custom application for Shortcuts to use on demand.

Though I won't talk about Shortcuts in more detail, if you're curious to learn more, please refer to the WWDC session entitled "Meet Shortcuts for macOS."

Now let's look at the implementation for my app's two custom actions. The first action reports all of the sounds that the app can recognize. Since the app is using the built-in sound classifier, it can recognize a few hundred sounds. Here's a function I wrote to get these sounds. I create an SNClassifySoundRequest using a new initializer that allows me to select the built-in classifier. Once I have this request, I can use it to query the list of sounds that the classifier supports.

The app's second action tells Shortcuts when a sound is heard within a file. To implement this, I'll perform sound classification and report back the first time that the sound is detected, if it's detected at all. To perform sound classification, I'll need to prepare three objects. First, I'll need an SNClassifySoundRequest, which I can use to configure sound classification. Second, I'll need an SNAudioFileAnalyzer, which will let me target classification toward a particular file. The third object will require a little extra attention. I'll need to define my own Observer type, which will handle the results of classification. Skipping the Observer for now, here's some code for preparing the first two of these objects.

I can create the SNClassifySoundRequest using the built-in classifier, and I can create an SNAudioFileAnalyzer using a URL to the file I want to classify.

If, at this point, I had an Observer ready to go, it would be easy to start sound classification, but defining that Observer is the missing piece. So let's do that. I'm starting here with a bare Observer that inherits from NSObject and conforms to the SNResultsObserving protocol.

I'll initialize instances with the sound label I want to search for, and I'll add a CMTime member variable to store the time at which I detect the sound. I just need to implement the request(_:didProduce:) method. This method will be called when results are produced by sound classification, so I expect to receive instances of SNClassificationResult. I can use the classification(forIdentifier:) method of the result to extract information about the label that I'm searching for. I'll query the confidence score associated with the label, and if that score exceeds a certain threshold, I'll consider the sound to be detected. When I notice the detection for the first time, I'll save the time at which the sound occurred so that I can provide it to Shortcuts later.

With that, my Observer is complete, and I have all the pieces needed to determine when a sound occurs within a file. This example touches on two important topics that I'd like to discuss further. First, I'll talk about time of detection, and then I'll talk about detection thresholds.
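As a rough sketch of how these pieces might fit together, the function below runs the built-in classifier over one file and returns the first detection time in seconds. The names `DetectionObserver`, `firstDetection(of:in:threshold:)`, and `failure` are illustrative and do not come from the session. The optional `request(_:didFailWithError:)` callback is added here only to surface errors, and the code assumes an OS release that includes the built-in classifier.

```swift
import Foundation
import CoreMedia
import SoundAnalysis

/// Illustrative observer (not from the session): records the start of the
/// first window whose confidence for `label` exceeds `threshold`, plus any
/// error reported during analysis.
final class DetectionObserver: NSObject, SNResultsObserving {
    let label: String
    let threshold: Double
    private(set) var firstDetectionTime = CMTime.invalid
    private(set) var failure: Error?

    init(label: String, threshold: Double) {
        self.label = label
        self.threshold = threshold
    }

    func request(_ request: SNRequest, didProduce result: SNResult) {
        // Ignore results once a detection has already been recorded.
        guard !firstDetectionTime.isValid,
              let result = result as? SNClassificationResult,
              let classification = result.classification(forIdentifier: label),
              classification.confidence > threshold else { return }
        firstDetectionTime = result.timeRange.start
    }

    func request(_ request: SNRequest, didFailWithError error: Error) {
        failure = error
    }
}

/// Hypothetical helper: returns the first detection time in seconds,
/// or nil if the sound never exceeded the threshold.
func firstDetection(of label: String, in url: URL, threshold: Double = 0.5) throws -> Double? {
    let request = try SNClassifySoundRequest(classifierIdentifier: .version1)
    let analyzer = try SNAudioFileAnalyzer(url: url)
    let observer = DetectionObserver(label: label, threshold: threshold)
    try analyzer.add(request, withObserver: observer)
    analyzer.analyze()  // Synchronous: returns once the whole file is processed.
    if let failure = observer.failure { throw failure }
    return observer.firstDetectionTime.isValid ? observer.firstDetectionTime.seconds : nil
}
```

A caller could pass one of the identifiers returned by `knownClassifications` as `label` and treat a `nil` result as "sound not found in this file".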

Let's start with time of detection. When you classify audio, the signal gets broken up into overlapping windows.

For each of these windows, you'll get a result that tells you what sounds were detected and how confidently. You'll also get a time range, which tells you what part of the audio was classified. In my app, when I detect a sound, I use the result's time range to determine when the sound occurred. But the time range can be impacted when the duration of a window changes. You can customize the window duration to make it big or small based on your use case.
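To make the windowing concrete, here is a small sketch that lists the time ranges covered by successive windows. It assumes each window starts a fixed hop after the previous one, with hop = window duration × (1 − overlap). The function name `windowRanges` and the 50% overlap in the example are assumptions for illustration, not values stated in the session.

```swift
import Foundation

/// Returns the (start, end) range in seconds of every full window that fits
/// in `audioLength` seconds of audio. Assumes a constant hop between windows.
func windowRanges(audioLength: Double,
                  windowDuration: Double,
                  overlap: Double) -> [(start: Double, end: Double)] {
    precondition(windowDuration > 0 && overlap >= 0 && overlap < 1)
    let hop = windowDuration * (1 - overlap)
    var ranges: [(start: Double, end: Double)] = []
    var index = 0
    // Stop as soon as the next window would run past the end of the audio.
    while Double(index) * hop + windowDuration <= audioLength {
        let start = Double(index) * hop
        ranges.append((start: start, end: start + windowDuration))
        index += 1
    }
    return ranges
}

// Three seconds of audio, one-second windows, 50% overlap.
let ranges = windowRanges(audioLength: 3.0, windowDuration: 1.0, overlap: 0.5)
for range in ranges {
    print(range.start, range.end)
}
```

Under these assumptions the example yields five windows starting at 0, 0.5, 1, 1.5, and 2 seconds. Halving the window duration would also halve the spacing between start times, which is why shorter windows localize a sound more precisely.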

Short windows work well when working with short sounds, like a drum tap. This is because you can capture all the important features of that sound within a small window of time. The small window doesn't cut out any important information. The advantage of using a small window duration is that it allows you to more closely pinpoint the moment at which a sound occurred.

But small window durations may not be appropriate when working with longer sounds. A siren, for example, may contain both rising and falling pitches over a longer period of time. Capturing all of these pitches together in a single window can help sound classification correctly detect the sound. In general, it's good to use a window duration long enough to capture all the important parts of the sounds that you're interested in. If you'd like to edit the window duration, you can set the windowDuration property of SNClassifySoundRequest. Note, though, that not all window durations are supported.

Different classifiers might support different window durations. You can check which window durations are supported by reading the windowDurationConstraint property of SNClassifySoundRequest. The built-in classifier supports window durations between half a second and 15 seconds. A duration of one second or longer is a great starting point when adopting the classifier in your app.
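The check described above could look something like the following sketch. The helper `applyWindowDuration(_:to:)` is hypothetical, and the enum case names (`.range` and `.enumeratedDurations`) are written as I understand the Swift interface of `SNTimeDurationConstraint`, so verify them against the SDK you build with.

```swift
import CoreMedia
import SoundAnalysis

/// Hypothetical helper: sets `seconds` as the window duration only when the
/// request's constraint allows it. Returns true if the duration was applied.
func applyWindowDuration(_ seconds: Double, to request: SNClassifySoundRequest) -> Bool {
    let duration = CMTime(seconds: seconds, preferredTimescale: 48_000)
    let constraint = request.windowDurationConstraint
    let isAllowed: Bool
    if case .range(let allowedRange) = constraint {
        // CMTimeRange is half-open, so its exact end time is not contained.
        isAllowed = allowedRange.containsTime(duration)
    } else if case .enumeratedDurations(let allowedDurations) = constraint {
        isAllowed = allowedDurations.contains(duration)
    } else {
        isAllowed = false
    }
    if isAllowed {
        request.windowDuration = duration
    }
    return isAllowed
}

let request = try SNClassifySoundRequest(classifierIdentifier: .version1)
let applied = applyWindowDuration(1.5, to: request)
print(applied ? "Window duration set to 1.5 s" : "1.5 s is not supported")
```

Checking before assigning keeps the request on its default duration when the requested value is unsupported, rather than relying on whatever the framework does with an out-of-range value.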

Let's talk next about confidence thresholds. In my app, I considered a sound to be detected any time the confidence for that sound rose above a fixed threshold. I chose a value of 0.5 for my threshold, but there are some things to consider when choosing a threshold for your own app. The classifier can detect multiple sounds at the same time. When this happens, you may notice that several labels score with high confidence.

Unlike when using a custom model trained using CreateML, label scores do not add up to a value of one. The confidences are independent and shouldn't be compared against one another.

Because confidence scores are independent, you may find it useful to choose different confidence thresholds for different sounds.
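One way to express per-sound thresholds is a simple lookup table, sketched below. The labels and numbers are placeholders chosen for illustration, not recommended values, and `detectedLabels(in:)` is a hypothetical helper.

```swift
import Foundation

/// Hypothetical per-label thresholds; labels without an entry use the default.
let thresholds: [String: Double] = ["cowbell": 0.5, "siren": 0.8]
let defaultThreshold = 0.5

/// Returns the labels whose confidence exceeds their own threshold.
/// Each score is compared only against its threshold, never against other scores.
func detectedLabels(in confidences: [String: Double]) -> [String] {
    confidences
        .filter { $0.value > (thresholds[$0.key] ?? defaultThreshold) }
        .map { $0.key }
        .sorted()
}

// Made-up scores for one window. Note that they do not sum to one.
let window = ["cowbell": 0.62, "siren": 0.71, "speech": 0.55]
print(detectedLabels(in: window))  // ["cowbell", "speech"]
```

Here "siren" scores higher than "cowbell" but is not reported, because its own threshold is stricter.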

Choosing a threshold involves a tradeoff. A higher confidence threshold reduces the probability that a sound will be falsely detected, but it also increases the probability that a true detection will be missed because it wasn't strong enough. When you select a threshold for your application, you'll need to find a value that achieves the right balance of these factors for your use case. Note that confidence scores may change when you set a custom window duration, so this can impact your thresholds as well.

One final thing to keep in mind when using the built-in classifier is that some sounds are similar. Among the large number of sounds that the classifier can identify are several groups of sounds that can be difficult to differentiate using audio alone, even for a human. Where possible, it's best to be selective about the sounds that you pay attention to. You should try to watch only for sounds that are likely to occur in the contexts where your app will be used.

With that, let's turn back to Jon to learn about what's new in CreateML regarding sound classification.

Thanks, Kevin, for that great example. I'm glad you had fun with that cowbell video. Now let me show you what's new in CreateML or, specifically, how you can improve your custom models by leveraging the power of the built-in classifier. The built-in classifier was trained with a ton of data across a vast number of categories. So the model actually contains a lot of knowledge about sound classification. All this knowledge can be utilized for the training of your custom model using CreateML. I'll show you how this works.

The sound classifier can be separated into two different networks. The first part is the feature extractor, and the second part is the classifier model. The feature extractor, sometimes known as the embedding model, is the backbone of the network. It takes an audio waveform and transforms it into a low-dimensional space. A well-trained feature extractor organizes acoustically similar sounds into nearby locations in the space.

For example, sounds of guitar would cluster together but are placed away from drum and car sounds.

Now, the second part of this pipeline is the classifier model. It takes the output of the feature extractor and computes the class probabilities. The classifier benefits from being paired with a good feature extractor, like the one we have embedded into the built-in classifier.
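The two-stage idea can be illustrated with a toy example. This is not Audio Feature Print or the built-in classifier: the embedding vectors are made up, and a nearest-centroid rule stands in for the classifier stage (a real classifier would output class probabilities rather than a single label). It only shows why a classifier becomes simple when similar sounds already sit close together in the embedding space.

```swift
import Foundation

/// Toy stand-in for the classifier stage: picks the class whose centroid
/// lies closest to the given embedding.
struct NearestCentroidClassifier {
    let centroids: [String: [Double]]

    func classify(_ embedding: [Double]) -> String? {
        centroids.min(by: {
            distance($0.value, embedding) < distance($1.value, embedding)
        })?.key
    }

    /// Euclidean distance between two vectors of equal length.
    private func distance(_ a: [Double], _ b: [Double]) -> Double {
        zip(a, b).map { ($0 - $1) * ($0 - $1) }.reduce(0, +).squareRoot()
    }
}

// Made-up 2-D centroids; a real feature extractor produces many more dimensions.
let classifier = NearestCentroidClassifier(centroids: [
    "guitar": [0.9, 0.1],
    "drum": [0.1, 0.9],
])

// Pretend this vector came from a feature extractor fed a guitar-like clip.
let embedding = [0.8, 0.2]
print(classifier.classify(embedding) ?? "unknown")  // guitar
```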

We're making the built-in classifier's feature extractor available to you. It is called Audio Feature Print. When you train your own custom model in CreateML, your model will be paired with Audio Feature Print. With this, your model benefits from all the knowledge contained in the built-in classifier. Compared to the previous generation feature extractor, Audio Feature Print has improvements across the board. Even though this network is smaller and faster, it achieves higher accuracy on all benchmark data sets we compared against. And like the built-in classifier, models using Audio Feature Print support a flexible window duration. You can select a long window duration to optimize for sounds like sirens or a short window duration for sounds like drum taps. Audio Feature Print is the new default feature extractor when you train a custom model using CreateML.

The window duration is the length of audio used to generate a single feature while training. It defaults to three seconds, but you can adjust it to suit your needs. CreateML lets you select a window duration between half a second and 15 seconds. For a more detailed example of training a custom model, you can check out the 2019 session on "Training Sound Classification Models in CreateML." It also shows you how to use the Sound Analysis Framework to run the custom model.

Thanks for joining us in the session on Sound Analysis. Today, we introduced a powerful new sound classifier built into the OS. Along with it, we've upgraded the feature extractor in CreateML. These will unlock new possibilities, and we can't wait to see what you'll do with them in your app. Enjoy the rest of WWDC. [upbeat music]


8:12 - Get list of recognized sounds

    import SoundAnalysis

    // Returns the identifiers of every sound the built-in classifier recognizes.
    func getListOfRecognizedSounds() throws -> [String] {
        let request = try SNClassifySoundRequest(classifierIdentifier: .version1)
        return request.knownClassifications
    }

9:19 - Create sound classification request

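    import SoundAnalysis

    // `url` is the file to classify and `label` is the sound to search for.
    let request = try SNClassifySoundRequest(classifierIdentifier: .version1)
    let analyzer = try SNAudioFileAnalyzer(url: url)
    // FirstDetectionObserver is defined in the next snippet.
    let observer = FirstDetectionObserver(label: label)
    try analyzer.add(request, withObserver: observer)
    analyzer.analyze()  // Processes the whole file before returning.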

9:52 - Implement sound classification observer

    import CoreMedia
    import SoundAnalysis

    class FirstDetectionObserver: NSObject, SNResultsObserving {
        // Stays invalid until the sound is detected for the first time.
        var firstDetectionTime = CMTime.invalid
        var label: String

        init(label: String) {
            self.label = label
        }

        func request(_ request: SNRequest, didProduce result: SNResult) {
            // Record only the first window whose confidence exceeds 0.5.
            if let result = result as? SNClassificationResult,
               let classification = result.classification(forIdentifier: label),
               classification.confidence > 0.5,
               !firstDetectionTime.isValid {
                firstDetectionTime = result.timeRange.start
            }
        }
    }
