-

Extract document data using Vision

Discover how Vision can provide expert image recognition and analysis in your app to extract information from documents, recognize text in multiple languages, and identify barcodes. We'll explore the latest updates to Text Recognition and Barcode Detection, show you how to bring all these tools together with Core ML, and help your app make greater sense of the world through images or the live camera.

To learn more about Vision, watch “Detect people, faces, and poses using Vision” from WWDC21 as well as “Explore Computer Vision APIs” from WWDC20.

For further understanding of all that Vision has to offer, watch “Detect people, faces, and poses using Vision” from WWDC21 as well as “Explore Computer Vision APIs” from WWDC20.리소스

관련 비디오

WWDC21

WWDC20

WWDC19

-

비디오 검색…

Hello, and welcome to WWDC. My name is Frank Doepke, and I'm an Engineer on the Vision team.

The Vision framework has grown over the years, with its focus on image analysis. To get a better grasp, we can look at Vision's capabilities in terms of its usage focus. Sports. Tracking objects and the analysis of the human pose are just some of the requests that can help you to create a sports application.

Accessibility. Visions request like OCR or image classification and object detection are helping visually-impaired users.

People. Vision provides a number of face and body-related requests that your app can use. You can find out more about this in the "Detect people, faces, and poses using Vision" session.

Health. From barcode scanning and OCR to analysis of the human pose, Vision provides building blocks to create a smart Health application.

Computational Photography. Features like portrait mode rely on face detection and segmentation.

Security. Requests like face and human detection are helpful for applications like motion detection in security cameras.

And documents. This is what we want to focus on in this session.

Vision offers a number of requests that help you with the analysis of documents: barcode detection, text recognition, or commonly known as OCR, contour detection, rectangle detection, and new for this year, document segmentation detection.

Here's our agenda. First, we'll talk about barcode detection. Then, we'll talk about text recognition. And last, we'll talk about document detection.

Let's look at barcode detection. This year, we are introducing a new revision of the barcode detection request.

VNDetectBarcodesRequestRevision2 offers support for new symbologies. Codabar, GS1Databar, including Expanded and Limited, MicroPDF, and MicroQR, where the latter is particularly helpful if you want to make a QR code for a URL and need to place it on a small label or package, as it uses a lot less space.

We changed the behavior for this new revision to be in line with the rest of Vision in respect to how the resulting bounding boxes are reported in relation to the region of interest that a client has specified.

Let's look at that change in detail. Here, we have a document with a QR code. When we do not specify a Region of Interest, also known as ROI, the bounding box gets reported in relation to the full image. Now, let's specify an ROI, like we want to only focus on the center part of what the camera sees. Revision 2 now reports the bounding box in relation to the ROI, just like other Vision requests.

Unfortunately, Revision 1 always reports in relation to the full image. But we don't want to change that behavior, as it would potentially break existing clients. And just as a reminder, when you compile your application against the latest SDK and do not specify a specific revision, you will always get the latest revision on your request. But for applications that specify Revision 1 or do not re-compile against a new SDK, they will still get the old Revision 1 behavior.

Let me highlight a few interesting aspects of the barcode detection request in Vision. Vision supports 1D and 2D barcodes.

But what makes it really interesting is that within one image, it can detect multiple codes, as well as multiple symbologies, at once.

That means you don't have to scan again and again to get multiple codes. This is a huge advantage over most handheld scanners. Keep in mind that if you scan for multiple symbologies, it'll take longer the more symbologies you have specified. So you want to setup the request with only the symbologies that are relevant to your use case.

With the expansion of the new symbologies for barcode scanning, Vision can play a particularly helpful role in the health sector. With an iPhone, you can analyze multiple codes at once, and thanks to its connectivity to the internet, pull up the information without needing a separate scanner.

And thanks to the iPhone's strong low-light capabilities, you can scan codes, even in dark scenarios, without shooting off a laser or disturbing the patient while they are resting.

Now, let's look at how Vision performs barcode detection.

1D codes will get scanned as lines. That means you will likely get multiple detections for the same code. It is easy to de-duplicate them by looking at the payload, which is the real data that is included in the barcode.

2D codes get scanned as a single unit. That means you get one bounding box back for the whole code. An example for a 2D code would be a QR code.

Each barcode gets reported with its own observation. But as I mentioned before, 1D codes can return multiple observations, with the same content, but in different physical locations. The payload is the content of the barcode, that is, the data that is included in this machine-readable code. In particular for the payload of QR codes, you might want to use data detectors to analyze the encoded URL.

Now, let's look at this in a little demo.

Alright, here we have an Xcode playground, where you see that I have an image with all the barcodes in them. I use the VNDetectBarcodesRequest, and I set the Revision to 2. Now, as the symbologies, I just have codabar, and when we look at this, we see the codabar got highlighted in red.

Now, I can change this to, let's say, QR.

What happens now is that we run the request again, and we see that the QR code gets highlighted. But it's an array, so I can also specify other requests with it, let's say ean8. And when I do that, we'll now see that we have both, the ean8 and the QR code. But what if I want to get all of them? I simply pass in an empty array, and in that moment, all symbologies get read. And as you see, all highlighted right now with the code on the bottom. Let's go back to our slides.

From barcodes, we are now moving on to look at text recognition. Vision introduced text recognition in 2019. It operates in two modes: Fast and Accurate. Since then, Vision has expanded its language support. Let's look at how text recognition works and where language plays a role. In the Fast path, we have a Latin-character recognizer. The Accurate path, on the other hand, uses a machine-learning-based recognizer that operates on words and lines.

After the recognition is done, each path goes through a language correction stage. And, in the end, we get back the recognized text. The language selection affects the recognition stage. In the Fast path, it would mean that the different Latin character sets are supported, like the umlaut for German. In the Accurate path, a completely different model gets used when we have to recognize Chinese, as its structure is very different to Latin-based languages. That means if you need to read Chinese text, then it is important that Chinese is the primary language in the request. The language selection also influences language correction, as it picks the correct dictionary for its work.

So, what are the best practices when using languages in text recognition? Even though it might look like a fixed set of languages is supported, it is always better to query which languages are supported for a given request configuration using supportedRecognitionLanguages(). You can specify multiple languages, and, in that case, the order matters. When there is ambiguity, it gets resolved in the order of languages. In particular for the Accurate path, the first language decides which recognition model gets used. That means your use case dictates which languages you want to use in the request.

Let's look at this in a little demo.

So, I have here now a revised version of our sample code, and you can see that I have an image with different languages of text in it. Now, I specified Revision 2, and I can see which languages are supported. We have English, French, and so on.

Now, if I switch, for instance, back to Revision 1, we can see I only have English. And that is the same for the Fast as it is for the Accurate path. Now, let's go back to Revision 2.

Notice that when I switch now, for instance, to German, I actually get the umlaut correctly in Grüsse aus Cupertino.

But I don't have support in the Fast path for Chinese.

In the Accurate path, I can now pick Chinese.

And now, we finally get the correct Chinese letters for "Hello World." Let's go back to the slides.

Last but not least, let's look at Document Detection.

Vision introduces a new request called VNDocumentSegmentationRequest. It's a machine-learning-based detector that we have trained on various types of documents, like sheets of paper, signs, notes, receipts, labels, et cetera.

The result of the request is a low resolution segmentation mask, where each pixel represents a confidence if that pixel is part of the detected document or not. In addition it provides the four corner points of the quadrilateral.

On devices with a Neural Engine, the request can run in realtime on a camera or video feed. The VNDocumentCamera in VisionKit is now using the request instead of the VNDetectRectanglesRequest on modern devices with a Neural Engine.

Speaking of the VNDetectRectanglesRequest, how do these two requests differ, as they both can be used to detect a document? The DetectDocumentsRequest is, as I mentioned, machine-learning-based and performs fastest on the Neural Engine. But it can also be used on the GPU or CPU, but it is not fast enough there for realtime performance.

The rectangle detector is a traditional computer vision algorithm that runs only on the CPU and can keep up with the realtime performance, as long as the CPU is not saturated with other tasks.

The document request is trained on a variety of documents, and they don't have to be all rectangles, which is one of its main strengths. The rectangle detector, on the other hand, works by finding edges and intersections that form a quadrilateral, which can be a challenge with obscured corners or folds in the document.

The documents requests provides a segmentation mask and the corner points, while the rectangle detector only provides corner points. And the document detector is trained to look for one document only. With the rectangle detector will return multiple rectangles. These rectangles can even be nested. Let's look at this a little bit more.

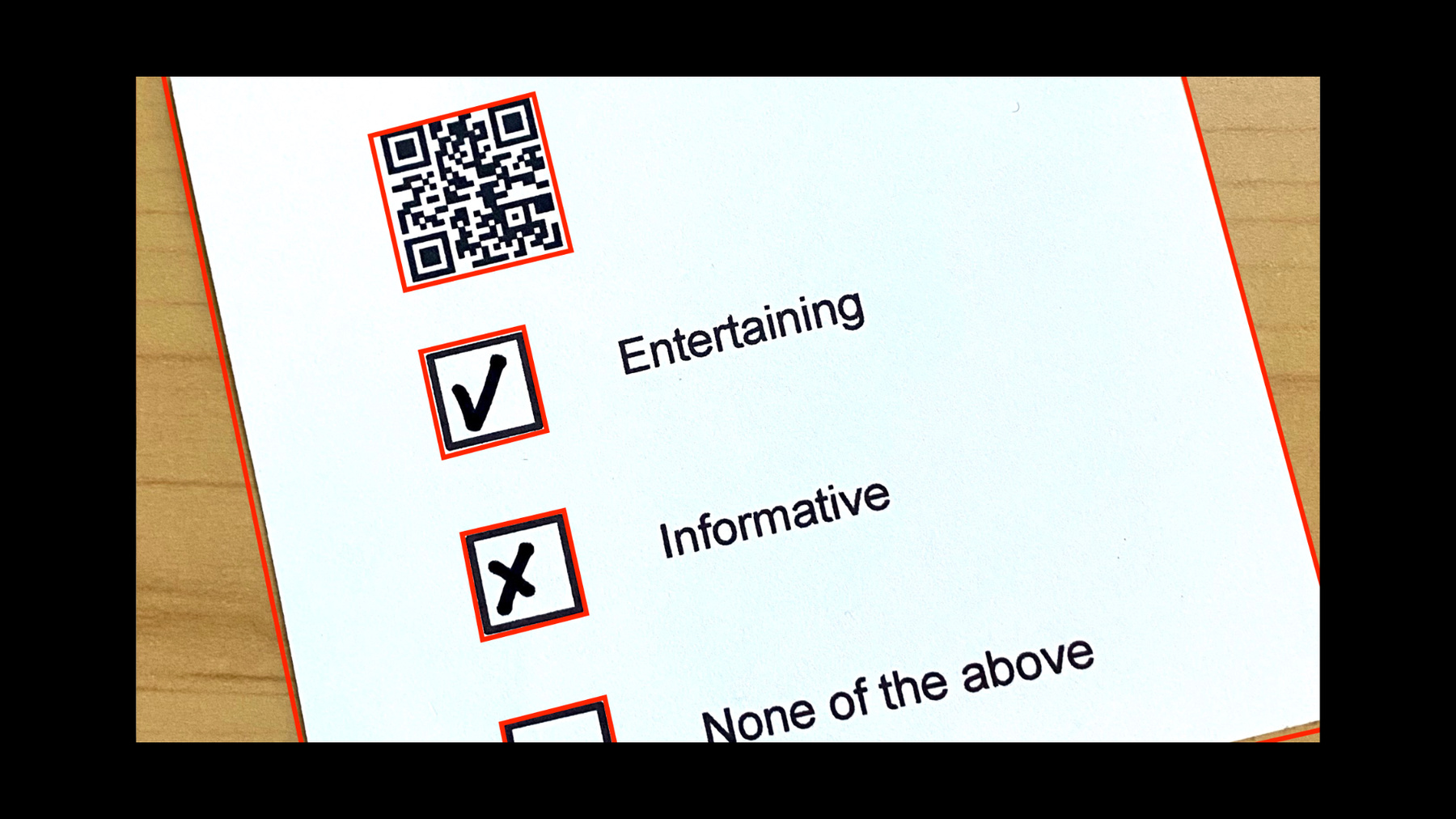

As I mentioned, the document detector finds one document, which we see here with the quadrilateral of the detected object. But the rectangle detector will deliver back multiple observations of all the rectangles it finds in the image, and I highlighted a few here. It's up to the app to decide which rectangle is the document. How about we try all this in a demo? All right, we wanted to create a little survey, how well we are doing at WWDC. Now, unfortunately, you are not with me, so I had to ask the camera team here to fill out the survey for you. So, I created a little app in which I can now scan our survey cards.

And what do we get? QuickDraw for beginners felt outdated. Well, it is bit old by now.

Let's go for the next one. Ah, Vision was entertaining and informative.

And last but not least, Cobol, just what I needed. Somebody is in the wrong session here. Okay, now let's look how we did this in the code. So, I created again a little playground here because it's easier to build this stuff up for us. What you can already see is that I loaded up an image, and I used the CIImage, because I need to do some image manipulation on it. I created a requestHandler, and I used the new VNDetectDocument SegmentationRequest(). Once I perform the request, I get now the results back, and I created a little helper function that I used core image to use now as a perspective corrected image, and we get back just a cropped-out card in a perspective corrected form. So, that's easy. So, what do we have to do next? We need to detect the bar codes, detect the rectangles, and recognize the text.

Once we perform this request, we then have to scan the check boxes to see which ones were ticked. All right, I prepared this a little bit, so let's start with detecting the bar codes.

And I'm using, as the symbologies, just the QR code. I loaded into the document title because I know that is-- the content of my QR code will be the title of what we get out of it. Next, we need to detect the rectangles. Again, we have a little piece of code rectangle for that.

So, I create two arrays. I want to get all the checkBoxImages, which is data needed for the analysis. And I get all the rectangles out. So, I used the VNDetectRectanglesRequest. Now, what I do here is I sort them in the vertical order so that I get the results in the correct order back.

OK, now we need to recognize our text.

That is simple. We store all the resulting textBlocks, and we use the VNRecognizeTextRequest. So now, what we have to do is simply perform the request.

And as you can see, I used the documentRequestHandler, which is the one that used the cropped-out image, and performed the requests on it. And if I go back up here, I can already see that I get my correct QR code, but something is not quite right with my rectangles. I don't get any rectangles. So, what do I have to do? Well, by default, the rectangles detector only looks for rectangles that are at least 20% of the image. So, we need to correct that. So, I go in and set the minimumSize to, let's say, something like 10%.

And once we do that, we get a rectangle.

Okay, well, that's only one. Well, the other thing with the rectangle detector is that I need to tell it how many it should return. By default, the rectangle detection will only return one, the most prominent rectangle. But I want to get all of them. I do this by setting the maximumObservations to 0. And once I have done that, I now get all of our checkboxes and the bar code, because that looks like a rectangle. Okay, so we are good. Now comes the last part, and I need to actually scan the checkboxes. So, for that, I actually prepared a little machine-learning demo.

I have a model here that I trained earlier with Create ML. It's an image classifier, and all I did was I used some of these checkbox images, which were marked, and some of them are not marked, for my "yes" and "no" label. And I also gathered a few images that are neither of them. That's my NotIt.

Again, we can use this in our code.

So, what do we have? We create our request by loading the model and create our Create ML request. And then we iterate, over all the checkbox images, create an ImageRequestHandler from it, and perform our classification.

Now, I can look at my top classification. If that is "Yes," then I just find which text line lines up with the checkbox that I have, and what do we get, in the end? Vision was entertaining and informative. Let's go back to the slides.

Let's recap on what we saw. Document analysis is a focus in the Vision API. Barcode detection in Vision is more versatile than a scanner, and we are introducing a new document segmentation detection. If you want to learn more about how to use OCR, please look at our session from WWDC 2019. The "Vision and core image" session from WWDC 2020 gives you additional insights in doing your own custom document analysis by preprocessing images and detecting contours. Thank you, and enjoy the rest of WWDC. [music]

-

-

6:18 - Barcode Scan

import Foundation import Vision let url = URL(fileReferenceLiteralResourceName: "codeall_4.png") as CFURL guard let imageSource = CGImageSourceCreateWithURL(url, nil), let barcodeImage = CGImageSourceCreateImageAtIndex(imageSource, 0, nil) else { fatalError("Unable to create barcode image.") } let imageRequestHandler = VNImageRequestHandler(cgImage: barcodeImage) let detectBarcodesRequest = VNDetectBarcodesRequest() detectBarcodesRequest.revision = VNDetectBarcodesRequestRevision2 detectBarcodesRequest.symbologies = [.codabar] try imageRequestHandler.perform([detectBarcodesRequest]) if let detectedBarcodes = detectBarcodesRequest.results { drawBarcodes(detectedBarcodes, sourceImage: barcodeImage) detectedBarcodes.forEach { print($0.payloadStringValue ?? "") } } public func createCGPathForTopLeftCCWQuadrilateral(_ topLeft: CGPoint, _ bottomLeft: CGPoint, _ bottomRight: CGPoint, _ topRight: CGPoint, _ transform: CGAffineTransform) -> CGPath { let path = CGMutablePath() path.move(to: topLeft, transform: transform) path.addLine(to: bottomLeft, transform: transform) path.addLine(to: bottomRight, transform: transform) path.addLine(to: topRight, transform: transform) path.addLine(to: topLeft, transform: transform) path.closeSubpath() return path } public func drawBarcodes(_ observations: [VNBarcodeObservation], sourceImage: CGImage) -> CGImage? { let size = CGSize(width: sourceImage.width, height: sourceImage.height) let imageSpaceTransform = CGAffineTransform(scaleX:size.width, y:size.height) let colorSpace = CGColorSpace.init(name: CGColorSpace.sRGB) let cgContext = CGContext.init(data: nil, width: Int(size.width), height: Int(size.height), bitsPerComponent: 8, bytesPerRow: 8 * 4 * Int(size.width), space: colorSpace!, bitmapInfo: CGImageAlphaInfo.premultipliedLast.rawValue)! cgContext.setStrokeColor(CGColor.init(srgbRed: 1.0, green: 0.0, blue: 0.0, alpha: 0.7)) cgContext.setLineWidth(25.0) cgContext.draw(sourceImage, in: CGRect(x: 0.0, y: 0.0, width: size.width, height: size.height)) for currentObservation in observations { let path = createCGPathForTopLeftCCWQuadrilateral(currentObservation.topLeft, currentObservation.bottomLeft, currentObservation.bottomRight, currentObservation.topRight, imageSpaceTransform) cgContext.addPath(path) cgContext.strokePath() } return cgContext.makeImage() } -

14:02 - Survey Scan

import Foundation import CoreImage import Vision import CoreML guard var inputImage = CIImage(contentsOf: #fileLiteral(resourceName: "IMG_0001.HEIC")) else { fatalError("image not found") } inputImage let requestHandler = VNImageRequestHandler(ciImage: inputImage) let documentDetectionRequest = VNDetectDocumentSegmentationRequest() try requestHandler.perform([documentDetectionRequest]) guard let document = documentDetectionRequest.results?.first, let documentImage = perspectiveCorrectedImage(from: inputImage, rectangleObservation: document) else { fatalError("Unable to get document image.") } documentImage let documentRequestHandler = VNImageRequestHandler(ciImage: documentImage) /* TODO Detect barcodes Detect rectangles Recognize text Perform those requests Scan checkboxes */ var documentTitle = "Don't know yet" let barcodesDetection = VNDetectBarcodesRequest() { request, _ in guard let result = request.results?.first as? VNBarcodeObservation, let payload = result.payloadStringValue else { return } documentTitle = "\(payload) was: " } barcodesDetection.symbologies = [.qr] var checkBoxImages: [CIImage] = [] var rectangles: [VNRectangleObservation] = [] let rectanglesDetection = VNDetectRectanglesRequest { request, error in rectangles = request.results as! [VNRectangleObservation] // sort by vertical coordinates rectangles.sort{$0.boundingBox.origin.y > $1.boundingBox.origin.y} for rectangle in rectangles { guard let checkBoxImage = perspectiveCorrectedImage(from: documentImage, rectangleObservation: rectangle) else { print("Could not extract document"); return } checkBoxImages.append(checkBoxImage) } } rectanglesDetection.minimumSize = 0.1 rectanglesDetection.maximumObservations = 0 var textBlocks: [VNRecognizedTextObservation] = [] let ocrRequest = VNRecognizeTextRequest { request, error in textBlocks = request.results as! [VNRecognizedTextObservation] } do { try documentRequestHandler.perform([ocrRequest, rectanglesDetection, barcodesDetection]) } catch { print(error) } let classificationRequest = createclassificationRequest() var index = 0 for checkBoxImage in checkBoxImages { let checkBoxRequestHandler = VNImageRequestHandler(ciImage: checkBoxImage) do { try checkBoxRequestHandler.perform([classificationRequest]) if let classifications = classificationRequest.results as? [VNClassificationObservation] { if let topClassification = classifications.first { if topClassification.identifier == "Yes" && topClassification.confidence >= 0.9 { for currentText in textBlocks { if observationLinesUp(rectangles[index], with: currentText) { let foundTextObservation = currentText.topCandidates(1) documentTitle += foundTextObservation.first!.string + " " } } } } } } catch { print(error) } index += 1 } print(documentTitle) extension CGPoint { func scaled(to size: CGSize) -> CGPoint { return CGPoint(x: self.x * size.width, y: self.y * size.height) } } extension CGRect { func scaled(to size: CGSize) -> CGRect { return CGRect( x: self.origin.x * size.width, y: self.origin.y * size.height, width: self.size.width * size.width, height: self.size.height * size.height ) } } public func observationLinesUp(_ observation: VNRectangleObservation, with textObservation: VNRecognizedTextObservation ) -> Bool { // calculate center let midPoint = CGPoint(x:textObservation.boundingBox.midX, y:observation.boundingBox.midY) return textObservation.boundingBox.contains(midPoint) } public func perspectiveCorrectedImage(from inputImage: CIImage, rectangleObservation: VNRectangleObservation ) -> CIImage? { let imageSize = inputImage.extent.size // Verify detected rectangle is valid. let boundingBox = rectangleObservation.boundingBox.scaled(to: imageSize) guard inputImage.extent.contains(boundingBox) else { print("invalid detected rectangle"); return nil} // Rectify the detected image and reduce it to inverted grayscale for applying model. let topLeft = rectangleObservation.topLeft.scaled(to: imageSize) let topRight = rectangleObservation.topRight.scaled(to: imageSize) let bottomLeft = rectangleObservation.bottomLeft.scaled(to: imageSize) let bottomRight = rectangleObservation.bottomRight.scaled(to: imageSize) let correctedImage = inputImage .cropped(to: boundingBox) .applyingFilter("CIPerspectiveCorrection", parameters: [ "inputTopLeft": CIVector(cgPoint: topLeft), "inputTopRight": CIVector(cgPoint: topRight), "inputBottomLeft": CIVector(cgPoint: bottomLeft), "inputBottomRight": CIVector(cgPoint: bottomRight) ]) return correctedImage } public func createclassificationRequest() -> VNCoreMLRequest { let classificationRequest: VNCoreMLRequest = { // Load the ML model through its generated class and create a Vision request for it. do { let coreMLModel = try MLModel(contentsOf: #fileLiteral(resourceName: "CheckboxClassifier.mlmodelc")) let model = try VNCoreMLModel(for: coreMLModel) return VNCoreMLRequest(model: model) } catch { fatalError("can't load Vision ML model: \(error)") } }() return classificationRequest }

-