
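Explore ShazamKit

ShazamKit lets you bring Shazam's precise audio recognition into your app. Learn how to tap into the vast Shazam catalog to build a range of experiences: quickly and accurately recognize the song playing in the background of a video captured in your app, offer dynamic visual effects based on the music playing in a room, or sync with external audio to deliver a companion app experience. We'll also show you how to build a custom catalog on device so that ShazamKit can recognize any audio source. To learn more, check out "Create custom audio experiences with ShazamKit" and code along to build an education app that syncs perfectly with streamed video content.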


Hi, and welcome. My name is Marl, and I'm on the Shazam Product team. I hope you're enjoying WWDC21, and I'm here to introduce you to ShazamKit, the framework that gives you the ability to integrate audio recognition into your apps.

Today I'll take you through what ShazamKit is and a few use cases for applying it. Then, I'll turn it over to my colleague James, who will show you how Shazam catalog recognition works and offer tips for getting started. Let's start with what ShazamKit is.

You might already be familiar with Shazam, the audio recognition app that debuted in 2008 as one of the first apps in the App Store.

Thirteen years later, the app's grown quite a bit, and Shazam is even used as a verb. Its technology powers Siri's "What song is this?" and is integrated in Shortcuts and Control Center. That core technology is audio recognition: precise, almost instantaneous audio matching, even with noise in the background. Recognition is achieved by listening for the distinct acoustic signature of the audio and searching for an exact match either within Shazam's own catalog of content or within custom audio catalogs that you will now be able to create. Unlike SoundAnalysis, however, Shazam isn't an audio classifier detecting and analyzing classes of speech, singing, or humming. In fact, Shazam doesn't identify specific sounds, like laughter or applause, in audio at all. James will take you through Shazam's recognition process in a few minutes, and if you'd like to learn about detecting and classifying sounds, check out the SoundAnalysis session as well.

In the meantime, let's talk more about ShazamKit, the framework that brings the recognition functionality to your apps. ShazamKit is divided into three components.

There's Shazam catalog recognition, custom catalog recognition, and library management. Shazam catalog recognition gives you the ability to add song identification to your apps. With custom catalog recognition, you can take this a step further and perform on-device matching against arbitrary audio.

This session will focus primarily on Shazam catalog recognition and library management, but be sure to code along with our colleague Alex in "Create custom audio experiences with ShazamKit." Next, I'll take you through some of the amazing opportunities we see for you to leverage ShazamKit across your apps. Let's start with finding the perfect selfie.

They can be fun to take and even more fun when creating a specific mood or ambiance. Shazam catalog recognition can help by recognizing the song playing in the environment and fetching song metadata like song title and artist.

However, you can also create a slightly different experience, or vibe, so your selfie goes beyond listening and taping from your couch.

The ShazamKit API also returns metadata like genre, which you can use to simulate enjoying that song at a club downtown instead. ShazamKit also recognizes more than music, which can be useful in the context of virtual learning. Remote schooling can create different challenges for teachers and students, including keeping up with on-screen lessons and navigating between apps. Custom catalog recognition presents an opportunity to make your education app experience even more seamless for them.

By using the lesson content's audio as a kind of remote control, the framework can trigger synced activities in student apps as content streams over video conference.

Teachers can rest easier knowing students' apps follow along, even after pausing or repeating portions of the lesson. If you enjoy home improvement television as much as I do, you'll appreciate the challenge of trying to search for furniture as quickly as the pieces flash by on screen. Use ShazamKit to make your show or video shoppable with an interactive second screen AR experience.

Whether watching in real time or on demand, viewers at home can synchronously browse, simulate, and purchase styles as they watch. Now let's try this out in real life.

You ever watch videos of moments that you and your friends have shared and wish you knew the songs playing in the background? Here's one, for example, that my friend sent me to compare our Friday nights.

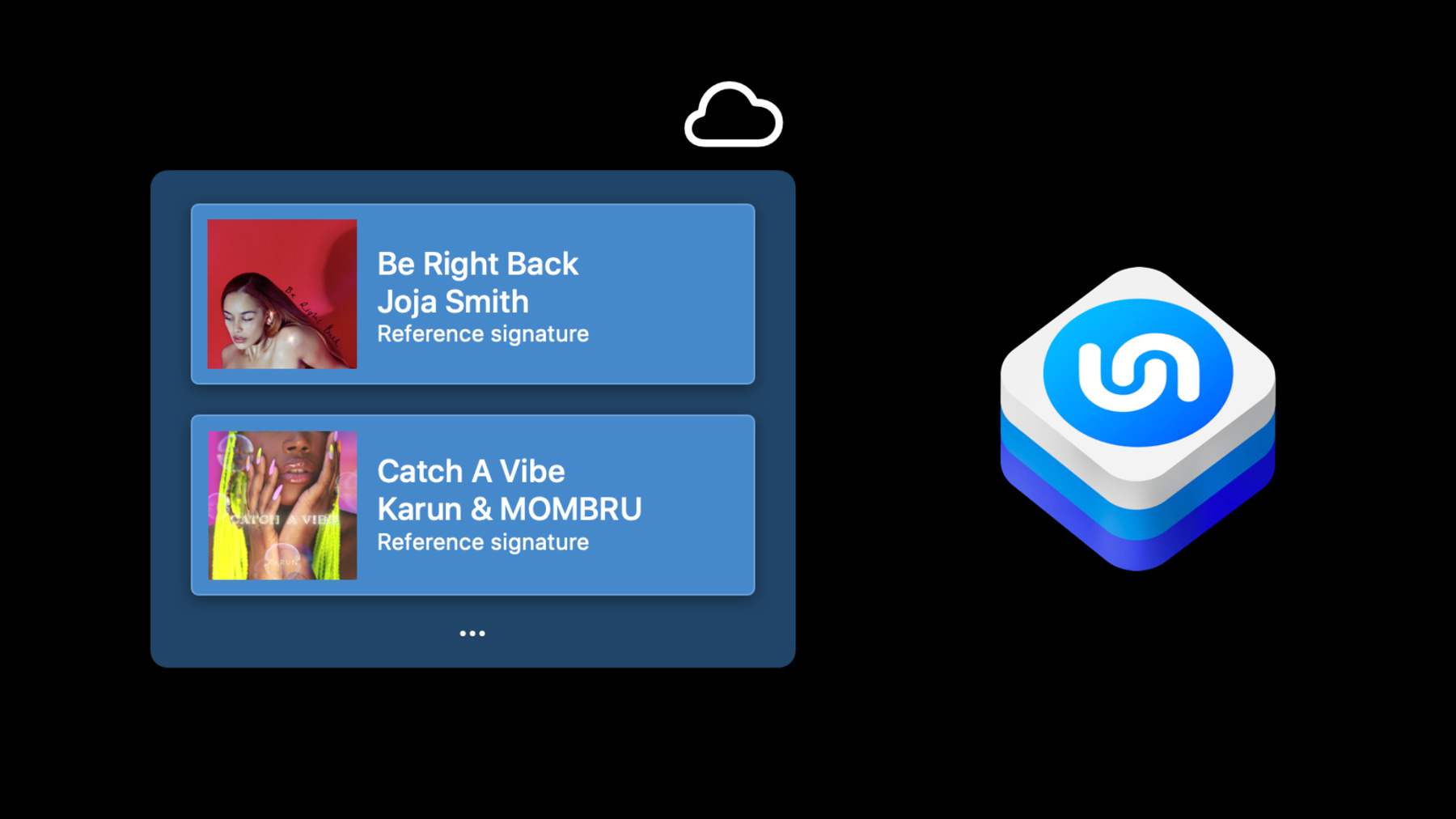

[music] "Don't know anyone who makes me catch a vibe, feel the frequency, catch a vibe, only you and me."

Using ShazamKit, my app kicked off the recognition and found the audio match in Shazam's catalog. Now that I know which song was playing, I can save it to a library or use it to start the perfect weekend playlist. Now here's James to show you how Shazam catalog recognition works.

Thanks, Marl, and hi, everyone.

I'm James, an engineering manager on the Shazam team.

Today I'm going to take you through how ShazamKit was used to recognize the music in Marl's video.

So what happened when Marl pressed the Identify Song button? ShazamKit sent a representation of the audio in the video to Shazam's server.

A match was found in Shazam's music catalog, and information about the song was returned, which we displayed in our app. Let's take a closer look at how Shazam matches audio. It is important to note that Shazam does not match against the audio itself.

Rather, it creates a lossy representation of it, which we call a signature, and matches against that.

There are two benefits to this approach. Firstly, a signature is at least an order of magnitude smaller than the audio from which it was derived. This greatly reduces the amount of data that needs to be sent over the network.

Secondly, signatures are not invertible, which means that the original audio cannot be reconstructed from a given signature.

This is very important for protecting the privacy of our customers. So what does a signature sound like? Let me play one and see if you can identify the song.

That's right. It's "My Future" by Billie Eilish. You can even try Shazaming it for yourself. Now let's talk about catalogs.

Catalogs are collections of signatures.

Of course, signatures in a catalog are not very useful without some associated metadata describing the audio from which they were generated. We will refer to these as reference signatures, as the metadata is a reference back to the original audio. The Shazam catalog is a collection of reference signatures comprising much of the world's music. It lives in the cloud and is hosted and maintained by Apple.

It is regularly updated with the latest tracks. Every signature in the Shazam catalog has been generated from the full audio of a song and has a corresponding reference to that song's metadata. Now, what if you wanted to match against your own audio? In this case, you could use a custom catalog.

These are collections of signatures which have been generated from arbitrary audio, not just music, and you can add your own custom metadata to them. As opposed to the Shazam catalog which lives in the cloud, matching custom catalogs takes place within your app.
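As a rough sketch of what building such a catalog might look like, the snippet below attaches custom metadata to a reference signature, adds it to an `SHCustomCatalog`, and returns a session that matches against that catalog on device. The function names `makeMediaItem` and `makeCustomSession` and the "Lesson 1" title are illustrative assumptions, not part of the session; producing the reference signature is assumed to happen elsewhere.

```swift
import ShazamKit

/// Hypothetical helper: wraps custom metadata (here only a title) in a media item.
func makeMediaItem(title: String) -> SHMediaItem {
    SHMediaItem(properties: [.title: title])
}

/// Hypothetical helper: builds an in-memory custom catalog from one reference
/// signature and returns a session that matches against it within the app.
func makeCustomSession(referenceSignature: SHSignature,
                       title: String) throws -> SHSession {
    let catalog = SHCustomCatalog()
    // Associate the reference signature with the metadata that describes it.
    try catalog.addReferenceSignature(referenceSignature,
                                      representing: [makeMediaItem(title: title)])
    // A session created with a catalog matches against that catalog
    // instead of the Shazam catalog in the cloud.
    return SHSession(catalog: catalog)
}
```

When a query signature matches, the media item returned to the delegate carries the custom metadata supplied here.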

Watch the code-along "Create custom audio experiences with ShazamKit" to learn more. When you want to search a catalog, you need a query signature, which is a representation of a small part of the audio that you want to match. In this app, it's the part of the audio that includes the song. In this visualization, a query signature has been compared against a reference signature, which represents the complete audio of a song, and a match has been found. This same process occurs for every song in Shazam's catalog to identify matching candidates. So going back to that first diagram, we now know that we sent a query signature over the network to be matched against the Shazam catalog, which is a collection of reference signatures and song information. When Marl pressed the Identify button, a match was found and the song information returned.

Now, the great thing about ShazamKit is that it handles all of this for you through the session object.

A session can take as input either audio or signatures.

Results are communicated via its delegate. Before you can match against the Shazam catalog, you will need to enable the ShazamKit App Service for your app identifier in the Developer portal. This step is not necessary for matching against custom catalogs.

OK, let's see what this looks like in code. First, I create a session object.

Its default initializer will match against the Shazam catalog. Next, I set its delegate so that I can be notified of any matches that might be found. If you are matching against a continuous stream of audio, for example, from a microphone, we recommend you use the matchStreamingBuffer method on the session, as it is optimized for that scenario.
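For the streaming case, a minimal sketch might look like the following. It assumes microphone input through `AVAudioEngine`, that the app has already obtained microphone permission and configured its audio session, and a hypothetical wrapper class named `MicrophoneMatcher`; none of these details come from the session itself.

```swift
import AVFoundation
import ShazamKit

/// Hypothetical wrapper that streams microphone audio into an SHSession.
final class MicrophoneMatcher: NSObject, SHSessionDelegate {
    private let session = SHSession()        // matches against the Shazam catalog
    private let audioEngine = AVAudioEngine()

    override init() {
        super.init()
        session.delegate = self
    }

    /// Starts capturing audio and forwarding each buffer to the session.
    func start() throws {
        let inputNode = audioEngine.inputNode
        let format = inputNode.outputFormat(forBus: 0)
        inputNode.installTap(onBus: 0, bufferSize: 2048, format: format) { [weak self] buffer, time in
            // The session accumulates buffers and builds signatures itself.
            self?.session.matchStreamingBuffer(buffer, at: time)
        }
        try audioEngine.start()
    }

    /// Stops capturing so the microphone is not held longer than needed.
    func stop() {
        audioEngine.inputNode.removeTap(onBus: 0)
        audioEngine.stop()
    }

    func session(_ session: SHSession, didFind match: SHMatch) {
        stop()  // we have a result, so stop recording
        print("Matched: \(match.mediaItems.first?.title ?? "unknown title")")
    }

    func session(_ session: SHSession,
                 didNotFindMatchFor signature: SHSignature,
                 error: Error?) {
        print("No match: \(error?.localizedDescription ?? "no error reported")")
    }
}
```

Calling `try matcher.start()` begins listening; the sketch stops the engine as soon as a match arrives.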

However, in our case, I already have access to the complete audio in the video, so I'm going to create a signature generator and pass the audio to it. When I'm ready to perform a match, I will call the signature method on the generator to convert the audio I've passed into a signature. Then I hand the generated signature to the match method of the session object. Back to our app.
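To illustrate where the audio passed to the generator might come from, here is a hedged sketch that reads a whole audio file into a PCM buffer and produces a signature from it. It assumes the audio is available as a standalone file in a PCM format and sample rate that the generator accepts; extracting audio from a video container is not shown. The function name `makeSignature(fromAudioFileAt:)` and the error type are hypothetical.

```swift
import AVFoundation
import ShazamKit

/// Hypothetical error for this sketch.
enum SignatureError: Error {
    case bufferAllocationFailed
}

/// Reads the complete audio file at `url` and converts it into a signature.
func makeSignature(fromAudioFileAt url: URL) throws -> SHSignature {
    let file = try AVAudioFile(forReading: url)

    // Allocate a buffer large enough to hold every frame in the file.
    guard let buffer = AVAudioPCMBuffer(pcmFormat: file.processingFormat,
                                        frameCapacity: AVAudioFrameCount(file.length)) else {
        throw SignatureError.bufferAllocationFailed
    }
    try file.read(into: buffer)

    // Feed the audio to the generator, then ask for the resulting signature.
    let generator = SHSignatureGenerator()
    try generator.append(buffer, at: nil)
    return generator.signature()
}
```

The returned signature can then be handed to the session's `match` method.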

After generating a signature from the audio and using a session to find a matching song in Shazam's catalog, the next step is to display the song information. Earlier, I described how catalogs are made up of signatures and metadata.

In the case of the Shazam catalog, this metadata is song information, such as the title and artist of the track, and other properties like its album art. In ShazamKit, the object that represents this information is called a media item.

For signatures that have just been matched, you are also returned extra information, such as whereabouts in the audio the match occurred and any difference in frequency between the matched and original audio. This is called a matched media item and is a subclass of media item. In the previous code example, I set the session delegate. When performing a match, we can rely on it to inform us of success, no match, or an error. It is possible for a signature to match multiple tracks. These are represented as an array of matched media items.

In our app code, we will take the first one.
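The three outcomes described above (success, no match, or an error) could be handled along these lines. This is only a sketch: the `MatchLogger` class and the `formatOffset` helper are hypothetical names, and printing stands in for whatever the app would actually do with the result.

```swift
import Foundation
import ShazamKit

/// Hypothetical display helper: formats seconds as "m:ss".
func formatOffset(_ seconds: TimeInterval) -> String {
    let total = max(0, Int(seconds.rounded(.down)))
    return String(format: "%d:%02d", total / 60, total % 60)
}

/// Hypothetical delegate that logs each outcome of a match attempt.
final class MatchLogger: NSObject, SHSessionDelegate {
    // Success: one or more matched media items were found.
    func session(_ session: SHSession, didFind match: SHMatch) {
        guard let item = match.mediaItems.first else { return }
        print("Matched \(item.title ?? "unknown title") by \(item.artist ?? "unknown artist")")
        // Extra information only available on matched media items.
        print("Offset into the reference audio: \(formatOffset(item.matchOffset))")
        print("Frequency skew: \(item.frequencySkew)")
    }

    // No match or an error: the error parameter distinguishes the two.
    func session(_ session: SHSession,
                 didNotFindMatchFor signature: SHSignature,
                 error: Error?) {
        if let error = error {
            print("Matching failed: \(error.localizedDescription)")
        } else {
            print("No match found")
        }
    }
}
```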

Now we can start setting some of the properties returned.

The album art was fetched using the artworkURL property. The name of the song and the performer were populated from the title and artist properties. As the song matched is available in the Apple Music catalog, and its details are being displayed, attribution needs to be given as set out in the Apple Music Identity Guidelines. In the app, we've added a button that launches the Apple Music URL returned by the media item.
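One way to gather the properties mentioned here before handing them to the UI is a small value type, sketched below. `SongSummary` is a hypothetical name and the fallback strings are assumptions; only the `title`, `artist`, `artworkURL`, and `appleMusicURL` properties are taken from ShazamKit.

```swift
import Foundation
import ShazamKit

/// Hypothetical view model that collects the fields the app displays.
struct SongSummary {
    let title: String
    let artist: String
    let artworkURL: URL?      // album art to load into an image view
    let appleMusicURL: URL?   // target for the Apple Music attribution button

    init(item: SHMediaItem) {
        // Media item properties are optional, so provide display fallbacks.
        title = item.title ?? "Unknown title"
        artist = item.artist ?? "Unknown artist"
        artworkURL = item.artworkURL
        appleMusicURL = item.appleMusicURL
    }
}
```

In a UIKit app, the button handler could pass `appleMusicURL` to `UIApplication.shared.open(_:)` when the URL is present.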

A customer that has recognized a song in your app may wish to save this in their Shazam library.

The Shazam library is accessible from the Shazam app if installed, or by long-pressing on the Music Recognition Control Center module, and it is synced across devices. Let's try this out. First, I'm going to long press on the Music Recognition Control Center module. It shows that I've already got a few songs in there.

Next, I'll tap the plus button in the app to add the matched song to that list. Now I'll reopen my library and check it's been added. Great, it's in there, and the name of my app shows up as the source of the match.

And here's what the code looks like. SHMediaLibrary offers a default instance that corresponds to a customer's Shazam library. This is stored end-to-end encrypted and is only available on devices that have enabled two-factor authentication. The library only accepts items that correspond to a song in the Shazam catalog.

There is no special permission required to write to the Shazam library, but we strongly recommend you avoid storing content in it without making customers aware.

All songs saved in the library will be attributed to the app that added them. There are many more properties exposed on the media item that we've made available for you to explore, such as matchOffset, which shows whereabouts in the song a match was made. In addition, the new MusicKit framework provides strongly typed objects describing a song and its relationships. We've made this available to you in Swift as the songs property. For more details, you can check out the "Meet MusicKit for Swift" session. So now we have the complete picture.

We sent an audio signature to match against the Shazam catalog using a session to manage that process. The session then returned song information in the form of a matched media item via its delegate. We also offered a customer of our app the option to add it to their Shazam library. Now I'll hand back to Marl, who'll take you through some best practices.

Over to you, Marl.

Thank you, James.

Now that you know what ShazamKit is, how it works, and how to apply it, you're ready to start building. As you get started implementing with ShazamKit, consider these best practices. First, if you're matching against real-time audio, say, from a microphone, then use matchStreamingBuffer. It's simpler and handles lots of the underlying logic for generating well-scoped signatures. Next, if your use case requires the microphone, stop recording as soon as you have the result you need.

That way, you'll avoid consuming unnecessary resources or launching the microphone for longer than customers intend.

Finally, before writing to a customer's library, we strongly recommend providing the option for the customer to opt in and clarifying this behavior right from the start.
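The opt-in recommendation could be enforced with a small guard in front of the library call, as in this sketch. How the customer's choice is collected and stored is left to the app; the `customerOptedIn` parameter, the function name, and the error type are hypothetical.

```swift
import ShazamKit

/// Hypothetical error reported when the customer has not opted in.
enum LibrarySaveError: Error {
    case notOptedIn
}

/// Adds `item` to the customer's Shazam library only if they opted in.
func addToShazamLibrary(_ item: SHMediaItem,
                        customerOptedIn: Bool,
                        completion: @escaping (Error?) -> Void) {
    guard customerOptedIn else {
        // Never write to the library without the customer's consent.
        completion(LibrarySaveError.notOptedIn)
        return
    }
    SHMediaLibrary.default.add([item], completionHandler: completion)
}
```

Keeping the consent check next to the write makes it harder to add a second call site that skips it.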

I hope you enjoyed getting to know ShazamKit.

We can't wait to see the magical experiences and features you create.

All of the information we discussed and links to documentation are attached to this session, so you're ready to get going. And don't forget to try the code-along with custom catalog recognition. Thanks for joining. Enjoy the rest of WWDC21. [music]


9:11 - Matching signatures using SHSession

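    // Matching signatures using SHSession
    import ShazamKit

    // The default initializer matches against the Shazam catalog.
    let session = SHSession()
    session.delegate = self

    // Convert the audio (an AVAudioPCMBuffer named `buffer`) into a signature.
    let signatureGenerator = SHSignatureGenerator()
    try signatureGenerator.append(buffer, at: nil)
    let signature = signatureGenerator.signature()

    // Results are reported through the session delegate.
    session.match(signature)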

10:45 - Receive matches via session delegate

    // Receiving matches via the session delegate
    extension SongResultViewController: SHSessionDelegate {
        public func session(_ session: SHSession, didFind match: SHMatch) {
            guard let matchedMediaItem = match.mediaItems.first else {
                return
            }
            DispatchQueue.main.async {
                self.songView.titleLabel.text = matchedMediaItem.title
                self.songView.artistLabel.text = matchedMediaItem.artist
            }
        }
    }

12:24 - Add to Shazam library

    // Adding to a customer’s library
    guard let matchedMediaItem = match.mediaItems.first else {
        return
    }
    SHMediaLibrary.default.add([matchedMediaItem]) { error in
        if error != nil {
            // handle the error
        }
    }