
What’s new in AVFoundation

Discover the latest updates to AVFoundation, Apple's framework for inspecting, playing, and authoring audiovisual presentations. We'll explore how you can use AVFoundation to query attributes of audiovisual assets, further customize your custom video compositions with timed metadata, and author caption files.

♪ Bass music playing ♪

Adam Sonnanstine: Hello! My name is Adam, and I'm here today to show you what's new in AVFoundation.

We have three new features to discuss today.

We're going to spend most of our time talking about what's new in the world of AVAsset inspection, and then we'll give a quick introduction to two other features: video compositing with metadata and caption file authoring.

So without further ado, let's jump into our first topic, which is AVAsset async inspection.

But first, a little bit of background, starting with a refresher on AVAsset.

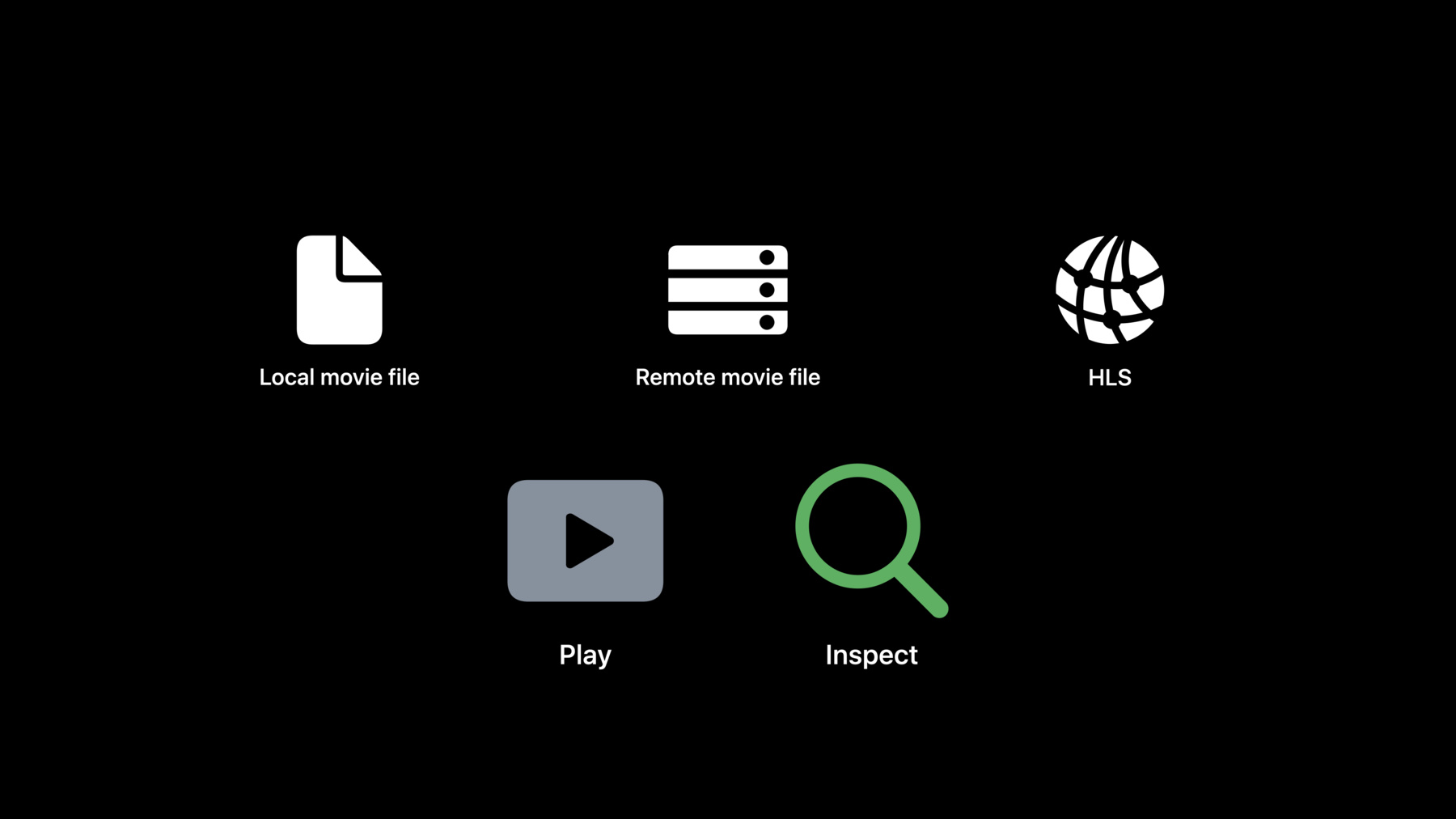

AVAsset is AVFoundation's core model object for representing things like movie files stored on the user's device; movie files stored elsewhere, such as a remote server; and other forms of audiovisual content, such as HTTP Live Streams and compositions.

And when you have an asset, you often want to play it, but just as often, you're going to want to inspect it.

You want to ask it questions like, what's its duration or what are the formats of audio and video it contains? And that's what we're really going to be talking about in this topic: asset inspection.

And whenever you're inspecting an asset, there are two important things to keep in mind.

The first is that asset inspection happens on demand.

This is mainly because movie files can be quite large.

A feature-length film could be several gigabytes in size.

You wouldn't want the asset to eagerly download the entire file, just in case you ask for its duration later.

Instead, the asset waits until you ask it to load a property value, then it downloads just the information it needs to give you that value.

The second thing to keep in mind is that asset inspection is an asynchronous process.

This is really important because network I/O can take some time.

If the asset is stored across the network, you wouldn't want your app's main thread to be blocked while AVAsset issues a synchronous network request.

Instead, AVAsset will asynchronously deliver the result when it's ready.

With these two things in mind, we have a new API for inspecting asset properties, and it looks a little bit like this.

The main thing to notice is this new load method, which takes in a property identifier -- in this case .duration -- in order for you to tell it which property value to load.

Each property identifier is associated with a result type at compile time, which determines the return type of the load method.

In this case, the duration is a CMTime, so the result is a CMTime.

One thing you may not have seen before is this await keyword.

This is a new feature in Swift, and it's used to mark, at the call site, that the load method is asynchronous.

For all the details on async/await and the broader concurrency effort in Swift, I encourage you to check out the session called "Meet async/await in Swift." For now, as a quick way of understanding how to use our new property loading method, I like to think of the await keyword as dividing the calling function into two parts.

First, there's the part that happens before the asynchronous operation begins.

In this case, we create an asset and ask it to load its duration.

At this point, the asset goes off and does the I/O and parsing necessary to determine its duration and we await its result.

While we're waiting, the calling function is suspended, which means the code written after the await doesn't execute right away.

However, the thread we were running on isn't blocked.

Instead, it's free to do more work while we're waiting.

Once the asynchronous duration loading has finished, then the second half of the function is scheduled to be run.

In this case, if the duration loading was successful, we store the duration into a local constant and send it off to another function.

Or, if the operation failed, an error will be thrown once the calling function resumes.
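The two halves of the calling function, and the thrown error on failure, can be seen in a minimal sketch like the one below. It assumes iOS 15 / macOS 12 or later, and `durationInSeconds(of:)` is a hypothetical helper name, not part of AVFoundation.

```swift
import AVFoundation

// Hypothetical helper: loads a movie's duration and returns it in seconds,
// or nil if loading failed.
@available(macOS 12.0, iOS 15.0, *)
func durationInSeconds(of movieURL: URL) async -> Double? {
    let asset = AVURLAsset(url: movieURL)
    do {
        // The function suspends here; the thread it was running on is not blocked.
        let duration: CMTime = try await asset.load(.duration)
        // Runs only after the asynchronous load has finished successfully.
        return duration.seconds
    } catch {
        // A failed load surfaces as a thrown error when the function resumes.
        print("Could not load duration: \(error)")
        return nil
    }
}
```

Catching the error inside the helper keeps callers simple; if they need to react to specific failures, marking the helper `throws` and letting the error propagate is the alternative.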

So that's the basics of loading a property value asynchronously.

You can also load the values of multiple properties at once, and you do this simply by passing in more than one property identifier to the load method.

In this case, we're loading both the duration and the tracks at the same time.

This is not only convenient, but it can also be more efficient.

If the asset knows all the properties you're interested in, it can batch up the work required to load their values.

The result of loading multiple property values is a tuple, with the loaded values in the same order you used for the property identifiers.

Just like loading a single property value, this is type safe.

In this case, the first element of the result tuple is a CMTime and the second element is an array of AVAssetTracks.
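A short sketch of a batched load follows, assuming iOS 15 / macOS 12 or later. `AssetSummary` and `summarize(_:)` are hypothetical names used only for illustration; `.isPlayable` is one more AVAsset property that can be loaded this way.

```swift
import AVFoundation

// Hypothetical value type summarizing a few asset properties.
struct AssetSummary {
    let seconds: Double
    let trackCount: Int
    let isPlayable: Bool
}

@available(macOS 12.0, iOS 15.0, *)
func summarize(_ asset: AVAsset) async throws -> AssetSummary {
    // One call, three properties, so the asset can batch the underlying work.
    // The tuple is typed (CMTime, [AVAssetTrack], Bool), in identifier order.
    let (duration, tracks, playable) = try await asset.load(.duration, .tracks, .isPlayable)
    return AssetSummary(seconds: duration.seconds,
                        trackCount: tracks.count,
                        isPlayable: playable)
}
```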

And of course, just like with loading a single value, this is an asynchronous operation.

In addition to asynchronously loading property values, you can check the status of a property at any time, without waiting for its value to load, using the new status(of:) method.

You pass in the same property identifier that you use for the load method, and this'll return an enum with four possible cases.

Each property starts off as .notYetLoaded.

Remember that asset inspection happens on demand, so until you ask to load a property value, the asset won't have done any work to load it.

If you happen to check the status while the loading is in progress, you'll get the .loading case.

Or, if the property is already loaded, you'll get the .loaded case, which comes bundled with the value that was loaded as an associated value.

Finally, if a failure occurred -- perhaps because the network went down -- you'll get the .failed case, which comes bundled with an error to describe what went wrong.

Note that this'll be the same error that was thrown by the invocation of the load method that initiated the failed loading request.
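All four cases can be handled synchronously, as in the sketch below (iOS 15 / macOS 12 or later assumed). `describeDurationStatus(of:)` is a hypothetical helper; for a freshly created asset it should report the initial `.notYetLoaded` state, because no loading has been requested yet.

```swift
import AVFoundation

// Hypothetical helper: turns the status of .duration into a readable string.
@available(macOS 12.0, iOS 15.0, *)
func describeDurationStatus(of asset: AVAsset) -> String {
    switch asset.status(of: .duration) {
    case .notYetLoaded:
        return "not yet loaded"   // Nothing has asked for the value yet.
    case .loading:
        return "loading"          // A load is in progress.
    case .loaded(let duration):
        return "loaded: \(duration.seconds) s"   // The value rides along.
    case .failed(let error):
        return "failed: \(error.localizedDescription)"   // Same error load threw.
    }
}
```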

So that's the new API for loading asynchronous properties and checking their status.

AVAsset has quite a few properties whose values can be loaded asynchronously.

Most of these vend a self-contained value, but the .tracks and .metadata properties vend more complex objects you can use to descend into the hierarchical structure of the asset.

In the case of the .tracks property, you'll get an array of AVAssetTracks.

An AVAssetTrack has its own collection of properties whose values can be loaded asynchronously using that same load method.

Similarly, the .metadata property gives you an array of AVMetadataItems, and several AVMetadataItem properties can also be loaded asynchronously using the load method.
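Descending from the asset into its tracks and metadata items might look like the sketch below, assuming iOS 15 / macOS 12 or later. `printStructure(of:)` is a hypothetical name, and `.timeRange`, `.naturalSize`, and `.stringValue` were picked as representative track and metadata-item properties.

```swift
import AVFoundation

@available(macOS 12.0, iOS 15.0, *)
func printStructure(of asset: AVAsset) async throws {
    // Asset level: .tracks and .metadata vend objects you can descend into.
    let (tracks, metadata) = try await asset.load(.tracks, .metadata)

    for track in tracks {
        // Track level: AVAssetTrack uses the same load method.
        let (timeRange, naturalSize) = try await track.load(.timeRange, .naturalSize)
        print("Track \(track.trackID) (\(track.mediaType.rawValue)): \(timeRange.duration.seconds) s, \(naturalSize.width)x\(naturalSize.height)")
    }

    for item in metadata {
        // Item level: AVMetadataItem properties load the same way.
        let text = try await item.load(.stringValue)
        print("\(item.identifier?.rawValue ?? "unknown identifier"): \(text ?? "<not a string>")")
    }
}
```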

The last bit of new API in this area is a collection of asynchronous methods that you can use to get at specific subsets of certain property values.

So instead of loading all the tracks, for example, you can use one of the first three methods, loadTrack(withTrackID:), loadTracks(withMediaType:), or loadTracks(withMediaCharacteristic:), to load just some of the tracks, such as only the audio tracks.

There are several new methods like this on both AVAsset and AVAssetTrack.
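Two of the filtering methods are used in the sketch below, assuming iOS 15 / macOS 12 or later. Both helper names are hypothetical; the point is that the filtering happens in the asynchronous call instead of loading `.tracks` and filtering by hand.

```swift
import AVFoundation

// Hypothetical helper: counts tracks with the .legible characteristic
// (for example, subtitles or captions).
@available(macOS 12.0, iOS 15.0, *)
func legibleTrackCount(of asset: AVAsset) async throws -> Int {
    let legibleTracks = try await asset.loadTracks(withMediaCharacteristic: .legible)
    return legibleTracks.count
}

// Hypothetical helper: returns the first audio track, if there is one.
@available(macOS 12.0, iOS 15.0, *)
func firstAudioTrack(of asset: AVAsset) async throws -> AVAssetTrack? {
    try await asset.loadTracks(withMediaType: .audio).first
}
```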

So that's all the new API we have for inspecting assets asynchronously.

But at this point, I have a small confession to make.

None of this functionality is actually new.

The APIs are new, but these classes have always had the ability to load their property values asynchronously.

It's just that, with the old APIs, you would've had to write code more like this.

It was a three-step process.

You first have to call the loadValuesAsynchronously method, giving it strings to tell it which properties to load.

Then you need to make sure that each of the properties actually did successfully load and didn't fail.

Then, once you've gotten that far, you can fetch the loaded value either by querying the corresponding synchronous property or by calling one of the synchronous filtering methods.

This is not only verbose and repetitive, it's also easy to misuse.

For example, it's very easy to forget to do these essential loading and status-checking steps.

What you're left with are these synchronous properties and methods that can be called at any time, but if you call them without first loading the property values, you'll end up doing blocking I/O.

If you do this on your main thread, this means that your app can end up hanging at unpredictable times.

So because the new APIs are easier to use and also eliminate these common misuses, we plan to deprecate the old synchronous APIs for Swift clients in a future release.

This is an excellent time to move to the new asynchronous versions of these interfaces, and to help you do that we've prepared a short migration guide.

So, if you're doing that trifecta of loading the value, checking its status, and then grabbing a synchronous property, now you can simply call the load method and do that all in one asynchronous step.

Similarly, if you're doing that three-step process but using a synchronous filtering method instead of a property, you can now call the asynchronous equivalent of that filtering method and do that in one step.

If you're switching over the status of a property using the old statusOfValue(forKey:error:) method and then grabbing the synchronous property value when you see that you're in the .loaded case, now you can take advantage of the fact that the .loaded case of the new status enum comes bundled with the loaded value.

If your app is doing something a little more interesting, like loading the value of a property in one part of the code and then fetching the loaded value in a different part of the code, there are a couple of ways you could do this with the new interface.

I recommend just calling the load method again.

This is the easiest and safest way to do it, and if the property has already been loaded, this won't duplicate the work that's already been done.

Instead, it'll just return a cached value.
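The "just call load again" pattern might look like the sketch below, assuming iOS 15 / macOS 12 or later. `PlayerModel`, `prefetch()`, and `videoTrackCount()` are hypothetical names: one part of the code starts the load early, and another part later calls `load` again and gets the cached value if loading has already finished.

```swift
import AVFoundation

// Hypothetical model type used only for illustration.
@available(macOS 12.0, iOS 15.0, *)
final class PlayerModel {
    let asset: AVAsset

    init(url: URL) {
        asset = AVURLAsset(url: url)
    }

    // Called early, for example when the item first appears on screen.
    func prefetch() async {
        // Errors are deliberately ignored here; videoTrackCount() handles them.
        _ = try? await asset.load(.tracks)
    }

    // Called later, from a different part of the code.
    func videoTrackCount() async throws -> Int {
        // If .tracks is already loaded, this returns the cached value
        // instead of redoing the I/O.
        let tracks = try await asset.load(.tracks)
        return tracks.filter { $0.mediaType == .video }.count
    }
}
```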

However, there's one caveat: because load is an async method, it can only be called from an async context.

So if you really need to get the value of the property from a pure synchronous context, you can do something like get the status of the property and assert that it's loaded in order to grab the value of the property synchronously.

Still, you have to be careful doing this, because a property's status can change to .failed even after it has already been loaded.
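A synchronous peek of this kind could be wrapped as in the sketch below (iOS 15 / macOS 12 or later assumed). `tracksIfLoaded(_:)` is a hypothetical helper that returns nil instead of asserting, which also covers the case where the status has changed to `.failed`.

```swift
import AVFoundation

// Hypothetical helper: returns the tracks only if they are already loaded,
// without suspending and without triggering any I/O.
@available(macOS 12.0, iOS 15.0, *)
func tracksIfLoaded(_ asset: AVAsset) -> [AVAssetTrack]? {
    guard case .loaded(let tracks) = asset.status(of: .tracks) else {
        // .notYetLoaded, .loading, or .failed: callers must handle nil.
        return nil
    }
    return tracks
}
```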

Finally, if you're skipping the loading and status-checking steps and just relying on the fact that the synchronous properties and methods block until the result is available, we're actually not providing a replacement for this.

This has never been the recommended way to use the API, and so we've always discouraged it.

We designed the new property-loading APIs to be just about as easy to use as fetching a simple property, so migrating to the new APIs should be straightforward.

And with that, that's all for our first topic.

I'm really excited about our new way to inspect assets, using Swift's new async features, and I hope you'll enjoy using them as much as I have.

Now let's move on to the first of our two shorter topics: video compositing with metadata.

Here we're talking about video compositing, which is the process of taking multiple video tracks and composing them into a single stream of video frames.

And in particular, we have an enhancement for custom video compositors, which is where you provide the code that does the compositing.

New this year, you can get per-frame metadata delivered to you in your custom compositor's frame composition callback.

As an example, let's say you have a sequence of GPS data, and that data is time-stamped and synchronized with your video, and you want to use that GPS data in order to influence how your frames are composed together.

You can do that now, and the first step is to write the GPS data to a timed metadata track in your source movie.

In order to do this with AVAssetWriter, check out the existing class, AVAssetWriterInputMetadataAdaptor.
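A sketch of preparing GPS samples for AVAssetWriterInputMetadataAdaptor follows. It assumes each GPS fix is stored as an ISO 6709 string under the QuickTime location identifier; `makeLocationGroup` and `makeMetadataAdaptor` are hypothetical helper names, and deriving the writer input's format hint with `copyFormatDescription()` is one possible approach, not necessarily the one used in the session.

```swift
import AVFoundation
import CoreMedia

// Hypothetical helper: wraps one ISO 6709 location string
// (for example "+37.3349-122.0090/") in a timed metadata group.
func makeLocationGroup(iso6709: String, timeRange: CMTimeRange) -> AVTimedMetadataGroup {
    let item = AVMutableMetadataItem()
    item.identifier = .quickTimeMetadataLocationISO6709
    item.dataType = kCMMetadataDataType_QuickTimeMetadataLocation_ISO6709 as String
    item.value = iso6709 as NSString
    return AVTimedMetadataGroup(items: [item], timeRange: timeRange)
}

// Hypothetical helper: creates a metadata writer input whose format matches
// `sampleGroup`, plus the adaptor that appends AVTimedMetadataGroups to it.
func makeMetadataAdaptor(matching sampleGroup: AVTimedMetadataGroup) -> AVAssetWriterInputMetadataAdaptor? {
    guard let format = sampleGroup.copyFormatDescription() else { return nil }
    let input = AVAssetWriterInput(mediaType: .metadata,
                                   outputSettings: nil,
                                   sourceFormatHint: format)
    return AVAssetWriterInputMetadataAdaptor(assetWriterInput: input)
}
```

In use, you would add `adaptor.assetWriterInput` to your AVAssetWriter and call `adaptor.append(_:)` with one group per GPS fix, with time ranges aligned to the video.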

Now let's take a look at the new API.

Let's say you're starting with a source movie that has a certain collection of tracks.

Perhaps it has an audio track, two video tracks, and three timed metadata tracks.

But let's say that tracks four and five contain metadata that's useful for your video compositing, but track six is unrelated.

You have two setup steps to perform, and the first is to use the new sourceSampleDataTrackIDs property to tell your video composition object the IDs of all the timed metadata tracks that are relevant for the entire video composition.

Once you've done that, the second step is to take each of your video composition instructions and do something similar, but this time you set the requiredSourceSampleDataTrackIDs property to tell it the track ID (or IDs) that are relevant for that particular instruction.

It's important that you do both of these setup steps or you simply won't get any metadata in your composition callback.
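Both setup steps can be derived from a single per-instruction list, as in the sketch below (iOS 15 / macOS 12 or later assumed). `attachMetadataTracks(to:instructions:)` is a hypothetical helper: the composition-wide list is computed as the union of what the instructions need, so the two steps cannot drift apart.

```swift
import AVFoundation

// Hypothetical helper: each entry pairs an instruction with the metadata
// track IDs that instruction needs.
@available(macOS 12.0, iOS 15.0, *)
func attachMetadataTracks(
    to composition: AVMutableVideoComposition,
    instructions: [(instruction: AVMutableVideoCompositionInstruction, trackIDs: [CMPersistentTrackID])]
) {
    // Step 1: every relevant metadata track ID, for the whole composition.
    let allIDs = Set(instructions.flatMap { $0.trackIDs }).sorted()
    composition.sourceSampleDataTrackIDs = allIDs.map { NSNumber(value: $0) }

    // Step 2: the subset each individual instruction needs.
    for entry in instructions {
        entry.instruction.requiredSourceSampleDataTrackIDs = entry.trackIDs.map { NSNumber(value: $0) }
    }
}
```

With the example movie above, passing `[(instruction1, [4]), (instruction2, [4, 5])]` leaves track 6 out of both lists.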

Now let's move over to the callback itself.

When you get your asynchronous video composition request object in your callback, there are two new APIs that you use in order to get the metadata for your video composition.

The first is the sourceSampleDataTrackIDs property, which returns the track IDs for the metadata tracks that are relevant to that request.

Then for each of the track IDs, you can use the sourceTimedMetadata(byTrackID:) method in order to get the current timed metadata group for that track.

Now, AVTimedMetadataGroup is a high-level representation of the metadata, with the value parsed into a string, date, or other high-level object.

If you'd rather work with the raw bytes of the metadata, you can use the sourceSampleBuffer(byTrackID:) method to get a CMSampleBuffer instead of an AVTimedMetadataGroup.

Once you have the metadata in hand, you can use the metadata along with your source video frames to generate your output video frame and finish off the request.
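Inside the callback, the metadata for a request could be gathered as in the sketch below, assuming iOS 15 / macOS 12 or later. `timedMetadataByTrack(in:)` is a hypothetical helper name. Note that `sourceSampleDataTrackIDs` holds NSNumbers, while the lookup method takes a `CMPersistentTrackID`, so each ID is converted first.

```swift
import AVFoundation

// Hypothetical helper: collects the current timed metadata group for each
// metadata track relevant to this composition request.
@available(macOS 12.0, iOS 15.0, *)
func timedMetadataByTrack(in request: AVAsynchronousVideoCompositionRequest)
    -> [CMPersistentTrackID: AVTimedMetadataGroup] {
    var result: [CMPersistentTrackID: AVTimedMetadataGroup] = [:]
    for number in request.sourceSampleDataTrackIDs {
        let trackID = number.int32Value   // NSNumber -> CMPersistentTrackID
        if let group = request.sourceTimedMetadata(byTrackID: trackID) {
            result[trackID] = group
        }
    }
    return result
}
```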

So that's all it takes to get metadata into your custom video compositor callback so you can do more interesting things with your video compositions.

Now onto our final topic, which is caption file authoring.

New this year for macOS, AVFoundation is adding support for two file formats.

First, we have iTunes Timed Text, or .itt files, which contain subtitles.

The other file format is Scenarist Closed Captions -- or .scc files -- which contain closed captions.

AVFoundation is adding support for authoring these two file formats, ingesting captions from these types of files, and also for previewing captions at runtime to see what they'll look like during playback.

On the authoring side, we have some new APIs, starting with AVCaption, which is the model object that represents a single caption.

It has properties for things like the text, position, styling, and other attributes of a single caption.

You can create AVCaptions yourself and use an AVAssetWriterInputCaptionAdaptor in order to write them to one of these two file formats.

In addition, we have a new validation service in the AVCaptionConversionValidator class, which helps you make sure the captions you're writing are actually compatible with the file format you've chosen.

As an example of why this is important, consider .scc files.

They contain CEA-608 captions, which is a format that has very specific limitations about how many captions you can have in a given amount of time, all the way down to having a fixed bit budget for the data representing the individual characters and their styling.

So the validator will help you not only ensure that your stream of captions is compatible with the file format, it'll also suggest tweaks you can make to your captions, such as adjusting their time stamps, in order to make them compatible.

The new API for ingesting captions is AVAssetReaderOutputCaptionAdaptor, which allows you to take one of these files and read in AVCaption objects from it.

Finally, we have an AVCaptionRenderer class, which allows you to take a single caption or a group of captions and render them to a CGContext in order to get a preview of what they'll look like during playback.
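A caption preview might be rendered as in the sketch below. It assumes macOS 12 or later and our reading of the SDK: `AVCaption(_:timeRange:)`, plus AVCaptionRenderer's `captions`, `bounds`, and `render(in:forTime:)`. Check these against the headers before relying on them. `renderCaptionPreview(text:at:)` and the 640x360 canvas are hypothetical choices.

```swift
import AVFoundation
import CoreGraphics

// Hypothetical helper: draws one caption into an offscreen bitmap and
// returns the result as a preview image.
@available(macOS 12.0, *)
func renderCaptionPreview(text: String, at time: CMTime) -> CGImage? {
    // A caption that is visible for the first five seconds.
    let caption = AVCaption(text, timeRange: CMTimeRange(start: .zero,
                                                         duration: CMTime(value: 5, timescale: 1)))
    let width = 640, height = 360
    guard let context = CGContext(data: nil,
                                  width: width,
                                  height: height,
                                  bitsPerComponent: 8,
                                  bytesPerRow: 0,
                                  space: CGColorSpaceCreateDeviceRGB(),
                                  bitmapInfo: CGImageAlphaInfo.premultipliedLast.rawValue) else {
        return nil
    }
    let renderer = AVCaptionRenderer()
    renderer.captions = [caption]
    renderer.bounds = CGRect(x: 0, y: 0, width: width, height: height)
    // Draws whichever captions are active at `time` into the context.
    renderer.render(in: context, forTime: time)
    return context.makeImage()
}
```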

So that's just the tip of the iceberg for our new caption file authoring APIs.

If you're interested in adopting them, we encourage you to get in touch with us -- either in the forums or in the conference labs -- and we can help answer any questions that you have.

And that was our final topic, so let's wrap up.

Our big topic for the day was inspecting AVAsset properties, the importance of doing so on demand and asynchronously, the new APIs in this area, and some tips for migrating from the old APIs.

We then talked about using timed metadata to further customize your custom video compositions.

Finally, I gave a brief introduction to caption file authoring and the new APIs in that area.

That's all for today.

Thank you very much for watching and enjoy WWDC21.

♪


2:16 - AVAsset property loading

```swift
func inspectAsset() async throws {
    let asset = AVAsset(url: movieURL)
    let duration = try await asset.load(.duration)
    myFunction(thatUses: duration)
}
```

4:02 - Load multiple properties

```swift
func inspectAsset() async throws {
    let asset = AVAsset(url: movieURL)
    let (duration, tracks) = try await asset.load(.duration, .tracks)
    myFunction(thatUses: duration, and: tracks)
}
```

4:52 - Check status

```swift
switch asset.status(of: .duration) {
case .notYetLoaded:
    // This is the initial state after creating an asset.
    break
case .loading:
    // This means the asset is actively doing work.
    break
case .loaded(let duration):
    // Use the asset's property value (duration).
    break
case .failed(let error):
    // Handle the error.
    break
}
```

6:32 - Async filtering methods

```swift
let asset: AVAsset
let trk1 = try await asset.loadTrack(withTrackID: 1)
let atrs = try await asset.loadTracks(withMediaType: .audio)
let ltrs = try await asset.loadTracks(withMediaCharacteristic: .legible)
let qtmd = try await asset.loadMetadata(for: .quickTimeMetadata)
let chcl = try await asset.loadChapterMetadataGroups(withTitleLocale: .current)
let chpl = try await asset.loadChapterMetadataGroups(bestMatchingPreferredLanguages: ["en-US"])
let amsg = try await asset.loadMediaSelectionGroup(for: .audible)

let track: AVAssetTrack
let seg0 = try await track.loadSegment(forTrackTime: .zero)
let spts = try await track.loadSamplePresentationTime(forTrackTime: .zero)
let ismd = try await track.loadMetadata(for: .isoUserData)
let fbtr = try await track.loadAssociatedTracks(ofType: .audioFallback)
```

7:16 - Async loading: Old API

```swift
asset.loadValuesAsynchronously(forKeys: ["duration", "tracks"]) {
    var error: NSError?
    guard asset.statusOfValue(forKey: "duration", error: &error) == .loaded else { ... }
    guard asset.statusOfValue(forKey: "tracks", error: &error) == .loaded else { ... }
    let duration = asset.duration
    let audioTracks = asset.tracks(withMediaType: .audio)
    // Use duration and audioTracks.
}
```

8:09 - This is the equivalent using the new API:

```swift
let duration = try await asset.load(.duration)
let audioTracks = try await asset.loadTracks(withMediaType: .audio)
// Use duration and audioTracks.
```

8:36 - load(_:)

```swift
let tracks = try await asset.load(.tracks)
```

8:51 - Async filtering method

```swift
let audioTracks = try await asset.loadTracks(withMediaType: .audio)
```

8:58 - status(of:)

```swift
switch asset.status(of: .tracks) {
case .loaded(let tracks):
    // Use tracks.
    break
default:
    break
}
```

9:18 - load(_:) again (returns cached value)

```swift
let tracks = try await asset.load(.tracks)
```

9:49 - Assert status is .loaded()

```swift
guard case .loaded(let tracks) = asset.status(of: .tracks) else { ... }
```

11:49 - Custom video composition with metadata: Setup

```swift
/*
 Source movie:
 - Track 1: Audio
 - Track 2: Video
 - Track 3: Video
 - Track 4: Metadata
 - Track 5: Metadata
 - Track 6: Metadata
 */

// Tell AVMutableVideoComposition about all the relevant metadata tracks.
videoComposition.sourceSampleDataTrackIDs = [4, 5]

// For each AVMutableVideoCompositionInstruction, specify the metadata track ID(s) to include.
instruction1.requiredSourceSampleDataTrackIDs = [4]
instruction2.requiredSourceSampleDataTrackIDs = [4, 5]
```

12:44 - Custom video composition with metadata: Compositing

```swift
// This is an implementation of an AVVideoCompositing method:
func startRequest(_ request: AVAsynchronousVideoCompositionRequest) {
    for trackID in request.sourceSampleDataTrackIDs {
        // sourceSampleDataTrackIDs holds NSNumbers; the lookup takes a CMPersistentTrackID.
        let metadata: AVTimedMetadataGroup? = request.sourceTimedMetadata(byTrackID: trackID.int32Value)
        // To get CMSampleBuffers instead, use sourceSampleBuffer(byTrackID:).
    }
    // Compose input video frames, using metadata, here.
    request.finish(withComposedVideoFrame: composedFrame)
}
```
