
Enhance your app with Metal ray tracing

Achieve photorealistic 3D scenes in your apps and games through ray tracing, a core part of the Metal graphics framework and the Metal Shading Language. We'll explore the latest improvements in implementing ray tracing and take you through upgrades to the production rendering process. Discover Metal APIs that help you create more detailed scenes, integrate natively supported content with motion, and more.

Resources

- Rendering reflections in real time using ray tracing

- Applying realistic material and lighting effects to entities

- Accelerating ray tracing using Metal

- Managing groups of resources with argument buffers

- Metal

- Metal Shading Language Specification


♪ Bass music playing ♪ ♪ Juan Rodriguez Cuellar: Hello, welcome to WWDC.

My name is Juan Rodriguez Cuellar, and I'm a GPU compiler engineer at Apple.

In this session, we’re going to talk about the brand-new features we’ve added this year to enhance our Metal ray tracing API.

But first, let’s do a quick recap about ray tracing.

Ray-tracing applications are based on tracing the paths that rays take as they interact with a scene.

Ray tracing is applied in many domains, such as audio, physics simulation, and AI, but one of its main applications is photorealistic rendering.

In rendering applications, ray tracing is used to model individual rays of light, which allow us to simulate effects such as reflections, soft shadows, and indirect lighting.

That is just a general definition of ray tracing.

Let’s talk about Metal’s approach to it.

We start with a compute kernel.

In our kernel, we generate rays, which are emitted into the scene.

We then test those rays for intersections against the geometry in the scene with an intersector and an acceleration structure.

Each intersection point represents light bouncing off a surface; how much light bounces and in what direction determines what the object looks like.

We then compute a color for each intersection and update the image.

This process is called shading, and it can also generate additional rays, which are in turn tested for intersection.

We repeat the process as many times as we’d like to simulate light bouncing around the scene.
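The loop just described can be sketched on the CPU in C++, the language the Metal Shading Language is based on. This is not Metal code. A single analytic sphere stands in for the acceleration structure, a mirror bounce stands in for random direction sampling, and every name here (`Vec3`, `intersect`, `tracePath`) is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

// Minimal 3-component vector with just the operations the loop needs.
struct Vec3 { float x, y, z; };

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 normalize(Vec3 v) { return v * (1.0f / std::sqrt(dot(v, v))); }

struct Ray { Vec3 origin, direction; };       // direction is assumed normalized
struct Sphere { Vec3 center; float radius; }; // stands in for the acceleration structure

// Intersection step: distance to the nearest hit in front of the ray, if any.
std::optional<float> intersect(const Ray& ray, const Sphere& s) {
    Vec3 oc = ray.origin - s.center;
    float b = dot(oc, ray.direction);
    float c = dot(oc, oc) - s.radius * s.radius;
    float disc = b * b - c;
    if (disc < 0.0f) return std::nullopt;     // ray misses the sphere
    float t = -b - std::sqrt(disc);           // nearer root first
    if (t < 1e-4f) t = -b + std::sqrt(disc);  // origin inside the sphere
    if (t < 1e-4f) return std::nullopt;       // sphere is behind the ray
    return t;
}

// Shading step plus bounces: add a Lambert term at each hit, then continue the
// path along the mirror direction (a stand-in for random direction sampling).
float tracePath(Ray ray, const Sphere& sphere, Vec3 lightDir, int maxBounces) {
    assert(maxBounces >= 0);
    float radiance = 0.0f;
    float throughput = 1.0f;
    for (int bounce = 0; bounce < maxBounces; ++bounce) {
        std::optional<float> t = intersect(ray, sphere);
        if (!t) break;                                  // a miss ends the path
        Vec3 hit = ray.origin + ray.direction * *t;
        Vec3 n = normalize(hit - sphere.center);
        radiance += throughput * std::fmax(0.0f, dot(n, lightDir));
        throughput *= 0.5f;                             // assumed 50% albedo
        Vec3 reflected = ray.direction - n * (2.0f * dot(ray.direction, n));
        ray = {hit + n * 1e-3f, normalize(reflected)};  // offset avoids self-hits
    }
    return radiance;
}
```

For example, a ray starting at (0, 0, -5) and pointing along +z hits a unit sphere at the origin at distance 4. With the light direction facing back toward the ray, that hit contributes a radiance of 1, and the reflected ray then leaves the scene.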

This year, we focused our new features around three major areas.

First, I will talk about how we’ve added ray-tracing support to our render pipelines, which allows us to mix ray tracing with our rendering.

Then I will introduce the new features that focus on usability and portability.

These features will make the Metal ray tracing API easier to use.

Finally, I will cover the production rendering features we’ve added this year that will help you create more realistic content.

Let's start with ray tracing from render pipelines.

Let’s consider the basic case of a render that has a single render pass.

Our new support for ray tracing from render pipelines makes it easy to add ray tracing to this render.

Without this support, however, adding ray tracing to this render with last year's Metal ray tracing API requires a compute pass.

Let’s just start by adding it after rendering to augment the rendered image.

Adding this extra compute pass means writing more output to memory for the compute pass to use for ray tracing.

Now, what if we wanted to use ray tracing in the middle of our render pass to calculate a value such as shadowing per pixel? This means that we need to split our rendering and introduce a compute pass.

Thinking more about what this means, we need to write out pixel positions and normals to memory as inputs to the ray tracing, and then read the intersection results back, possibly several times.

But with the new support for ray tracing from render stages, we never need to leave our render pass, and we only write our final outputs to memory.

Let's see how we use our new API.

Preparing our render pipeline for ray tracing is similar to preparing a compute pipeline.

You start by building your acceleration structure and defining custom intersection functions.

To support custom intersection, we need an intersection function table and we need to fill it with intersection functions.

This part has some differences compared to last year’s API.

Let’s walk through how we can do that.

Let’s consider some simple intersection functions.

I have some functions here that will allow us to analytically intersect objects such as a sphere, a cone, or a torus.

When we create our pipeline, we add these functions as linked functions that we may call.

In this case, we are adding them to the fragment stage of the pipeline.

To use the functions, we need to create an intersection function table from the pipeline state and stage.

Once we have the table, we can create function handles from the pipeline state and stage and populate the table.

Specifying the functions for the fragment stage reuses the linkedFunctions object that we introduced last year.

Each stage has its own set of linkedFunctions on the render pipeline descriptor.

Creating an intersection function table is much the same as when done for the compute pipeline.

The only change is the addition of the stage argument.

To populate the table, we create the function handle.

Again, the handle is specific to the stage, so we need to specify the stage when requesting the handle.

Once we have the function handle, we just insert it in the function table.

And that’s all you need to do to prepare your function tables in a render pipeline.
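Conceptually, an intersection function table is an indexed array of functions. Each geometry records a slot, and traversal calls whatever function was placed in that slot. The C++ sketch below models only that idea on the CPU. It is not the Metal API. `IntersectionFunctionTable`, `Geometry`, and the two analytic tests are hypothetical, and the tests are a sphere and an axis-aligned box rather than the talk's cone and torus.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <optional>
#include <utility>
#include <vector>

struct Ray { float ox, oy, oz, dx, dy, dz; };  // direction assumed normalized

// Signature shared by every table entry: ray + per-primitive parameters -> hit distance.
using IntersectionFunction =
    std::function<std::optional<float>(const Ray&, const std::vector<float>&)>;

// params = {centerX, centerY, centerZ, radius}; ray origin assumed outside the sphere.
std::optional<float> sphereIntersection(const Ray& r, const std::vector<float>& p) {
    float ocx = r.ox - p[0], ocy = r.oy - p[1], ocz = r.oz - p[2];
    float b = ocx * r.dx + ocy * r.dy + ocz * r.dz;
    float c = ocx * ocx + ocy * ocy + ocz * ocz - p[3] * p[3];
    float disc = b * b - c;
    if (disc < 0.0f) return std::nullopt;
    float t = -b - std::sqrt(disc);
    return t > 0.0f ? std::optional<float>(t) : std::nullopt;
}

// params = {minX, minY, minZ, maxX, maxY, maxZ}; standard slab test.
std::optional<float> boxIntersection(const Ray& r, const std::vector<float>& p) {
    const float o[3] = {r.ox, r.oy, r.oz};
    const float d[3] = {r.dx, r.dy, r.dz};
    float tNear = 0.0f, tFar = INFINITY;
    for (int a = 0; a < 3; ++a) {
        // Division by zero gives +/-inf (NaN if the origin lies on the slab
        // plane); fmax/fmin ignore a NaN argument.
        float t0 = (p[a] - o[a]) / d[a];
        float t1 = (p[a + 3] - o[a]) / d[a];
        if (t0 > t1) std::swap(t0, t1);
        tNear = std::fmax(tNear, t0);
        tFar = std::fmin(tFar, t1);
    }
    return tNear <= tFar ? std::optional<float>(tNear) : std::nullopt;
}

struct Geometry {
    std::size_t tableIndex;     // which table slot handles this geometry
    std::vector<float> params;  // primitive description passed to the function
};

class IntersectionFunctionTable {
public:
    explicit IntersectionFunctionTable(std::size_t count) : slots_(count) {}

    // Analogous to inserting a function handle at an index.
    void setFunction(IntersectionFunction fn, std::size_t index) {
        assert(index < slots_.size());
        slots_[index] = std::move(fn);
    }

    // Traversal looks up the geometry's slot and calls that function.
    std::optional<float> intersect(const Ray& r, const Geometry& g) const {
        assert(g.tableIndex < slots_.size() && slots_[g.tableIndex]);
        return slots_[g.tableIndex](r, g.params);
    }

private:
    std::vector<IntersectionFunction> slots_;
};
```

The design point this mirrors is indirection. Geometry does not embed its test. It only names a slot, so the same table can serve many geometries, and you can swap functions without rebuilding the scene description.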

Now we just have to use everything we have built so far to intersect.

Actual use is straightforward.

The accelerationStructure and the intersection function table are both bound to buffer indices on the render encoder.

The shaders can then use these resources to intersect rays with an intersector the same way you would in a compute kernel.

More details about how to prepare your pipeline for ray tracing can be found in last year’s presentation.

In that talk, you will learn about building acceleration structures, creating function tables, and using the intersector in the shading language.

With ray-tracing support from render pipelines, we are opening the door for even more opportunities like adding ray tracing within a single render pass, mixing ray tracing with rasterization in hybrid rendering, and taking advantage of optimizations such as tile functions on Apple Silicon.

In fact, we will soon be adding ray tracing to the sample app that we demoed during the "Modern Rendering in Metal" session at WWDC 2019.

With ray tracing from render pipelines, we can update the code to use tile functions to keep everything in tile memory.

For more details about this, see this year’s "Explore hybrid rendering with Metal ray tracing" presentation.

Next up, I want to introduce the new features we've added this year to improve the usability and portability of the Metal ray tracing API.

These features not only make Metal ray tracing simpler to use, but also provide portability from other ray tracing APIs.

One of these new features is intersection query.

With intersection query, we’re allowing you to have more control over the intersection process.

Intersection query is aimed at simple use cases where the intersector can create overhead.

It is a new way of traversing the acceleration structure that gives you the option of performing in-line custom intersection testing.

Let's take a look at how we currently handle custom intersection using last year’s intersector.

Going back to the alpha test example from last year’s ray tracing with Metal presentation, we demonstrated how alpha testing is used to add a lot of geometric detail to the scene, as you see here in the chains and leaves.

We also learned how easy it is to implement alpha testing by customizing an intersector using the triangle intersection function.

The logic inside this triangle intersection function is responsible for accepting or rejecting intersections as the ray traverses the acceleration structure.

In this case, the test logic will reject the first intersection, but it will accept the second one because the ray has hit an opaque surface.

Let’s see how intersection functions are used.

When you call intersect() on an intersector, Metal starts traversing the acceleration structure to find an intersection and fills in the intersection_result.

Within the intersector, the intersection function is called each time a potential intersection is found.

Intersections are then accepted or rejected based on the intersection function's logic.

The intersector is a great programming model because it is both performant and convenient, but it does require creating a new intersection function and linking it to the pipeline.

There might be cases where the logic inside the intersection function is only a few lines of code, as is the case for alpha testing.

This is the intersection function that contains the logic to do alpha testing.

With intersection query, we can place this logic in-line without the need for this intersection function.

Here is how.

With intersection query, when you start the intersection process, your ray traverses the acceleration structure, and the query object contains the state of the traversal and the result.

Each time the ray intersects a custom primitive or a nonopaque triangle, control is returned to the shader for you to evaluate the intersection candidates.

If the current candidate passes your custom intersection logic, you commit it to update the current committed intersection and then continue the intersection process.

On the other hand, if the candidate fails the custom intersection logic, you can just ignore it and continue.

Let me show you the code to do alpha testing using intersection query.

First, you start the traversal.

Note that we loop on next() to evaluate all candidate intersections.

Second, you evaluate each candidate starting by checking the candidate type.

For the alpha test example, you're interested in intersections of triangle type.

After checking the type, you'll want to query some intersection information about the candidate.

We perform three queries for the information needed by the alpha test logic, which is now in-lined.

Finally, if the candidate intersection passes alpha test, you will commit it so that it becomes the current committed intersection.

At this point, you have traversed the whole acceleration structure, evaluating candidate intersections and committing those that passed the alpha test logic.

Now, you need to query committed intersection information to do the shading.

First, you will query the committed type.

If none of the candidate intersections met your conditions to become a committed intersection, the committed type will be none, which means the current ray missed.

On the other hand, if there is a committed intersection, you would want to query information about the intersection applicable to the intersection type and then use it for shading.

That’s all the code you need to perform alpha testing using intersection query.
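The control flow of this walkthrough can be modeled on the CPU in C++. In the sketch below, `next()` yields candidates, the caller runs the alpha test in-line and commits the ones that pass, and a committed type of none means a miss. The class and its method names only echo the MSL calls and are hypothetical. A precomputed candidate list replaces real traversal.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

enum class IntersectionType { none, triangle };

// One candidate hit reported during traversal (all triangles in this model).
struct Candidate {
    unsigned primitiveId;
    float distance;
    float alpha;  // stands in for the value sampled from the alpha texture
};

// CPU model of a query object: it holds traversal state plus the committed result.
class IntersectionQuery {
public:
    explicit IntersectionQuery(std::vector<Candidate> candidates)
        : candidates_(std::move(candidates)) {}

    // Advance to the next candidate; returns false once traversal is complete.
    bool next() {
        if (next_ >= candidates_.size()) return false;
        current_ = next_++;
        return true;
    }

    // This model only produces triangle candidates.
    IntersectionType candidateType() const { return IntersectionType::triangle; }

    const Candidate& candidate() const {
        assert(current_ < candidates_.size());
        return candidates_[current_];
    }

    // Commit the current candidate. Real traversal typically stops reporting
    // candidates beyond the committed distance; this model keeps the closer hit.
    void commitTriangleIntersection() {
        const Candidate& c = candidate();
        if (committedType_ == IntersectionType::none || c.distance < committed_.distance) {
            committed_ = c;
            committedType_ = IntersectionType::triangle;
        }
    }

    IntersectionType committedType() const { return committedType_; }
    const Candidate& committed() const { return committed_; }

private:
    std::vector<Candidate> candidates_;
    std::size_t next_ = 0;
    std::size_t current_ = 0;
    Candidate committed_{};
    IntersectionType committedType_ = IntersectionType::none;
};

// The in-lined alpha test: accept only sufficiently opaque candidates.
bool alphaTest(const Candidate& c) { return c.alpha > 0.5f; }

// Steps 1-3 from the talk, then the committed-type check.
// Returns the committed primitive ID, or -1 when the ray missed.
long traceWithAlphaTest(IntersectionQuery& iq) {
    while (iq.next()) {
        switch (iq.candidateType()) {
            case IntersectionType::triangle:
                if (alphaTest(iq.candidate())) iq.commitTriangleIntersection();
                break;
            case IntersectionType::none:
                break;
        }
    }
    if (iq.committedType() == IntersectionType::none) return -1;
    return static_cast<long>(iq.committed().primitiveId);
}
```

A nearly transparent leaf in front of an opaque chain link is skipped, so the chain link becomes the committed hit. If every candidate fails the test, the function reports a miss.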

With the introduction of intersection query and support for the intersector in render pipelines, we are giving you more opportunities to start bringing Metal ray tracing to your apps.

Here are some things to consider when choosing between intersector objects and intersection queries.

Start by considering whether you have existing code, such as compute kernels that use the intersector, and how you plan to port that code.

If you have existing query code from other APIs, intersection query can help to port that code.

Next, consider the complexity of handling custom intersection.

Intersector requires intersection functions and tables, and it might be easier to use intersection query to handle custom intersection yourself.

The last question is performance.

In simpler cases, intersection query can avoid overhead when building your pipeline for ray tracing, but custom intersection handling requires returning to your code during traversal, which could have a performance impact, depending on the use case.

Also, use of multiple query objects will require more memory.

On the other hand, intersector can support those more complex cases by encapsulating all of the intersection work.

If you have the opportunity, we would recommend comparing the performance of both solutions.

That’s all about intersection query.

Now let’s move on to some other new features.

The next two features we’re going to talk about are user instance IDs and instance transforms.

These features will help you add more information to your acceleration structure and access more of the data that is already there.

Here is why we think these are really useful features.

If we look back at the sample code from last year’s presentation, we have multiple instances of the Cornell box.

Underneath this, we have an instance acceleration structure with a set of nodes that branch until you reach the instances.

Looking at two of these instances, they are at the lowest level of the instance acceleration structure.

Currently, when you intersect one of these instances, you only get the system’s instance ID from the intersection results.

With this, you could maintain your own table of data, but there’s data that we can expose in the acceleration structure to help you.

Let’s talk about user-defined instance IDs first.

With this feature, you can specify a custom 32-bit value for each instance and then you get this value as part of the intersection results.

This is really useful for you to index into your own data structures, but it can also be used to encode custom data.

For example, here we’re using the user ID to encode a custom color for each instance.

You could use this for a simpler reflection with no need to look up any additional material information.

This is just one example, but the opportunities are endless.

I can see how you would want to encode things like per-instance material ID or per-instance flags.
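One way to encode a per-instance color in the 32-bit user instance ID is to pack four 8-bit channels, as in the C++ sketch below. The bit layout (red in the high byte) and the alternative layout (a 24-bit material index plus 8 flag bits) are assumptions for illustration. The talk only says that Metal returns the 32-bit value you specify.

```cpp
#include <cassert>
#include <cstdint>

struct Rgba8 { std::uint8_t r, g, b, a; };

// Hypothetical layout: 0xRRGGBBAA.
constexpr std::uint32_t packColor(Rgba8 c) {
    return (std::uint32_t(c.r) << 24) | (std::uint32_t(c.g) << 16) |
           (std::uint32_t(c.b) << 8) | std::uint32_t(c.a);
}

// Inverse of packColor, applied to the user instance ID read after intersection.
constexpr Rgba8 unpackColor(std::uint32_t id) {
    return {std::uint8_t(id >> 24), std::uint8_t(id >> 16),
            std::uint8_t(id >> 8), std::uint8_t(id)};
}

// Alternative hypothetical layout: high 8 bits = flags, low 24 bits = material index.
inline std::uint32_t packMaterial(std::uint32_t materialIndex, std::uint8_t flags) {
    assert(materialIndex < (1u << 24));  // index must fit in 24 bits
    return (std::uint32_t(flags) << 24) | materialIndex;
}

inline std::uint32_t materialIndexOf(std::uint32_t id) { return id & 0x00FFFFFFu; }
inline std::uint8_t flagsOf(std::uint32_t id) { return std::uint8_t(id >> 24); }
```

Whichever layout you pick, the CPU code that fills the instance descriptors and the shader code that reads the ID must agree on it. The value itself carries no type information.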

We have created an extended version of the instance descriptor type that is used to specify these IDs.

Make sure you specify which type of descriptor you’re using on the instance acceleration structure descriptor.

In the shading language, the value of the current user instance ID is available as an input to intersection functions with the instancing tag.

To obtain the values after intersection, the user-defined instance ID is available from the intersection result when using an intersector object.

And when using intersection query object, there is a corresponding query to access the user-defined instance ID for both candidate and committed intersections.

Just like user instance ID, we have added support for accessing your instance transformation matrices.

This data is already specified in the instance descriptor, and it is stored in the acceleration structure.

This year, we’ve exposed these matrices from the shading language.

You can access the instance transforms in the intersection functions when you apply the instancing and world_space_data tags.

Similarly, the instance transforms are provided in the intersection results when using an intersector with instancing and world_space_data tags.

When using intersection query with instancing tag, there are corresponding queries for accessing the instance transforms for both candidate and committed intersections.
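The instance transforms are 4x3 matrices (`float4x3` in the shading language), made of four columns of three floats. The C++ sketch below assumes the usual convention that the first three columns hold the linear part and the fourth holds the translation. It applies such a matrix to points and directions and checks that a world-to-object matrix undoes the object-to-world one. The helper names are hypothetical.

```cpp
#include <array>
#include <cassert>

using Float3 = std::array<float, 3>;
using Float4x3 = std::array<Float3, 4>;  // four columns of three floats

// Points use all four columns (w = 1): c0*x + c1*y + c2*z + c3.
Float3 transformPoint(const Float4x3& m, const Float3& p) {
    Float3 out{};
    for (int row = 0; row < 3; ++row)
        out[row] = m[0][row] * p[0] + m[1][row] * p[1] + m[2][row] * p[2] + m[3][row];
    return out;
}

// Directions ignore the translation column (w = 0).
Float3 transformDirection(const Float4x3& m, const Float3& d) {
    Float3 out{};
    for (int row = 0; row < 3; ++row)
        out[row] = m[0][row] * d[0] + m[1][row] * d[1] + m[2][row] * d[2];
    return out;
}

// Uniform scale followed by translation, a simple invertible instance transform.
Float4x3 scaleTranslate(float s, const Float3& t) {
    assert(s != 0.0f);  // a zero scale would have no world-to-object inverse
    return {{{s, 0.0f, 0.0f}, {0.0f, s, 0.0f}, {0.0f, 0.0f, s}, t}};
}
```

For a scale of 2 and a translation of (1, 2, 3), the inverse is a scale of 0.5 and a translation of (-0.5, -1, -1.5). Mapping an object-space hit point to world space and back returns the original point.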

To summarize, this year we are improving the usability and portability of the Metal ray tracing API by introducing three new features.

Intersection query is an alternative to the intersector that provides more control over the intersection process.

And with the introduction of user instance ID and instance transforms features, we are providing you the ability to access data from the acceleration structure instead of having to handle some external mapping in your code.

In addition, these three features offer portability from other ray tracing APIs, making cross-platform development easier.

So far in the session, we have talked about our new support for ray tracing in render pipelines and the different usability and portability features we have added this year.

Now, let me show you what features we are introducing to enhance production rendering.

Since the Metal ray tracing API was introduced last year, people have been using it to render some amazing high-quality content.

This year, we’ve added two new features to make it possible to render even better content.

Let's start with extended limits.

Since we released the Metal ray tracing API, some users have started hitting the internal limits of our acceleration structures, especially in production-scale use cases.

So we are adding support for an extended-limits mode to support even larger scenes.

Last year, we chose these limits to balance acceleration structure size in order to favor performance with typical scene sizes.

There is a potential performance trade-off to turning on this feature, so you’ll need to determine which mode is best for your application.

Extended-limits mode increases the limit on the number of primitives, geometries, instances, as well as the size of the mask used for filtering out instances.
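The instance mask mentioned above filters instances by bitwise overlap with the ray's mask. An instance is considered only if the two masks share at least one set bit. The C++ sketch below models that test with the mask width as a template parameter, which shows why a wider mask allows more independent categories. This talk does not state the actual mask widths in the default and extended-limits modes, so the 8- and 32-bit types in the usage are illustrative assumptions. All function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Build a mask with one category bit set; a narrower Mask type offers fewer bits.
template <typename Mask>
Mask categoryBit(unsigned bit) {
    assert(bit < sizeof(Mask) * 8);
    return static_cast<Mask>(Mask(1) << bit);
}

// An instance is considered only if its mask shares a set bit with the ray mask.
template <typename Mask>
bool instanceVisible(Mask instanceMask, Mask rayMask) {
    return (instanceMask & rayMask) != 0;
}

// Indices of the instances a ray with `rayMask` would consider.
template <typename Mask>
std::vector<std::size_t> visibleInstances(const std::vector<Mask>& instanceMasks,
                                          Mask rayMask) {
    std::vector<std::size_t> visible;
    for (std::size_t i = 0; i < instanceMasks.size(); ++i)
        if (instanceVisible(instanceMasks[i], rayMask)) visible.push_back(i);
    return visible;
}
```

With `std::uint8_t` masks, `categoryBit` can address only bits 0 through 7. A 32-bit mask gives up to 32 independent categories, such as "casts shadows" or "visible to reflection rays".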

To turn it on, you first specify extended limits mode when building your acceleration structures.

Then specify the extended_limits tag on the intersector object in the shading language.

That’s all you need to do to turn on extended limits! Next, let’s talk about motion.

In computer graphics, we often assume that the camera exposure is instantaneous.

However, in real life, the camera exposure lasts for a nonzero period of time.

If an object moves relative to the camera during that time, it will appear blurred in the image.

In this extreme example, the person in the center has been standing still during the whole exposure while everyone else has been moving, causing them to be blurred.

This effect can go a long way towards making computer-generated images look more realistic.

In this example, the sphere is animated across several frames, but each frame is still an instantaneous exposure, resulting in a choppy animation.

Using the motion API, we can simulate camera exposure lasting for a nonzero amount of time.

This results in a smoother and more realistic-looking animation.

If we freeze the video, you can see that the boundaries of the sphere are blurred in the direction of motion just like a real camera.

Real-time applications like games often approximate this effect in screen space.

But ray tracing allows us to simulate physically accurate motion blur, which even extends to indirect effects like shadows and reflections.

Let’s take a look at how the motion-blurred version was rendered.

Motion blur is a straightforward extension to ray tracing.

Most ray-tracing applications already randomly sample physical dimensions, such as incoming light directions for indirect lighting.

To add motion blur, we can just choose a random time for each ray as well.

Metal will intersect the scene to match the point in time associated with each ray.

For instance, this ray will see the scene like this.

Another ray will see the scene like this.

As we accumulate more and more samples, we’ll start to converge on a motion-blurred image.
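The random-time sampling described above is a Monte Carlo estimate. The C++ sketch below applies it to a hypothetical one-dimensional scene: a segment moving linearly during the exposure. Each sample draws a time uniformly from the exposure interval, standing in for the talk's `random(exposure_start, exposure_end)` helper, and tests whether the pixel is covered at that time. The average converges to the blurred coverage. `MovingSegment` and `blurredCoverage` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <random>

// A segment [pos, pos + width) whose position moves from startPos to endPos
// linearly over the exposure.
struct MovingSegment { float startPos, endPos, width; };

float positionAt(const MovingSegment& s, float time, float exposureStart, float exposureEnd) {
    float u = (time - exposureStart) / (exposureEnd - exposureStart);
    return s.startPos + (s.endPos - s.startPos) * u;
}

// Average of per-sample coverage, each sample seeing the scene at its own random time.
float blurredCoverage(const MovingSegment& s, float pixelX,
                      float exposureStart, float exposureEnd,
                      int sampleCount, std::uint32_t seed) {
    assert(sampleCount > 0 && exposureEnd > exposureStart);
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> randomTime(exposureStart, exposureEnd);
    int covered = 0;
    for (int i = 0; i < sampleCount; ++i) {
        float time = randomTime(rng);
        float pos = positionAt(s, time, exposureStart, exposureEnd);
        if (pixelX >= pos && pixelX < pos + s.width) ++covered;
    }
    return float(covered) / float(sampleCount);
}
```

Consider a segment of width 1 that moves from x = 0 to x = 4. A pixel at x = 2 is covered for a quarter of the exposure, so the estimate approaches 0.25 as the sample count grows. A stationary object gives exactly the same answer at every time. The ninja render shown later combines 256 such randomly timed samples per frame.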

You could actually already implement this today using custom intersection functions.

You could compute the bounding boxes of each primitive throughout the entire exposure and then use these bounding boxes to build an acceleration structure.

However, this would be inefficient; the bounding boxes could be so large that some rays would need to check for intersection with primitives that they will never actually intersect.
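The inefficiency described above is easy to quantify. The C++ sketch below computes the box that must enclose a triangle for the entire exposure by taking the union of its per-keyframe boxes. This is valid under the assumption that vertices move linearly between keyframes, because every in-between triangle then stays inside that union. The names are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <vector>

using Vertex = std::array<float, 3>;
using Triangle = std::array<Vertex, 3>;
struct Aabb { Vertex lo, hi; };

// Tight box around one keyframe's triangle.
Aabb boundsOf(const Triangle& tri) {
    Aabb box{tri[0], tri[0]};
    for (const Vertex& v : tri)
        for (int a = 0; a < 3; ++a) {
            box.lo[a] = std::min(box.lo[a], v[a]);
            box.hi[a] = std::max(box.hi[a], v[a]);
        }
    return box;
}

// Box valid for the whole exposure: union of the per-keyframe boxes.
Aabb exposureBounds(const std::vector<Triangle>& keyframes) {
    assert(!keyframes.empty());
    Aabb box = boundsOf(keyframes[0]);
    for (const Triangle& tri : keyframes) {
        Aabb k = boundsOf(tri);
        for (int a = 0; a < 3; ++a) {
            box.lo[a] = std::min(box.lo[a], k.lo[a]);
            box.hi[a] = std::max(box.hi[a], k.hi[a]);
        }
    }
    return box;
}

float extent(const Aabb& b, int axis) { return b.hi[axis] - b.lo[axis]; }
```

A unit triangle that slides 10 units along x has an instantaneous box 1 unit wide, but its whole-exposure box is 11 units wide. Rays anywhere in that span would have to test the triangle, even though at any given time it occupies only a small part of it.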

Instead, we can use Metal’s built-in support for motion blur, which is designed to handle cases like this efficiently.

The first thing we need to do is associate a random time with each ray in our Metal shading language code.

We start by generating a random time within the exposure interval, then we just pass it to the intersector.

The next thing we need to do is to provide our animated geometry to Metal.

We do this using a common method of animation called keyframe animation.

The animation is created by modeling the ball at key points in time called keyframes.

These keyframes are uniformly distributed between the start and end of our animation.

As rays traverse the acceleration structure, they can fetch data from any keyframe based on their time value.

For instance, Ray A would see the scene as it was modeled in Keyframe 11 because its time happens to match Keyframe 11.

In contrast, Ray B’s time is in between Keyframes 3 and 4.

Therefore, the geometry of the two keyframes is interpolated for Ray B.

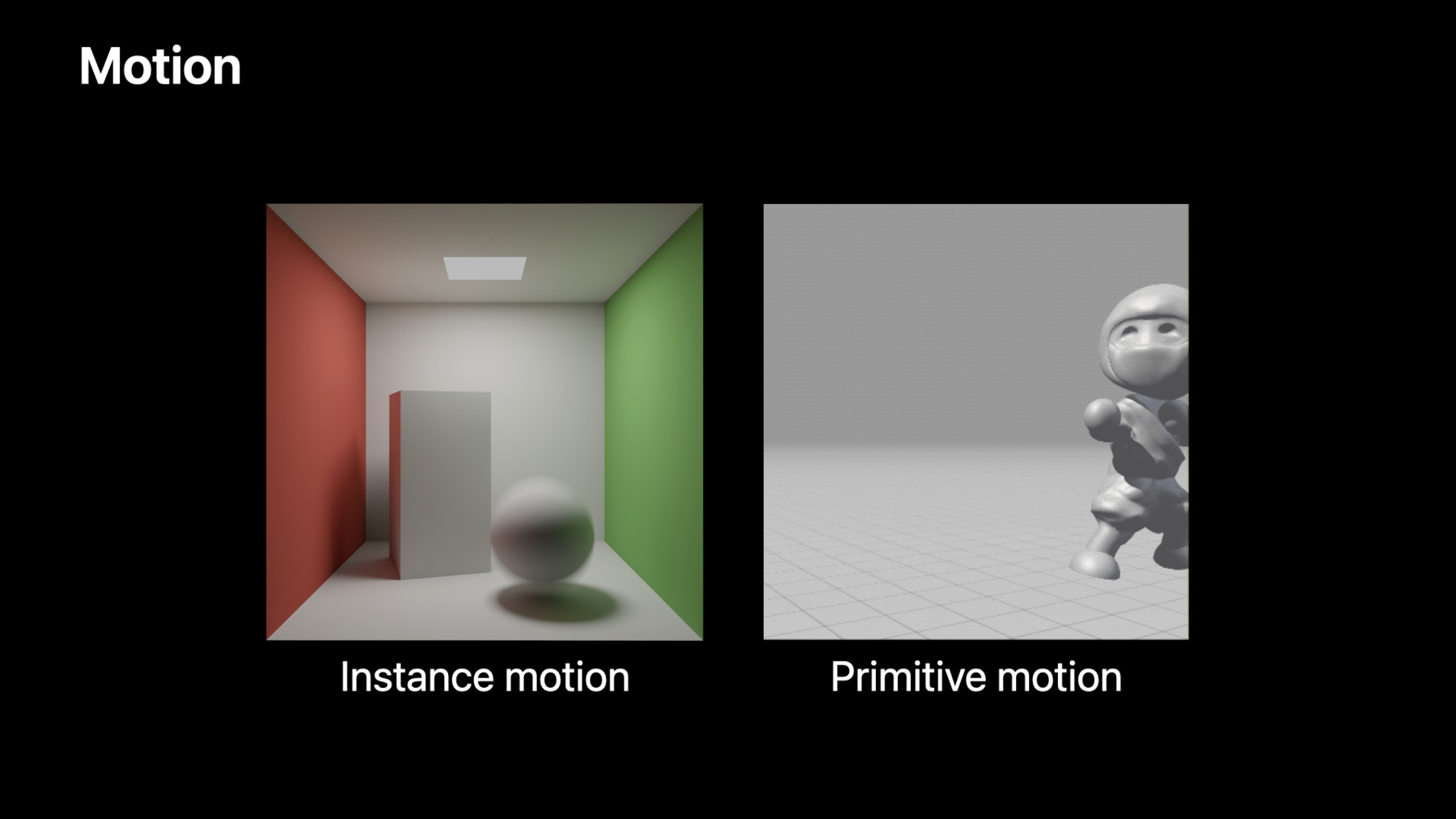
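The keyframe lookup just described can be sketched in C++. The sketch maps a ray's time to the pair of neighboring keyframes and a blend fraction, then interpolates vertex positions as primitive motion does. It assumes uniformly spaced keyframes and linear interpolation, and it clamps times outside the animation range. The clamping and all names are assumptions of the sketch, not documented Metal behavior.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct KeyframeSample {
    std::size_t index;  // first of the two keyframes to blend
    float fraction;     // 0 = keyframe `index`, 1 = keyframe `index + 1`
};

// Keyframe 0 sits at startTime and keyframe count-1 at endTime, evenly spaced.
KeyframeSample locateKeyframes(float time, float startTime, float endTime,
                               std::size_t keyframeCount) {
    assert(keyframeCount >= 2 && endTime > startTime);
    float u = (time - startTime) / (endTime - startTime) * float(keyframeCount - 1);
    u = std::clamp(u, 0.0f, float(keyframeCount - 1));
    std::size_t i = std::min(static_cast<std::size_t>(std::floor(u)), keyframeCount - 2);
    return {i, u - float(i)};
}

using Vertex = std::array<float, 3>;

// Primitive motion: blend every vertex between the two neighboring keyframes.
std::vector<Vertex> interpolateVertices(const std::vector<std::vector<Vertex>>& keyframes,
                                        float time, float startTime, float endTime) {
    KeyframeSample s = locateKeyframes(time, startTime, endTime, keyframes.size());
    const std::vector<Vertex>& a = keyframes[s.index];
    const std::vector<Vertex>& b = keyframes[s.index + 1];
    assert(a.size() == b.size());
    std::vector<Vertex> out(a.size());
    for (std::size_t v = 0; v < a.size(); ++v)
        for (int c = 0; c < 3; ++c)
            out[v][c] = a[v][c] + (b[v][c] - a[v][c]) * s.fraction;
    return out;
}
```

With five keyframes spanning times 0 to 1, a time of 0.75 lands exactly on keyframe 3, like Ray A. A time of 0.375 falls halfway between keyframes 1 and 2, like Ray B, so its geometry is an even blend of the two.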

Motion is supported at both the instance and primitive level.

Instance animation can be used to rigidly transform entire objects.

This is cheaper than primitive animation but doesn’t allow objects to deform.

On the other hand, primitive animation is more expensive, but can be used for things like skinned-character animation.

Note that both instance and primitive animation are based on keyframe animation.

Let’s first talk about instance motion.

In an instance acceleration structure, each instance is associated with a transformation matrix.

This matrix describes where to place the geometry in the scene.

In this example, we have two primitive acceleration structures: one for the sphere and another for the static geometry.

Each primitive acceleration structure has a single instance.

To animate the sphere, we will provide two transformation matrices, representing the start and end point of the animation.

Metal will then interpolate these two matrices based on the time parameter for each ray.

Keep in mind that this is a specific example using two keyframes, but Metal supports an arbitrary number of keyframes.

We provide these matrices using acceleration structure descriptors.

The standard Metal instance descriptor only has room for a single transformation matrix.

So instead, we’ll use the new motion instance descriptor.

With this descriptor, the transformation matrices are stored in a separate buffer.

The instance descriptor then contains a start index and count representing a range of transformation matrices in the transform buffer.

Each matrix represents a single keyframe.
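The layout described above can be modeled in C++. Each motion instance points at a range of matrices in a separate transform buffer, with one matrix per keyframe. `MotionInstance` and its field names are hypothetical stand-ins, not the fields of Metal's motion instance descriptor. The component-wise linear blend between neighboring keyframe matrices is an assumption about how the interpolation behaves.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Float4x3 = std::array<std::array<float, 3>, 4>;  // 4 columns x 3 rows

// Hypothetical motion instance: a range [start, start + count) in the transform buffer.
struct MotionInstance {
    std::uint32_t transformsStartIndex;
    std::uint32_t transformsCount;  // one matrix per keyframe
};

// Identity linear part with translation (x, y, z) in the fourth column.
Float4x3 translation(float x, float y, float z) {
    return {{{1.0f, 0.0f, 0.0f}, {0.0f, 1.0f, 0.0f}, {0.0f, 0.0f, 1.0f}, {x, y, z}}};
}

// Component-wise linear blend of two keyframe matrices (assumed scheme).
Float4x3 blendTransforms(const Float4x3& a, const Float4x3& b, float f) {
    Float4x3 out{};
    for (int col = 0; col < 4; ++col)
        for (int row = 0; row < 3; ++row)
            out[col][row] = a[col][row] + (b[col][row] - a[col][row]) * f;
    return out;
}

// Keyframes assumed evenly spaced over normalized exposure time [0, 1].
Float4x3 transformAt(const MotionInstance& inst,
                     const std::vector<Float4x3>& transformBuffer,
                     float normalizedTime) {
    assert(inst.transformsCount >= 1);
    assert(std::size_t(inst.transformsStartIndex) + inst.transformsCount <=
           transformBuffer.size());
    const Float4x3* keys = &transformBuffer[inst.transformsStartIndex];
    if (inst.transformsCount == 1) return keys[0];
    float u = std::clamp(normalizedTime, 0.0f, 1.0f) * float(inst.transformsCount - 1);
    std::size_t i = std::min<std::size_t>(std::size_t(u), inst.transformsCount - 2);
    return blendTransforms(keys[i], keys[i + 1], u - float(i));
}
```

In the usage below, the instance uses matrices 1 and 2 of the buffer, so at the middle of the exposure its translation is halfway between them. Storing only an index range keeps every descriptor the same size, whatever the keyframe count.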

Let's see how to set up an instance acceleration structure descriptor with the new motion instance descriptor type.

We start by creating the usual instance acceleration structure descriptor.

We then specify we’re using the new motion instance descriptor type.

Then, we specify the instanceDescriptorBuffer that contains the motion instance descriptors.

Finally, we’ll need to specify the transformsBuffer containing the transformation matrices for each keyframe.

The remaining properties are the same as any other instance acceleration structure, and we can build it like any other acceleration structure as well.

We only need to make one change in the shading language, which is to specify the instance_motion tag.

This tells the intersector to expect an acceleration structure with instance motion.

And that’s all we need to do to set up instance motion.

Next, let’s talk about primitive motion.

With primitive motion, each primitive can move separately, meaning it can be used for things like skinned-character animation.

Remember that we need to provide a separate 3D model for each keyframe, and Metal will then interpolate between them.

We’ll need to provide vertex data for each keyframe.

Let’s see how to set this up.

We’ll start by collecting each keyframe’s vertex buffer into an array.

The MTLMotionKeyframeData object allows you to specify a buffer and offset.

We’ll use it to specify the vertex buffer for each keyframe.

Next, we’ll create a motion triangle geometry descriptor.

This is just like creating any other geometry descriptor, except we use a slightly different type.

And instead of providing a single vertex buffer, we’ll provide our array of vertexBuffers.

Then we’ll create the usual primitive acceleration structure descriptor.

Next, we provide our geometryDescriptor.

Then we’ll specify the number of keyframes.

Similar to instance motion, we’ll need to make a small change in the shading language to specify the primitive_motion tag.

And that’s all we need to do to set up primitive motion! Keep in mind that you can actually use both types of animation at the same time for even more dynamic scenes.

Next, let’s take a look at this all in action! This is a path-traced rendering created by our Advanced Content team.

The video was rendered on a Mac Pro with an AMD Radeon Pro Vega II GPU.

The ninja character was animated using a skinned skeletal animation technique which allows each primitive to move separately.

Each frame was rendered by combining 256 randomly timed samples taken using the primitive motion API.

We can slow it down to see the difference more clearly.

The version on the left doesn’t have motion blur, while the version on the right does.

And we can increase the exposure time even further to simulate a long exposure.

Motion blur can make a big difference in realism and it’s now easy to add with the new motion API.

So that’s it for motion.

Thanks for watching this talk.

We have been putting a lot of work into our Metal ray tracing API to provide the tools you need to enhance your app.

We can’t wait to see the amazing content that you will create with it.

Thank you, and have a great WWDC! ♪


4:48 - Specify intersection functions on render pipeline state

```objc
// Create and attach MTLLinkedFunctions object
NSArray<id<MTLFunction>> *functions = @[ sphere, cone, torus ];

MTLLinkedFunctions *linkedFunctions = [MTLLinkedFunctions linkedFunctions];
linkedFunctions.functions = functions;
pipelineDescriptor.fragmentLinkedFunctions = linkedFunctions;

// Create pipeline
id<MTLRenderPipelineState> rayPipeline;
rayPipeline = [device newRenderPipelineStateWithDescriptor:pipelineDescriptor
                                                     error:&error];
```

5:02 - Create intersection function table

```objc
// Fill out intersection function table descriptor
MTLIntersectionFunctionTableDescriptor *tableDescriptor =
    [MTLIntersectionFunctionTableDescriptor intersectionFunctionTableDescriptor];
tableDescriptor.functionCount = functions.count;

// Create intersection function table
id<MTLIntersectionFunctionTable> table;
table = [rayPipeline newIntersectionFunctionTableWithDescriptor:tableDescriptor
                                                          stage:MTLRenderStageFragment];
```

5:14 - Populate intersection function table

```objc
id<MTLFunctionHandle> handle;

for (NSUInteger i = 0; i < functions.count; i++) {
    // Get a handle to the linked intersection function in the pipeline state
    handle = [rayPipeline functionHandleWithFunction:functions[i]
                                               stage:MTLRenderStageFragment];

    // Insert the function handle into the table
    [table setFunction:handle atIndex:i];
}
```

5:48 - Bind resources

```objc
[renderEncoder setFragmentAccelerationStructure:accelerationStructure
                                  atBufferIndex:0];
[renderEncoder setFragmentIntersectionFunctionTable:table
                                      atBufferIndex:1];
```

5:57 - Intersect from fragment shader

```metal
[[fragment]] float4 rayFragmentShader(vertex_output vo [[stage_in]],
                                      // Buffer indices match the render encoder bindings
                                      primitive_acceleration_structure accelerationStructure [[buffer(0)]],
                                      intersection_function_table<triangle_data> functionTable [[buffer(1)]],
                                      /* ... */)
{
    // generate ray, create intersector...

    intersection = intersector.intersect(ray, accelerationStructure, functionTable);

    // shading...
}
```

9:32 - Triangle intersection function

```metal
[[intersection(triangle, triangle_data)]]
bool alphaTestIntersectionFunction(uint primitiveIndex        [[primitive_id]],
                                   uint geometryIndex         [[geometry_id]],
                                   float2 barycentricCoords   [[barycentric_coord]],
                                   device Material *materials [[buffer(0)]])
{
    // `sampler` is a type in MSL, so declare a named sampler (settings are an assumption)
    constexpr sampler alphaSampler(filter::linear);

    texture2d<float> alphaTexture = materials[geometryIndex].alphaTexture;

    float2 UV = interpolateUVs(materials[geometryIndex].UVs,
                               primitiveIndex, barycentricCoords);

    float alpha = alphaTexture.sample(alphaSampler, UV).x;

    return alpha > 0.5f;
}
```

10:36 - Custom intersection with intersection query

```metal
intersection_query<instancing, triangle_data> iq(ray, as, params);

// Step 1: start traversing acceleration structure
while (iq.next())
{
    // Step 2: candidate was found. Check type and run custom intersection.
    switch (iq.get_candidate_intersection_type())
    {
        case intersection_type::triangle:
        {
            bool alphaTestResult = alphaTest(iq.get_candidate_geometry_id(),
                                             iq.get_candidate_primitive_id(),
                                             iq.get_candidate_triangle_barycentric_coord());

            // Step 3: commit candidate or ignore
            if (alphaTestResult)
                iq.commit_triangle_intersection();
            break;
        }
    }
}
```

10:39 - Custom intersection with intersection query 2

```metal
switch (iq.get_committed_intersection_type())
{
    // Miss case
    case intersection_type::none:
    {
        missShading();
        break;
    }
    // Triangle intersection was committed. Query some info and do shading.
    case intersection_type::triangle:
    {
        shadeHitTriangle(iq.get_committed_instance_id(),
                         iq.get_committed_distance(),
                         iq.get_committed_triangle_barycentric_coord());
        break;
    }
}
```

15:30 - Specifying user instance IDs

```objc
// New instance descriptor type
typedef struct {
    // Members from MTLAccelerationStructureInstanceDescriptor...
    uint32_t userID;
} MTLAccelerationStructureUserIDInstanceDescriptor;

// Specify instance descriptor type through acceleration structure descriptor
accelDesc.instanceDescriptorType = MTLAccelerationStructureInstanceDescriptorTypeUserID;
```

15:47 - Retrieving user instance IDs 1

```metal
// Available in intersection functions
[[intersection(bounding_box, instancing)]]
IntersectionResult sphereInstanceIntersectionFunction(unsigned int userID [[user_instance_id]],
                                                      /** other args **/)
{
    // ...
}
```

15:58 - Retrieving user instance IDs 2

```metal
// Available from intersection result
intersection_result<instancing> intersection = instanceIntersector.intersect(/* args */);

if (intersection.type != intersection_type::none)
    instanceIndex = intersection.user_instance_id;

// Available from intersection query
intersection_query<instancing> iq(/* args */);
iq.next();

if (iq.get_committed_intersection_type() != intersection_type::none)
    instanceIndex = iq.get_committed_user_instance_id();
```

16:36 - Instance transforms

```metal
// Available in intersection functions
[[intersection(bounding_box, instancing, world_space_data)]]
IntersectionResult intersectionFunction(float4x3 objToWorld [[object_to_world_transform]],
                                        float4x3 worldToObj [[world_to_object_transform]],
                                        /** other args **/)
{
    // ...
}
```

16:51 - Instance transforms 2

```metal
// Available from intersection result
intersection_result<instancing, world_space_data> result = intersector.intersect(/* args */);

if (result.type != intersection_type::none) {
    output.myObjectToWorldTransform = result.object_to_world_transform;
    output.myWorldToObjectTransform = result.world_to_object_transform;
}
```

17:03 - Instance transforms 3

```metal
// Available from intersection query
intersection_query<instancing> iq(/* args */);
iq.next();

if (iq.get_committed_intersection_type() != intersection_type::none) {
    output.myObjectToWorldTransform = iq.get_committed_object_to_world_transform();
    output.myWorldToObjectTransform = iq.get_committed_world_to_object_transform();
}
```

19:17 - Extended limits

```objc
// Specify through acceleration structure descriptor
accelDesc.usage = MTLAccelerationStructureUsageExtendedLimits;
```

```metal
// Specify intersector tag
intersector<extended_limits> extendedIntersector;
```

22:30 - Sampling time

```metal
// Randomly sample time
float time = random(exposure_start, exposure_end);

result = intersector.intersect(ray, acceleration_structure, time);
```

25:54 - Motion instance descriptor

```objc
MTLInstanceAccelerationStructureDescriptor *descriptor =
    [MTLInstanceAccelerationStructureDescriptor new];
descriptor.instanceDescriptorType = MTLAccelerationStructureInstanceDescriptorTypeMotion;

// Buffer containing motion instance descriptors
descriptor.instanceDescriptorBuffer = instanceBuffer;
descriptor.instanceCount = instanceCount;

// Buffer containing MTLPackedFloat4x3 transformation matrices
descriptor.motionTransformBuffer = transformsBuffer;
descriptor.motionTransformCount = transformCount;

descriptor.instancedAccelerationStructures = primitiveAccelerationStructures;
```

26:33 - Instance motion

```metal
// Specify intersector tag
kernel void raytracingKernel(acceleration_structure<instancing, instance_motion> as,
                             /* other args */)
{
    intersector<instancing, instance_motion> intersector;
    // ...
}
```

27:24 - Primitive motion 1

```objc
// Collect keyframe vertex buffers
NSMutableArray<MTLMotionKeyframeData *> *vertexBuffers = [NSMutableArray new];

for (NSUInteger i = 0; i < keyframeBuffers.count; i++) {
    MTLMotionKeyframeData *keyframeData = [MTLMotionKeyframeData data];
    keyframeData.buffer = keyframeBuffers[i];

    [vertexBuffers addObject:keyframeData];
}
```

27:39 - Primitive motion 2

```objc
// Create motion geometry descriptor
MTLAccelerationStructureMotionTriangleGeometryDescriptor *geometryDescriptor =
    [MTLAccelerationStructureMotionTriangleGeometryDescriptor descriptor];

geometryDescriptor.vertexBuffers = vertexBuffers;
geometryDescriptor.triangleCount = triangleCount;
```

27:57 - Primitive motion 3

```objc
// Create acceleration structure descriptor
MTLPrimitiveAccelerationStructureDescriptor *primitiveDescriptor =
    [MTLPrimitiveAccelerationStructureDescriptor descriptor];

primitiveDescriptor.geometryDescriptors = @[ geometryDescriptor ];
primitiveDescriptor.motionKeyframeCount = keyframeCount;
```

28:10 - Primitive motion 4

```metal
// Specify intersector tag
kernel void raytracingKernel(acceleration_structure<primitive_motion> as,
                             /* other args */)
{
    intersector<primitive_motion> intersector;
    // ...
}
```
