
Discover Metal debugging, profiling, and asset creation tools

Explore how Xcode can help you take your Metal debugging, profiling and asset creation workflows to the next level. Discover the latest tools for ray tracing and GPU profiling, and learn about Metal Debugger workflows. We'll also show you how to use the Texture Converter tool, which supports all modern GPU texture formats and can easily integrate into your multi-platform asset creation pipelines.

Resources

- Metal Developer Tools on Windows

- Debugging the shaders within a draw command or compute dispatch

- Metal

Related videos

WWDC21

- Discover compilation workflows in Metal

- Enhance your app with Metal ray tracing

- Explore hybrid rendering with Metal ray tracing

- Optimize high-end games for Apple GPUs

Hi! My name is Egor, and today, I’d like to tell you about all the improvements and new features in Metal Debugger. This year, we are bringing support for more Metal features, such as ray tracing and function pointers.

We have added brand-new profiling workflows, like the GPU timeline and consistent GPU performance state, to help you get the most out of GPUs across Apple platforms.

We’ve made improvements to other debugging workflows you know and love, including broader support for shader validation, and precise capture controls.

We are also introducing advances in texture compression, which my colleague Amanda will talk about later. First, let’s talk about ray tracing.

Last year, we introduced a new Metal ray tracing API, and now, in Xcode 13, we support it in Metal Debugger, along with function pointers and function tables, which bring flexibility to your shaders, and dynamic libraries, which give you a way to build well-abstracted and reusable shader library code. Also, for ray tracing, we are introducing a brand-new tool, the Acceleration Structure Viewer. Let’s take a look at ray tracing in the Metal Debugger.

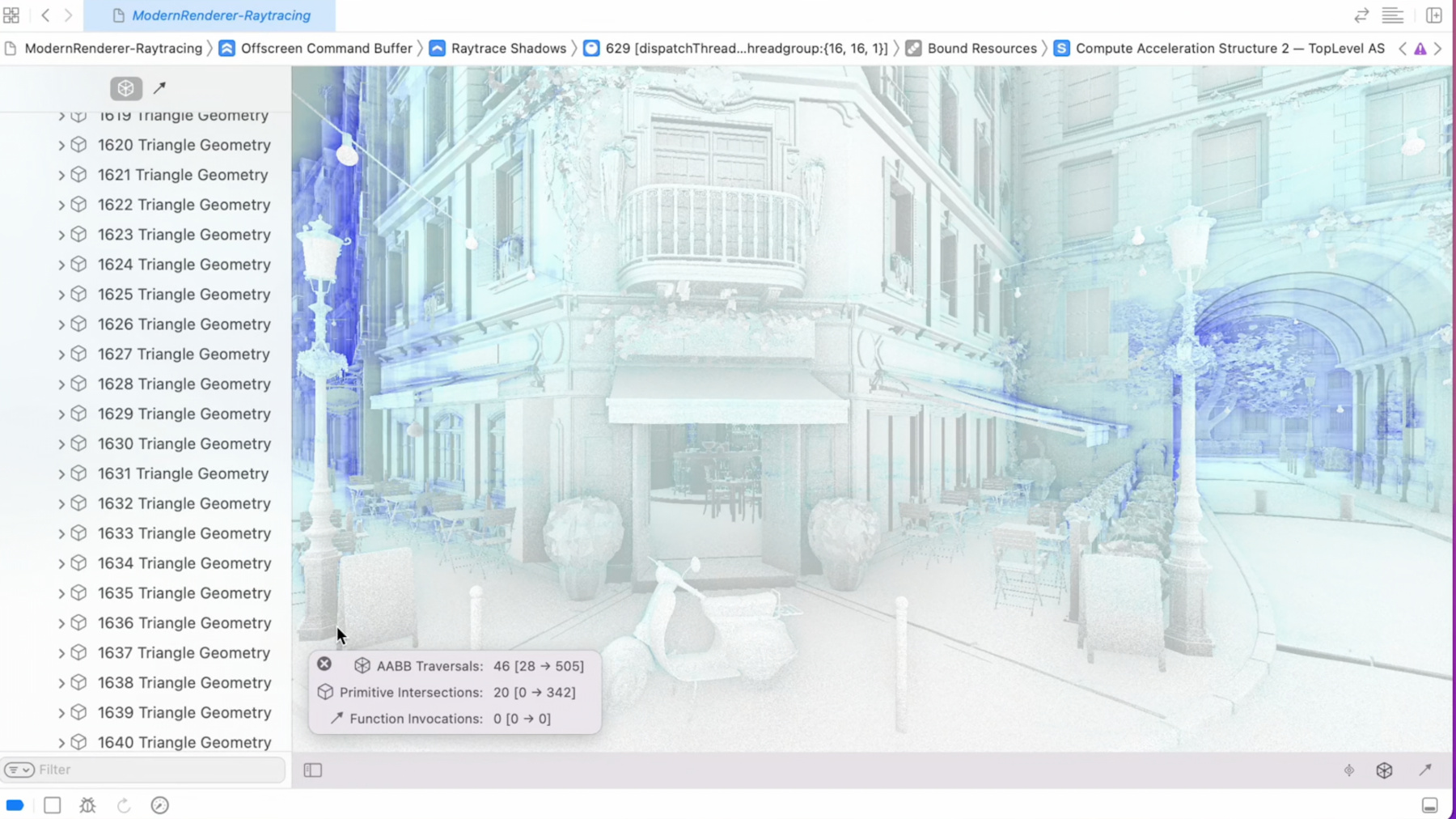

I’ve opened a GPU trace of the ModernRenderer sample app. It was modified to use Metal ray tracing to achieve effects such as shadows and ambient occlusion. This encoder creates a beautiful ray-traced shadow map. I’ve selected a dispatch call so that you can see the acceleration structure in bound resources. From here, I will open the acceleration structure to go to our new Acceleration Structure Viewer. Here, you can see the geometry of the familiar Bistro scene on the right, and its outline on the left. Clicking an instance in the scene selects it in the viewer and also in the scene outline. You can see the transformation matrix and other instance properties by expanding it. You can also select an individual geometry by holding the Option key while clicking in the scene viewer. This also selects it in the scene outline, and vice versa.

You can also see the relevant intersection functions used with the acceleration structure right here in the viewer. But the Acceleration Structure Viewer can do much more than simply display geometry. Here on the bottom-right, you will also find a number of highlighting modes that will help you visualize some of the properties of your scene. For example, the bounding volume traversals mode can help you visualize the complexity of your geometry. A deeper blue color shows areas where the bounding volume hierarchy is more computationally expensive to traverse relative to other parts of it. For all of the modes, we have this small view that shows the relevant information when you hover over different parts of your scene. Here, it displays the number of bounding box traversals and primitive intersections.

To give you more flexibility, we also included traversal settings. With them, you can configure the Acceleration Structure Viewer using the same properties you can find on an intersector object inside your shaders.

There’s so much more to talk about when it comes to ray tracing.

If you’d like to learn more, check out this year’s session “Explore hybrid rendering with Metal ray tracing.” And if you want to know more about the API in general, check out last year’s talk, “Discover ray tracing with Metal.” Next, let’s talk about profiling.

Profiling your app is an important step, and we already have a lot of great tools at your disposal. For example, using the Metal System Trace in Instruments, you can explore a timeline view that shows CPU and GPU durations for different rendering stages, GPU counters, and shader timelines. And in the Metal Debugger, GPU counters show a rich set of measurements directly from the GPU, either per encoder or per draw. Both are excellent tools, which provide complementary views of your app’s performance. But aligning those views may take additional effort. That’s why I’m excited to show you a new GPU profiling tool that combines Metal System Trace and GPU counters in a unified experience.

Introducing GPU Timeline in Metal Debugger, a new tool designed specifically for Apple GPUs. It gives you a different perspective on the performance data, and it can help you find potential points of optimization in your app. Let’s walk through this latest addition to our suite of profiling tools.

The GPU Timeline is available under the Performance panel. You can find it in the debug navigator after you’ve captured a frame from your app. When you open the Performance panel, you’ll be greeted by a set of different tracks laid out in parallel. Before we continue, I want to explain why encoder tracks are in parallel. On Apple GPUs, the vertex and fragment stages of different render passes, as well as compute dispatches, can run simultaneously. This is enabled by the Apple GPU architecture and its use of a rendering technique called “tile-based deferred rendering.” We thought it was important for you to be able to see this parallel nature of Apple GPUs in the context of your app, and that’s where GPU Timeline comes in handy.

At the top, you can see the Vertex, Fragment, and Compute encoder timelines, with each encoder showing the resources it uses at a quick glance. Below the encoders, you will find Occupancy, Bandwidth, and limiter counters. Let’s take a closer look at the encoder timeline.

You can expand each encoder track to see an aggregated shader timeline.

Expanding the timeline even further will show you each individual shader in a waterfall-like fashion.

It’s easy to navigate the encoders. Select an encoder track to see a list of all the encoders on the right. There, you can sort them by their average duration. Clicking on an individual encoder in the timeline will show you more information about it in the sidebar. For example, here you can see the attachments for this render command encoder.

You may have noticed that when you select an encoder, time ranges where it’s active become highlighted across all the tracks. With this, you can easily examine how different stages overlap, and also correlate counter values for the encoder.

Moving away from the timeline view, you can access the GPU counters by switching to the Counters tab, or you can open the encoder’s context menu and reveal it in Counters from there.

And this is just a sneak peek at the GPU Timeline. To learn more about using Metal Debugger to understand your app’s performance, check out this year’s session “Optimize high-end games for Apple GPUs.” Now that I’ve shown you a new way to profile your app, it’s important to understand that its performance depends on several factors.

When we are talking about Metal, the GPU performance state is a very important factor. It’s managed by the operating system, which will lower or raise the state depending on device thermals, system settings, GPU utilization, and other parameters.

These state changes can affect profiling results you are seeing.

This year, we are introducing new ways for you to profile your app with more consistent results. We have added ways for you to see and change the GPU performance state across our whole suite of Metal tools, starting with Instruments and Metal system trace for live performance recordings, continuing with Metal Debugger, for profiling GPU traces, and finally, Device conditions in Xcode, for general use cases. First, let’s talk about Instruments. This year, we’ve added a track for GPU performance state to the Metal system trace. Use it in conjunction with the other tracks to correlate your app’s performance with the device’s performance state. Keep in mind, though, that being able to see the performance state is just part of the equation. In order to get profiling results that are consistent and reproducible, you also need a way to set a GPU performance state on a device.

New this year is the ability to induce a specific GPU performance state when you are recording a trace in Instruments. Simply go to Recording Options, and choose a performance state before the recording starts. After that, you can record the performance trace as usual. Instruments will induce the state you chose for the duration of the trace, if the device can sustain it. Sometimes, you might need to check if an existing Instruments trace had a GPU performance state induced during recording. You can find this information in the “Recording Settings” section in the information popover.

Now you know how to view and induce the GPU performance state from Instruments. The second way to leverage a consistent GPU performance state is by using Metal Debugger. By default, when you capture a GPU trace of your app, Xcode profiles the trace for you, using the same performance state the device was in at the time of the capture. That state may have fluctuated, depending on the factors we mentioned previously. If, instead, you would like to select a certain performance state yourself, use the Stopwatch button in the debug bar. After you make a selection, Metal Debugger profiles your GPU trace again. When it’s done, the button is highlighted to show that a consistent performance state was achieved. Also, the “Performance” section on the summary page now shows the new performance data at a glance, as well as the selected performance state. These two approaches are tied to the suite of Metal tools. But sometimes, you might want to induce a consistent performance state outside of profiling workflows. The third way to set a GPU performance state is through Device Conditions.

If you want to test how your app performs under different GPU performance states, this is the option for you. In Xcode 13, we have added the GPU performance state device condition. It forces the operating system to use the specified state on a device, as long as the device can sustain it and stays connected to Xcode.

You can add this condition from Xcode, if you go to Window, Devices and Simulators, choose your device there, then scroll to the “Device Conditions” section, and add a “GPU Performance State” condition with the desired level. Press Start when you want to apply the GPU performance state change on the device. Then, when you are done, press Stop.

These new ways to see and change GPU performance state right from our tools should help you with profiling and testing your apps. And I think you are going to love our latest additions and improvements to profiling workflows, and I hope they will help you make your apps even better. Now, let’s talk about some other improvements we are bringing to Metal Debugger this year. First, I’ll tell you about improvements to shader validation. Then, I’ll show you precise capture controls. And after that, I’ll give you a look at the new pipeline state workflows. Finally, I want to introduce two new features related to shader debugging and profiling, separate debug information and selective shader debugging.

Last year, in Xcode 12, we introduced shader validation, which helps you diagnose runtime errors on the GPU, such as out-of-bounds access.

Remember that if shader validation is enabled, and an encoder raises a validation error, you will get a runtime issue in the issue navigator, showing both the CPU and GPU backtraces for the call that faulted.

We already have a session that covers this in greater detail, so to learn more about using shader validation, check out last year’s talk, “Debugging GPU-side errors in Metal.” This year, we are extending shader validation to support more use cases, making it available when you are using indirect command buffers, dynamic libraries, and function pointers and tables. This should allow you to use shader validation more extensively throughout your app during development.

Next, I want to show you our new precise capture controls. But first, take a look at the Capture button, which now looks like the Metal logo. It’s located on the debug bar, at the bottom of the Xcode window. When you click it, a new menu appears that lets you choose a scope for your capture. The default is to capture one frame, but you can specify how many frames you want to capture, up to five. You can also choose to capture a number of command buffers that share the same parent device or command queue, or that present to a certain Metal layer, and even custom scopes that you define in your app’s code using the MTLCaptureScope API. These new controls give you a lot of power, out of the box, to decide how and when your Metal calls are captured.

Next, let’s talk about Metal libraries and pipeline states. These are the essential building blocks of your Metal app. And in Xcode 13, we’ve made it easier than ever to examine all the pipeline states and libraries your app is using. Now, let’s see how it looks in practice.

Here, I’ve captured a GPU trace from the ModernRenderer sample app. I wanted to see how the GBuffer pipeline state works, so I selected this draw call. If I look in bound resources, I can now see the pipeline state that was used. Opening it takes me to the Pipeline State Viewer. From here, I can examine the functions and see other properties the pipeline state was created with. From the viewer, I can also either check out the performance data associated with the state, or go to Memory Viewer and reveal the state there. In Xcode 13, Memory Viewer shows how much memory the pipeline states are taking up in your app. These are just some of the additions that make it easier to inspect pipeline states across the Metal Debugger when you’re looking at GPU traces of your app.

Next, let’s talk about shader debugging and profiling in Metal Debugger. Currently, if you want to use these features, you have two choices. The first option is compiling your libraries from source code while the app is running.

A second, better option is building Metallib files offline with sources embedded, and then loading them at runtime. But App Store rules don’t allow you to publish your apps with these debug Metallibs. All of that means that if you compile your libraries offline and you want to be able to debug your shaders, you have to compile them twice: once with sources embedded, for use during development, and once without sources, for distribution. This year, we are changing that. You can now generate a separate file with sources and other debugging information while compiling a Metallib. These files have a Metallibsym extension, and they allow you to debug and profile shaders without embedding additional information in the libraries themselves. The most important benefit of keeping them separate is that you no longer need two versions of the same Metallib. Another benefit is that with these Metallibsym files, you can debug shaders even in release versions of your app, without compromising your shader sources.

I’ll show you an example of how to compile a shader source file into a Metallib with Metallibsym file alongside it.

I’ll start with an xcrun terminal command that compiles a Metallib as normal. To generate a Metallibsym file, I simply add the “-frecord-sources” flag with the “flat” option (-frecord-sources=flat) and run the compiler. Now, when I try to debug a shader that was compiled with a separate debug information file, I’m prompted to import it. Clicking Import Sources opens a dialog that lists all the libraries and whether their source files have been imported.

From here, I can import any Metallibsym files, and once imported, the libraries and their sources will be matched automatically.

When I’m done importing, I can close the dialog, and now I can see the sources for the shader and debug it.

There is one last debugging improvement I want to show you. It’s called “selective shader debugging.” If your app uses large shaders, you might have noticed that shader debugging can take a while to start. To help in such cases, this year we are bringing selective shader debugging, which helps you narrow down the debugging scope so you can debug your shaders more quickly. Let’s see it in action with one such large shader.

I would like to debug this GPU ASTCDecoder. I know that if I tried to debug the whole kernel, the Shader Debugger would take a long time to start. I don’t want to wait that long, so instead, I can narrow down the debugging scope to just one function, decodeIntegerSequence. To do so, I right-click it and select Debug Functions. This opens the “Functions to Debug” menu, with the function scope already selected. Now, the debugger starts almost instantly.

Selective shader debugging is a great way to pinpoint bugs in huge shaders quickly. These are all the Metal tools improvements I wanted to show you today. And now, Amanda will tell you about the advances we’ve made in texture compression. Amanda?

Thanks, Egor. I’m going to walk you through the updates we’ve made this year to texture compression tools. Before I dive into the tools, I’m going to briefly discuss the basics of texture compression on Apple platforms.

Texture compression, in this case, is fixed-rate, lossy compression of texture data. It is primarily intended for offline compression of static texture data, such as decals or normal maps. While you can compress dynamic texture data at runtime, that’s not something I’m covering today. Most texture compression works by splitting a texture into blocks and storing each block as a pair of endpoint colors plus a per-pixel index. The endpoints, together with colors interpolated between them, define a localized palette, and each pixel’s index selects a color from that palette. Each format has different strengths that suit different kinds of texture data.

Starting with A12 devices, Apple GPUs also support lossless framebuffer compression, which is great for optimizing bandwidth. Check out last year’s session “Optimize Metal apps and games with GPU counters” to learn more about measuring the memory bandwidth the GPU is using for your app. Another option is to apply lossless compression to texture files on top of the GPU texture compression I’m covering in this presentation. This can give you additional reductions in the size of your app download.

Now that I’ve defined texture compression for this talk, I’ll talk about the benefits it can bring to your app. Texture compression is an important step in the development of your apps. In general, textures make up most of the memory footprint of games. Using texture compression allows you to load more textures into memory, and to use more detailed textures to create more visually compelling games. Compression may also allow you to reduce the size and memory footprint of your app.

Now that I’ve covered the basics, I’ll discuss the current state of texture compression tools on Apple platforms. The existing TextureTool in the iOS SDK has a relatively simple pipeline: it reads the input image, generates mipmaps if desired, compresses the texture block by block, then writes the results to a new output file. But as graphics algorithms increase in complexity, textures need more advanced processing. At the core of this processing is performing operations in the correct color space, while minimizing rounding error from conversions between numeric precisions. With this in mind, we’ve designed a new compression tool called TextureConverter to handle the necessary increase in texture processing sophistication, and to give you access to a host of new options. Let’s take a closer look at how we’ve revamped the texture processing pipeline on Apple platforms.
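
To make the block scheme described above concrete, here is a minimal C++ sketch of the palette-and-index idea for a single block. It assumes the four-color layout that BC1 uses (two endpoints plus two colors interpolated at one third and two thirds); `BuildPalette` and `NearestIndex` are hypothetical names, and a real encoder also searches for good endpoints and quantizes them, which is not shown.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

using Color = std::array<float, 3>;

// Build a 4-entry palette from two endpoint colors: the endpoints themselves
// plus two colors interpolated at 1/3 and 2/3 between them.
std::array<Color, 4> BuildPalette(const Color& e0, const Color& e1) {
    std::array<Color, 4> p{};
    for (size_t c = 0; c < 3; ++c) {
        p[0][c] = e0[c];
        p[1][c] = e1[c];
        p[2][c] = (2.0f * e0[c] + e1[c]) / 3.0f;
        p[3][c] = (e0[c] + 2.0f * e1[c]) / 3.0f;
    }
    return p;
}

// Per-pixel index: the palette entry with the smallest squared RGB distance.
uint8_t NearestIndex(const std::array<Color, 4>& palette, const Color& px) {
    uint8_t best = 0;
    float bestDist = 1e30f;
    for (uint8_t i = 0; i < 4; ++i) {
        float d = 0.0f;
        for (size_t c = 0; c < 3; ++c) {
            float diff = palette[i][c] - px[c];
            d += diff * diff;
        }
        if (d < bestDist) { bestDist = d; best = i; }
    }
    return best;
}
```

Only the two endpoints and a 2-bit index per pixel need to be stored, which is where the fixed compression rate comes from.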

The texture processing pipeline has been rebuilt from the ground up to give you access to a fully featured texture processing pipeline with TextureConverter. TextureConverter leverages a set of industry-recognized compressors to support a wide range of compression formats, and gives you the option to trade off between compression speed and image quality. You can specify which compressor to use, or allow TextureConverter to select one based on the compression format, quality level, and other options. Each stage is now fully configurable by you, and texture processing is gamma-aware. To support integration into all your content pipelines, TextureConverter is available for both macOS and Windows, and is optimized for use with Apple Silicon.

Let’s step through each stage of the expanded pipeline, starting with gamma. Gamma correction is a nonlinear operation used to encode and decode luminance in images. Textures can be encoded in many gamma spaces, and the best choice depends on the type of data the texture represents. Most visual data, such as decals or light maps, does best when encoded in a nonlinear space like sRGB; this choice gives you more accuracy in the dark areas, where it’s needed. Non-visual data, like normal maps, should be encoded in linear space. Compression should be performed in your target color space, specified with the “gamma_in” and “gamma_out” options. You can either pass a float gamma value, for example for a linear gamma space, or use the string “sRGB” to specify that color space. You also have the flexibility to use these options to convert to a different target space. Other operations, such as mipmap generation, should be performed in linear space. I’ll walk through the linear space processing stages now.
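
As a sketch of the gamma conversion that happens around the linear-space stages, here are the standard piecewise sRGB transfer functions in C++. This is not TextureConverter’s internal code, and the helper names are hypothetical; `AverageInLinear` illustrates why operations such as mipmap filtering belong in linear space.

```cpp
#include <cmath>

// sRGB-encoded value in [0, 1] -> linear light (piecewise sRGB transfer function).
float SRGBToLinear(float c) {
    return (c <= 0.04045f) ? c / 12.92f
                           : std::pow((c + 0.055f) / 1.055f, 2.4f);
}

// Linear value in [0, 1] -> sRGB encoding (inverse of the function above).
float LinearToSRGB(float l) {
    return (l <= 0.0031308f) ? l * 12.92f
                             : 1.055f * std::pow(l, 1.0f / 2.4f) - 0.055f;
}

// Hypothetical helper: average two sRGB-encoded values the way a mip filter
// should, by decoding to linear, averaging, and re-encoding.
float AverageInLinear(float a, float b) {
    return LinearToSRGB(0.5f * (SRGBToLinear(a) + SRGBToLinear(b)));
}
```

Averaging black and white in linear space gives an sRGB value of roughly 0.735, rather than the 0.5 you would get by averaging the encoded values directly; filtering the encoded values would make smaller mip levels too dark.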

Now that the input has been converted to linear gamma space, the linear space operations are performed before the texture is converted to the specified target gamma space. The three stages are physical transforms, mipmap generation, and alpha handling, and some of these have substages.

I’ll start with physical transforms. By defining the maximum size along any axis, you can downscale your image as needed for your top-level mipmap. In this stage, you also have control over the resize filter and the resize rounding mode. The resize filter options use different algorithms to help you reduce blurriness in your mipmaps as they get smaller. The resize rounding mode is used in conjunction with max_extent when resizing your image: if max_extent is exceeded, the source image is resized while maintaining the original image’s aspect ratio, and the specified rounding mode is used when finding the target dimensions. If you’re unsure which resize filter or rounding mode to use, we’ve picked defaults that work well in most cases. The flip options in this stage let you flip the texture along the X, Y, and Z axes.

After transforms comes mipmap generation, which is used in the majority of common texture processing situations. Mipmaps are a precalculated sequence of images that decrease in resolution over the sequence, used to increase rendering speed and reduce aliasing. Each level is half the width and height of the previous level (rounded down, with a minimum of one texel).
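
The resize and mip-chain arithmetic described above can be sketched as follows. The rounding-mode names (`Down`, `Up`, `Nearest`) and the helpers are hypothetical stand-ins, not TextureConverter’s actual option values; the sketch only shows how a max_extent limit can preserve the aspect ratio, and how many levels a full mip chain has when each level halves the previous one.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Hypothetical rounding modes; TextureConverter's real option names may differ.
enum class RoundMode { Down, Up, Nearest };

struct Extent { uint32_t width; uint32_t height; };

// Round a scaled dimension according to the mode, never going below one texel.
static uint32_t RoundDim(double v, RoundMode mode) {
    double r = (mode == RoundMode::Down) ? std::floor(v)
             : (mode == RoundMode::Up)   ? std::ceil(v)
                                         : std::round(v);
    return std::max<uint32_t>(1u, static_cast<uint32_t>(r));
}

// If the longest side exceeds maxExtent, scale both sides by the same factor
// (preserving the aspect ratio) so the longest side becomes maxExtent.
Extent FitToMaxExtent(Extent src, uint32_t maxExtent, RoundMode mode) {
    uint32_t longest = std::max(src.width, src.height);
    if (longest <= maxExtent) return src;
    // Multiply before dividing so the longest side lands exactly on maxExtent.
    double w = static_cast<double>(src.width) * maxExtent / longest;
    double h = static_cast<double>(src.height) * maxExtent / longest;
    return { RoundDim(w, mode), RoundDim(h, mode) };
}

// Levels in a full mip chain: halve the longest side (rounding down)
// until it reaches one texel, counting the top level as well.
uint32_t FullMipCount(Extent e) {
    uint32_t levels = 1;
    for (uint32_t longest = std::max(e.width, e.height); longest > 1; longest /= 2) {
        ++levels;
    }
    return levels;
}
```

For example, a 3000-by-2000 image limited to 1024 becomes 1024-by-682 when rounding down and 1024-by-683 when rounding up, and a 1024-by-512 texture has 11 mip levels.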

When customizing mipmap generation, specify the maximum number you want, and which mip filter to use. TextureConverter defaults to Kaiser filtering, with options for “box” and “triangle” filtering.
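
For the simplest of these mip filters, the box filter, one downsampling step looks roughly like this in C++. It is a sketch over a single linear-space channel with hypothetical type and function names; the default Kaiser filter uses a wider, weighted kernel and is not reproduced here.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// A single-channel image stored row-major, with values already in linear space.
struct Image {
    size_t width = 0, height = 0;
    std::vector<float> pixels;
    float at(size_t x, size_t y) const { return pixels[y * width + x]; }
};

// Produce the next mip level with a 2x2 box filter: each destination texel is the
// average of the four source texels it covers. When a source dimension is 1, the
// missing neighbor is clamped to the edge; for odd sizes above 1, this simple
// version drops the last row or column.
Image BoxDownsample(const Image& src) {
    Image dst;
    dst.width = std::max<size_t>(1, src.width / 2);
    dst.height = std::max<size_t>(1, src.height / 2);
    dst.pixels.resize(dst.width * dst.height);
    for (size_t y = 0; y < dst.height; ++y) {
        for (size_t x = 0; x < dst.width; ++x) {
            size_t x0 = std::min(2 * x, src.width - 1), x1 = std::min(2 * x + 1, src.width - 1);
            size_t y0 = std::min(2 * y, src.height - 1), y1 = std::min(2 * y + 1, src.height - 1);
            dst.pixels[y * dst.width + x] =
                0.25f * (src.at(x0, y0) + src.at(x1, y0) + src.at(x0, y1) + src.at(x1, y1));
        }
    }
    return dst;
}
```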

The last stage in linear space processing is alpha handling.

If alpha to coverage is enabled, it is applied first, using the specified alpha reference value. Alpha to coverage replaces alpha blending with a coverage mask. When used with multisample antialiasing on semitransparent textures, it gives you order-independent transparency, and it’s a particularly useful tool for rendering dense greenery in your game. Afterwards, you can choose to discard, preserve, or premultiply the alpha channel. With premultiplied alpha, partly transparent pixels of your image are premultiplied with a matting color.
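
Premultiplying alpha usually means scaling each color channel by the texel’s alpha. The C++ sketch below shows that common definition, which is equivalent to a black matte, with hypothetical helper names; it does not model how TextureConverter applies its matting color.

```cpp
#include <array>

using RGBA = std::array<float, 4>;

// Premultiply: scale the color channels by alpha, so a fully transparent
// texel carries no color.
RGBA Premultiply(const RGBA& c) {
    return { c[0] * c[3], c[1] * c[3], c[2] * c[3], c[3] };
}

// Undo premultiplication. Where alpha is zero the original color is lost,
// so this returns transparent black.
RGBA Unpremultiply(const RGBA& c) {
    if (c[3] == 0.0f) return { 0.0f, 0.0f, 0.0f, 0.0f };
    return { c[0] / c[3], c[1] / c[3], c[2] / c[3], c[3] };
}
```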

At the end of the linear space processing stages, we’re ready to move back to the target gamma space and compress the processed mip levels.

The last step in texture processing is the compression. The compression stage can be divided into two substages, channel mapping and encoding. Channel mapping is a technique to optimize general purpose texture compression algorithms for particular data types.

Specifying a channel mapping in TextureConverter is optional. If you do want to use it, TextureConverter currently supports two modes of channel mapping: RGBM encoding and normal map encoding. I’m going to cover both of these in more depth, starting with RGBM encoding.

RGBM encoding is a technique to compress HDR data in LDR formats by storing a multiplier in the alpha channel and scaling the RGB channels by this multiplier. Here’s an example HDR image of a classroom, and here’s the same classroom image again with the multiplier stored in the alpha channel visible in grayscale.

I’ll show you how to calculate the multiplier when encoding to RGBM. EncodeRGBM is a simplified pseudocode function that illustrates the mechanics of encoding to RGBM. It uses RGBM_Range, a brand-new parameter that sets the range of RGBM and defaults to 6.0. To calculate the RGBM alpha value, the multiplier, I first determine the maximum of the input texture’s red, green, and blue channels with Metal’s max3 function, and then divide this maximum by RGBM_Range. To calculate the encoded red, green, and blue values, the multiplier is first multiplied back by RGBM_Range, undoing the scaling that was used to store it in the alpha channel. Then, the input color is divided by this final multiplier value.

To decode RGBM in your shader, you multiply the sample’s RGB by alpha and the same fixed range factor used in the encoding function. In the DecodeRGBM code snippet, the scaling factor is recalculated by multiplying the RGBM alpha channel, where the multiplier is stored, by RGBM_Range. The original texture’s RGB is then calculated by multiplying the RGBM sample’s RGB by that factor. Now that I’ve introduced you to RGBM encoding, I’ll move on to normal map encoding.
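
The EncodeRGBM and DecodeRGBM steps described above translate almost directly into C++, used here as a stand-in for the C++-based Metal shading language. This is a sketch, not the session’s code: `std::max` over an initializer list plays the role of Metal’s `max3`, and the clamp of the multiplier to one and the small guard against dividing by zero are additions for robustness. Production encoders often also round the multiplier up to the next 8-bit step before dividing, which is omitted here.

```cpp
#include <algorithm>
#include <array>

using float3 = std::array<float, 3>;
using float4 = std::array<float, 4>;

// TextureConverter's RGBM range defaults to 6.0.
constexpr float kRGBMRange = 6.0f;

float4 EncodeRGBM(const float3& rgb, float range = kRGBMRange) {
    // Multiplier: the largest channel, scaled into [0, 1] by the range.
    float maxRGB = std::max({ rgb[0], rgb[1], rgb[2] });
    float m = std::min(std::max(maxRGB / range, 0.0f), 1.0f);
    // Undo the range scaling to get the factor the color is divided by.
    float scale = std::max(m * range, 1e-6f);
    return { rgb[0] / scale, rgb[1] / scale, rgb[2] / scale, m };
}

float3 DecodeRGBM(const float4& rgbm, float range = kRGBMRange) {
    // Rebuild the factor from the stored multiplier and the same fixed range.
    float scale = rgbm[3] * range;
    return { rgbm[0] * scale, rgbm[1] * scale, rgbm[2] * scale };
}
```

For example, the HDR color (3.0, 1.5, 0.0) encodes to (1.0, 0.5, 0.0) with a multiplier of 0.5, and decodes back to the original values.
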

In most cases, when referring to normal maps, we’re specifically referring to tangent-space normal maps. In tangent space, each normal is a unit vector pointing away from the surface, so its Z component is never negative. That means it can be represented with two axes, and the third axis can be trivially derived at runtime. This allows us to remap these two channels to best take advantage of texture compression algorithms, and to achieve superior compression quality compared to compressing XYZ as RGB. How you remap channels varies depending on the compression format.

I’ll walk through an example of encoding a normal with ASTC, using this chart as a guide. When encoding with ASTC, the red, green, and blue channels are set to the X component, and the alpha channel is set to the Y component. The colors in the chart show which channel the X and Y components will be read back from when sampling the encoded normal. TextureConverter takes care of this remapping for you: if you pass the normal map parameter, it automatically remaps to your chosen format.

When sampling normal maps in your shader, it’s important to know the channel mapping. The X component is read from the red or alpha channel, and the Y component from the alpha or green channel, depending on the compression format. Coming back to the ASTC example, the X component is sampled from the red channel and the Y component from the alpha channel, reversing the mapping used at encode time. If you’re encoding to multiple formats to achieve the best possible quality on any device, you’ll need to handle this mapping at runtime. I’ll walk through an example of runtime normal sampling using Metal texture swizzles.
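
Here is a minimal sketch of the channel packing for two formats. The ASTC layout (X in red, green, and blue; Y in alpha) follows the chart described above, while the BC5 layout (X in red, Y in green) is the common two-channel convention and is an assumption here. It assumes a tangent-space normal whose Z is rebuilt at runtime, and `PackNormalXY` is a hypothetical helper; TextureConverter performs this remapping for you when you pass its normal map option.

```cpp
#include <array>

using float4 = std::array<float, 4>;

// BC5's layout here is the common R = X, G = Y convention (an assumption).
enum class NormalLayout { ASTC, BC5 };

// Map a component from [-1, 1] to the [0, 1] range stored in a unorm texture.
inline float Bias(float v) { return v * 0.5f + 0.5f; }

// Hypothetical helper: place the X and Y components of a tangent-space normal
// into texture channels before compression. Z is dropped.
float4 PackNormalXY(float x, float y, NormalLayout layout) {
    float bx = Bias(x), by = Bias(y);
    switch (layout) {
        case NormalLayout::ASTC: return { bx, bx, bx, by };      // RGB = X, A = Y
        case NormalLayout::BC5:  return { bx, by, 0.0f, 1.0f };  // R = X, G = Y
    }
    return { bx, by, 0.0f, 1.0f };
}
```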

Encoding to multiple formats could lead to needing multiple shader variants if the different formats used different channel mappings. To avoid this, Metal allows you to apply custom swizzles to your texture. Swizzles allow you to remap X and Y components to red and green channels so your shaders can be compression format neutral. Here’s an example of remapping channels to red and green for a normal map compressed with ASTC, as we saw in the diagram previously. After the texture descriptor is initialized, the red channel is set to MTLTextureSwizzleRed, and the green channel is set to MTLTextureSwizzleAlpha.

Since this is a normal map, only two channels are needed for sampling. Because the red and green channels now carry the X and Y components that were encoded in the red and alpha channels, the blue and alpha channels are set to zero. Once that’s done, the last line assembles the final swizzle from the remapped channels using MTLTextureSwizzleChannelsMake. Once the X and Y channels are sampled in your shader, you can reconstruct the Z component. I’ll walk you through the ReconstructNormal function to show you how.
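
To see what this swizzle does to a sample, here is a CPU-side C++ model of it. The `Swizzle` enum mirrors the cases of `MTLTextureSwizzle` (zero, one, red, green, blue, alpha), but these types and functions are hypothetical stand-ins; in a real app the same mapping is built with `MTLTextureSwizzleChannelsMake` and applied by the GPU when the texture is sampled.

```cpp
#include <array>

using float4 = std::array<float, 4>;

// CPU-side stand-in for MTLTextureSwizzle, mirroring its cases.
enum class Swizzle { Zero, One, Red, Green, Blue, Alpha };

struct SwizzleChannels { Swizzle red, green, blue, alpha; };

// Return the value an output channel receives for a given swizzle source.
static float Pick(const float4& texel, Swizzle s) {
    switch (s) {
        case Swizzle::Zero:  return 0.0f;
        case Swizzle::One:   return 1.0f;
        case Swizzle::Red:   return texel[0];
        case Swizzle::Green: return texel[1];
        case Swizzle::Blue:  return texel[2];
        case Swizzle::Alpha: return texel[3];
    }
    return 0.0f;
}

// Each output channel reads the source channel named in the corresponding field.
float4 ApplySwizzle(const float4& texel, const SwizzleChannels& sw) {
    return { Pick(texel, sw.red), Pick(texel, sw.green),
             Pick(texel, sw.blue), Pick(texel, sw.alpha) };
}

// The ASTC normal-map mapping: X from red, Y from alpha, blue and alpha zeroed.
constexpr SwizzleChannels kASTCNormalSwizzle{ Swizzle::Red, Swizzle::Alpha,
                                              Swizzle::Zero, Swizzle::Zero };
```

With this mapping, a shader can always read X from `.r` and Y from `.g`, regardless of which format the texture was compressed with.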

First, the code rebiases the X and Y components from the stored zero-to-one range into the range of negative one to one used for normals. Next, it takes the dot product of the XY vector with itself, which is X squared plus Y squared, and subtracts it from one; for a unit vector, that gives Z squared. Because compression error can push X squared plus Y squared slightly above one, the saturate function then clamps this result to the range of zero to one, so the value is never negative. The last step to calculate the Z component is to take the square root of the output of the saturate function.
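
The ReconstructNormal steps can be sketched in C++ as follows, with `Saturate` standing in for the Metal shading language’s `saturate` and `x * x + y * y` standing in for `dot(xy, xy)`. The function name comes from the walkthrough; the body is an illustrative reconstruction of the steps described, not the session’s exact code.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

using float3 = std::array<float, 3>;

// Equivalent of the shader's saturate(): clamp to [0, 1].
inline float Saturate(float v) { return std::min(std::max(v, 0.0f), 1.0f); }

// Rebuild a tangent-space normal from the two sampled channels (each in [0, 1]).
float3 ReconstructNormal(float sampledX, float sampledY) {
    // Rebias from the stored [0, 1] range back to [-1, 1].
    float x = sampledX * 2.0f - 1.0f;
    float y = sampledY * 2.0f - 1.0f;
    // For a unit vector, z^2 = 1 - dot(xy, xy). Compression error can push
    // x^2 + y^2 above 1, so clamp before taking the square root.
    float z = std::sqrt(Saturate(1.0f - (x * x + y * y)));
    return { x, y, z };
}
```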

Now that I’ve explained the RGBM and normal map encoding options available for channel mapping, I’ll finish the discussion of the texture compression pipeline with the final compression substage, encoding. All TextureConverter command lines require you to specify the target compression format with the compression_format argument. You can also specify which compressor to use, or let TextureConverter make the selection based on the compression format and other options you’ve selected. You can also select one of four compression quality levels. Note that there’s a tradeoff between compression speed and image quality: you may wish to select a lower compression quality while iterating on your game, but use the highest quality for released builds. Now, I’ll cover the texture compression formats available for you to select.

Here’s an overview of the texture compression format families supported on Apple platforms. iOS and Apple Silicon platforms support the ASTC and PVRTC families, and all macOS platforms support the BCn family. I’ll go over each of these format families in more detail, and give you some guidelines to help you choose the best ones for your needs. I’ll start with BCn formats. BCn is a set of seven formats that all operate on four-by-four blocks of pixels and use either four or eight bits per pixel. Each format is suited to a different type of data: BC1 and BC3 are commonly used for RGB and RGBA compression, BC6H is ideal for HDR images, and BC5, with its two independent channels, is ideal for normal map encoding. Next is ASTC, a family of RGBA formats in LDR, sRGB, and HDR color spaces. The ASTC family allows for the highest quality at all sizes, and is therefore generally recommended over PVRTC. Each ASTC format offers a different tradeoff between bits per pixel and quality.
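
The BCn block sizes determine storage directly: every format uses four-by-four blocks, with BC1 and BC4 at 8 bytes per block (4 bits per pixel) and the others at 16 bytes per block (8 bits per pixel). A small C++ sketch with hypothetical helper names:

```cpp
#include <cstdint>

enum class BCFormat { BC1, BC2, BC3, BC4, BC5, BC6H, BC7 };

// BC1 and BC4 store 8 bytes per 4x4 block; all other BCn formats store 16.
constexpr uint32_t BytesPerBlock(BCFormat f) {
    return (f == BCFormat::BC1 || f == BCFormat::BC4) ? 8u : 16u;
}

// Size of one mip level, with dimensions rounded up to whole 4x4 blocks.
uint64_t BCLevelSize(uint32_t width, uint32_t height, BCFormat f) {
    uint64_t blocksX = (width + 3) / 4;
    uint64_t blocksY = (height + 3) / 4;
    return blocksX * blocksY * BytesPerBlock(f);
}
```

For example, a 1024-by-1024 texture takes 512 KiB in BC1 and 1 MiB in BC7, compared with 4 MiB as uncompressed 8-bit RGBA.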

With ASTC, every block occupies the same number of bytes (16) regardless of the format, while the number of texels it covers varies. This gives you a continuum from the highest quality but lowest compression ratio at the four-by-four block size to the lowest quality but highest compression ratio at the 12-by-12 block size. The LDR, sRGB, and HDR variants describe the color range of compressed ASTC textures. LDR and sRGB both cover the zero-to-one range, in linear or sRGB space respectively, while the HDR variant is for data outside the zero-to-one range.
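The fixed 16-byte block makes the quality-versus-size continuum easy to quantify. This minimal sketch uses hypothetical helper names; the precondition only checks the 4-to-12 range of 2D footprints and doesn’t validate that a given width and height pair is one of the footprints ASTC actually defines.

```cpp
#include <cassert>
#include <cstdint>

// Every ASTC block is 16 bytes (128 bits); only its pixel footprint changes.
constexpr uint32_t kASTCBlockBytes = 16;

// Bits per pixel for a 2D block footprint: 4x4 gives 8.0, 12x12 about 0.89.
double ASTCBitsPerPixel(uint32_t blockW, uint32_t blockH) {
    assert(blockW >= 4 && blockW <= 12 && blockH >= 4 && blockH <= 12);
    return (kASTCBlockBytes * 8.0) / (blockW * blockH);
}

// Compressed size of one mip level; partial edge blocks still cost a full block.
uint64_t ASTCMipSize(uint32_t width, uint32_t height,
                     uint32_t blockW, uint32_t blockH) {
    assert(width > 0 && height > 0 && blockW > 0 && blockH > 0);
    uint64_t blocksX = (uint64_t(width) + blockW - 1) / blockW;
    uint64_t blocksY = (uint64_t(height) + blockH - 1) / blockH;
    return blocksX * blocksY * kASTCBlockBytes;
}
```

A 1024-by-1024 texture shrinks from 1 MiB at 4x4 to roughly 116 KiB at 12x12, the same texel count stored at about one ninth of the size.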

Lastly, PVRTC formats are available in RGB and RGBA, in 2-bit or 4-bit mode. A PVRTC data block always occupies eight bytes, so in 2-bit mode there’s one block for every eight-by-four pixels, and in 4-bit mode there’s one block for every four-by-four pixels.
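The block arithmetic above works out to exactly 2 or 4 bits per pixel. Here is a minimal sketch with a hypothetical `PVRTCMipSize` helper; it deliberately ignores any minimum-size padding the format may apply to very small mip levels.

```cpp
#include <cassert>
#include <cstdint>

// PVRTC blocks are always 8 bytes (64 bits).
constexpr uint32_t kPVRTCBlockBytes = 8;

// Compressed size of one PVRTC mip level.
// 4-bit mode: one block per 4x4 pixels (64 bits / 16 pixels = 4 bpp).
// 2-bit mode: one block per 8x4 pixels (64 bits / 32 pixels = 2 bpp).
uint64_t PVRTCMipSize(uint32_t width, uint32_t height, bool twoBitMode) {
    assert(width > 0 && height > 0);
    const uint32_t blockW = twoBitMode ? 8 : 4;
    const uint32_t blockH = 4;
    uint64_t blocksX = (uint64_t(width) + blockW - 1) / blockW;
    uint64_t blocksY = (uint64_t(height) + blockH - 1) / blockH;
    return blocksX * blocksY * kPVRTCBlockBytes;
}
```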

Now that I’ve introduced the supported format families, I’m going to give some recommendations for choosing formats for your app.

On iOS devices, you should use ASTC compression by default, adding PVRTC compression and per-device thinning only if you support A7 GPUs or earlier. If you have any HDR textures, you can take advantage of ASTC HDR on A13 and later GPUs. On macOS, BCn is available on every Mac. On Apple Silicon Macs, you also have the option of using ASTC, and you should consider it if you’re also targeting iOS devices. While PVRTC is available on Apple Silicon, we don’t recommend it; it’s intended only for legacy iOS support. Because each compression format family contains many formats to choose from, the guideline for selecting the most effective texture compression formats for your app is to select per texture and per target when possible. Unless all of your textures contain RGB or RGBA color data, choose the compression format based on the type of data you’re compressing; for normal data, for example, choose a format that compresses two independent channels. When compressing to ASTC, you may want to use only a subset of the formats: consider bucketing textures into those that require the highest quality and those that look acceptable at higher compression rates.
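The per-texture, per-target guideline can be captured in a small lookup used by an asset build script. This is a hypothetical sketch, not an Apple API: `Target`, `Content`, and `SuggestFormat` are illustrative names, and two choices are assumptions rather than statements from the talk: RGBM-encoded ASTC LDR as the HDR fallback on A8 to A12, and ASTC HDR support on Apple Silicon Macs.

```cpp
#include <cassert>
#include <string>

// Hypothetical build targets.
enum class Target {
    iOS_A7OrEarlier,  // no ASTC: PVRTC via per-device thinning
    iOS_A8ToA12,      // ASTC LDR and sRGB
    iOS_A13OrLater,   // adds ASTC HDR
    IntelMac,         // BCn only
    AppleSiliconMac   // BCn or ASTC (PVRTC available but not recommended)
};

enum class Content { RGB, RGBA, HDR, NormalMap };

// Suggests a format for one texture on one target.
std::string SuggestFormat(Target t, Content c, bool alsoTargetingIOS = false) {
    const bool useBCn = t == Target::IntelMac ||
                        (t == Target::AppleSiliconMac && !alsoTargetingIOS);
    if (useBCn) {
        switch (c) {
            case Content::RGB:       return "BC1";
            case Content::RGBA:      return "BC3";
            case Content::HDR:       return "BC6H";
            case Content::NormalMap: return "BC5";  // two independent channels
        }
    }
    if (t == Target::iOS_A7OrEarlier) {
        return "PVRTC";  // legacy only; PVRTC has no HDR variant
    }
    // ASTC path: iOS A8 and later, and Apple Silicon Macs sharing iOS assets.
    switch (c) {
        case Content::HDR:
            // Assumption: RGBM in ASTC LDR where ASTC HDR isn't available.
            if (t == Target::iOS_A8ToA12) return "ASTC LDR + RGBM";
            return "ASTC HDR";
        case Content::NormalMap: return "ASTC (X and Y in two channels)";
        default:                 return "ASTC LDR";
    }
}
```

Keeping the mapping in one function makes it easy to add the ASTC quality buckets described above later, for example by returning a block size alongside the format.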

Now, let’s review what we’ve covered.

We’ve completely remade the texture processing pipeline from TextureTool to give you complete control over every stage of the pipeline with our new TextureConverter tool. I’ve walked through each stage of this new pipeline and explored all of the options available for you to use at each stage, and introduced you to the channel mapping and texture compression format families supported on Apple platforms. We want to make it as easy as possible to update your workflows from using TextureTool to TextureConverter, so we’ve added a compatibility mode to help you switch over your command lines. Whether using TextureTool compatibility mode or calling TextureConverter with native options, invoke with xcrun TextureConverter. Here’s an example command line of TextureConverter being called with TextureTool options. TextureConverter will translate the options to native TextureConverter options, do the compression, and then tell you what the new native options are, so that you can update your build scripts easily.

That was an introduction to TextureConverter. Here’s how to get it. TextureConverter ships as part of Xcode 13 and is available starting with seed 1. On Windows, TextureConverter ships as part of the Metal Developer Tools for Windows 2.0 package, available from developer.apple.com. Seed 1 is available now. Be aware that on Windows, TextureConverter can’t compress to PVRTC formats; PVRTC compression is offered only on macOS, for supporting legacy iOS devices. Another important part of the Metal Developer Tools for Windows is the Metal Compiler for Windows. The Metal Compiler for Windows was introduced last year with support for Metal Shading Language 2.3. Updates throughout the year mirrored the updates to the Metal compiler shipped in Xcode. The latest release, version 1.2, includes support for Metal Shading Language on Apple Silicon Macs. Seed 1 of version 2.0 is now available, with support for all of the great new features in Metal Shading Language 2.4.

Here’s a summary of everything we’ve covered today: Egor discussed support for more Metal features, like ray tracing and function pointers. He introduced brand-new profiling workflows, like GPU Timeline and consistent GPU performance state, to help you get the most out of the GPUs across all Apple platforms. And he demonstrated improvements to debugging workflows you’re already familiar with to give you more support for shader validation and precise capture controls. And I introduced you to TextureConverter, a new tool to help you take full advantage of the texture processing pipeline and all of the supported texture compression formats available on Apple platforms. Thanks, and have a great rest of WWDC 2021.


27:51 - RGBM Encoding Pseudocode

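```metal
#include <metal_stdlib>
using namespace metal;

constant float RGBM_Range = 6.0f; // must match the decoder

float4 EncodeRGBM(float3 in) {
    float4 rgbm;
    // Largest channel, normalized by the range and clamped to [0, 1].
    rgbm.a = saturate(max3(in.r, in.g, in.b) / RGBM_Range);
    // Guard against division by zero for black pixels.
    rgbm.rgb = saturate(in / max(rgbm.a * RGBM_Range, 1e-6f));
    return rgbm;
}
```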

28:41 - RGBM Decoding Pseudocode

```metal
float3 DecodeRGBM(float4 sample) {
    const float RGBM_Range = 6.0f;
    float scale = sample.a * RGBM_Range;
    return sample.rgb * scale;
}
```
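A CPU-side C++ port of the RGBM encode and decode pseudocode is handy for checking the round trip in an asset pipeline. The `Color3` and `RGBMColor` types and `kRGBMRange` are hypothetical; the port adds a clamp to [0, 1] and a guard against dividing by zero for black pixels, and it doesn’t model the 8-bit quantization of the stored channels.

```cpp
#include <algorithm>
#include <cassert>

struct Color3 { float r, g, b; };
struct RGBMColor { float r, g, b, m; };

constexpr float kRGBMRange = 6.0f;  // must match RGBM_Range in the shader

inline float Clamp01(float v) { return std::min(std::max(v, 0.0f), 1.0f); }

RGBMColor EncodeRGBM(Color3 c) {
    // Shared scale: largest channel normalized by the range, clamped to [0, 1].
    float m = Clamp01(std::max({c.r, c.g, c.b}) / kRGBMRange);
    // Avoid dividing by zero when the input is black.
    float scale = std::max(m * kRGBMRange, 1e-6f);
    return {Clamp01(c.r / scale), Clamp01(c.g / scale), Clamp01(c.b / scale), m};
}

Color3 DecodeRGBM(RGBMColor e) {
    float scale = e.m * kRGBMRange;
    return {e.r * scale, e.g * scale, e.b * scale};
}
```

Values up to the range round-trip (for example, (3, 1.5, 0) encodes to (1, 0.5, 0) with a multiplier of 0.5), while anything brighter than `kRGBMRange` is clamped to it.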

30:55 - MetalTextureSwizzles

```objc
#import <Metal/Metal.h>

// ASTC normal maps store X in red and Y in alpha; remap so shaders read X and Y from red and green.
MTLTextureDescriptor *descriptor = [[MTLTextureDescriptor alloc] init];
MTLTextureSwizzle r = MTLTextureSwizzleRed;
MTLTextureSwizzle g = MTLTextureSwizzleAlpha;
MTLTextureSwizzle b = MTLTextureSwizzleZero;
MTLTextureSwizzle a = MTLTextureSwizzleZero;
descriptor.swizzle = MTLTextureSwizzleChannelsMake(r, g, b, a);
```

31:55 - ReconstructNormal

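```metal
// Reconstruct the z-axis from a two-channel normal sample in shader code.
float3 ReconstructNormal(float2 sample) {
    float3 normal;
    // Rebias X and Y from [0, 1] to [-1, 1].
    normal.xy = sample.xy * 2.0f - 1.0f;
    // z = sqrt(1 - x^2 - y^2), clamped so compression error can't go negative.
    normal.z = sqrt(saturate(1.0f - dot(normal.xy, normal.xy)));
    return normal;
}
```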
