
What's new in DockKit

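Discover how intelligent tracking in DockKit enables smoother transitions between subjects. We'll cover what intelligent tracking is, how it uses an ML model to select and track subjects, and how you can use it in your app.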

Chapters

- 0:00 - Introduction

- 2:49 - Introduction to Intelligent Tracking

- 4:07 - How it works

- 7:08 - Custom control in your app

- 9:52 - Button controls for DockKit

- 14:03 - New camera modes

- 14:46 - Monitor accessory battery


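Hi, I'm Dhruv Samant, an engineer on the DockKit team. Today I'm excited to share the updates and innovations we've made to DockKit. Last year we introduced DockKit, a groundbreaking framework that takes iPhone content creation and video calling to new heights. I have some good news: the first DockKit stands are now available for purchase at the Apple Store. Let's take a quick look at how to set up and start using a DockKit stand.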

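First, tap to pair your iPhone with the DockKit device.

A pairing card walks you through the pairing process step by step.

Once pairing is complete, simply dock your iPhone.

That's it. Your DockKit device is ready to go.

Now I can launch the iOS Camera app, and the dock automatically keeps me in frame.

I can move around, and DockKit follows me seamlessly.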

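DockKit tracking also works with FaceTime calls. In fact, any app that uses the camera can now track you and keep you in frame. With DockKit devices and our easy-to-use APIs, you can design exceptional, personalized experiences across video capture, video conferencing, education, and healthcare. With these devices, customers can focus on their content instead of worrying about whether they are in frame.

This year we're bringing an exciting update to DockKit: intelligent subject tracking. Intelligent tracking uses machine learning to enhance the DockKit tracking experience.

In this session, we'll explore what intelligent tracking is, how it works, and how to use it in your app. Next, we'll shift our focus to button controls and reveal a new category of DockKit accessories. Then we'll cover the new camera modes that now support DockKit. Finally, we'll show how to easily monitor the battery status of DockKit accessories. Great! Let's dive in.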

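So, what is intelligent subject tracking? Intelligent subject tracking is designed to solve an age-old problem: deciding which subjects to focus on in a video scene. Imagine a scene where some subjects are interacting with each other or with the camera, while others appear in the background. Determining the most relevant individuals to track and focus on is extremely challenging. For example, in this simple scene, as a camera operator you would probably want to focus on the two subjects in front who are interacting with each other and ignore the person behind them. As scenes get more complex, we need more sophisticated methods to make these decisions. This is where intelligent subject tracking comes in.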

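Using advanced algorithms and machine learning, intelligent subject tracking analyzes the scene in real time. It identifies the main subjects, such as faces or objects, and then determines the most relevant people to track based on factors such as body movement, speech, and distance from the camera. The tracking is smooth and seamless, so you can focus on creating content without manual intervention.

Now let's look at the underlying algorithms and framework that make this possible.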

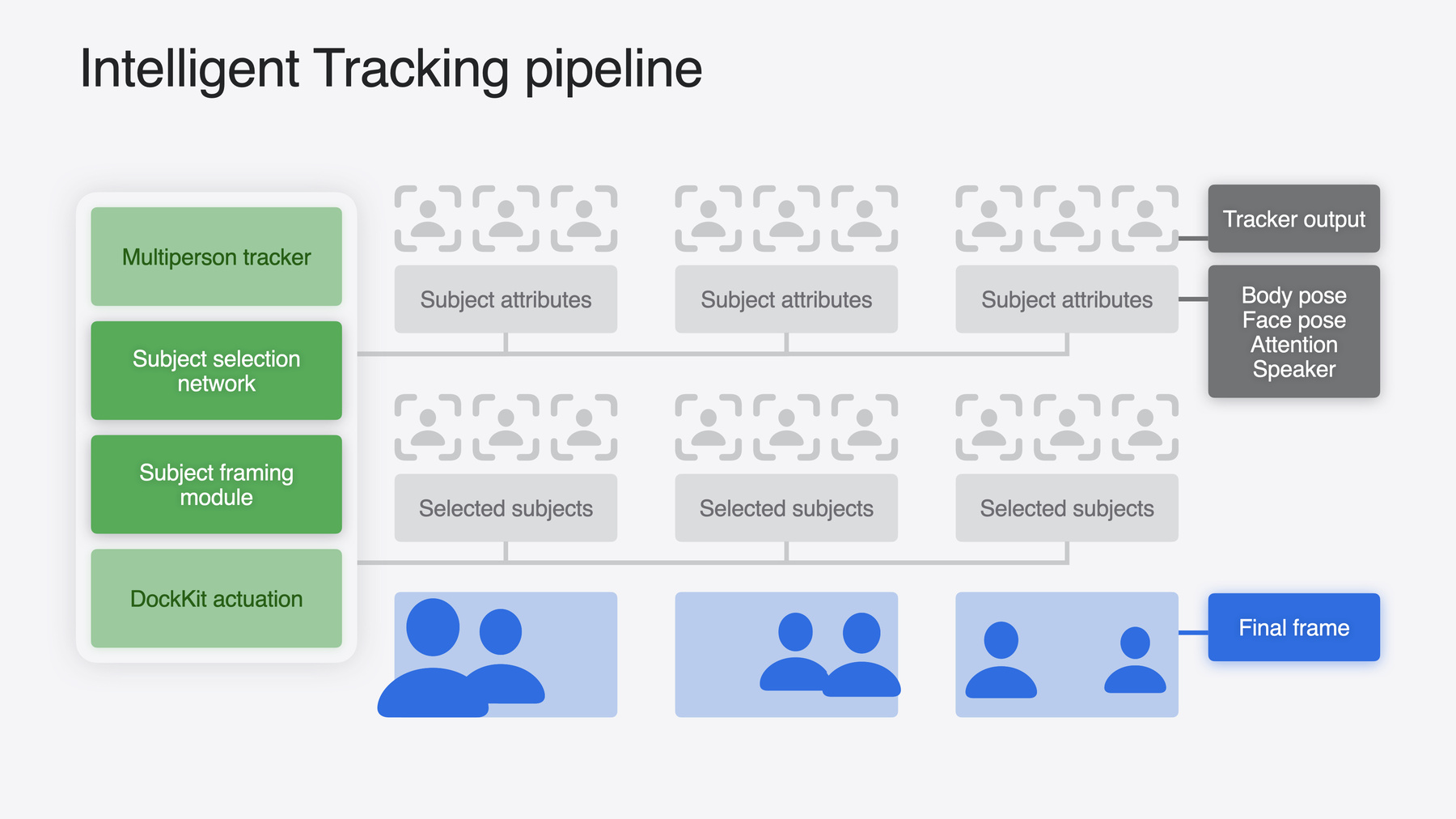

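In iOS 17, the multi-person tracker uses iPhone's image intelligence to estimate the trajectories of multiple subjects in the scene. Building on that, in iOS 18 we developed a new intelligent tracking pipeline. The pipeline takes data from the multi-person tracker and processes it with an advanced machine learning model for subject selection.

This model analyzes attributes such as body pose, face pose, attention, and speaking confidence to determine the most relevant subjects to focus on in the scene.

Next, a subject framing module takes the selected subjects as input and uses advanced algorithms to determine the most visually pleasing framing.

Once the final scene is computed, we use motor position and velocity feedback to produce the final actuator commands that are sent to the DockKit accessory.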
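Apple doesn't describe how its framing module or motor control are implemented, so the following is only a toy illustration of the last two stages, not Apple's algorithm. It assumes normalized image coordinates, frames the selected subjects by taking the union of their rectangles, and turns the framing error into a pan/tilt velocity command with a simple proportional-derivative rule that damps using measured motor velocity. The type `NormRect`, both functions, the gains, and the sign conventions are all hypothetical.

```swift
// Toy sketch only: hypothetical types and gains, not DockKit's internal pipeline.
struct NormRect { var x, y, width, height: Double }  // normalized [0, 1], origin top-left

/// Naive framing target: the smallest rectangle containing every selected subject.
func framingTarget(_ rects: [NormRect]) -> NormRect? {
    guard let first = rects.first else { return nil }
    var minX = first.x, minY = first.y
    var maxX = first.x + first.width, maxY = first.y + first.height
    for r in rects.dropFirst() {
        minX = min(minX, r.x); minY = min(minY, r.y)
        maxX = max(maxX, r.x + r.width); maxY = max(maxY, r.y + r.height)
    }
    return NormRect(x: minX, y: minY, width: maxX - minX, height: maxY - minY)
}

/// PD-style command: push the target's center toward the image center (0.5, 0.5),
/// subtracting a term proportional to the measured motor velocity to damp overshoot.
func velocityCommand(target: NormRect,
                     measuredVelocity: (pan: Double, tilt: Double),
                     kp: Double = 2.0,
                     kd: Double = 0.3) -> (pan: Double, tilt: Double) {
    let errX = (target.x + target.width / 2) - 0.5
    let errY = (target.y + target.height / 2) - 0.5
    return (pan: kp * errX - kd * measuredVelocity.pan,
            tilt: kp * errY - kd * measuredVelocity.tilt)
}
```

The velocity feedback term is what keeps a sketch like this from oscillating when the dock is already moving toward the target; a production controller would also account for motor limits and smoothing.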

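While our intelligent tracking system handles many scenarios well, we also recognize the expertise of human camera operators. That's why we're introducing Apple Watch control for all DockKit devices.

With watch control, users get more precise control over tracking and framing in the iOS Camera app. They can also move the DockKit accessory manually to further refine their shot.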

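Now, rather than just talking about it, let's see it in action!

I have a DockKit stand here along with my iPhone, and our new intelligent tracking pipeline is running.

Since I'm the only person in the frame, the decision to track me is easy.

Now I'd like to invite my friend Steve to join me for this demo. Hi, Steve.

Steve is now also a subject of interest, so the intelligent tracking system selects and tracks both of us. Now I just tap Steve's face on my Apple Watch, and DockKit tracks only Steve.

Steve, could you take a few steps in this direction?

As you can see, DockKit is now tracking only Steve.

Steve is a good friend, but now I want to deselect him and continue the demo. To do that, I just swipe on my watch to move the DockKit accessory manually, then tap my face, and it starts tracking me again.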

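Those are some of the major changes we've made to DockKit system tracking, and you don't need to add any extra code to your app to benefit from them. However, we want to give you even more control, so we're giving your app access to the machine learning signals. This lets you build distinctive, innovative features that your customers will love.

If your app uses DockKit intelligent tracking, you can get a summary of the tracked subjects along with their key attributes.

You can query the tracking summary through an asynchronous sequence of tracking states. A tracking state includes the time the state was captured and a list of tracked subjects. A tracked subject can be a person or an object.

A tracked subject has an identifier, a rect (the face rectangle), and a saliencyRank. If the subject is a person, we also provide speakingConfidence and lookingAtCameraConfidence.

saliencyRank represents our assessment of the most important subjects in the scene. Ranks start at 1 and increase monotonically, and a lower rank means the subject is more important. For example, rank 1 is more salient than rank 2.

speakingConfidence is a likelihood score that the person is speaking: a score of 0 means the person is not speaking, and a score of 1 means the person is speaking. Similarly, lookingAtCameraConfidence is a likelihood score that the person is looking directly at the camera.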
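To make the data model above concrete, here is a minimal sketch that uses hypothetical stand-in types rather than DockKit's real declarations. It assumes the identifier is a `UUID`, omits the face rectangle for brevity, and shows how rank 1 being most salient translates into an ascending sort. `TrackedSubject`, `TrackedPerson`, `TrackedObject`, `bySaliency`, and `mostSalientPerson` are illustrative names only.

```swift
import Foundation

// Hypothetical stand-ins modeled on the attributes named in this session;
// they are not DockKit's actual type declarations. The face rect is omitted.
struct TrackedPerson {
    let identifier: UUID
    let saliencyRank: Int                 // 1 = most salient
    let speakingConfidence: Double        // 0 = not speaking ... 1 = speaking
    let lookingAtCameraConfidence: Double // 0 = looking away ... 1 = looking at camera
}

struct TrackedObject {
    let identifier: UUID
    let saliencyRank: Int
}

enum TrackedSubject {
    case person(TrackedPerson)
    case object(TrackedObject)

    var saliencyRank: Int {
        switch self {
        case .person(let p): return p.saliencyRank
        case .object(let o): return o.saliencyRank
        }
    }
}

/// Orders subjects from most to least salient: lower rank first.
func bySaliency(_ subjects: [TrackedSubject]) -> [TrackedSubject] {
    subjects.sorted { $0.saliencyRank < $1.saliencyRank }
}

/// The most salient person, skipping objects; nil if no people are tracked.
func mostSalientPerson(_ subjects: [TrackedSubject]) -> TrackedPerson? {
    for subject in bySaliency(subjects) {
        if case .person(let person) = subject { return person }
    }
    return nil
}
```

Modeling person and object as enum cases mirrors the "a subject can be a person or an object" description, and lets person-only fields such as speakingConfidence exist only where they apply.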

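Now let's look at how to use these parameters in your app to build custom tracking features.

Suppose I'm writing an app that always tracks whoever is speaking. First, I query the tracking states as an asynchronous sequence and store the result in my trackingState variable, updating it whenever DockKit delivers an update. Then, to track the speakers, I write a function that first gets the list of all people among the tracked subjects,

then filters that list to keep everyone whose speaking confidence is greater than 80%.

I can then pass this list to the selectSubjects API, and DockKit tracks every speaker in the scene.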
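The filtering step described above can be sketched as a pure function, under the assumption that tracked subjects look like the hypothetical stand-in types below (not DockKit's real declarations) and that identifiers are `UUID`s. The function keeps only people, keeps those whose speaking confidence exceeds 0.8, and returns their identifiers; in the app those identifiers would then be handed to selectSubjects, a call omitted here because it needs a connected accessory. `speakerIDs(in:threshold:)` is an illustrative name.

```swift
import Foundation

// Hypothetical stand-ins for the tracked-subject data described in this session.
struct TrackedPerson {
    let identifier: UUID
    let speakingConfidence: Double   // 0 = not speaking ... 1 = speaking
}

enum TrackedSubject {
    case person(TrackedPerson)
    case object(identifier: UUID)
}

/// Mirrors the steps in the talk: take only people, keep those whose speaking
/// confidence is strictly above the threshold, and return their identifiers.
func speakerIDs(in subjects: [TrackedSubject], threshold: Double = 0.8) -> [UUID] {
    let people = subjects.compactMap { subject -> TrackedPerson? in
        if case .person(let person) = subject { return person }
        return nil
    }
    return people
        .filter { $0.speakingConfidence > threshold }
        .map(\.identifier)
}
```

Keeping the selection logic separate from the DockKit call makes it easy to test and to tune the threshold; note that with a strict `>` comparison, a confidence of exactly 0.8 is not selected.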

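This is great because it lets you use machine learning signals to decide what matters most to your users and design distinctive, innovative features with little effort.

That's how your app can benefit from intelligent tracking.

We've also added button support for DockKit accessories. Let's take a closer look at how buttons work in first-party and third-party apps. For Camera and FaceTime, DockKit supports three accessory events out of the box: shutter, flip, and zoom. The shutter event lets users quickly take a photo or video. The flip event lets users switch seamlessly between the front and back cameras. The zoom event lets users zoom in or out of the scene.

We can also deliver these events to your app, so you can implement custom behavior that enhances the user experience. The cameraShutter and cameraFlip events are toggle events with no associated values. The cameraZoom event carries a relative factor: for example, a value of 2.0 doubles the image size and halves the field of view. You can handle this factor however you like. Accessories can also send custom button events, which include an ID that identifies the button and a Boolean that indicates whether the button is pressed.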

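This gives you more flexibility to design custom behaviors that customers will truly appreciate. Building on this, we're introducing a new category of DockKit accessories, gimbals, which benefit greatly from button support.

Gimbals are a game changer for dynamic action and sports videography. They let you lock onto an athlete without having to pan and tilt manually.

These new DockKit gimbals help keep camera movement smooth, producing fluid, professional-looking video. Let's see a gimbal in action!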

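I happen to have a DockKit gimbal right here.

I've already paired it with my iPhone. I just mount the phone, and it magically connects to the gimbal.

Now I can open the Camera app, and the gimbal tracks me precisely.

Now I can do some new and cool things with this gimbal. I can hold it in my hand, and it still tracks me.

I can press the flip button on the gimbal to switch to the back camera and show you my elegant room.

Look who's here: it's Steve again. Good to see you, Steve. Now I can press the record button to start recording video and use the scroll wheel to zoom in on Steve.

With gimbals, DockKit can power dynamic handheld experiences, and these new buttons help make them possible. Let's explore how to take advantage of button controls in your app.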

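Suppose I'm writing a camera app that captures panoramic photos. I have a DockKit gimbal with a custom button whose ID is 5, and I want to use that button to start and stop rotating the gimbal to capture a panorama. First, I write two functions: one to start rotating for the panorama, and one to stop rotating.

Then I subscribe to accessory events. When the user presses button 5 on the dock, that event is delivered to my app.

If button 5 is pressed, I start rotating the DockKit accessory at a constant velocity, and when the button is released, I stop the rotation. With just a few lines of code, I can design a great camera experience with a DockKit accessory.

We've done a lot of work on intelligent subject tracking and remote control, and I'm excited to announce that in iOS 18 we've extended DockKit support to new modes in the iOS Camera app: Photo, Pano, and Cinematic. In the Camera app, we can now track subjects in Photo mode, and you can use Apple Watch or a DockKit gimbal to capture both subjects and scenery.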
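The panorama button logic from the example above can be sketched with the motor call abstracted behind a protocol, so the event handling can be exercised without hardware. Everything here is hypothetical: `AccessoryEvent` is a stand-in enum rather than DockKit's type, `RotationControlling` and `setYawVelocity(_:)` stand in for whatever motion API the accessory exposes, and the 0.2 rad/s sweep rate is an assumed value. Only the button ID 5 and the press-to-start, release-to-stop behavior come from the talk.

```swift
// Hypothetical stand-ins; a real app would call the accessory's own motion API.
enum AccessoryEvent {
    case button(id: Int, pressed: Bool)
    case cameraShutter, cameraFlip
    case cameraZoom(factor: Double)
}

protocol RotationControlling: AnyObject {
    func setYawVelocity(_ radiansPerSecond: Double)
}

final class PanoramaController {
    let panoramaButtonID = 5        // custom button ID from the talk's example
    let sweepVelocity = 0.2         // assumed constant yaw rate in rad/s
    private let dock: RotationControlling

    init(dock: RotationControlling) { self.dock = dock }

    func startPanorama() { dock.setYawVelocity(sweepVelocity) }
    func stopPanorama() { dock.setYawVelocity(0) }

    /// Press button 5 to start rotating, release it to stop; ignore everything else.
    func handle(_ event: AccessoryEvent) {
        guard case .button(let id, let pressed) = event, id == panoramaButtonID else { return }
        if pressed { startPanorama() } else { stopPanorama() }
    }
}

/// Test double that records the velocity commands it receives.
final class RecordingDock: RotationControlling {
    private(set) var commands: [Double] = []
    func setYawVelocity(_ radiansPerSecond: Double) { commands.append(radiansPerSecond) }
}
```

Injecting the dock through a protocol is a design choice for testability; in the app, the loop over the accessory's event sequence would simply call `handle(_:)` for each event.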

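In Pano mode, you can capture a beautiful panorama with a single button press, autonomously capturing a rich representation of objects and their surroundings.

In Cinematic mode, you can now track the person in focus just like in a film.

In iOS 18, we've also added the ability to monitor the battery status of DockKit accessories in your app. You can use this data to implement custom behavior and show relevant status information to users. You can subscribe to an asynchronous sequence of battery states. A dock can report the charge state of multiple batteries, each identified by name. The dock reports the current battery level as a percentage along with the charging state. For example, a dock connected to power might report a battery level of 50% and a charging state of charging.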
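Because a dock can report several batteries identified by name, an app typically keeps the latest report per battery. Here is a minimal sketch of that bookkeeping using hypothetical stand-in types rather than DockKit's declarations; it assumes the level arrives as a fraction from 0.0 to 1.0, and `BatteryMonitor`, the 20% low threshold, and the status-string format are illustrative choices.

```swift
// Hypothetical stand-ins for the battery state described in this session.
enum ChargeState { case charging, notCharging, unknown }

struct BatteryState {
    let name: String            // identifies the battery on the dock
    let batteryLevel: Double    // assumed fraction, 0.0...1.0
    let chargeState: ChargeState
}

/// Keeps the most recent report for each named battery.
struct BatteryMonitor {
    private(set) var latest: [String: BatteryState] = [:]
    var lowThreshold = 0.2      // assumed "low battery" cutoff

    mutating func update(_ state: BatteryState) { latest[state.name] = state }

    /// Names of batteries that are low and not charging, sorted for stable display.
    var needsAttention: [String] {
        latest.values
            .filter { $0.batteryLevel < lowThreshold && $0.chargeState != .charging }
            .map(\.name)
            .sorted()
    }

    /// A short user-facing status line, e.g. for a settings screen.
    func statusLine(for name: String) -> String? {
        guard let s = latest[name] else { return nil }
        let percent = Int((s.batteryLevel * 100).rounded())
        return "\(s.name): \(percent)%" + (s.chargeState == .charging ? " (charging)" : "")
    }
}
```

Each value from the battery-state sequence would be fed to `update(_:)`, and the UI would read `needsAttention` or `statusLine(for:)`.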

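We introduced intelligent tracking, which acts as an AI camera operator that autonomously selects and tracks subjects in the scene.

You can also control this camera operator manually with Apple Watch, or direct it through the API. We also introduced DockKit gimbals to support dynamic action videography.

I hope these new APIs and accessories inspire exciting new use cases for DockKit. DockKit holds enormous potential for innovation, and I can't wait to see where this journey takes us!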
