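Support real-time ML inference on the CPU

Discover how you can use BNNSGraph to accelerate the execution of your machine learning model on the CPU. We'll show you how to use BNNSGraph to compile and execute a machine learning model on the CPU, and share how it provides real-time guarantees for audio or signal processing models, such as no runtime memory allocation and single-threaded execution.

Chapters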

- 0:00 - Introduction

- 1:18 - Introducing BNNS Graph

- 6:47 - Real-time processing

- 8:00 - Adopting BNNS Graph

- 14:52 - BNNS Graph in Swift

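Hi, I'm Simon Gladman, and I work in the Vector & Numerics Group here at Apple. Today I'll be talking about some exciting new features in our machine learning library, Basic Neural Network Subroutines, or BNNS.

For the past few years, BNNS has provided a comprehensive set of APIs for machine learning inference and training on the CPU.

Now, with the new features we're introducing, BNNS becomes faster, more energy efficient, and far easier to work with. In this video, I'll introduce BNNS Graph, another great API for CPU-based machine learning.

I'll start by introducing our new API and explaining how it can help you optimize machine learning and AI models. Then I'll look at some important features that help BNNS Graph meet the demands of real-time use cases. After that, I'll walk through the steps for adopting BNNS Graph in your app. Finally, I'll show you how to implement BNNS Graph in Swift and use it alongside SwiftUI and Swift Charts. So grab a cup of tea, settle into your most comfortable seat, and let's dive into BNNS Graph.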

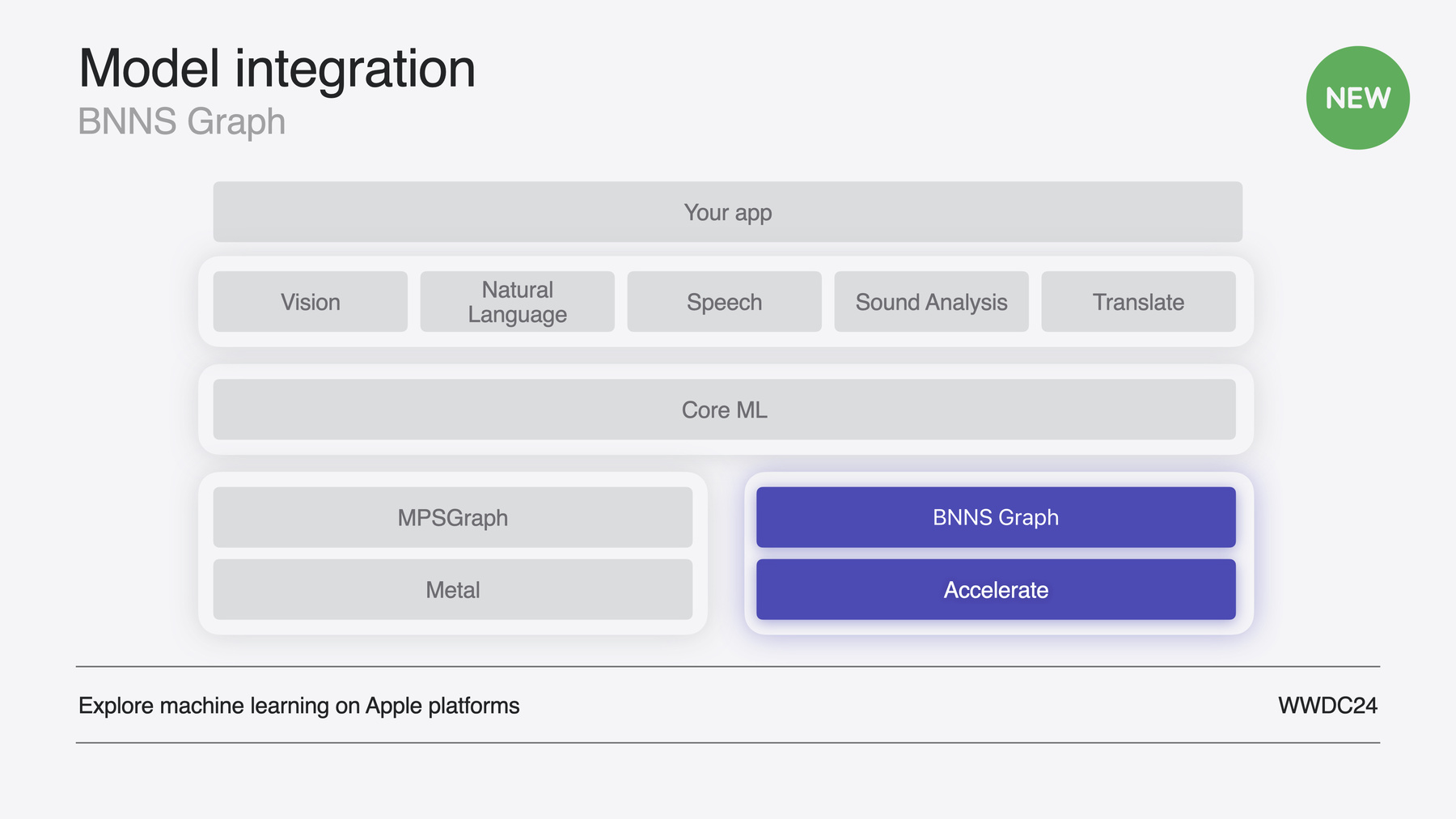

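Before we get started, I want to show where BNNS fits into Apple's overall ecosystem of machine learning frameworks.

BNNS is a library in our Accelerate framework that lets you integrate models into your app.

It accelerates machine learning on the CPU, and it's used by Core ML, Apple's machine learning framework.

BNNS Graph, our new API for CPU-based machine learning, allows the BNNS library to consume an entire graph rather than individual machine learning primitives.

Training is the first step in deploying a model on Apple platforms. Once the model is trained, it has to be prepared for on-device deployment, which means optimizing and converting it. Once the model is prepared, it's ready to be integrated into your application. In this video, I'll focus on the integration part of this workflow.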

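To help explain BNNS Graph, I'll first look at the classic BNNS API.

Until now, BNNS has provided a set of layer-focused APIs that offer individual performance primitives, the building blocks of machine learning. For each single operation, such as a convolution, you typically have to configure many details.

You specify a layer's parameters and their properties using n-dimensional array descriptors. That means creating a descriptor for the input, a descriptor for the output, and a descriptor for the convolution kernel, or weights matrix. And, as you may have guessed, BNNS expects the convolution bias as yet another array descriptor.

You then use those array descriptors to create a parameters structure, and pass that structure to a function that creates the layer itself. Finally, you apply the layer during inference.

If you wanted to use BNNS to implement an existing model, you had to code each layer as a BNNS primitive and write the code for all of the intermediate tensors.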

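For the past few years, we've been working on a new API, BNNS Graph. BNNS Graph takes an entire graph, consisting of multiple layers and the data flow between them, and consumes it as a single graph object. For you, this means you don't have to write code for each individual layer. And both you and your users benefit from faster performance and better energy efficiency. Let's take a quick look at the workflow for integrating BNNS Graph into your app.

You start with a Core ML model package, or mlpackage file. To learn more about creating an mlpackage, watch the video "Deploy machine learning and AI models on-device with Core ML."

Xcode automatically compiles the package into an mlmodelc file. You then write the code that builds the graph from the mlmodelc file. The last step is to create a context that wraps the graph, and it's this context that performs inference. Because BNNS Graph is aware of the entire model, it can perform a number of optimizations that weren't possible before. Even better, these optimizations happen automatically.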

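For example, here is a small section of a model. The first layer in this section performs an element-wise addition of tensors A and B and writes the result to C. The next layer performs a convolution. The model then applies an activation function to the convolution result. Finally, a slice layer writes a subset of the elements to tensor D.

BNNS Graph's optimizations include mathematical transformations. In this example, the slice operation is the last layer, which means that all of the preceding operations act on the entire tensor rather than just the slice.

The mathematical transformation optimization moves the slice to the start of the model, so that BNNS only needs to compute the subset of elements in that slice.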
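To see why moving the slice is safe, here is a minimal C++ sketch. It is not BNNS code, and the function names are hypothetical. It uses only element-wise layers (an add followed by ReLU) and shows that slicing the inputs first yields the same values as slicing the output, while computing fewer elements. A convolution would also need the input elements neighboring the slice, which this sketch leaves out.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical element-wise "model": relu(a + b). Not part of BNNS.
std::vector<float> addRelu(const std::vector<float>& a,
                           const std::vector<float>& b) {
    assert(a.size() == b.size());
    std::vector<float> out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) {
        out[i] = std::max(0.0f, a[i] + b[i]);
    }
    return out;
}

// Copy elements [begin, end) of v into a new vector.
std::vector<float> slice(const std::vector<float>& v,
                         std::size_t begin, std::size_t end) {
    assert(begin <= end && end <= v.size());
    return std::vector<float>(v.begin() + begin, v.begin() + end);
}

// Slice last: compute every element, then keep only a subset.
std::vector<float> sliceLast(const std::vector<float>& a,
                             const std::vector<float>& b,
                             std::size_t begin, std::size_t end) {
    return slice(addRelu(a, b), begin, end);
}

// Slice first: compute only the elements that survive the slice.
std::vector<float> sliceFirst(const std::vector<float>& a,
                              const std::vector<float>& b,
                              std::size_t begin, std::size_t end) {
    return addRelu(slice(a, begin, end), slice(b, begin, end));
}
```

Both functions return the same values; `sliceFirst` simply runs the arithmetic over `end - begin` elements instead of the whole tensor.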

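Layer fusion is an optimization that combines several layers into a single operation. In this example, BNNS fuses the convolution and activation layers. Another optimization is copy elision. A slice operation might otherwise copy the data in the slice into a new tensor.

BNNS Graph optimizes the slice so that it passes a window onto the original data instead.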
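As a rough illustration of layer fusion, the C++ sketch below compares an unfused and a fused version of a "convolution" followed by ReLU. It makes a simplifying assumption: the convolution is reduced to a 1x1 kernel, that is, one weight and one bias. The function names are hypothetical, and this is not how BNNS implements fusion internally.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Unfused: the simplified convolution writes an intermediate tensor,
// which the activation layer then reads back in a second pass.
std::vector<float> convThenRelu(const std::vector<float>& x,
                                float weight, float bias) {
    std::vector<float> intermediate(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) {
        intermediate[i] = weight * x[i] + bias;
    }
    std::vector<float> out(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) {
        out[i] = std::max(0.0f, intermediate[i]);
    }
    return out;
}

// Fused: one pass over the data and no intermediate tensor.
std::vector<float> fusedConvRelu(const std::vector<float>& x,
                                 float weight, float bias) {
    std::vector<float> out(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) {
        out[i] = std::max(0.0f, weight * x[i] + bias);
    }
    return out;
}
```

The fused version does the same arithmetic but avoids one allocation and one extra trip through memory.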

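BNNS Graph also optimizes memory usage by ensuring that tensors share memory where possible, which eliminates unnecessary allocations. In this example, tensors A and C can share the same memory, and tensors B and D can share the same memory.

BNNS Graph's weight repacking optimization can repack weights, for example from a row-major layout into a blocked iteration order, to improve cache locality.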
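The talk doesn't describe the exact layout BNNS chooses, so the following C++ sketch only illustrates the general idea of repacking. It assumes square tiles whose size divides the matrix dimensions evenly, and the function name is hypothetical. Each tile's elements end up contiguous in memory, so a kernel that works tile by tile reads sequential addresses.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Repack a rows x cols row-major matrix into tile x tile blocks, storing
// each block contiguously. The tile shape is an illustrative assumption.
std::vector<float> repackBlocked(const std::vector<float>& rowMajor,
                                 std::size_t rows, std::size_t cols,
                                 std::size_t tile) {
    assert(tile > 0 && rows % tile == 0 && cols % tile == 0);
    assert(rowMajor.size() == rows * cols);
    std::vector<float> blocked;
    blocked.reserve(rowMajor.size());
    for (std::size_t tileRow = 0; tileRow < rows; tileRow += tile) {
        for (std::size_t tileCol = 0; tileCol < cols; tileCol += tile) {
            // Walk one tile in row-major order and append it.
            for (std::size_t r = 0; r < tile; ++r) {
                for (std::size_t c = 0; c < tile; ++c) {
                    blocked.push_back(
                        rowMajor[(tileRow + r) * cols + tileCol + c]);
                }
            }
        }
    }
    return blocked;
}
```

For a 4x4 matrix holding 0 through 15 and a tile size of 2, the first four repacked values are 0, 1, 4, 5: the top-left 2x2 block.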

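You don't need to write any code to benefit from these optimizations. They happen automatically! They also improve performance: on average, BNNS Graph is at least 2x faster than the previous BNNS primitives.

Because our new API is so well suited to real-time use cases, I'll look at one such use case: adding BNNS Graph to an Audio Unit to process audio.

Audio Units allow you to create or modify audio and MIDI data in an iOS or macOS app. These include music production apps such as Logic Pro and GarageBand. Audio Units can use machine learning to provide features such as separating audio to isolate or remove vocals, segmenting audio into different regions based on content, or applying timbre transfer to make one instrument sound like another.

For this demonstration, I'll keep things simple and create an Audio Unit that bitcrushes, or quantizes, the audio to give it a distorted effect.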
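For readers unfamiliar with the effect, here is a minimal C++ sketch of quantization. It is an assumption about what a bitcrusher does in general, not the formula inside the demo's model, which also takes saturation gain and mix parameters that this sketch omits. The function name and the `levels` parameter are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Snap each sample to the nearest multiple of 1 / levels.
// Reads from src and writes to a caller-provided dst, so the function
// itself performs no memory allocation.
void bitcrush(const float* src, float* dst, std::size_t count, float levels) {
    assert(levels > 0.0f);
    for (std::size_t i = 0; i < count; ++i) {
        dst[i] = std::round(src[i] * levels) / levels;
    }
}
```

With `levels` set to 4, an input of 0.3 becomes 0.25 and 0.6 becomes 0.5. Fewer levels give coarser steps and a harsher distortion.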

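The main requirement for real-time processing is to avoid any memory allocation or multithreading during the execution phase, because either may cause a context switch into kernel code and result in missing the real-time deadline. BNNS Graph gives you fine-grained control over model compilation and execution, which means you can manage tasks such as memory allocation and decide whether execution is single-threaded or multithreaded.

Next, I'll show you how to create an Audio Unit project that uses BNNS Graph.

Xcode makes creating an Audio Unit easy, because it includes a template for creating an Audio Unit Extension app.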

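I'll start by dragging the bitcrusher mlpackage file into the project navigator.

Xcode compiles the mlpackage into an mlmodelc file, which we'll use to instantiate the BNNS Graph. And that's the first two steps done!

Now that I have the mlmodelc file in Xcode, I can create the BNNS Graph object and wrap it in a modifiable context. You only need to build the graph once. Typically, you do this on a background thread after the app launches, or when the user first uses a feature that relies on machine learning.

Graph compilation processes the compiled Core ML model into an optimized BNNS Graph object. This object contains the list of kernels that inference calls, and a memory layout map for the intermediate tensors.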

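The Xcode template uses Swift and SwiftUI for the business logic and user interface, and C++ for the real-time processing.

In this project, all of the signal processing takes place in the C++ DSP kernel header file, and that's where I'll add the BNNS Graph code.

To do that, I'll get the path to the mlmodelc, build the BNNS Graph, create the context, set the argument type, and then create the workspace. Let's look at how to write that code.

Here's the code that gets the path to the mlmodelc file. Remember, I copied the mlpackage file into the project, and Xcode generated the mlmodelc file from that package.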

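To specify that the graph uses only a single thread during execution, I'll create a compile options structure with default settings. The default behavior is for BNNS Graph to execute on multiple threads, so I'll call the SetTargetSingleThread function to change that.

Now I'll compile the graph using the compile options and the path to the mlmodelc file. The GraphCompileFromFile function creates the graph. Note that I pass NULL as the second argument to specify that the operation compiles all of the functions in the source model.

Once compilation is complete, I can safely deallocate the compile options.

And that's the third step done! We've created the graph. Now that we have an immutable graph, the ContextMake function wraps it in a mutable context. BNNS Graph requires a mutable context to support dynamic shapes and certain other execution options. The context also lets you set callback functions so that you can manage output and workspace memory yourself.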

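BNNS Graph can work with tensor structures that specify shape, stride, and rank and point to the underlying data, or it can work directly with pointers to the underlying data.

Because this demonstration works directly with audio buffers, I'll specify that the context's arguments are pointers.

We need to make sure that BNNS doesn't allocate any memory while it's processing audio data. So, during initialization, I'll create a page-aligned workspace.

I need to update the context so that it knows the maximum size of the data it will process. To do that, I'll pass a shape, based on the maximum number of frames the Audio Unit can render, to the SetDynamicShapes function.

Now the GetWorkspaceSize function returns the amount of memory I need to allocate for the workspace.

The workspace memory must be page-aligned, so I'll call aligned_alloc to create it.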
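The allocation step can be sketched in portable C++ as follows. This is an illustration under stated assumptions, not the project's code: it queries the page size with POSIX `sysconf` rather than Foundation's `NSPageSize()`, the helper names are hypothetical, and it rounds the requested size up to a whole number of pages, which some C library versions expect of `aligned_alloc`.

```cpp
#include <cassert>
#include <cstddef>
#include <stdlib.h>   // aligned_alloc, free
#include <unistd.h>   // sysconf

// Round size up to the next multiple of alignment.
std::size_t roundUp(std::size_t size, std::size_t alignment) {
    assert(alignment > 0);
    return (size + alignment - 1) / alignment * alignment;
}

// Allocate a page-aligned block of at least `bytes` bytes and report the
// actual size through allocatedBytes. Call this during initialization,
// never on the render thread, and release the block with free().
void* allocatePageAligned(std::size_t bytes, std::size_t* allocatedBytes) {
    const std::size_t page = static_cast<std::size_t>(sysconf(_SC_PAGESIZE));
    const std::size_t rounded = roundUp(bytes == 0 ? 1 : bytes, page);
    if (allocatedBytes != nullptr) {
        *allocatedBytes = rounded;
    }
    return aligned_alloc(page, rounded);
}
```

Doing this once up front is what lets the render callback run later without touching the allocator.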

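It's important to note that the order of the arguments in the original Python code may differ from the order of the arguments in the mlmodelc file. The GraphGetArgumentPosition function returns the correct position for each argument, which I'll pass to the execute function later.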
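The idea of looking up each argument's position by name, rather than assuming an order, can be sketched without BNNS. In the C++ below, `Argument`, `positionOf`, and `placeArgument` are hypothetical stand-ins, and the compiled order is just a list of names supplied by the caller.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for a graph argument: a pointer and a byte count.
struct Argument {
    void* data;
    std::size_t size;
};

// Return the position of `name` in the compiled order, or
// compiledOrder.size() when the name is not present.
std::size_t positionOf(const std::vector<std::string>& compiledOrder,
                       const std::string& name) {
    for (std::size_t i = 0; i < compiledOrder.size(); ++i) {
        if (compiledOrder[i] == name) {
            return i;
        }
    }
    return compiledOrder.size();
}

// Store an argument at the position the compiled model expects.
// Returns false if the name is unknown or the array is too small.
bool placeArgument(std::vector<Argument>& arguments,
                   const std::vector<std::string>& compiledOrder,
                   const std::string& name, void* data, std::size_t size) {
    const std::size_t position = positionOf(compiledOrder, name);
    if (position >= compiledOrder.size() || position >= arguments.size()) {
        return false;
    }
    arguments[position] = Argument{data, size};
    return true;
}
```

Looking positions up once during initialization and caching them keeps string comparisons out of the real-time path.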

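And now we have a correctly configured context!

Before moving on, I'll briefly mention a few other options. When working with the compile options, we can set an optimization preference. By default, BNNS optimizes the graph for performance, which is ideal for the Audio Unit.

Optimizing for performance means that additional work may be moved to the compilation phase, even if that increases the footprint of the BNNS Graph object.

However, if your app's footprint matters, you can optimize for size instead. Optimizing for size means that data is stored in the smallest form possible. That form has to be transformed at execution time, which may cost some execution performance.

One more tip: BNNS Graph includes a function that enables NaNAndInfinityChecks. This debugging setting helps you detect issues such as an infinity appearing in a tensor when a 16-bit accumulator overflows. However, you won't want to enable this check in production code!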
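To make the kind of problem this setting looks for concrete, here is a small C++ helper that scans a buffer for non-finite values. It is a hand-written illustration with a hypothetical name, not the BNNS check itself.

```cpp
#include <cmath>
#include <cstddef>

// Returns true when every element is finite, that is, neither NaN nor
// positive or negative infinity.
bool allFinite(const float* data, std::size_t count) {
    for (std::size_t i = 0; i < count; ++i) {
        if (!std::isfinite(data[i])) {
            return false;
        }
    }
    return true;
}
```

A scan like this touches every element of every tensor it checks, which is one reason such checks are best kept to debug builds.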

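So we're all set. We've initialized the graph and context, specified single-threaded execution, and created a workspace that ensures BNNS doesn't perform any allocations. We're ready to execute the graph! Let's look at the code required to do that.

The SetBatchSize function sets the size of the first dimension of the input and output signal shapes to the number of audio samples in the frame. In this case, the second argument refers to the name of a function in the source file, but because the source file contains only one function, I can pass NULL.

I'll pass the five arguments, the output and input signals and the scalar values that define the amount of quantization, to the execute function as an array. The first argument I'll add is the output signal, specifying its data_ptr and data_ptr_size fields from the current audio channel's outputBuffer.

The next argument is the input signal. Again, I specify the data_ptr and data_ptr_size fields, this time based on the current audio channel's inputBuffer.

The remaining arguments are the three scalar values, which are derived from the sliders in the user interface.

And now we can execute the function! The GraphContextExecute function accepts the context, the arguments, and, of course, that all-important workspace. On return, the output pointer contains the results of the inference. As with the SetBatchSize function, the second argument refers to the name of a function in the source file, and because the source file contains only one function, I pass NULL.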

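Finally, let's look at how to integrate BNNS Graph into a Swift project.

One ideal use case for Swift is implementing BNNS Graph in the Audio Unit's SwiftUI component. This allows the Audio Unit's user interface to display a sine wave that has been processed with the same model and the same parameters as the audio signal itself. The user interface component uses SwiftUI and applies the Bitcrusher model to data containing sampleCount elements that represent a smooth sine wave.

The srcChartData buffer stores the sine wave representation, and the dstChartData buffer stores the sine wave data after BNNS Graph has applied the effect.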
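For reference, the source sine wave can be reproduced outside Swift. The C++ sketch below transliterates the expression used in the "Declare buffers" listing; the function name is hypothetical. The expression simplifies to sin(4πi / sampleCount), so the buffer spans two full cycles.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Fill a buffer with two full cycles of a sine wave, mirroring the
// expression sin(Float(i) / (Float(sampleCount) / .pi) * 4).
std::vector<float> makeSineWave(std::size_t sampleCount) {
    const float pi = 3.14159265358979f;
    std::vector<float> samples(sampleCount);
    for (std::size_t i = 0; i < sampleCount; ++i) {
        samples[i] = std::sin(static_cast<float>(i) /
                              (static_cast<float>(sampleCount) / pi) * 4.0f);
    }
    return samples;
}
```

With eight samples, the wave reaches its first peak at index 1 and its first trough at index 3.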

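These three buffers store the scalar values that the user controls with the sliders in the user interface.

The API is consistent between C and Swift, so, just like the audio processing code we've just seen, I'll define the graph and context.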

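Swift doesn't guarantee real-time safety, but I'll still provide a workspace, as we did in C++, so that BNNS Graph doesn't need to allocate memory during execution.

This helps the performance and energy efficiency of the Audio Unit.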

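Next, I'll declare the argument index variables, which define the positions of the arguments in the arguments array.

Here's the code for the waveform display component's initializer. This is where I'll create the graph, the context, and the workspace.

The first step is to get the path to the mlmodelc file that Xcode compiled from the mlpackage.

Next, I'll compile the mlmodelc into a BNNS Graph object.

Then, just as I did in the C++ code, I'll create the graph context.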

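You may be wondering why I don't use the same context for the user interface and for audio processing. That's because a context can only execute on one thread at a time. Since the user could well be adjusting one of the sliders while the Audio Unit is processing data, each part of the project needs its own context.

I'll do a quick check to make sure BNNS has successfully created the graph and context.

Just as in the audio processing code, I'll work directly with pointers to the source and destination chart data, so I'll tell the context that the arguments are pointers rather than tensors.

In this case, the batch size, that is, the size of the first dimension of the source and destination signal data buffers, is the number of samples in the sample sine wave.

After the SetBatchSize function returns, the GetWorkspaceSize function returns the correct size for the sample count.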

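I'll calculate the indices into the arguments array. Each time the user changes the value of a slider, Swift calls the updateChartData() function, which applies the Bitcrusher effect to the sine wave.

The first step in updateChartData() is to copy the scalar values into their corresponding storage.

Then I use the indices to create the arguments array in the correct order.

And now I can execute Bitcrusher on the sample sine wave! When the execute function returns, SwiftUI updates the chart in the user interface to show the bitcrushed sine wave.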

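Let's head back to Xcode, where I've already added a Swift chart that displays the waveform. I'll declare the buffers that store the arguments.

I declare the graph, the context, and the argument indices.

In the initializer, I initialize the graph and the context.

I create the workspace and calculate the argument indices. Then, down in the updateChartData() function, I use the indices to create the arguments array, which ensures the order is correct.

And then I execute the graph!

Let's press the Xcode Run button to launch the app and hear Bitcrusher in action!

Many thanks for watching. To wrap up: BNNS Graph provides a powerful API that delivers high-performance, energy-efficient, real-time and latency-sensitive machine learning on the CPU, which makes it a great fit for audio apps! Thanks again, and all the best!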

0:01 - Create the graph

    // Get the path to the mlmodelc.
    NSBundle *main = [NSBundle mainBundle];
    NSString *mlmodelc_path = [main pathForResource:@"bitcrusher"
                                             ofType:@"mlmodelc"];

    // Specify single-threaded execution.
    bnns_graph_compile_options_t options = BNNSGraphCompileOptionsMakeDefault();
    BNNSGraphCompileOptionsSetTargetSingleThread(options, true);

    // Compile the BNNSGraph.
    bnns_graph_t graph = BNNSGraphCompileFromFile(mlmodelc_path.UTF8String,
                                                  NULL, options);
    assert(graph.data);
    BNNSGraphCompileOptionsDestroy(options);

0:02 - Create context and workspace

    // Create the context.
    context = BNNSGraphContextMake(graph);
    assert(context.data);

    // Set the argument type.
    BNNSGraphContextSetArgumentType(context, BNNSGraphArgumentTypePointer);

    // Specify the dynamic shape.
    uint64_t shape[] = {mMaxFramesToRender, 1, 1};
    bnns_graph_shape_t shapes[] = {
        (bnns_graph_shape_t) {.rank = 3, .shape = shape},
        (bnns_graph_shape_t) {.rank = 3, .shape = shape}
    };
    BNNSGraphContextSetDynamicShapes(context, NULL, 2, shapes);

    // Create the workspace.
    workspace_size = BNNSGraphContextGetWorkspaceSize(context, NULL) + NSPageSize();
    workspace = (char *)aligned_alloc(NSPageSize(), workspace_size);

0:03 - Calculate indices

    // Calculate indices into the arguments array.
    dst_index = BNNSGraphGetArgumentPosition(graph, NULL, "dst");
    src_index = BNNSGraphGetArgumentPosition(graph, NULL, "src");
    resolution_index = BNNSGraphGetArgumentPosition(graph, NULL, "resolution");
    saturationGain_index = BNNSGraphGetArgumentPosition(graph, NULL, "saturationGain");
    dryWet_index = BNNSGraphGetArgumentPosition(graph, NULL, "dryWet");

0:04 - Execute graph

    // Set the size of the first dimension.
    BNNSGraphContextSetBatchSize(context, NULL, frameCount);

    // Specify the direct pointer to the output buffer.
    arguments[dst_index] = {
        .data_ptr = outputBuffers[channel],
        .data_ptr_size = frameCount * sizeof(outputBuffers[channel][0])
    };

    // Specify the direct pointer to the input buffer.
    arguments[src_index] = {
        .data_ptr = (float *)inputBuffers[channel],
        .data_ptr_size = frameCount * sizeof(inputBuffers[channel][0])
    };

    // Specify the direct pointer to the resolution scalar parameter.
    arguments[resolution_index] = {
        .data_ptr = &mResolution,
        .data_ptr_size = sizeof(float)
    };

    // Specify the direct pointer to the saturation gain scalar parameter.
    arguments[saturationGain_index] = {
        .data_ptr = &mSaturationGain,
        .data_ptr_size = sizeof(float)
    };

    // Specify the direct pointer to the mix scalar parameter.
    arguments[dryWet_index] = {
        .data_ptr = &mMix,
        .data_ptr_size = sizeof(float)
    };

    // Execute the function.
    BNNSGraphContextExecute(context, NULL,
                            5, arguments,
                            workspace_size, workspace);

0:05 - Declare buffers

    // Create source buffer that represents a pure sine wave.
    let srcChartData: UnsafeMutableBufferPointer<Float> = {
        let buffer = UnsafeMutableBufferPointer<Float>.allocate(capacity: sampleCount)

        for i in 0 ..< sampleCount {
            buffer[i] = sin(Float(i) / ( Float(sampleCount) / .pi) * 4)
        }

        return buffer
    }()

    // Create destination buffer.
    let dstChartData = UnsafeMutableBufferPointer<Float>.allocate(capacity: sampleCount)

    // Create scalar parameter buffer for resolution.
    let resolutionValue = UnsafeMutableBufferPointer<Float>.allocate(capacity: 1)

    // Create scalar parameter buffer for saturation gain.
    let saturationGainValue = UnsafeMutableBufferPointer<Float>.allocate(capacity: 1)

    // Create scalar parameter buffer for mix.
    let mixValue = UnsafeMutableBufferPointer<Float>.allocate(capacity: 1)

0:06 - Declare indices

    // Declare BNNSGraph objects.
    let graph: bnns_graph_t
    let context: bnns_graph_context_t

    // Declare workspace.
    let workspace: UnsafeMutableRawBufferPointer

    // Create the indices into the arguments array.
    let dstIndex: Int
    let srcIndex: Int
    let resolutionIndex: Int
    let saturationGainIndex: Int
    let dryWetIndex: Int

0:07 - Create graph and context

    // Get the path to the mlmodelc.
    guard let fileName = Bundle.main.url(
        forResource: "bitcrusher",
        withExtension: "mlmodelc")?.path() else {
        fatalError("Unable to load model.")
    }

    // Compile the BNNSGraph.
    graph = BNNSGraphCompileFromFile(fileName, nil,
                                     BNNSGraphCompileOptionsMakeDefault())

    // Create the context.
    context = BNNSGraphContextMake(graph)

    // Verify graph and context.
    guard graph.data != nil && context.data != nil else { fatalError()}

0:08 - Finish initialization

    // Set the argument type.
    BNNSGraphContextSetArgumentType(context, BNNSGraphArgumentTypePointer)

    // Set the size of the first dimension.
    BNNSGraphContextSetBatchSize(context, nil, UInt64(sampleCount))

    // Create the workspace.
    workspace = .allocate(byteCount: BNNSGraphContextGetWorkspaceSize(context, nil),
                          alignment: NSPageSize())

    // Calculate indices into the arguments array.
    dstIndex = BNNSGraphGetArgumentPosition(graph, nil, "dst")
    srcIndex = BNNSGraphGetArgumentPosition(graph, nil, "src")
    resolutionIndex = BNNSGraphGetArgumentPosition(graph, nil, "resolution")
    saturationGainIndex = BNNSGraphGetArgumentPosition(graph, nil, "saturationGain")
    dryWetIndex = BNNSGraphGetArgumentPosition(graph, nil, "dryWet")

0:09 - Create arguments array

    // Copy slider values to scalar parameter buffers.
    resolutionValue.initialize(repeating: resolution.value)
    saturationGainValue.initialize(repeating: saturationGain.value)
    mixValue.initialize(repeating: mix.value)

    // Specify output and input arguments.
    var arguments = [(dstChartData, dstIndex),
                     (srcChartData, srcIndex),
                     (resolutionValue, resolutionIndex),
                     (saturationGainValue, saturationGainIndex),
                     (mixValue, dryWetIndex)]
        .sorted { a, b in
            a.1 < b.1
        }
        .map {
            var argument = bnns_graph_argument_t()
            argument.data_ptr = UnsafeMutableRawPointer(mutating: $0.0.baseAddress!)
            argument.data_ptr_size = $0.0.count * MemoryLayout<Float>.stride
            return argument
        }

0:10 - Execute graph

    // Execute the function.
    BNNSGraphContextExecute(context, nil, arguments.count, &arguments,
