Improve Object Detection models in Create ML

When you train custom Core ML models for object detection in Create ML, you can bring image understanding to your app. Discover how transfer learning allows you to build smaller models with less training data. We'll also take you through some of the advanced parameters in Create ML that help you control training iterations, batch size, and grid dimension for the input image, giving you more control over the accuracy of your models.

For an introduction to object detection, be sure to see the video "Training Object Detection Models in Create ML" from WWDC19.

Hello. I am Shreya Jain, an engineer from the Create ML team. Today we're going to explore enhancements in the object detection template and leverage them to create better models. If you're not already familiar with object detection in Create ML, I encourage you to watch this video from WWDC 2019. Object detection can enable some engaging app experiences. You can build an app to help people sort their trash, try virtual glasses on their pet cat...

and even an app that can recommend recipes based on detected ingredients. Building a model for this app is a great way to see Create ML and its new features in action.

Significant improvements have been made to object detection. You can now train smaller, accurate models with less training data, and more configuration options are exposed to customize training.

So let's jump right in.

To get started, I'll open the Create ML app from Spotlight. The first thing I see is a template picker, where I'll select Object Detection. This opens a dialogue box for entering details about the Create ML project. I'll name this project "FindMyRecipe" and add a description that it helps detect ingredients. I can choose to change the project's location before creating it. Next, I land on the settings tab. Data and configuration options can be tweaked here before training. Before loading the data, I'll take you through how this data is prepared.

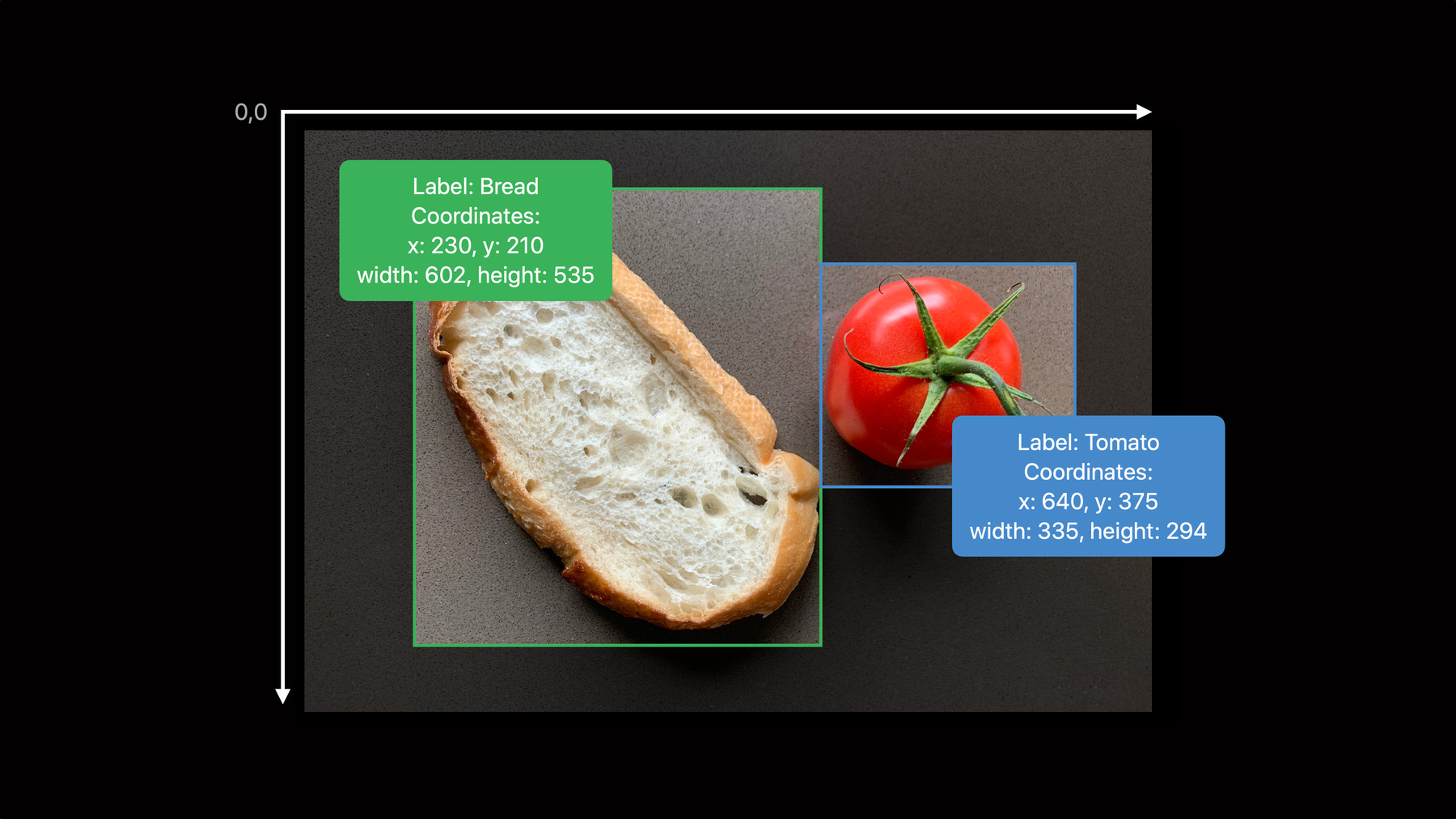

Object detection data must be stored in a folder that contains all the training images along with their annotations in a JSON file. The contents of annotations.json can be understood by taking this image as an example, which has two objects: a slice of bread and a tomato. Each object annotation consists of the object's label and its location in the image. The location is measured from the top left corner of the image.

All objects in the images of the training data can be annotated in this way.

All these annotations are added to a single JSON file in this format.
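As a rough sketch of that format, the snippet below builds one entry for the bread-and-tomato image and serializes it. The file name and pixel values are made up for illustration. The key names (`image`, `annotations`, `label`, `coordinates`) follow the layout Create ML documents for object detection, in which `x` and `y` give the center of the box in pixels, measured from the top left corner of the image.

```python
import json

# One entry per image; the file name and pixel values are hypothetical.
entry = {
    "image": "sandwich.jpg",
    "annotations": [
        {
            "label": "bread",
            # x, y: box center in pixels from the top left corner of the image.
            "coordinates": {"x": 160, "y": 220, "width": 230, "height": 180},
        },
        {
            "label": "tomato",
            "coordinates": {"x": 410, "y": 250, "width": 120, "height": 110},
        },
    ],
}

# annotations.json holds a list with one such entry for every training image.
annotations_json = json.dumps([entry], indent=2)
print(annotations_json)
```

Writing `annotations_json` to a file named annotations.json next to the images gives the folder layout described above.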

I'll use this information to prepare my training data.

After the data is prepared, I can load it in the Create ML app.

Clicking on the View button shows the class distribution of my data set. As you can see, my classes are tomato, cheese, bread and basil. Moving back to the Settings tab, validation data can be provided optionally to ensure that the model performs well on unseen data. Here, I set Validation Data to Automatic, letting Create ML use a small portion of my data set.
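The class distribution shown by the View button can also be checked before loading the data. The sketch below counts bounding boxes per label, assuming the annotations have already been parsed into a list of entries; the function name and the tiny data set are hypothetical.

```python
from collections import Counter


def class_distribution(entries):
    """Count annotated bounding boxes per label across all images."""
    counts = Counter()
    for entry in entries:
        for annotation in entry["annotations"]:
            counts[annotation["label"]] += 1
    return counts


# Hypothetical two-image data set using the four classes from the project.
entries = [
    {"image": "a.jpg", "annotations": [{"label": "tomato"}, {"label": "bread"}]},
    {
        "image": "b.jpg",
        "annotations": [{"label": "tomato"}, {"label": "cheese"}, {"label": "basil"}],
    },
]

distribution = class_distribution(entries)
print(distribution)
```

A count like this makes it easy to spot classes with too few examples before training starts.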

There are also new training parameters that allow you to better control training of your model. They are algorithm, iterations, batch size and grid size. There are two algorithms for training.

First is the full network. Let's look deeper into the full network algorithm.

Full network was introduced in Create ML in 2019 and has been the default training algorithm since.

This algorithm is based on the YOLOv2 architecture.

All parameters of this network are trained using your data.

The resulting Core ML model encodes all the learned parameters.

This Core ML model has been quantized to store weights with 16-bit precision. The resulting model size is half of what we got earlier. So a model that was previously around 65 megabytes will now be 33 megabytes.
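The halving follows from the storage width of each weight. The sketch below assumes the earlier model stored weights as 32-bit floats, which the session does not state explicitly, and uses a hypothetical parameter count.

```python
import struct


def weights_size_bytes(num_weights, fmt):
    """Storage needed for num_weights values of the given struct format."""
    return num_weights * struct.calcsize(fmt)


num_weights = 1_000_000  # hypothetical parameter count, not Create ML's

size_fp32 = weights_size_bytes(num_weights, "f")  # 32-bit float: 4 bytes each
size_fp16 = weights_size_bytes(num_weights, "e")  # 16-bit float: 2 bytes each

print(size_fp32, size_fp16, size_fp16 / size_fp32)
```

The ratio is one half regardless of the parameter count, which is consistent with a model of around 65 megabytes shrinking to around 33.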

This algorithm is recommended when you have large amounts of training data, like over 200 bounding boxes per class. The resulting model is backwards compatible, going all the way back to iOS 12. We wanted to enable you to build highly accurate models with less training data, which is why we're introducing the transfer learning algorithm for object detection.

Transfer learning leverages machine learning models already in the operating system. For example, the Photos app includes models that power Search and Memories.

One of the pre-trained backbones that Photos uses is called object print. This is trained on huge amounts of diverse data. With transfer learning, you can take advantage of this to reduce your data requirement.

The transfer learning algorithm in Create ML uses object print along with a head network. Only the head network is trained on your data, reducing the number of parameters that need to be learned.

As a result, the Core ML model contains only the head network parameters, which makes your model five times smaller than the full network. The same model that was 65 megabytes in 2019 and 33 megabytes after quantization will be just seven megabytes using the transfer learning algorithm.

Transfer learning is a great option when you have limited data and want a lightweight model. It does well with as few as 80 training examples per class. The resulting model requires iOS 14 to leverage the object print shipped with the OS.

Algorithm is just one of the new configurations. Parameters like iterations and batch size have also been added.

Iterations is the number of times the model's parameters are updated. A default value is picked based on the data set size. For your particular use case, you can increase iterations if the model has not yet converged or reduce them if the model is doing well early.

Batch size refers to the number of training examples used in one iteration. A default value is chosen based on hardware restrictions. Although a higher batch size is generally better, you may want to use the default value or reduce it based on performance restrictions.
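To see how the two settings interact, it can help to estimate how many passes over the data a configuration amounts to. This is a back-of-the-envelope sketch; the batch size and data set size below are hypothetical, and the function names are not part of Create ML.

```python
def examples_processed(iterations, batch_size):
    """Total training examples consumed: one batch per iteration."""
    return iterations * batch_size


def approximate_epochs(iterations, batch_size, dataset_size):
    """Roughly how many times the whole data set is seen during training."""
    return examples_processed(iterations, batch_size) / dataset_size


iterations = 1000   # number of parameter updates
batch_size = 16     # hypothetical value
dataset_size = 400  # hypothetical number of training images

epochs = approximate_epochs(iterations, batch_size, dataset_size)
print(epochs)
```

Halving the batch size while keeping the iterations fixed halves the number of examples the model sees, which is worth keeping in mind when reducing it for performance reasons.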

Finally, for the full network, you can customize grid size. Understanding grid size requires knowledge of how predictions work for the full network. Let's take a closer look.

Starting with this input image... passing it to a trained full network model...

results in a number of predicted objects with bounding boxes.

To find the objects in the image, the model leverages a grid and a set of anchor boxes. The specified grid defines the aspect ratio of the input image as well as where the model will look for detected objects. For example, let's see how the model will behave with a grid dimension of 5-by-5.

The image will be resized to fit the grid-- in this case, to a square image-- and then broken up into the defined number of cells.

The network then produces predictions, one for each grid cell.

Each prediction contains the following information: whether a cell has an object in it, the class of the object and its bounding box. YOLO works well for multiple objects when each object falls in its own grid cell. As you can see in this image, the centers of the banana and the dog fall in the same cell. Since each cell can only predict one class, it would have to pick either the banana or the dog.

In order to predict both the banana and dog, anchor boxes are defined. Anchor boxes have a set aspect ratio and detect multiple objects within a grid cell.
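The collision between the banana and the dog can be illustrated by mapping each object's center to a grid cell. This is a simplified sketch, not the network's actual code; the image size and center coordinates are hypothetical.

```python
def grid_cell(center_x, center_y, image_width, image_height, grid_cols, grid_rows):
    """Return the (row, col) of the grid cell containing a box center."""
    col = min(int(center_x / image_width * grid_cols), grid_cols - 1)
    row = min(int(center_y / image_height * grid_rows), grid_rows - 1)
    return row, col


# Hypothetical 500x500 image with a 5-by-5 grid, so each cell spans 100 pixels.
banana_cell = grid_cell(230, 260, 500, 500, 5, 5)
dog_cell = grid_cell(270, 240, 500, 500, 5, 5)

print(banana_cell, dog_cell, banana_cell == dog_cell)
```

Both centers land in the same cell, which is the situation where a single prediction per cell is not enough and multiple anchor boxes per cell become necessary.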

Create ML uses a default grid dimension of 13-by-13, totaling 169 cells. A fixed set of 15 anchor boxes of varying aspect ratio is evaluated for each cell. Therefore, the default model makes a total of 2,535 predictions per image.
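The arithmetic behind that total is cells multiplied by anchor boxes. The small helper below reproduces it; applying the same count of 15 anchor boxes to other grid sizes is an assumption made for illustration.

```python
def predictions_per_image(grid_cols, grid_rows, anchor_boxes=15):
    """Number of predictions: one per anchor box in every grid cell."""
    return grid_cols * grid_rows * anchor_boxes


default_total = predictions_per_image(13, 13)  # 169 cells * 15 anchors
small_grid_total = predictions_per_image(3, 3)

print(default_total, small_grid_total)
```

Because the total grows with the product of the grid dimensions, doubling both dimensions roughly quadruples the work per image.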

Consider this image of dice and how object detection would work with a grid dimension of 3-by-3.

As multiple dice of similar aspect ratio are present in a single cell, only one of them would be detected.

With a larger grid size, more dice are detected.

However, this will increase the number of predictions per image. It's important to consider the computational cost when altering the grid size.

Consider a non-square input image like this one, with dimensions 1500-by-800. Using an 8-by-8 grid on this image results in loss of information and distorts the natural shape of objects. This prevents the model from capturing fine-grained patterns during training and hinders its predictive power.

Choosing a grid size of 15-by-8 preserves the original aspect ratio of the input image and results in a model that has learned more information and can give better results.
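One way to arrive at 15-by-8 for a 1500-by-800 image is to reduce the width-to-height ratio to lowest terms. The sketch below does that with hypothetical helper names. It is only an illustration of the aspect-ratio reasoning, not how Create ML picks a grid, and for arbitrary image sizes the reduced ratio may be too large to use directly.

```python
from fractions import Fraction


def aspect_preserving_grid(image_width, image_height):
    """Smallest (cols, rows) grid with the same aspect ratio as the image."""
    ratio = Fraction(image_width, image_height)
    return ratio.numerator, ratio.denominator


def aspect_distortion(image_width, image_height, grid_cols, grid_rows):
    """Grid aspect ratio divided by image aspect ratio; 1 means no distortion."""
    return Fraction(grid_cols, grid_rows) / Fraction(image_width, image_height)


grid = aspect_preserving_grid(1500, 800)
square_distortion = aspect_distortion(1500, 800, 8, 8)
matched_distortion = aspect_distortion(1500, 800, 15, 8)

print(grid, square_distortion, matched_distortion)
```

With the square 8-by-8 grid the image is squeezed to 8/15 of its natural width-to-height ratio, while the 15-by-8 grid leaves it unchanged.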

Getting back to training the model for the FindMyRecipe project, I can pick the transfer learning algorithm, set iterations to 1000 and leave batch size on Auto.

On clicking the play button, the model starts training. The Training tab shows that the batch is being prepared. This step performs a set of standard image augmentations to help with robustness and generalizability to real-world data. Soon, a graph appears, plotting loss values for each iteration.

As training proceeds, the Snapshot button can be clicked to obtain a model at that time. Snapshots are helpful in checking on training progress.

I can use this model to preview predictions on a few images.

For every image, model predictions are shown in the Preview tab. I can click on these bounding boxes to see confidence for each class at the bottom. A snapshot can also be used to experiment within an app.

After training is complete, evaluation metrics on training and validation data can be seen in the Evaluation tab. What do these numbers mean? Evaluation for object detection models needs to be twofold. Not only do we want correct labels, but they must also be at the right locations. Getting the bounding boxes to exactly match the annotated box is hard. A number that captures how close predicted boxes are to annotated boxes becomes necessary.

This is measured by a score called intersection-over-union. It ranges from 0%, which is no overlap... to 100%, which is perfect overlap.

For a prediction to be considered correct, it needs to have the correct class label and an intersection-over-union score greater than a predefined threshold.

If either the intersection-over-union score is less than the threshold or the predicted class is incorrect, the overall prediction is incorrect. This information is used to compute a metric called mean Average Precision, or mAP.
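The check described above can be sketched in a few lines. The boxes here use corner coordinates `(x_min, y_min, x_max, y_max)`, a choice made for this example only, and the 50% default threshold and function names are assumptions rather than Create ML's implementation.

```python
def intersection_over_union(box_a, box_b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    # Overlap along each axis, clamped to zero when the boxes do not meet.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    intersection = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - intersection
    return intersection / union if union > 0 else 0.0


def is_correct(pred_label, pred_box, true_label, true_box, threshold=0.5):
    """Correct only if the label matches and the IoU exceeds the threshold."""
    iou = intersection_over_union(pred_box, true_box)
    return pred_label == true_label and iou > threshold


annotated = (0, 0, 100, 100)
print(intersection_over_union(annotated, (50, 0, 150, 100)))
print(is_correct("tomato", (10, 0, 110, 100), "tomato", annotated))
print(is_correct("bread", (10, 0, 110, 100), "tomato", annotated))
```

A prediction shifted by half a box width overlaps only a third of the union and fails the 50% threshold, while a well-placed box with the wrong label fails on the class check.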

I'll go back to the Evaluation tab to see these numbers.

These numbers represent average precision per class, calculated in two ways: one at a fixed intersection-over-union threshold of 50%, and the other across multiple thresholds. The overall mean Average Precision for our data set can be seen at the top right corner. A higher mAP reflects more correct predictions.
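The final averaging step is simple once the per-class values are known. The sketch below takes the mean of per-class average precision; the values are invented purely for illustration and are not results from this project, and computing each class's average precision from its predictions is left out.

```python
def mean_average_precision(ap_per_class):
    """Mean of the per-class average precision values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical per-class average precision at the 50% threshold.
ap_at_50 = {"tomato": 0.90, "cheese": 0.80, "bread": 0.85, "basil": 0.75}

map_at_50 = mean_average_precision(ap_at_50)
print(round(map_at_50, 3))
```

Because every class contributes equally to the mean, one poorly detected class pulls the overall mAP down even when the others score well.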

The mAP of our model looks good overall. I'll preview the model on a few examples to make sure that the model predictions look right.

Everything looks great. I can now drop this model into our app.

With the additional features that you just saw, creating an object detection model using Create ML is simple.

Create ML helps you customize models by providing more control over training. It helps you build accurate models with less data and smaller output size. We can't wait to see the wonderful ideas that you bring to the table using these brand-new features.
