
Discover Metal enhancements for A14 Bionic

Explore how Metal is bringing sophisticated rendering and powerful compute features to A14 Bionic. We'll take you through the Metal capabilities delivered in the Apple GPU Family 7 feature set, including new texture addressing modes, fast SIMD reduction and matrix multiplication operations, and a deep dive into implementing a visibility buffer using barycentric coordinates and primitive ID.


Welcome to Metal updates for the A14 Bionic. My name is Anand Poovekurussi, and I work in GPU software at Apple.

The A14 Bionic is at the heart of iPhone 12 and the new iPad Air. In this video, my colleague Swami and I will introduce you to the GPU capabilities of the A14 Bionic and the new Metal features that it enables.

The A14 has big updates to our CPU, GPU, neural engine and other custom technologies that drive the iOS experience. It uses breakthrough 5-nanometer process technology, which brings new features, increased performance and even more power efficiency in nearly every aspect of the chip.

We have built a world-class mobile GPU into the A14 Bionic. It has four cores scaled to deliver the maximum sustainable performance at the lowest possible power.

Now, in terms of Metal, there are some specific changes in the A14 that will improve the performance of your Metal apps... starting off with GPU-driven pipelines. Indirect command buffers in Metal enable you to move work to the GPU and let you encode commands on the GPU timeline. This frees up valuable CPU time for you to perform other tasks in your apps. The A14 GPU architecture makes such usage models more efficient.

Next, we have made significant performance improvements to the design of GPU threadgroup memory to better support parallel computing. We expect synchronization primitives such as threadgroup scoped atomics to see great performance benefits as a result of these changes.

Finally, the ability to conserve memory bandwidth continues to be a significant design advantage of our platforms. The A14 GPU leverages brand-new compression hardware which brings even more bandwidth savings to your apps. Frame buffer compression is expected to improve by 15% or more on average and depth buffers should see 40% or more improvement on average. All the new capabilities of the A14 GPU belong to a new Metal feature set called Apple GPU family 7.

Here's what we'll be covering in this video. First up, I will talk about barycentric coordinates and primitive ID. I will also show you how both of these features can be used together to implement deferred rendering techniques such as the visibility buffer.

After that, I will quickly introduce the new texture addressing modes in A14. We also have brand-new features for apps doing compute.

In the second section of the talk, my colleague Swami will talk about the new SIMD reduction instructions that provide an efficient way for applications to perform reduction operations at SIMD group scope.

He will also cover the new SIMD matrix multiply instructions in Metal on A14. These new instructions provide a significant performance boost for large matrix multiplies that are used in machine learning and image compute.

Let's look at each of these in more detail... starting with barycentric coordinates and primitive ID.

Apple GPU family 7 now provides access to barycentric coordinates and primitive ID. Barycentric coordinates define the exact location of a fragment within a primitive. And now, you can access these coordinates right in your fragment shader. As you can see in the picture on the right, the location of the fragment at point P is defined as a weighted sum of the vertices that form the triangle, where the sum of the weights is 1.

In the Metal shading language, the barycentric coordinates of the fragment are exposed as a vector of floats. Let's take a look at a technique which can benefit from using barycentric coordinates.

Procedural generation is a class of techniques used to algorithmically generate models, animations and effects. You can do all sorts of procedural effects on the surface of the primitives, such as particle systems, terrains or vegetation on the fly. Drawing custom lines is one such example. Let's take a look at how barycentric coordinates can be used for this.

Let's say you wanted to use procedural generation to draw custom high-quality anti-aliased lines and borders on triangles.

To do this, you can apply interesting mathematical functions on the distance of the fragment from the edges of your primitive.

Barycentric coordinates of your fragment in essence give you the distance from the edges.

The examples above show some interesting functions applied on the barycentric coordinates in your fragment shader to generate on-the-fly effects.
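As a rough illustration of the idea, the following CPU-side C++ sketch models one such function: it treats the smallest barycentric weight as the distance to the nearest edge and turns it into an anti-aliased line coverage value. The names `Bary`, `smoothstepf`, and `edgeCoverage` are hypothetical and do not come from the talk; in a real fragment shader the input would be the barycentric coordinate vector provided by Metal.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical stand-in for the float3 barycentric input of a fragment shader.
struct Bary { float x, y, z; };

// Smooth Hermite interpolation between two edges, as in shading languages.
inline float smoothstepf(float e0, float e1, float v) {
    assert(e0 < e1);
    float t = std::max(0.0f, std::min(1.0f, (v - e0) / (e1 - e0)));
    return t * t * (3.0f - 2.0f * t);
}

// Returns 1.0 on a triangle edge and fades to 0.0 once the fragment is
// `width` (in barycentric units) away from every edge.
inline float edgeCoverage(Bary b, float width) {
    float nearest = std::min({b.x, b.y, b.z}); // smallest weight = closest edge
    return 1.0f - smoothstepf(0.0f, width, nearest);
}
```

Blending a line color over the surface color by this coverage value gives a soft border; changing the function applied to `nearest` changes the look of the line.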

Another new feature of Metal on A14 is primitive ID.

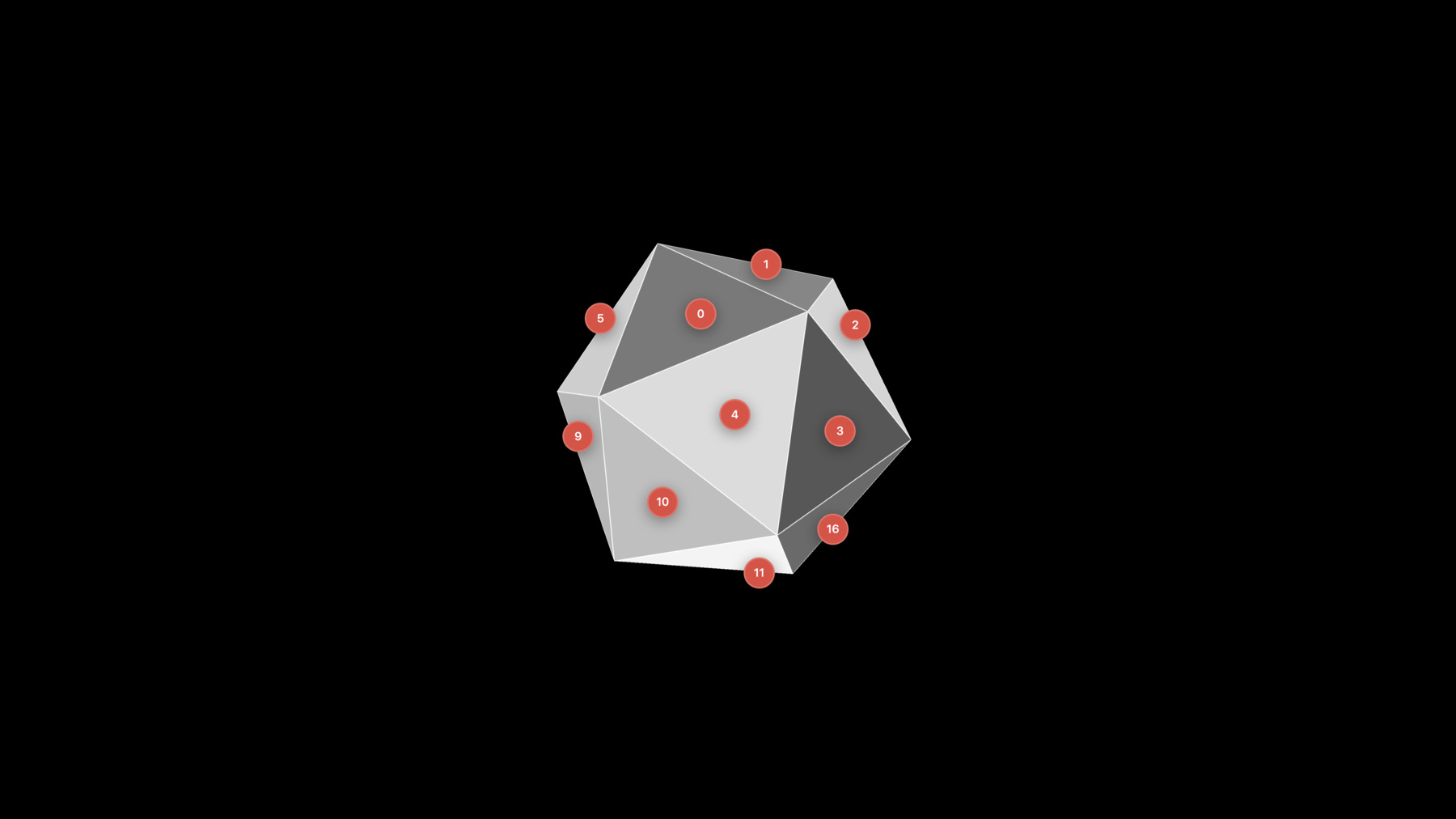

Primitive ID tells you which primitive the current fragment corresponds to in the input geometry. On the right, the IDs listed on triangles will be returned when primitive ID is fetched for any fragment within that triangle in your fragment shader. Now if the triangle gets clipped by the hardware, a child triangle inherits the primitive ID of its parent. And in the presence of tessellation, the primitive ID simply corresponds to the patch ID.

In the Metal shading language, primitive ID is an unsigned integer. Let's take a look at a use case for primitive ID.

Temporal anti-aliasing is a technique that reduces the ghosting and shimmering artifacts caused by motion between frames. It works by accumulating pixel data from the previous frame and blending it with the current render results for the fragment. Primitive ID can be used with temporal anti-aliasing to validate the sample from the previous frame. So how would you actually do this in practice? First, in your fragment shader, you re-project the results from the previous frame to fetch pixel history. Then you introspect the pixel to ensure that the data is consistent with the current frame. This can be done by comparing the primitive IDs of the two samples. If the IDs match, you can choose to accumulate the contribution from the previous frame. The pixel history can be accumulated or reset post-introspection, forwarding the results on to the next frame.
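The validation step can be sketched on the CPU as follows. This is a minimal model under stated assumptions, not the talk's implementation: `Sample`, `resolveTemporal`, and the single-channel color are hypothetical simplifications, and re-projection is assumed to have already produced the `history` sample.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical per-pixel record: one color channel plus the primitive ID
// that produced it.
struct Sample {
    float color;
    std::uint32_t primitiveID;
};

// Accumulate the re-projected history only when it came from the same
// primitive as the current fragment; otherwise reset to the current result.
inline Sample resolveTemporal(Sample current, Sample history, float historyWeight) {
    assert(historyWeight >= 0.0f && historyWeight <= 1.0f);
    if (history.primitiveID != current.primitiveID) {
        return current; // IDs differ: history is stale, reset it
    }
    float blended = history.color * historyWeight +
                    current.color * (1.0f - historyWeight);
    return Sample{blended, current.primitiveID};
}
```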

Now that we have introduced barycentric coordinates and primitive ID, let's take a deeper dive...

starting with deferred rendering, a technique you're likely already familiar with. Deferred rendering classically operates in two stages. The first stage generates the surface attribute buffer, called the G-buffer, and the second stage consumes the G-buffer and applies lighting to the scene. But if you're running at high resolution or using multi-sampling, the size of the G-buffer can be quite large, and reading and writing these buffers could have prohibitive bandwidth costs. To address this problem, the G-buffer could be replaced with a thin buffer, which contains the minimal set of surface attributes. This buffer is called a visibility buffer.

The visibility buffer minimizes the work in the geometry stage by greatly simplifying the output from this stage. All the material logic you would typically perform in the fragment shader in deferred rendering to generate material properties in the G-buffer is now moved to the lighting phase. By doing this, we no longer have to store all material properties in the intermediate buffer. Without the material function, the complexity of the geometry stage is greatly reduced, allowing for a high fill rate and minimum traffic between the vertex shader and the fragment shader.

This technique adds a new reconstruction step during the lighting pass to reconstruct the material inputs from the minimal data set inside the visibility buffer. Let's take a look at what the visibility buffer will contain.

The visibility buffer only needs to contain two attributes to facilitate geometry reconstruction. The primitive ID can be used to manually retrieve vertex data from the vertex buffer, effectively doing a deferred vertex fetch.

Barycentric coordinates are used to interpolate vertex data for the current fragment. As mentioned before, you can access these attributes in your fragment shader on A14. Let's take a closer look at the two stages of the visibility buffer pipeline.

At a high level, the visibility buffer approach has two stages: the geometry stage, which produces the visibility buffer, and the lighting stage that consumes it.

In the geometry stage, the vertex shader's transformation only generates the position needed for rasterization. The fragment stage can generate the primitive ID and barycentric coordinates with the new A14 Metal shading language attributes without the need for any additional varyings.

In the lighting stage, a new reconstruction step will use a deferred vertex fetch based on the primitive ID and interpolate the vertex data using barycentric coordinates to generate material function inputs.

The material and lighting shaders are effectively untouched and are identical to the deferred rendering implementations.

Let's take a look at how interfaces between these stages have been minimized.

The vertex shader only requires the position to rasterize.

The fragment shader generates the surface ID and barycentric coordinates for the visibility buffer that is fed into the reconstruction step. And only after the reconstruction step do we have the large reconstructed material input data in memory, which is used by the material model and the lighting functions. Now that we have introduced the interfaces, let's take a look at the shaders that are generating these inputs and outputs.

Let's start with the geometry stage, which has a simple vertex and fragment shader.

The vertex shader only needs to transform and output the position. In the fragment shader, we make use of the new primitive ID and barycentric coordinates available in the Metal shading language. We combine the primitive ID and additional draw index into our surface identifier so we can identify primitives across vertex buffers, as we will see later. Now let's take a look at the lighting stage.

At a high level, the lighting stage has three steps: reconstruction, material model and lighting function. The reconstruction step will reconstruct material input from our surface identifier and barycentric coordinates. Then we can execute our material model steps, as we do in our deferred shader. And finally we apply our lighting function to write out the final lit pixel. As mentioned previously, the material and the lighting stages are identical to classic deferred. So let's take a look at the reconstruction step in more detail.

The main purpose of the reconstruction step is the transformation of the incoming visibility buffer fragment to material inputs.

First, this requires generating vertex data for a given fragment using primitive ID and draw ID contained in the surface identifier. An additional index facilitates having different vertex buffers across fragments. For this, we use a draw identifier to reference the draw call.

Second, the barycentric coordinates are used for interpolation in the reconstruction step. Let's examine the dereferencing tree.

Here we show the dereferencing tree needed to resolve a visibility buffer pixel back to the original interpolated vertex attributes.

The visibility buffer contains two barycentric coordinates. The third coordinate can be retrieved from the other two since the sum of all three is unity.

It also contains a combination of the draw ID and primitive ID, as mentioned before. From the draw ID, the draw state can be retrieved, allowing access to per-draw properties such as view projection matrix.

From there, we can retrieve the vertex and the index buffer from the mesh state. These indices are needed to fetch the vertex data.

And we can also use the same path to retrieve material-specific data that we need.

Now if our material model becomes more complicated, we can add a material function pointer to execute specific material logic.

Let's take a look at how reconstruction looks in shader code.

The first thing to do in your reconstruction function is to retrieve the primitive ID, draw ID and barycentric coordinates from the visibility buffer.
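One possible layout for the surface identifier is sketched below in plain C++. The 16/16 bit split matches the mask and shift used in the reconstruction code listed at the end of this transcript, but the function names `makeID`, `primitiveOf`, and `drawOf` and the range checks are assumptions for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Pack a draw ID (upper 16 bits) and a primitive ID (lower 16 bits)
// into one 32-bit surface identifier.
inline std::uint32_t makeID(std::uint32_t primID, std::uint32_t drawID) {
    assert(primID <= 0xFFFFu && drawID <= 0xFFFFu); // both must fit in 16 bits
    return (drawID << 16) | primID;
}

// Recover the primitive ID from the lower 16 bits.
inline std::uint32_t primitiveOf(std::uint32_t surfaceID) {
    return surfaceID & 0x0000FFFFu;
}

// Recover the draw ID from the upper 16 bits.
inline std::uint32_t drawOf(std::uint32_t surfaceID) {
    return surfaceID >> 16;
}
```

A 16-bit primitive field limits each draw to 65,536 primitives; a different split is a reasonable choice for scenes with larger meshes and fewer draws.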

After that, you can proceed to retrieve the mesh ID from the draw state using the draw ID. The index buffer needs to be dereferenced to retrieve the vertex indices within the vertex buffers using primitive ID and mesh ID.

Then the vertex buffer data can be read and interpolated across barycentric coordinates. Additionally, matrices and other per-draw info can be retrieved to do deferred vertex transformations on geometries such as normals and tangents.
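The deferred vertex fetch and interpolation can be modeled on the CPU as in the sketch below. It assumes a single mesh with a triangle-list index buffer and one UV attribute per vertex; `Mesh`, `UV`, and `reconstructUV` are hypothetical names, and the draw-state lookup is left out.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct UV { float u, v; };

// Hypothetical mesh: a triangle-list index buffer plus per-vertex UVs.
struct Mesh {
    std::vector<std::uint32_t> indices;
    std::vector<UV> uvs;
};

// Rebuild the interpolated UV for a fragment from its primitive ID and the
// two barycentric weights stored in the visibility buffer.
inline UV reconstructUV(const Mesh& mesh, std::uint32_t primID, float b0, float b1) {
    assert(3u * primID + 2u < mesh.indices.size());
    float b2 = 1.0f - b0 - b1; // the three weights sum to one

    // Deferred vertex fetch: three indices per triangle.
    const UV& a = mesh.uvs[mesh.indices[3u * primID + 0u]];
    const UV& b = mesh.uvs[mesh.indices[3u * primID + 1u]];
    const UV& c = mesh.uvs[mesh.indices[3u * primID + 2u]];

    // Weighted sum of the vertex attributes.
    return UV{a.u * b0 + b.u * b1 + c.u * b2,
              a.v * b0 + b.v * b1 + c.v * b2};
}
```

Other attributes such as normals and tangents would be interpolated the same way before applying the per-draw transforms.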

Once the material input has been generated, the data is fed into the material logic and lighting functions, as discussed previously.

Let's take a look at visibility buffer in action.

Here's a modern rendering scene that is using the visibility buffer running on the iPhone. It is implementing a tile lighting technique that makes use of multiple materials and a scene filled with lights. Normally, in classic deferred, this would have required multiple G-buffer channels such as normal, albedo, roughness, etc., accounting for quite a large G-buffer. But with visibility buffer, we only need a surface ID and barycentric coordinates.

First, we store the generated surface identifier, visualized here with a brightness and a hue showing draw ID and primitive ID respectively.

Second, we store the barycentric coordinates, visualized here, with the red, green and blue color channels.

These two properties are enough to efficiently reconstruct the geometry and apply deferred material shading and lighting models.

With this technique, the G-buffer size is significantly reduced on the A14 using the thin visibility buffer. In our example, we save more than 40% of our G-buffer size compared to classic deferred.

So that wraps up our deep dive on barycentric coordinates and primitive ID.

Now let's take a quick look at the new texture addressing modes in A14. These modes specify how to handle texture coordinates that are outside the sampling range and are quite handy when you're using a texture atlas.

We've added two new addressing modes in Metal for A14.

With mirror clamp to edge addressing mode, the texture coordinates within the range of the extents are mirrored across the axis. And when they fall outside, they're clamped. You can see this in the picture on the right. We've also added border color clamp mode, where you can choose one of the presets between transparent black, opaque black and opaque white. The usage is fairly simple.

First, you need to create a sampler descriptor object. Then you can specify the clamp modes for depth, width and height coordinates independently by setting the address modes. If you're using clampToBorderColor, you can set one of the presets supported in Metal.

After this, you can create the samplerState object with the sampler descriptor.
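To make the coordinate behavior of the two modes concrete, here is a simplified CPU model in C++. It assumes normalized coordinates, nearest-texel lookup along one axis, and no filtering; the hardware also accounts for texel centers, which this sketch ignores. All names (`mirrorClampToEdge`, `sampleClampToBorder`, `Border`, `RGBA`) are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct RGBA { float r, g, b, a; };

enum class Border { TransparentBlack, OpaqueBlack, OpaqueWhite };

// Mirror-clamp-to-edge: coordinates in [-1, 1] are mirrored across zero,
// anything further out is clamped to the edge.
inline float mirrorClampToEdge(float s) {
    return std::min(std::fabs(s), 1.0f);
}

// The three preset border colors described in the talk.
inline RGBA borderColor(Border b) {
    switch (b) {
        case Border::OpaqueBlack: return RGBA{0.0f, 0.0f, 0.0f, 1.0f};
        case Border::OpaqueWhite: return RGBA{1.0f, 1.0f, 1.0f, 1.0f};
        default:                  return RGBA{0.0f, 0.0f, 0.0f, 0.0f};
    }
}

// Clamp-to-border-color: out-of-range coordinates return the border color
// instead of a texel. Nearest lookup over a single row of texels.
inline RGBA sampleClampToBorder(const std::vector<RGBA>& row, float s, Border b) {
    assert(!row.empty());
    if (s < 0.0f || s >= 1.0f) return borderColor(b);
    std::size_t i = static_cast<std::size_t>(s * static_cast<float>(row.size()));
    return row[std::min(i, row.size() - 1)];
}
```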

So that was an overview of the new graphics features in A14.

Now I would like to hand it off to my colleague Swami to describe the new compute features that the A14 GPU enables, starting with SIMD reduction.

Thank you, Anand. I am Swami Narayanan, and I work with GPU software. Metal is designed to enable high-performance graphics as well as to perform data parallel calculations and it provides a variety of advanced compute features that leverage the tremendous power of the GPU. On the A14 Bionic, Metal now provides SIMD scope reduction instructions, which provide a way for apps to perform reductions incredibly efficiently.

To understand how they work, let's briefly review parallel reduction operations. Reduction operations are used to reduce the elements of an array to a single result. For example, a sum reduction is used to add up all the elements of an array. This can be useful for computing averages. Another example is to compute the min and max values of a scene. Those values can then be used in tone mapping algorithms. Classically, all these operations were computed serially on the CPU. However, Metal can take advantage of the parallel nature of the GPU to compute reductions much more efficiently.

On the A14 GPU, Metal now supports several SIMD scope reduction instructions.

simd_sum and simd_product generate the sum and product of a variable across all threads in a SIMD group.

simd_min and simd_max can be used to find the minimum and maximum values. These four instructions work on floating point and integer types.

In addition, Metal now supports reductions using bit-wise operators "and," "or" and "xor." Naturally, these work only on integer types. Before going over the reduction operations in more detail, let's look at how threads are organized into threadgroups and SIMD groups.

Compute dispatches launch a set of individual threads that are represented as a grid.

This grid of threads is divided into smaller subgrids that are called threadgroups.

Threadgroups are further organized into groups of 32 threads that are called SIMD groups. The threads of a SIMD group run concurrently in lockstep.

SIMD group functions exploit this lockstep execution to share data between these threads.

Now that we have seen how SIMD groups and threadgroups are organized, let's take a look at how threads are executed in SIMD groups.

A SIMD group has 32 lanes that are represented down the left side here. Each of these lanes will run a thread from the compute dispatch.

Now let's have all the threads in the SIMD group store their lane IDs into the variable X. Notice how each lane has its own value of X.

In the SIMD group execution model, the instruction that sets the variable X to the lane ID is only fetched once and is then executed simultaneously in lockstep by the 32 threads. SIMD group functions allow each of these threads to inspect and use variables of the other threads in the SIMD group with minimal overhead. Let's take a look at simd_sum, one of the new instructions added in A14.

simd_sum adds up values of a variable across all active threads in the SIMD group and broadcasts the sum back to all the threads. Here we are adding the values of the variable X across the 32 lanes of the SIMD group.

Once the instruction is executed, the resulting sum is then available in the variable F.

Note that all active threads can inspect their copy of the variable F to get at the computed sum of 496.

Inactive threads are skipped in the computation correctly. They do not contribute to the final sum.
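The behavior described here can be mimicked with a small CPU model in C++. This is only a sketch of the semantics: `simdSumModel`, `laneIDs`, and the explicit active mask are hypothetical, and a real SIMD group executes the lanes in lockstep rather than in a loop.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

constexpr int kLanes = 32;
using Lanes = std::array<int, kLanes>;

// Every lane stores its own lane ID, as in the example with the variable X.
inline Lanes laneIDs() {
    Lanes x{};
    for (int lane = 0; lane < kLanes; ++lane) x[lane] = lane;
    return x;
}

// Model of simd_sum: add x over the active lanes and broadcast the sum back
// to those lanes. Inactive lanes neither contribute nor receive a result
// (their slot stays 0 in this model).
inline Lanes simdSumModel(const Lanes& x, std::uint32_t activeMask) {
    int sum = 0;
    for (int lane = 0; lane < kLanes; ++lane)
        if ((activeMask >> lane) & 1u) sum += x[lane];

    Lanes f{};
    for (int lane = 0; lane < kLanes; ++lane)
        if ((activeMask >> lane) & 1u) f[lane] = sum;
    return f;
}
```

With all 32 lanes active and X set to the lane ID, every lane reads 0 + 1 + ... + 31 = 496, matching the value quoted above.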

Now let's take a look at how we can use simd_sum to speed up adding the elements of a large array. Here the input array is in device memory.

Each SIMD group reads a subregion of the input array and computes its sum using the simd_sum instruction.

This sum is then written to an array in threadgroup memory. Every SIMD group has a distinct ID that it can use to index into the threadgroup sum array.

The last executing SIMD group in the threadgroup uses the simd_sum instruction again to get the final sum. By using simd_sum, we have decreased the number of threadgroup barriers and the usage of threadgroup memory. The simd_sum instruction also gets executed only once to compute the sum of all the threads in the SIMD group. Now let's go to the implementation of the compute kernel.

Here we have the code that implements parallel reduction using simd_sum.

Each thread in the SIMD group reads its corresponding element from the input array.

Then it computes a first simdgroup_sum using the simd_sum instruction.

This sum is then written to an array in threadgroup memory which is indexed using the SIMD group ID.

Note that we need a barrier before we can access the array in threadgroup memory.

The last SIMD group in each threadgroup then uses the simd_sum instruction again to get the final sum.
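The two-level structure of this kernel can be followed in a serial C++ model. It is a sketch under assumptions: one threadgroup of at most 32 SIMD groups of 32 threads, with `reduceThreadgroupModel` and the local array `ssa` as hypothetical names standing in for the kernel and its threadgroup array.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

constexpr std::size_t kSimdWidth = 32;

// Serial model of the reduction for one threadgroup.
inline float reduceThreadgroupModel(const std::vector<float>& input) {
    assert(input.size() <= kSimdWidth * kSimdWidth);
    std::array<float, kSimdWidth> ssa{}; // one partial sum per SIMD group

    // Level 1: each SIMD group sums its own 32-element slice.
    std::size_t groups = (input.size() + kSimdWidth - 1) / kSimdWidth;
    for (std::size_t g = 0; g < groups; ++g) {
        std::size_t begin = g * kSimdWidth;
        std::size_t end = std::min(begin + kSimdWidth, input.size());
        float partial = 0.0f;
        for (std::size_t i = begin; i < end; ++i) partial += input[i];
        ssa[g] = partial;
    }

    // On the GPU a threadgroup barrier is needed here before ssa is read.

    // Level 2: a single SIMD group sums the partial results.
    float total = 0.0f;
    for (std::size_t lane = 0; lane < kSimdWidth; ++lane) total += ssa[lane];
    return total;
}
```

Only one barrier separates the two levels, which is where the saving over a classic tree reduction in threadgroup memory comes from.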

Let us take a look at the other reduction operations we have introduced in A14.

We have already seen simd_sum, which adds up the values of X across all the active lanes.

Now let's look at simd_max and apply it to the variable X. Every lane then gets the maximum value, which is 31, across all the threads in the SIMD group.

Similarly, simd_min stores the minimum value of X, which happens to be 0 in this case, across all the active lanes.

Finally, we have simd_product, which multiplies up all the values of X. As one of the variables happens to be 0, the final product that is broadcast is 0. These instructions work on both integer and floating point scalar and vector types.

Reduction operations are also supported for bit-wise operations on integral types. Each lane now has a bit field value manufactured using the lane ID as shown here.

We can then use the simd_or instruction on the variable X. The values of X across all the active lanes are or-ed together and broadcast back to the variable F. This final value happens to be 0x1F3 in our example.

Similarly, simd_xor computes the xor values of X and broadcasts back the resulting value, which turns out to be 0x0.

Finally, we have simd_and, which ANDs together all the values of X across all the threads in the SIMD group. This turns out to be the constant 0x3.
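The remaining reductions can be checked with a serial C++ model. The slide's exact bit field is not given in this transcript, so the pattern `(lane << 4) | 0x3` used below is an assumption; it happens to reproduce the quoted results 0x1F3, 0x0, and 0x3. The function names are hypothetical and all 32 lanes are assumed active.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

using Lanes = std::array<std::uint32_t, 32>;

// Fold a binary operator over the values held by the 32 lanes.
template <typename Op>
inline std::uint32_t simdReduceModel(const Lanes& x, Op op) {
    std::uint32_t acc = x[0];
    for (std::size_t lane = 1; lane < x.size(); ++lane) acc = op(acc, x[lane]);
    return acc;
}

// X = lane ID, as in the min, max and product examples.
inline Lanes laneIDs() {
    Lanes x{};
    for (std::uint32_t lane = 0; lane < 32; ++lane) x[lane] = lane;
    return x;
}

// Assumed bit-field pattern for the bitwise examples.
inline Lanes bitFields() {
    Lanes x{};
    for (std::uint32_t lane = 0; lane < 32; ++lane) x[lane] = (lane << 4) | 0x3u;
    return x;
}

inline std::uint32_t simdMaxModel(const Lanes& x) {
    return simdReduceModel(x, [](std::uint32_t a, std::uint32_t b) { return std::max(a, b); });
}
inline std::uint32_t simdMinModel(const Lanes& x) {
    return simdReduceModel(x, [](std::uint32_t a, std::uint32_t b) { return std::min(a, b); });
}
inline std::uint32_t simdProductModel(const Lanes& x) {
    return simdReduceModel(x, [](std::uint32_t a, std::uint32_t b) { return a * b; });
}
inline std::uint32_t simdOrModel(const Lanes& x) {
    return simdReduceModel(x, [](std::uint32_t a, std::uint32_t b) { return a | b; });
}
inline std::uint32_t simdXorModel(const Lanes& x) {
    return simdReduceModel(x, [](std::uint32_t a, std::uint32_t b) { return a ^ b; });
}
inline std::uint32_t simdAndModel(const Lanes& x) {
    return simdReduceModel(x, [](std::uint32_t a, std::uint32_t b) { return a & b; });
}
```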

That was an overview of the new SIMD scope reduction instructions available in the A14 GPU.

Now let's take a look at a new set of SIMD scope instructions that greatly improves matrix multiplication. Matrix multiplication is a very common operation for GPU compute and is the basic building block for many parallel computing workloads. For example, in machine learning, it is used when computing convolutions and fully connected neuron layers.

Linear algebra is used to represent and solve systems of equations. The A14 introduces a brand new set of SIMD scope instructions which allows you to implement large matrix multiplies very efficiently.

Like the reduction operations we saw before, these are SIMD group scope operations. You can now easily build larger, more sophisticated functions on top of these building blocks. In the Metal shading language, we now have SIMD group scope data structures to represent 8 by 8 and 4 by 4 matrices. You can then use multiply or multiply_accumulate versions of the SIMD group scoped matrix operations. Let's look at an example of multiplying two 16 by 16 matrices in a threadgroup using these functions.

We're going to use the 8 by 8 SIMD group matrix operation to build a 16 by 16 threadgroup matrix multiplication.

First we partition the result matrix across four SIMD groups. Each SIMD group will be responsible for computing one 8 by 8 quadrant of the result. Next, we partition the first input matrix. Here, each SIMD group in a row shares a single block column.

Then we partition the second input. Here each SIMD group in a column shares a single block row.

Then we will accumulate the products from the first set of 8 by 8 results... and the second. Now let's look at how to write this in the Metal shading language. With A14, we have introduced new SIMD group matrix objects and multiplication operations into the Metal shading language. These new primitives will greatly improve the performance of your matrix multiplications in your shader. It's easy to use and can be done with just a few lines of shader code.

Here we define three objects to represent 8 by 8 matrices with data in 32-bit floating point format. We have a matrix for each of our inputs and one for our result.

Next, we perform the address arithmetic necessary to partition our four SIMD groups across each quadrant of the result.

Then we accumulate submatrix products from each source matrix, broadcasting the appropriate row and column to each SIMD group.

Finally, we store the results. You can see how easy it is to construct more complex shaders using these new primitives.
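The partitioning described in this section can be traced with a serial C++ model, in which each of four "SIMD groups" owns one 8 by 8 quadrant of the result and accumulates two tile products. The names `blockedMatmul` and `multiplyAccumulateTile` and the row-major layout are assumptions for illustration; on the GPU the four quadrants are computed concurrently by the SIMD group matrix instructions.

```cpp
#include <array>
#include <cassert>

constexpr int kN = 16;   // full matrix dimension
constexpr int kTile = 8; // tile handled by one SIMD group matrix operation
using Matrix = std::array<float, kN * kN>; // row-major storage

// c += a * b for one 8x8 tile; all three pointers address tiles inside
// row-major matrices with the given row stride.
inline void multiplyAccumulateTile(const float* a, const float* b, float* c, int stride) {
    assert(stride >= kTile);
    for (int r = 0; r < kTile; ++r) {
        for (int col = 0; col < kTile; ++col) {
            float acc = c[r * stride + col];
            for (int k = 0; k < kTile; ++k)
                acc += a[r * stride + k] * b[k * stride + col];
            c[r * stride + col] = acc;
        }
    }
}

// 16x16 multiply built from 8x8 tiles.
inline Matrix blockedMatmul(const Matrix& A, const Matrix& B) {
    Matrix C{};
    for (int sg = 0; sg < 4; ++sg) { // one iteration per modeled SIMD group
        int tileRow = sg / 2;
        int tileCol = sg % 2;
        float* c = C.data() + tileRow * kTile * kN + tileCol * kTile;
        for (int k = 0; k < kN; k += kTile) {
            const float* a = A.data() + tileRow * kTile * kN + k; // block row of A
            const float* b = B.data() + k * kN + tileCol * kTile; // block column of B
            multiplyAccumulateTile(a, b, c, kN);
        }
    }
    return C;
}
```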

If you're using Metal performance shaders, you will benefit from SIMD group scope matrix multiplies to accelerate not only matrix multiplication but also CNN convolutions. Matrix multiplication for arbitrary sizes can be performed using MPS matrix multiplication.

Here we're encoding a kernel to compute a result with M rows and N columns.

CNN convolutions can be performed using MPSCNNConvolution. Here we're encoding a convolution kernel on a batch of images. Both of these kernels are available using the new Metal Performance Shaders graph introduced this year with iOS 14.

MPS Graph allows you to take these basic kernels and build complex machine learning networks with them. As an example, let's look at how the matrix multiplication kernel from earlier can be used in the graph. Here we'll initialize a new graph for our operation. Then we define nodes to represent our two input matrices. Then we construct a new result node from our inputs using a matrix multiplication operation.

Finally, we execute the graph. For more details on how to use MPS kernels and graph operations, please refer to previously available WWDC presentations. As mentioned before, MPS will automatically take advantage of A14 for these operations.

Here we can see the improved throughput of general matrix multiplications on A14 relative to A13.

On A14, using the new SIMD group matrix multiply operations, average performance is improved by 37%.

CNN convolutions on A14 show an average improvement of 36% from A13. And when we look at training a full machine learning network like Inception V3, A14 improves by 22%.

That wraps up SIMD group scope matrix multiplication.

Let's recap the new Metal features for the A14.

Barycentric coordinates and primitive ID enable new deferred rendering techniques including visibility buffer rendering.

New texture addressing modes are useful when using texture atlases. SIMD group scope reduction instructions enable better communication between threads.

We have also improved compute and machine learning performance with SIMD group scope matrix multiply instructions. And finally, Metal on A14 takes advantage of a host of architectural improvements, saving bandwidth with improved lossless compression, faster and more efficient local memory and better GPU-driven pipelines.

Thank you for watching.


9:50 - Visibility buffer - Geometry stage

    // Vertex shader - geometry stage
    vertex float4 geoVertex(const device float3* pos [[buffer(0)]],
                            const device float4x4* vpm [[buffer(1)]],
                            uint vid [[vertex_id]])
    {
        float3 p = pos[vid];        // Position fetch
        return doTransform(p, vpm); // Transform step
    }

    // Fragment shader - geometry stage
    fragment VisBufferFragment geoFrag(uint primid [[primitive_id]],
                                       float3 baryc [[barycentric_coord]],
                                       constant uint& drawid [[buffer(0)]])
    {
        VisBufferFragment out;
        out.surface_id = makeID(primid, drawid); // Combine draw ID and primitive ID
        out.barycentric = baryc.xy;              // Third weight is implied
        return out;
    }

10:21 - Visibility buffer - Lighting stage

    // Lighting compute kernel
    kernel void lightingCompute(const device Draws* draws [[buffer(0)]],
                                texture2d<float4> lit [[texture(0)]])
    {
        /* Reconstruction using visibility buffer */
        MaterialInput mi = doReconstruct(surface_id, baryc, draws);

        /* Apply material model */
        MaterialOutput mo = doMaterial(mi, mat);

        /* Apply lighting function */
        lit[thread_id] = doLighting(mo, lights);
    }

12:27 - Visibility buffer - Reconstruction step

    MaterialInput doReconstruct(uint surface_id,
                                float2 baryc,
                                const device Draws* draws,
                                const device Mesh* meshes)
    {
        MaterialInput mi;

        /* Retrieve primitive ID and draw ID */
        uint primid = surface_id & 0x0000FFFF;
        uint drawid = surface_id >> 16;

        /* Retrieve the 3rd barycentric coordinate */
        float3 bc = float3(baryc.xy, 1.0 - baryc.x - baryc.y);

        /* Retrieve (mesh ID, vertex ID) using primitive ID and draw ID */
        uint meshid = draws[drawid].meshid;
        uint3 vertid = uint3(meshes[meshid].ib[primid*3+0],
                             meshes[meshid].ib[primid*3+1],
                             meshes[meshid].ib[primid*3+2]);

        /* Interpolate vertex buffer data across barycentric coordinates */
        mi.uv = meshes[meshid].vb[vertid.x].uv.xy * bc.x +
                meshes[meshid].vb[vertid.y].uv.xy * bc.y +
                meshes[meshid].vb[vertid.z].uv.xy * bc.z;

        /* Other draw state such as normals
           (normal: vertex normal interpolated the same way as uv, omitted here) */
        mi.normal = normalize(draws[drawid].vpm * float4(normal, 0)).xyz;

        return mi;
    }

15:25 - Texture addressing modes

    /*
     * rAddressMode : The address mode for the texture depth (r) coordinate.
     * sAddressMode : The address mode for the texture width (s) coordinate.
     * tAddressMode : The address mode for the texture height (t) coordinate.
     * borderColor  : The border color for clamped texture values.
     */
    let device = MTLCreateSystemDefaultDevice()!
    let samplerDesc = MTLSamplerDescriptor()

    /* … Program other sample state… */
    samplerDesc.magFilter = .linear
    samplerDesc.sAddressMode = .mirrorClampToEdge
    samplerDesc.tAddressMode = .mirrorClampToEdge
    samplerDesc.rAddressMode = .clampToBorderColor
    samplerDesc.borderColor = .transparentBlack

    let samplerState = device.makeSamplerState(descriptor: samplerDesc)!

20:45 - SIMD reduce_sum

    void reduce_sum(device float const* input_array,
                    device float* total_sum)
    {
        threadgroup float SSA[32];

        float a = input_array[read_offset];
        float simdgroup_sum = simd_sum(a);
        SSA[sg_index] = simdgroup_sum;

        threadgroup_barrier(mem_flags::mem_threadgroup);

        if (simdgroup_index_in_threadgroup == 0) {
            *total_sum = simd_sum(SSA[sg_index]);
        }
    }

24:56 - SIMD 16x16 matrix multiplication

    // SIMD 16x16 matrix multiplication
    void matmul(threadgroup float const* A,
                threadgroup float const* B,
                threadgroup float* C)
    {
        simdgroup_float8x8 matA, matB, matC(0.0f);

        A += A_increment(sg_index, A_row_stride);
        B += B_increment(sg_index, B_row_stride);
        C += C_increment(sg_index, C_row_stride);

        for (ushort k = 0; k < 16; k += 8) {
            simdgroup_load(matA, A + k, A_row_stride);
            simdgroup_load(matB, B + k * B_row_stride, B_row_stride);
            simdgroup_multiply_accumulate(matC, matA, matB, matC);
        }

        simdgroup_store(matC, C, C_row_stride);
    }

25:51 - Metal Performance Shaders

    // General matrix multiplication
    let matMulKernel = MPSMatrixMultiplication(device: device!,
                                               resultRows: M,
                                               resultColumns: N,
                                               interiorColumns: K)
    matMulKernel.encode(commandBuffer: commandBuffer,
                        leftMatrix: A,
                        rightMatrix: B,
                        resultMatrix: C)

    // CNN convolution
    let convKernel = MPSCNNConvolution(device: device!, weights: convDataSource)
    convKernel.encodeBatch(commandBuffer: commandBuffer,
                           sourceImages: sources,
                           destinationImages: results)

    // MPS Graph
    let graph = MPSGraph()
    let A = graph.placeholder(shape: [M, K], dataType: .float32)
    let B = graph.placeholder(shape: [K, N], dataType: .float32)
    let C = graph.matrixMultiplication(primaryTensor: A, secondaryTensor: B)
    graph.run(feeds: [A, B],
              targetTensors: C,
              targetOperations: nil)
