-

Metal for Machine Learning

Metal Performance Shaders (MPS) includes a highly tuned library of data parallel primitives vital to machine learning and leveraging the tremendous power of the GPU. With iOS 13 and macOS Catalina, MPS improves performance, enables more neural networks, and is now even easier to use. Learn more about these advances in MPS and gain a practical understanding of how to implement innovative techniques such as Style Transfer.

리소스

- Training a Neural Network with Metal Performance Shaders

- Metal Performance Shaders

- Metal

- 프레젠테이션 슬라이드(PDF)

관련 비디오

WWDC20

Tech Talks

WWDC19

-

비디오 검색…

Good afternoon. My name's Justin. I'm an engineer in GPU Software, and this is Metal for Machine Learning.

Today we'll be discussing the Metal Performance Shaders framework, and new machine learning features that we added this year. The Metal Performance Shaders or MPS is a collection of GPU-accelerated primitives, which allow you to leverage the high-performance capabilities of metal in the GPU. MPS provides kernels for image processing, linear algebra, ray tracing, and machine learning. Now machine learning kernels support both inference and training, and they're optimized for both for iOS, macOS, and tvOS. MPS also provides a convenient way of building neural networks through the graph API. So, here we can see how MPS fits into the larger Apple ML ecosystem.

You have higher-level frameworks like Core ML and Create ML that give you a convenient way to implement many of your networks. But if you want a little more flexibility and control over your program, you can use a lower-level framework like MPS.

And this year, we have expanded our machine learning support with several new features.

We've added kernels to support even more networks than before, we've improved performance on existing networks, and we've made MPS even easier to use. Now, as we go over these new features, it's going to be helpful to know a few things about how inference and training work in machine learning. So, let's briefly review these concepts.

So, inference is the process of applying a network on an input, in this case an image, and producing an output or a guess of what it is.

Now, the network is made up of a variety of functions such as convolutions and neuron activations, and these layers in turn depend upon a set of parameters. During inference, these sets of parameters are fixed, but their values are determined during the training process. So, what happens during training. During training, we give the network many images of known objects. The training process involves repeatedly classifying these images, and as we do so, we update our parameters, and each iteration of the network produces a better set of parameters until we finally reach a set of parameters that allows us to best classify the images. Now, at that point we stop the training process, and our parameters are ready to be used in inference. So, let's look at how we can MPS to implement some of these ideas. But I would like to mention that there's a lot more to inference and training than what we just covered here. So if you want more details, please see some of our talks from the past couple years.

Now, we added several new features this year to better support a wide range of inference and training networks.

So, first we made creating graphs of your network simpler by supporting implicit creation of your training graphs from your inference graphs. We've added kernels for separable loss layers and random number generation to enable a variety of new networks and we've added support for things like predication and better control over how MPS commits its work to improve performance. So, let's start with implicit graph creation. With implicit graph creation, we can implicitly create our training graphs from our inference graphs.

So, let's first review how we create a graph for our network. Here we have a simple inference network. It's made up of some convolution layers, some pooling layers, and finally some fully connected layers. So, we're going to create a graph for this network by creating nodes for each layer. We're going to create a convolution node for each of the convolution layers, a pooling node for each of the pooling layers, and finally some fully connecting nodes for the fully connected layers. So now with our inference graph defined, we can extend it to a training graph.

We do this by first attending a loss node at the end of our inference graph, and then then we add gradient nodes for each of our forward nodes, moving in the reverse order of our inference graph. So, we can look at the code for this section. As before, we start by adding the loss node, and then we add each gradient node, just moving in the same order we just mentioned. So, here we can see that each gradient node is pretty easily created from the forward node, but with implicit graph creation, this is even simpler.

Now, once you've initialized your gradient image with the loss node, we can automatically create the entire training graph corresponding to the inference graph.

So, as before, we create our loss node. Then with a single line of code, we can create our entire training graph. Now, in this case, we're creating the training graph from the loss node. We're going to use a nil argument for our source gradient, which tells the loss node to use its result to initialized the gradients. But we could use another image if we wanted. And we're also providing nil for the second argument. This is called a node handler. The node handler allows you to provide a block, which you can use to execute some custom code to configure your nodes after they're created.

I also want to mention another useful feature, the stop gradient property.

So, typically, when you generate your training sequence, all of your trainable layers will update their weights.

In this case, those are convolutions and the fully connected layers. But in some cases, you may only want to update the weights for some of the layers of the network, as in transfer learning, for example. Now, in transfer learning, we are going to use pretrained weights for many of the layers, and we only want to train the weight for some of the layers. Let's say, for example, the final fully connected layers.

Implicit graph creation also supports creating graphs for these types of networks through the stop gradient property. So, to do this, we're going to set the stop gradient property on the first, on the first layer, whose weights we want to update.

In this case, the fully connected layer. And then when the graph is generated, none of the subsequent gradient nodes will be created. So as you can see, using implicit graph creation is a very easy way of generating your training graphs from your inference graphs.

So now let's look at a feature we added to support some new networks. Separable loss kernels.

So, earlier, we just saw how to use a loss node using MPS CNN loss.

MPS CNN loss consumes a final image, which is usually the result of something like a soft max layer along with the ground truth data in order to compute gradient values to begin the back-propagation phase. But there are some networks which use multiple intermediate loss values in order to produce a final loss. So, to support this, we added separate forward and gradient loss kernels. So, here we can see two loss values being computed using forward loss nodes, and then we take those results, add them together to produce a final loss. Now, we need to initialize the gradient value to begin the back-propagation phase.

Before this happened implicitly through the loss node now, we need to add an initial gradient kernel. This is going to generate just a gradient image of ones, and it's going to be sized to the result of the final loss calculation. So with the gradient values initialized, we can start the back-propagation phase. We're going to use gradient kernels for each of our forward kernels with an addition gradient and gradients for each forward loss kernel. Now, let's take a look at a network that uses separable losses. Specifically, we're going to look at style transfer. Now, the style transfer network produces images which are combinations of a style and an original image.

The model we'll be looking at is one that you can find and to re-create, and it's implemented using MPS.

Now in inference, this network consists of a transformer node, which is made up of things like convolutions and instance normalization layers, and their weights make up the trained parameters. This is where the style is incorporated. It's learned into the parameters through the training process.

So let's look at how we do the training. So here we have an overview of the network.

Now, as an inference, we're going to apply the transformer to produce a stylized image. Now, in this case, this is going to be the network's current guess at the best styled image, which combines the style and the content. And since the goal of the network is to match both the desired style and the content of the original image, we're going to need two loss values. So, the first loss value is computed by this sum network that we're going to call the style loss network.

This loss value is going to help ensure that the network converges on a result, which closely matches our desired style. And then we also want to make sure that the generated image also retains the features of the original. So, for this we're going to use a second loss network. This is the content loss. And we can use our new forward loss kernels for each of these loss calculations.

But let's take a closer look at the style loss network.

So, in order to compute the style loss, we need a way of sort of measuring the style of an image. So, to do this we're going to calculate what's called the Gram Matrix for several intermediate feature representations of the images. This year, Gram Matrix calculations are natively supported in MPS with both forward and gradient kernels. So let's take a quick look at the Gram Matrix and how it's computed. So, the Gram Matrix represents uncentered cross-correlations between feature vectors. Now, each feature vector results from spatially flattening the results from a single image in a single feature channel. We compute dot products between each feature vector to produce a Gram Matrix. So let's take a look at how it's used. So, before we get to the Gram Matrix, we're going to use the VGG image classification network to extract some features from both our style and our stylized input image. Now, as we described before, the Gram Matrix gives us correlations between feature vectors.

Now, when we take these from the features extracted from the style, this gives us our sort of ground truth for the style that we want to apply, and we're also going to do the same thing for our current guess at the best stylized image.

Now, we take these two values together to form our style loss.

So now let's look at how we compute the second of our two losses, the content loss. So, as before, we're going to extract features using VGG and then compute a loss using those features and our features from our stylized image.

And then the network's final loss is going to be the sum of the content loss and the style loss.

So, now let's look at how we can use MPS to compute these values and initialize gradients. So first, let's assume we have our feature representations, as produced by VGG.

First, we're going to add our Gram Matrix calculation nodes to compute the Gram Matrix for both the style and our stylized image.

We're going to feed these results into a forward loss node to just compute the loss for our style.

The source image here is the result of the Gram Matrix calculation for our network stylized image.

The Gram Matrix for the reference style image is going to be used in the labels argument.

Now this shows an important feature of the new forward loss kernels. Previously, you had to pass labels using MPS state objects. But now, you can used MPS images. So now we can add the loss node for the content loss using the features of the stylized image and the original image, and we can combine them to get our total loss value. And now we need to initialize the final loss gradient to begin the back-propagation phase.

We do this using the initial gradient node we discussed before.

Now we saw before how the result of the loss node can be used to implicitly generate the training graph. This is because it generates the initial gradient. But now, as I mentioned before, with the separable loss kernels, we do this explicitly using the initial gradient node. So, this is the node that were going to use to generate our training graph.

So, with the graph generated, let's take a look at what this network does in action.

So, here we can see the style transfer network running on the GPU using MPS. This was run on a Mac Book Pro with an AMD Radeon pro 560 graphics card. Now this is showing the results of the style transfer training at each iteration as it progresses. As you can see, the style is being applied progressively, but the content of the image is being retained. I also want to mention that these iterations have been sped up for this video from real time to better illustrate the progression of the training network.

So now, I'd like to look at another feature that we added this year, random number generation.

This year we added support for two types of random number generators in MPS. We have a variant of the Mersenne Twister called MTGP32 and a counter-based generator called Philox.

Now these generators were chosen because their algorithms are well suited to GPU architectures, and they still provide sequences of random numbers with pretty good statistical properties. Now, you can use these kernels to generate large sequences of random numbers using buffers and GPU memory. And since you have this result available in GPU memory, you can avoid having to synchronize large rays and numbers from the CPU.

And generating random numbers like this is important for several machine learning applications. They're required, for example, for initializing your weights of your networks for training and also for creating inputs when training generated adversarial networks, or GANs.

Now GANs are an especially important use case for random number generators. You had to generate the random input at each iteration of your training. If you had to synchronize an array of numbers from the CPU, every iteration, it could make training your network prohibitively expensive.

So, let's take a closer look at those networks and how we can use the new random number generators.

So, generative adversarial networks or GANs are built around two networks. We have a generator network and a discriminator network.

Here we have an example of a generator, which just generates images of handwritten digits. Now, similar to image classification during training, we're going to provide the network with many examples of handwritten digits. However, instead of attempting to classify them, the network is going to attempt to generate new images from a random initial set of data to look similar to its training set. So, in order to perform this training process, we needed some way of determining how similar these images should be. So, for this second network, we're going to use what we call the discriminator.

Now as this name suggests, it's designed to discriminate between training images and those images which are simulated by the generator. So, in this case, it acts as an image classifier network but with only two possibilities. The input is either real, from the training set, or it's a generated, or a fake image. So you can see, here's the discriminator, looking at some numbers and coming with whether they're real or fake.

Now, typically both the generator and the discriminator are trained together. We trained the generator to produce more realistic images, while we trained the discriminator to better distinguish synthetic images from the training images. So, here we have a high-level overview of the nodes for your training network. So here's our discriminator training, training network.

It consists of two loss calculations. So, this is an example of where you could use the separable loss nodes we just talked about.

We have one loss where we attempt to ensure the discriminator properly classifies the simulated images as fake, and we have a second loss where we trained the discriminator to classify the real images from the training set as real. After computing the separate loss values, we can use an initial gradient node to initialize your training graph. And secondly, here we have the generator training network. This one is a little simpler. It just has a single loss value.

But in this case, we use a label value of real to ensure that our generator generates images, which the discriminator subsequently classifies as real.

Now, I mentioned earlier that the generator network begins with a random set of data that we're going to use our random number generator for. So, let's take a closer look at random number generation. Now random number generation kernels belong to the MPSMatrix subframework, and they're accessed through MPSMatrix random classes.

So, they operate on MPSMatrix and MPSVector objects, which means they work with metal buffers, and they support generating random integers with the underlying generator, or you can generate floating point values using a uniform distribution. So, here, we're going to create a distribution descriptor for uniform distribution of values between 0 and 1. Then we're going to create our generator, testing the proper data types, and then we give it an initial seed.

Finally, we create a matrix to hold the result, and we encode the operation to the command buffer. So, now let's go back to the network and see how we can use it. So, here's a closer view of the generator network. We have some convolution layers, some ReLu layers, and the hyperbolic tangent neuron. Now the input image is going to be the output of our random number generator.

As we saw before the random number generator works with matrices, but the graph and all the neural network kernels require images. So, we're going to use our MPS copy kernel to copy the data from the matrix into an image.

So, first we'll create a matrix to hold our random values.

Then we'll also create an image, which is going to serve as the input for our network.

And we're going to initialize a copy kernel to perform the copy.

Then were going to encode our random number generator to generate the values. We're going to encode the copy to copy them into the image, and now we're going to encode the network using the image. Now, for more details on this network and using MPSMatrix random number generation kernels, please see the online documentation. There's also some sample code.

Now we also added features to help improve the performance and efficiency of networks using MPS. So let's take a look at one of them now, predication. With predication, you can now conditionally execute MPS kernels.

The kernels' execution is predicated on values which exist in GPU memory, and they're referenced at the time of execution of the kernel.

So, let's take a look at a network, which illustrates how this can be used. This is image captioning. This is a network we showed a couple years ago, and it generates captions of images using a convolutional neural network and a recurrent neural network.

The convolution network is the common classification network. In this case, we're using Inception V3. It's going to be used to extract features from the source image. Then we take these feature maps, and we feed them into a small LSTM-based network where those captions are generated from the extracted features. Now, then we iterate this network to produce the image caption. In this case, we need to know, in this case, we need to run the LSTM-based network for some number of iterations, which is going to be fixed, and we need to do it at least as many times as we believe will be needed to generate the captions for the image.

In this case, for example, we run the LSTM-based network 20 times. Each iteration then computes the best captions by appending a new word to the captions produced in the prior iteration. But if the caption were to only require five words, then we've had to run many more iterations than we need.

With predication, we can end the execution early. In this case, after the five-word caption has been generated.

So let's look at how we can use this in MPS. But to do so, we need to first discuss how we provide predicate values to MPS commands, and for this, we introduce the MPSCommandBuffer. Now, MPSCommandBuffer is a class that conforms to the MTLCommandBuffer protocol, but it adds a little bit more flexibility. It can be used anywhere you're currently using metal command buff, and like a MTLCommandBuffer, it's constructed from a MTLCommandQueue. Now, it provides several important benefits. It allows you to predicate execution of MPS kernels, and as we'll discuss later, it allows you to easily perform some intermediate commits as you encode your MPS work, using a method called commitAndContinue, but we'll get back to that later. First, let's look at how we an use MPSCommandBuffers to supply predicates to MPS kernels. So an MPS predicate object contains a metal buffer, which contains 32-bit integer predicate values, and they're at an offset.

We take the value within the metal buffer at the offset as the execution predicate. Now, a value of 0 means we don't want the kernel to execute, and a nonzero value means to execute as normal. So, in this diagram here, we've effectively bypassed the execution of this kernel by setting the value at the offset to 0. And the offset is important. It can allow you to share a single metal buffer among multiple MPS predicate objects so you can send a predicate to multiple kernels. Each predicate value will be referenced with a different offset. Now, in order to use a predicate value, we have to attach it to an MPSCommandBuffer. This way, any MPS kernels that we encode on that command buffer will perceive the predicate values. So, let's take a look at how we can create a predicate and set it on an MPSCommandBuffer. So, first, we create an MPSPredicate object, and we attach the predicate to our MPSCommandBuffer. Now, we'll encode an operation that modifies the predicate values. Now because of the existing metal buffers, we need a kernel that produces its result in a metal buffer. You can use your own kernel, or you may be able to use one of the MPSMatrix kernels, which is what we're going to do here. So, we're going to start by wrapping the predicate in an MPSMatrix object. Then we're going to encode a kernel to modify the predicate value.

So, here, we're just using a linear neuron kernel, and we're going to use it to do something simple. We're just going to decrement the value of the predicate. And finally, we're going to encode a cnnKernel to read the value of the predicate prior to execution.

So, using predication in MPSCommandBuffers is an easy way of eliminating unnecessary work in your networks. If you have kernels, which can be bypassed, you can use predication to take advantage of the reduced workload. And if there are multiple kernels for which this applies, you can use multiple predicates and use only a single metal buffer by setting unique offset values. So, now let's talk about the other feature of MPSCommandBuffers, commitAndContinue. Now this is a method which allows you to easily get better GPU utilization when executing your work.

So, to see how it can benefit, let's first review how a typical workload is executed.

Now, the usual way of executing MPS kernels is to encode your work onto a command buffer and then commit it for execution. So, here we have a case of a single command buffer, you encode some work, and then we execute it afterwards.

Now, in reality, the CPU's encoding time is going to be less than the GPU's execution time, but we want to avoid any idle time due to throttling and things like that.

So you can see we're going to get some stalling here between the CPU and the GPU. Now, one way of solving this is to use double buffering. With double buffering, we're going to keep around two command buffers, and we're going to encode work to one while executing the other. Now, this should pretty well eliminate the idling that we saw before, but it has some limitations. So, first off, as I mentioned, you're going to have to keep two sets of work, which means you're going to have to find a way to partition your work into two independent workloads. And as a result, you can have substantially increased memory requirements.

However, we the commitAndContinue method, we can gain much of this performance benefit by dividing each workload into smaller portions.

So, here we're going to break down the work by utilizing independence of layers within each command buffer.

Then we're going to commit the smaller groups of work using double buffering. Now, commitAndContinue is automatically going to handle this internal division of work while also ensuring that any temporary objects that you allocated on the command buffer will remain valid for subsequent work to be encoded. As with double buffering, it allows you to execute work on the GPU while continuing to encode it on the CPU. And by easily allowing you to partition your workload, you can avoid the increased memory requirement of double buffering while still getting much improved GPU utilization.

So let's see how you can take advantage of this in your own code.

So here we have four MPS kernels we're encoding to a MTLCommandBuffer. And finally, we commit the work for execution.

As we showed earlier, this is going to give you the stalls that we saw. However, by using MPSCommandBuffers and the new CommitAndContinue method, we can easily improve this.

So, here we're going to create an MPSCommandBuffer.

We'll encode our first two kernels.

Then we'll call commitAndContinue. This will commit the work that we've already encoded, move any allocations forward, and allow us to immediately continue encoding the other two kernels. Finally, we can commit the remaining work using a regular commit. So you can see, using commitAndContinue requires very few changes to your code, but if you're taking advantage of the graph, it's even easier.

When you encode and MPS in graph using MPSCommandBuffer, it will automatically use commitAndContinue to periodically submit work throughout the encoding process. No further changes are needed. Simply use an MPSCommandBuffer instead of a MTLCommandBuffer. And finally, I want to point out that you can still combine commitAndContinue with double buffering and get even better performance. So, as you can see here, it allows you to eliminate even the small stalls that we saw with commitAndContinue. So, we now have a variety of options for committing our work for execution. You can use a single command buffer, executing a single piece of work at a time. For better performance, potentially with increased memory consumption, you can use double buffering.

And now, with MPSCommandBuffer, you can achieve nearly the same performance using commitAndContinue. And if you still want even better performance, you can use commitAndContinue and double buffering. So let's take a look at how these approaches perform on a real-world network. So for this case, were going to look at the ResNet 50 network running on a CIFAR-10 dataset. Now this data was measured using an external AMD Radeon Pro Vega 64 GPU.

It's a common image classification network with many layers, so it's a good example of what we can see with commitAndContinue. So we're going to start with our single buffering case as our baseline. We have performance and memory consumption here on the vertical axis. So, let's see how double buffering compares. Now we've improved the performance quite a bit, but we've also increased our memory consumption by a similar amount.

That's because we achieve double buffering by maintaining twice as much work in flight at any given time. So, let's look at using CommitAndContinue.

We come very close on the performance and with significantly less memory overhead, and here we also see CommitAndContinue along with double buffering.

We still get a little bit better performance, but we still use a lot more memory as well. So, you can see, using CommitAndContinue is a very easy way to achieve much better performance with minimal increase in memory pressure. So now, let's put all of these approaches together by looking at another application of machine learning, denoising. Now as this name suggests, denoising seeks to remove noise from a noisy image and produce a clean one.

Now, we're going to be looking at this in the context of ray tracing. If you saw the earlier metal for ray tracing session, you saw another example of denoising, one using image processing techniques. Here, we're going to be looking at a solution based on machine learning. So for this example, we'll look at three phases. We're going to create an offline training process. We're going to run the training network, and finally we're going to deploy the inference graph to filter new images.

So, first, we need to create the graph. Let's take a closer look at the structure. So here we're going to start with our input image, which is our noisy image, which came out of our ray tracer. We're going to feed this image into encoder stages. Now encoders are small subnetworks which extract higher-level feature representations while spatially compressing the image. We're going to pass these results into our decoder stages. Now these perform the reverse process. They're going to reconstruct the image from the feature maps. Now we're also going to use what are called skip connections. These boost features from the encoded image into each decoder stage.

This is done by forwarding the result from each encoder to its decoder. Finally, the denoised image is fully reconstructed.

So, let's take a closer look at the encoder stages. The encoder stage compresses the images while trying to learn how to preserve its features, consists of three pairs of convolution and ReLu layers and finally a max pooling layer.

Let's look at the code.

Now, as we saw before, we can construct each node in the sequence in the same order they appear in the network. And we'll construct the decoders in the same way. You start with an upsampling layer.

After this, we add the result of the corresponding encoder via the skip connection, and then finally we have two pairs of convolution and ReLu layers.

Again, as before, we're going to insert nodes corresponding to each layer in the network.

Now we can put our encoder and decoder stages together.

So, first we're going to connect our encoder nodes.

But before we move on and connect our decoder nodes, we need to put in one more encoder node, which we're going to call the bottleneck node. It's identical to an encoder except it doesn't have the final max pooling layer. And after the bottleneck nodes, we're going to connect our decoder nodes. Now, by passing the result image from the corresponding encoder nodes, we're going to satisfy the skip connections.

So now we have the inference graph. Let's look at the training phase.

To begin the training phase, we need to compute the loss value. So we're going to start we the inference, we're going to start with the result of the inference graph, which for a training iteration is now our network's best guess at the current denoised image. Now, we're going to take the clean RGB image for our ground truth, and we're going to use that to compute a loss value. Now, we're also going to want to compute a second loss. We're going to perform some edge detection. We're going to do this doing a Laplacian of Gaussian filter.

Now, we want to do this because we want our network to learn how to denoise the image, but at the same time we also want to make sure that it preserves the edges of the original image.

So, were going to implement the Laplacian of Gaussian or the LoG filter using convolutions here.

Finally, we're going to combine these two losses. The first loss we're going to call the RGB loss and the second the LoG loss, and we're going to combine these into the final loss.

So now let's take a closer look at how we do this. So, we're going to create our RBG loss node using the result of the inference graph and the ground truth RGB images. So, as you mentioned earlier, we can use separable loss kernels, and we're going to pass both of our, we're going to pass images for both our source and our labels. For our LoG loss, we need to apply the LoG filter to the target RBG images as well as the result of the inference graph.

So, were going to implement the LoG filter using convolution nodes.

We're going to compute the LoG loss using the results of the convolutions, and finally with both loss values computed, we can add them together to produce the final loss. Now with the final loss value, we can begin the back-propagation phase and look at the training graph.

So, we're going to do this as before by computing the initial gradient. With the initial gradient value, we can begin the training graph.

So, this involved several gradient nodes first for the addition followed by gradient nodes for each forward loss and then for the encoder and decoder stages. Now, implementing graph nodes for each of these layers would take a substantial amount of code and introduce plenty of opportunity for errors. However, with implicit graph creation, we can have the graph do all of this work for us. So, here's all we need to write to generate the training graph.

First, we add the initial gradient node using the result of the final loss.

Then using implicit graph creation, we generate all of the remaining gradient nodes.

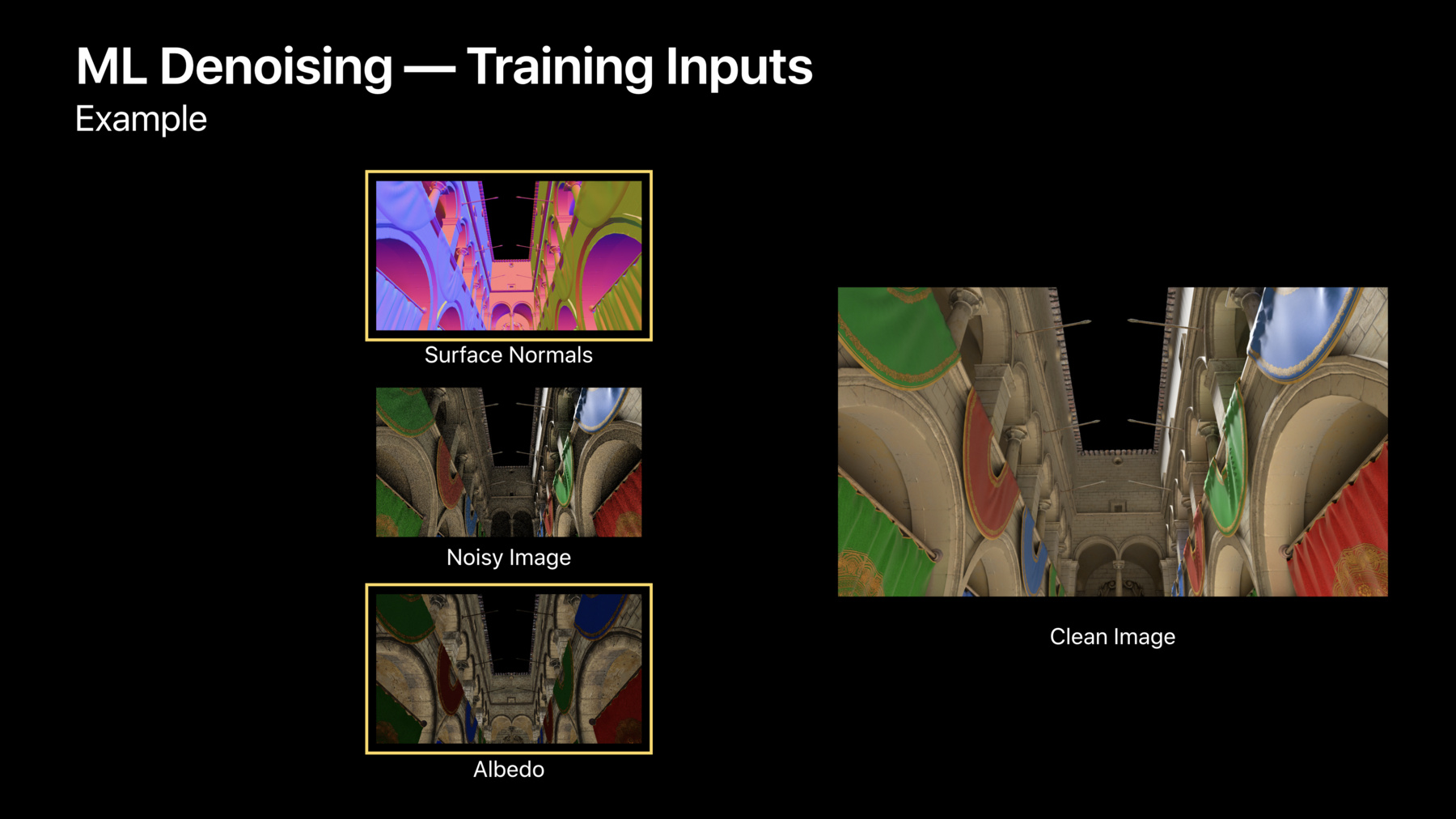

So now that we have our graph created, we can begin training it. So first, let's discuss our input training data. Now, the inputs are images for which we know the desired result. In this case we have noisy images and we have the corresponding clean images.

Now both images were generated using a ray tracer built on top of MPS. We generated the noisy images by only letting the ray tracer run for a short period of time. And the clean images we obtained by running the ray tracer for an extended period of time. Now, by training with these images, we hope our network will learn to approximate the clean ones from the noisy ones. And further, we're going to augment our input data with a few other images, also produced by a ray tracer.

Surface normal and albedo.

The albedo image is a three-channel image containing values which for the amount of reflected light, the surface normals are a three-channel image where each channel is going to contain a component of the surface normal vector. Now, before we can begin training, we need to do a little bit of preprocessing. So, as I mentioned, these all contain their data in three channels.

However, MPS networks and MPS cnnKernels use their images as four-channel textures. So, we're going to have to concatenate these values together. Now, because each image is three channels, we need to concatenate these into a single metal texture array, and we can't necessarily use the MPS cnn concatenation because it requires feature channels in a multiple of four. However, we can write a simple kernel to do this for us.

So here's a simple metal compute shader to concatenate these images together. We're going to start using a grid of threads mapped to each four-channel pixel the result. Our arguments are going to be a result to hold the concatenated image, the RGB input, the albedo input, and our normal image. So we're going to start having each thread read a pixel from each input at its location in the grid.

We're going to concatenate those values together, and we're going to fill the remaining unused channels with 0.

Finally, we're going to write out the result at its same location in the grid. So now that we have a shader which can concatenate these values together into a single MPS image, let's look at how we hand it to the graph.

Or rather, let's look at how we encode it first. So here's an example of how we encode our kernel and wrap the result in an MPS image.

So our inputs are images containing the data, and we're going to want to use the result as an input to the graph. So, we need to construct an MPS image. We're going to use its texture to hold the result of our concatenation kernel. Next, we're going to bind each argument at its appropriate location.

We'll dispatch our threads and then finally return the image ready to be passed into our network. So, now that our inputs are prepared, let's look at executing the training graph. Now during training, we'll be executing the graph from many iterations. We're going to be executing multiple batches within each training set, and then we're going to be executing multiple batches over each epoch.

So, here we're going to run one iteration of the training graph. We're going to concatenate our images together using the kernel we just showed except for each image in the batch.

We're going to put these together into and array because the graph requires an array of images, one for the source images and one for our labels. Now we're going to use MPSCommandBuffers here, because as we saw earlier, it's an easy way of getting improved GPU utilization.

So finally, we're going to encode the graph and then commit it for execution. So, now let's look closer at each training epoch. Now in this scheme, we're going to process the full training data set, each epoch, to allow for better convergence. We're also going to update the training set every some number of epochs, in this case every 100, and at that point, we're also going to perform our network validation. Finally, at every thousandth epoch, we're going to decrease the learning rate of our optimizer. This will also help improve convergence. So let's look at the code for this. So, we're going to begin by processing the entire training set once each epoch. Here we see every hundredth epoch. We're going to update our training data set, and we're going to run the validation.

And finally, every thousandth epoch, we'll decay our learning rate by a factor of 2. So, now that we've trained the graph, we can begin denoising new images. Now, because MPS is available and optimized across multiple platforms, we can easily deploy the training network on a different device. For example, you may want to execute the computationally expensive task of training on a Mac and then use the train network to filter images on an iPad. So, first, let's take a look at serialization support in MPS. Now all MPS kernels as well as the graph support a secure coding. This allows you to easily save and restore your networks to and from disk.

And for networks which load their weights from a data source, you're going to have to implement secure coding support on your data source yourself. Now this requires the support secure coding property and the init and encode with coder methods. Now, once your data source conforms to secure coding, it's easy to serialize and save the graph. So, first we're going to create a coder in which to encode the graph. Then we're going to call encode with coder on the graph. Now, when this happens, it's going to serialize each of the individual kernels, and if those kernels have data sources, it will serialize those as well. That way, the resulting archive contains all of the information necessary to restore and initialize the graph.

Finally, we can save the data to a file. Now let's look at loading it. So, in order to ensure at the unarchived kernels initialize on the proper metal device, we provide you with the MPSKeyedUnarchiver. It's like a regular unarchiver except you initialize it with a metal device, and then it will provide this device to all the kernels as they're initialized. So, after we load our data, we'll create an unarchiver with the device. We'll restore the graph on the new device, and with the train network now initialized, the graph is ready to be used to denoise new images. So, let's take a look at this network in action. So, here we applied our denoiser to a scene. The top region shows how the scene looks in our input noisy image. The center region shows the result of our denoiser, and you can see the bottom region shows the ground truth clean image. As you can see, the denoised region looks nearly as good as the clean target, except we're achieving this with significantly less work because were not running the full ray tracer. So as you saw, using MPS, we can easily implement complex networks like denoising and style transfer.

This year we've expanded support for inference and training to a new class of networks with features like separable loss and random number generation. And with MPSCommandBuffering, we now support improved performance and better utilization through things like predication and commitAndContinue, and we made all of these features easier to use through implicit graph creation. So, for more information about MPS and metal, please see the online documentation and our sample code, and for more information about MPS and ray tracing, please see the Metal for Ray Tracing session earlier.

Thank you. [ Applause ]

-