Accessibility design for Mac Catalyst

Make your Mac Catalyst app accessible to all — and bring those improvements back to your iPad app. Discover how a great accessible iPad app automatically becomes a great accessible Mac app when adding support for Mac Catalyst. Learn how to further augment your experience with support for mouse and keyboard actions and accessibility element grouping and navigation. And explore how to use new Accessibility Inspector features to test your app and iterate to create a truly great experience for everyone.

To get the most out of this session, you should be familiar with Mac Catalyst, UIKit, and basic accessibility APIs for iOS. To get started, check out "Introducing iPad apps for Mac" and "Auditing your apps for accessibility."

Hello and welcome to WWDC.

Hello, everyone. My name is Eric, and I'll be joined by my colleague Nathan to talk about accessibility design for Mac Catalyst.

Mac Catalyst has been a huge success for Apple. It is incredibly easy to use, and the developer community absolutely loves it. We already have some of the best apps on the App Store that are made with Mac Catalyst. And with all that great work out there, it is important to make your app usable for all of your customers.

And this is what brings us here today to talk about accessibility.

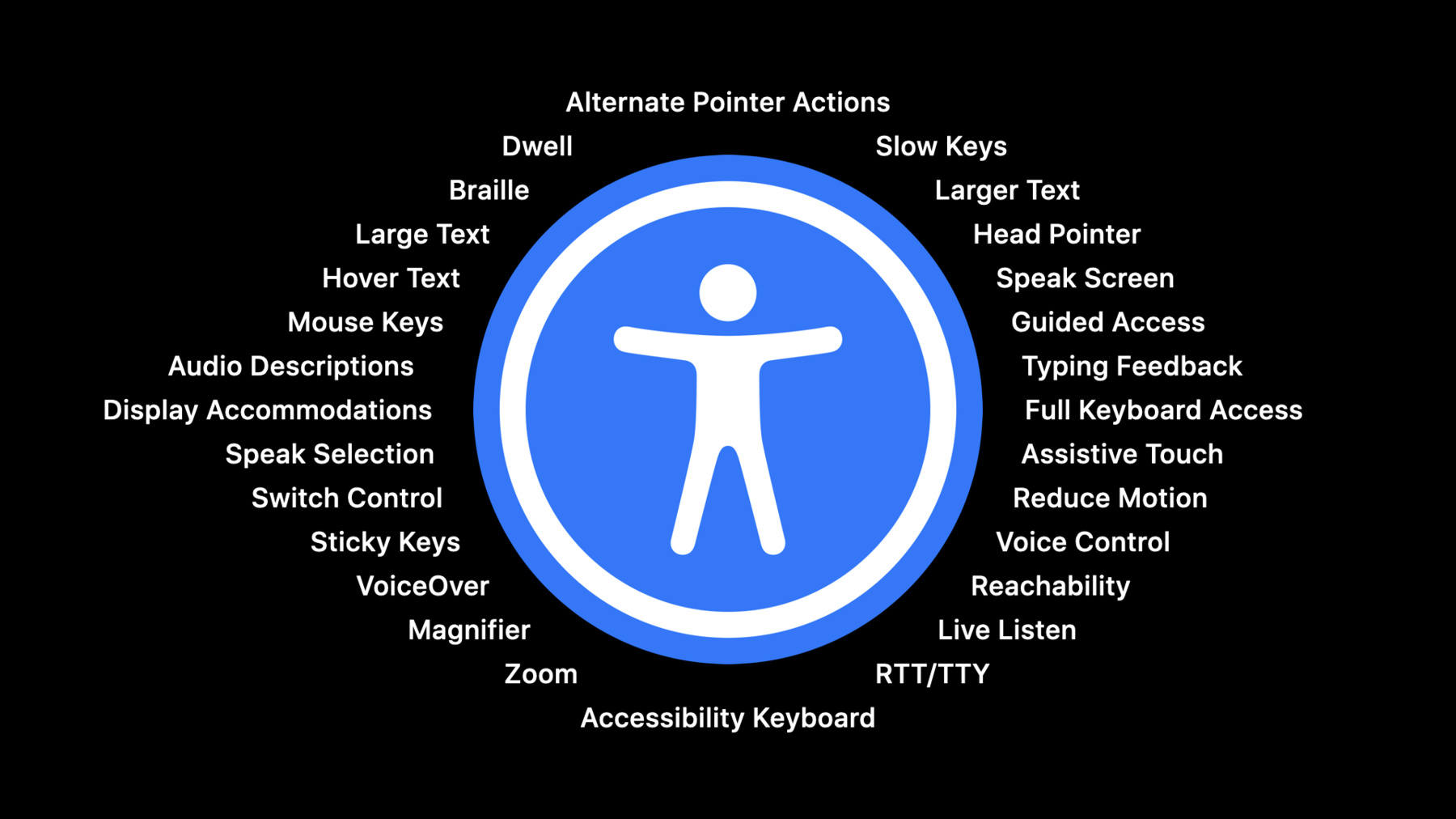

At Apple, accessibility is one of our core values. We have an array of assistive technologies on all of our platforms to help people with disabilities. Our accessibility team has been working hard to make sure that all the great work you've done for iOS accessibility carries over to Mac Catalyst. So, if you make your iOS app accessible, it is automatically accessible when you bring it to the Mac. This is so that you can continue to think in terms of iOS when working on Mac Catalyst apps.

We'll first talk about how to optimize your app experience for keyboard usage by improving focus behavior and adding keyboard shortcuts.

Then we'll walk through what you can do to enhance your app's navigation efficiency for assistive technologies. And finally, we'll give you some tips to test accessibility on macOS for Mac Catalyst apps.

So let's start with keyboard usage.

On macOS, the keyboard isn't just a supplementary interaction method like it is on iPadOS. Instead, it's the primary way users will interact with your app. So the goal is to make as much of your app's functionality as possible accessible with the keyboard.

And the first thing you could do is to check keyboard focus.

Keyboard focus determines which UI element is currently receiving input from the keyboard, and we want to make sure that every UI element of the app that the user can interact with can become focused. To demonstrate this, we will look at a sample app called Roasted Beans. It is a spin-off of the Peanut Butter app that we made accessible in previous years. While our Peanut Butter app was a great success, many were asking for a way to find the ideal cup of coffee to enjoy with their peanut butter toast.

So having finished building the iOS app and making it accessible, we now want to bring it to macOS using Mac Catalyst.

Let's see how this app interacts with the keyboard. To test this, we first need to turn on a system setting that enables keyboard interaction with controls. It is located in the System Preferences app, in the Keyboard section, under the Shortcuts tab: the checkbox "Use keyboard navigation to move focus between controls."

So let's go back to our app and see what happens if we press Tab. We'll see that the Add button on the right of the navigation bar now has a focus ring around it.

This means that the Add button has the keyboard focus, and if you press the space bar, the button will be activated. The next Tab press highlights the first item of the sidebar.

Users can press the up and down arrow keys to change the selection of the table view.

And subsequent Tab presses highlight the rest of the interactable controls of the app: the Share button, the Favorite button and the Gift button.

Wow, that is awesome! Without us doing any work, UIKit already makes all of our controls accessible with the keyboard, with the Tab key looping us through the controls of the app and the arrow keys changing the selection of the table view.

Now, if you have a UITableView or a UICollectionView elsewhere in your app, you might notice that arrow keys don't move the selection. All you need to do for arrow keys to change the selection is to set the new property selectionFollowsFocus to true on your UITableView or UICollectionView. In our sample app, because our table view is the sidebar of a UISplitViewController, UIKit sets this to true for us, so we don't need to do this step.
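As a minimal sketch of that setting on a collection view that lives outside a sidebar (the `collectionView` name and the flow layout are placeholders, not taken from the sample app, and the property requires iOS 14 or Mac Catalyst 14):

```swift
import UIKit

// Hypothetical collection view; any UICollectionView in your app works the same way.
let layout = UICollectionViewFlowLayout()
let collectionView = UICollectionView(frame: .zero, collectionViewLayout: layout)

// Moving keyboard focus to a cell now also selects it,
// so arrow keys change the selection.
collectionView.selectionFollowsFocus = true
```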

To learn more about how to further customize your keyboard experience, please check out the sample app and watch the session "What's New in Mac Catalyst."

Now that we've made sure that all of the interactable controls of the app can take focus, we can talk about the next thing you could do about keyboard usage: adding keyboard shortcuts.

Right now, in order for our users to add a new coffee or rate a coffee, they can only do so by clicking on the screen.

For people who use assistive technologies, finding on-screen controls to interact with can become tedious.

So it would be nice if they were able to perform actions through a quick keyboard shortcut.

So let's see what we need to do if we were to add a keyboard shortcut for sharing a roast with friends.

To do that, I first need to find out the best keyboard combination to use because we want it to be as intuitive as possible for the customers.

You can first check out Apple's guidelines for a list of commonly used keyboard shortcuts. If your app has similar functionality, you could consider using these keyboard combinations for your shortcuts because users are already familiar with them.

For us, sharing isn't on the list here, so another way is to check out the existing apps on the Mac to see if they have something similar. So I checked out the Safari app on the Mac that everybody loves. It uses Command-I as a shortcut for sharing. And I've just decided to do the same.

To do this, we first need to override the buildMenu function in AppDelegate.

We need to create a UIKeyCommand that responds to the keyboard shortcut. We assign a localized string as the title, because this will show up in the menu bar. Then we assign the action that gets triggered for the command, set the input to the letter "I" and set modifierFlags to command.

Then we need to create a UIMenu that takes the shareCommand as its only child, and insert this new menu at an appropriate place in the menu bar. For this demo, I chose to insert it at the end of the Edit menu. Now if you open the Edit menu, you will see that our new keyboard shortcut, Share, appears nicely under the Edit menu, and it can be accessed by pressing Command-I.

And don't forget that all the work you do to optimize for Mac Catalyst is also a great optimization for Full Keyboard Access on iOS, which is a feature that allows users to use the device with just the keyboard.

In addition, your app can get the exact keyCode from a UIPress object starting in iOS 13.4. This is useful if you're developing a game, for example, because this API gives you full control of the keyboard. And don't forget to check out our sample app for these usages.

For a quick recap, a great app designed for the keyboard is a great app for accessibility.

It is important to make sure that interactable controls are accessible with keyboard focus.

And we recommend adding some useful keyboard shortcuts to your app.

So that's it for keyboard usage. Next, I will hand it over to my colleague, Nathan, to talk about how you can improve navigation efficiency for assistive technologies.

Thanks, Eric. Hey, folks, my name is Nathan. I'm a software engineer on the Accessibility Team. My team and I have been working really hard to improve how our macOS assistive technologies are going to interact with your Catalyst apps. Like Eric touched on earlier, making your app accessible is to make it usable for everyone. Part of making your app usable is to give users an efficient way to access content.

Today we'll focus on VoiceOver. It's a screen reader that exists on all of our platforms, and it allows users with low or no vision to interact with your app by reading out content on screen. VoiceOver does this through interacting with a tree of accessibility elements based off of your user interface.

On macOS, you might be delivering a more complex app with a bigger user interface, to take advantage of the additional screen real estate. A more complex interface means there are even more accessibility elements for VoiceOver to navigate. This means users need an efficient way to navigate or they could begin to feel overwhelmed.

That's why today, I'm very excited to talk to you about accessibility groups. It's a strategy you can use to improve your app's navigation for VoiceOver users. We've made it possible to use grouping to deliver a more native macOS experience. But before we can talk about improving navigation efficiency, it's important to first understand how VoiceOver sees your app.

This is how VoiceOver sees your app. It's what we call the accessibility tree. It's a collection of elements that are visible by all of our assistive technologies.

Views that are accessibility elements are determined by the isAccessibilityElement property. Each element is a leaf node, which results in a single-level tree of elements. This model works great on iOS. Users navigate with a touchscreen, which means they can navigate elements one by one or quickly jump to them by tapping them on screen.

However, on macOS, VoiceOver users navigate using a keyboard. Without a touchscreen, they cannot quickly jump between elements.

So if we were to use the same model, all accessibility elements would have to be navigated one by one.

To illustrate this navigation challenge, let's take a look at the accessibility elements in our Roasted Beans app. Here you can see there are 26 visible accessibility elements. That means there are at least 26 elements a user would have to navigate through at any given time.

Imagine if it took you 26 keystrokes in Xcode to reach that Compile button. You would probably find programming quite a bit more challenging. While a keyboard shortcut could be added so that navigation to a specific element isn't required, it won't solve every use case.

So what can we do to improve this experience? What if we were to take inspiration from a dinner menu? Instead of the menu presenting a long list of every dish, the dishes are categorized into related sections, such as salads, main courses and side dishes. This would allow a VoiceOver user to navigate between groups before navigating individual elements in a specific group.

We can apply this same idea to the accessibility tree. You can define a relation between elements through the use of accessibility containers.

When you set accessibilityContainerType on an element, our assistive technologies will be able to use that information to provide a better navigation experience for the accessibility elements it contains.

You may have already known about the accessibility container API and how it helps users on iOS navigate. For example, it allows them to perform a touch gesture to navigate to the first accessibility element in the next container. VoiceOver would then focus on elements like this.

Since our goal here was to improve navigation efficiency for Mac Catalyst, VoiceOver on macOS will leverage this same API, but adapt the behavior. Accessibility containers on macOS will behave as accessibility elements. This means VoiceOver can move focus to the container itself. From here, users can choose to skip past the container to the next element or interact with the container, allowing for exclusive navigation of the container's accessibility elements.

So when I say accessibility containers will behave as an accessibility element, this is because when we build the accessibility tree for a Mac Catalyst app, the container becomes its own node on the tree and its accessibility elements become their own subtree. This structure closely aligns with the accessibility API built around AppKit.

This model works great for macOS because it significantly reduces the number of accessibility elements a user needs to navigate at any given time.
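To make the difference between the two models concrete, here is a minimal sketch in plain Swift. The `AXNode` type, the two helper functions, and the element names and counts are all hypothetical: they only model the idea and are not part of UIKit or the Roasted Beans sample.

```swift
// Toy model of an accessibility tree; not a UIKit API.
enum AXNode {
    case element(String)               // a leaf accessibility element
    case container(String, [AXNode])   // a labeled group and its children
}

/// Flat, iOS-style view: containers are transparent,
/// so every leaf has to be navigated one by one.
func flattenedCount(_ nodes: [AXNode]) -> Int {
    var count = 0
    for node in nodes {
        switch node {
        case .element:
            count += 1
        case .container(_, let children):
            count += flattenedCount(children)
        }
    }
    return count
}

/// Grouped, Mac Catalyst-style view: each container is a single stop
/// until the user chooses to interact with it.
func topLevelCount(_ nodes: [AXNode]) -> Int {
    return nodes.count
}

// Illustrative window contents (made-up names and sizes).
let window: [AXNode] = [
    .element("Add"),
    .container("Coffee list", (1...10).map { AXNode.element("Coffee \($0)") }),
    .container("Available at 3 locations", [
        .element("Downtown"), .element("Harbor"), .element("Airport")
    ]),
    .element("Share")
]

print(flattenedCount(window))  // 15
print(topLevelCount(window))   // 4
```

In this toy layout, grouping cuts the number of stops at the top level from 15 to 4, at the cost that the grouped elements are only reachable after interacting with their container.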

The key takeaway here is that accessibility containers are accessibility elements on Mac Catalyst, so ensuring you have set standard accessibility properties, such as an accessibility label, on your containers is essential.

Looking back to our Roasted Beans app, what would we see now that the accessibility containers behave as independent nodes in the accessibility tree? Here you can see a dramatic efficiency improvement. This reduces the number of visible elements from 26 to 8.

Now that we've seen how much of an impact accessibility containers have on navigation, let's take a few moments to discuss the different types and when you should use them. Data tables are specifically for when your container, such as a graph, adopts the UIAccessibilityContainerDataTable protocol. Lists are for ordered content. These are primarily used in web pages or in a PDF's table of contents. Landmarks are containers used specifically for web pages and tvOS. Lastly, semanticGroups are a general container type on iOS. On iOS, their accessibility label will be spoken the first time VoiceOver users focus on an element within that container. On macOS, their label will be spoken when VoiceOver focuses on the container itself.

So in our case, we want to be using the semanticGroup type to improve the navigation experience. While grouping can greatly improve the navigation experience, I want to be sure that you understand that too much grouping can overcomplicate the navigation of your app. Since each accessibility container becomes a node in the accessibility tree, elements within the container are not discoverable unless a VoiceOver user explicitly interacts with the group.

So let's take a look at a few examples to see when we should group elements. To start things off, elements belonging to the same functional section should be grouped. But how do we discover these functional sections? Let's take a look at Swift Playgrounds, a recent Mac Catalyst app. Imagine you have a friend who just started learning how to code. They open up Swift Playgrounds and start one of their first lessons and unfortunately, they get stuck on one of the problems. But luckily for them, they have you to call upon.

Imagine yourself talking to them over the phone. How would you help them navigate the app? You might ask, "Do you see a list of chapters on the left? Which chapter are you on? In the world view on your right, which tile is your character, Byte, standing on?" Or maybe you're trying to teach them more about writing code, in which case you could separate the middle into two functional groups, the top being the editor where you write code and the bottom being the auto-complete suggestions. By walking through how you might verbally describe your app, you can more easily identify these functional sections that should be placed into accessibility containers.

Another case where you should use accessibility containers is when elements are related by type or functional purpose.

For example, UINavigationBar, UITabBar, UICollectionView and UITableView are some of the standard UIKit views that are accessibility containers of type semanticGroup by default. So if you have created your own custom UI elements that act as tab bars or navigation bars, please follow the expected default behavior by configuring them as accessibility containers.
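A minimal sketch of what that could look like for a custom tab bar follows. The `SegmentBarView` class and its label text are hypothetical and not from the sample app; only `accessibilityContainerType` and `accessibilityLabel` are the UIKit properties discussed in this session.

```swift
import UIKit

/// Hypothetical custom view that plays the role of a tab bar.
final class SegmentBarView: UIView {
    override init(frame: CGRect) {
        super.init(frame: frame)
        configureAccessibility()
    }

    required init?(coder: NSCoder) {
        super.init(coder: coder)
        configureAccessibility()
    }

    private func configureAccessibility() {
        // Match the default behavior of UITabBar and UINavigationBar.
        accessibilityContainerType = .semanticGroup

        // On Mac Catalyst the container is navigable itself, so give it a label.
        accessibilityLabel = NSLocalizedString("Tab bar",
                                               comment: "Label for the custom tab bar group")

        // isAccessibilityElement keeps its UIView default of false,
        // so the buttons inside the bar remain reachable.
    }
}
```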

Now let's step through an example in our Roasted Beans app to see how easy it is to improve the navigation experience in your own apps. Starting on the left, we have a UITableView. It's an accessibility container of type semanticGroup by default.

Recall that accessibility containers are independent accessibility elements in our Mac Catalyst app. This means that just like any other accessibility element, we recommend giving the container a localized accessibility label. In this case, "Coffee list" would be appropriate. Since our UITableView maintains some state about which coffee is selected, we could make this label even better by adding which coffee is selected to the label.

Here is a great time to pause and remind everyone of the importance of adding great accessibility labels to their apps. To learn how to make great accessibility labels, we have a presentation from 2019 which I encourage you to watch.

Coming back to our app, what about the details view on the right? It's a functional section that holds all the information related to the selected coffee. Because we did not use a UITableView or UICollectionView, the details view isn't already grouped, so we have to manually define these accessibility containers. Today I'll show you how easy it can be to take something like our availability UI and make it a container. We implemented this as a series of UILabels in a vertical UIStackView. This means a user would have to navigate through each element. We could make this navigation experience better by adding an accessibility container, so that VoiceOver users can quickly navigate past the entire list.

From here, in just a few lines, we can make the UIStackView an accessibility container and give it an accessibility label that describes the group.

These are some fantastic improvements to our app. By placing these related elements into groups, we can greatly improve how VoiceOver users will navigate our Mac Catalyst app. And so I hope you take away the importance of adding accessibility containers to your app. It's a small change you can make that will greatly improve the navigation experience on macOS and on iOS.

Please ensure that your accessibility containers have a concise localized label that describes the group and includes any important details regarding the container's state.

That's all I have for you today regarding navigation efficiency. I'll now pass things back to Eric, where he will show you how to test your Mac Catalyst app for accessibility.

Thank you, Nathan. With all the great work that you've done to improve your app's accessibility experience, now is a great time to talk about testing on the macOS platform. When bringing apps from iOS to the Mac, you can think of the app as an iOS app on the inside, but a Mac app on the outside, because it is running on the macOS platform.

As it happens, when you work with accessibility APIs from UIKit, our team automatically converts your iOS accessibility code to macOS without you having to do any work, so that you can continue to think about iOS. To help you better understand what's going on under the hood, we've improved the Accessibility Inspector for Mac Catalyst apps, and now it shows you the iOS APIs when running on macOS.

If you have not used Accessibility Inspector before, I highly recommend watching these two videos, "Auditing Your Apps for Accessibility" and "Accessibility Inspector." Together they will give you a complete guide on how Accessibility Inspector can help you find and fix accessibility issues for your apps across all Apple platforms. So now let's see how to use the Accessibility Inspector to audit your app's accessibility on Mac Catalyst.

If we use the Inspector to inspect the elements for the cell, we can see the element has a proper label that describes the title of the coffee and a proper value that describes the rating.

We also see that there's a new Catalyst section that shows the accessibility traits and container types from iOS. If we then press Command-Control-Up, the Inspector will inspect the parent of the cell element, which serves as the container.

We could verify that this is the correct element to inspect by checking the element class. In this case, the class name shows that this is the element that we translated for the tableView class. All classes from UIKit will be eventually translated to a Mac platform element.

In addition, Inspector tells you the view controller of the view if it has one. In this case, it is the RBListViewController from the app. To make sure that VoiceOver reads the container, we can check that the tableView container has a proper label, which is the localized "Coffee list."

It also has the correct container type which is a semanticGroup.

And starting this year, Accessibility Inspector tells you the automation type for all elements. In this case, it is a table, so that you know exactly how to find it in XCUI tests.

So that's a brief overview of some of the additional things you can do with Accessibility Inspector for Mac Catalyst apps. We hope you enjoyed it.

So we've covered a lot today for accessibility in Mac Catalyst.

To wrap up, we first discussed how a great keyboard app is a great app for accessibility, by making sure the controls of the app are accessible by keyboard focus and adding keyboard shortcuts.

Then we showed you how you can improve navigation efficiency for assistive technologies for Mac Catalyst apps.

We learned how the existing iOS API accessibilityContainerType can make a much bigger impact on the Mac, and how you should adopt it in your app. And our last piece of advice is to use the Accessibility Inspector. This is a great tool that we created to help developers like you.

So thank you for joining us, and we hope this presentation will help you create a better accessibility experience for Mac Catalyst apps.

4:11 - Ensuring selection automatically triggers when focus moves to a different cell

```swift
myTableView.selectionFollowsFocus = true
```

6:01 - Creating a keyboard shortcut

```swift
extension AppDelegate {
    override func buildMenu(with builder: UIMenuBuilder) {
        super.buildMenu(with: builder)

        let shareCommand = UIKeyCommand(title: NSLocalizedString("Share", comment: ""),
                                        action: #selector(Self.handleShareMenuAction),
                                        input: "I",
                                        modifierFlags: [.command])

        let shareMenu = UIMenu(title: "",
                               identifier: UIMenu.Identifier("com.example.apple-samplecode.RoastedBeans.share"),
                               options: .displayInline,
                               children: [shareCommand])

        builder.insertChild(shareMenu, atEndOfMenu: .edit)
    }

    @objc
    func handleShareMenuAction() {
    }
}
```

7:20 - Responding to raw key codes

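```swift
extension MyViewController {
    override func pressesBegan(_ presses: Set<UIPress>, with event: UIPressesEvent?) {
        switch presses.first?.key?.keyCode {
        case .keyboardLeftGUI:
            // Handle command key pressed
            break
        case .keyboardB:
            // Handle B key pressed
            break
        default:
            // Pass presses this view controller does not handle up the responder chain
            super.pressesBegan(presses, with: event)
        }
    }
}
```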

15:45 - Adding accessibility labels to containers, such as UITableView and UICollectionView

```swift
tableView.accessibilityLabel = NSLocalizedString("Coffee list", comment: "")
```

15:50 - Making great accessibility labels that include state

```swift
extension RBListViewController {
    override func tableView(_ tableView: UITableView, didSelectRowAt indexPath: IndexPath) {
        let data = tableData[indexPath.row]

        let label = NSLocalizedString("Coffee list", comment: "")
        let selectedLabel = NSLocalizedString("%@ selected", comment: "")

        // Combine the group name with the current selection, e.g. "Coffee list, <brand> selected"
        tableView.accessibilityLabel = label + ", " + String(format: selectedLabel, data.coffee.brand)
    }
}
```

16:45 - Adding accessibility containers to improve the navigation experience

```swift
let stackView = UIStackView()
stackView.axis = .vertical
stackView.translatesAutoresizingMaskIntoConstraints = false

let locationsAvailable = viewModel.locationsAvailable

let titleLabel = UILabel()
// bold() is not a UIKit API; it is assumed to be a UIFont helper defined in the sample project.
titleLabel.font = UIFont.preferredFont(forTextStyle: .body).bold()
titleLabel.text = NSLocalizedString("Availability: ", comment: "")
stackView.addArrangedSubview(titleLabel)

for location in locationsAvailable {
    let label = UILabel()
    label.font = UIFont.preferredFont(forTextStyle: .body)
    label.text = "• " + location
    // Leave the bullet character out of what VoiceOver reads
    label.accessibilityLabel = location
    stackView.addArrangedSubview(label)
}

// Label the group and mark the stack view as an accessibility container
stackView.accessibilityLabel = String(format: NSLocalizedString("Available at %@ locations", comment: ""),
                                      String(locationsAvailable.count))
stackView.accessibilityContainerType = .semanticGroup
```