
Detect and diagnose memory issues

Discover how you can understand and diagnose memory performance problems with Xcode. We'll take you through the latest updates to Xcode's tools, explore Metrics, check out the memgraph collection feature in XCTest, and learn how to catch regressions using a Performance XCTest.


Hi, my name is Tanuja, and I'm an engineer on the OS Performance team. Today Stefan and I are going to talk about how to detect and diagnose memory issues in your applications. We'll start by looking at the impact of an application's memory footprint.

We will then talk about the tools to profile your memory usage and the types of memory issues you may encounter.

Let's jump right in.

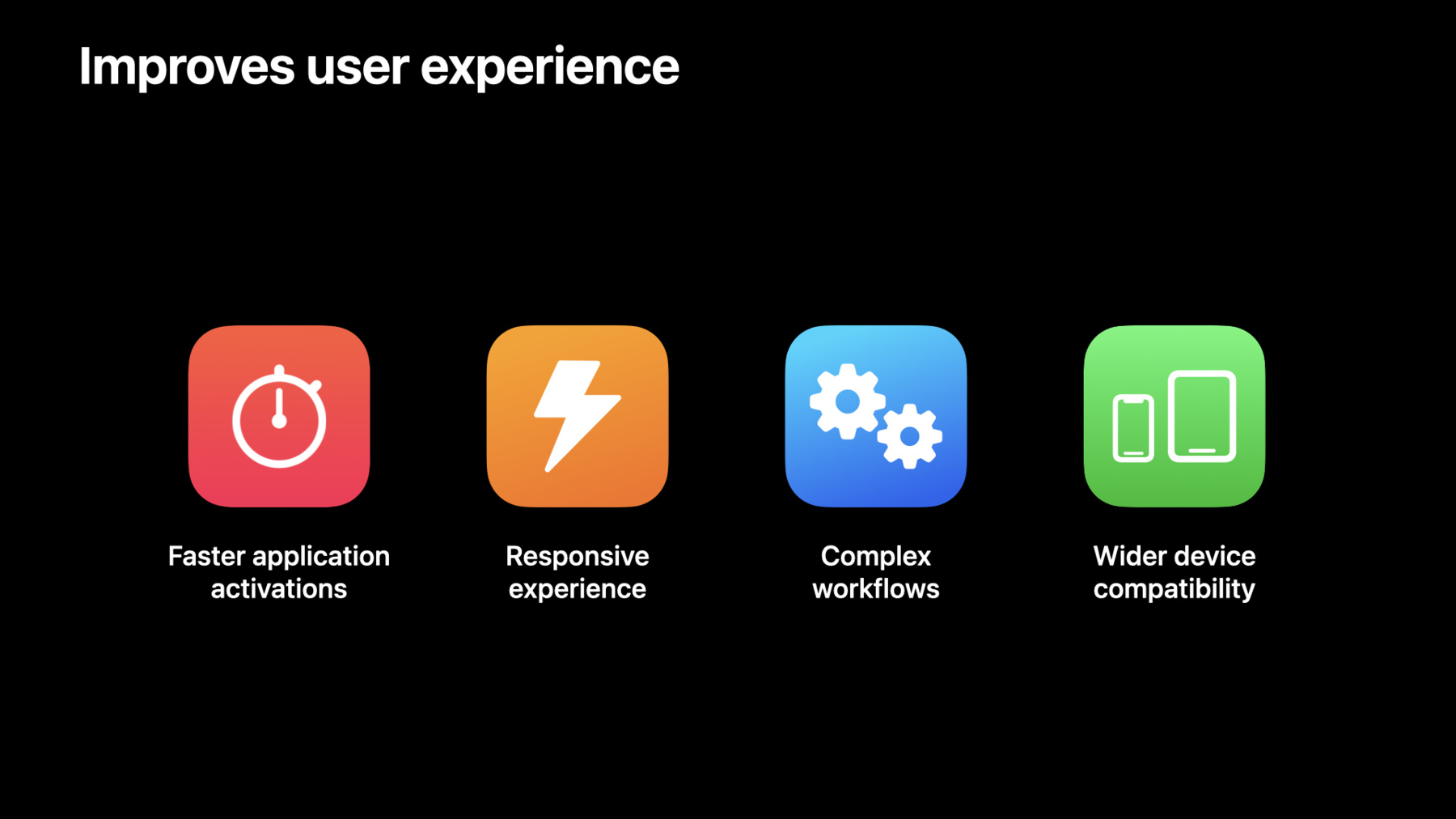

One question you might immediately ask is, why should I care about my application's memory footprint? The key reason is that it improves your app's user experience tremendously. There is a finite amount of memory on the system and monitoring your app's memory use can prevent the system from terminating your app to reclaim that memory.

This means your app can preserve its state when it's in the background. That matters because loading into memory takes time: keeping your memory footprint compact increases the chance that your application stays in memory, which leads to faster app activations.

Reducing your memory use also results in a dynamic, responsive experience which is exactly what your users want as they explore your new features.

By being strategic about what your app has loaded into memory, your app can avoid the cost of waiting for memory to be reclaimed as your user interacts with it.

Being strategic about your memory usage also opens the door to a wider range of features you could add to your app, such as loading videos, including animations, and a whole lot more.

Finally, our devices are constantly evolving, and newer devices have more physical memory than older ones. By reducing your memory footprint, you help your app perform just as well on older devices, increasing the audience that can enjoy it.

By monitoring your app's memory footprint, your app will activate faster, be more responsive, handle complex features, and be performant on a wider range of devices. Let's now take a look at what makes up your memory footprint.

There are three categories we use to break down your application's memory profile: dirty, compressed, and clean memory. Let's take a quick look at what each of these includes.

Dirty memory consists of memory written by your application. It also includes all heap allocations, such as memory you allocate with malloc, as well as decoded image buffers and frameworks.

Compressed memory refers to dirty pages that haven't been accessed recently and that the memory compressor has compressed. These pages are decompressed when they're accessed again.

Note that iOS has no concept of swap; swap is specific to macOS.

And finally, we have clean memory: memory that hasn't been written to, or data that can be paged out. Examples include memory-mapped files, such as images on disk that are loaded into memory, and frameworks.

When we refer to your application's memory footprint, we're really talking about your app's dirty memory and compressed memory together. Clean memory doesn't count toward it.
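As a quick illustration of that definition, here is a minimal Swift sketch that models the three categories and derives the footprint from them. The `MemoryBreakdown` type and the byte counts are hypothetical; the point is only that clean memory is left out of the sum.

```swift
/// Illustrative model of the three memory categories described above.
/// The type and property names are hypothetical; all values are byte counts.
struct MemoryBreakdown {
    var dirtyBytes: UInt64
    var compressedBytes: UInt64
    var cleanBytes: UInt64

    /// Footprint = dirty + compressed. Clean memory is excluded because the
    /// system can discard it and reload it from disk when needed.
    var footprintBytes: UInt64 {
        dirtyBytes + compressedBytes
    }
}

// Hypothetical numbers: 80 MiB dirty, 20 MiB compressed, 150 MiB clean.
let sample = MemoryBreakdown(dirtyBytes: 80 << 20, compressedBytes: 20 << 20, cleanBytes: 150 << 20)
print(sample.footprintBytes >> 20, "MiB") // 100 MiB
```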

This is a high-level understanding of your memory footprint. For a more in-depth, detailed explanation, we recommend you check out the iOS memory deep dive talk from WWDC 2018. Let's now take a look at the tools you can use to profile your memory footprint.

Xcode offers a suite of tools to help monitor your app's memory performance throughout both the development and production workflows.

The XCTest framework helps you monitor your memory footprint directly in your project's unit and UI tests, while MetricKit and the Xcode Organizer allow you to monitor memory metrics in production from your customers.

The rest of this talk uses performance XCTests as its context, but these techniques also apply to general memory triage and investigations.

Using performance XCTests, you can measure system resources such as memory utilization, CPU usage, disk writes, and a whole lot more. Let's take a look at an example test together.

Let's say I'm an app developer at Meal Planner, an app that helps you organize what you will eat in a week, and I want to measure the memory usage of a new save meal feature I've added that lets users download a recipe to their device.

In my performance test, I'm using the measure(metrics:options:block:) API and I'm specifying that I want to measure the memory use of my targeted application. In the body of the measure block, I'm launching the application, manually telling the measure API to start measuring, and then tapping on the save meal button.

I wait up to 30 seconds for the recipe download to complete by checking for an update in the UI.

Now I can run this test directly in Xcode's UI to view my measurements.

I can access my measurements by clicking on the gray diamond next to my test. The results popover UI has a drop down that shows me which metrics were measured.

The bar graph on the bottom shows me the measurements for each individual iteration.

The average of all five iterations is computed and displayed.

I can now decide if I want to set this run's average as my baseline for future tests to be compared against.

A future run of the test would now fail if its average is greater than the set baseline. We call this deviation from the set baseline a regression.
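To make the pass/fail rule concrete, here is a simplified Swift model of comparing a run's average against a baseline. It is a sketch, not XCTest's implementation: it treats any average above the baseline as a regression and ignores any tolerance Xcode may apply. `MeasurementRun` and the sample values are hypothetical.

```swift
/// Simplified model of comparing a performance test run against a baseline.
/// Assumption: a run regresses when its average exceeds the baseline; any
/// tolerance the real comparison applies is ignored here.
struct MeasurementRun {
    /// One measurement per iteration (for example, memory in kB).
    /// Assumes at least one iteration.
    let iterations: [Double]

    var average: Double {
        iterations.reduce(0, +) / Double(iterations.count)
    }

    /// Percentage change of this run's average relative to the baseline.
    func percentChange(from baseline: Double) -> Double {
        (average - baseline) / baseline * 100
    }

    func isRegression(against baseline: Double) -> Bool {
        average > baseline
    }
}

// Hypothetical values: five iterations averaging 112 against a baseline of 100.
let baseline = 100.0
let run = MeasurementRun(iterations: [110, 112, 114, 111, 113])
print(run.isRegression(against: baseline))
print(run.percentChange(from: baseline))
```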

A regression indicates that we should stop, investigate, and fix our code to make sure that the test passes.

We're excited to share that in Xcode 13, we've added a new feature that collects diagnostics to help triage these test regressions. There are two diagnostics we find valuable: ktrace files and memory graphs.

Ktrace files are powerful and versatile. They can be used for general system investigations, or they can focus on specific issues, such as diving into the rendering pipeline when investigating hitches, or looking into why your main thread might be blocked, resulting in a hang.

These ktrace files can be opened and analyzed in Instruments using your normal workflow.

The second diagnostic is the memory graph, which is great for memory-specific investigations. Memory graphs can be used with Xcode's visual debugger as well as a variety of command line tools, some of which we'll cover later in this talk.

A memory graph is essentially a snapshot of your process's address space at an instant in time. Memgraphs record the address and size of each virtual memory region and each allocated malloc block, as well as pointers between those regions and blocks. This allows you to inspect individual objects on the heap, view data regions associated with linked frameworks, and more.

XCTest automatically enables malloc stack logging, which captures backtraces for newly allocated objects.

To enable diagnostic collection, use the xcodebuild command line tool with the enablePerformanceTestsDiagnostics flag. This flag enables ktrace collection for non-memory metrics and memgraph collection for memory metrics.

Once our previously written performance test is done running, we see the following printed to our console. This is quite a lot, but there are a few key things to look for.

The first thing to look for is if the test failed or passed. In this case, the test failed.

The output also calls out that the test failed specifically due to a regression. Our new average is 12% worse than our baseline.

Finally, we can find the path to our xcresult bundle.

When we open the xcresult bundle in Xcode, we see the memory measurements at the top next to the test name.

We can then expand the test logs and towards the bottom, we can find our attached memgraphs.

Once downloaded and unzipped, we find 2 memgraphs. This is because we append an additional iteration to your test to enable malloc stack logging. We collect an initial memgraph, prefixed with pre, at the beginning of the measured iteration, and we collect a second memgraph, prefixed with post, at the end of the iteration. This allows you to analyze the memory growth over the period of one single iteration if needed.

Now, with ktrace files and memory graphs with malloc stack logging enabled, you're ready to answer not only whether a regression occurred, but also why it occurred. I'll now hand it off to my colleague, Stefan, to talk about the types of memory issues you may encounter when looking into your collected memgraph diagnostics.

Thanks, Tanuja. Hi, everyone. I'm Stefan, an engineer on the OS Performance Team. I'll be going over some common memory issues you might find in your app, as well as how you can diagnose, fix, and prevent them.

I'll go over two types of memory issues: leaks and heap issues, which can be broken down into heap allocation regressions and fragmentation issues. This is not an exhaustive list, but it does cover some of the most common issues. I'll also cover some command line workflows that can be used to diagnose these issues. For a more in-depth summary of the command line tools, check out the iOS memory deep dive talk from WWDC 2018.

Let's start by discussing memory leaks.

A leak occurs when the process allocates an object and loses all references to it without ever deallocating it. Here I have an example object graph, where the gray arrows denote references between objects. Notice that every object has at least one reference to it.

Notice the dotted line reference from object A to object B. Let's say I set this reference to nil, removing it.

With that reference gone, object B has been leaked. There are no references to it at all. It's still dirty, but the process has no way to reference it and no way to free it until it exits. For this reason, you should always fix leaks.

A common way objects leak in Swift is via retain cycles. In this diagram, objects A and B are in a retain cycle. They reference one another, but there are no external references to either of them. This means the process can't access or free either of them, so they're considered leaked.
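Both cases above come down to reachability: an object is leaked when nothing reachable from the process's roots points to it, even if objects in a cycle still point at each other. The following Swift sketch models that idea over a tiny object graph. It is a conceptual illustration under simplified assumptions, not the `leaks` tool's actual implementation, and the function and node names are hypothetical.

```swift
/// Conceptual sketch: an object counts as leaked when it can't be reached
/// from any root (globals, stacks, registers). Objects in a cycle still
/// reference each other, so their reference counts never drop to zero,
/// but if no root reaches them they are leaked all the same.
func leakedObjects(edges: [String: [String]], roots: Set<String>) -> Set<String> {
    var reachable = Set<String>()
    var stack = Array(roots)
    // Depth-first walk from the roots, following every reference.
    while let node = stack.popLast() {
        guard reachable.insert(node).inserted else { continue }
        stack.append(contentsOf: edges[node, default: []])
    }
    // Every object that appears anywhere in the graph.
    let allObjects = Set(edges.keys).union(edges.values.flatMap { $0 })
    return allObjects.subtracting(reachable)
}

// B and C reference each other, but nothing reachable from "root" points to them.
let graph = ["root": ["A"], "A": [], "B": ["C"], "C": ["B"]]
print(leakedObjects(edges: graph, roots: ["root"]).sorted()) // ["B", "C"]
```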

Fortunately, most objects in Swift are managed by Swift's automatic reference counting system, or ARC, which prevents a lot of leaks. If you're working with objects not managed by ARC, such as unsafe pointers, make sure you deallocate them before you lose your references to them.
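Here is a minimal Swift sketch of the manual cleanup that non-ARC memory needs. It allocates a buffer with `UnsafeMutablePointer.allocate(capacity:)` and uses `defer` so the memory is deinitialized and deallocated before the only pointer to it goes out of scope. The `sumOfSquares` helper is hypothetical and exists only to give the buffer something to do.

```swift
/// Memory from unsafe pointer APIs is not managed by ARC, so it must be
/// deinitialized and deallocated explicitly. `sumOfSquares` is hypothetical.
func sumOfSquares(count n: Int) -> Int {
    precondition(n > 0, "count must be positive")
    let buffer = UnsafeMutablePointer<Int>.allocate(capacity: n)
    buffer.initialize(repeating: 0, count: n)
    // `defer` runs on every exit path, so the allocation can't outlive
    // the local pointer that refers to it.
    defer {
        buffer.deinitialize(count: n)
        buffer.deallocate()
    }
    for i in 0..<n { buffer[i] = i * i }
    var total = 0
    for i in 0..<n { total += buffer[i] }
    return total
}

print(sumOfSquares(count: 4)) // 14
```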

Even ARC-managed objects can be part of a retain cycle, so avoid creating strong circular references in your code. If a circular reference is absolutely necessary, consider making one side of it a weak reference, because weak references don't prevent objects from being deallocated.

Let's look at an example from the Meal Planner app. Tanuja has sent me some pre and post memgraphs from the failing XCTest. Right away, I want to check for leaks in the post memgraph.

To do this, I run leaks on the memgraph. This shows me some helpful information about any leaks I have.

The output shows I have 4 leaks for a total of 240 leaked bytes.

Further down, the output includes a detailed view of the object graph for each of the leaks, which gives me some clues as to what might be leaking.

The top of the object graph says ROOT CYCLE, which means I'm dealing with a retain cycle.

There are some useful symbols here. Looks like this retain cycle probably includes meal plan and menu item objects.

Because malloc stack logging is enabled for XCTests, the output also includes an allocation call stack for each leak. This is extremely useful for finding which objects leaked.

Often, you'll want to find the section of the call stack with symbols from your code. Here's the portion of the call stack from my code.

The leaking meal plan object is allocated in the populateMealData function. I'll open up Xcode and see if I can fix the issue.

Here's the populateMealData function I saw in leaks. Here I'm allocating a meal plan object and a menu item object, which are the two objects I saw in my retain cycle. Hmm, this addMealToMealPlan function looks a bit suspicious. I'll take a look.

Hmm, so it looks like I'm calling addItem on the meal plan, but I'm also calling addPlan on the menu item. This is for a feature allowing us to see all the items for a plan but also which plan an item is associated with.

Here in meal plan, addItem adds the menu item to an array, saving a reference to it. And here in menu item, addPlan saves a reference to the meal plan. So this is definitely a retain cycle because they both hold a strong reference to each other.

Once populateMealData exits, both the meal plan and menu item objects will be out of scope, so there will be no external references to them. But they still reference each other, causing a leak.

I should probably try to find a solution without a cyclical reference. But as a quick fix for now, I'll change menu item to use a weak reference for its meal plan object. This breaks the retain cycle because we no longer have two cyclical strong references.
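The quick fix can be sketched in Swift as follows. `MealPlan`, `MenuItem`, `addItem`, and `addPlan` mirror names from the talk, but their bodies here are assumptions, not the app's real code. The plan holds its items strongly, and each item refers back to its plan weakly, so once the local references go away both objects are freed.

```swift
/// Sketch of the weak-reference fix. Names mirror the talk; bodies are assumed.
final class MealPlan {
    // Strong references from the plan to its items.
    private(set) var items: [MenuItem] = []
    func addItem(_ item: MenuItem) { items.append(item) }
}

final class MenuItem {
    // `weak` breaks the cycle: an item no longer keeps its plan alive.
    weak var plan: MealPlan?
    func addPlan(_ plan: MealPlan) { self.plan = plan }
}

// Usage: observe the plan weakly to confirm it is deallocated.
weak var observedPlan: MealPlan?
do {
    let plan = MealPlan()
    let item = MenuItem()
    plan.addItem(item)
    item.addPlan(plan)
    observedPlan = plan
}
print(observedPlan == nil) // true: nothing leaked
```

One trade-off of this quick fix: because the back-reference is weak, an item's `plan` becomes `nil` if nothing else keeps the plan alive, which is one reason a design without the cycle may still be preferable.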

Let's shift gears now to heap allocation regressions.

The heap is simply a section of your process's address space where dynamically allocated objects are stored. Heap allocation regressions are an increase in memory footprint due to the process allocating more objects on the heap than before. To reduce heap regressions, look to remove unused allocations and shrink unnecessarily large allocations. You should also pay attention to how much memory you hold at once. Deallocate memory you're no longer using and wait to allocate memory until you need it. This will reduce your app's peak footprint, making it less likely to be terminated.
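One way to wait to allocate memory until you need it is a `lazy` stored property. In this Swift sketch, the large buffer is only allocated the first time it is accessed, so instances that never use it never pay for it. `RecipeCache` and the 4 MiB size are hypothetical.

```swift
/// Sketch of deferring a large allocation until first use.
/// `RecipeCache` and its 4 MiB buffer size are hypothetical.
final class RecipeCache {
    private(set) var didAllocate = false

    // The initializer closure runs on first access to `buffer`, not at init.
    lazy var buffer: [UInt8] = {
        self.didAllocate = true
        return [UInt8](repeating: 0, count: 4 << 20)
    }()
}

let cache = RecipeCache()
print(cache.didAllocate) // false: nothing allocated yet
_ = cache.buffer.count
print(cache.didAllocate) // true: allocated on first use
```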

So let's return now to the failing XCTest from the MealPlanner app and check for a heap regression. To understand where I should look, I'll run vmmap -summary on both the pre and post memgraphs to get a nice overview of where memory is being used.

My footprint in the pre memgraph is around 112 megabytes. And in the post memgraph, my footprint is 125 megabytes, so that's about a 13 megabyte difference.

Further down, the output shows my process's memory usage broken down by region.

Because I suspect this is a heap allocation issue, I want to look at the regions starting with MALLOC_ because those regions contain all of my heap objects.

Remember Tanuja's equation: Memory footprint = Dirty memory + Compressed memory. In this tool, the term "swapped" means "compressed." So of these columns, I only care about "dirty size" and "swapped size." And sure enough, the output shows that the MALLOC_LARGE region is holding about 13 megabytes of dirty memory. That's roughly equivalent to my regression size, so I definitely want to look into that.

The next step is to figure out what kinds of objects are contributing to this 13 megabyte regression. To get that information, I'll run heap -diffFrom on my post memgraph.
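The column arithmetic above can be sketched in Swift: only dirty and swapped (compressed) sizes count toward footprint, and the heap lives in regions whose names start with `MALLOC_`. `RegionSummary` is a hypothetical type for rows you might copy out of `vmmap -summary` by hand; this sketch does not parse real vmmap output, and the sample rows are made up.

```swift
/// One row of a per-region summary. Hypothetical type; sizes are bytes.
struct RegionSummary {
    let name: String
    let dirtyBytes: UInt64
    let swappedBytes: UInt64   // "swapped" here means compressed

    var footprintBytes: UInt64 { dirtyBytes + swappedBytes }
}

/// Total footprint of the malloc regions (names starting with "MALLOC_").
func mallocFootprint(_ regions: [RegionSummary]) -> UInt64 {
    regions
        .filter { $0.name.hasPrefix("MALLOC_") }
        .reduce(0) { $0 + $1.footprintBytes }
}

// Hypothetical rows, for illustration only.
let rows = [
    RegionSummary(name: "MALLOC_LARGE", dirtyBytes: 13 << 20, swappedBytes: 0),
    RegionSummary(name: "MALLOC_SMALL", dirtyBytes: 2 << 20, swappedBytes: 1 << 20),
    RegionSummary(name: "__DATA", dirtyBytes: 4 << 20, swappedBytes: 0),
]
print(mallocFootprint(rows) >> 20, "MiB in malloc regions")
```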

I pass in my pre and post memgraphs as arguments. This shows me the objects that exist in the post memgraph heap but not in the pre memgraph heap.

Near the top, the output shows I have around 13 megabytes of new objects in the post memgraph.

Below, the heap memory is broken down by object class. For each object class, the output shows the number of objects and the sum in bytes of those objects.
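Conceptually, this report is a set difference followed by a group-by. The Swift sketch below models it: allocations present in the post snapshot but not in the pre snapshot, grouped by class with a count and a byte total. Matching allocations by address, class, and size, and the `Allocation` and `ClassTotal` types, are simplifying assumptions; this is not how the `heap` tool is implemented.

```swift
/// A single heap allocation in a snapshot. Hypothetical type.
struct Allocation: Hashable {
    let address: UInt64
    let className: String
    let size: Int
}

/// Per-class totals, like the count and byte columns in the report.
struct ClassTotal: Equatable {
    var count = 0
    var bytes = 0
}

/// Allocations that exist in `post` but not in `pre`, grouped by class.
func newAllocationsByClass(pre: [Allocation], post: [Allocation]) -> [String: ClassTotal] {
    let preSet = Set(pre)
    var totals: [String: ClassTotal] = [:]
    for allocation in post where !preSet.contains(allocation) {
        totals[allocation.className, default: ClassTotal()].count += 1
        totals[allocation.className, default: ClassTotal()].bytes += allocation.size
    }
    return totals
}
```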

Right away, I notice that I have about 13 megabytes worth of this "non-object" type. In Swift, this usually indicates raw malloced bytes. This type of object can be a bit tricky to track down, but there are some tools I can use to get some information. To start, I want the addresses of these non-objects.

I'll run heap -addresses to grab them.

I'll specify that I only want non-objects whose size is at least 500 kilobytes.

Aha. This non-object is about 13 megabytes, so it's a prime suspect in this investigation. I'll grab its address and see if I can find some clues about what it is. I have several options at this point. Each has its benefits depending on the situation, so I'll walk through each one briefly.

One option I have is to run leaks --traceTree on this address.

This gives me a tree of objects that reference this address. This is useful if I have a specific object I want to get more information about and my memgraph doesn't have malloc stack logging (MSL) enabled. Remember that our XCTest memgraphs automatically have MSL enabled, but if you ever work with a memgraph that doesn't, keep this tool in mind.

I've highlighted the object in the tree that seems relevant. My large non-object probably has something to do with this meal data object in MKTCustomMealPlannerCollectionViewCell. I can also run leaks --referenceTree.

This gives me a top-down reference tree of all the memory in my process with a best guess of which objects are the roots. With this output, I can get a sense of where memory is aggregated in my app. This tool is extremely helpful if I know I have a large regression but I don't know which specific objects are responsible. I can pass the --groupByType argument to group like types together, shrinking the output and making it a bit easier to parse.

Oftentimes, a large chunk of the regression will be grouped under a single node in this tree, making it easier to find clues about what that memory is.

Again, I've highlighted the section showing the relevant objects. Here's that same meal data object I saw in the leaks --traceTree output. The output shows that there's about 13 megabytes worth of memory allocated to this meal data object. I'd love to know how this object is being allocated. Because my memgraph has MSL enabled, I can use malloc_history -fullStacks to figure that out.

I pass in the address of the large non-object I grabbed from heap -addresses earlier.

And I get an allocation call stack for the object at that address. This is extremely useful when I have MSL enabled and I have the address of the object I care about.

So looks like my meal data object was allocated in the saveMeal function, shown here on line 3. I'll head over to Xcode to see what's going on. Here's the saveMeal function inside my custom cell view controller. And here's the culprit. I'm allocating this raw buffer here and then wrapping it with the meal data object. I'm only allocating this buffer so that I can populate it and save the result to disk. Once I'm done saving to disk, I don't really need this buffer anymore. So why is it sticking around? Well, meal data is a class member, so as long as this class instance exists, the reference will stick around. This means that when I hit saveMeal on any cell, that cell allocates and holds a large buffer that will stick around until that cell is destroyed. That memory could really add up if I'm saving multiple meals.

So how can I fix this? One option would be to declare mealData as a local variable in the saveMeal function, but I know it's used elsewhere in the class, so I don't want to do that. Another option is to set mealData to nil once I'm done writing it to disk. The Data object automatically deallocates the buffer once my final reference to it is gone, so the buffer won't stick around past the end of this function.
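The second option might look like this Swift sketch. `MealCell`, `saveMeal(to:)`, and the 13 MiB size are hypothetical stand-ins for the code in the talk. A `defer` sets `mealData` to `nil` when the function exits, even if the write throws, so the buffer's last reference is dropped right after the save.

```swift
import Foundation

/// Sketch of releasing a large buffer as soon as it has been written.
/// `MealCell`, `saveMeal(to:)`, and the buffer size are hypothetical.
final class MealCell {
    private(set) var mealData: Data?

    func saveMeal(to url: URL) throws {
        mealData = Data(count: 13 << 20)   // large zero-filled buffer
        // ... populate mealData here ...
        defer { mealData = nil }           // drop the last reference on exit
        try mealData?.write(to: url)
    }
}
```

With this change, each cell holds the buffer only for the duration of a save instead of for the cell's whole lifetime.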

Lastly, let's talk about fragmentation.

Let's quickly go over how pages work in iOS. A page is a fixed size, indivisible chunk of memory that the system grants to your process.

Because pages are indivisible, when your process writes to any part of a page, the entire page is considered dirty, and your process will be charged for it, even if most of it is unused.

Fragmentation occurs when a process has dirty pages that are not 100% utilized. To understand how this happens, let's look at an example. To start, I have 3 contiguous clean pages.

As the process runs, allocations begin filling up these pages, dirtying them.

When objects are deallocated, they leave empty slots, marked "free memory" in the diagram, where they used to be. However, these pages are still dirty because there are still some allocated objects on them.

The system will attempt to fill these empty slots with future allocations. Here I have a large incoming allocation denoted by the box on the right. Unfortunately, this incoming allocation is too large to fit in my free memory slots. Even though the combined size of the empty slots is large enough, they aren't contiguous, so they can't be used for a single allocation.

So because it couldn't fit in any existing free slots, the system placed my allocation on a new dirty page on the right side of the diagram. The free memory slots remain unfilled and are considered fragmented memory.
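The contiguity problem can be reproduced with a toy first-fit search in Swift. Memory is modeled as a row of equal-size slots where `nil` means free; a request succeeds only if enough free slots are adjacent. The slot granularity and first-fit placement are simplifying assumptions, not how the system allocator actually works.

```swift
/// Toy first-fit search over equal-size slots (`nil` = free). Returns the index
/// of the first run of `needed` contiguous free slots, or nil if none exists.
func firstFitIndex(slots: [String?], needed: Int) -> Int? {
    precondition(needed > 0, "needed must be positive")
    var runStart = 0
    var runLength = 0
    for (index, slot) in slots.enumerated() {
        if slot == nil {
            if runLength == 0 { runStart = index }
            runLength += 1
            if runLength == needed { return runStart }
        } else {
            runLength = 0   // an allocated object breaks the run
        }
    }
    return nil
}

// Three free slots in total, but no two of them are adjacent.
let slots: [String?] = ["A", nil, "B", nil, "C", nil]
let freeSlots = slots.filter { $0 == nil }.count
if let index = firstFitIndex(slots: slots, needed: 2) {
    print("fits at slot \(index)")
} else {
    print("\(freeSlots) free slots, but no 2 contiguous ones: a new page is needed")
}
```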

The best way to reduce fragmentation is to allocate objects with similar lifetimes close to each other in memory. This helps ensure that all of those objects are freed together, giving the process large chunks of contiguous memory to work with for future allocations.

In this example, I manually allocated all the objects marked "my object," and I plan to free them at the same time. But I wasn't careful in my code, and the system ended up interleaving my objects with other objects.

Now, when I free all my objects, I have four slots of free memory, none of which are contiguous because they're broken up by these allocated objects. This results in 50% fragmentation and four dirty pages. Not great.

What if I instead wrote code to allocate all of my objects together? Now they all end up together on two pages. And when I free my objects, the process frees up two clean pages for the system, resulting in only 2 dirty pages and 0% fragmentation. Notice how fragmentation is a footprint multiplier. 50% fragmentation doubled my footprint from 2 to 4 dirty pages. In most real scenarios, some fragmentation is inevitable. So as a rule of thumb, aim for about 25% fragmentation or less.
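The page arithmetic in this example can be checked with a small Swift model. Each entry is the number of live slots left on a page after "my objects" are freed; a page with no live slots is assumed to go back to the system clean, and fragmentation is the free share of the remaining dirty pages. The two-slots-per-page layout is a hypothetical reading of the diagram.

```swift
/// Returns the number of dirty pages and the fraction of their space that is free.
/// Assumption: a page with no live objects is returned to the system clean.
func fragmentation(liveSlotsPerPage: [Int], slotsPerPage: Int) -> (dirtyPages: Int, fraction: Double) {
    let dirty = liveSlotsPerPage.filter { $0 > 0 }
    guard !dirty.isEmpty else { return (0, 0) }
    let capacity = dirty.count * slotsPerPage
    let live = dirty.reduce(0, +)
    return (dirty.count, Double(capacity - live) / Double(capacity))
}

// Interleaved: every page still holds one other object after my objects are freed.
let interleaved = fragmentation(liveSlotsPerPage: [1, 1, 1, 1], slotsPerPage: 2)
// Grouped: other objects fill two pages; the two pages that held my objects are empty.
let grouped = fragmentation(liveSlotsPerPage: [2, 2, 0, 0], slotsPerPage: 2)
print(interleaved.dirtyPages, interleaved.fraction) // 4 0.5
print(grouped.dirtyPages, grouped.fraction)         // 2 0.0
```

The same live data costs four dirty pages when interleaved and two when grouped, which is the footprint multiplier described above.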

One way to reduce fragmentation is to use autorelease pools. An autorelease pool releases the autoreleased objects created inside it when the pool is drained at the end of its scope. This helps ensure that those objects have similar lifetimes.

Although fragmentation can be an issue for all processes, long running processes can be especially prone, because there are lots of allocations and deallocations to potentially fragment the address space. If your app uses long running extensions, for example, be sure to take a look at fragmentation in those processes.

To take a quick look at my process's fragmentation, I can run vmmap -summary and scroll down to the bottom of the output.

This section is divided into malloc zones. Each zone contains different types of allocations. Usually I would only care about the DefaultMallocZone, because that's where my heap allocations end up by default.

However, because this memgraph has MSL enabled, I actually care about the MallocStackLoggingLiteZone. As long as MSL is enabled, this zone is where all the heap allocations end up.

The % FRAG column shows me what percentage of my memory is wasted due to fragmentation in each malloc zone. Some of these numbers are pretty large, but I only need to focus on the MallocStackLoggingLiteZone.

That's because the MallocStackLoggingLiteZone has by far the largest share of dirty memory, with 4.3 megabytes out of 5 megabytes total. So I can ignore the other zones this time.

The "dirty+swap frag size" column shows me exactly how much memory is wasted due to fragmentation in each malloc zone.

In my case, I'm wasting about 800K due to fragmentation. This seems like a lot, but as I mentioned before, some fragmentation is inevitable. So as long as I'm sitting under 25% fragmentation, I'll consider this much waste acceptable.

Looks like I'm sitting at about 19% fragmentation in the MallocStackLoggingLiteZone. This is comfortably below the 25% rule of thumb, so I'm not concerned.

If I did have fragmentation issues, I could use the Allocations track in the Instruments tool.

Specifically, I'd want to look at the allocations list view and see which objects were persisted and destroyed in my area of interest.

In the context of fragmentation, the destroyed objects create free memory slots, while the persisted objects are the remaining objects, responsible for keeping the pages dirty. Both of them are worth investigating when you're looking into fragmentation.

For more information about how to use the Instruments tool, check out the Getting started with Instruments talk from WWDC 2019.

Now that I've addressed the leaks and heap regressions and verified that fragmentation isn't an issue, I'll run that XCTest again.

Excellent. The XCTest now passes and the regression is resolved. Now that you've learned about detecting and diagnosing memory issues, let's review the workflow you can use for your own app. Anytime you add a new feature, write a performance XCTest to monitor memory, and/or any of the other provided system metrics. For each test, set a baseline. Then use the test to catch regressions and investigate using the collected ktrace and memgraph files.

Use the memgraphs from any failing XCTests to help diagnose your memory issue. The first thing you should do is check for leaks. Run the leaks tool and use the MSL backtraces to help find and fix any leaks. If the regression doesn't include leaks, then check out the heap. Start with vmmap -summary to confirm that the memory is in the heap.

If so, run heap -diffFrom to see which object types are responsible for the growth. If the culprits seem obvious, use heap -addresses to get their addresses. If not, look at leaks --referenceTree for some clues. Finally, investigate culprit object addresses with leaks --traceTree and/or malloc_history.

Lastly, make sure you're developing with these memory best practices in mind. Strive to have zero leaks in your app. If you're working with unsafe types, make sure you remember to free everything you allocate. And be on the lookout for retain cycles in your code as well.

Find ways to reduce your heap allocations, whether that's shrinking them, holding them for a shorter period of time, or just getting rid of unnecessary allocations altogether. Make sure to keep fragmentation in mind. Allocate objects with similar lifetimes next to each other to create nice, large chunks of free memory later on. With these best practices and the XCTest workflow, you'll be equipped to detect, diagnose, and fix memory issues in your app. On behalf of Tanuja and myself, thanks so much for tuning in. [percussive music]


4:52 - Monitor memory performance with XCTests

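```swift
// Monitor memory performance with XCTests
import XCTest

// The test class name is hypothetical; the test must live in a UI testing target.
final class MealPlannerUITests: XCTestCase {
    func testSaveMeal() {
        let app = XCUIApplication()
        let options = XCTMeasureOptions()
        // Start measuring manually so that app launch is not part of the measurement.
        options.invocationOptions = [.manuallyStart]

        measure(metrics: [XCTMemoryMetric(application: app)], options: options) {
            app.launch()
            startMeasuring()
            app.cells.firstMatch.buttons["Save meal"].firstMatch.tap()

            // Wait up to 30 seconds for the download to finish, signaled by the "Saved" button.
            let savedButton = app.cells.firstMatch.buttons["Saved"].firstMatch
            XCTAssertTrue(savedButton.waitForExistence(timeout: 30))
        }
    }
}
```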
