
Coordinate media experiences with Group Activities

Discover how you can help people watch or listen to content all in sync with SharePlay and the Group Activities framework. We'll show you how to adapt a media app into a synchronized, SharePlay-enabled experience for multiple people. Learn how to add Group Activities to your app, explore the Picture in Picture layout, and find out how the playback coordinator object can help you fine-tune playback across multiple devices.

리소스

관련 비디오

WWDC22

WWDC21

-

비디오 검색…

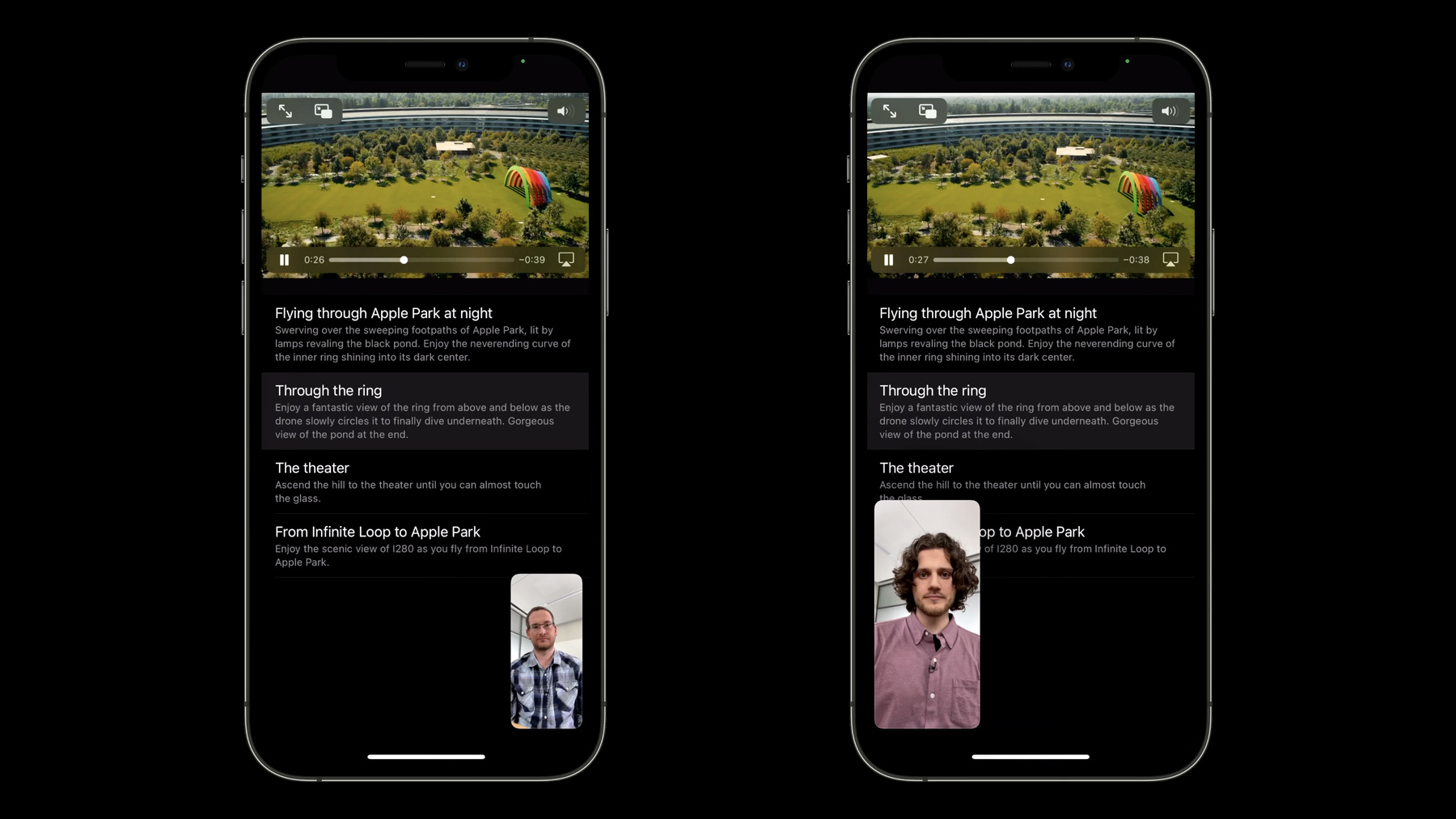

G'day. Welcome to the session, "Coordinate media experiences with Group Activities." My name is Hayden, and I'm an engineer on the Group Activities team here at Apple. Today, we will talk about how to create synchronized media apps that give users the ability to watch and listen to content together across devices. The goal is for your users to feel as if they were physically together, wherever they may be. The GroupActivities API is a Swift framework for creating shared experiences. It takes care of coordinating media for you, via APIs for playback coordination and group session management. And it is supported across a wide range of Apple platforms, such as iOS, iPadOS, macOS, and tvOS. Let's start off with a demo of the GroupActivities API, by calling my colleague, Moritz, and sharing a video from the sample app attached to this talk. Let's see if Moritz is available. Hi, Moritz! Hey, Hayden! I'm gonna home out and open the sample app. You can see it's a list of drone footage from Apple Park. I'm gonna show the theater footage to Moritz. How does that look, Moritz? Do you wanna watch it? That looks great. I really love that one. But did you see the new one I uploaded last week from the rings? Oh, it looks great! I'm gonna start playback, and our videos will be in sync across our devices. You can see if I pause, it'll also pause for Moritz. And if he moves ahead, it'll also move ahead for me.

Looks awesome. OK, thanks, Moritz! Yeah, that was great. Bye, Hayden. There are three main pieces that you need to think about when creating a coordinated media playback app. First is implementing the new GroupActivities API. Second is how you can take advantage of picture-in-picture to make sharing your experiences as seamless as possible. And third, my colleague, Moritz, will do a deep dive into how playback coordination works and the features of the new playback coordination object. Let's familiarize ourselves with the GroupActivities API by looking at the life cycle of a GroupActivities app. Here, we have two iPhones in a FaceTime call, just like in the first demo. My device is on the left, and my colleague, Moritz's is on the right. On my device, I open the shared app. In the app, I then share an activity to the group. The GroupActivities framework creates a session object for the activity, and then delivers it to my app. Meanwhile, the session object is shared to Moritz, where the framework handles opening the same app on his device. Finally, the framework passes Moritz's app the GroupSession. Now, we have two apps able to communicate over the same GroupSession. Now, let's look at the steps you need to take in order to adopt the GroupActivities framework. The first step is creating a GroupActivity along with its metadata. So, let's talk about what a GroupActivity is and how you define it. GroupActivity is a Swift protocol that represents the item that your users are experiencing together. This will be a single piece of content, like a movie or a song. You should include any properties on this type that you need to set up your experience. For example, you may want to include the URL to a video here, so you can load a video in preparation for the session. In order for the framework to send the data over the network, it conforms to Codable. This means your properties must also conform to Codable. You'll notice there are two required properties. 
activityIdentifier is a unique type identifier so the system knows how to reference this activity. GroupActivityMetadata contains information to show this activity in system UI on the remote device. You'll notice this is an example of the new async effectful read-only properties feature that is being introduced with Swift Concurrency this year. For more information see the session, "Meet async/await in Swift" at WWDC this year. So, you've defined a GroupActivity. Now, you wanna share it to the call by calling the activate method on your GroupActivity. When you activate a GroupActivity, the framework creates a GroupSession object, which is delivered to both the local and remote devices. The system is in charge of launching the app, and system UI will show the activity metadata. But first, there's something we glossed over. How does your app know that you're in a FaceTime call? And what if, when a user selects a piece of content, they don't mean to share it to the group, and instead just wanna view it locally? The prepareForActivation function solves these problems for you, by letting the system work out the user intent. Here's an example of the prepareForActivation API. In the switch statement, there are three cases we need to handle. The first, activationDisabled, will be taken when the user is not in a FaceTime call, or the system has decided that they wish to consume it locally. The second, activationPreferred, will be taken when the user is in a FaceTime call, and they wish to share it to the group. The third, cancelled, is taken when the user cancels the share action. This is already enough knowledge to create a GroupActivities app that shares an activity. So, let's jump over to Xcode, and I'll show you how it works in practice. Before we add GroupActivities support, I'll give a quick run through of the project. It's a simple movie-watching app that lets you select a movie and watch it. 
It consists of a list of movies to watch, along with a movie detail page to show you the video player. Right now, the app only lets you watch movies alone, so let's change that.

You'll notice we have the Group Activity entitlement set. And then, we'll add a GroupActivity to Movie.swift. First thing to do is import GroupActivities.

And then, we'll add the GroupActivity, MovieWatchingActivity. You'll notice it has a movie property, and it uses that property to fill in the metadata. Let's share this activity now. We'll go to the CoordinationManager and find the prepareToPlay function. Currently, this function starts playback immediately by enqueueing the movie. Instead, we'll replace this with our prepareForActivation function that we showed in the slides.
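As a rough sketch, the MovieWatchingActivity described here might look like the following. The Movie fields, the identifier string, and the metadata values are assumptions for illustration, not the sample project's actual code. The session describes metadata as an async property; this sketch assumes the synchronous form of the requirement found in the released SDK, so adjust it if your SDK differs.

```swift
import Foundation
import GroupActivities

// Hypothetical model type; the sample's real Movie may carry more fields.
struct Movie: Hashable, Codable {
    let url: URL
    let title: String
}

// The item the group experiences together. GroupActivity refines Codable,
// so every stored property has to be Codable as well.
struct MovieWatchingActivity: GroupActivity {
    // Assumed identifier; any string unique to this activity type would do.
    static let activityIdentifier = "com.example.movie-watching"

    let movie: Movie

    // Shown in system UI on the other participants' devices.
    var metadata: GroupActivityMetadata {
        var metadata = GroupActivityMetadata()
        metadata.type = .watchTogether
        metadata.title = movie.title
        metadata.fallbackURL = movie.url
        return metadata
    }
}
```

Keeping the URL inside the activity is what lets a remote device load the same video before it joins the session.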

You can see we immediately enqueue the movie in the activationDisabled case.
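A minimal sketch of what the reworked prepareToPlay function could look like, assuming a CoordinationManager with an enqueuedMovie property that the player UI observes. The class layout, the property name, and the compact Movie and MovieWatchingActivity types are illustrative assumptions; only prepareForActivation(), its three result cases, and activate() come from the framework.

```swift
import Foundation
import GroupActivities

// Compact stand-ins for the app's types (hypothetical).
struct Movie: Hashable, Codable { let url: URL; let title: String }

struct MovieWatchingActivity: GroupActivity {
    static let activityIdentifier = "com.example.movie-watching"
    let movie: Movie
    var metadata: GroupActivityMetadata {
        var metadata = GroupActivityMetadata()
        metadata.type = .watchTogether
        metadata.title = movie.title
        return metadata
    }
}

final class CoordinationManager {
    // The movie the local player should load (assumed property).
    private(set) var enqueuedMovie: Movie?

    func prepareToPlay(_ movie: Movie) {
        let activity = MovieWatchingActivity(movie: movie)
        Task {
            // Let the system work out whether the user wants to share.
            switch await activity.prepareForActivation() {
            case .activationDisabled:
                // No FaceTime call, or local viewing preferred: play locally.
                self.enqueuedMovie = movie
            case .activationPreferred:
                // Share with the group; a GroupSession is delivered afterwards.
                do { _ = try await activity.activate() }
                catch { print("Unable to activate the activity: \(error)") }
            case .cancelled:
                // The user dismissed the share action; do nothing.
                break
            @unknown default:
                break
            }
        }
    }
}
```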

And activate() is called when the user wants to share it to their FaceTime call. Later in the talk, we'll enqueue the movie to start playback, but for now, let's see what happens if we run the app as-is. I've grabbed Moritz's device, and I've started a FaceTime call between the two iPhones. I'll home out and then launch the sample app on my phone. I'll share the rings video we watched earlier and launch the app on Moritz's device. You can see that it received the GroupActivity. However, it didn't load the correct video, and there's no playback synchronization. So, let's learn how to add that into the app now. The main thing to learn about receiving a Group Activity is the GroupSession object and the way you receive a GroupSession, the GroupSessions async sequence. Here's a high-level diagram showing the typical GroupSession life cycle. Both the local and remote devices receive a group session. The app should then set up in preparation for playback. And finally, when ready, join the group session. The GroupSession is the object that represents the realtime session between devices of a group activity. It provides state about the session, such as the latest group activity, the connection state, and the set of active participants connected to the session. As we'll see in a moment, it's also used for synchronizing playback. The GroupSession AsyncSequence delivers GroupSessions to your app. Your app never instantiates a GroupSession object directly, so this is the only way to receive GroupSessions. It's important to note that you should get the latest GroupSession from this sequence, on both the local and remote devices. AsyncSequence is covered in the Swift Concurrency talk mentioned earlier. Here's what awaiting on the GroupSessions AsyncSequence looks like in code. When a GroupActivity is activated, the system will return a GroupSession from the AsyncSequence into your await loop. 
Now that we've received a GroupSession, let's learn how to use it to set up playback synchronization. This latest release of AVFoundation introduces a new type called AVPlaybackCoordinator. Moritz will give more information on this object later in the talk, but for now, I'll show you how to attach a GroupSession to the playback coordinator, which will enable synchronized playback. The way to access the playback coordinator is by the playbackCoordinator property on AVPlayer. Then, to attach the groupSession to the coordinator, we call coordinateWithSession and pass in the GroupSession. And that's it. Under the hood, the framework handles all the intricacies of playback coordination and realtime networking for you. There's one final step to session management, and that's finally joining the session. Initially, a GroupSession is not connected and is instead in a "waiting" state. Calling "join()" connects the GroupSession to the group and begins the realtime connection, allowing messages to be sent and received from other devices in the group. Once the GroupSession has successfully joined, playback synchronization will begin. Let's add this session management code to our sample app. In CoordinationManager, let's add the session's async sequence in an await loop.
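The await loop can be sketched roughly as follows. The observeSessions helper and its callback are hypothetical names introduced for illustration; sessions() is the framework's async sequence, and the compact activity type is a stand-in for the app's own.

```swift
import Foundation
import GroupActivities

// Compact stand-in for the app's activity type (hypothetical).
struct MovieWatchingActivity: GroupActivity {
    static let activityIdentifier = "com.example.movie-watching"
    let movieURL: URL
    var metadata: GroupActivityMetadata {
        var metadata = GroupActivityMetadata()
        metadata.type = .watchTogether
        return metadata
    }
}

// Starts listening for sessions and hands each one to the caller.
// Sessions arrive here whether the activity was activated locally or remotely.
func observeSessions(
    onSession: @escaping (GroupSession<MovieWatchingActivity>) -> Void
) -> Task<Void, Never> {
    Task {
        for await session in MovieWatchingActivity.sessions() {
            onSession(session)
        }
    }
}
```

Starting this task early in the app's life cycle gives the app a chance to pick up sessions that the system delivers on launch.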

Remember, this gives your app GroupSessions when they've been activated from either the local or remote devices. Let's store this groupSession on the CoordinationManager, which will propagate the change over to our MoviePlayerViewController.

Once set, we'll attach the session object to our AVPlayer via playbackCoordinator.coordinateWithSession.
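In code, attaching a session to a player is a single call. The attach helper below is a hypothetical wrapper added for illustration; playbackCoordinator and coordinateWithSession are the names used in the session.

```swift
import AVFoundation
import GroupActivities

// Connects the player's coordinator to the session so that rate changes
// and seeks are shared with the other participants once the session is joined.
func attach<Activity: GroupActivity>(_ session: GroupSession<Activity>,
                                     to player: AVPlayer) {
    player.playbackCoordinator.coordinateWithSession(session)
}
```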

Then, back in our await loop, we'll get the movie from the session object.
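One way this part of the loop might be written, assuming the manager keeps enqueuedMovie and groupSession properties for the player UI to observe. Those property names, the method name, and the compact types are assumptions; the $activity publisher and join() belong to GroupSession.

```swift
import Combine
import Foundation
import GroupActivities

// Compact stand-ins for the app's types (hypothetical).
struct Movie: Hashable, Codable { let url: URL; let title: String }

struct MovieWatchingActivity: GroupActivity {
    static let activityIdentifier = "com.example.movie-watching"
    let movie: Movie
    var metadata: GroupActivityMetadata {
        var metadata = GroupActivityMetadata()
        metadata.type = .watchTogether
        metadata.title = movie.title
        return metadata
    }
}

final class CoordinationManager {
    private(set) var enqueuedMovie: Movie?
    private(set) var groupSession: GroupSession<MovieWatchingActivity>?
    private var subscriptions = Set<AnyCancellable>()

    func startObservingSessions() {
        Task {
            for await session in MovieWatchingActivity.sessions() {
                // Drop observers that belonged to a previous session.
                self.subscriptions.removeAll()
                self.groupSession = session

                // The activity can change during a session, so observe it
                // instead of reading it once.
                session.$activity
                    .sink { [weak self] activity in
                        self?.enqueuedMovie = activity.movie
                    }
                    .store(in: &self.subscriptions)

                // Setup is done: connect to the group.
                session.join()
            }
        }
    }
}
```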

Since the activity can change throughout the session, we use a Combine publisher to get the activity. Then, we enqueue a movie to start playback. And finally, we join the session. Now that we've set up the code to receive a GroupSession and sync playback, let's run the app on our devices and see what happens. Again, the two devices are in a FaceTime call, and on the first device, I'll open the sample app and share the rings video.

You can see Moritz's device received the Group Activity, so we'll launch the app. And this time, both devices show the right video.

Then, if I press "play," we can see that playback synchronization works. And if I pause on one, it'll pause on the other.

Or I can move ahead, and it'll move ahead on both.

And playback is in perfect sync.

One final thing to note about GroupSession is how the session is finished. There are two ways to finish a GroupSession. The first is leave(). This disconnects the local user from the session, but leaves the session active for the remaining participants in the call. The second is end(). This ends the session, not just for the local participant, but for the entire call. For extra details on creating advanced GroupActivities apps, see the following WWDC session, "Build custom experiences with Group Activities." It covers how to change the activity of a GroupSession, observe state of a GroupSession, and how to use advanced features, such as the GroupSessionMessenger, which lets you send arbitrary messages between the group. I highly recommend it. Let's switch gears and look at how you can take advantage of picture-in-picture to make sharing a video GroupActivity as seamless as possible. Why is picture-in-picture worth thinking about for GroupActivities apps? Well, supporting picture-in-picture allows content to start playing instantly after being shared. Since it doesn't take a user out of their current context, there is no need for an explicit user interaction to start playback. This saves your users an extra step and results in a frictionless experience to share content. For more information on setting up picture-in-picture, see the 2019 WWDC session on "Delivering Intuitive Media Playback with AVKit." However, there are some nuances in how picture-in-picture works with GroupActivities, so let's go over them now. The GroupActivities framework will deliver sessions to your media app while in background, to give your app a chance to set up picture-in-picture. If the GroupSession indicates playback can start in the background, you should set up picture-in-picture, and then go through the regular GroupActivities flow. 
The system will start playing the content in picture-in-picture without launching the app in fullscreen, so users get that seamless experience and aren't taken out of their current context. But in some cases, you won't be able to set up picture-in-picture because you may need the user to sign in to your app, or the content might not be available without some additional steps from the user. For these cases, GroupSession provides APIs for your app to request to be foregrounded. Another handy thing we've built into picture-in-picture is that it handles leaving and ending a GroupSession for you, via a system dialog, so there's no need to worry about leaving or ending a session when picture-in-picture is active. And now, I'll pass it over to my colleague, Moritz, to do a deep dive on the playback coordinator object.

Hello, my name is Moritz Wittenhagen, and I am an engineer on the AVFoundation team. Hayden has introduced you to AVPlaybackCoordinator as an object that automatically keeps playback on multiple devices in sync, and we've seen it working in the demo. In this part of the talk, I am going to demystify what the coordinator actually does under the hood and how it interacts with your code. For the most part, I will focus on how the coordinator interacts with AVPlayer, how you should select your assets for coordinated playback, how individual participants can suspend coordination temporarily, and I will also give you a brief insight into how to implement coordination when not using AVPlayer. AVPlaybackCoordinator is an object that shares playback state across devices, and it coordinates playback start across those devices, with the goal of no one missing any content. There are two subclasses of the coordinator. An instance of AVPlayerPlaybackCoordinator is always tied to a particular AVPlayer, and it handles all remote state management for you. This makes it the easiest way to jump into coordinated playback, and I highly recommend you start here. We are not going to talk much about AVDelegatingPlaybackCoordinator, but this subclass gives you the flexibility to control any other playback objects that aren't an AVPlayer. Let's look at our device setup again. For the rest of the talk, we are going to represent the GroupActivities objects through the GroupSession. We also assume that your UI presents an AVPlayer playing some AVPlayerItem, and this is where the new AVPlayerPlaybackCoordinator comes in. When you call the coordinator's coordinateWithSession, as Hayden showed earlier, we have effectively connected the two AVPlayers, and they start to affect each other. The basic rule is the coordinator will intercept any transport control API, so any API that changes rate or current time. 
It takes those commands, figures out if it needs to coordinate them with someone else, and then, at the appropriate time, lets them take effect on the AVPlayer. Let's look at an example. Here, Hayden and I are in a GroupSession together, and because the GroupActivity told us which URL to load, have enqueued the same AVPlayerItem. Now, if my device changes the AVPlayer's rate property, the playback coordinator will intercept that command and not immediately allow the player to start playback. Instead, it will ask the player to enter a timeControlStatus of WaitingToPlayAtSpecifiedRate. UIs will typically represent this timeControlStatus with a waiting spinner. The coordinator will then send the command over to Hayden's iPad. The AVPlayerPlaybackCoordinator there receives the command and similarly asks Hayden's AVPlayer to change rate and enter a waiting state. The coordinators give everyone some time to prepare for playback with the goal of everyone starting playback at the same time without missing any content. When everyone is good to go, all devices begin playback together. All coordinators in the session are equal, meaning that Hayden can also initiate a command. Let's have him seek this time. Again, the seek API is intercepted, and the coordinator forces the AVPlayer to wait while it shares the command with connected coordinators. Everyone is given some time to complete the seek, and when all devices are ready to go again, playback resumes for everyone together. You may ask yourself, what happens when the AVPlayers are playing different items? And the answer is that the coordinator only applies state to the other player when both players are playing identical content. I will elaborate on the concept of identity later. For now, think that content is identical when you create the items from the same URL. What this means is that any command you send for item A will only be applied if the receiver is also playing item A. 
And changing the item to B will ignore all state from item A. We do this because distinguishing commands per-item allows for safe joining of participants and transitions between items. Let me show you what I mean. Here's our example again, but this time, we start with Hayden already playing. As I join and connect my playback coordinator to the same session, nothing happens to my AVPlayer, because I am not playing the same current item as Hayden. Even creating that same item has no impact because the item is not current in my player. This means that I can even seek the item without consequences for anyone else. Only when the item becomes current in the AVPlayer will the coordinator start doing its work and try to apply the right state. The rule is that the coordinator always starts with the group state, if there is one. And since Hayden was already playing in the GroupSession, the coordinator prefers his state over my own configuration from before enqueuing the item. This means that my coordinator will override any configuration in the AVPlayer and AVPlayerItem to match Hayden's device. And with that, we are both in the same state and can play together. Let's repeat this with an item transition. As we both approach the end of item A, my AVQueuePlayer is getting ready to play the next item. Usually, we would expect Hayden to get ready, as well, but for this example, let's assume Hayden's iPad is on a bad network and cannot load the next item yet. So, now that I'm at the end of item A, I already have item B enqueued, and it is ready to play. But because the item is not current, the coordinator is not doing anything yet. Only when my player transitions to the new item, will the coordinator do its job again. And this time, it does not find an existing state for item B, so it just continues with the state my player is proposing. Even though the coordinator shares this new state with Hayden, he is not affected because he is still displaying item A. 
But when he eventually transitions to match my item, his coordinator will again apply the existing state to his player. And with that, everyone is playing in sync again. After all this, here is my first call-to-action. Be careful about the order of your item changes and transport control commands. Let's say we have a function beginPlayback that enqueues an item and automatically starts playing. This code would be called whenever the user has selected something to play or when our GroupSession informs us of a new activity. It is important that we seek to our start time first, and similarly, we should change player rate before the item is enqueued so that our initial configuration cannot affect anyone else. If we do it in this order, the playback coordinator can decide if we are first and our state should be shared with everyone else, or if another state already exists, and that should override our state instead. Also, audit your transport control commands and consider if they should affect everyone in the group. Usually, an API call should affect the group if it originates from playback UI. So if the user pauses, everyone else should pause, too. In that case, just call the AVPlayer API as usual. So, what should we do with the other API calls not coming from playback UI? Usually, these are automated pauses because your app has encountered some system event. Automatic pauses like this should not usually affect the other participants. In such a situation, where your users are playing together with others, you should first consider not pausing at all. Since everyone else continues to play, users will often prefer to stick with the group, even if it has some kind of drawback, like not being able to see the content temporarily. If you have no other choice but to pause, you have two options: remove the item first, or begin a coordinated playback suspension, which I will cover later in this talk. 
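The ordering advice for beginPlayback given above can be sketched like this. The function signature and the returned item are assumptions made for illustration; the point is only the order of the three calls: seek the item, set the rate, and make the item current last.

```swift
import AVFoundation

// Configures start time and rate BEFORE the item becomes current, so the
// coordinator can decide whether this state is shared with the group or
// overridden by a group state that already exists.
@discardableResult
func beginPlayback(of url: URL, at startTime: CMTime, on player: AVPlayer) -> AVPlayerItem {
    let item = AVPlayerItem(url: url)

    // 1. Seek while the item is not yet current: affects nobody else.
    item.seek(to: startTime, completionHandler: nil)

    // 2. Request playback while the player has no current item.
    player.rate = 1.0

    // 3. Only now make the item current; coordination starts here.
    player.replaceCurrentItem(with: item)
    return item
}
```

Reversing steps 1 and 3 would turn the seek into a coordinated command that could move playback for everyone already in the session.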
Now that you have an idea of how the playback coordinator works, let's talk about content for coordinated playback. As I stated previously, we consider two player items on different devices to be the same when their assets were created from the same URL. While this default can work well, there are situations where you want to change this behavior. For example, your app may offer the user a choice to download and cache the content on device. So, if I have downloaded the asset to my local cache, and Hayden is still streaming the content from the cloud, we are no longer using the same URL, meaning the playback coordinator will not keep our playback states in sync. Similarly, some of you may have exciting use cases for coordinating the state of AVMutableMovies or AVCompositions, which don't have a URL representation at all. Again, the playback coordinator does not know what to do here. To solve this problem, you can provide a custom string as an identifier for an AVPlayerItem. If this string is present, the coordinator will use it to decide if two items represent the same content, and it will ignore the URL. You do this by implementing the AVPlayerPlaybackCoordinatorDelegate protocol and its playbackCoordinator(_:identifierFor:) function. The coordinator will ask its delegate for an identifier whenever you enqueue an item in the player. Whenever the playback coordinator considers two items equal, it is important that a time on one device matches the same time on the others. This means that you need to be careful with content that is automatically injected into the playback stream by a server. So, if Hayden and I request the same URL, but the server decides to inject an ad into only my stream, the devices are now out of sync, as I play into the ad, and Hayden continues with the main content. The right way to approach this problem is to move ads and other interstitials into a separate player. That leaves the main asset unaffected. 
Now, as I play into the ad, my phone switches to a different player for the duration of the ad. And when the ad is over, the coordinator can easily rejoin everyone else's timing, and we are back in sync. If you are playing HLS content, AVPlayer will be able to help you with this through the new AVPlayerInterstitialEvent API. Check out the dynamic pre-roll and mid-rolls in HLS talk to learn more. In summary, use custom identifiers to match content if the URL does not cover the right information. Make sure times are the same for everyone. And if you want to play personalized interstitials, play them through a different player, so that your main content is not affected. And finally, use date tags when coordinating live content so that the coordinator can share the correct timing with everyone else. So far, we have only dealt with the perfect playback cases, where everyone can stay in sync the whole time. Unfortunately, that is not always possible. Let's say Hayden and I are playing together, but an alarm goes off telling me to feed my cat, Zorro. By the rules of AVAudioSession, my app must now pause, and those rules still apply, even when playing in a group. Yet, we do not want my pause to affect everyone else. Forwarding everyone's little pauses to the whole group would just cause too many annoyances. So, instead, we want Hayden's iPad to continue playing. And when I dismiss my alarm, playback should catch up, and everyone should play in sync again. So, how can we implement behavior like this? You use a new object called an AVCoordinatedPlaybackSuspension. Such a suspension represents a separation between one participant's coordinator and the other participants' coordinators. The participant is separate from the group, and player rate changes or seeks no longer affect anyone else. Similarly, any rate change coming from the group will not change the AVPlayer's rate or time. 
In the example, this means that it is not possible for Hayden to start my player while the alarm is still playing. There are two different kinds of suspensions: automatic suspensions and suspensions you add yourself. Automatic suspensions are added by AVPlayerPlaybackCoordinator when the player pauses automatically. We already saw the example of the audio session interruption, but this also applies to network stalls or playing an interstitial through the new AVPlayer interstitial API. Suspensions added by the playback coordinator end when the player resumes playback, and it causes the player to match timing to the current group state. For our example, this means that I will automatically rejoin the group when my player rate changes back to one after my app handled the end-interruption notification from dismissing the alarm. Let me show you two examples of how the system uses coordinated playback suspensions.

Here is our sample app playing drone footage of the rings. Let's look at the interruption example we discussed before. I have set a timer to go off in a few seconds, so let's wait for that.

Ah, here we go. This has paused the iPad on my left, but as you can see, the other device is still happily playing. And as I dismiss the timer, playback on the device on the left jumps forward to rejoin the other device and is perfectly in sync. This is not the only way the system makes use of suspensions. AVKit uses them to keep random video frames from flashing in front of everybody during scrubbing. You may have noticed already that scrubbing will show intermediate frames only on the device that I'm interacting with. So, as I touch the scrubber, the device on the right continues playing, while the left device shows the scrubbing frames. Only when I let go of the scrubber will the new time be shared with the other device, and playback resumes for everyone. These are examples of how the system uses suspensions. Now, let's look at how you would adopt one yourself. So, let's jump into Xcode and actually do that. Let's say you wanted to implement a feature that allows one participant to rewatch something they just missed. Maybe there was a particularly exciting moment in the drone footage we are watching. With all the coordinator behaviors we discussed so far, seeking back and playing again will affect everyone. And I want to emphasize that this may very well be the right thing to do. Try to keep your users together as much as possible. But let's say seeking back for everyone is not an option. So, what we will build instead is a way to seek back by a few seconds and play the content at twice the speed until we join back up with everyone. I have already added a button in my UI that a user can use to indicate that they missed something. It is hooked up with this function in our MoviePlayerViewController. Let's fill that in.

Here is just the player logic. We figure out what time to seek to, seek back, and set the player rate to 2. When we have caught up, we just resume playback at rate 1. So far, this would all happen to everyone because the playback coordinator will intercept those API calls. This is where the suspensions come in. Right before we seek back, I want to separate our player from everyone else. And we do that with the coordinator's beginSuspension function. It requires a reason, and to provide that reason, we just extend the Reason struct with a new string constant.

In this case, what-happened. Now, we can use that in our beginSuspension call.
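Extending the Reason struct could look like this. The raw string is a made-up example; any string that is unique to your app's suspension reason should work.

```swift
import AVFoundation

extension AVCoordinatedPlaybackSuspension.Reason {
    // Hypothetical reason for the "what happened?" rewatch feature.
    static let whatHappened = AVCoordinatedPlaybackSuspension.Reason(
        rawValue: "com.example.groupwatching.suspension.what-happened"
    )
}
```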

Since the coordinator is now suspended, we can safely seek and set the rate, just for our player. Once we are ready to meet up with everyone else, we need to signal to the coordinator to listen to the group again, so we call suspension.end(). Note that we don't actually need the player rate change anymore. Since ending the suspension will always rejoin everyone else, our player rate change would also change back to whatever the current group rate is.
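Putting the steps together, the rewatch function might look roughly like the sketch below. The function name, its parameters, and the timer-based catch-up are assumptions for illustration; beginSuspension(for:) and end() are the coordinator calls described in the session.

```swift
import AVFoundation

// Seeks back a few seconds and plays at double speed for this participant
// only, then rejoins the group. Returns the suspension for inspection.
@discardableResult
func rewatch(on player: AVPlayer,
             reason: AVCoordinatedPlaybackSuspension.Reason,
             secondsBack: Double = 10) -> AVCoordinatedPlaybackSuspension {
    // Separate this player from the group before touching time or rate.
    let suspension = player.playbackCoordinator.beginSuspension(for: reason)

    // Seek back, clamping at the start of the item.
    let rewind = CMTime(seconds: secondsBack, preferredTimescale: 600)
    let target = CMTimeMaximum(.zero, CMTimeSubtract(player.currentTime(), rewind))
    player.seek(to: target)
    player.rate = 2.0

    // At rate 2 the player gains one second on the group per second of
    // wall-clock time, so catching up takes about `secondsBack` seconds.
    DispatchQueue.main.asyncAfter(deadline: .now() + secondsBack) {
        // Ending the suspension re-applies the group's current time and rate,
        // so no explicit rate reset is needed.
        suspension.end()
    }
    return suspension
}
```

A fixed delay keeps the sketch short; a real app might prefer to end the suspension when the user interacts again or when playback state changes.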

Now, let's try this out.

Everyone plays together. I miss an exciting event, like the approach to the ring, and I tap our new what-happened button. So, you can see, the device I'm interacting with jumps back to repeat the content. It plays faster to catch up, while the other device is not affected. And now, we are already perfectly in sync again. So, when you begin a suspension on an AVPlaybackCoordinator, you separate its player from the group, and it is now safe to issue any rate change or seek without affecting anyone else. Ending the suspension will rejoin the group's current time and rate. And, new on this slide, you can optionally propose a new time to the group when ending the suspension. This is how you can implement a scrubbing suspension that changes time for everyone only when letting go of the playback control. Here is a summary of when AVPlayer transport control commands are actually shared with other participants. First, you have to connect the AVPlayerPlaybackCoordinator to other participants through a group session, as Hayden showed you at the beginning of the talk. Second, the AVPlayer's current AVPlayerItem must have the same URL as the other participants' player items. Or if you're providing your own identifiers, they must have the same custom identifier. Once an item is enqueued, all seeks and rate changes affect everyone in the group, except when you begin a coordinated playback suspension. And to close out the discussion of AVPlayerPlaybackCoordinator, here's a quick tour of other API related to coordinated playback. If you want other participants to wait during one user's suspension, you can configure this using the coordinator's suspensionReasonsThatTriggerWaiting property. This is how you could request that no one misses any content if participants have different length ads. To learn more about the state of other participants, take a look at the coordinator's otherParticipants property and its corresponding notification. 
The AVCoordinatedPlaybackParticipant most notably gives you the list of suspensionReasons, which may be useful to inform UI, especially if you use suspensionReasonsThatTriggerWaiting above. Whenever the coordinator requests its AVPlayer to wait, that is reflected in the player's reasonForWaitingToPlay, with the new waitingForCoordinatedPlayback reason. To override waiting and immediately start playback, regardless of the other participants' states, use the player's playImmediately(atRate:) function. Note that this can cause other participants to miss content, so be aware of that when using this API. AVPlayer also has a new rateDidChangeNotification, which provides more information about the rate change, including when another participant caused it. And similarly, AVPlayerItem's timeJumpedNotification will also tell you if a time jump originates from another participant. There is some AVPlayer API you cannot use with AVPlayerPlaybackCoordinator. The default time pitch algorithm on iOS used to be lowQualityZeroLatency. This value is now deprecated in iOS 15. lowQualityZeroLatency is not supported for coordinated playback, and you should watch out for code resetting to this now-deprecated value. Use one of the other algorithms instead. Also, do not use AVPlayer's setRate(_:time:atHostTime:) function with an AVPlayerPlaybackCoordinator because it is vital that the playback coordinator is in charge of player timing, which is incompatible with external startup sync. After all this talk about AVPlayer, let's quickly dip into the second subclass of AVPlaybackCoordinator, AVDelegatingPlaybackCoordinator. A lot of the concepts that we discussed still apply for delegating playback coordinator, but instead of observing the player for you, the delegating coordinator requires you to provide information about playback state and apply state to your playback objects yourself. A custom playback object setup will look something like this. 
Your UI controls the player, which uses one of the other system-rendering APIs underneath. The delegating playback coordinator fits in between your UI and your player implementation. As the name suggests, AVDelegatingPlaybackCoordinator requires you to implement a delegate protocol that receives playback commands for playing, pausing, seeking, and buffering. Instead of sending a play command to your player directly, your UI would tell the coordinator first. The coordinator will then decide if it needs to first negotiate with other connected players, before changing your playback object's time or rate. You also have to tell the coordinator whenever your player transitions to a new current item, so that the coordinator knows which commands to send to you. As before, items are identified by arbitrary strings.

When the coordinator sends a command, it should affect the originating UI, as well as the receiver UI. And when it is time to play, it should affect all players in the same way. It is your responsibility to make your playback object follow along and to update your UI for commands appropriately. I want to specially call out that the delegating coordinator will often tell you to begin buffering, even if your device is already ready to go. This means the coordinator is still waiting for other connected participants, and your UI should reflect that, even if there is nothing to do on your end.

Final words of warning. Be careful with events like route changes that briefly pause and resume your player. It is your responsibility to stick to the requested timing and reapply the group timing as needed. If you cannot keep up for whatever reason, you should communicate that to the coordinator using a suspension. The delegating playback coordinator also does not add any automatic suspensions. This means you should begin and end suspensions for relevant system events yourself. Use the reasons provided by AVFoundation where appropriate.
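The delegating setup described above can be sketched roughly as follows. `CustomPlayer` and `PlaybackBridge` are hypothetical stand-ins for an app's own playback object and glue code, and the sketch assumes the player is already buffered whenever a command arrives. The delegate and coordinator method names follow AVFoundation's published interface as best understood here; verify them against the SDK before relying on them.

```swift
import AVFoundation

/// Hypothetical stand-in for an app's own playback object.
protocol CustomPlayer: AnyObject {
    func play(rate: Float, itemTime: CMTime, hostClockTime: CMTime)
    func pause()
    func seek(to time: CMTime, completion: @escaping () -> Void)
}

/// Hypothetical glue object that sits between the UI and the custom player.
@available(iOS 15.0, macOS 12.0, tvOS 15.0, *)
final class PlaybackBridge: NSObject, AVPlaybackCoordinatorPlaybackControlDelegate {
    private let player: CustomPlayer
    /// The UI can show a "waiting for others" state while this is true.
    private(set) var isWaitingForGroup = false
    private(set) lazy var coordinator =
        AVDelegatingPlaybackCoordinator(playbackControlDelegate: self)

    init(player: CustomPlayer) {
        self.player = player
        super.init()
    }

    // UI entry points: tell the coordinator first, not the player.
    func userTappedPlay() { coordinator.coordinateRateChange(toRate: 1.0, options: []) }
    func userTappedPause() { coordinator.coordinateRateChange(toRate: 0.0, options: []) }
    func userSeeked(to time: CMTime) { coordinator.coordinateSeek(to: time, options: []) }

    /// Items are identified by arbitrary strings shared by all participants.
    func currentItemChanged(to identifier: String) {
        coordinator.transitionToItem(withIdentifier: identifier,
                                     proposingInitialTimingBasedOn: nil)
    }

    // Commands from the coordinator: apply them to the playback object.
    func playbackCoordinator(_ coordinator: AVDelegatingPlaybackCoordinator,
                             didIssue playCommand: AVDelegatingPlaybackCoordinatorPlayCommand,
                             completionHandler: @escaping () -> Void) {
        isWaitingForGroup = false
        player.play(rate: playCommand.rate,
                    itemTime: playCommand.itemTime,
                    hostClockTime: playCommand.hostClockTime)
        completionHandler()
    }

    func playbackCoordinator(_ coordinator: AVDelegatingPlaybackCoordinator,
                             didIssue pauseCommand: AVDelegatingPlaybackCoordinatorPauseCommand,
                             completionHandler: @escaping () -> Void) {
        isWaitingForGroup = false
        player.pause()
        completionHandler()
    }

    func playbackCoordinator(_ coordinator: AVDelegatingPlaybackCoordinator,
                             didIssue seekCommand: AVDelegatingPlaybackCoordinatorSeekCommand,
                             completionHandler: @escaping () -> Void) {
        player.seek(to: seekCommand.itemTime) { completionHandler() }
    }

    func playbackCoordinator(_ coordinator: AVDelegatingPlaybackCoordinator,
                             didIssue bufferingCommand: AVDelegatingPlaybackCoordinatorBufferingCommand,
                             completionHandler: @escaping () -> Void) {
        // Often issued even when this device is ready: others are still catching up.
        isWaitingForGroup = true
        completionHandler() // Assumption: the hypothetical player is already buffered.
    }
}
```

Routing every UI action through the coordinator keeps one code path for local and remote commands: the playback object only ever changes state in response to a delegate callback.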
And it is possible to connect a delegating playback coordinator on one device to a player playback coordinator on another, but you must use custom identifiers on the player playback coordinator end.

Let's wrap up. To build a coordinated media playback app, use a GroupActivity to define the content you are playing and to propose it to the group. Start to keep track of GroupSessions as soon as your app launches to learn when your users are in a FaceTime call and want to play together. And finally, connect the playback coordinator to your GroupSession to keep your content in sync for everyone. Thank you, and enjoy the rest of WWDC. [music]


3:06 - Define a GroupActivity

    protocol GroupActivity: Codable {

        /// An identifier so the system knows how to reference this activity
        static var activityIdentifier: String { get }

        /// Information that the system uses to show this activity, such as title and a preview image
        var metadata: GroupActivityMetadata { get async }
    }

4:42 - Making your play buttons automatically start a group session when appropriate

    func playButtonTapped() {
        let activity = MovieWatchingActivity(movie: movie)

        Task {
            switch await activity.prepareForActivation() {
            case .activationDisabled:
                // Playback coordination isn't active. Queue movie
                // for local playback.
                self.enqueuedMovie = movie
            case .activationPreferred:
                // Activate the activity. The system enqueues the movie
                // when the activity starts.
                do {
                    // activate() is asynchronous and can throw.
                    _ = try await activity.activate()
                } catch {
                    print("Unable to activate the activity: \(error)")
                }
            case .cancelled:
                // The user cancelled the operation. Nothing to perform.
                break
            default:
                break
            }
        }
    }

8:31 - Receiving a GroupSession from the GroupSession AsyncSequence

    // Receiving a GroupSession from the GroupSession AsyncSequence
    func listenForGroupSession() {
        Task {
            for await session in MovieWatchingActivity.sessions() {
                ...
            }
        }
    }

9:03 - Attaching an AVPlayer to the GroupSession

    let player = AVPlayer()

    ...

    func listenForGroupSession() {
        Task {
            for await groupSession in MovieWatchingActivity.sessions() {
                // Verify content is available, prepare for playback to begin
                player.playbackCoordinator.coordinateWithSession(groupSession)
                ...
            }
        }
    }

31:26 - Custom suspensions

    class AVPlaybackCoordinator {
        func beginSuspension(for reason: AVCoordinatedPlaybackSuspension.Reason) -> AVCoordinatedPlaybackSuspension
    }

    class AVCoordinatedPlaybackSuspension {
        func end()
        func end(proposingNewTime newTime: CMTime)
    }
