
Working with Metal: Overview

Metal provides extremely efficient access to the graphics and compute power of the A7 chip. Get introduced to the essential concepts behind Metal, its low-overhead architecture, streamlined API, and unified shading language. See how Metal lets you take your iOS game or app to the next level of performance and capability.


Welcome, everyone. Welcome to WWDC, and welcome to our introductory session, Working with Metal.

Now we're incredibly excited to get to talk to you today about Metal.

We believe it's literally going to be a game changer for you, your applications, and for iOS.

Now as you've heard in the keynote, Metal is our new low-overhead, high-performance, and incredibly efficient GPU programming API.

It provides dramatically reduced overhead for executing work such as graphics and compute on a GPU.

And with the support for precompiled shaders and incredibly efficient multithreading, we designed it to run like a dream on the A7 chip.

Now today in this session, I'm going to talk to you at a relatively high level about the background: how and why we created Metal, and some of the conceptual framework for the API. I'll also touch briefly on the Metal shading language and our developer tools.

The sessions that follow will go into much more detail about exactly how to use the Metal API and how to build your applications, but we'll keep things at a high level for this session.

So, first, a bit of background on Metal itself. Now you also heard in the keynote that Metal provides up to 10x the number of draw calls for your application. Now what does that really mean? Well, it's important to think about draw calls in this way: each draw call requires its own graphics state vector.

So when you're specifying that you would like to draw something with the GPU, you also need to tell the GPU which shaders, which states, how you want to configure the textures and the rendering destinations.

This is very important in order to get the effect you're looking for from the GPU.

But, unfortunately, with previous APIs, changing state vectors could be extremely expensive. You have to translate between the API state and the state that the hardware is going to consume.

When I say expensive, I mean, not for the GPU, but for the CPU because it's the CPU that's doing that type of translation. So let's take a look at a quick example.

If your application is setting up for a new draw call, you'll want to specify the shaders and the state and the textures for each draw operation, and you'll do that each time you want to specify a new draw call. And then the CPU gets involved and spends a bunch of time translating those state changes and those requests to draw into hardware commands before sending it to the GPU.

So if we had a way to make that much less expensive, we could give you a lot more draw calls per frame, and you might ask yourself, well, why do I want more draw calls per frame? Well, more draw calls lets you build much better games and applications. You can build many more unique objects, have many more bad guys, or other characters in the scene. You can add a lot more visual variety to your games.

But probably the most important reason is your game artists and designers are going to thank you profusely because you're going to give them more freedom to realize their vision without having to jump through all types of draw call gymnastics to fit what they'd like to see onscreen into a tiny number of draw calls. Now let's take a little bit of a look at what life was like before Metal.

Now, Apple has a long history with GPU programming APIs: implementing them, maintaining them, and developing them.

We've supported standard APIs like OpenGL and OpenCL.

We've supported APIs across a wide variety of domains, at both the high level and the low level of GPU programming, for things like 2D, 3D, animation, and image processing.

And we've supported a wide variety of architectures in the hardware, across platforms, computers, devices, phones, iPads, and a wide variety of GPUs.

And during all this time, as we're supporting all of this variety, we realized that there was something pretty fundamental missing from all of these APIs, and that was the level of deep integration that Apple brings to its products.

Now Apple, as you know, provides the operating system, the hardware, and the products as a complete package. They're designed to work together seamlessly.

And so we thought to ourselves: what if we could take the same approach to GPU programming that we take to our products as a whole? We already build our products to work together seamlessly, so we knew we could do the same for GPU programming, and that's what we've done with Metal.

Now, as I said, we have a long history of looking at these different types of programming APIs, and we realized that if we wanted a tenfold, order-of-magnitude improvement in performance, we were going to have to do some pretty radical things. We decided to take a clean-sheet approach to this design, and the key design goals we had are outlined here.

First, we knew we wanted the thinnest possible API we could create. We wanted to reduce the amount of code executing between your application and the GPU to a bare minimum.

We knew it needed to support the most modern GPU hardware features. We didn't want it bogged down with the cruft of 20 years of previous GPU designs; we wanted it focused entirely and squarely on the future.

We knew that some expensive CPU operations needed to take place, but we wanted to carefully control when those happen and make sure they happen as infrequently as possible. And we wanted to give you, the developer, predictable performance, so you would know exactly when and how those expensive operations could take place.

We wanted to give you explicit control over when work is executed on the GPU, so you could decide when and how to kick off that work, and never be surprised by what the implementation might do behind your back.

And, last but not least, we knew it had to be highly, highly optimized for the A7, as this will become key in a few moments.

So how should you think about Metal? Where does it fit? Well, let's say you have your application, represented at the top of this diagram in green, and you'd like to talk to the GPU.

Well, Apple provides a wide variety of APIs to do just that, but they come at different levels of abstraction, different levels of conceptual distance between you and the GPU.

So at a high level, you have awesome APIs like SceneKit and SpriteKit that provide high-level scene graph services for 2D and 3D animation, and they're great. They provide a lot of services, but they are relatively far from the GPU in terms of abstraction.

And then, of course, we have our 2D graphics and imaging APIs such as Core Animation, Core Image, and Core Graphics, and these, too, provide a lot of services. And if this is the type of operation you're doing, these are perfect APIs for you. And, as always, we have the standards-based 3D graphics approach with OpenGL ES.

But when you want high-efficiency GPU access through the thinnest possible API layer, that's where Metal comes in.

So how do we do this? Well, the first thing we wanted to understand is how most modern games manage their CPU and GPU workloads. Most games target a frame rate, often 60 frames per second, sometimes 30. In this example, we're using 30, which works out to about 33.3 milliseconds per frame.

Now on this timeline, you can see how most games try to optimize. They balance the amount of CPU work and GPU work to fill the entire frame, but they do so offset: the CPU generates commands for one frame, and the GPU consumes those commands during the next frame. While the CPU is doing that, the GPU is busily working on the previous frame. Lay this out on a timeline, and you get nearly perfect parallelism.

Let's look at one frame in particular. Notice that this example assumes a completely balanced frame, where the CPU and the GPU are both busy the entire time. Unfortunately, real life is not so balanced. Often the CPU takes more time to generate commands than the GPU takes to consume them, and that leaves the GPU idle. You're not using the system to its full capacity; if you were, it would be like having a 50 percent faster GPU, but it's just sitting there dark.

So you say to yourself, "Well, I'd like to fix this problem. I'm just going to give more work to the GPU." Unfortunately, what you'll find is that to give more work to the GPU, you first have to spin up more work on the CPU.

And if you were already bound by the amount of work you could do on the CPU, this pushes out your frame time even further. Now we're below our 30 frames per second target.

And to add insult to injury, the GPU is still idle because now our frame time is actually longer, and the GPU can't fill that work either. So to understand what's happening here, it's helpful to realize that the CPU workload is actually split into two parts.

You have the application's workload (what you provide), and then you have all the implementation work for the GPU API itself. Whenever you make a GPU programming call, the API has to translate it in the way I described earlier.

Well, it's this GPU programming API time on the CPU that we're focused on with Metal, and Metal dramatically reduces this time down to a bare minimum.

Now in this example, we've actually freed up CPU time. Now it's the CPU that's idle, our frame time is limited by the GPU, and we're beating our 30 frames per second target. If that was your goal, you can now render faster than 30 frames per second, but you have another option: you can use that CPU time to make your game even better. You could add more physics, more AI, or other types of workloads to your application.

We have a little bit of CPU idle time here left.

We can actually choose to go back to our 30 frames per second target, and add even more draw calls with Metal providing you up to a 10x increase in efficiency, and you can either draw more things with the GPU, or maybe just draw them in a much more flexible manner so you can get more visual variety.

So that's what we're trying to do with Metal.
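The frame-time reasoning above can be written down as a small model. This is a sketch, not a profiler: it assumes the CPU and GPU are perfectly pipelined one frame apart, so a frame takes as long as the slower side, and the names (`FrameCost`, `frame_budget_ms`) and all millisecond figures are made up for illustration rather than measured.

```python
from dataclasses import dataclass


def frame_budget_ms(fps: float) -> float:
    """Time available per frame at a target frame rate."""
    return 1000.0 / fps


@dataclass
class FrameCost:
    app_cpu_ms: float  # the application's own CPU work: game logic, physics, AI
    api_cpu_ms: float  # CPU time spent inside the GPU API translating calls
    gpu_ms: float      # GPU time to execute the frame's commands

    def cpu_ms(self) -> float:
        return self.app_cpu_ms + self.api_cpu_ms

    def frame_time_ms(self) -> float:
        # The CPU encodes frame N+1 while the GPU executes frame N, so the
        # steady-state frame time is set by whichever side is slower.
        return max(self.cpu_ms(), self.gpu_ms)

    def gpu_idle_ms(self) -> float:
        return self.frame_time_ms() - self.gpu_ms

    def cpu_idle_ms(self) -> float:
        return self.frame_time_ms() - self.cpu_ms()


budget = frame_budget_ms(30)  # about 33.3 ms
# Hypothetical numbers: API overhead makes the frame CPU-bound...
before = FrameCost(app_cpu_ms=15, api_cpu_ms=25, gpu_ms=26)
# ...and shrinking only the API share makes it GPU-bound with CPU to spare.
after = FrameCost(app_cpu_ms=15, api_cpu_ms=3, gpu_ms=26)

for name, cost in (("before", before), ("after", after)):
    print(f"{name}: {cost.frame_time_ms():.1f} ms/frame (budget {budget:.1f}), "
          f"GPU idle {cost.gpu_idle_ms():.1f} ms, CPU idle {cost.cpu_idle_ms():.1f} ms")
```

In the "before" case the frame misses the 33.3 ms budget while the GPU sits idle; in the "after" case the leftover CPU idle time is the headroom the talk suggests spending on physics, AI, or more draw calls.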

Now why is this GPU programming so expensive on the CPU? To understand what we did with Metal, it's helpful to take a deeper look here, too, and there are 3 main reasons.

First, you have what's called state validation.

Now some people think state validation is just having the implementation verify that you're calling the API in the right way and that's certainly true, but it also involves encoding the state from the API to the hardware and translating it and oftentimes looking at different aspects of the state and different state objects in the API and figuring out how to combine them into a way that the hardware's going to understand. This can be expensive, and perhaps the most expensive version of this is actually the shader compilation itself.

So you take the source code for your vertex and pixel shaders, and it has to be compiled at run time to generate the GPU's machine code. What's worse, in a lot of APIs before Metal, the state and the shader code were not described in a way that mapped well to what the hardware actually expects.

And so when you change certain states, you could find that behind the scenes, we actually had to go back and recompile your shaders, causing even more CPU work.

And, last, there's sending the work to the GPU itself, which can also be expensive. The resources, the memory, and the textures all have to be managed and handed to the GPU in the form it expects. And because those first two items are so expensive, previous APIs have often encouraged you to batch up lots of work, amortizing these costs across fewer draw calls by giving the GPU more per draw call.

And, of course, this tends to work at the cost of flexibility to you, the developer, and to your game designers, but it also increases latency because now the GPU has more to work on in a single chunk.

So we wanted to fix these 3 key areas of expense for GPU programming.

To do that, we focused on three points in the application life cycle: application build time, content loading time, and draw time. They occur at very different frequencies.

So, first, application build time.

This basically never occurs.

I see some surprised looks on your faces, because as developers you think you're building your application all the time, and that's true. But your customers, the people using your application, never see this. So if we can move things to application build time, we've eliminated that expense from the experience of your game. Then there's content loading time, which is relatively rare, again from a user's perspective: they're only going to load a level a few times per play. Draw calls, on the other hand, you're going to issue thousands of times per frame, ideally.

And before Metal, unfortunately, all three of those expensive bits of operations for the CPU occurred in this last time, at draw call time.

Thousands of times per frame, or more likely a few hundred times per frame, because that's all the CPU could absorb.
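The arithmetic behind "a few hundred times per frame" is simple division: if every draw call carries a fixed CPU cost, the per-frame API budget caps the draw count. The sketch below uses invented per-draw costs (the `COSTS_US` values and the 8 ms budget are assumptions, not measurements) and compares the pre-Metal placement, where all three costs land at draw time, with the placement the talk describes for Metal: shader compilation at build time, state validation at load time, and only submission at draw time.

```python
from enum import Enum


class Phase(Enum):
    BUILD = "application build time"  # never seen by players
    LOAD = "content loading time"     # a few times per play session
    DRAW = "draw call time"           # every draw, every frame


# Hypothetical average CPU cost per draw call, in microseconds, for each kind
# of work if it has to be paid at draw time (illustrative numbers only).
COSTS_US = {
    "shader_compilation": 15.0,  # amortized cost of state-triggered recompiles
    "state_validation": 30.0,
    "command_submission": 5.0,
}

BEFORE_METAL = {
    "shader_compilation": Phase.DRAW,
    "state_validation": Phase.DRAW,
    "command_submission": Phase.DRAW,
}
WITH_METAL = {
    "shader_compilation": Phase.BUILD,
    "state_validation": Phase.LOAD,
    "command_submission": Phase.DRAW,
}


def per_draw_cost_us(placement: dict) -> float:
    """CPU cost paid on every draw: only the work placed at draw time."""
    return sum(COSTS_US[op] for op, phase in placement.items() if phase is Phase.DRAW)


def draws_per_frame(placement: dict, api_budget_us: float = 8000.0) -> int:
    """How many draws fit in the CPU time set aside for API work each frame."""
    return int(api_budget_us // per_draw_cost_us(placement))


print(draws_per_frame(BEFORE_METAL), draws_per_frame(WITH_METAL))
```

With these made-up numbers the same budget goes from 160 to 1,600 draws; the point is the shape of the calculation, not the specific figures.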

Now with Metal, we've moved these expensive operations to where they cost the least.

Shader compilation now happens at application build time, again out of view of the people playing your game.

And content loading time is when we do state validation. This, again, is relatively rare.

This means the only thing we have to do at draw call time is the most important thing to do at draw call time, which is kick off the work on the GPU. So that's the how and the why we created Metal, and now I want to take a deeper look at the API itself, and we'll take a look at some of the conceptual framework for the API starting with the objects in the API.

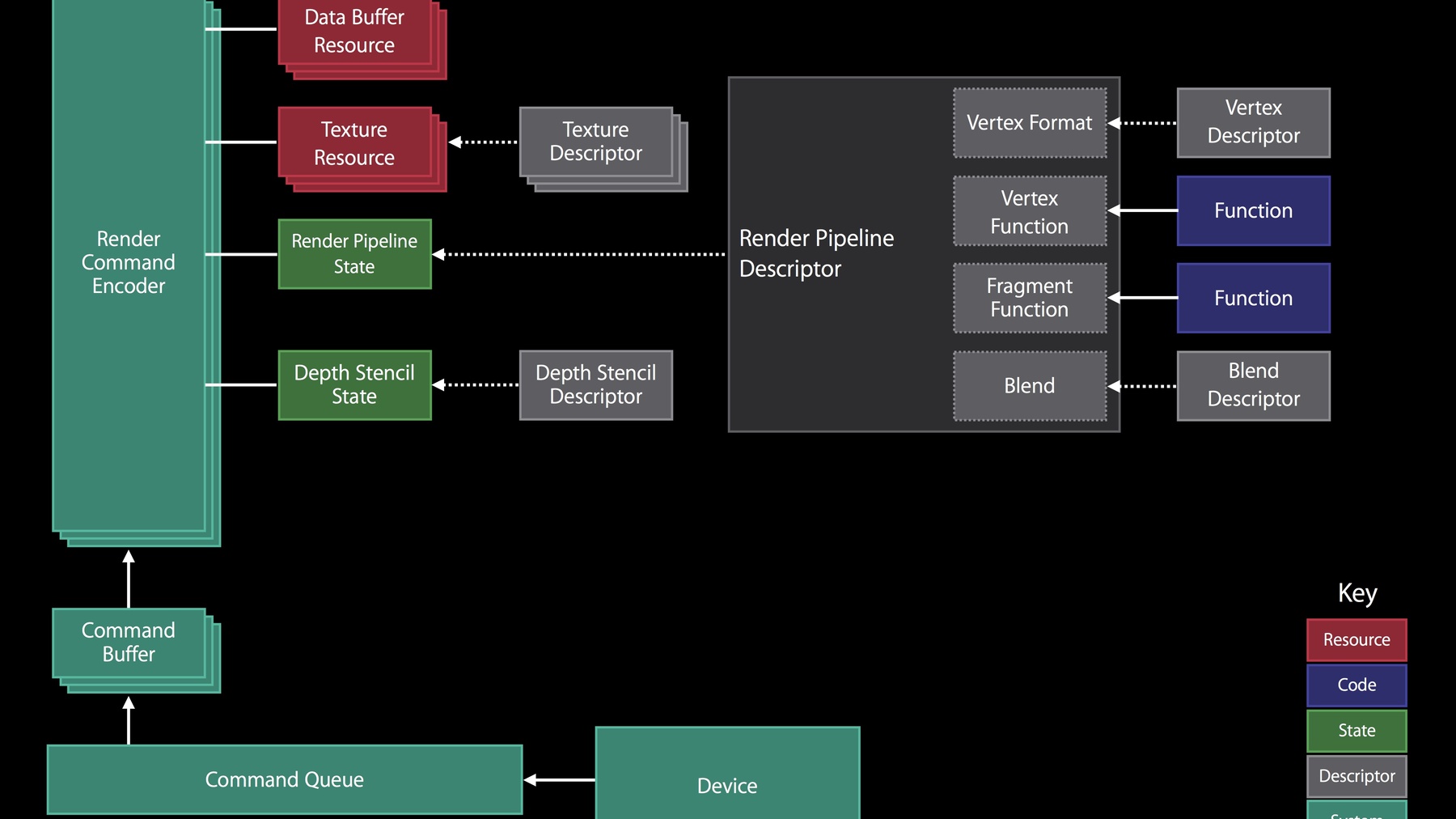

So the first object to talk about in the Metal API is the device, and this essentially is just an abstraction on the GPU, the thing that's going to consume your rendering and compute commands.

Those commands will be stored and submitted to the GPU in a command queue.

Now the command queue's job is simply to let you specify the order in which those commands will be executed.

Those commands themselves are stored in what's called a command buffer.

This stores the translated hardware commands ready for consumption by the GPU. And the command encoder is the API object that's responsible for that translation. So when you create a command encoder, and you use it to write into a command buffer, that's the translation.

The state of the API is described in a series of state objects for things like how your framebuffers are set up, how you're blending and your depth and your sampling state is set up. These are all described to the API in objects, which we'll talk about in all three sessions today.

And you have the code: your shaders, your vertex and pixel shaders. And, last, you have your data: the memory, the textures, the vertex buffers, and the shader constants. Those are all stored in resources.

So let's take a look next at how these objects fit together by walking through how we construct the objects in the API. The first thing you have is the device.

Like we said, this represents the GPU, the thing that's going to consume your commands. From it you create the command queue, and typically you'll have one of these in your application.

From the command queue, you'll create one or more command buffers to store the hardware commands, and you'll write into those command buffers with a Render Command Encoder (in this example).

Now, in order to generate those commands, you need to give the Render Command Encoder some information, and you do that by attaching various objects to it before you use it. For instance, you specify the data buffers and textures you want to use for this rendering operation.

Now you'll notice in this diagram we also show something called the texture descriptor, and the texture descriptor is used to create the textures. It specifies all of the necessary state used for texture object creation.

Probably the biggest state object is the Render Pipeline state, and it, too, is constructed from a descriptor, and the descriptor in this case describes all of the 3D rendering state you need to create a single Render Pipeline.

Similarly, you have descriptors for your depth and stencil state that you use to create those objects, and a set of state describing the render pass itself: how you're going to output your rendering.

Now the reason we split the state up into descriptors and state objects is that once you've specified what all these state combinations should be, Metal bakes them into a small number of state objects. At that point, all of the state has been translated, all the shaders have been compiled, and the system is ready to go. You can issue your draw calls incredibly quickly without worrying that the implementation will have to come around and do some late-binding translation, or figure out whether what you're trying to do is valid in the first place.

A couple more notes. The source resources (the textures and buffers here) you can change as you go. The render targets themselves are fixed for a given Render Command Encoder. We'll talk more about why that's important in just a moment.

Now, inverting this diagram a little, we can see how the objects actually send data to the device. You can have one or more Render Command Encoders, one for each rendering pass, writing into the command buffer.
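The construction flow above can be mirrored in a few lines of Python. This is a hypothetical model of the concepts, not Metal bindings: the class and method names are invented, loosely echoing Metal's Swift API (`makeCommandQueue()`, `makeCommandBuffer()`, `makeRenderCommandEncoder(descriptor:)`, `makeRenderPipelineState(descriptor:)`). It shows a mutable descriptor validated once and baked into an immutable pipeline state, render targets fixed for the encoder's lifetime, and source textures rebound between draws.

```python
from dataclasses import dataclass


@dataclass
class RenderPipelineDescriptor:
    """Mutable description; edit it freely before creating the state object."""
    vertex_function: str = ""
    fragment_function: str = ""
    color_pixel_format: str = "bgra8Unorm"
    blending_enabled: bool = False


@dataclass(frozen=True)
class RenderPipelineState:
    """Immutable, already-validated state: nothing left to check per draw."""
    vertex_function: str
    fragment_function: str
    color_pixel_format: str
    blending_enabled: bool


class RenderCommandEncoder:
    def __init__(self, command_buffer, render_targets):
        self._commands = command_buffer.commands    # writes straight into the buffer
        self.render_targets = tuple(render_targets)  # fixed for this encoder
        self._textures = {}                          # source resources: rebindable
        self.ended = False

    def set_fragment_texture(self, texture, index):
        self._textures[index] = texture

    def draw(self, pipeline: RenderPipelineState, vertex_count: int):
        # No validation or compilation here; that happened when `pipeline` was made.
        self._commands.append(("draw", pipeline.fragment_function,
                               dict(self._textures), vertex_count))

    def end_encoding(self):
        self.ended = True


class CommandBuffer:
    def __init__(self, queue):
        self.queue, self.commands = queue, []

    def make_render_command_encoder(self, render_targets):
        return RenderCommandEncoder(self, render_targets)

    def commit(self):
        self.queue.submitted.append(self)


class CommandQueue:
    def __init__(self):
        self.submitted = []

    def make_command_buffer(self):
        return CommandBuffer(self)


class Device:
    """Stands in for the GPU."""

    def make_command_queue(self):
        return CommandQueue()

    def make_render_pipeline_state(self, desc: RenderPipelineDescriptor):
        # Validation happens once, here (in real Metal, so does final shader compilation).
        if not (desc.vertex_function and desc.fragment_function):
            raise ValueError("a pipeline needs a vertex and a fragment function")
        return RenderPipelineState(desc.vertex_function, desc.fragment_function,
                                   desc.color_pixel_format, desc.blending_enabled)


device = Device()
queue = device.make_command_queue()  # typically one per app
desc = RenderPipelineDescriptor("vertex_main", "fragment_main")
pipeline = device.make_render_pipeline_state(desc)
desc.blending_enabled = True  # editing the descriptor later does not touch `pipeline`

buffer = queue.make_command_buffer()
encoder = buffer.make_render_command_encoder(render_targets=["drawable"])
for texture in ("rock", "grass"):  # swap source textures between draws
    encoder.set_fragment_texture(texture, index=0)
    encoder.draw(pipeline, vertex_count=36)
encoder.end_encoding()
buffer.commit()
```

Making `RenderPipelineState` a frozen dataclass is the model's way of expressing "baked": any attempt to assign to its fields raises an error, so a draw never has to ask whether the state changed.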

You can also interleave different types of commands. In this case, I'm using compute commands in the middle of my frame.

And, as we said, Metal supports efficient multi-threading. You can actually construct multiple command buffers in parallel using multiple threads. So let's talk a little bit more about the command submission model itself to understand how this works.

As we've said, command encoders translate your API commands into hardware commands, and then store those commands in the command buffer.

There are 3 types of command encoders for generating 3 different types of work on the GPU. We have Render, Compute, and Blit, and we'll talk about each of them in detail.

But it's important to realize that you can interleave these different types of operations very efficiently within a single command buffer and within a single frame. That's because the command encoders themselves encapsulate all the necessary state, so there are no implicit transitions between different types of operations, and behind the scenes we don't have to save and restore all of the state those operations need. It's all under your explicit control.

The command buffers themselves are incredibly lightweight, and most applications are going to create a large number of them per frame. You control exactly when and how these get submitted to the GPU. Once they've been submitted, Metal signals you, the application, when those command buffers have completed execution on the GPU. So you get very fine-grained control and clear notifications about exactly what's happening at any point in time.
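The encoding rules above can be sketched as a tiny model: one command buffer, render, compute, and blit encoders used one after another, and a completion callback fired when the "GPU" finishes. The classes are hypothetical Python stand-ins; in Metal itself the corresponding pieces are separate encoder types, `endEncoding()`, `commit()`, and `addCompletedHandler(_:)` on the command buffer. The model enforces that only one encoder is active on a command buffer at a time.

```python
class Encoder:
    def __init__(self, buffer, kind):
        self.buffer, self.kind = buffer, kind

    def encode(self, op):
        # Commands are written into the buffer immediately, not deferred.
        self.buffer.commands.append((self.kind, op))

    def end_encoding(self):
        self.buffer._active = None


class CommandBuffer:
    KINDS = ("render", "compute", "blit")

    def __init__(self):
        self.commands = []
        self.status = "notCommitted"
        self._active = None
        self._handlers = []

    def make_encoder(self, kind):
        if kind not in self.KINDS:
            raise ValueError(f"unknown encoder kind: {kind}")
        if self._active is not None:
            raise RuntimeError("end the current encoder before starting another")
        self._active = Encoder(self, kind)
        return self._active

    def add_completed_handler(self, handler):
        self._handlers.append(handler)

    def commit(self):
        self.status = "committed"

    def gpu_finished(self):
        # Stand-in for the GPU finishing; Metal would invoke the handlers now.
        self.status = "completed"
        for handler in self._handlers:
            handler(self)


frame = CommandBuffer()
for kind, op in [("render", "shadow pass"), ("compute", "particle update"),
                 ("render", "main pass"), ("blit", "copy to readback buffer")]:
    encoder = frame.make_encoder(kind)
    encoder.encode(op)
    encoder.end_encoding()

done = []
frame.add_completed_handler(lambda cb: done.append(cb.status))
frame.commit()
frame.gpu_finished()
```

Because each encoder carries its own state and is explicitly ended, switching from render to compute to blit inside one buffer is just a sequence of appends; nothing has to be saved or restored between them.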

And it's very important to realize that these command encoders aren't just recording work that the CPU will translate later. They generate the hardware commands immediately. There's no deferred state validation; it's essentially like making a direct call to the GPU driver. Now, as I mentioned, you can also multithread this command encoding to get even more performance.

Multiple command buffers can be encoded in parallel across threads, and yet you get to decide exactly when those commands will be executed. So the order in which commands are constructed can differ from the order in which you want them to execute on the GPU.

And we've provided an incredibly efficient implementation with no automatic locking, so you can get scalable performance across CPU cores. So that's the commands.
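Here is a sketch of the ordering guarantee described above: execution order is fixed up front by enqueueing command buffers on one thread, and the buffers are then encoded and committed from several threads in any order. It is a hypothetical Python model; Metal's `MTLCommandBuffer` does have `enqueue()` and `commit()`, but the classes, the `encode_chunk` helper, and the object names here are illustrative only. Each thread touches only its own buffer, so the model needs no locks of its own.

```python
import threading


class CommandBuffer:
    def __init__(self, queue, label):
        self.queue, self.label = queue, label
        self.commands = []
        self.committed = False

    def enqueue(self):
        # Reserve this buffer's slot in the queue's execution order.
        self.queue.order.append(self)

    def commit(self):
        self.committed = True


class CommandQueue:
    def __init__(self):
        self.order = []

    def make_command_buffer(self, label):
        return CommandBuffer(self, label)

    def execution_order(self):
        # The GPU runs committed buffers in enqueue order, no matter which
        # thread finished encoding first.
        return [cb.label for cb in self.order if cb.committed]


def encode_chunk(buffer, objects):
    for obj in objects:
        buffer.commands.append(("draw", obj))
    buffer.commit()


queue = CommandQueue()
chunks = [["sky"], ["terrain", "trees"], ["characters", "ui"]]
buffers = [queue.make_command_buffer(f"chunk{i}") for i in range(len(chunks))]
for buffer in buffers:       # one thread decides the execution order...
    buffer.enqueue()

threads = [threading.Thread(target=encode_chunk, args=(b, c))
           for b, c in zip(buffers, chunks)]
for t in reversed(threads):  # ...while encoding starts in a different order
    t.start()
for t in threads:
    t.join()

print(queue.execution_order())
```

CPython's global interpreter lock means these threads don't truly encode in parallel; the model is only about the ordering, which stays `chunk0`, `chunk1`, `chunk2` regardless of thread scheduling.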

Next we're going to take a look at the resources, the memory, the objects in the API for specifying data.

Now we've designed the resource model in Metal explicitly for the A7's unified memory system.

This means that the CPU and the GPU share the exact same memory for exchanging data. There are no implicit copies: we're not coming in behind the scenes, taking the data, and moving it somewhere else for the GPU to see it. We also manage CPU and GPU cache coherency for you, so you don't have to worry about flushing caches between the CPU and the GPU. You simply have to schedule your work so that the GPU and the CPU are not writing to the same chunk of memory at the same time; you don't have to do anything to manage caches.

And by you taking on this responsibility for synchronization between the GPU and the CPU, we can offer you significantly higher performance because we're not implicitly synchronizing things that didn't necessarily need to be synchronized.
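Because the CPU and GPU share memory and Metal leaves synchronization to you, a common pattern is to cycle through a small ring of buffers and block the CPU only when every buffer is still in use by the GPU. The sketch below models that with a semaphore and a thread playing the GPU. It is a hypothetical Python model: the ring size of 3 is a common choice rather than something the talk prescribes, and in a real app the release would happen in a command buffer's completion handler.

```python
import queue
import threading


class FrameRing:
    def __init__(self, count=3, size=64):
        self.buffers = [bytearray(size) for _ in range(count)]
        self.in_flight = threading.Semaphore(count)
        self.index = 0

    def begin_frame(self):
        # Blocks the CPU while every buffer is still being read by the GPU.
        self.in_flight.acquire()
        buf = self.buffers[self.index]
        self.index = (self.index + 1) % len(self.buffers)
        return buf

    def gpu_completed(self):
        # In a real app: called from the command buffer's completion handler.
        self.in_flight.release()


def run(frames=10):
    ring = FrameRing()
    work = queue.Queue()
    seen = []

    def gpu():
        while (item := work.get()) is not None:
            buf, frame = item
            seen.append((frame, buf[0]))  # what the "GPU" actually read
            ring.gpu_completed()

    gpu_thread = threading.Thread(target=gpu)
    gpu_thread.start()
    for frame in range(frames):
        buf = ring.begin_frame()
        buf[0] = frame           # CPU writes this frame's constants
        work.put((buf, frame))   # "commit" the frame
    work.put(None)
    gpu_thread.join()
    return seen


print(all(frame == value for frame, value in run()))
```

The CPU can reuse a buffer only after the GPU has released it, so every value the simulated GPU reads matches the frame it was written for, with no cache flushes and no copies involved.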

There are 2 different types of resources for you to use in your application.

The first is textures, which are formatted image data that you use as source data for rendering or as rendering destinations. Then there are data buffers, which are essentially unformatted ranges of memory: think of them as a bag of bytes to store your vertex data, your shader constants, or any output from your GPU workloads. The important thing to remember with Metal is that the structure of these resources is immutable. Once you create a texture of a given size, number of mipmap levels, or format, it's baked. This lets us avoid the costly resource validation that previously took place in other APIs, where we'd have to figure out, "Hey, now that you've changed how this texture is structured, is it still valid? Can we still use it as a rendering destination? Is it still mipmap complete?" We don't have to do any of that, because you've specified it all up front.

If you want to use two different formats for your texture, you simply create two different textures, and this is actually much more efficient: the Metal implementation can switch between them rapidly. Of course, you can still change the contents of your textures and your buffers, and we provide several methods to do that.

You can update data buffers directly: you simply get a pointer to the underlying storage. No lock API is needed because, again, this is a unified memory system. For textures, we use what's called implementation-private storage. This doesn't mean we're copying the data somewhere else; it means we reformat the texture layout for efficient rendering on the GPU, and we provide some blazing-fast update routines in Metal to do that. If you need an asynchronous update for your textures or buffers, you can do it via the Blit Command Encoder, which we'll talk about in just a moment.

Now one other thing you can do with resources, and textures in particular, is you can actually share the underlying storage across multiple textures and reinterpret the pixel data differently in each one.

So, for instance, if you have a texture with a single-component 32-bit integer pixel format, you can have another texture with a four-component RGBA8 pixel format, and they can both point at the same underlying storage, because the pixel size is the same for the two.
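The reinterpretation described above can be imitated with Python's `memoryview`, which can expose one block of bytes through two different element formats without copying. It's an analogy, not Metal code (in Metal the equivalent is a texture view, created with `makeTextureView(pixelFormat:)` on an existing texture). How the four bytes map onto the 32-bit value depends on byte order; the A7 is little-endian.

```python
import sys

storage = bytearray(8)                     # backing store: 2 pixels x 4 bytes
as_uint32 = memoryview(storage).cast("I")  # view 1: one 32-bit integer per pixel
as_rgba8 = memoryview(storage).cast("B")   # view 2: four 8-bit channels per pixel

as_uint32[0] = 0x11223344                  # write pixel 0 through the integer view
as_rgba8[4:8] = bytes([255, 0, 0, 255])    # write pixel 1 through the RGBA8 view

# Both writes are visible through both views, because there is only one buffer.
print(sys.byteorder, list(as_rgba8[0:4]), hex(as_uint32[1]))
```

On a little-endian machine, pixel 0 reads back through the RGBA8 view as `[68, 51, 34, 17]` (0x44, 0x33, 0x22, 0x11), and pixel 1 reads back through the integer view as `0xff0000ff`.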

And you can also share texture storage with data buffers. This gives you direct access to the underlying storage from the CPU and lets you use it as a data buffer; in this case we simply assume row-linear pixel order. OK. Those are resources. Now I'm going to talk briefly about the command encoders themselves, and as I mentioned, there are 3 basic command encoder types.

You have your Render, Compute, and Blit command encoders, and I'm going to talk about each of these in sequence.

So first off: the Render Command Encoder. Now the Render Command Encoder, you can think about this as all of the hardware commands necessary for generating a single rendering pass, and by pass, I mean all of the rendering to one framebuffer object, one set of render targets. If you want to change to another set of render targets, to another framebuffer, you simply use another Render Command Encoder, and this is all the state for specifying the vertex and fragment stages of the 3D pipeline. It allows you to interleave these state changes, the resources, and any draw calls necessary for constructing your pass, but, critically, there's no draw time compilation.

You, the app developer, control exactly when and how all of the state compilation and expensive validation takes place.

And you do that with a series of API calls and state objects, and I'll show you just a few of those here.

So as we talked about, you have your depth and stencil objects, sampler objects, the Render Pipeline, and they describe various aspects of the 3D rendering state.

Almost everything fits in the Render Pipeline: that's where you specify your shaders, your blend state, and how your vertex data is laid out, and all of that fits into these state objects. Now, it's important to realize that in Metal not all state objects are created equal. Some state is very expensive to change, and for that we use what are called immutable state objects: you can't change that state after you've created the object. These are the kinds of things that drive expensive operations or cause recompilation to occur.

Take the shaders themselves: you don't want us recompiling them all the time. So once you've specified them in a state object, you can't change them.

But other things, like the viewport and scissor, are really cheap to specify and to change, so those remain flexible.

Now, I've mentioned a few times that the API was designed with the A7 in mind, and one of the key places you can see this is in how we handle framebuffer loads and stores. The A7 GPU is a tile-based deferred-mode renderer. I'm not going to go into the details of what this means, but one important piece is that it has what's called a tile cache, which is used at the beginning and end of each rendering pass to load the previous contents of the framebuffer or to write them back out to memory. So you need explicit control over this tile cache to get optimal performance and to make sure you're not doing needless reads and writes to memory.

In Metal, you can specify load and store actions on the framebuffer to make this incredibly efficient, and I'll show you an example of how that works here.

So let's say, as an example, that before Metal you had a frame with two rendering passes. You'd read the color framebuffer in at the beginning of the first pass and write it out at the end, then do the same for the second pass, and you'd probably use a depth buffer as well, handled the same way. You can see there was quite a lot of memory traffic across these passes: you end up with 2 reads and 2 writes for each of the color and the depth buffer, which is a lot of memory traffic.

Well, with Metal, we actually let you specify which of those reads and writes you actually need to occur. So, for instance, you can say, well, I'm going to render every pixel of my frame anyway. There's no point in loading the tile cache at the beginning of the frame, and I can save that bandwidth.

And if you're going to write out the first pass and read it back in the second pass, you do actually need that. But when you look at the depth buffer, you can see a lot of unnecessary reads and writes. You can specify that we should just clear the depth buffer at the beginning of the frame, because you don't need its previous contents, and that you don't need to write it out at the end of the first pass, because you're not using it in the second pass. There you're simply going to clear it again, and you don't need to write it out there either. We get some dramatic reductions in memory traffic as a result.

You can reduce the overall amount of reads and writes to a fraction of what it was before, and you can gain significant performance for your application.
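The two-pass example can be tallied in code. This sketch counts attachment reads and writes per pass; the enum values mirror Metal's real `MTLLoadAction` (`.dontCare`, `.load`, `.clear`) and `MTLStoreAction` (`.dontCare`, `.store`) cases, but the Python types, the 1136 x 640 resolution, and the 4-byte-per-pixel color and depth formats are assumptions for illustration.

```python
from enum import Enum


class LoadAction(Enum):
    DONT_CARE = "dontCare"  # previous contents undefined: no read
    LOAD = "load"           # read previous contents into the tile cache
    CLEAR = "clear"         # fill with a clear value: no read


class StoreAction(Enum):
    DONT_CARE = "dontCare"  # discard the tile contents: no write
    STORE = "store"         # write the tile contents back to memory


def memory_traffic(passes):
    """Count attachment-sized reads and writes across a sequence of passes."""
    reads = writes = 0
    for attachments in passes:
        for load, store in attachments.values():
            reads += load is LoadAction.LOAD
            writes += store is StoreAction.STORE
    return reads, writes


LOAD_STORE = (LoadAction.LOAD, StoreAction.STORE)
# Before: every attachment is loaded and stored in both passes.
before = [{"color": LOAD_STORE, "depth": LOAD_STORE}] * 2
# With explicit actions: pass 1 overwrites every color pixel, pass 2 needs
# pass 1's color, and depth is cleared and discarded in each pass.
depth_scratch = (LoadAction.CLEAR, StoreAction.DONT_CARE)
with_actions = [
    {"color": (LoadAction.DONT_CARE, StoreAction.STORE), "depth": depth_scratch},
    {"color": LOAD_STORE, "depth": depth_scratch},
]

attachment_bytes = 1136 * 640 * 4  # assumed resolution and 4 bytes per pixel
for name, passes in (("before", before), ("with actions", with_actions)):
    reads, writes = memory_traffic(passes)
    print(f"{name}: {reads} reads, {writes} writes, "
          f"{(reads + writes) * attachment_bytes / 1e6:.1f} MB per frame")
```

Under these assumptions the traffic drops from 8 attachment transfers (about 23.3 MB) to 3 (about 8.7 MB) per frame, the kind of "fraction of what it was before" the talk describes.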

So this is just one of the ways we've designed Metal with the A7 in mind. Alright. That's rendering. Now let's look at the next area of functionality we introduced with Metal, which is Compute.

So we're not going to spend a lot of time today on general-purpose data-parallel computation on the GPU, but those of you who have done this type of work before will find a very familiar runtime and memory model. It uses the same textures and data buffers as the Metal graphics APIs, has the same basic kinds of memory hierarchy, barriers, and loads and stores you'd find in other data-parallel programming APIs, and you can configure exactly how the work groups for your Compute operations will be executed by the GPU.
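Configuring the work groups usually means choosing a threadgroup size and then computing how many threadgroups cover the data. A minimal sketch of that arithmetic, assuming a 2D image and a 16 x 16 threadgroup (both choices are illustrative); in Metal the resulting counts would be passed to the compute encoder's `dispatchThreadgroups(_:threadsPerThreadgroup:)`.

```python
def threadgroup_grid(width, height, group_w=16, group_h=16):
    """Threadgroups needed to cover a width x height image (ceiling division)."""
    groups_x = (width + group_w - 1) // group_w
    groups_y = (height + group_h - 1) // group_h
    return groups_x, groups_y


def idle_threads(width, height, group_w=16, group_h=16):
    """Threads launched past the image edge; the kernel must bounds-check them."""
    gx, gy = threadgroup_grid(width, height, group_w, group_h)
    return gx * group_w * gy * group_h - width * height


print(threadgroup_grid(1136, 640), threadgroup_grid(1000, 1000))
print(idle_threads(1000, 1000))
```

A 1136 x 640 image divides evenly into 71 x 40 groups, while 1000 x 1000 needs 63 x 63 groups and launches 16,064 threads outside the image, which is why compute kernels typically compare their thread position against the image size before writing.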

Probably more important to realize is that in Metal, Compute operations are fully integrated with graphics.

This means you get a unified API, a single shading language, and a single set of fantastic developer tools for both graphics and compute on the GPU. And probably more important for performance, we can efficiently interleave Compute, Rendering, and data operations such as Blits. Similarly to graphics, there's no execution-time compilation for Compute operations; you control exactly when these expensive compilation activities occur. And a little differently from graphics in Metal, there's very little state in the Compute Command Encoder: some state for specifying which Compute kernels you're going to use and how you're going to configure the work groups, and that's pretty much it.

If the Compute Command Encoder seems simple, that's because it is. That brings us to our last command encoder, the Blit Command Encoder. This is what you'll use for asynchronous data copies between resources on the GPU, and it can run in parallel with your compute and graphics operations.

You can use this to upload textures, to copy data between textures and buffers, or to generate your mipmaps.
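Mipmap generation produces a chain of levels, each half the size of the previous one (never below 1 pixel), down to 1 x 1. A small sketch of the level sizes a full chain contains; in Metal the Blit Command Encoder's `generateMipmaps(for:)` fills a texture's levels on the GPU, and because the number of levels is part of the texture's immutable structure, it is chosen when the texture is created. The Python function is illustrative only.

```python
def mip_chain(width, height):
    """Width and height of every level in a full mipmap chain."""
    levels = [(width, height)]
    while width > 1 or height > 1:
        width, height = max(1, width // 2), max(1, height // 2)
        levels.append((width, height))
    return levels


chain = mip_chain(1136, 640)
print(len(chain), chain[:3], chain[-1])
```

The level count equals `max(width, height).bit_length()`, that is, floor(log2(max(width, height))) + 1, so a 1136 x 640 texture has 11 levels, ending at 1 x 1.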

You can also update data buffers, again copying to and from textures or other buffers, and you can fill buffers with constant values using the Blit Command Encoder. Alright. That's it for the high-level API summary. Now I'm going to take a quick tour through the shading language itself.

Alright. So the Metal shading language is a unified shading language. This means you use the same language, syntax, constructs, and toolchain for both graphics and compute processing. It's based on C++11, essentially a static subset of that language, and it's built on LLVM and Clang, which means you get high-performance, high-quality code generation for the GPU. The only things we've really had to add were some syntax for describing certain hardware functionality, such as how you control texture sampling and rasterization, and a few things to make shader authoring much more convenient, such as function overloading and basic template support.

But that's it. If you know C++, you understand the Metal shading language. As I said, we added just a few data types and some syntax for handling graphics and compute functionality. There are some basic data types you'll probably find very useful, such as scalar, vector, and matrix types, and we've used what's called the attribute syntax to specify much of the connection between the Metal API and the Metal shading language. For example, how do you get data in and out of your shaders? You declare it as function arguments and use the attribute syntax to identify which textures and buffers you want to use.

Or how do you implement programmable blending in your shaders? You can use the attribute syntax to specify that you want to read pixel values from the framebuffer. The Metal shading language itself is incredibly easy to use. You can put multiple shaders in a single source file, and Xcode will build those source files into Metal library files when you build your application. A Metal library file is essentially just an archive of your Metal shaders.

With the Metal run-time APIs, you can load a Metal library and then finalize the compilation into the device's machine-specific code.

We also include an awesome standard library of commonly used, highly performant graphics and compute functions. One other thing to realize about the Metal shading language is that you need to get data in and out of your shaders. To do that, we use a mechanism called argument tables, which lets you specify which arguments, resources, and data need to move between the API and the shading environment.

So each command encoder includes what's called an argument table, which is simply a list of the resources you bind in the API that you want to read or write in the shaders themselves. There's one table for each type of resource, and the shader and the host code each simply use an index into that table to refer to the same resource. Let's see an example. On the right here, I have a representation of one of the command encoders; it could be a Render Command Encoder, for instance.

And you have a table in the command encoder that describes the resources. So you have a list of all of the textures you want to use in your shaders.

Similar tables exist for buffers and samplers. Now I've simplified this a little bit because there's actually one of these sets of tables for each of the vertex and fragment shader stages. Well, let's take a look at an example shader. So in this, we have a vertex shader and a fragment shader in Metal shader code on the left, and you'll see it looks quite familiar. You'll get a lot more information about how this works in the next session, but you can get a good sense of how this works just from looking at the code itself right here. So in the fragment shader, we're simply referring to texture at index 1 to identify that we would like to use the texture that we set up in the command encoder to read in this fragment shader.
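A minimal sketch of the argument-table idea: one slot table per resource type per shader stage, filled by index on the API side and read by the same index on the shader side. This is a conceptual model in Python, not the Metal API; the class and method names are hypothetical. In the Metal shading language, the shader side of this lookup is written as an attribute on a function argument, such as `[[texture(1)]]` or `[[buffer(0)]]`.

```python
STAGES = ("vertex", "fragment")
RESOURCE_KINDS = ("buffer", "texture", "sampler")


class ArgumentTables:
    """Hypothetical model of a command encoder's argument tables."""

    def __init__(self):
        # One slot table per (stage, resource kind): tables[stage][kind][index] -> resource
        self.tables = {s: {k: {} for k in RESOURCE_KINDS} for s in STAGES}

    def set(self, stage, kind, resource, index):
        """API side: bind a resource at a slot index for one shader stage."""
        self.tables[stage][kind][index] = resource

    def lookup(self, stage, kind, index):
        """Shader side: read whatever the API bound at that slot index."""
        slots = self.tables[stage][kind]
        if index not in slots:
            raise LookupError(f"nothing bound at {stage} {kind}({index})")
        return slots[index]
```

Binding a texture at fragment texture slot 1 makes it visible to a fragment function argument declared with `[[texture(1)]]`, but not to the vertex stage, because each stage has its own set of tables.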

And, similarly, in the vertex shader, we can do the same thing with buffers, using them as inputs to the vertex shader and referring back to the argument table in the command encoder. So you set up the command encoder's argument tables with the API and simply refer to those resources in your shaders using the attribute syntax on the function arguments themselves. And that's it. The shading language is actually pretty simple, and we think you're going to really like it. Next, I'm going to talk about how to build your Metal application using our awesome developer tools, and probably the most important of those tools is the Metal shader compiler.

Now, ideally, you don't even know this exists, because we simply build your shader sources just like we build the rest of your application sources, at application build time. There's no need for you to separate out your shaders and specify them at run time. You can ship them with your application already precompiled, so no shader source code needs to go with your application. But probably more important from the developer's point of view, we can give you errors, warnings, and guidance about your shaders at the time you build your application. So you don't have to wait until your customers are running your game to find out that you have an error in one of your shaders.

This is awesome.

Those shaders will then be compiled into Metal libraries by Xcode and then compiled to device code at the time you create your state objects. So, again, there's no draw-time compilation, and we cache this final, machine-specific compilation on the device.

So we won't be continually doing even this last translation step.
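The caching behavior described here can be sketched as a memoizing compiler: the expensive translation to device code happens the first time a state object needs a given function, and later requests reuse the result. This is a hypothetical model; `compile_fn` stands in for the driver's final translation step, and keying the cache on a hash of the intermediate code is our assumption, not a statement of how Metal keys its cache.

```python
import hashlib


class DeviceCodeCache:
    """Memoizes a (hypothetical) final compilation step by content hash."""

    def __init__(self, compile_fn):
        self._compile = compile_fn  # stand-in for the driver's backend compiler
        self._cache = {}
        self.compilations = 0       # how many times the expensive step actually ran

    def get(self, intermediate_code: bytes):
        key = hashlib.sha256(intermediate_code).hexdigest()
        if key not in self._cache:
            # Only happens on first use, e.g. when a pipeline state is created;
            # draw calls never reach this path.
            self._cache[key] = self._compile(intermediate_code)
            self.compilations += 1
        return self._cache[key]
```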

Now for those of you who like to specify your source code at run time -- perhaps you're constructing strings on the fly -- we do provide a runtime shader compiler as well. This can also be really useful for debugging.

It, too, has no draw-time compilation overhead. It operates just like the precompiled shaders, but for best performance, we generally recommend you use the offline compiler. We can also give you the most information to help you build the best possible shaders that way.

Well, let's see what this would look like. Here's an example. You're building your application in Xcode, and you have a series of Metal shader files. At application build time, these shader files are passed to the Metal shader compiler, and a Metal library file is generated.

That Metal library file will be packaged with your application, and so then when you deploy your application to a device, that Metal library file goes along for the ride. Then at application run time when you're constructing your pipeline objects, you specify which vertex and fragment shaders you'd like to use from that Metal library.
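From the application's side, the flow just described amounts to: load the library that shipped with the app, look up the vertex and fragment functions by name, and hand them to pipeline creation. Below is a hypothetical Python model of that lookup (`ShaderLibrary`, `make_pipeline`, and the function names are illustrative). In the Objective-C API this corresponds roughly to `newDefaultLibrary` on the device, `newFunctionWithName:` on the library, and `newRenderPipelineStateWithDescriptor:error:`; the point of the model is that a missing or mismatched function surfaces when the pipeline is built, not at draw time.

```python
class ShaderLibrary:
    """An archive of named, precompiled shader functions (hypothetical model)."""

    def __init__(self, functions):
        # name -> stage: "vertex", "fragment" or "kernel"
        self._stages = dict(functions)

    def function_stage(self, name):
        if name not in self._stages:
            raise KeyError(f"no function named {name!r} in library")
        return self._stages[name]


def make_pipeline(library, vertex_name, fragment_name):
    """Resolve both stages by name and check each is the right kind of function."""
    if library.function_stage(vertex_name) != "vertex":
        raise ValueError(f"{vertex_name!r} is not a vertex function")
    if library.function_stage(fragment_name) != "fragment":
        raise ValueError(f"{fragment_name!r} is not a fragment function")
    return {"vertex": vertex_name, "fragment": fragment_name}
```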

The Metal implementation will then check whether it has already cached those shaders, and if not, compile them to the final machine device code and send it to the GPU. And that's it. Most of this happens automatically for you, but, critically, exactly when you want it to.

Now if you've used our OpenGL ES debugging tools in Xcode, you're going to feel right at home. We have an awesome set of GPU programming, debugging, and profiling tools integrated right into Xcode, and I'm going to give you a quick tour of those now. This is an example application inside Xcode, where you get visual debugging tools built right into the same environment you're using to build the rest of your application. On the left-hand side, we have what's called the Frame Navigator, which shows you exactly which Metal API calls and draw calls you issued and in what order they occurred. In the middle, you have the Framebuffer View, where you can see the results of those draw calls and state operations happening live as you're constructing your frame.

We also have the Resource View, where you can see all of the textures, resources, data buffers, everything you're using to construct your frame, inspect them, and make sure that they're exactly as you set them up. And at the bottom, you can see the Metal State Inspector, where you can see all of the API state and all of the important bits of control and information you have about your application in Metal.

We also provide some really fantastic profiling and performance tools. So in this example, we're showing the Metal Performance Report.

You can see things like your frame rate or how much you're using the GPU. You can also see exactly which shaders are the most expensive, and you can see how much time they're taking per frame.

This is awesome. In addition to how much time each of those shaders takes as a whole, in milliseconds, you can get a feel for exactly which lines of each shader are the most expensive. So if you want to really optimize your shaders, you can find out which line is taking up the most time and focus on that, and this is all built right into Xcode for you.

Yeah, excellent. I'm glad you like it.

So you can also build your shaders, as we said, inside Xcode. You can edit them as well, with all the familiar syntax highlighting and code completion, and you get warnings and errors right inside Xcode, too. We think this is really fantastic. It makes writing, editing, and profiling shaders a much more natural part of the process, and we've designed Metal to work seamlessly in this environment.
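The per-shader timings in a performance report like the one described above are easiest to act on when compared against the frame budget. A small worked sketch, assuming a 60 fps target and made-up per-shader timings (the shader names and milliseconds are illustrative, not from the session): the budget is 1000 / 60 ≈ 16.7 ms per frame, and ranking shaders by their share of it shows where optimization effort pays off first.

```python
def frame_budget_ms(target_fps):
    """Milliseconds available per frame at the target frame rate."""
    return 1000.0 / target_fps


def rank_shaders(timings_ms, target_fps=60):
    """Sort shaders by GPU time per frame, most expensive first.

    Returns (name, milliseconds, fraction_of_frame_budget) tuples.
    """
    budget = frame_budget_ms(target_fps)
    ranked = sorted(timings_ms.items(), key=lambda item: item[1], reverse=True)
    return [(name, ms, ms / budget) for name, ms in ranked]


report = rank_shaders({"shadowFrag": 2.0, "lightingFrag": 5.0, "skyVert": 0.5})
```

With these example numbers, the lighting shader alone uses 30% of the 60 fps budget, so it is the first place to look for expensive lines.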

Alright. So that's the developer tools, the API concepts, and the shading language. Now as you heard in the keynote, we've been working on Metal with some leading game engine providers, such as Electronic Arts, Epic, Unity, and Crytek, and we've been amazed at what they've been able to create in a short amount of time. I'm very happy to introduce Sean Tracey from Crytek, who's going to show you what they've been able to create with Metal in just a few weeks. Sean?

Thanks a lot, Jeremy.

Crytek is known for pushing the boundaries and for developing blockbuster hits such as Crysis and Ryse for the consoles.

The CRYENGINE is going mobile, and our latest game, The Collectables, was recently released on the App Store. Integrating Metal into the game gives us opportunities that we didn't have before, and the added performance changes the way we think about creating mobile game play and mobile game content.

Today, we'd like to show you the next version of The Collectables, and to show you how we used Metal to change the game. Let's take a look.

In The Collectables, you control a renegade team of mercenaries, and today we're on a daring hit-and-run mission to take out the enemy artillery.

As you can see, the environment is incredibly dynamic and very rich with wind affecting vegetation and more.

Looks like the enemy's set up a roadblock ahead. Let's take out these jeeps with our RPGs. Booyah! This level of cinematic destruction simply wasn't possible on mobile before. When those jeeps explode, over 168 unique pieces come flying off: the tires, windshield, engine block, and more.

Using the power of Metal, we're able to leverage our Geom cache technology as seen in Ryse on console.

And there it is, our objective, the enemy artillery. It's a pretty big mother. So let's go ahead and call in our AC130 gunship for air support.

Geom cache uses cache-based animation to realize extremely complex simulation and special effects, as you saw in the destruction of those buildings.

So as our players make their way to their next objective, let's take a look at another example of how we can use this power to turn the environment into a weapon for our player. Sounds like trouble ahead. Yeah, they've got a tank. Alright. We need to block off that road and get out of here, or we're going to be toast. Let's try taking out the chimney.

And that's how you stop a tank in The Collectables.

When that building and chimney come down, there are over 4,000 draw calls on screen. 4,000 draw calls, on an iPad! Oh, they've got another tank and, um, we're out of chimneys. So, mission accomplished. Let's get out of here.

As you can see, Metal gives us a tenfold performance increase that allows us to bring the Crytek DNA alive on mobile. We look forward to seeing you guys in the game. Thanks a lot.

Alright. Thanks, Sean.

That's really amazing. 4,000 draw calls is a tenfold increase over what was possible before Metal, and we are truly, truly excited to see it in action.

Alright. So that's Metal. Metal is our new low-overhead, high-performance GPU programming API, which can provide a dramatic increase in efficiency and performance for your game.

We designed it from scratch to run like a dream on the A7 chip and our iOS products. And we've streamlined the feature set and the API footprint to focus on the most modern GPU features, such as unified graphics and compute, and the most modern GPU game programming techniques, such as those that require fine-grained control and efficient multithreading.

With support for precompiled shaders and a fantastic set of developer tools, we believe you're going to be able to use Metal to create an entirely new class of games for your customers.

We're really looking forward to what you guys are going to be able to create with Metal. We think it's just a truly, truly fantastic thing. For more information about Metal, you can contact our Evangelists, Filip and Allan, and we have an amazing set of documentation about Metal on our developer relations website. You can also check out the developer forums, where you can talk with folks about Metal, and you can come to the next two sessions, where we'll go into Metal in much more detail: how Metal works in your application, how to build your first Metal application, how to build scenes with Metal and use it to control the GPU, and how the Metal shading language itself works. Then, in the second session, we'll cover some more advanced techniques, such as multi-pass rendering and Compute.

We're really looking forward to what you're going to be able to create with this API. Thank you very much for coming.
