Optimizing App Assets

Learn how to use assets to bring visually compelling and data efficient artwork to your apps, leveraging new features in iOS 12. Gain insight into organizing, optimizing, and authoring artwork assets by using asset catalogs to their fullest. Learn techniques to better streamline workflows between designers and developers. Ensure better app delivery and a smaller footprint, maximizing target audiences of your app with full artwork asset fidelity.

Good afternoon, welcome to Optimizing App Assets. My name is Will, I'm an engineer in the Cocoa Frameworks group, and today my coworker Patrick and I are going to go through some of the best practices for optimizing assets in your application.

In this day and age, many apps and games offer a great user experience by incorporating high-fidelity artwork and other types of assets in their application. And by doing so they're able to attract a large audience and keep it engaged. We want this to be true for all of your apps as well, and that is why we're here today to showcase some of the best practices with Asset Catalog and, more importantly, how you can better deploy the assets in your application to your users and how that translates to the overall user experience.

And throughout this talk we're going to touch on a variety of different aspects of the traditional design, development, and deployment workflow. But first I'd like to spend a little bit of time talking about one topic, and that is image compression.

Image compression is at the heart of the Asset Catalog editor and is the last step in the Asset Catalog compilation pipeline. It is also closely related to some of the other optimizations that happen throughout the pipeline.

By default Asset Catalog offers a variety of different compression types, and by default it is also able to select the most optimal compression type for any given image or texture asset.

While that may be sufficient for most projects, it's still a good idea to understand what are some of the options offered and more importantly, to understand what are their trade-offs, as well as what are the implications on your project.

Now before I dive into the specifics of any image compression, I'd like to talk a little bit about another optimization in Asset Catalog that has a huge implication on all of the compression that we do, and it's called automatic image packing.

Traditionally, before the inception of Asset Catalog, one way to deploy assets in your application was just to dump a bunch of image files into the application bundle of your project.

It's important to be aware that there are many drawbacks, as well as trade-offs, with this approach. There are two kinds of downsides that you have to be aware of. The first comes from the additional disk storage that this takes.

Traditional image container formats use extra space to store metadata, as well as other attributes associated with the underlying image.

Now if your application has a huge number of assets, and if they have similar metadata, the same information gets duplicated over and over on disk for no real benefit.

Additionally, if most of your assets are fairly small then you do not get the full benefit of most image compression.

The other type of drawback comes mainly from the organizational overhead that you have to pay for. It is very hard to work with a large cache of loose image files, and it's also much harder to interact with them from the NSImage and UIImage family of APIs.

Last but not least, you also have to deal with the inconsistency in image format, as well as in other image attributes. For example, in your artwork collection you can have a mix of images where some of them support transparency while the others do not.

The same applies to other attributes, such as color space and color gamut.

Asset Catalog is able to address all these problems by identifying images that share a similar color spectrum profile and grouping them together to generate larger image atlases. This way you do not have to store the same metadata over and over for all of your image artwork. And you also benefit more from the underlying image compression.

Now let's take a look at a real-world example.

Here on the left-hand side of the screen there are a dozen pieces of image artwork. These may look familiar to you, and that is because they are taken directly from one of our platforms.

Now these pieces of artwork are all fairly small, but still the overall size adds up to over 50 kilobytes.

With automatic image packing, Asset Catalog is able to identify that all of these pieces of artwork share a very similar color spectrum, and it groups them together to generate one single larger image atlas.

This way the overall disk size gets reduced to only 20% of the original size. That is an 80% size reduction, and that is huge.

It's also important to be aware that this optimization scales very well. The larger the number of assets in your application, the more benefit you're going to get out of this optimization.
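To make the metadata argument concrete, here is a rough toy model in Python. It only accounts for the duplicated per-file metadata, not for the better compression a larger atlas gets, and every number in it (the payload size, the overhead size, and the function names) is a made-up assumption for illustration, not a measurement from the talk.

```python
# Toy model of automatic image packing. All sizes are hypothetical.

def loose_files_size(payloads, per_file_overhead):
    """Total bytes when every image ships as its own file with its own metadata."""
    return sum(payload + per_file_overhead for payload in payloads)

def atlas_size(payloads, per_file_overhead):
    """Total bytes when the images share one container, so metadata is stored once."""
    return sum(payloads) + per_file_overhead

payloads = [800] * 12   # assumption: twelve small images, 800 bytes of pixel data each
overhead = 3500         # assumption: bytes of header, color profile, and other metadata

loose = loose_files_size(payloads, overhead)
packed = atlas_size(payloads, overhead)
saving = 1 - packed / loose

print(f"loose files: {loose} bytes, atlas: {packed} bytes, saving: {saving:.0%}")
```

With small images, the fixed overhead dominates, which is why the saving grows as more images share one atlas.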

So that's automatic image packing.

Now let's talk a little bit about lossy compression.

Lossy compression is all about trading minor losses in visual fidelity for the large savings that you gain from the underlying compression. So it's really important to understand the scenarios in your application where lossy compression is most applicable.

Typically we recommend you use lossy compression for image artwork that has a fairly short on-screen duration. For example, that would be artwork that is shown on the splash screen of your application or through animations and effects.

Now it wouldn't be exciting for me to just stand here and talk about lossy compression without introducing a new lossy compression in the Asset Catalog. So I'm very happy to announce that this year we're extending support for the High Efficiency Image File Format in Asset Catalog.

If you followed our announcements from last year, you know that we introduced the High Efficiency Image File Format on all of our platforms, as well as in the Asset Catalog editor.

This year we're taking it one step further: we're making the High Efficiency Image File Format the default lossy compression in Asset Catalog.

Thank you. Now let's have a quick recap of some of the benefits that we get from the High Efficiency Image File Format. The most important thing to know is that it's able to offer a much better compression ratio than some of the existing lossy compression that we already offer. One that you may already be familiar with is JPEG.

There are many benefits that come with the High Efficiency Image File Format, such as support for transparency out of the box.

And more importantly, Asset Catalog is able to automatically convert image files from other formats to the High Efficiency Image File Format, which means that as long as your image assets are tagged for this lossy compression, there is no extra action required on your end. This all happens automatically in the Asset Catalog compilation pipeline.

For more in-depth information on the High Efficiency Image File Format, I suggest you refer to our session from last year.

Now let's shift our focus to lossless compression.

Lossless compression is the default compression type and it's used for the majority of application assets.

Therefore, it is really important to understand how you can get the most benefit out of lossless compression.

Typically image artwork can be categorized into two groups based on their color spectrum profile, and they each benefit differently from any lossless compression. Let's take a look at that.

The first category of images is commonly referred to as simple artwork.

They're referred to this way because they have a fairly narrow color spectrum and a fairly small number of discrete color values, and that is because of their simplistic designs. They're best represented by many application icons. The other type of image artwork, on the other hand, is referred to as complex artwork.

Again, both of these types of image assets benefit differently from lossless compression. Generally speaking, any given lossless compression does really well on one of them or the other, because it is optimized for that type.

We realize that both of these are really important in many projects. And we also want all of your assets to be deployed with the best lossless compression possible.

So I'm very happy to announce this year we're introducing a new lossless compression in Asset Catalog and it's called Apple Deep Pixel Image Compression.

Thank you again.

Apple Deep Pixel Image Compression is a flexible compression that adapts to the image color spectrum.

What that means is that it's able to select the most optimal compression algorithm based on the color spectrum characteristics of any image artwork.

This year, not only are we extending this new compression to all of you guys, we're also enabling it on all of our platforms, as well as first-party apps. And by doing so we're able to observe on average a 20% size reduction across all of our built projects, which is a pretty big deal. Now let's look at some numbers.

Here's a chart that shows you the overall size of all the Asset Catalogs from some of our select platforms. And it is immediately obvious that we're seeing up to 20% size reductions across all of our platforms.

When it comes to lossless compression, compression ratio is only half the story. Because lossless compression is used for the majority of your application artwork, decode time is just as important.

Apple Deep Pixel Image Compression is also able to offer up to 20% improvement in decode time.

So that was lossless compression.

Now I'd like to shift gears to touch on two separate but strongly connected subjects that have a huge implication on all the optimizations and compressions that I just talked about, and they are deployment and App Thinning.

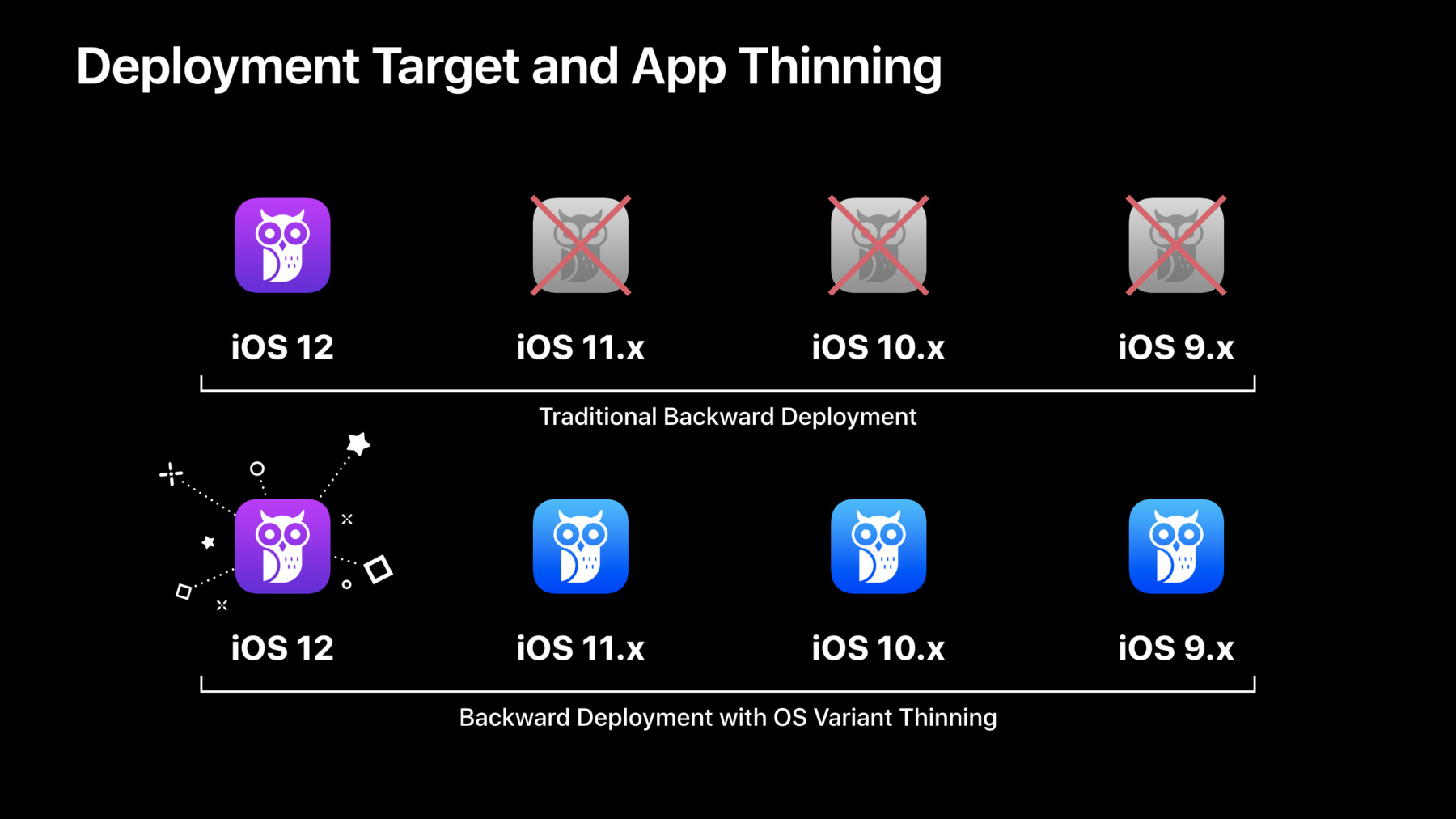

Here's a quick recap of what App Thinning is. App Thinning is a process that takes place in the App Store that generates all variants of your project, targeting all the device models as well as the versions covered by your deployment target.

One way to take advantage of App Thinning is to set the deployment target of your application to a version that is lower than the latest version of the platform you're targeting. This way you'll be able to reach a larger audience. App Thinning takes care of generating all the variants of your project and deploying the most optimal one across all of your user base.

This year if you build your project with Xcode 10 and the iOS 12 family of SDKs, your project is automatically going to benefit from all the optimizations and new compressions that I just talked about.

However, if you back deploy your application to an earlier version the new optimizations are not preserved.

And that is because App Thinning has to generate variants that are compatible to the earlier versions of the targeted platform.

This isn't ideal and more importantly, we really want all of your assets to be deployed in the most optimal manner. So I'm happy to announce this year we're introducing a new version of App Thinning, called OS Variant Thinning.

With OS Variant Thinning your application can still target those that are on earlier versions of your target platform, say in this case from iOS 9 all the way to iOS 11.

And for those that are running on the latest version of iOS, OS Variant Thinning is able to generate a special variant of your project that has all the latest optimizations and compression types. This way everybody is able to get the most effective version of your project and everybody's happy.
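As a rough illustration of the idea, the choice between the two kinds of variants can be sketched as a simple version check. The function name, the variant labels, and the default version numbers below are hypothetical and only mirror the iOS 9 through iOS 12 example from the talk; the real selection happens in the App Store and is not something you call from code.

```python
def pick_variant(device_os_major, deployment_target=9, latest_os=12):
    """Return which hypothetical app variant a device would receive."""
    if device_os_major < deployment_target:
        return None                  # below the deployment target: not installable
    if device_os_major >= latest_os:
        return "latest-optimized"    # built with the newest compression types
    return "back-compatible"         # built for the earlier OS versions

for os_major in (8, 9, 11, 12):
    print(os_major, pick_variant(os_major))
```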

So that was App Thinning and backward deployment.

Now I'd like to walk you through an example of how you can exercise the same App Thinning export workflow locally within Xcode.

It is a fairly simple process: all you have to do is go to Xcode's Archive button.

This will simply instruct Xcode to generate all variants of your project. Once that is done, simply click on the Organizer button and that will bring up a window that shows all the variants generated for your project.

And here's a window for the GarageBand project that we took to perform this exercise.

The first thing that Xcode is going to ask is to select a type of distribution method that you can distribute all the variants that it just generated.

For the purpose of this exercise, simply select Ad Hoc Distribution.

And on the next window, in the App Thinning field, simply select all compatible device variants.

This will instruct Xcode to export all the variants that are targeting all the supported device types. Once this is done Xcode is able to synthesize a report that summarizes all the variants that it just generated.

And there are a few key data points that you can extract from the report to help you better understand the deployment of your project, and to help answer a few key questions, such as: how many variants are generated from my project, what do their sizes look like, and is there any room left for optimization and fine-tuning for any particular variant? And it turns out that we actually have the numbers generated for the GarageBand project that we just exported, so let's take a look at that.

So here on this chart you can see the sizes of all the variants generated for a select set of device models.

And these are the sizes generated for the iOS 11 and earlier versions of the variants.

Now because GarageBand is a fairly large project, with tens of thousands of pieces of image artwork, the sizes of the generated variants range from 90 to over 100 megabytes.

And here are the numbers for the iOS 12 variants.

And again from this graph it is immediately obvious that we're getting roughly a 10 to 20% size reduction. If this number looks familiar to you by now, it's because all of these savings come from the optimizations and compressions that I just talked about.

So that is image compression.

Now I'd like to hand it over to my colleague Patrick to talk about the design and production of your application assets.

Thank you, Will.

So that's great. You just heard about some amazing ways that you can get your assets improved just by using Asset Catalogs in Xcode. I'm going to talk a little bit more about a few other things that you can do, with just a little bit of effort in Asset Catalogs, to really optimize your application's assets. And I'd like to begin with design and production, because this is really where it all begins.

So assets as you know they come from many tools, many different workflows, many different sources but they have one thing in common, they ultimately all came from humans at some point. And it really pays to be organized in terms of understanding that process of how those assets come into your software workflow and to pay attention to some of those details that can really pay big dividends in your application efficacy. So the topic I'd like to talk about first is color management, often overlooked but still quite essential.

So on disk an image asset is just a bunch of boring bytes, right? It doesn't really mean anything until you apply color to it. How does it get the color? How does the system even know what each of the numbers in those bytes means? Well, the answer is it comes from the color profile. That is what actually gives each one of those numbers an absolute colorimetric value; it tells the system how it should look. As such, I want to emphasize that it's really important to maintain those color profiles in your assets as source artifacts. These are vital pieces of metadata that keep the designer's intent intact in how that asset was delivered. And resist the temptation to strip those profiles out because you think they're just extra metadata that takes up a bunch of payload. These are source artifacts that you're checking into your project; let the tools worry about optimization for deployment.

So why is any of this color stuff important? Well the answer is our devices have a broad range of displays with different characteristics and something needs to make sure that the actual colors in your assets match appropriately and look appropriate and get reproduced appropriately on all those different displays, that's the job of color management. This is a computational process, it can be done either on a CPU or at times on a GPU, but it is some work.

Now Asset Catalogs come into play here, because at build time, in the compilation process, they will perform this color matching for you. And this is really great because it means that computation is not happening on device when it really doesn't have to. Your assets are ready to go on device, ready to be loaded and ready to be displayed without any further ado. And as a bonus, the extra processing we do for color management at build time eliminates the profile payload that you might've been tempted to strip earlier and replaces it with an ultra-efficient way of annotating exactly what color space the pixels on disk are now in. So that's color management. A related topic I'd like to talk about here is working space.

Now by working space I'm really referring to the environment in which these assets originated in the first place. This is where the designer, or maybe you as an engineer working on some artwork yourself, is working in a design tool, creating content. It's important in these contexts to use consistent color settings for all the design files that you have for your project. This is a good practice and it has positive technical benefits, because it ensures consistency in how you organize everything across your application. There are two specific formats that are most talked about and most recommended for creating working design files. sRGB at 8 bits per channel is by far the most common, a very popular choice, and it has the broadest applicability across all of our devices and your content types. However, if you're working on a really killer, take-it-up-a-notch vibrant design, like this wonderful flower icon here for example, you may want to take advantage of the wide color capabilities of some of our devices and use a wide color asset. For this we recommend you use Display P3 as your working profile and 16 bits per channel, to make sure you don't lose anything in executing that design.

Now Xcode and the runtime platforms have a wide range of processing and management options to handle wide color assets. I'm not going to go into too much depth here, but I encourage you to look at the Working with Wide Color session that I did two years ago, where I went into some depth on these topics; it gives you some more background for this. Also, new since last year, there's a great treatment of working with P3 assets in the iOS Design Resources section of the developer.apple.com website.

Okay, now let's get into some actual software art here. Your UI typically has to adapt to a lot of different presentations and layouts, and this can commonly call for artwork that needs to stretch and grow to adapt to those layout changes. How do you accomplish this with artwork? Well, the most common approach is to identify a stretchable portion of the image and the unstretchable portions of the image. Why is there a difference? Well, consider (this is a crude example here on the slide) that we had a beautiful shape to the overall asset, with round corners that you wanted to preserve at all possible sizes, like a frame. You want to make sure that you don't stretch those pieces, the blue pieces in this slide, but you can stretch the yellow pieces. Traditionally, the way this is done with modern design tools is to slice all these items up, identify all these regions, and distribute them as individual assets. Then the programmer would reassemble these at the final design size using a draw three-part or nine-part API, for example. Now this works fine and has been a tried and true practice for many years, but it does have a downside. Reassembling those images at a final size is a pretty CPU-intensive task, it can be a bit complex and inefficient, and it's not really a good fit for a modern GPU UI pipeline like Core Animation.

What's a better approach? A better approach is to take a single image and just provide the stretching metadata for it that identifies what the stretchable portion is. That really enables the most optimal, smooth GPU animation of that resizable image. And I'm happy to tell you that Asset Catalogs make this really easy to do, and it's called the Show Slicing editor.

It's really easy to work with. You just click the Start Slicing button and then you start manipulating the dividing lines here, which lets you identify the stretchable portions of the image and the unstretchable portions of the image. In this example there are the left and right end caps, and that middle slice that's orange is the stretchable piece. Now you may notice there's a big piece of this image that has this white shading over it. What is that all about? Well, that's actually a really interesting thing: that part of the asset is not going to be needed anymore, because we can represent any possible size with the three remaining pieces. Why is this important? Well, the nice thing is that now that Xcode knows this at build time, we can just take the pieces we need and leave the rest behind. So that large section doesn't have to be included in the bytes on disk that we actually ship in your app, and that's great. It also has a secondary benefit, and this is a more subtle one, but I really like it. It means that you can tell your designer to feel totally comfortable delivering assets at their natural size, without having to worry about pre-optimizing them to be the smallest possible thing so that they're efficiently deployed. That shouldn't be the concern of the designer. It's much more meaningful over the long term to put something in the source code that's easy to look at and obvious what it is, and let the tools worry about these deployment details. So in addition to the graphical way of identifying the stretchable portion, there is of course also the Show Slicing Inspector, where you can have fine control over these edge insets and also control the behavior of the centerpiece, whether it stretches or tiles.
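The arithmetic behind "take the pieces we need and leave the rest behind" can be sketched for the horizontal three-part case. This is a simplified Python model under stated assumptions: the function names, the pixel widths, and the one-column-wide center slice are all hypothetical, and it says nothing about how Xcode actually stores the sliced image.

```python
def three_part_slice(width, left_cap, right_cap, center=1):
    """Return (kept, discarded) column counts for a horizontally sliced image.

    Only the two end caps and a narrow center slice are kept; the rest of the
    source image is never needed to draw the image at any width.
    """
    kept = left_cap + center + right_cap
    if kept > width:
        raise ValueError("caps and center slice are wider than the source image")
    return kept, width - kept

def layout(target_width, left_cap, right_cap):
    """Widths of the left cap, stretched center, and right cap at a target width."""
    center = target_width - left_cap - right_cap
    if center < 0:
        raise ValueError("target is narrower than the two end caps")
    return left_cap, center, right_cap

# Assumption: a 200-pixel-wide source with 20-pixel end caps and a 10-pixel center slice.
kept, discarded = three_part_slice(200, left_cap=20, right_cap=20, center=10)
print(kept, discarded)                              # columns shipped vs. left behind
print(layout(320, left_cap=20, right_cap=20))       # caps stay fixed, center stretches
```

In this model the end caps never change size; only the center slice is stretched or tiled to fill whatever width remains.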

All this of course adds up to keeping the stretching metadata close to the artwork, which will then yield enormous benefits the next time, as inevitably happens, the designer comes up with a new update to your design. Now you can update everything in one place and don't have to remember the five or six places in code where you might have hard-coded the edge insets previously; now it's all tied together in one place. Thank you.

Okay, next up I'd like to talk about vector assets. Because the displays on all of our products have a variety of different resolutions, you're probably very used to delivering distinct 1x, 2x, and 3x assets, depending on what platform you're targeting. And that's fine and works really well, but it's kind of a mess to have to deliver two or three assets every time for a single design for no other reason than resolution.

What if you can actually get away with this with just one asset? Well you can and we've been supporting vector assets in Asset Catalogs for a number of years now in the PDF format. And with Xcode Asset Catalogs you can actually supply a PDF and Xcode will generate and rasterize that PDF into all of the applicable scale factors that your app is currently targeting depending on platform. And that's really great because it means you don't have to worry about paying any cost at runtime on device to render an arbitrary, potentially arbitrarily complex PDF vector asset.

So it gives you some peace of mind about using vectors. Now you may have a scenario where you want to present your assets, in some circumstances, at a different size or scale than the natural size that the asset was designed for. Well, new since last year in iOS 11 and Xcode 9, we allow you to preserve the vector data, so that when that image is put into an image view that is larger than the natural size of that asset, we'll go ahead and find that original PDF vector data, which by the way we've leaned out and cleaned of any extraneous metadata and profiles as well, so it's as tight and slim as possible. And we'll go ahead and re-rasterize that at runtime, but only if you're going beyond the natural size; otherwise we'll use that optimized prerendered bitmap. So this is great because it means your app might be able to respond more flexibly to Dynamic Type, and your images will automatically look crisper when you resize your UIImageView.
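The rule described here, prerendered bitmap by default and vector data only when the view is larger than the natural size, can be written down as a small decision function. This is a sketch of the rule as stated in the talk, not of the framework's implementation; the function name, the return labels, and the treatment of "larger" as "larger in either dimension" are assumptions.

```python
def image_source(natural_size_pts, view_size_pts, preserves_vector_data):
    """Decide which representation a resizable vector asset would be drawn from."""
    natural_w, natural_h = natural_size_pts
    view_w, view_h = view_size_pts
    # Assumption: exceeding the natural size in either dimension counts as "beyond".
    exceeds_natural = view_w > natural_w or view_h > natural_h
    if preserves_vector_data and exceeds_natural:
        return "re-rasterize vector at runtime"
    return "prerendered bitmap"

print(image_source((24, 24), (24, 24), preserves_vector_data=True))
print(image_source((24, 24), (48, 48), preserves_vector_data=True))
print(image_source((24, 24), (48, 48), preserves_vector_data=False))
```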

That's vector assets.

Okay, next I'd like to talk a bit about designing for 2x. 2x, commonly known as Retina, is the most popular and common display density that your apps are probably being experienced on. And it's great, right? It was a huge step forward. However, there are still cases where you can have designs where a stroke or an edge might land on a fractional pixel boundary and result in a fuzzy edge. It's still not high-resolution enough that you won't notice the difference between a sharp edge and a fuzzy edge.

And this can still be a challenge in designing assets at times.

Well what are some techniques that can be used to address this? One common design technique is to turn on point boundary snapping in your vector design tool, set up a grid at one-point intervals and turn on snapping so that when you adjust your shape or your control points that you know that they can snap, when they snap to a boundary you know that that's going to be a pixel boundary and that's great. But there's still some cases that you might have with a design where some of the edges are still perhaps landing somewhere in between one and two and you're not sure, but you'd really like to know, especially on a retina 2x device what's going to happen there and can I optimize further for the actual display density. Well what you can do is you can actually use a 2x grid, make your asset twice as nominally big in your vector design tool and make that grid now be a one-pixel grid where every two points, every two units is going to be one point for retina.

And then adjust your assets and use the point snapping to adjust your strokes and edges to fit there. Okay that's great, now what do you do with this thing once you've got it, it's too big right, it doesn't work? Yes it does, all you have to do is just drop it into the 2x slot in the Asset Catalog scales bins and that will automatically enable Xcode to process that artwork, realize it's actually a 2x piece of artwork, it's slightly too big, one point is not equal to two pixels of retina, but rather the other way around. We'll do all the math, we'll render all the right rasterized bitmaps for all the other scale factors and handle that for you. Freeing the designer to use that 2x grid which can be rather helpful.
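The math that Xcode is described as doing for artwork dropped into the 2x slot can be approximated as follows: the artwork's units are divided by the slot's scale to get the size in points, and each target scale multiplies that back up. The function name and the rounding to whole pixels are assumptions made for this sketch; the talk does not say how fractional results are handled.

```python
def rasterized_pixels(artwork_units, slot_scale, target_scales=(1, 2, 3)):
    """Pixel length of one artwork dimension at each target scale factor.

    artwork_units is the size drawn in the design tool; slot_scale is the scale
    bin the artwork was dropped into (2 for the 2x slot).
    """
    points = artwork_units / slot_scale
    # Assumption: results are rounded to whole pixels.
    return {scale: round(points * scale) for scale in target_scales}

# Assumption: an icon drawn on a 2x grid at 44 units, meant to appear at 22 points.
print(rasterized_pixels(44, slot_scale=2))
# The same 44 units in the 1x slot would instead be treated as 44 points.
print(rasterized_pixels(44, slot_scale=1))
```

Note that an odd unit count on a 2x grid gives a half-point size, so the 1x rendering cannot land on whole pixels; that is one reason hinted bitmaps can still be useful.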

Of course, if the automatic scaling that we do is insufficient or still presents problems in some areas, you can have ultimate control of your results as always by dropping in hinted bitmaps into the appropriate scale factor bins and we'll go ahead and use that and prefer that over the generated PDF rasterizations.

Okay, so that's a bit about the design and production end of things. Now let's talk about cataloging and the organizational aspects once you're in Xcode. For those of you who have played around a little bit with Xcode Asset Catalogs, it can be a bit overwhelming to see how many things there are in front of you, what you're supposed to use, and how many options there are. Well, I'm here to tell you that you really should only use what makes sense for your project and for the content that you're working with. There are a lot of options here, and we've made a very powerful engine and organizational scheme that has lots of capabilities, but you really need to fit it to the need that you have: start simple and then go from there.

So I'd like to talk about two organizational techniques that can help in this area. The first is bundles. Now why would I be talking about bundles in an asset talk? That seems rather incongruous. Well, I'm really trying to address large projects. If you have a large project where there are perhaps multiple frameworks involved, maybe you even work with multiple teams, dealing with assets can sometimes be a pain if you have to pour them all into the main app bundle, manage them all there, make sure names don't conflict, and make sure they're appropriately sourced to the relevant parts of your application. One of the ways you can solve this problem is by building those assets into multiple bundles, because Xcode will always generate a unique Asset Catalog deployment artifact per bundle or target. So for example, consider creating an artwork-only bundle. This can be a good reuse strategy: a single consolidated component that contains all your artwork, that has a consistent namespace, and that can provide images to the other components of your application.

How do you retrieve these? It's simple: you just use the image constructors, like UIImage(named:in:compatibleWith:), that take the bundle argument. On the macOS side, of course, there's the NSBundle category method imageForResource:.

And keep in mind that each of these bundles provides a unique namespace, so within a bundle the names have to be unique. But across bundles you can feel free to use any naming convention you like.

So speaking of namespaces, there's another feature I'd like to call attention to, and another challenge with large projects. In this case the problem I'm addressing is large collections that might have some structure in them. So let's imagine you have an app that deals with 50 different rooms, each one of those rooms has a table and a chair in it, and there are assets for each of those. In your code you'd really like to refer to them as table and chair, that seems like the most natural thing, but unfortunately there are 50 of them, so what are you going to do? One alternative is to just come up with a naming convention of some form and figure out how to demux that in your code, but that's not ideal. One solution that Asset Catalogs can offer is the Provides Namespace option. By checking this box after organizing your artwork into a folder, we will automatically prepend the folder name to each image's record in the Asset Catalog, and you then use that name to retrieve it. This can be a nice way to organize large structured collections of assets.
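The effect of the namespace option on the rooms example can be modeled with a plain dictionary. This is a Python stand-in for the catalog, not the Asset Catalog itself: the folder names, the file names, and the slash used to join folder and image name are assumptions for the sketch.

```python
def catalog_name(folder, image, provides_namespace):
    """Name used to look up an image that lives inside a catalog folder."""
    return f"{folder}/{image}" if provides_namespace else image

def build_catalog(provides_namespace):
    """Register a table and a chair for each of 50 hypothetical rooms."""
    catalog = {}
    for room in range(1, 51):
        folder = f"room{room}"
        for item in ("table", "chair"):
            name = catalog_name(folder, item, provides_namespace)
            # Without namespacing, later rooms overwrite earlier ones here;
            # in a real catalog the duplicate names would simply conflict.
            catalog[name] = f"{folder}-{item}.png"  # hypothetical source file
    return catalog

namespaced = build_catalog(provides_namespace=True)
flat = build_catalog(provides_namespace=False)

print(len(namespaced), len(flat))
print(namespaced["room7/table"])
```

With the folder name prepended, all one hundred images keep the short names table and chair while remaining individually addressable.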

Okay so we talked about cataloging, now let's talk about some exciting stuff around deployment which is really where the exciting stuff starts to kick in. So Will talked about App Thinning, I'd like to give some overall perspective on what we try to do with Asset Catalogs in App Thinning. So overall what you're trying to do is you're providing all the content variants for your application, you're adapting your content to the needs of the various devices your app runs on. The most common technique for doing this is you know split across product family iPad or iPhone, tv or watch or different resolutions, 3x and 2x. You provide all those content variants to effectively adapt your content and then App Thinning is responsible for making sure that we just select the right subset of that content that's appropriate for the device that your customer is running the application on.

Well, I'd like to talk about a different way you can approach the same sort of content adaptation, and that is performance classes. It's a different way of looking at the exact same problem. What if the entire product mix, the way your application saw that continuum, was instead segmented by performance capability, not by other characteristics? Well, this is what you can do with Asset Catalogs. There's such a broad range of hardware capabilities between the supported devices that we have, even if you go back just a few iOS versions. I mean, all the way from, say, an iPhone 5 up to the latest iPhone X, that's a huge range of performance capability. Wouldn't it be nice to take advantage of that and avoid needing to constrain your app to the least capable device that your application needs to support? That's the goal here, to be able to have your cake and eat it too, and you can do that with adaptive resources. I'm going to tell you how now.

So there are two main ways that we divide the performance continuum. The first is memory classes and this is perhaps the most important one. We have four memory tiers, 1 GB through 4 GB and that corresponds to the installed memory on the various devices and again this is across our entire product mix, it doesn't matter what it is, it's in one of these bins.

The second axis of collection is graphics classes. Now these actually correspond to two things. One, they correspond to Metal feature family sets, which if you're a Metal programmer you may be familiar with; this is the GPU family concept. But they also correspond exactly with a particular processor revision in your device. So Metal 1 corresponds to Apple A7, all the way through Metal 4, which is the Apple A11 processor. And we allow you to catalog and route assets to each of these particular graphics classes.

Either one of those can be pretty powerful by itself, but where it gets really interesting is when you combine these two traits to form a full capability matrix, so that you can finely calibrate how you want to adapt your assets to this hardware landscape.

Now how does this work? I'd like to explain this by walking through a simple example, and this is really key: understanding how we do things helps you understand how you might be able to use it. So in this example, we've provided three specialized assets. One is any/any, which is just the backstop for the lower capability devices. And then we provide two optimized assets, one for 3 GB devices with Metal 3 or better and one for 2 GB devices with Metal 4. So let's imagine that I'm selecting the asset from the context or the viewpoint of an iPhone 8 Plus. So I'm 4 GB, Metal 4, that's where I'm starting, and I'm searching and finding nothing in the 4 GB memory tier. So next, I'm going to drop down a memory tier and look for anything that can be found in the 3 GB memory tier.

I do that and I find this asset here, and I'm going to select it. Now what's important here is that I have selected this asset at 3 GB, Metal 3, even though there is an asset that matches my GPU class exactly. But because we scan through things in memory priority order before we look at graphics classes, we're going to select this one first. This is really important: we have decided that memory is the most important way to characterize the overall performance of a device, so we're going to prefer that as we go through the selection matrix.
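To make that walk-through concrete, here is a small model of the memory-first search. It is only a sketch of the behavior described in this example, not the actual implementation used by the system. The type names, the `selectVariant` function, and the tie-breaking rule inside a memory tier (take the highest graphics class the device supports) are all assumptions for illustration.

```swift
import Foundation

// A cataloged variant; nil means "any" for that trait.
struct AssetVariant: Equatable {
    let name: String
    let memoryGB: Int?
    let metalFamily: Int?
}

// The device asking for an asset.
struct Device {
    let memoryGB: Int
    let metalFamily: Int
}

// Memory-first search: walk memory tiers from the device's tier downward and
// only compare graphics classes inside the tier being examined.
func selectVariant(for device: Device, from variants: [AssetVariant]) -> AssetVariant? {
    // Assumed rule: within a tier, take the best graphics class the device supports.
    func best(in candidates: [AssetVariant]) -> AssetVariant? {
        return candidates
            .filter { ($0.metalFamily ?? 0) <= device.metalFamily }
            .max { ($0.metalFamily ?? 0) < ($1.metalFamily ?? 0) }
    }
    for tier in stride(from: device.memoryGB, through: 1, by: -1) {
        if let match = best(in: variants.filter { $0.memoryGB == tier }) {
            return match
        }
    }
    // Nothing matched in a specific memory tier, so fall back to "any" memory.
    return best(in: variants.filter { $0.memoryGB == nil })
}

// The three assets from the example.
let variants = [
    AssetVariant(name: "any/any", memoryGB: nil, metalFamily: nil),
    AssetVariant(name: "3GB/Metal3", memoryGB: 3, metalFamily: 3),
    AssetVariant(name: "2GB/Metal4", memoryGB: 2, metalFamily: 4),
]

// The device from the example: 4 GB, Metal 4.
let chosen = selectVariant(for: Device(memoryGB: 4, metalFamily: 4), from: variants)
print(chosen?.name ?? "none") // 3GB/Metal3
```

Even though the 2 GB, Metal 4 asset matches the GPU class exactly, the search never reaches the 2 GB tier because the 3 GB tier already produced a usable match.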

Okay, that's how it works. How do you think about using it? Memory really represents the overall headroom of your device and is really the best aggregate indicator of capability. So it's a really good choice to use with larger or richer assets: more detailed stuff, things that are bigger on disk, things that are going to take a little more memory when they're expanded in memory for rendering. A richer user experience is usually associated with higher memory.

Now higher graphics is a little more subtle, since that tracks the raw processing capability, both CPU and GPU, of the device, so it's better for more complex assets. Maybe you use a shader that takes advantage of features that are only available on certain GPUs, or you provide an asset that requires a little more processing than others.

I'd like to give two simple examples as food for thought on how this could work. And the way I'm going to give the examples is by talking about NSDataAsset. NSDataAsset is a very simple piece of Asset Catalogs, but it can be very powerful. All it is is a flexible container that you can put in your Asset Catalog, with content variants around arbitrary files. This doesn't have to be an image, it doesn't have to be a very specific format, it can be anything. And you can use this with Asset Catalogs and App Thinning to route arbitrary data to these different performance classes.

So as an example, consider a cut scene video in a game. You might have a nice video that you put in the mid-tier of the performance spectrum, and then you might have a really awesome high resolution video, maybe it's even HDR, who knows, that you put in the really capable quadrants of that capability spectrum. And then on the lower end you put a still image or a very simple image sequence that's not going to take much time or excessive resources on those devices, and still gives the customers running those older devices a nice and responsive user experience.

So that's one example. Another, more intriguing example is a plist. Well, why would I put a plist in an Asset Catalog? It seems like there are much better ways to deploy plists than Asset Catalogs. Well, when you use it in conjunction with NSDataAsset, you could consider using a plist to tune your application with different configuration parameters that scale according to the performance class you cataloged that plist in. So for example, if you have an app that renders a crowd, you could set the size of the crowd based on the capability of the underlying hardware, and your code would automatically be self-tuned based on what device it's actually running on at the moment. So that's an interesting idea about how to use performance classes.
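A minimal sketch of that plist idea might look like the following. It assumes a data asset named `CrowdConfig` whose variants are plists cataloged under different memory and graphics classes; the asset name, the `CrowdConfig` struct, and its fields are all hypothetical. `NSDataAsset(name:)` and `PropertyListDecoder` are the real APIs doing the work.

```swift
import Foundation
#if canImport(UIKit)
import UIKit
#endif

// Hypothetical tuning parameters; the field names are illustrative only.
struct CrowdConfig: Codable, Equatable {
    let crowdSize: Int
    let useHighDetailModels: Bool
}

// Decodes a property list payload into the config struct.
func decodeCrowdConfig(from data: Data) throws -> CrowdConfig {
    return try PropertyListDecoder().decode(CrowdConfig.self, from: data)
}

#if canImport(UIKit)
// Loads whichever plist variant was routed to this device.
// "CrowdConfig" is an assumed data asset name.
func loadCrowdConfig() -> CrowdConfig? {
    guard let asset = NSDataAsset(name: "CrowdConfig") else { return nil }
    return try? decodeCrowdConfig(from: asset.data)
}
#endif

// Round trip with an in-memory plist standing in for the asset's data.
let sample = CrowdConfig(crowdSize: 200, useHighDetailModels: true)
let encoder = PropertyListEncoder()
encoder.outputFormat = .xml
let plistData = try encoder.encode(sample)
let decoded = try decodeCrowdConfig(from: plistData)
print(decoded.crowdSize) // 200
```

The calling code never checks which device it is on; it just reads `crowdSize` from whatever variant the catalog delivered.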

Next, I'd like to talk about Sprite atlases. Sprite atlases were introduced a few years ago in support of SpriteKit applications and SpriteKit games.

But I'm not going to talk about them in the context of SpriteKit based games, I'm going to talk about them in the context of regular applications.

Now they have some attributes that are very similar to what Will talked about with automatic image packing, you're taking all of the related images in that Sprite atlas and packing them into a single unit, they get loaded at once, and then all the images that you reference that are contained within that atlas are just lightweight references to locations within that atlas.

So that's great. But the key thing is that you don't really need to use SpriteKit to access these. You can just use this as a grouping mechanism, because the one difference that Sprite atlases have over automatic image packing is that you get to control the grouping and you get to assign a name to it. So you have a little bit of control and can organize things that way. But you can still access the images contained within using the standard UIImage and NSImage APIs, and the names within the atlas.

In addition, there is an intriguing way that you can use the SpriteKit framework even though you're not building a SpriteKit application, by taking advantage of the SKTextureAtlas preloadTextureAtlasesNamed API, if you have a case where a large number of images have to be loaded fairly quickly and used right away. What this API will do is load from disk, decode, and get things ready and warmed up in memory asynchronously, with a completion handler callback, for a set of named atlases. So this is great, but I will caution you: do not use this API indiscriminately, because it does exactly what it says it's going to do, and that means it's going to potentially consume a large amount of I/O and memory to load all those images. So please be sure that you're about to use them right away and that it's the right choice, otherwise a jetsam awaits you.
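Here is a hedged sketch of what that preload might look like from a regular UIKit app. The atlas name `Onboarding`, the image name `step1`, and the `warmUpOnboardingArtwork` function are hypothetical; `SKTextureAtlas.preloadTextureAtlasesNamed(_:withCompletionHandler:)` and `UIImage(named:)` are the real calls. The hop to the main queue is a defensive assumption, since this sketch does not rely on which queue the completion handler runs on.

```swift
import Foundation
#if canImport(SpriteKit) && canImport(UIKit)
import SpriteKit
import UIKit
#endif

// Hypothetical atlas name; it must match a sprite atlas in the asset catalog.
let onboardingAtlasNames = ["Onboarding"]

#if canImport(SpriteKit) && canImport(UIKit)
// Warms up the atlas, then hands back one of its images on the main queue.
// Call this only when the images are about to be shown.
func warmUpOnboardingArtwork(completion: @escaping (UIImage?) -> Void) {
    SKTextureAtlas.preloadTextureAtlasesNamed(onboardingAtlasNames) { error, _ in
        DispatchQueue.main.async {
            if let error = error {
                print("Atlas preload failed: \(error)")
                completion(nil)
                return
            }
            // Images inside the atlas are still looked up by their own names.
            completion(UIImage(named: "step1"))
        }
    }
}
#endif
```

Keeping the list of atlas names short and specific is one way to respect the caution above about I/O and memory.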

So another powerful thing about Sprite atlases is that any image within the Sprite atlas has all of the regular features of any other normal image set within Xcode Asset Catalogs, including all the cataloging features, all the compression settings, and all the App Thinning features. We will take care to automatically split and collate all of the images that you provide appropriately, splitting them by pixel format, by device traits, and by compression type. And we make sure everything gets baked appropriately and then thinned appropriately, so that the data gets routed to the right device in the right way.

So those are some interesting details about deployment, and we're in the homestretch here. I'd like to remind you of the important things about optimizing app assets. First and foremost, I think Xcode Asset Catalogs are really the best choice for managing the image resources in your application. This year your assets take 10 to 20% less space on disk just by using our new compression algorithms. No matter what deployment target you have, your iOS 12 customers will get those benefits thanks to the improvements in App Thinning, which now optimizes for the latest OS going forward. And we have a bunch of cataloging features that you can use to adapt the resources of your app to the devices your customers use.

For more information please look at this link, and I hope you all had a nice day. Thank you. [ Applause ]

-