Dive into RealityKit 2

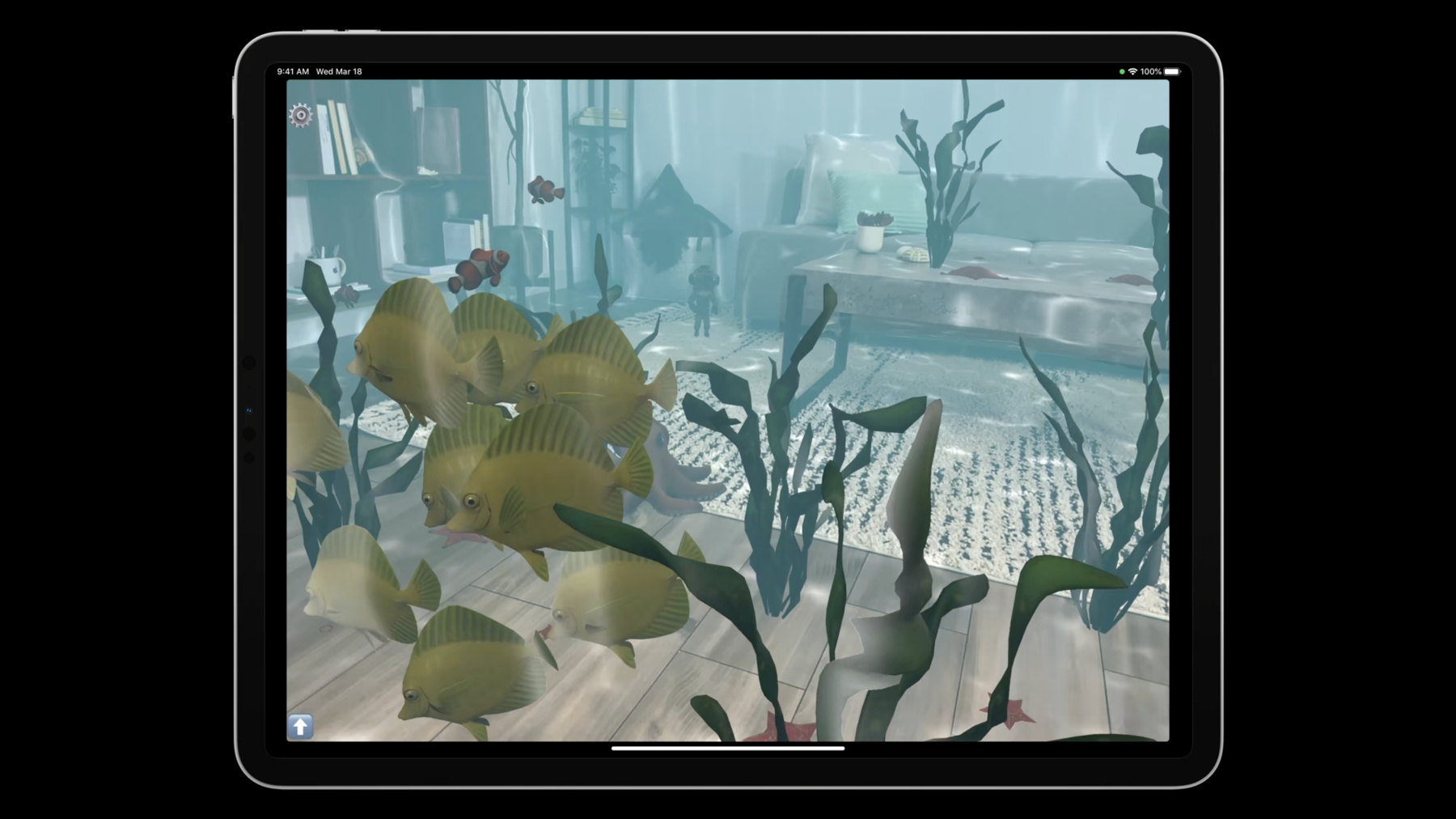

Creating engaging AR experiences has never been easier with RealityKit 2. Explore the latest enhancements to the RealityKit framework and take a deep dive into this underwater sample project. We'll take you through the improved Entity Component System, streamlined animation pipeline, and the plug-and-play character controller with enhancements to face mesh and audio.

Resources

- Creating an App for Face-Painting in AR

- Building an immersive experience with RealityKit

- Applying realistic material and lighting effects to entities

- PhysicallyBasedMaterial

- Explore the RealityKit Developer Forums

- Creating a fog effect using scene depth

- RealityKit

♪ ♪ Hi there. I’m Amanda, and I’ll be joined in a bit by my colleague, Olivier. In this talk, we’ll explore the features that we’ve added to RealityKit in 2021.

RealityKit is an augmented reality authoring framework introduced in 2019 focused on realistic rendering and making it easy to create AR apps. Leveraging ARKit to read the device’s sensor data, RealityKit allows you to place 3D content in the real-world environment and make that content look as realistic as possible.

Here are some great examples of RealityKit experiences in action. Go on a scavenger hunt in the real world, bowl virtually against your friends, even become a sculpture in a museum, and find some colorful bugs. Over the past couple of years, we’ve seen some amazing apps created with RealityKit and received really good feedback to make this framework even better.

And we’ve listened to your feedback. We are happy to share that RealityKit 2 introduces a bunch of new features to help you make even more immersive AR apps and games.

In this session, we’ll be highlighting some of them, including our most-requested features, like custom shaders and materials, custom systems, and our new character controller concept. So put on your snorkel mask and let’s dive in.

When I was growing up in the Middle East, I learned to scuba dive in the Gulf. Although I didn’t get to wear one of these super cute steampunk helmets, I loved seeing all the colorful fish schooling. I thought it might be fun to re-create that underwater vibe right here in my living room. Olivier and I wrote this demo using a bunch of the features that we’ll be showing you in this session and in our second RealityKit session later this week. We’ve got post processing to create the depth fog effect and the water caustics, a custom geometry modifier to make the seaweed dance in the waves, and a bunch more. Basically, RealityKit 2 lets you customize so many things now. This sample code is available for you to try out at developer.apple.com.

There are five main topics we’ll cover today. We’ll do a recap of what an ECS is and how we used our new custom systems feature to implement the fishes’ flocking behavior in our app. We’ll show you advancements in what you can do with materials as well as animations, the new character controller, which is how we got the diver to interact so seamlessly with the AR mesh of the living room, and how you can now generate resources at runtime.

So let’s start with the ECS. ECS, short for entity component system, is a way of structuring data and behavior, and it’s commonly used in games and simulations. It’s different from object-oriented programming in which you tend to model an item as an encapsulated bundle of both its functionality and the state associated with that item. But with ECS, you have three prongs: entity, component, and system, where the functionality goes in the system, the state goes in the components, and the entity is an identifier for a group of components. This year, with RealityKit 2, we’re moving towards a more pure ECS implementation, guiding you to keep more of your functionality in the system layer with our new custom systems.

What does entity mean to us? An entity represents one thing in your scene. Here are entities that represent the sea creatures in our scene. An entity can have child entities, giving you a graph structure to work with. For example, the transform component uses the parent entity’s transform to add its own position onto. An entity itself doesn’t render anything on screen. For that, you need to give it a model component or create a model entity, which’ll do that for you.

To add attributes, properties, and behaviors, you add components to your entity. Speaking of which, let’s talk about components.

Components are for storing state between frames and for marking an entity’s participation in a system. You don’t need to include any logic for dealing with that state here, though. Your logic and behavior go in your custom system.

There are some components that will be already present on any entity you create. Not shown here are the built-in components: the transform and synchronization components. They’re on all three of these entities. There are others that you’ll often want to add, like the model component, which contains the mesh and materials that make your entity show up on screen.

You can add and remove components from your entities at runtime too, if you want to dynamically change their behavior.
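As a minimal sketch of changing an entity's behavior at runtime, the snippet below swaps one tag component for another. The component names echo the talk, but their definitions here are assumptions made for illustration.

```swift
import RealityKit

// Hypothetical tag components; the talk names them but does not show their definitions.
struct AlgaeEaterComponent: Component {}
struct PlanktonEaterComponent: Component {}

// Custom components are registered once before use.
AlgaeEaterComponent.registerComponent()
PlanktonEaterComponent.registerComponent()

let fish = Entity()

// Mark the fish as an algae eater.
fish.components.set(AlgaeEaterComponent())

// Later, change its diet: remove one component and add another.
fish.components.remove(AlgaeEaterComponent.self)
fish.components.set(PlanktonEaterComponent())

let eatsAlgae = fish.components.has(AlgaeEaterComponent.self)
let eatsPlankton = fish.components.has(PlanktonEaterComponent.self)
```

Any system whose query matches PlanktonEaterComponent would pick this fish up on its next update, with no change to the entity's type.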

We’ll mark this first fish as participating in the Flocking System, and we’ll tell it that it likes to eat algae. This second fish, it’s also going to flock with the first fish, but it prefers to eat plankton right now. This third guy is a plankton. It will be food for the second fish. It should watch its back, because in our app, we’ve got some hungry creatures.

We know which ones are hungry because they have the AlgaeEater or the PlanktonEater components on them. Every frame, our Eating System has its update function called. In here, it finds all the entities in the scene that have either of these components, plus all the entities that are food, so it can guide the hungry fish toward the food they prefer to eat. But what’s a performant way for the Eating System to figure out which entities are hungry, which ones are the food, and which ones are neither? We don’t want to have to traverse our entity graph and check each one’s components. Instead, we perform an entity query. Let RealityKit do the bookkeeping for you. The Flocking System wants to find all entities that have a FlockingComponent on them. While the Eating System wants both kinds of hungry entities, plus the entity that’s a type of food. So let’s take a closer look at what exactly is happening when a system uses an entity query. Systems have their update functions called every frame.

Let’s look at the Flocking System for our Yellow Tang fish. We’ll pause at this frame to see what’s going on. In the Flocking System’s update function, we query for all entities in the scene that have both the FlockingComponent and the MotionComponent on them. Lots of things have the MotionComponent, but we don’t want all of them, we just want our flock. Our query returns our flocking fish, so now we can drive our custom game physics by applying the classic Boids simulation to each fish in the flock.

We add forces on each fish’s MotionComponent, where we keep our state between frames, forces for sticking together, for preferring to stay a certain distance apart, and for trying to point their noses in the same direction.
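The three forces described above can be sketched without any framework code. This is an illustrative version of the classic Boids rules, not the sample project's implementation; `Boid`, `BoidForces`, `boidForces(forBoidAt:in:desiredSeparation:)`, and the default separation distance are all hypothetical.

```swift
// A framework-free sketch of the three classic Boids forces.
struct Boid {
    var position: SIMD3<Float>
    var velocity: SIMD3<Float>
}

struct BoidForces {
    var cohesion = SIMD3<Float>.zero
    var separation = SIMD3<Float>.zero
    var alignment = SIMD3<Float>.zero
}

func boidForces(forBoidAt index: Int, in flock: [Boid], desiredSeparation: Float = 0.5) -> BoidForces {
    let me = flock[index]
    let others = flock.indices.filter { $0 != index }.map { flock[$0] }
    guard !others.isEmpty else { return BoidForces() }
    let count = Float(others.count)
    var forces = BoidForces()

    // Cohesion: steer toward the average position of the other fish.
    let positionSum = others.reduce(SIMD3<Float>.zero) { $0 + $1.position }
    forces.cohesion = positionSum / count - me.position

    // Separation: push away from any neighbour closer than the desired distance.
    for other in others {
        let offset = me.position - other.position
        let distance = (offset * offset).sum().squareRoot()
        if distance > 0 && distance < desiredSeparation {
            forces.separation += (offset / distance) * (desiredSeparation - distance)
        }
    }

    // Alignment: steer toward the average velocity of the other fish.
    let velocitySum = others.reduce(SIMD3<Float>.zero) { $0 + $1.velocity }
    forces.alignment = velocitySum / count - me.velocity

    return forces
}
```

In a system like the one in the talk, each of these vectors would presumably be scaled by a tunable weight and appended to the fish's MotionComponent.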

When the Motion System runs, in the same frame but after the Flocking System runs, it rolls up all these forces to decide the fish’s new acceleration, velocity, and position. It doesn’t care which other system added them. There are others, like the Eating System and the Fear System that also operate on the MotionComponent to push the fish in various directions.
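A rough sketch of that roll-up step follows, assuming a simple semi-implicit Euler integration. The talk does not show the Motion System's code, so `MotionState`, `integrate`, the `mass` parameter, and the top-speed cap are assumptions.

```swift
// Framework-free sketch of a motion step: sum forces, then update velocity and position.
struct MotionState {
    var forces: [SIMD3<Float>] = []
    var velocity = SIMD3<Float>.zero
    var position = SIMD3<Float>.zero
}

func integrate(_ motion: inout MotionState, deltaTime: Float, mass: Float = 1, topSpeed: Float = 1) {
    // The integrator does not care which system contributed each force.
    let totalForce = motion.forces.reduce(SIMD3<Float>.zero) { $0 + $1 }
    let acceleration = totalForce / mass

    // Update velocity first, then position (semi-implicit Euler).
    motion.velocity += acceleration * deltaTime

    // Cap the speed so accumulated forces cannot accelerate the fish without bound.
    let speed = (motion.velocity * motion.velocity).sum().squareRoot()
    if speed > topSpeed {
        motion.velocity *= topSpeed / speed
    }

    motion.position += motion.velocity * deltaTime

    // Forces are per-frame inputs, so clear them for the next frame.
    motion.forces.removeAll(keepingCapacity: true)
}
```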

So let’s see the code.

Here’s an outline of our Flocking System. It’s a class that conforms to the RealityKit.System protocol. When you register your custom system at app launch time, you’re telling the engine that you want it to instantiate one of this type per scene in your app. The init is required, and you can also provide a deinit. We can specify dependencies. This system should always run before the MotionSystem, which is why we’ve used the enumeration value .before here. In our update function, we’ll be altering the state stored in the MotionComponent, and the MotionSystem will be acting upon the state we provide, so we need to make sure the FlockingSystem runs before the MotionSystem, sort of like a producer-consumer relationship. You can also use the .after option. If you don’t specify dependencies, your systems’ update functions will be executed in the order in which you registered them.
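Registration at launch might look roughly like the sketch below. The component and system bodies are placeholders, and `registerGameTypes()` is a hypothetical helper; only the `registerComponent()` and `registerSystem()` calls and the dependency declaration are the point here.

```swift
import RealityKit

// Placeholder definitions so the sketch is self-contained.
struct FlockingComponent: Component {}
struct MotionComponent: Component {
    var forces: [SIMD3<Float>] = []
}

class MotionSystem: RealityKit.System {
    required init(scene: RealityKit.Scene) {}
    func update(context: SceneUpdateContext) { /* integrate forces here */ }
}

class FlockingSystem: RealityKit.System {
    // Producer runs before its consumer.
    static var dependencies: [SystemDependency] { [.before(MotionSystem.self)] }
    required init(scene: RealityKit.Scene) {}
    func update(context: SceneUpdateContext) { /* add flocking forces here */ }
}

// Hypothetical helper, called once at app launch before any scene is created.
func registerGameTypes() {
    FlockingComponent.registerComponent()
    MotionComponent.registerComponent()
    MotionSystem.registerSystem()
    FlockingSystem.registerSystem()
}
```

Because the dependency is declared on FlockingSystem, the order of the two `registerSystem()` calls should not matter for these two systems.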

Our EntityQuery says that we want all entities that have the Flocking and Motion Components. It’s a static let because it’s not going to change for the duration of our simulation.

In a multiplayer AR experience, components that conform to Codable are automatically synced over the network. However, data in systems is not automatically synced over the network. Data should generally be stored in components. Now let’s dive into our FlockingSystem’s update function.

It takes a SceneUpdateContext, which has in it the deltaTime for that frame and a reference to the scene itself. First, we perform our EntityQuery on the scene, which returns a query result that we can iterate over for the entities that have the FlockingComponent on them.

We get each one’s MotionComponent, which we’re going to be modifying. Why are we not getting the FlockingComponent itself? Because it doesn’t have any data associated with it. We use that one like a tag to signify membership in the flock. Then we run our standard Boids simulation on them to guide the flock, modifying the collection of forces in the MotionComponent. At the end, since we’ve added forces to each fish to push it in the desired direction, and because components are Swift structs which are value types, we need to store our MotionComponent back onto the entity it came from.
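The write-back step is a consequence of Swift value semantics, and it can be shown without RealityKit. In this sketch a dictionary stands in for an entity's component set; `ForceList` and `store` are hypothetical names.

```swift
// A struct stands in for a component; a dictionary stands in for the component set.
struct ForceList {
    var forces: [SIMD3<Float>] = []
}

var store: [String: ForceList] = ["fish": ForceList()]
var countBeforeWriteBack = -1
var countAfterWriteBack = -1

// Reading a struct out of storage yields an independent copy.
if var motion = store["fish"] {
    motion.forces.append([0, 0, 1])

    // The stored value has not changed yet.
    countBeforeWriteBack = store["fish"]?.forces.count ?? -1

    // Only assigning the copy back makes the change visible to other readers.
    store["fish"] = motion
    countAfterWriteBack = store["fish"]?.forces.count ?? -1
}
```

Forgetting the final assignment is an easy mistake to make: the code compiles and runs, but the forces never reach the next system.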

Systems don’t have to implement a custom update function. It can also be useful to create a system which only provides an init, like to register event handlers for scene events.

So far, we’ve been looking at the relationships between entities, components, and custom systems.

Now let’s zoom out for a second and talk about some high-level architecture changes that we’ve brought in RealityKit 2. Before, you would subscribe to the SceneEvents.Update event using a closure that would be called every frame. These kinds of event handlers would often live, or at least be registered, in your Game Manager-like classes. Instead of closures like that, you can now have your update logic cleanly separated and formally ordered in separate system update functions.

So that means your Game Managers can play less of a role. Instead of doing all your registrations for event updates there and then managing the order in which you call update on all the things in your game, now the Game Managers only have to add components to the entities to signify to your systems that those entities should be included in their queries.

Previously, you would declare protocol conformances on your entity subclasses to express that that entity type has certain components. Now you don’t need to subclass entity anymore, since it, too, can play less of a role.

It can be merely an identifier for an object, and its attributes can be modeled as components. Because when you don’t subclass entity, you don’t tie your object to forever keeping those components. You’re free to add and remove components during the experience. So with RealityKit 2, your custom components are a lot more useful because you have custom systems.

But you can still do it either way. That’s the beauty of game development. The world is your oyster. In our underwater demo, we’re using both methods.

We’ve also added a new type of component: TransientComponent. Say, for example, your fish were afraid of the octopus, but only if they had ever looked at it. When you clone up a new fish entity, you might not want the clone to inherit that fish’s fear of the octopus. You could make your FearComponent conform to TransientComponent. That way, it wouldn’t be present on the new entity.
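A minimal sketch of that idea: the FearComponent below conforms to TransientComponent, so a clone of the fish is expected to keep its flocking tag but not its fear. The stored property and the component bodies are assumptions for illustration.

```swift
import RealityKit

// Regular component: copied when the entity is cloned.
struct FlockingComponent: Component {}

// Transient component: expected to be left off cloned entities.
struct FearComponent: TransientComponent {
    var fearedCreature: String  // hypothetical payload
}

FlockingComponent.registerComponent()
FearComponent.registerComponent()

let fish = Entity()
fish.components.set(FlockingComponent())
fish.components.set(FearComponent(fearedCreature: "octopus"))

// The clone starts without the original's learned fear.
let youngFish = fish.clone(recursive: true)
let cloneFlocks = youngFish.components.has(FlockingComponent.self)
let cloneIsAfraid = youngFish.components.has(FearComponent.self)
```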

The TransientComponent is still included in a network sync, though, if it conforms to Codable, like any other kind of component that does so.

Another addition is our new extension on Cancellable. You don’t need to manually manage unsubscribing from events for an entity anymore. We’ll do it for you when you use storeWhileEntityActive.

Here, we’re handling collision events for a fish entity. We don’t need this subscription to outlive the fish itself, so we use storeWhileEntityActive.

As always, when building a game, there’s a whole bunch of settings that you want to tweak on the fly without having to recompile.

In our game, we built a Settings view in SwiftUI, and we pass its backing model down into our various CustomSystems by way of wrapping them in CustomComponents.

We create our Settings instance as a @StateObject and pass it into both our ARViewContainer and our SwiftUI view as an environmentObject.

We wrap the Settings object in a CustomComponent, a SettingsComponent. Then when we create our fish entity, we give it a SettingsComponent. That way, when any CustomSystem comes along that wants those settings, it can read them from there, like take the “top speed” value and use it to cap each fish’s velocity. And now I’ll hand it over to my colleague, Olivier, to tell you about materials.

Thanks, Amanda. This year, we added new APIs for materials. We already had a few types, such as SimpleMaterial, with a baseColor, roughness, and metallic property. We also had UnlitMaterial, with just a color and no lighting. We had OcclusionMaterials, which can be used as a mask to hide virtual objects. And last year, we introduced VideoMaterials, which are UnlitMaterials using a video as their color. Note that this year we added support for transparency. If the video file contains transparency, it will be used to render the object. This year, we added new APIs that give you a more advanced control over materials, starting with the type PhysicallyBasedMaterial, which is very similar to the schema for materials in USD. It is a superset of SimpleMaterial and has most of the standard PBR properties that you can find in other renderers.

This is the material that you will find on entities that have been loaded from a USD. You can, for example, load the USD of the clown fish and then modify individual properties on its materials to make it gold or purple.

Among the properties of the material, you can, for example, change the normal map to add small details that are not part of the mesh. You can also assign a texture defining the transparency of the model. By default, the transparency is using alpha blending, but if you also assign an opacityThreshold, all the fragments below that threshold will be discarded.

You can set the texture for ambient occlusion, defining vague shadows in the model. And an example of a more advanced property is clearcoat, which will simulate an additional layer of reflective paint on the material. And there are many other properties available on the type PhysicallyBasedMaterial.
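As a sketch of modifying individual properties on a loaded model, the function below walks an entity's materials and adjusts only those that are PhysicallyBasedMaterial. The function name and the specific values are assumptions; the talk does not show this code.

```swift
import RealityKit

// Hypothetical helper: retint every physically based material on a model entity.
func makeShinyPurple(_ entity: ModelEntity) {
    guard var model = entity.model else { return }

    model.materials = model.materials.map { existing -> RealityKit.Material in
        // Leave any non-PBR materials untouched.
        guard var pbr = existing as? PhysicallyBasedMaterial else { return existing }

        pbr.baseColor.tint = .purple
        pbr.metallic = 1.0
        pbr.roughness = 0.2
        // Extra reflective layer on top of the base material.
        pbr.clearcoat = .init(floatLiteral: 1.0)
        // Fragments whose opacity falls below this value are discarded.
        pbr.opacityThreshold = 0.5
        return pbr
    }

    // ModelComponent is a value type, so store the modified copy back.
    entity.model = model
}
```

A typical call site would load the clown fish USD as a ModelEntity and pass it to this function before adding it to the scene.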

We also added a new type called CustomMaterial to make materials using your own Metal code. This is what we used to make the color transition effect on this octopus model. We will explain this shader and custom materials in the second talk about rendering.

In addition to materials, we also added more control over animations in RealityKit.

First, let’s go over the existing API for animations, which are mostly about playback of animations loaded from a USD. If you load an animation from a USD, you can play it once.

You can also repeat it so that it loops infinitely, which is what we want for the Idle animation of our diver here. You can also pause, resume, and stop an animation.

Finally, when playing a new animation, you can specify a transition duration. If you don’t specify one, the character will instantly switch to the new animation. If you do specify a transition duration, RealityKit will blend between the old and new animations during that time. This is useful, for example, when transitioning between the Walk and Idle cycles of the diver.
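The playback calls described so far can be sketched as follows. `playIdleLoop(on:)` is a hypothetical helper, and it assumes the first available animation on the entity is the idle clip.

```swift
import RealityKit

// Hypothetical helper: loop the entity's first animation with a short cross-fade.
func playIdleLoop(on diver: Entity) -> AnimationPlaybackController? {
    // Assumption: the idle clip is the first animation loaded from the USD.
    guard let idle = diver.availableAnimations.first else { return nil }

    // repeat() loops indefinitely; the transition duration blends from
    // whatever was playing before over 0.3 seconds.
    return diver.playAnimation(idle.repeat(), transitionDuration: 0.3, startsPaused: false)
}

// The returned controller can later be used to control playback:
//   controller.pause()
//   controller.resume()
//   controller.stop()
```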

But we can still improve the animation of the feet there.

We can use the new API for blend layers to make the animations more realistic. We play the Walking animation and the Idle animation on two separate blend layers, and since we played the Walking animation on the top layer, that’s the only animation that we currently see. But we can change the blend factor of the Walking animation to reveal the Idle animation underneath. Notice how, as the blend factor gets smaller, the footsteps also get smaller.

And we can also change the playback speed of the animations to make the diver walk faster or slower. Here, the diver is walking at half speed.

Finally, we use the speed of the character relative to the ground to control both of these values. This way, we can make the animations smoother and reduce the sliding of the feet compared to the ground.
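One possible mapping from ground speed to those two values is sketched below. The talk does not give the formula, so this is an assumption: below the speed the walk cycle was authored for, the walk is faded toward idle; above it, playback is sped up instead. `WalkBlend`, `walkBlend`, and `authoredWalkSpeed` are hypothetical names.

```swift
// Framework-free sketch: derive blend factor and playback speed from ground speed.
struct WalkBlend: Equatable {
    var blendFactor: Float    // 0 shows only idle, 1 shows only the walk cycle
    var playbackSpeed: Float  // 1 is the authored speed of the walk cycle
}

func walkBlend(groundSpeed: Float, authoredWalkSpeed: Float = 1.0) -> WalkBlend {
    precondition(authoredWalkSpeed > 0, "authoredWalkSpeed must be positive")

    // How fast the character moves relative to the speed the clip was authored for.
    let ratio = max(0, groundSpeed) / authoredWalkSpeed

    // Slower than authored: shrink the steps by fading toward idle.
    let blendFactor = min(ratio, 1)

    // Faster than authored: keep full steps and play them faster.
    let playbackSpeed = max(ratio, 1)

    return WalkBlend(blendFactor: blendFactor, playbackSpeed: playbackSpeed)
}
```

The resulting values would then be applied to the playback controller of the walking animation each frame.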

So far, we have been using multiple animation clips, such as the Idle and Walk cycles. These are stored as AnimationResources in RealityKit. And there are multiple ways to load them from USD files. The first way is to have one USD file per clip. We can load each USD as an entity and get its animations as AnimationResources. The AnimationResource can then be played on any entity, as long as the names of the joints in its skeleton match the animation.

Another way to load multiple animation clips is to have them in a single USD on the same timeline and then use AnimationViews to slice this timeline into multiple clips.

This requires knowing the timecodes between each clip.

Each AnimationView can then be converted to an AnimationResource and used exactly the same way as the previous method. Let’s now go over the animations of the octopus in the app. The octopus is hiding, but when the player gets near, it will be scared and move to a new hiding spot. Let’s see how to animate it. We start with loading the skeletal animations of the octopus: jumping, swimming, and landing. These animations are loaded from a USD, just like what we did for the diver. But we also want to animate the transform of the octopus to move it from one location to the next. To animate the transform, we use a new API to programmatically create an animation of type FromToByAnimation.

This way, we can animate the position. Let’s see what it looks like on the octopus.

To make it more interesting, let’s also animate the rotation.

The octopus now rotates while it moves, but it’s swimming sideways, which is not very realistic. We can improve this by making a sequence of animations. First, we rotate the octopus towards the new location. Then we translate it to the new location. And finally, we rotate the octopus back towards the camera. And here is the full animation.
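The rotate, translate, rotate-back sequence could be assembled roughly like this. The function name, the durations, and the assumption that the starting orientation already faces the camera are all illustrative; the parameter list passed to FromToByAnimation is a subset of its initializer as I understand it and is worth checking against the documentation.

```swift
import RealityKit
import simd

// Hypothetical helper: build a three-step transform animation for the octopus.
func makeEscapeAnimation(start: Transform,
                         destination: SIMD3<Float>,
                         facingDestination: simd_quatf) throws -> AnimationResource {
    // Step 1 target: same place, turned toward the new hiding spot.
    var turned = start
    turned.rotation = facingDestination

    // Step 2 target: at the new hiding spot, still facing the travel direction.
    var arrived = turned
    arrived.translation = destination

    // Step 3 target: back to the starting orientation (assumed to face the camera).
    var settled = arrived
    settled.rotation = start.rotation

    let rotate = FromToByAnimation<Transform>(from: start, to: turned,
                                              duration: 0.5, bindTarget: .transform)
    let translate = FromToByAnimation<Transform>(from: turned, to: arrived,
                                                 duration: 2.0, bindTarget: .transform)
    let rotateBack = FromToByAnimation<Transform>(from: arrived, to: settled,
                                                  duration: 0.5, bindTarget: .transform)

    // Convert each definition to a resource, then chain them end to end.
    let clips = try [rotate, translate, rotateBack].map {
        try AnimationResource.generate(with: $0)
    }
    return try AnimationResource.sequence(with: clips)
}
```

The returned resource can be played with playAnimation like any clip loaded from a USD.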

In addition to new APIs for animations, we also added a way to manage the physics of characters. It’s called Character controller. This allows us to create characters that can physically interact with the colliders in the scene. Here, we see the diver jumping from the floor to the couch and walking on it. This is achieved by adding a character controller to the diver. With that, the diver will automatically interact with the mesh of the environment, generated from the LiDAR sensor.
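A rough sketch of setting up and driving a character controller is shown below. The capsule dimensions and helper names are assumptions. The per-frame call is written as `moveCharacter(by:deltaTime:relativeTo:)` and the obstacle-ignoring call as `teleportCharacter(to:relativeTo:)`, which is my understanding of the shipped API names; treat both as something to confirm in the documentation.

```swift
import RealityKit

// Hypothetical helper: give the diver a capsule roughly matching its shape.
func addCharacterController(to diver: Entity, height: Float = 1.0, radius: Float = 0.2) {
    diver.components.set(CharacterControllerComponent(radius: radius, height: height))
}

// Hypothetical helper, called once per frame: walk toward a target, respecting colliders.
func stepCharacter(_ diver: Entity, toward target: SIMD3<Float>, speed: Float, deltaTime: Float) {
    let offset = target - diver.position(relativeTo: nil)
    let distance = (offset * offset).sum().squareRoot()
    guard distance > 0 else { return }

    // Never overshoot the target within a single frame.
    let stepLength = min(distance, speed * deltaTime)
    diver.moveCharacter(by: offset / distance * stepLength,
                        deltaTime: deltaTime,
                        relativeTo: nil)
}

// Hypothetical helper: jump straight to a position, ignoring obstacles on the way.
func teleport(_ diver: Entity, to target: SIMD3<Float>) {
    diver.teleportCharacter(to: target, relativeTo: nil)
}
```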

Creating a character controller is simple. All you need to do is to define a capsule that matches the shape of your character. On creation, you have to specify the height of the capsule and its radius. After the character controller has been assigned to the entity, you can call the move(to:) function every frame. It will make the character move to the desired location, but without going through obstacles. On the other hand, if you do want to ignore obstacles, you can use the teleport function. Now I will hand it off to Amanda, who will take you through a few more fun features that we’ve added to this release of RealityKit.

Great. Thanks, Olivier. OK, so I’ll highlight two new APIs that are available for creating resources on the fly without having to load them from disk.

First I’ll show you how you can get the mesh of a person’s face from SceneUnderstanding, and then I’ll take you through how to generate audio. There’s a sea of possibilities that these open up for procedurally generated art.

First up, the face mesh. I was so inspired by the look of the purple and orange octopus we have in our demo app, I tried my hand at painting one on my face, but virtually, using the new face mesh capability. SceneUnderstanding can now give you entities that represent people’s faces, and those entities have ModelComponents on them, which means you can swap out the properties of the materials on the face entity’s mesh. We had a lot of fun generating the textures to apply to the face mesh on the fly with our live drawings.

Let’s look at the code. The SceneUnderstandingComponent now has an enum property called entityType, which is set by the SceneUnderstandingSystem and can take one of two values: face, meaning it’s the representation of a real-world person’s face, or meshChunk, meaning it’s some other part of the reconstructed world mesh. It can also be nil, meaning its type is not yet known.

Here’s an EntityQuery again. You can query for entities that have the SceneUnderstandingComponent and check their entityType to find faces. Then, you can get the ModelComponents from those entities and do whatever you want with them.

In our face painting sample, we’re using PencilKit to let people draw on the canvas and then wrapping the resulting CGImage onto the faceEntity by creating a TextureResource from that CGImage. We’re using a PhysicallyBasedMaterial so that we can make this face paint look as realistic as possible, and we’re setting a few of the properties on it to dial it in for our look. To make the glitter paint effect, we use a normal map texture which tells the physically based renderer how it should reflect the light differently on this surface than it would if we had simply left the material metallic. We then give the pencil-drawn TextureResource to the material and set it on the entity.

So that’s one way to work with our new generated resources. Another type of resource you can now generate is the AudioBufferResource. You can get an AVAudioBuffer however you like: by recording microphone input, procedurally generating it yourself, or using an AVSpeechSynthesizer. Then you can use the AVAudioBuffer to create an AudioBufferResource and use that to play sounds in your app.

Here’s how we turn text into speech by writing an AVSpeechUtterance to an AVSpeechSynthesizer. We receive an AVAudioBuffer in a callback. Here, we’re creating an AudioBufferResource and setting its inputMode to .spatial to make use of 3D positional audio.

The other available inputModes are nonSpatial and ambient. Then we tell an entity to play that audio. You could, of course, process the audio buffer with fancy tricks to make it sound like your fish are blowing bubbles as they talk under water or whatever fun things you can come up with.

So this was an overview of some of the new features in RealityKit this year. We’ve really focused on giving you more control over the appearance and behaviors of your scenes. We’ve modified our ECS to provide you with custom systems, which gives you way more flexibility in structuring your app’s behaviors. We’ve added lots of advancements to our materials and animation APIs. We’ve introduced the character controller to make it easy for your entities to interact with the real-world environment. Finally, we’ve highlighted a few of the ways in which you can generate resources on the fly. But that’s definitely not an exhaustive list of everything that’s new in RealityKit 2. In our second RealityKit session later this week, you can learn more about the new rendering capabilities and see how we implemented some of the things in our underwater demo. A geometry modifier is what we use to animate our seaweed. The octopus uses surface shaders to transition beautifully between its colors. The blue depth fog effect as well as the water caustics were created using post processing. And in the theme of generative resources, you’ll learn how to use dynamic meshes. For a refresher, you might also want to check out the session “Building Apps with RealityKit” from 2019.

Thank you, and we look forward to seeing the depths of your creativity with these APIs.

[upbeat music]

7:10 - FlockingSystem

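```swift
import RealityKit

class FlockingSystem: RealityKit.System {

    required init(scene: RealityKit.Scene) { }

    // Run before the MotionSystem, which consumes the forces this system adds.
    static var dependencies: [SystemDependency] { [.before(MotionSystem.self)] }

    // Matches every entity that carries all three components.
    private static let query = EntityQuery(where: .has(FlockingComponent.self)
                                                && .has(MotionComponent.self)
                                                && .has(SettingsComponent.self))

    // update(context:) is shown in the next snippet.
}
```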

8:34 - FlockingSystem.update

```swift
func update(context: SceneUpdateContext) {
    // Iterate over every entity matched by the query.
    for entity in context.scene.performQuery(Self.query) {
        guard var motion: MotionComponent = entity.components[MotionComponent.self] else { continue }

        // ... Using a Boids simulation, add forces to the MotionComponent
        motion.forces.append(/* separation, cohesion, alignment forces */)

        // Components are value types, so store the modified copy back on the entity.
        entity.components[MotionComponent.self] = motion
    }
}
```

11:58 - Store Subscription While Entity Active

```swift
arView.scene.subscribe(to: CollisionEvents.Began.self, on: fish) { [weak self] event in
    // ... handle collisions with this particular fish
}.storeWhileEntityActive(fish)
```

12:36 - SwiftUI + RealityKit Settings Instance

```swift
class Settings: ObservableObject {
    @Published var separationWeight: Float = 1.6
    // ...
}

struct ContentView : View {
    @StateObject var settings = Settings()

    var body: some View {
        ZStack {
            ARViewContainer(settings: settings)
            MovementSettingsView()
                .environmentObject(settings)
        }
    }
}

struct SettingsComponent: RealityKit.Component {
    var settings: Settings
}

class UnderwaterView: ARView {
    let settings: Settings

    private func addEntity(_ entity: Entity) {
        entity.components[SettingsComponent.self] = SettingsComponent(settings: self.settings)
    }
}
```

21:26 - FaceMesh

```swift
static let sceneUnderstandingQuery =
    EntityQuery(where: .has(SceneUnderstandingComponent.self) && .has(ModelComponent.self))

func findFaceEntity(scene: RealityKit.Scene) -> HasModel? {
    let faceEntity = scene.performQuery(sceneUnderstandingQuery).first {
        $0.components[SceneUnderstandingComponent.self]?.entityType == .face
    }
    return faceEntity as? HasModel
}
```

22:03 - FaceMesh - Painting material

```swift
func updateFaceEntityTextureUsing(cgImage: CGImage) {
    guard let faceEntity = self.faceEntity else { return }

    guard let faceTexture = try? TextureResource.generate(from: cgImage,
                                                          options: .init(semantic: .color)) else { return }

    var faceMaterial = PhysicallyBasedMaterial()
    faceMaterial.roughness = 0.1
    faceMaterial.metallic = 1.0
    faceMaterial.blending = .transparent(opacity: .init(scale: 1.0))

    let sparklyNormalMap = try! TextureResource.load(named: "sparkly")
    faceMaterial.normal.texture = PhysicallyBasedMaterial.Texture.init(sparklyNormalMap)
    faceMaterial.baseColor.texture = PhysicallyBasedMaterial.Texture.init(faceTexture)

    faceEntity.model!.materials = [faceMaterial]
}
```

23:09 - AudioBufferResource

```swift
let synthesizer = AVSpeechSynthesizer()

func speakText(_ text: String, forEntity entity: Entity) {
    let utterance = AVSpeechUtterance(string: text)
    utterance.voice = AVSpeechSynthesisVoice(language: "en-IE")

    synthesizer.write(utterance) { audioBuffer in
        guard let audioResource = try? AudioBufferResource(buffer: audioBuffer,
                                                           inputMode: .spatial,
                                                           shouldLoop: true) else { return }
        entity.playAudio(audioResource)
    }
}
```
