
Explore HDR rendering with EDR

EDR is Apple's High Dynamic Range representation and rendering pipeline. Explore how you can render HDR content using EDR in your app and unleash the dynamic range capabilities of your HDR display including Apple's internal displays and Pro Display XDR.

We'll show you how game and pro app developers can take advantage of the native EDR APIs on macOS for even more control, and provide best practices for deciding when HDR is appropriate, applying tone-mapping, and delivering HDR content.

Resources

- Processing HDR images with Metal

- Edit and play back HDR video with AVFoundation

- Export HDR media in your app using AVFoundation

- Editing and playing HDR video

- Metal

- Metal Shading Language Specification

Related Videos

WWDC23

Tech Talks

- Explore reference mode

- Discover Metal enhancements for A14 Bionic

- Support Apple Pro Display XDR in your apps

- Metal Enhancements for A13 Bionic

- An Introduction to HDR Video

- Authoring 4K and HDR HLS Streams

- Updating Your App for Apple TV 4K

WWDC22

WWDC20

WWDC19


Hi, I'm Ken Greenebaum, on the Display and Color Technologies team. Thank you for attending this "High Dynamic Range, rendering with EDR" talk. It is a technology I am thrilled to be sharing with you. Let's quickly run down what we'll be covering and who might best benefit from attending.

We will start with what EDR actually is, describe the four steps used to add EDR to your existing app, discuss the native EDR API for more control of rendering, and finally, we will explore a series of best practices related to EDR.

Now, what is EDR? EDR is an acronym you might not be familiar with.

It stands for "Extended Dynamic Range." We use "EDR" to refer to both the HDR representation and the HDR rendering technology used on our platforms.

It is somewhat akin to color management, which you might already be familiar with.

Developers of many types of apps will find this talk interesting.

To begin, this talk is for any developer interested in computer graphics technology, High Dynamic Range displays, and especially the new XDR displays that Apple has been releasing over the last couple of years.

Next are hardcore game developers who want to create the most realistic and exciting experience, unlocking bright details that might already be present in their rendering engine.

Critically, there are the Pro app developers who are interested in achieving studio reference response for HDR video and still images.

Finally, there are developers of apps whose emphasis isn't specific to graphics, but who would like to embrace the HDR still image and video content now entering the ecosystem.

HDR is here, and it is enabled by our EDR technology. As we will be discussing, there are displays with incredible capabilities already in users' hands, stunning HDR content in the ecosystem that users are capturing, streaming, and downloading, and HDR apps, both games and professional, available in the App Store.

EDR is an adaptive technology. As we will discuss, EDR not only maps HDR content to displays of wildly differing capabilities, but also supports an even wider range of viewing conditions.

Have you ever noticed that displays look much different in a bright environment than when viewed in the dark? The bright, vibrant colors you experience indoors likely look dim and muted, with low dynamic range, when viewed outdoors. The display didn't change: at a given brightness setting, it always produces the same light. It is the environment that changed, along with your vision, which rapidly adapted to that environment. In a dark environment, your vision adapts to the displayed content, the predominant source of light. But in a bright environment, the user's vision largely adapts to the environment rather than to the much dimmer display, causing the content to look dim and washed out, crushing blacks and shadow detail.

This is why EDR and the underlying ambient adaptation technologies dynamically adapt display parameters, such as white point, black point, reference white brightness, and dynamic range (which we describe as "EDR headroom"), to map the display to the user's adapted vision.

EDR's adaptation provides a number of benefits. Perhaps most surprisingly, EDR creates a true HDR response even on conventional standard dynamic range displays, when those displays are viewed in dimmer environments with correspondingly dimmer brightness settings. Similarly, EDR's dynamics produce a situation-optimized HDR capability on bright HDR displays. This allows HDR content to look stunning in the dim environments assumed for HDR reference viewing, while providing a reduced but optimal dynamic range in brighter environments, all while preserving shadow detail, such as the patches in this illustration. EDR rendering has been supported on most devices we've shipped for years now.

We describe HDR capability in terms of EDR headroom: how many times brighter than the conventional maximum SDR white the brightest EDR pixels can be rendered on the display. EDR headroom varies with display capability, as well as with other parameters, including the current brightness slider setting.

On conventional backlit displays, as found on most recent Macs and iPads, up to a very noticeable 2x SDR headroom is available. iPhones with an XDR display provide up to an exciting 8x SDR EDR headroom. The new iPad Pro's Liquid Retina XDR display has up to an incredible 16x SDR. Roughly 5x SDR is provided when driving typical external HDR10 displays via Mac, iPad, and Apple TV. And the Pro Display XDR renders up to a jaw-dropping 400x SDR in the default XDR preset when brightness is set to the minimum 4-nit value.

Not only are HDR-capable displays already in many users' hands, but HDR content is becoming mainstream. In addition to commercial HDR content, users are adding HDR still and video content, captured on their own iPhones and other devices, to the ecosystem.

An awe-inspiring list of applications has already adopted EDR. Top-tier games supporting EDR include "Baldur's Gate 3," "Divinity: Original Sin 2," and "Shadow of the Tomb Raider." A growing number of Apple TV services provide HDR content, including the TV app, Netflix, and YouTube. Finally, an exciting and growing list of pro apps have adopted EDR, both upping the ante and increasing the amount of HDR content developed on the platform. Affinity Photo, DaVinci Resolve, Cinema 4D, Final Cut Pro, Nuke, and Pixelmator Pro are just some of these apps.

We chose to name our technology "EDR" in part to differentiate it from "HDR," which tends to mean different things to different people.

When many think of High Dynamic Range, they imagine bright displays capable of producing deep blacks. The production-minded may think of content formats, perhaps HDR10 or Dolby Vision. To the technical, it brings transfer functions, like PQ or HLG, to mind. And perhaps to the artistic, HDR can even suggest the surrealistic, painterly tone map that represents bright highlights as well as dim shadow detail within SDR. Consider this SDR tone map of an RIT-produced, ultra-high-dynamic-range OpenEXR image. In the tone map, the glowing light bulb and the shadow detail are all present. However, the dramatic dynamic range of the original HDR image is lost. EDR is not a painterly HDR-to-SDR conversion that preserves all details while losing the intent, but rather a true High Dynamic Range rendering.

The RIT team combined the 18 bracketed digital SLR exposures seen here to create their HDR image, with its amazing 500,000:1 dynamic range. EDR fully represents this in floating point. The image's subject, the Luxo lamp itself, has pixel values under reference white's 1.0. Brighter details, such as the emissive orb of the lightbulb, are represented as values approaching 500. That's 500x brighter than the lamp base.

EDR's implementation creates a mapping between the reference whites of every element of the system, from the reference white of the content to that of the display, through to that of the user's adapted vision. This mapping is fundamental to many of the benefits of EDR. It allows HDR and SDR content to coexist side by side, optimally exposes an HDR display's dynamic range across environments, and even allows conventional SDR displays to render true HDR. Throughout, EDR renders as great a dynamic range as possible.

For instance, on a Pro Display XDR with the brightness slider set to the maximum 500 nits, EDR values up to 3.2 will be rendered without clipping. Brighter elements, such as the lamp head, with correspondingly greater EDR values, will clamp to the display's 1,600-nit peak. Brightness may be lowered toward its 4-nit minimum in a dim to dark environment, either automatically via auto-brightness or manually using the brightness slider. EDR values up to 400 then become renderable, thereby delivering most of the dynamic range of this exceptional image.

The image's brightest elements, while still rendered to the display's 1,600-nit peak brightness, now clearly define the lightbulb's contour. Similarly, other details are revealed, such as the colors of the brightly illuminated color checker. With the user's vision now adapting to the image's 4-nit reference white, the image's brightest elements are now amazingly rendered 400x brighter than the lamp base.

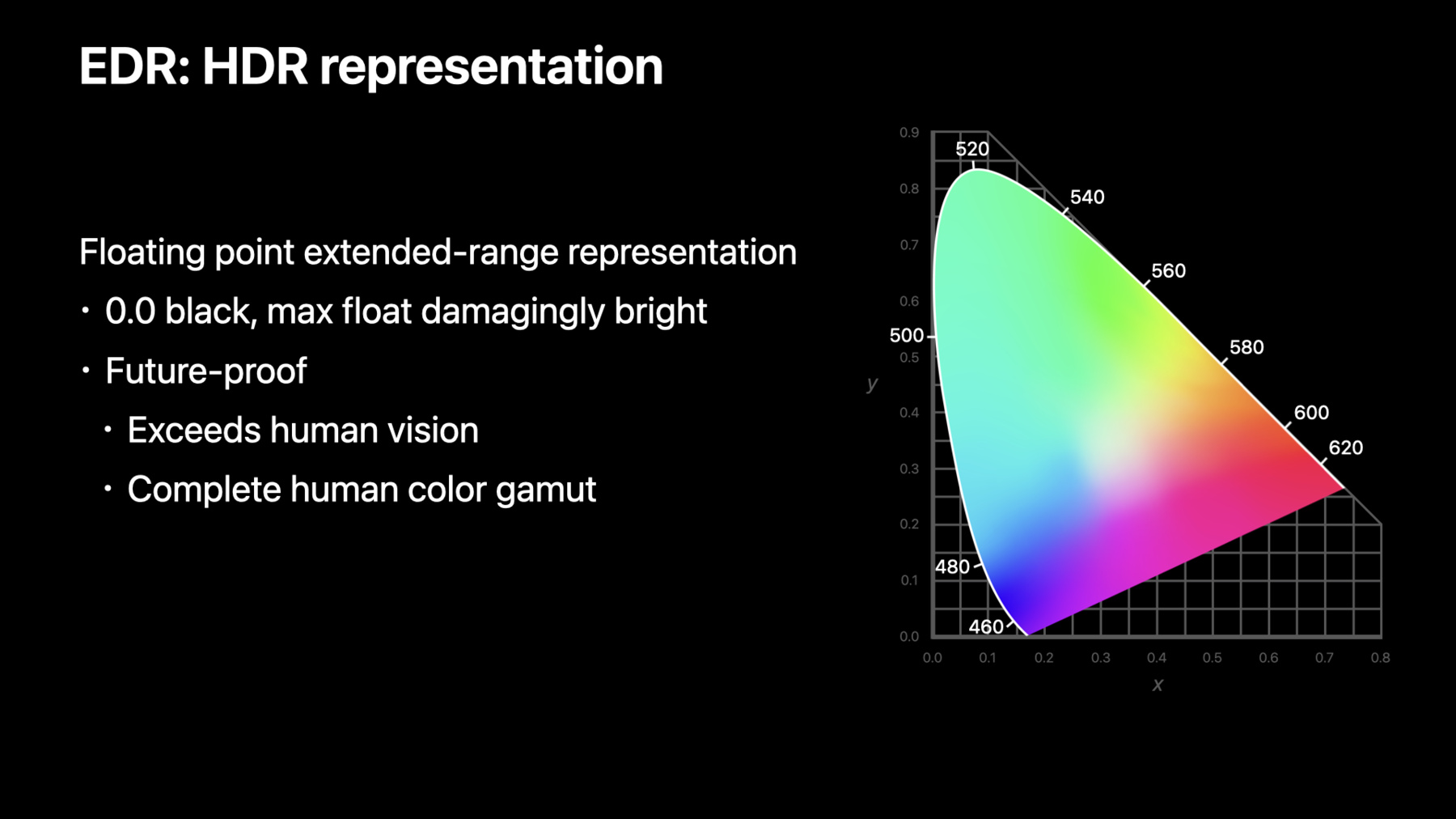

Let's explore the implications of EDR's floating point HDR representation and rendering pipeline.

Being floating point, EDR can represent every color that humans can perceive, as represented by this completely filled chromaticity diagram. Similarly, EDR can represent brightness that would be damaging to eyesight if fully rendered. As such, EDR's representation is future-proof.

As mentioned, EDR is not only a representation, but is also an HDR rendering pipeline.

EDR is an extension to the color management technologies that Apple pioneered and has been shipping for a very long time. For instance, GPU-accelerated, color-managed video first shipped way back in Mac OS X 10.4 Tiger. Conceptually similar to allowing media of normal and wide color gamut to be displayed side by side, EDR allows HDR and SDR assets to share the screen in a harmonious manner, as well as to be appropriately rendered to displays of differing capabilities. Among other things, this means that developers may continue to use existing SDR assets, and selectively add EDR support as needed.

Let's visually explore EDR's representation of HDR pixel values.

EDR is a floating point representation. EDR 0.0 represents black, and 1.0 represents SDR max, also known as "reference white" or "UI white." Thus EDR 0.0 to 1.0 represents the SDR range, the same as always. These values represent the subject of an image, and will never be clipped when rendered. EDR values beyond 1.0 represent values exceeding SDR brightness, such as highlights and emissive surfaces. These values might get extraordinarily bright, and while representable, are subject to clipping when rendered. EDR is used to drive the display regardless of whether the display has a conventional pure power response or a newer HDR transfer function.

Let's consider Pro Display XDR in the default XDR preset, which is PQ-based. EDR 0.0 drives the display to zero nits, via the zero-nit PQ code. EDR 1.0, called "reference white," is scaled by the display brightness slider or auto-brightness, the same as always. The XDR preset has a reference white brightness range of about 4 to 500 nits. Let's consider the maximum 500-nit setting for this example. Regardless of the brightness setting, peak white is always 1,600 nits in this preset. Thus EDRmax, the brightest renderable EDR value, is 3.2, calculated by dividing the 1,600-nit peak white by the 500-nit reference white. Correspondingly, we say there is 3.2x SDR headroom, as the brightest value renderable to the display is 3.2x brighter than reference white. In summary: on Pro Display XDR using the default XDR preset, with display brightness (reference white) at 500 nits, EDR values 0.0 to 3.2 are rendered to the display as 0 to 1,600 nits, with EDR 1.0 displayed as 500 nits. EDR values above EDRmax's 3.2 are clipped to peak white, 1,600 nits. Amazingly, as display brightness is reduced toward the minimum 4 nits, EDR values up to 400 (1,600 / 4) become renderable, exposing incredibly bright details at their true dynamic range.

Now that we have an overview of how EDR works, we'll explore adding EDR to your application. There are four steps to add EDR support to most applications. These steps are all straightforward; however, their effects can be subtle, and you likely won't get reliable EDR results until you apply all of them.

First, request EDR by adding an attribute to the appropriate context, layer, or object. Second, associate an extended-range colorspace with your buffer, layer, or app. Color management will clip EDR values beyond 1.0 if an extended-range colorspace is not specified. Be aware that color management might only engage when the source and destination colorspaces don't match, so this clipping might be intermittent or content-dependent.

Third, select a pixel buffer format that can represent values above 1.0. This is usually some form of floating point. Finally, your application must generate pixels that exceed the SDR 0.0 to 1.0 range, and thus have sparkly elements to show off. Many developers have existing apps to which they might want to add EDR support.

Perhaps the easiest way to get started with EDR is to simply substitute HDR video or still image content for existing SDR content, then follow the four steps we just outlined. Some applications, for instance the email app shown, might selectively enable EDR only when HDR content is encountered. AVFoundation's AVPlayer interface supports a growing number of HDR video formats, such as Dolby Vision, HDR10, and HLG. AVPlayer will automatically render these formats as EDR when possible on all platforms except watchOS. Existing AVPlayer-based applications don't require any changes to render supported HDR video formats via EDR.

Let's look at an EDR-enabled AVPlayer code example. As always, first create an AVPlayer instance using AVPlayer's playerWithURL; in our example, however, we specify HDR video content. Next, add the player to a layer using playerLayerWithPlayer. Finally, point the controller to the player and ask the player to begin playing. AVFoundation will automatically do what is required to render the HDR media as EDR, and will enable and disable EDR based on the content type. HDR video playback is pretty straightforward. Next, let's consider HDR still image rendering.

ImageIO has extensive support for HDR image formats, and conveniently, will return the decoded pixels as EDR in floating point buffers. While ImageIO is available on all platforms, presently, the decoded results may only be rendered as EDR on macOS. We will demonstrate how to incorporate ImageIO-based assets into an application in the upcoming CAMetalLayer example.

Now, let's explore the native EDR API.

As I mentioned, the native EDR API was created for applications like games and pro apps, where the developer may want to render custom content and have more control over how HDR media is rendered. Presently, this API is available on macOS via CAMetalLayer and NSOpenGLView.

Let's look at an example using CAMetalLayer, the preferred native EDR framework. To begin, we'll look at the first three steps of the four-step process: opting in to EDR, setting an extended-range colorspace, and selecting an FP16 pixel buffer format. First, opt in to EDR by setting the metalLayer's "wantsExtendedDynamicRangeContent" property to YES. Second, set the metalLayer's colorspace to an extended-range colorspace, such as ExtendedLinearDisplayP3. Third, set the metalLayer's pixel format to a floating point format, such as RGBA16Float.

Next, we'll examine the fourth EDR opt-in step: actually generating EDR pixels. In this case, we'll use ImageIO to import HDR still image content and render the result as an EDR texture. It is a little involved, so we'll walk through it step by step. The steps are:

1. Create the CGImage from the HDR content.
2. Draw the decoded image into a floating point bitmap.
3. Create a floating point texture.
4. Load the EDR bitmap into the texture.
5. Render the texture with the EDR-enabled Metal pipeline.

First, the CGImage is created from the source specified in HDRimageURL, using CGImageSourceCreateWithURL and CGImageSourceCreateImageAtIndex. Next, create the CGContext with CGBitmapContextCreate, using the same colorspace we set earlier on the metalLayer, and draw the EDR image into the floating point context using CGContextDrawImage.

Now, we create a floating point texture of type RGBA16Float, using newTextureWithDescriptor. Get the EDR image data with CGBitmapContextGetData and load it into the EDR texture using replaceRegion. Finally, the developer is ready to render the EDR texture in their EDR-enabled Metal pipeline and enjoy the dynamic results.

For those still supporting OpenGL-based applications, we'll explore enabling EDR on NSOpenGLView using steps similar to those we have already demonstrated. Adopting EDR might also provide the opportunity to fully embrace color management; however, we won't cover that in this talk. We'll explore the following steps: opting in to EDR, selecting a floating point pixel buffer format, and drawing EDR content to the NSOpenGLView. Setting an extended-range colorspace is not required, as NSOpenGLView is not automatically color managed. First, opt in to EDR by setting the NSOpenGLView's "wantsExtendedDynamicRangeOpenGLSurface" property to YES. Second, set the pixel buffer format to NSOpenGLPFAColorFloat with NSOpenGLPFAColorSize 64. Finally, draw EDR values into the NSOpenGLView, run your modified application, and enjoy the results.

Now that we've discussed enabling basic EDR support in existing applications, let's dive a little deeper and explore a number of best practices to get the most from your EDR-enabled application. Recall that the second EDR opt-in step is to set an extended-range colorspace, and in our examples so far, we explicitly set an appropriate extended-range colorspace. However, many apps don't set their colorspace explicitly, and instead use the default colorspace provided by the framework. This often corresponds to the display or composition space, which is not extended range, thus breaking EDR unless something is done. Let's explore an example of how to promote an existing colorspace to extended range.

First, get the existing colorspace, in this case from your view's window. Second, promote that colorspace to extended range using CGColorSpaceCreateExtended. Finally, assign the newly generated extended-range colorspace to the window, buffer, or layer, as appropriate. This enables apps that don't explicitly set their colorspace to use EDR.

Next, we'll explore best practices for generating EDR content. So far, we've mentioned that EDR content has values exceeding 1.0, but not how to actually synthesize this content or convert it from HDR sources. Please be careful when generating EDR content. It is possible to experience the dreaded "glowing bunny syndrome," where content has an eerie, iridescent glow that makes properly authored content look dim in comparison. As has been emphasized, pixels above EDR 1.0 should only encode specular highlights and emissive surfaces. This means that it is inappropriate to take SDR content and stretch it to be HDR. SDR content is compatible with EDR as-is, in its native 0.0 to 1.0 range, which aligns the SDR reference white with that of HDR content. When HDR content is authored to be too bright, with what should be dimmer elements or even shadows exceeding EDR 1.0, it will appear unnatural compared to well-authored content. The user's vision may begin to adapt to this overly bright content, making other content appear dim in comparison.

Please consider this example of a girl holding an 18% gray card, as used by photographers and animators to set exposure. The girl is the subject of the photograph. Consequently, the photographer or animator will set the exposure so that she is at or below reference white, and thus will not be clipped. Most user interface elements shouldn't exceed EDR 1.0 either. However, there may be exceptions, including EDR color pickers, or UI temporarily scaled and brightly illuminated to capture attention.

Emissive surfaces, such as the sun or clouds, as well as specular highlights, such as the sun reflecting off a shiny surface, are potentially far brighter than reference white. Consequently, they are expected to exceed EDR 1.0. A scene's brightest elements are already clipped by SDR encoding, so SDR content is correctly represented as EDR 0.0 to 1.0.

Sometimes a workflow will require HDR formats to be converted to EDR explicitly. As mentioned, ImageIO decodes HDR content to EDR, requiring no further conversion. For instance, HLG is decoded to the 0.0 to 12.0 EDR range, with EDR 1.0 representing reference white. ImageIO decodes other HDR still image formats to their own particular ranges, with EDR 1.0 always corresponding to the source's reference white. AVFoundation does not presently decode HDR formats, such as HDR10, to EDR, so these need to be adapted for use with EDR rendering. This conversion is straightforward and involves two steps. First, convert to linear light by applying the inverse transfer function. Second, divide by the medium's reference white, for example 100 nits for PQ content. Reference white then maps to EDR 1.0, and the maximum PQ value of 10,000 nits maps to EDR 100.

Consider this photograph that, like many photos, both digital and film, has the sky clipped to white. EDR will never clip values up to EDR 1.0 (reference white), which represent the subject of the image. It will, however, clip values above reference white that exceed the current EDRmax and thus cannot be rendered with the current display and display settings. These values above reference white are highlights or emissive surfaces, such as the clouds, sky, and sun, that we can't see in this image, and, as such, are usually okay to clip. However, clipping of bright details is not always acceptable. For instance, consider this image of a sunlit airplane flying high above the clouds. Notice the blue "HDR11" livery?

Brightly illuminated, the number, as well as the fuselage it's painted on, might both be rendered brighter than reference white. Hence they could be clipped, depending on the display capabilities and the current EDRmax. If this number were a crucial plot element in a film or game, dynamic tone mapping could be employed to avoid or manage clipping and keep the number visible.

Let's explore how sophisticated applications may keep abreast of the current EDRmax value and adapt what they render accordingly. Applications may want to subscribe to screen-change notifications, perhaps to adjust the brightness of objects in the scene, change the scene's exposure, apply a bloom effect, or even soft-clip to ensure critical details are not lost. Presently, there is only one dynamic EDR value accessible from the NSScreen interface: maximumExtendedDynamicRangeColorComponentValue, which represents the current maximum renderable linear EDR value. As we have stated, EDR values greater than EDRmax will clip to EDRmax. And EDRmax may change dynamically, since it depends on characteristics, such as display brightness or True Tone, that may themselves change over time.

The two other EDR values accessible from NSScreen are static, meaning they won't change over time. In contrast to the preceding value, maximumPotentialExtendedDynamicRangeColorComponentValue returns just that: the maximum linear EDR pixel value that might be renderable without clipping on this display, if the display brightness and other features were set appropriately. Max potential EDR may be used to guide decisions, such as whether SDR or HDR versions of content should be used, or even whether it is worthwhile to enable EDR at all. Please remember that "potential" is in the name: the current maximum renderable value, EDRmax, might be below this value. 1.0 is returned for displays, or display presets, that do not support EDR.

The final static value, maximumReferenceExtendedDynamicRangeColorComponentValue, is mainly of interest to pro application developers concerned with achieving the highest fidelity to a reference standard. It provides the maximum EDR value that is guaranteed to be rendered without distortion, such as clipping or tone mapping, on a given display. 0.0 is returned on displays that do not support reference rendering.

Now, let's explore example code that reads EDR values via NSScreen. Here, we read the two static EDR values, maxPotential and maxReference. EDR values may differ across displays, so be sure to query the screen your app is actually on; in this example, we use the NSScreen associated with your window. The application may keep abreast of dynamic EDR changes by subscribing to screen-change notifications. Examples are NSWindowDidChangeScreenNotification, posted when the window moves to another display, and NSApplicationDidChangeScreenParametersNotification, which the sample code registers for. Upon receiving the event, the app queries the current EDR parameters and may alter what it renders based on the current EDR headroom.

To complete our discussion of tone mapping, we'll briefly explore the CAMetalLayer tone mapper. This is of interest for pro apps that author and render HDR video, such as HLG and HDR10, to project-based HDR mastering parameters. System apps, as well as apps employing AVPlayer as we've already described, use the CAMetalLayer tone mapper. It provides a media-specific optical-to-optical transfer function and soft clip for HDR values that would otherwise not be renderable. The CAMetalLayer tone mapper is enabled by assigning a CAEDRMetadata object to the layer's "EDRMetadata" property, and is available on macOS. This example demonstrates how to enable the system tone mapper by setting the "EDRMetadata" property on your app's CAMetalLayer.
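
The semantics of the three NSScreen values can be captured in a small plain C sketch. The struct and functions are hypothetical; in an app, the numbers would come from the NSScreen properties named above. The 1.5 threshold standing in for "significantly greater than 1.0" is an arbitrary assumption, not an Apple recommendation.

```c
#include <stdbool.h>

/* Hypothetical snapshot of the three NSScreen EDR values. */
typedef struct {
    double max_edr;           /* maximumExtendedDynamicRangeColorComponentValue (dynamic) */
    double max_potential_edr; /* maximumPotentialExtendedDynamicRangeColorComponentValue (static) */
    double max_reference_edr; /* maximumReferenceExtendedDynamicRangeColorComponentValue (static) */
} EDRScreenInfo;

/* Assumed threshold for "significantly greater than 1.0". */
static const double kMinUsefulHeadroom = 1.5;

/* A potential headroom of 1.0 means the display or preset does not support EDR. */
bool display_supports_edr(EDRScreenInfo s) { return s.max_potential_edr > 1.0; }

/* 0.0 means the display does not support reference rendering. */
bool display_supports_reference(EDRScreenInfo s) { return s.max_reference_edr > 0.0; }

/* Enable EDR only when there is HDR content and enough potential headroom
 * for the user to see the difference. */
bool should_enable_edr(EDRScreenInfo s, bool content_is_hdr) {
    return content_is_hdr && s.max_potential_edr >= kMinUsefulHeadroom;
}

/* True when current settings (e.g. a bright environment) keep EDRmax below
 * what the display could potentially reach. */
bool currently_limited(EDRScreenInfo s) { return s.max_edr < s.max_potential_edr; }
```

Keeping the decision in one place makes it easy to re-run whenever a screen-change notification arrives or the window moves to a different display.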

A number of CAEDRMetadata constructors are available. They are specific to the HDR video standard, as well as to the mastering information provided. Here we see the HLGMetadata constructor, which takes no parameters. Next, we demonstrate one of the HDR10 constructors. This one takes three parameters: explicit minimum and maximum luminance, both in nits, as well as opticalOutputScale, which is often set to HDR10's 100-nit reference white. Once constructed, set the resulting object on your application's CAMetalLayer. All content rendered on this layer is then processed by the system tone mapper based on the provided metadata.

Closely related to adaptive tone mapping, an app may want to draw with the brightest white currently renderable. We already understand how to get the current EDRmax from NSScreen; however, EDR headroom is a linear value, and pixels are most often nonlinearly encoded. In this final example, we convert EDRmax into the app's potentially nonlinear colorspace. First, we use CGColorSpaceCreateWithName to create a linear extended-range colorspace, and CGColorCreate to create the linear EDRmax white pixel we want to render. Then we convert the linear EDRmax pixel to the application's colorspace using CGColorCreateCopyByMatchingToColorSpace. The resulting color may then be used in any EDR-enabled application.

This leaves us with one last topic to discuss: the power and performance implications of EDR. As with all CG rendering, evaluating EDR's impact on power and performance can be complicated, and often depends on the specific hardware architecture and display technology used on a given device. While we won't go into details in this talk, there are some general points to consider:

- Producing brighter pixels often consumes more power.
- Floating point buffers used with EDR may be larger, and thus consume more bandwidth than the fixed point buffers that might otherwise be used. This, in turn, correlates with more power consumed.
- Even enabling CAEDRMetadata-based tone mapping involves an extra processing pass, which increases latency and bandwidth.

Simply put, EDR, like many features, isn't free, and as such it should be used judiciously. The best practice is to enable EDR when there is both HDR content and potential EDR headroom available, and to disable EDR when this is not the case. Similarly, choose HDR or SDR versions of content to open or stream based on whether the potential EDR headroom, or even the current EDRmax headroom, is significantly greater than 1.0. Or, more simply, only enable EDR when the user will see the difference. We've covered a lot about EDR in a short period of time.

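
The color-matching step for the EDRmax white can be approximated by hand when the destination uses the sRGB transfer curve, as Display P3 does. The plain C sketch below assumes that Apple's extended-range variants of such spaces extend the curve past 1.0 and mirror it for negative values. CGColorCreateCopyByMatchingToColorSpace remains the reliable path. The sketch only illustrates why a linear EDRmax of 3.2 does not become a nonlinear component of 3.2.

```c
#include <math.h>

/* sRGB-curve encoding of a linear component, extended past 1.0 and mirrored
 * for negative values (assumed to match extended sRGB-curve colorspaces such
 * as extended Display P3). */
double encode_extended_srgb(double linear) {
    double a = fabs(linear);
    double encoded = (a <= 0.0031308) ? 12.92 * a
                                      : 1.055 * pow(a, 1.0 / 2.4) - 0.055;
    return copysign(encoded, linear);
}
```

For an EDRmax of 3.2, the encoded component is roughly 1.66. Writing 3.2 directly into a nonlinear buffer would therefore ask for a far brighter white than the display can render, and it would clip.
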
I hope you are as excited by EDR as I am, and are eager to integrate it into your applications. To summarize, EDR is used on macOS, iOS, iPadOS, and tvOS devices. EDR is an extension to the color management and SDR representations you are already using. EDR is easy for applications to opt in to. EDR is compatible with industry HDR standards, and provides a non-modal, adaptive experience across devices and environments. Please check out the following talks and resources for more information on HDR video playback, Apple Pro Display XDR, and Metal rendering of EDR. Thank you! I hope you enjoy the rest of your WWDC. [music]


16:00 - AVPlayer automatically uses EDR with HDR content

```objc
#import <AVFoundation/AVFoundation.h>
#import <AVKit/AVKit.h>

// Instantiate AVPlayer with HDR video content
AVPlayer* player = [AVPlayer playerWithURL:HDRVideoURL];

// Host playerLayer in your own view hierarchy, or present via AVPlayerViewController
AVPlayerLayer* playerLayer = [AVPlayerLayer playerLayerWithPlayer:player];

// Play HDR video via EDR
AVPlayerViewController* controller = [[AVPlayerViewController alloc] init];
controller.player = player;
[player play];
```

17:52 - EDR with CAMetalLayer 1

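```objc
#import <QuartzCore/QuartzCore.h>
#import <Metal/Metal.h>

// Opt-in to EDR
metalLayer.wantsExtendedDynamicRangeContent = YES;

// Set extended-range colorspace
CGColorSpaceRef colorspace = CGColorSpaceCreateWithName(kCGColorSpaceExtendedLinearDisplayP3);
metalLayer.colorspace = colorspace;
CGColorSpaceRelease(colorspace); // the layer keeps its own reference

// Select FP16 pixel buffer format
metalLayer.pixelFormat = MTLPixelFormatRGBA16Float;
```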

18:53 - EDR with CAMetalLayer 2

```objc
#import <ImageIO/ImageIO.h>
#import <Metal/Metal.h>
#import <QuartzCore/QuartzCore.h>

// Create CGImage from HDR image (__bridge cast assumes ARC)
CGImageSourceRef isr = CGImageSourceCreateWithURL((__bridge CFURLRef)HDRimageURL, NULL);
CGImageRef img = CGImageSourceCreateImageAtIndex(isr, 0, NULL);

// Draw into a 16-bit floating point bitmap context in the layer's extended-range colorspace
size_t width = CGImageGetWidth(img);
size_t height = CGImageGetHeight(img);
CGBitmapInfo info = kCGBitmapByteOrder16Host | kCGImageAlphaPremultipliedLast | kCGBitmapFloatComponents;
CGContextRef ctx = CGBitmapContextCreate(NULL, width, height, 16, 0, metalLayer.colorspace, info);
CGContextDrawImage(ctx, CGRectMake(0, 0, width, height), img);

// Create floating point texture with the same dimensions as the image
MTLTextureDescriptor* desc = [[MTLTextureDescriptor alloc] init];
desc.pixelFormat = MTLPixelFormatRGBA16Float;
desc.textureType = MTLTextureType2D;
desc.width = width;
desc.height = height;
id<MTLTexture> tex = [metalLayer.device newTextureWithDescriptor:desc];

// Load EDR bitmap into texture
const void* data = CGBitmapContextGetData(ctx);
[tex replaceRegion:MTLRegionMake2D(0, 0, width, height)
       mipmapLevel:0
         withBytes:data
       bytesPerRow:CGBitmapContextGetBytesPerRow(ctx)];

// Release the Core Foundation objects created above
CGContextRelease(ctx);
CGImageRelease(img);
CFRelease(isr);

// Draw with the texture in your EDR enabled Metal pipeline
```

20:30 - EDR with NSOpenGLView

```objc
#import <AppKit/AppKit.h>

// Opt-in to EDR (in your NSOpenGLView subclass)
- (void)viewWillMoveToWindow:(nullable NSWindow *)newWindow
{
    [super viewWillMoveToWindow:newWindow];
    self.wantsExtendedDynamicRangeOpenGLSurface = YES;
}

// Select OpenGL float pixel buffer format
NSOpenGLPixelFormatAttribute attribs[] = {
    NSOpenGLPFADoubleBuffer,
    NSOpenGLPFAMultisample,
    NSOpenGLPFAColorFloat,
    NSOpenGLPFAColorSize, 64,
    NSOpenGLPFAOpenGLProfile, NSOpenGLProfileVersion4_1Core,
    0
};
NSOpenGLPixelFormat* pf = [[NSOpenGLPixelFormat alloc] initWithAttributes:attribs];

// Draw EDR content into NSOpenGLView
```

21:46 - Promoting an existing colorspace to extended range

```objc
// Get existing colorspace from the window
CGColorSpaceRef color_space = [view.window.colorSpace CGColorSpace];

// Promote the colorspace to extended-range
CGColorSpaceRef color_space_extended = CGColorSpaceCreateExtended(color_space);

// Apply the extended-range colorspace to your app (guard against a NULL result)
if (color_space_extended != NULL) {
    NSColorSpace* extended_ns_color_space = [[NSColorSpace alloc] initWithCGColorSpace:color_space_extended];
    view.window.colorSpace = extended_ns_color_space;
    CGColorSpaceRelease(color_space_extended);
}
```

29:00 - EDR display change notifications via NSScreen

```objc
// Read static values from the screen the window is actually on
NSScreen* screen = window.screen;
double maxPotentialEDR = screen.maximumPotentialExtendedDynamicRangeColorComponentValue;
double maxReferenceEDR = screen.maximumReferenceExtendedDynamicRangeColorComponentValue;

// Register for dynamic EDR notifications
NSNotificationCenter* center = [NSNotificationCenter defaultCenter];
[center addObserver:self
           selector:@selector(screenChangedEvent:)
               name:NSApplicationDidChangeScreenParametersNotification
             object:nil];

// Query for latest values (assumes self has a window, e.g. an NSView subclass)
- (void)screenChangedEvent:(NSNotification *)notification
{
    NSScreen* screen = self.window.screen;
    double maxEDR = screen.maximumExtendedDynamicRangeColorComponentValue;
    // Adapt exposure, bloom, or soft clip to the new maxEDR here
}
```

30:28 - CAEDRMetadata tone-mapper

```objc
// HLG
CAEDRMetadata* hlgMetadata = [CAEDRMetadata HLGMetadata];

// HDR10 (luminance values in nits)
CAEDRMetadata* hdr10Metadata = [CAEDRMetadata HDR10MetadataWithMinLuminance:minLuminance
                                                                maxLuminance:maxContentMasteringDisplayBrightness
                                                          opticalOutputScale:outputScale];

// Set the metadata that matches your content on the CAMetalLayer
metalLayer.EDRMetadata = hdr10Metadata;
```

31:35 - Computing your app’s brightest pixel

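```objc
// Current EDRmax for the screen the window is on
CGFloat EDRmax = self.window.screen.maximumExtendedDynamicRangeColorComponentValue;

// Create the linear pixel we want to render
CGFloat EDRmaxComponents[4] = {EDRmax, EDRmax, EDRmax, 1.0};
CGColorSpaceRef linearColorSpace = CGColorSpaceCreateWithName(kCGColorSpaceExtendedLinearDisplayP3);
CGColorRef EDRmaxColorLinear = CGColorCreate(linearColorSpace, EDRmaxComponents);

// Convert from linear to the application's colorspace
CGColorSpaceRef winColorSpace = [self.window.colorSpace CGColorSpace];
CGColorRef EDRmaxColor = CGColorCreateCopyByMatchingToColorSpace(winColorSpace, kCGRenderingIntentDefault, EDRmaxColorLinear, NULL);

// Release intermediates; release EDRmaxColor when you are done drawing with it
CGColorRelease(EDRmaxColorLinear);
CGColorSpaceRelease(linearColorSpace);
```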
