Create audio drivers with DriverKit

Discover how to use the AudioDriverKit API to consolidate your Audio Server plug-in and DriverKit extension into a single package. Learn how you can simplify audio driver installation with an app instead of an installer package and distribute your driver through the Mac App Store. We'll also take you through how the Core Audio HAL interacts with AudioDriverKit and share best practices for audio device drivers.

Hello. My name is Baek San Chang, and I work on Core Audio. Today I will discuss a new way to create audio drivers with DriverKit. But first let's review how audio drivers work today. Prior to macOS Big Sur, an audio server plug-in would need to communicate with a hardware device through a user client to a kernel extension. In macOS Big Sur, the CoreAudio HAL provided support to create an audio server plug-in built on top of a DriverKit Extension. The layer between the plug-in and the dext was the same as with a kext, but security was improved by moving out of the kernel and into user space. For more information on DriverKit, please check out the previous WWDC DriverKit videos. While the current solution allows audio driver development to move out of the kernel, two separate components are still needed to implement a functional hardware audio driver: An audio server plug-in and a driver extension. This complicates development, increases resources, and can increase overhead and latency. Starting in macOS Monterey, you only need a dext, no more plug-in.

AudioDriverKit is a new DriverKit framework used to write audio driver extensions alongside USBDriverKit or PCIDriverKit. This new framework handles all the inter process communication to the CoreAudio HAL. Since you only have a dext, now you don't need to communicate between your dext and an audio server plug-in. You can stay focused within DriverKit.

Since AudioDriverKit extensions are bundled inside a Mac app, a separate installer is no longer necessary.

And now your driver is loaded immediately, no reboot required.

Now that you know the benefits of AudioDriverKit, let's dive into making a new audio driver. I will start with a brief overview of the components involved in an audio driver, then cover some things you'll need before writing your dext.

Once we're ready to start writing code, I will walk through how to configure and initialize your dext, create devices, streams, and other audio objects, and deal with the IO path and timestamps. Lastly, I will discuss how to handle configuration changes and show you a demo of the dext at the end.

So let's start with architecture. The diagram shows how the HAL communicates with Driver Extensions using the AudioDriverKit framework. The AudioDriverKit framework will create a private user client that will be used for all the communication between CoreAudio and your audio dext. This user client is not intended to be used directly and not exposed to your dext. Notice that there is no plug-in or custom user client required to communicate between your app and dext. Optionally, your app can open a custom user client to communicate with your dext directly if needed. Now let's talk about the entitlements you will need.

All DriverKit driver extensions must have the DriverKit entitlement.

AudioDriverKit dexts must also have the entitlement to allow any user client access.

This is available to all developers who have been approved for any DriverKit entitlements.

Additionally, any transport family entitlements should be added as necessary.

If you have not yet requested a USB or PCI transport entitlement, visit Apple's developer site to submit a request.

Keep in mind that the sample code presented is purely for demonstration purposes and creates a virtual audio driver that is not associated with a hardware device, and so entitlements will not be granted for that kind of use case. If a virtual audio driver or device is all that is needed, the audio server plug-in driver model should continue to be used.

Now let's look at your dext's Info.plist. These settings need to be added to the IOKitPersonalities of the dext. AudioDriverKit will handle creation of the IOUserAudioDriverUserClient required by the HAL. The HAL has the required entitlements to connect to the user client. Here's an example of a custom user client for SimpleAudioDriverUserClient. See the AudioDriverKitTypes.h header file for more information.

Next, let's talk about configuration and initialization. The first step to configuring an audio dext is to subclass IOUserAudioDriver and override the virtual methods. IOUserAudioDriver is a subclass of IOService. Subclass any IOUserAudio objects that are needed to implement custom behavior. Then configure and add them to IOUserAudioDriver.

The diagram shows an overview of the IOUserAudio objects you will be creating. SimpleAudioDriver is a subclass of IOUserAudioDriver and the entry point into the dext. SimpleAudioDriver will create a SimpleAudioDevice, which is a subclass of IOUserAudioDevice. The audio device handles all start-stop IO-related messages, timestamps, and configuration changes. SimpleAudioDevice will create various IOUserAudioObjects. The device object will also create IOTimerDispatchSources, OSActions, and implement a tone generator to simulate hardware interrupts and IO. IOUserAudioStream is a stream owned by the device. The stream will use an IOMemoryDescriptor for audio IO, which will be mapped to the HAL. IOUserAudioLevelControl is a control object that takes scalar or dB values. The control value will be used to apply gain to the input audio buffer.

All IOUserAudioObjects can have IOUserAudioCustomProperties. SimpleAudioDevice will create an example of a custom property with a string as its qualifier and data value.

Let's take a look at the code. SimpleAudioDriver is a subclass of IOUserAudioDriver. Start, Stop, and NewUserClient are virtual methods from the IOService class that the driver needs to override.

StartDevice and StopDevice are IO-related virtual methods from IOUserAudioDriver. These will be called when the HAL starts or stops IO for an audio device. I will discuss the IO path after going over devices, streams, and other audio objects.

The example shows how to override NewUserClient to create user client connections. NewUserClient will be called when a client process wants to connect to the dext. The AudioDriverKit framework will handle creation of the user client required by the HAL by calling NewUserClient on the IOUserAudioDriver base class. This will create the IOUserAudioDriverUserClient that is needed by the CoreAudio HAL. A custom user client can be created as well by calling IOService::Create, which will create the user client object from the driver extension's Info.plist entry added earlier.

Let's take a look at how to override Start and create a custom IOUserAudioDevice object. First, call Start on the superclass. Then allocate the SimpleAudioDevice and initialize it with a few required parameters. The initialized device then needs to be added to the audio driver by calling AddObject. Finally, register the service, and the driver is ready to go.

Now that your driver is initialized, let's create a device, stream, and a few other audio objects. Subclass IOUserAudioDevice to get custom behavior.

Let's create an input stream, volume control, and a custom property object. The init method for SimpleAudioDevice shows how to configure the device and create various audio objects. The sample rate related information of the device is configured by calling SetAvailableSampleRates and SetSampleRate on the device. Create an IOBufferMemoryDescriptor that will be passed to the IOUserAudioStream. The memory will be mapped to the CoreAudio HAL and used for audio IO. The memory should ideally be the same IO memory used for DMA to hardware. IOUserAudioStream is created by specifying the input stream direction and passing in the IO memory descriptor that was created above.

A few additional things need to be configured on the stream before it is functional. The stream formats are defined by creating a format list of IOUserAudioStreamBasicDescriptions. Specify the sample rate, format ID, and the other required format properties. Set the available formats by passing in the stream format list declared above. And then set the current format of the stream. Finally, add the configured stream to the device by calling AddStream.

Now let's go over creating a volume level control. To create a volume control object, call the IOUserAudioLevelControl::Create method. The control is a settable volume control with its initial level set to -6 dB and a range of 96 dB. The element, scope, and class of the control also need to be specified. Finally, add the control object to the device. The volume control gain value will be used in the IO path by applying the gain to the input stream's IO buffer.

Now let's go over creating a custom property object for the device. A property address needs to be provided for every custom property object. Define a custom selector type with a global scope and main element. Next, create the custom property object by providing the property address defined above. The custom property is settable, and the qualifier and data value types are both strings. Now create an OSString for the qualifier and data value. Then set it on the custom property. Finally, add the custom property to the device.

Now that you have created audio objects, let's talk about IO. The GetIOMemoryDescriptor method will return the IOMemoryDescriptor used by the IOUserAudioStream. The IOMemoryDescriptor is passed into the init method when creating a stream, and the stream can be updated with a new memory descriptor as well. The memory will be mapped to the HAL and used for audio IO. The memory descriptor used by the stream should ideally be the same one used for DMA to the hardware device.

IOUserAudioClockDevice is the base class of IOUserAudioDevice. UpdateCurrentZeroTimestamp and GetCurrentZeroTimestamp should be used to handle timestamps from the hardware device. The timestamps will be handled atomically, and the HAL will use the sample time-host time pair to run and synchronize IO. It is vital to track the hardware clock's timestamps as closely as possible.

Let's take a look at the SimpleAudioDevice class and focus on the IO-related methods. StartIO and StopIO will be called from the driver when the HAL is attempting to run IO. The private methods shown use IOTimerDispatchSource and OSAction to simulate hardware interrupts, which will be used to generate zero timestamps and audio data on the input IO buffer. Since this example is not running against a hardware device, timers and actions are used in place of hardware interrupts and DMA.

StartIO will be called on the device object when the HAL is attempting to start IO on the device. Any calls necessary to start IO on the hardware should be done here. Afterwards, StartIO should be called on the base class. Next, get the input stream's IOMemoryDescriptor so an IOMemoryMap can be created by calling CreateMapping. The buffer address, length, and offset will be used in the timer action handler to generate a tone on the IO buffer.
StartTimers is called to configure and enable the time sources and actions to generate timestamps and fill out the input audio buffer. UpdateCurrentZeroTimestamp is called to atomically update the sample time-host time pair for the IOUserAudioDevice. The timer source is enabled and set with a wake-up time based on mach_absolute_time and host ticks configured from the device. The ZtsTimerOccurred action will be called based on the wake time so that a new timestamp can be updated on the device. Not shown here, but the sample code also updates the tone generation timer and action in a similar way. When the zero timestamp action fires, the last zero timestamp value is obtained from the device by calling GetCurrentZeroTimestamp. If this is the first timestamp, use the mach_absolute_time passed into the timer as the anchor time. Otherwise, the timestamps are updated by the zero timestamp period and host ticks per buffer. Calling UpdateCurrentZeroTimestamp will update the device's timestamps so that the HAL can use the new values. Set the ZTS timer to wake up in the future for the next zero timestamp.

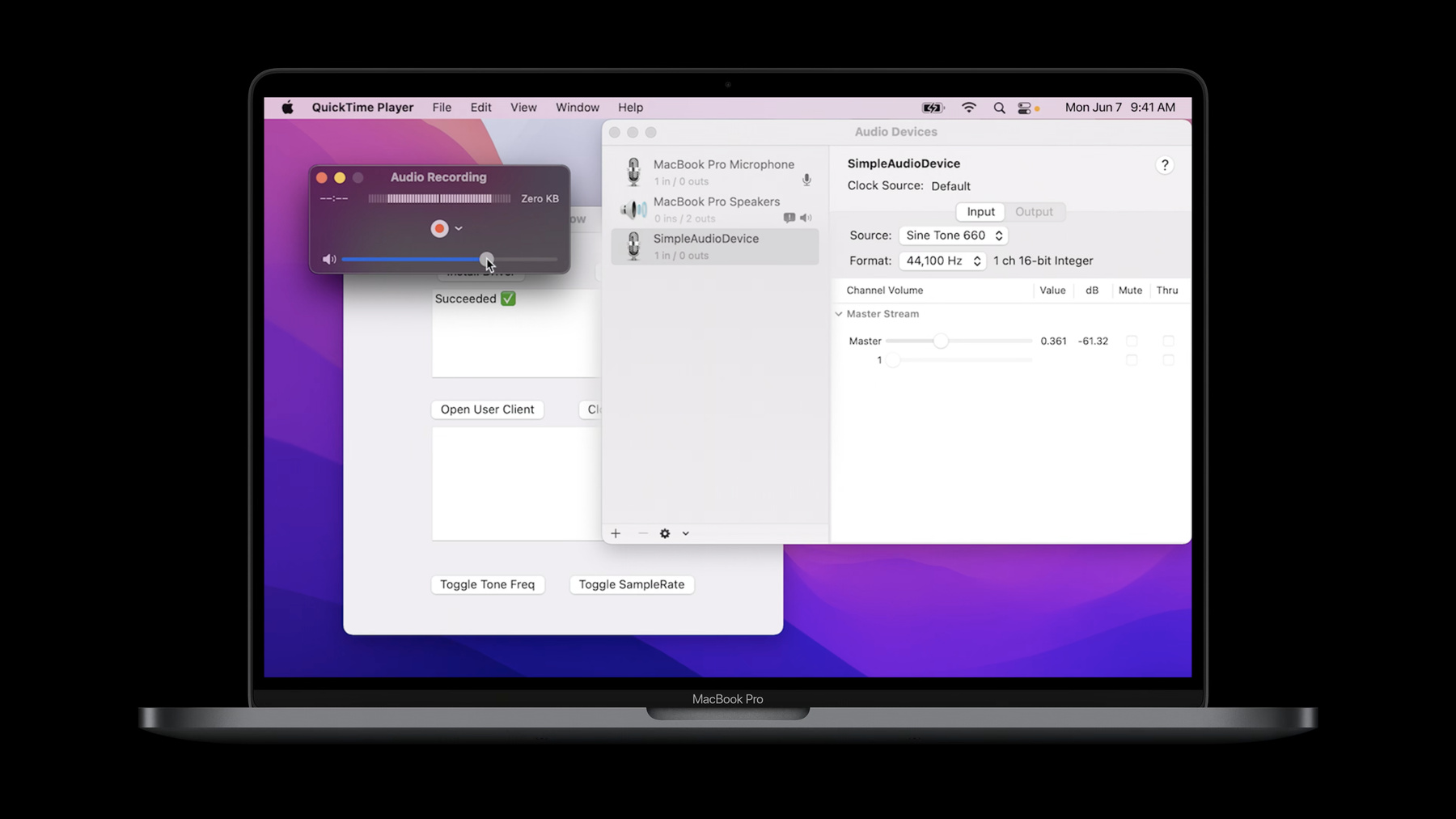

To simulate DMA, audio data will be written to the input IO buffer when the timer action runs. First, check if the input memory map that was assigned when StartIO was called is valid. Use the memory map buffer length and stream format to get the length in samples for the IO buffer. Since the stream only supports the signed 16-bit PCM sample format, get the buffer address and offset, and assign it as an int16_t buffer pointer.

Now the input IO buffer can be filled out by generating a sine tone. First, get the input volume control gain as a scalar value. Then loop for the number of samples needed and generate a sine tone, applying the volume control gain. Next, loop through the buffer and fill in the sine tone sample to the IO buffer based on the channel count, also accounting for wraparound.

Now that the audio dext is configured and can run IO, the next step is to handle configuration changes to update the device and its IO-related state. The device methods shown can be used to request and perform configuration changes. For changes to an audio device's state that will affect IO or its structure, the driver needs to request a config change by calling RequestDeviceConfigurationChange. The HAL will stop any running IO, and PerformDeviceConfigurationChange will be called on the driver. Only then can the audio device update its IO-related state. A common scenario would be updating the audio device's current sample rate or changing the current stream format to correspond with changes to the hardware device.

The diagram shows the sequence of events for device configuration changes. The driver should first request a configuration change. The HAL will notify any listeners that the config change will begin for the device. IO will be stopped on the device if it is currently running. The current state of the device will be captured. PerformDeviceConfigurationChange will be called on the driver. This is when the driver is allowed to change any state on the device and hardware. Once the config change is performed, the new state of the device will be captured, and all IO-related state, such as IO buffers or sample rate, will be updated. Any changes to the device's state will then be reported to any client listeners. If IO was running prior to the config change, IO will be restarted on the device. Finally, the HAL will notify any listeners that the config change has now ended.

To simulate a hardware bottom-up config change request, a custom user client command is used to trigger a sample rate change on the dext. RequestDeviceConfigurationChange will notify the HAL of a config change request on the audio device. Notice that the change info can be any kind of OSObject. This example provides a custom config change action and the change info as an OSString.

To handle performing the configuration change, the SimpleAudioDevice class needs to override the method PerformDeviceConfigurationChange. In PerformDeviceConfigurationChange, handle the config change action in the switch statement. Log the same OSString object that was supplied as the change info when the config change was requested. Next, get the current sample rate, and set a new rate on the device. Make sure the audio stream updates its current stream format to handle the sample rate change by calling DeviceSampleRateChanged on the stream object. Other config change actions the device does not handle directly can be passed off to the base class.

Let's take a look at this on the Mac. So SimpleAudio is the sample code application that bundles the driver extension. To install the audio driver extension, simply press Install Driver, and that should bring up the security preferences. So if we press Allow, that should dynamically load the audio driver extension. Previously, this was not possible with the kext because a reboot would be required.
So SimpleAudioDevice has the sample rate formats available and the tone selection data source. And the volume control we added in the sample code. Now we can open QuickTime and do audio recording on the audio device.

And to test the bottom-up config changes, we can directly communicate with the dext to toggle the tone frequency or the sample rate, and the changes should also be reflected in Audio MIDI Setup. To get rid of the driver extension, simply delete the application.

And you can see it is no longer available in Audio MIDI Setup. To wrap things up, I did a recap of the state of audio server plug-ins and DriverKit extensions. These will continue to be supported, and the AudioServerPlugIn driver interface is not deprecated. I introduced the new AudioDriverKit framework and discussed the benefits of the new driver model.

I went over an in-depth example of how to adopt the AudioDriverKit framework and showed sample code to create an IOUserService based audio dext, all running in user space. Download the latest Xcode and DriverKit SDK. Adopt AudioDriverKit for audio devices that have DriverKit supported hardware device families. And please provide any feedback about AudioDriverKit through Apple's Feedback Assistant. Thanks. [upbeat music]

5:58 - Subclass of IOUserAudioDriver

    // SimpleAudioDriver example, subclass of IOUserAudioDriver
    class SimpleAudioDriver: public IOUserAudioDriver
    {
    public:
        virtual bool init() override;
        virtual void free() override;

        // IOService overrides
        virtual kern_return_t Start(IOService* provider) override;
        virtual kern_return_t Stop(IOService* provider) override;
        virtual kern_return_t NewUserClient(uint32_t in_type,
                                            IOUserClient** out_user_client) override;

        // IOUserAudioDriver overrides, called when the HAL starts or stops IO
        virtual kern_return_t StartDevice(IOUserAudioObjectID in_object_id,
                                          IOUserAudioStartStopFlags in_flags) override;
        virtual kern_return_t StopDevice(IOUserAudioObjectID in_object_id,
                                         IOUserAudioStartStopFlags in_flags) override;
    };

6:27 - Override of IOService::NewUserClient

    // SimpleAudioDriver example override of IOService::NewUserClient
    kern_return_t SimpleAudioDriver::NewUserClient_Impl(uint32_t in_type,
                                                        IOUserClient** out_user_client)
    {
        kern_return_t error = kIOReturnSuccess;

        // Have the super class create the IOUserAudioDriverUserClient object if the type is
        // kIOUserAudioDriverUserClientType
        if (in_type == kIOUserAudioDriverUserClientType)
        {
            error = super::NewUserClient(in_type, out_user_client, SUPERDISPATCH);
        }
        else
        {
            IOService* user_client_service = nullptr;
            error = Create(this, "SimpleAudioDriverUserClientProperties", &user_client_service);
            FailIfError(error, , Failure, "failed to create the SimpleAudioDriver user-client");
            *out_user_client = OSDynamicCast(IOUserClient, user_client_service);
        }
        return error;
    }

7:04 - SimpleAudioDevice::init

    // SimpleAudioDevice::init, set device sample rates and create IOUserAudioStream object
    ...
    SetAvailableSampleRates(sample_rates, 2);
    SetSampleRate(kSampleRate_1);

    // Create the IOBufferMemoryDescriptor ring buffer for the input stream
    OSSharedPtr<IOBufferMemoryDescriptor> io_ring_buffer;
    const auto buffer_size_bytes = static_cast<uint32_t>(
        in_zero_timestamp_period * sizeof(uint16_t) * input_channels_per_frame);
    IOBufferMemoryDescriptor::Create(kIOMemoryDirectionInOut, buffer_size_bytes, 0,
                                     io_ring_buffer.attach());

    // Create input stream object and pass in the IO ring buffer memory descriptor
    ivars->m_input_stream = IOUserAudioStream::Create(in_driver,
                                                      IOUserAudioStreamDirection::Input,
                                                      io_ring_buffer.get());
    ...
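
The buffer size in the snippet above is the zero timestamp period (in frames) multiplied by the bytes per sample and the channel count. As a minimal standalone sketch of that arithmetic, outside DriverKit: the helper name `RingBufferSizeBytes` and the example values are assumptions for illustration, not part of the sample code.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper (not an AudioDriverKit API): size in bytes of a ring
// buffer that holds exactly one zero-timestamp period of interleaved audio.
constexpr uint32_t RingBufferSizeBytes(uint32_t frames_per_period,
                                       uint32_t channels_per_frame,
                                       uint32_t bytes_per_sample)
{
    return frames_per_period * channels_per_frame * bytes_per_sample;
}

// Assumed example values: a 32768-frame period of 16-bit stereo audio.
static_assert(RingBufferSizeBytes(32768, 2, sizeof(int16_t)) == 131072,
              "32768 frames * 2 channels * 2 bytes");
```

Sizing the buffer to one zero timestamp period means the write position wraps exactly once per timestamp, which keeps the sample time arithmetic simple.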

8:19 - SimpleAudioDevice::init continued

    // SimpleAudioDevice::init continued
    IOUserAudioStreamBasicDescription input_stream_formats[2] =
    {
        {
            kSampleRate_1, IOUserAudioFormatID::LinearPCM,
            static_cast<IOUserAudioFormatFlags>(
                IOUserAudioFormatFlags::FormatFlagIsSignedInteger |
                IOUserAudioFormatFlags::FormatFlagsNativeEndian),
            static_cast<uint32_t>(sizeof(int16_t)*input_channels_per_frame),
            1,
            static_cast<uint32_t>(sizeof(int16_t)*input_channels_per_frame),
            static_cast<uint32_t>(input_channels_per_frame),
            16
        },
        ...
    };

    ivars->m_input_stream->SetAvailableStreamFormats(input_stream_formats, 2);
    ivars->m_input_stream_format = input_stream_formats[0];
    ivars->m_input_stream->SetCurrentStreamFormat(&ivars->m_input_stream_format);

    error = AddStream(ivars->m_input_stream.get());
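
The format entry above repeats the same quantity in several fields: for packed, interleaved PCM there is one frame per packet, and bytes per packet equals bytes per frame equals bytes per sample times the channel count. The following sketch checks that relationship on a plain struct. `PcmFormat`, `MakeInt16Format`, and `IsConsistentPackedPcm` are hypothetical names that only mirror the field order in the snippet; they are not the AudioDriverKit type.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the numeric fields of a stream description.
struct PcmFormat {
    double   sample_rate;
    uint32_t bytes_per_packet;
    uint32_t frames_per_packet;
    uint32_t bytes_per_frame;
    uint32_t channels_per_frame;
    uint32_t bits_per_channel;
};

// Build a packed, interleaved signed 16-bit format for the given channel count.
PcmFormat MakeInt16Format(double sample_rate, uint32_t channels)
{
    assert(channels > 0);
    const uint32_t bytes_per_frame =
        static_cast<uint32_t>(sizeof(int16_t)) * channels;
    return PcmFormat{sample_rate, bytes_per_frame, 1, bytes_per_frame, channels, 16};
}

// True when the redundant size fields agree with each other.
bool IsConsistentPackedPcm(const PcmFormat& f)
{
    return f.frames_per_packet == 1
        && f.bytes_per_packet == f.bytes_per_frame
        && f.bytes_per_frame == (f.bits_per_channel / 8) * f.channels_per_frame;
}
```

A check like this is cheap to run once at initialization and catches a mismatched field before the format list is handed to the stream.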

8:50 - Create an input volume level control object

    // Create volume control object for the input stream.
    ivars->m_input_volume_control = IOUserAudioLevelControl::Create(in_driver,
                                                                    true,
                                                                    -6.0,
                                                                    {-96.0, 0.0},
                                                                    IOUserAudioObjectPropertyElementMain,
                                                                    IOUserAudioObjectPropertyScope::Input,
                                                                    IOUserAudioClassID::VolumeControl);

    // Add volume control to device
    error = AddControl(ivars->m_input_volume_control.get());
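
The control above is created with a level in decibels and a dB range. For intuition about what those numbers mean as a gain factor, here is the standard amplitude conversion, gain = 10^(dB/20), as a standalone sketch. This is general audio arithmetic, not a statement about how the framework maps dB to the scalar value returned by the control; both function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Standard amplitude conversion: 0 dB -> 1.0, -6 dB -> about 0.5.
double DecibelsToLinearGain(double db)
{
    return std::pow(10.0, db / 20.0);
}

// Keep a requested level inside the control's range, e.g. {-96.0, 0.0}.
double ClampDecibels(double db, double min_db, double max_db)
{
    assert(min_db <= max_db);
    if (db < min_db) return min_db;
    if (db > max_db) return max_db;
    return db;
}
```

With the values from the snippet, the initial -6 dB level corresponds to roughly half amplitude, and the -96 dB floor is close to the dynamic range of 16-bit samples.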

9:22 - Create custom property

    // SimpleAudioDevice::init, Create custom property
    IOUserAudioObjectPropertyAddress prop_addr = {
        kSimpleAudioDriverCustomPropertySelector,
        IOUserAudioObjectPropertyScope::Global,
        IOUserAudioObjectPropertyElementMain };

    custom_property = IOUserAudioCustomProperty::Create(in_driver,
                                                        prop_addr,
                                                        true,
                                                        IOUserAudioCustomPropertyDataType::String,
                                                        IOUserAudioCustomPropertyDataType::String);

    qualifier = OSSharedPtr(
        OSString::withCString(kSimpleAudioDriverCustomPropertyQualifier0), OSNoRetain);
    data = OSSharedPtr(
        OSString::withCString(kSimpleAudioDriverCustomPropertyDataValue0), OSNoRetain);

    custom_property->SetQualifierAndDataValue(qualifier.get(), data.get());
    AddCustomProperty(custom_property.get());

10:47 - Subclass of IOUserAudioDevice

    // SimpleAudioDevice example subclass of IOUserAudioDevice
    class SimpleAudioDevice: public IOUserAudioDevice
    {
        ...
        virtual kern_return_t StartIO(IOUserAudioStartStopFlags in_flags) final LOCALONLY;
        virtual kern_return_t StopIO(IOUserAudioStartStopFlags in_flags) final LOCALONLY;

    private:
        kern_return_t StartTimers() LOCALONLY;
        void StopTimers() LOCALONLY;
        void UpdateTimers() LOCALONLY;

        // Timer callbacks that stand in for hardware interrupts
        virtual void ZtsTimerOccurred(OSAction* action, uint64_t time)
            TYPE(IOTimerDispatchSource::TimerOccurred);
        virtual void ToneTimerOccurred(OSAction* action, uint64_t time)
            TYPE(IOTimerDispatchSource::TimerOccurred);

        void GenerateToneForInput(size_t in_frame_size) LOCALONLY;
    };

11:18 - StartIO

    // StartIO
    kern_return_t SimpleAudioDevice::StartIO(IOUserAudioStartStopFlags in_flags)
    {
        __block kern_return_t error = kIOReturnSuccess;
        __block OSSharedPtr<IOMemoryDescriptor> input_iomd;

        ivars->m_work_queue->DispatchSync(^(){
            // Tell IOUserAudioObject base class to start IO for the device
            error = super::StartIO(in_flags);
            if (error == kIOReturnSuccess)
            {
                // Get stream IOMemoryDescriptor, create mapping and store to ivars
                input_iomd = ivars->m_input_stream->GetIOMemoryDescriptor();
                input_iomd->CreateMapping(0, 0, 0, 0, 0, ivars->m_input_memory_map.attach());

                // Start timers to send timestamps and generate sine tone on the stream buffer
                StartTimers();
            }
        });
        return error;
    }

11:57 - StartTimers

    kern_return_t SimpleAudioDevice::StartTimers()
    {
        ...
        // clear the device's timestamps
        UpdateCurrentZeroTimestamp(0, 0);

        auto current_time = mach_absolute_time();
        auto wake_time = current_time + ivars->m_zts_host_ticks_per_buffer;

        // start the timer, the first time stamp will be taken when it goes off
        ivars->m_zts_timer_event_source->WakeAtTime(kIOTimerClockMachAbsoluteTime,
                                                    wake_time, 0);
        ivars->m_zts_timer_event_source->SetEnable(true);
        ...
    }
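
The wake time above is offset by `m_zts_host_ticks_per_buffer`, but the session does not show how that value is derived. One plausible derivation, sketched here as an assumption: convert the zero timestamp period to nanoseconds, then to host ticks using a timebase ratio in the style of `mach_timebase_info`, where nanoseconds = ticks * numer / denom. The function name `HostTicksPerBuffer` is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: host clock ticks that elapse while one zero-timestamp
// period of audio plays at the given sample rate.
// The timebase converts ticks to nanoseconds as ns = ticks * numer / denom,
// so ticks = ns * denom / numer.
uint64_t HostTicksPerBuffer(uint32_t frames_per_period,
                            double sample_rate,
                            uint32_t timebase_numer,
                            uint32_t timebase_denom)
{
    assert(sample_rate > 0.0);
    assert(timebase_numer > 0);
    const double nanos_per_buffer =
        (static_cast<double>(frames_per_period) / sample_rate) * 1e9;
    return static_cast<uint64_t>(nanos_per_buffer * timebase_denom / timebase_numer);
}
```

For example, a one-second period with a 1/1 timebase gives 1,000,000,000 ticks, while a 125/3 timebase (a 24 MHz host clock) gives 24,000,000 ticks.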

12:27 - ZtsTimerOccurred

    void SimpleAudioDevice::ZtsTimerOccurred_Impl(OSAction* action, uint64_t time)
    {
        ...
        GetCurrentZeroTimestamp(&current_sample_time, &current_host_time);

        auto host_ticks_per_buffer = ivars->m_zts_host_ticks_per_buffer;

        if (current_host_time != 0)
        {
            // Advance the previous timestamp by one period
            current_sample_time += GetZeroTimestampPeriod();
            current_host_time += host_ticks_per_buffer;
        }
        else
        {
            // First timestamp: anchor to the time passed into the timer
            current_sample_time = 0;
            current_host_time = time;
        }

        // Update the device with the current timestamp
        UpdateCurrentZeroTimestamp(current_sample_time, current_host_time);

        // set the timer to go off in one buffer
        ivars->m_zts_timer_event_source->WakeAtTime(kIOTimerClockMachAbsoluteTime,
                                                    current_host_time + host_ticks_per_buffer, 0);
    }
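
The timestamp logic in the handler above can be isolated as a pure function, which makes the anchor-then-advance behavior easy to test without timers. This is a standalone sketch; `ZeroTimestamp` and `NextZeroTimestamp` are hypothetical names, and a host time of 0 is treated as "no timestamp yet", matching the check in the snippet.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical pair mirroring the sample time / host time values the HAL reads.
struct ZeroTimestamp {
    uint64_t sample_time;
    uint64_t host_time;
};

// Compute the next zero timestamp. The first call anchors at the timer's host
// time; later calls advance by exactly one period so the clock does not drift
// with timer wake-up jitter.
ZeroTimestamp NextZeroTimestamp(ZeroTimestamp last,
                                uint64_t timer_host_time,
                                uint64_t period_frames,
                                uint64_t host_ticks_per_buffer)
{
    if (last.host_time != 0) {
        return ZeroTimestamp{last.sample_time + period_frames,
                             last.host_time + host_ticks_per_buffer};
    }
    return ZeroTimestamp{0, timer_host_time};
}
```

Note that after the anchor, the timer's actual fire time is ignored: the host time advances by the nominal tick count, which is what keeps the simulated clock steady.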

13:03 - GenerateToneForInput

    void SimpleAudioDevice::GenerateToneForInput(size_t in_frame_size)
    {
        // Fill out the input buffer with a sine tone
        if (ivars->m_input_memory_map)
        {
            // Get the pointer to the IO buffer and use stream format information
            // to get buffer length
            const auto& format = ivars->m_input_stream_format;
            auto buffer_length = ivars->m_input_memory_map->GetLength() /
                (format.mBytesPerFrame / format.mChannelsPerFrame);
            auto num_samples = in_frame_size;
            auto buffer = reinterpret_cast<int16_t*>(ivars->m_input_memory_map->GetAddress() +
                                                     ivars->m_input_memory_map->GetOffset());
            ...
    }

13:30 - GenerateToneForInput continued

    void SimpleAudioDevice::GenerateToneForInput(size_t in_frame_size)
    {
        ...
            auto input_volume_level = ivars->m_input_volume_control->GetScalarValue();

            for (size_t i = 0; i < num_samples; i++)
            {
                float float_value = input_volume_level *
                    sin(2.0 * M_PI * frequency *
                        static_cast<double>(ivars->m_tone_sample_index) / format.mSampleRate);
                int16_t integer_value = FloatToInt16(float_value);

                // Write the same sample to every channel, wrapping at the end of the ring buffer
                for (auto ch_index = 0; ch_index < format.mChannelsPerFrame; ch_index++)
                {
                    auto buffer_index =
                        (format.mChannelsPerFrame * ivars->m_tone_sample_index + ch_index) % buffer_length;
                    buffer[buffer_index] = integer_value;
                }
                ivars->m_tone_sample_index += 1;
            }
        }
    }
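
The wraparound indexing above is the part that is easiest to get wrong, so here is a standalone sketch of the same loop over a `std::vector` instead of a mapped IO buffer. `ToInt16Sample` and `WriteSineTone` are hypothetical names, and the float-to-integer conversion is an assumed stand-in for the sample's `FloatToInt16`, whose implementation the session does not show.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed conversion: clamp to [-1, 1] and scale to the int16_t range.
int16_t ToInt16Sample(float value)
{
    if (value > 1.0f) value = 1.0f;
    if (value < -1.0f) value = -1.0f;
    return static_cast<int16_t>(std::lrint(value * 32767.0f));
}

// Write num_frames of a sine tone into an interleaved ring buffer, starting at
// the running frame index start_frame. Returns the next frame index.
size_t WriteSineTone(std::vector<int16_t>& ring, size_t channels,
                     size_t start_frame, size_t num_frames,
                     double frequency, double sample_rate, float gain)
{
    assert(channels > 0 && !ring.empty() && ring.size() % channels == 0);
    const double two_pi = 6.283185307179586;
    size_t frame = start_frame;
    for (size_t i = 0; i < num_frames; ++i, ++frame) {
        const double phase =
            two_pi * frequency * static_cast<double>(frame) / sample_rate;
        const int16_t sample =
            ToInt16Sample(gain * static_cast<float>(std::sin(phase)));
        for (size_t ch = 0; ch < channels; ++ch) {
            // The modulo wraps the write position back to the buffer start.
            ring[(channels * frame + ch) % ring.size()] = sample;
        }
    }
    return frame;
}
```

Writing six stereo frames into a four-frame buffer, for instance, leaves frames 4 and 5 in the first two slots and frames 2 and 3 in the last two, which is the overwrite pattern a reader of the ring buffer should expect.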

14:02 - IOUserAudioClockDevice.h and IOUserAudioDevice.h

    // IOUserAudioClockDevice.h and IOUserAudioDevice.h
    kern_return_t RequestDeviceConfigurationChange(uint64_t in_change_action,
                                                   OSObject* in_change_info);

    virtual kern_return_t PerformDeviceConfigurationChange(uint64_t in_change_action,
                                                           OSObject* in_change_info);

    virtual kern_return_t AbortDeviceConfigurationChange(uint64_t change_action,
                                                         OSObject* in_change_info);

15:32 - HandleTestConfigChange

    kern_return_t SimpleAudioDriver::HandleTestConfigChange()
    {
        auto change_info = OSSharedPtr(OSString::withCString("Toggle Sample Rate"), OSNoRetain);
        return ivars->m_simple_audio_device->RequestDeviceConfigurationChange(
            k_custom_config_change_action, change_info.get());
    }

    class SimpleAudioDevice: public IOUserAudioDevice
    {
        ...
        virtual kern_return_t PerformDeviceConfigurationChange(uint64_t change_action,
                                                               OSObject* in_change_info) final LOCALONLY;
    };

16:05 - PerformDeviceConfigurationChange

    // In SimpleAudioDevice::PerformDeviceConfigurationChange
        kern_return_t ret = kIOReturnSuccess;
        switch (change_action) {
            case k_custom_config_change_action:
            {
                if (in_change_info)
                {
                    // OSDynamicCast returns nullptr if the object is not an OSString
                    auto change_info_string = OSDynamicCast(OSString, in_change_info);
                    if (change_info_string)
                    {
                        DebugMsg("%s", change_info_string->getCStringNoCopy());
                    }
                }

                // Toggle between the two supported sample rates
                double rate_to_set = static_cast<uint64_t>(GetSampleRate()) !=
                    static_cast<uint64_t>(kSampleRate_1) ? kSampleRate_1 : kSampleRate_2;
                ret = SetSampleRate(rate_to_set);
                if (ret == kIOReturnSuccess)
                {
                    // Update stream formats with the new rate
                    ret = ivars->m_input_stream->DeviceSampleRateChanged(rate_to_set);
                }
            }
                break;

            default:
                ret = super::PerformDeviceConfigurationChange(change_action, in_change_info);
                break;
        }

        // Update the cached format:
        ivars->m_input_stream_format = ivars->m_input_stream->GetCurrentStreamFormat();
        return ret;
    }
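
The configuration change sequence described in the session (notify, stop IO if running, perform the change, restart IO, notify) can be modeled with a small mock to make the ordering concrete. This is a simplified, synchronous sketch: `MockAudioDevice`, its event strings, and the 44100/48000 rates are all assumptions for illustration, and in the real system the HAL drives these steps, not the device itself.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical mock that records the order of config-change steps.
class MockAudioDevice {
public:
    double sample_rate = 44100.0;   // assumed stand-in for kSampleRate_1
    bool io_running = false;
    std::vector<std::string> events;

    // Models what happens after the driver requests a configuration change.
    void RequestConfigChange()
    {
        const bool was_running = io_running;
        events.push_back("will-begin");
        if (was_running) {
            io_running = false;
            events.push_back("stop-io");
        }
        PerformConfigChange();      // only here may IO-related state change
        if (was_running) {
            io_running = true;
            events.push_back("start-io");
        }
        events.push_back("did-end");
    }

private:
    // Toggle between the two assumed rates, as the sample's handler does.
    void PerformConfigChange()
    {
        sample_rate = (sample_rate != 44100.0) ? 44100.0 : 48000.0;
        events.push_back("perform");
    }
};
```

The point the mock makes is that the sample rate never changes while `io_running` is true, which is the guarantee the request-then-perform protocol exists to provide.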
