Hi, thanks for your reply. I am running this on macOS 12.4 on an M1 Max with 64 GB of memory. I have tensorflow-macos 2.9.1 and tensorflow-metal 0.5.0 installed.

I was using a Jupyter notebook in PyCharm, and here is the code:

import os  # needed for os.path.exists below, in case helper_functions does not re-export it
import cv2
import pandas as pd
import tensorflow as tf
import psutil

# for auto-completion
import typing
from tensorflow import keras
if typing.TYPE_CHECKING:
    from keras.api._v2 import keras

from helper_functions import *
import gc
import tqdm.notebook as tqdm

# tf.config.run_functions_eagerly(False)
gc.enable()
gc.collect()

if not os.path.exists('101_food_classes_10_percent'):
    if not os.path.exists('101_food_classes_10_percent.zip'):
        !wget https://storage.googleapis.com/ztm_tf_course/food_vision/101_food_classes_10_percent.zip
    # !unzip 10_food_classes_10_percent.zip
    unzip_data('101_food_classes_10_percent.zip')

train_dir = '101_food_classes_10_percent/train/'
test_dir = '101_food_classes_10_percent/test/'

IMG_SIZE = (224, 224)

train_data = keras.preprocessing.image_dataset_from_directory(
    train_dir,
    label_mode='categorical',
    image_size=IMG_SIZE,
    seed=45)

test_data = keras.preprocessing.image_dataset_from_directory(
    test_dir,
    label_mode='categorical',
    image_size=IMG_SIZE,
    shuffle=False,
    seed=45)

# train_generator = keras.preprocessing.image.ImageDataGenerator()

# Setup data augmentation
with tf.device('CPU: 0'):
    data_augmentation = keras.Sequential([
        keras.layers.experimental.preprocessing.RandomFlip("horizontal"),  # randomly flip images on horizontal edge
        keras.layers.experimental.preprocessing.RandomRotation(0.2),  # randomly rotate images by a specific amount
        keras.layers.experimental.preprocessing.RandomHeight(0.2),  # randomly adjust the height of an image by a specific amount
        keras.layers.experimental.preprocessing.RandomWidth(0.2),  # randomly adjust the width of an image by a specific amount
        keras.layers.experimental.preprocessing.RandomZoom(0.2),  # randomly zoom into an image
        # keras.layers.experimental.preprocessing.Rescaling(1./255)  # keep for models like ResNet50V2, remove for EfficientNet
    ], name="data_augmentation")

train_data = train_data\
    .map(map_func=lambda x, y: (data_augmentation(x, training=True), y), num_parallel_calls=tf.data.AUTOTUNE)\
    .prefetch(tf.data.AUTOTUNE)

test_data = test_data\
    .prefetch(tf.data.AUTOTUNE)

valid_data = test_data\
    .take(int(0.15*len(test_data)))\
    .prefetch(tf.data.AUTOTUNE)

base_model = keras.applications.EfficientNetB0(include_top=False)
base_model.trainable = False

for i, layer in enumerate(base_model.layers):
    print(i, layer.name)

inputs = keras.layers.Input(shape=(224, 224, 3), name='input_layer')
# x = keras.layers.experimental.preprocessing.Rescaling(1./255)(inputs)
x = base_model(inputs, training=False)
x = keras.layers.GlobalAveragePooling2D(name='global_average_pooling')(x)
outputs = keras.layers.Dense(101, activation='softmax', name='output_layer')(x)
model = keras.Model(inputs, outputs)

gc.collect()

# Create checkpoint callback to save model for later use
checkpoint_path = "101_classes_10_percent_data_model_checkpoint"
checkpoint_callback = tf.keras.callbacks.ModelCheckpoint(
    checkpoint_path,
    save_weights_only=True,  # save only the model weights
    monitor="val_accuracy",  # save the model weights which score the best validation accuracy
    save_best_only=True)  # only keep the best model weights on file (delete the rest)

# def free_memory(epochs, logs):
#     gc.collect()
#
# free_memory_callback = keras.callbacks.LambdaCallback(on_batch_end=free_memory)

# Compile
model.compile(loss="categorical_crossentropy",
              run_eagerly=False,
              optimizer=tf.keras.optimizers.Adam(),  # use Adam with default settings
              metrics=["accuracy"])

# from tqdm.keras import TqdmCallback
# tqdm_callback = TqdmCallback()

# Fit
history_all_classes_10_percent = model.fit(
    train_data,
    epochs=5,
    validation_data=test_data,
    validation_steps=int(0.15 * len(test_data)),  # evaluate on smaller portion of test data
    callbacks=[checkpoint_callback],  # save best model weights to file
    max_queue_size=10,
    workers=10,
    use_multiprocessing=True)

plot_loss_curves(history_all_classes_10_percent)

model.load_weights(checkpoint_path)
print(model.evaluate(test_data))

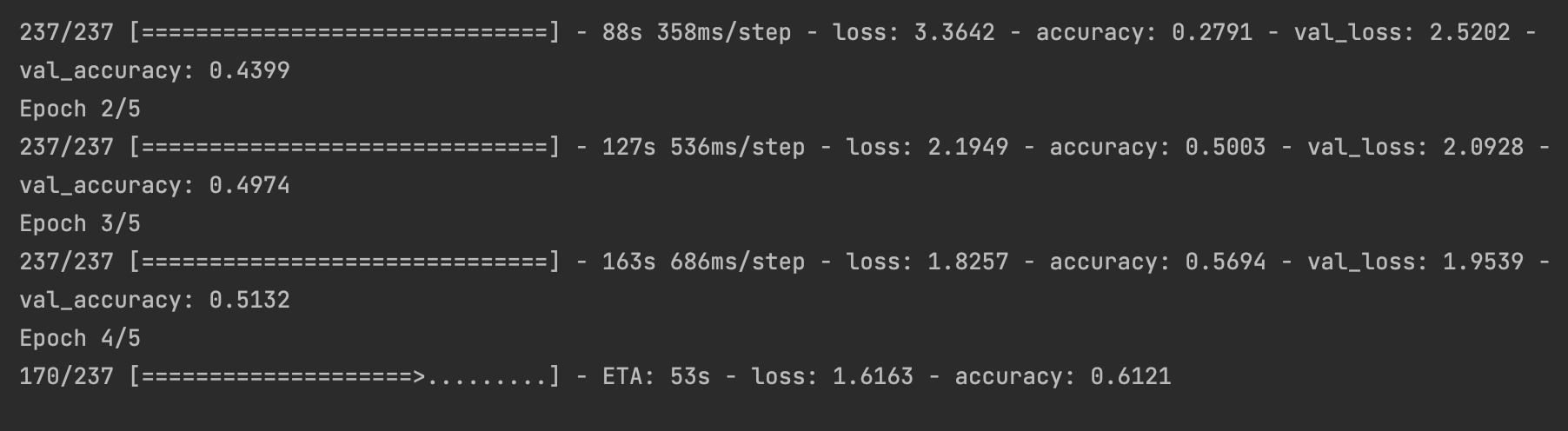

I also noticed that training slows down significantly once the first epoch has finished.
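In case it helps to put numbers on the slowdown, here is a minimal sketch of a per-epoch profiler that could be attached to `model.fit`. It is not part of the run above: `EpochProfiler` and its `records` list are hypothetical names I made up for illustration, and the memory reading assumes `psutil` is installed (it falls back to timing only if it is not).

```python
import time

try:
    import psutil  # optional; only used for the memory reading
except ImportError:
    psutil = None


class EpochProfiler:
    """Hypothetical helper: records wall-clock time and process RSS per epoch."""

    def __init__(self):
        self.records = []   # one dict per finished epoch
        self._start = None  # start time of the epoch in progress

    def on_epoch_begin(self, epoch, logs=None):
        # Same (epoch, logs) signature Keras uses for epoch-level hooks.
        self._start = time.perf_counter()

    def on_epoch_end(self, epoch, logs=None):
        seconds = time.perf_counter() - self._start
        rss_mb = None
        if psutil is not None:
            # Resident set size of the current Python process, in MiB.
            rss_mb = psutil.Process().memory_info().rss / 2**20
        self.records.append({"epoch": epoch, "seconds": seconds, "rss_mb": rss_mb})
        rss_text = f"{rss_mb:.0f} MiB" if rss_mb is not None else "n/a"
        print(f"epoch {epoch}: {seconds:.1f}s, RSS {rss_text}")


profiler = EpochProfiler()
```

It could be hooked in by adding `keras.callbacks.LambdaCallback(on_epoch_begin=profiler.on_epoch_begin, on_epoch_end=profiler.on_epoch_end)` to the `callbacks` list, which should make it easier to see whether epoch time and process memory grow together.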