You can add your own model with MLX. I used `mlx_lm.lora` to 'upgrade' Mistral-7B-Instruct-v0.3-4bit with my own content. The 'adapter' folder that it creates can be merged into the original model with `mlx_lm.fuse` and tested with `mlx_lm.generate` in Terminal. I then added the resulting Models folder to my macOS/iOS Swift app. The app works on my Mac as expected. It worked on my iPhone a few days ago, but it now crashes in the simulator. I don't want to try it on my phone until it works (again) in the simulator.

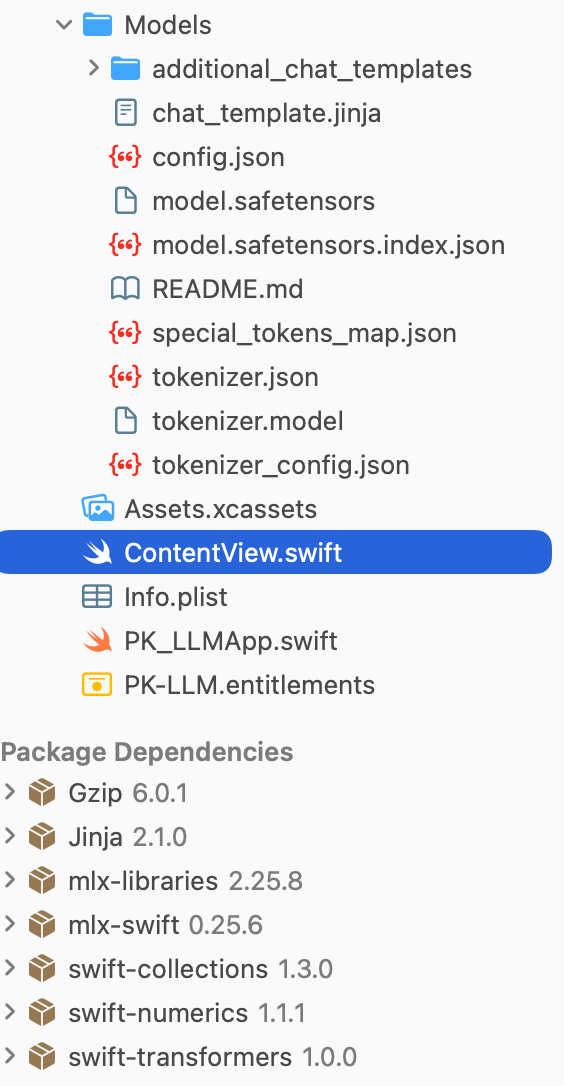

The models folder contains the files created by the fuse command.
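To rule out a missing or misplaced file, a small check like the one below can list which expected files are absent from the directory handed to the loader. This is only a sketch: `missingModelFiles` is a hypothetical helper, and the file names in `expected` are assumptions about what the fuse step wrote, so they should be replaced with the actual contents of the Models folder.

```swift
import Foundation

/// Returns the entries of `required` that do not exist inside `directory`.
/// Hypothetical helper for debugging; not part of MLX.
func missingModelFiles(in directory: URL, required: [String]) -> [String] {
    let fileManager = FileManager.default
    return required.filter { name in
        !fileManager.fileExists(atPath: directory.appendingPathComponent(name).path)
    }
}

// Assumed file names; adjust to match what the fuse command actually produced.
let expected = ["config.json", "tokenizer.json", "model.safetensors"]

if let resourceDirectory = Bundle.main.resourceURL {
    let missing = missingModelFiles(in: resourceDirectory, required: expected)
    print(missing.isEmpty ? "All expected model files found" : "Missing: \(missing)")
}
```

Calling this just before the model is loaded shows whether the files ended up at the bundle root or inside a subfolder, which depends on how the folder was added to the Xcode project.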

I'm not sure that I changed anything in the last few days, but it seems to be crashing in the `loadModel` function, with a lot of 'strange' output that I don't understand.

My `loadModel` function:

```swift
// At the top of the file
import MLXLLM
import MLXLMCommon

func loadModel() async throws {
    // return // one test for model not loaded alert

    // Avoid reloading if the model is already loaded
    let isLoaded = await MainActor.run { self.model != nil }
    if isLoaded { return }

    let modelFactory = LLMModelFactory.shared

    // Point the configuration at the model files in the app bundle
    let configuration = MLXLMCommon.ModelConfiguration(
        directory: Bundle.main.resourceURL!
    )

    // Load the model off the main actor, then assign on the main actor
    let loaded = try await modelFactory.loadContainer(configuration: configuration)
    await MainActor.run {
        self.model = loaded
    }
}
```
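Since the crash shows up in the simulator while the Mac build works, one thing worth ruling out is the simulator itself. The sketch below assumes, without confirming it here, that MLX's Metal backend is not usable in the iOS Simulator. `ModelLoadError` and `ensureNotSimulator` are hypothetical names; only the `#if targetEnvironment(simulator)` condition is standard Swift.

```swift
import Foundation

/// Hypothetical error type for this sketch.
enum ModelLoadError: Error {
    case simulatorUnsupported
}

/// Throws when the app was compiled for the simulator, so the caller can
/// show an alert instead of reaching the model-loading code.
func ensureNotSimulator() throws {
    #if targetEnvironment(simulator)
    // Assumption: loading the model in the simulator is what crashes.
    throw ModelLoadError.simulatorUnsupported
    #endif
}
```

Calling `try ensureNotSimulator()` as the first line of the load function would turn a hard crash into a catchable error on simulator builds and change nothing on a Mac or a real device.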

I plan to backtrack through previous commits to see where the problem might be. I may have used some ChatGPT help, which isn't always helpful ;-)