-

Bringing People into AR

ARKit 3 enables a revolutionary capability for robust integration of real people into AR scenes. Learn how apps can use live motion capture to animate virtual characters or be applied to 2D and 3D simulation. See how People Occlusion enables even more immersive AR experiences by enabling virtual content to pass behind people in the real world.

Resources

- Capturing Body Motion in 3D

- Effecting People Occlusion in Custom Renderers

- ARKit

- Presentation Slides (PDF)

Related Videos

WWDC21

WWDC19

-

Search this video…

Hello everyone. Thank you so much for coming.

This is a deep dive session for ARKit. We'll be showing you how we're bringing people into AR.

My name is Adrian. And, I'll be joined onstage by Tanmay.

Earlier this week, Apple announced the RealityKit framework. This is a new framework for rendering photorealistic content. It has also been built from the ground up to support AR. We also held a What's New session for ARKit 3, where we showed you many of the cool features that we're bringing into ARKit this year.

And, for this deep dive, we're going to focus on two of these.

First, I'll be showing you how people occlusion works. And then, I'm going to hand it over to Tanmay to give you the deep dive of how motion capture works.

So, let's begin.

What is people occlusion? In ARKit today, we're already able to position rendered content in the real world. However, if we look at the video behind me, we can see that something is clearly wrong.

What we would expect, is for the person closer to the camera to occlude the rendered content as it moves behind him. And, with people occlusion, we're bringing just that. We're enabling rendered content and people to correctly occlude each other in the scene. And, to understand what we need to do in order to enable that, I'm going to break down a frame. So, here we have the camera image, and we have two people standing around a table. And, we want to place a rendered object on top of this table.

So far, for ARKit 2, the way we position rendered content is by simply overlaying it on the image. And, when we do it this way, we see that we end up with an incorrect occlusion.

This is not what we would expect. And, we want to turn that red cross into a green checkmark. So, the way we do that is, we need to understand there's a person closer to the camera than the rendered object. And, when we do so, we correctly make sure that the rendered object gets occluded. And, this is essentially a depth ordering problem. And, in order to understand how we're going to solve this, I'm going to decompose the scene. So, here's the same image in an oblique angle, and let's explode the scene and look at the different depth planes that we have.

We can see that we have different things in different depth planes, mixing real and rendered. And, here we have each person in their own depth plane, with the rendered object in between.

For rendered content, the graphics pipeline already knows exactly where it is, simply by using a depth buffer.

And, in order for us to also do the same thing for the real content, we need to understand where the people are in the scene. And, in order to enable this, we're adding two new buffers.

We're adding the segmentation buffer, which tells you, per pixel, where a person is in this scene.

And, we're also giving you a corresponding depth buffer which tells you where that person is in depth. Now, the amazing thing about this feature is that the way we're generating these buffers is by leveraging the power of the A12 chip and using machine learning in order to generate these buffers, using only the camera image. And so, these two new buffers will be exposed on the ARFrame as two new properties, the segmentationBuffer, and the estimatedDepthData.

Since we want to use these buffers for people occlusion, we also have to have them generated at the same cadence as the camera frame. So, when your camera frame is running at 60 frames a second, we are also able to generate these buffers for you at 60 frames a second. We would also like these buffers to be at the same resolution as the camera image.

However, in order to enable this in real time, the neural network only sees a smaller image. And so, if you take the output of the neural network, and we magnify it, we will see that there's a lot of detail that the neural network just simply did not see.

So, in order to compensate for this, we're doing some additional processing.

We're applying matting. And, what matting does is, it's basically using the segmentationBuffer as a guide, and then looking at the camera image in order to figure out what the missing detail was.

And now, with a matted image, we're able to correctly extract the people from the scene, and together with the estimatedDepthData, we can then position them in the correct depth plane. Finally letting us solve the depth ordering problem, and we can then recompose the scene. Now, this is a lot of technology, and we want to make it as easy as possible for you developers to adopt this. So, we're enabling this feature in three different ways. First, we have RealityKit, the new framework that we announced this Dub-dub. However, if you've already been using SceneKit, and we also added support for people occlusion using the ARCSNView. And, in case you have your own renderer, or you're working with a third-party rendering, we're giving you the building blocks to enable incorporating people occlusion into your own app using the power of Metal.

So, let's look at how we'd do it in RealityKit.

RealityKit is the recommended way if you're going to build a new AR app. It has a new UI element called the ARView, which enables you-- gives you an easy-to-use API that brings photorealism to AR, further blending the border between the real and the rendered content. It also has built-in support for people occlusion. And, if you attended the What's New session, you have already seen a live demo of how you can enable people occlusion in ARView. And so, for this deep dive, we'll do a quick recap. Let's look at some code.

So, here I have my viewDidLoad function in my view controller. And, the first thing I need to do is to make sure that it's future supported.

I do that by checking my configuration, shown as my WorldTrackingConfiguration.

And, I need to look for a new property called FrameSemantics. And, the FrameSemantics we're going to use to enable people occlusion is personSegmentationWithDepth.

Once I know that this is supported on my configuration, the only thing I have to do is set the FrameSemantics on my configuration, and then, when the session starts running, the ARView will automatically pick this up, and enable people occlusion for my AR application.

So, all we had to do was really to change our configuration, using the new property that we're introducing for this year, FrameSemantics. And, as you saw in the previous example, I was using personSegmentationWithDepth. But, we can also do only PersonSegmentation in case you want to enable a weatherman-like experience.

Now, ARView is the recommended way for using people occlusion. And, the reason is, it has a deep renderer integration. And, what that means is the entire graphics pipeline is aware that there are people in the scene. And, it's therefore able to also handle transparent objects when you're using this feature.

It's doing this while also being built for optimal performance. And, if you're wondering what kind of experiences you can enable using people occlusion and RealityKit, I would like to play a short video for you.

This is Swiftstrike. It's a really cool demo that we're showing right here in Dub-dub, and I urge everyone to check it out if you haven't done so already.

So, what if you've been using SceneKit? Well, let's look at how I would enable people occlusion for SceneKit.

If you've already been using ARSCNView, we're enabling people occlusion in very much the same way we're enabling for RealityKit. All we have to do is set the FrameSemantics on our configuration, and the ARSCNView will automatically pick this up.

However, there's a difference between the SceneKit implementation and RealityKit, where SceneKit does a post-processing composition. And, what that means for you concretely is it may not work as well with transparent objects, depending on how you write depth.

Finally, what if I have my own rendering engine? Well, we want to enable you to incorporate people occlusion into your own rendering engine, or a third-party rendering engine. And, what this gives you is complete control over the composition. We want to give you as much flexibility as possible, while still giving you easy-to-use API in order to incorporate this great feature. So, before I show you how we do this, let's do a quick review.

We had the segmentationBuffer that came out of the neural network, that was working on a smaller image. And then, we applied matting in order to recapture some of that missing detail.

Well, when we do our custom composition, we're providing a new class that will generate this matte for you, using the power of Metal, giving you the matte as a texture for you to incorporate into your own pipeline. Let's look at an example of how we can do just that.

So, here I have my custom composition function. And, the first thing I do is to make sure whether this feature is supported. And, once I've done that, all I have to do is call generateMatte, and I give it the frame and the commandBuffer, and it gives me back the Metal texture that I can then use when I do my own custom composition, and finally schedule everything to the GPU. So, the class that we're bringing to ARKit is called ARMatteGenerator, and as you saw in the example, it takes the ARFrame and the commandBuffer, and it gives you back a texture that you can use. However, we're not done yet. Just like the segmentationBuffer was of a lower resolution, the estimatedDepthData is also lower, and if we simply magnify it, and overlay it on our matted image, we will see that there might be a mismatch. We can have depth values where there's no alpha value in the matte, and more importantly, we can have alpha values in the matte where there's no corresponding depth value.

Now, since the matte has already recaptured some of the missing detail, we can't really modify the alpha itself. Instead, we need to modify the depth buffer. So, let's go back to my previous example, and see how we do just that. So, here we have the line where I added-- we generated the matte in order for me to use for my custom composition. And so I add an addition function call to generateDilatedDepth. And, much in the same way, it takes the frame in the command buffer, and gives me back a texture. And, if you look at the API, it looks very similar to how we generated the matte, giving us the texture, taking the frame and the command buffer.

And, what this does is ensures that for every alpha value we find in the matte, we will have a corresponding depth value that we can then use when we do our final composition.

So, with the dilated depth, and the matte, we're now finally able to move on to composition.

Composition is usually done on the GPU in the fragment shader. So, let's look at an example shader of how I bring everything together. I begin by doing what we would usually do for an AR experience. I sample the camera image, as well as the rendered texture, but since we're going to do occlusion, I'm also sampling the rendered depth. And then, I do what I would usually do in AR, I simply overlay the rendered content on the real image, given the rendered alpha.

The new part, in order to enable people occlusion, is I also sample my matte, and my dilatedDepth.

And then, I make sure to compare the dilatedDepth with the renderedDepth. And, if I find that there's something in the dilatedDepth that's closer to the camera, meaning that there might be a person there, I then mix the camera back, given the value of the matte. However, if the render content is closer to the camera, I simply do what we always do, and have the render content overlaid on top of it. And, with all of this, we're finally able to do people occlusion in our own custom renderer.

Since this feature is using the neural engine, and machine learning, it's supported on A12 devices and later.

It also works best in indoor environments. And, in all the videos you saw, you saw people standing in the scene, but this feature also works for your own hands and feet.

It also works for multiple people in the scene.

Before I hand it over to Tanmay to show you all about motion capture, let's do a quick recap. So, with people occlusion, we're enabling correct occlusion between rendered and real content for people.

The recommended way, if you're building a new app, is to use RealityKit and ARView, since this does the deep integration.

If you already have an app using SceneKit, we've also added support for people occlusion in the ARSCNView.

And, if you have your own renderer, we're providing you with a new API called the ARMatteGenerator, so you can do your own compositing and incorporating into your own renderer.

And, with that, I'd like to hand it over to Tanmay to give you a deep dive into motion capture. Thank you, Adrian. Hello everyone. I'm Tanmay, and today I'm going to introduce you to this new piece of technology that we are bringing this year, motion capture. So, what is motion capture? It's just a process of capturing movement of people.

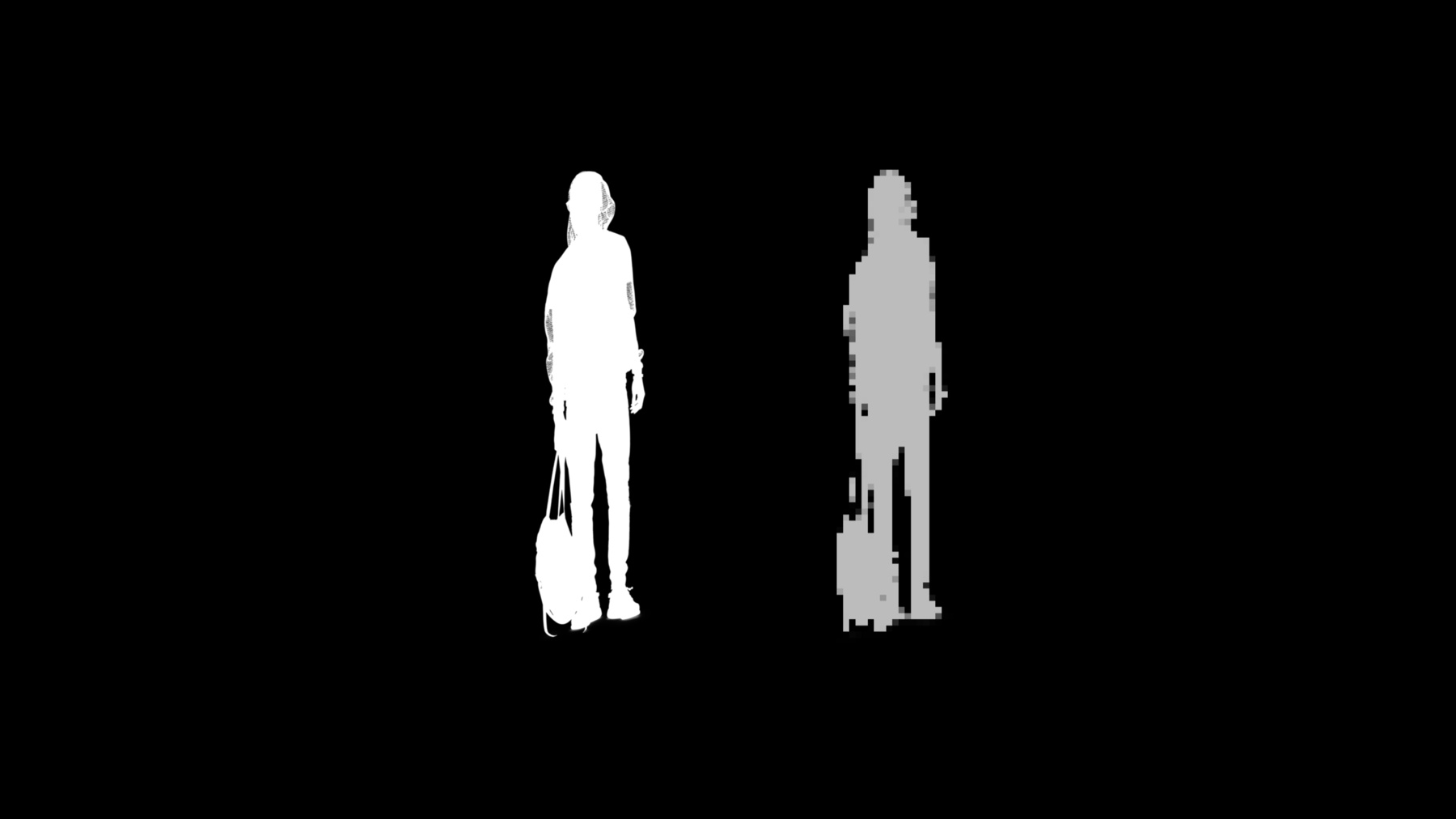

You see a person, you capture all the movements, and you use that motion to animate a virtual character so that the character performs the same set of actions as the person you're looking at. And, we're trying to enable this technology in your applications.

Now, let's dive in.

We would like the character to mimic the person that you're looking at. But, before we do that, we need to understand what exactly are we trying to animate? What does a character entail? So, this is an example of a virtual character. Let's take an x-ray of it and see what's inside it. You can see that it has two main components. The outer coating, which is called a mesh. And, the bony structure inside, which is called a skeleton. And, these two combined together give you the complete character.

The skeleton is the driving force behind the entire character. It contains all the limbs that we use for motion, just like people.

And so, in order to animate a character, we need to animate the skeleton with the same motion.

So, what's the first step here? We have a person. And, the first step here is to have the skeleton mimic this person. And, this is what it looks like. And, once the skeleton moves, the character follows suit.

And, you have the entire virtual character mimicking you automatically. So, how do we do it? How do we animate this skeleton? Given this image, we use machine learning technology to first estimate the pose of the person in the image. And, we use that pose to build a full-fledged, high-fidelity skeleton. And, finally, we use this skeleton, and combine it with a mesh to give you the final character. And, we interface all of this, everything that you're looking here, through ARKit. To get a complete overview, we are introducing the technology of motion capture in this year's ARKit. And, with that, you can track movement of people in real time, on your devices.

It works seamlessly with RealityKit, which gives you the ability to drive animated characters, and render them on your screens.

It's powered by machine learning and runs smoothly on Apple Neural Engine.

And, we have made it available on A12 devices and beyond.

So, now you have this technology, what can you use it for? What can you enable with it? Well, for starters, you can always have a virtual character follow and track a person. You can have your own puppet in AR. And, this is something that we enable out of the box. But, beyond that, there are a lot of other use cases as well, that you can enable with it.

For example, you can enhance it further by creating your own models for detecting what actions people are doing. You can use it to build tools for analyzing motions like how good a golf swing is, or is your posture correct, or are you performing an exercise in a correct way or not? Also, now since a person has a virtual presence in the scene, you can enable interaction with any virtual object that you like. And, it's supported for all the virtual objects present in the scene. And, finally, you can also use it for image and video analytics. Because we also provide 2D version of the skeleton as well in image space, and you can build it to use editing tools, or for semantic image understanding. And, this doesn't even cover the entire set of possibilities that you can enable with it remotely. There are so many other things that you can do with it. Now, let me show you how you can leverage motion capture for your applications.

Depending on the use cases, we have three different ways of using it.

The first one is motion capture in RealityKit.

If you just want to quickly animate a character, this high-level API will help you get there. If you want to enable advanced use cases, like activity recognition, analysis, or interaction with 3D objects in a scene, we've also provided low-level APIs to extract each and every element of the skeleton.

And, it's for you to use it. And, finally, if your use case requires 2D versions of the skeleton in image space, maybe for doing semantic image analysis, or for editing tools, or for something else, we've provided access to that as well. So, let's get started with motion capture in RealityKit.

As you all know, we introduced RealityKit this year, and given our API in RealityKit, in just few lines of code, you can track a person and add a character to mimic.

We've provided a very simple and easy-to-use API for this purpose.

You can add your own custom characters as well, based on the structure of our provided example. So, you can use any mesh, any character that you want, provided that it is based on the structure of our provided example in the sample bundle.

Finally, the tracked person is very easy to access here, via an element called AnchorEntities, which are basically just building blocks of the scene. And, it automatically gathers all the required transforms that we need for motion capture. So, to start with, every element of motion capture that you get in ARView, which as you all know, is the main UI element combining AR and RealityKit together, is powered by a new configuration called ARBodyTrackingConfiguration. And, once you enable this, you start adding anchors containing bodies, and this entire thing is encapsulated in a special type of anchor entity called bodyTrackedEntity. So, let me give you a brief description of what this bodyTrackedEntity actually is.

A body tracked entity represents one single person. It contains the underlying skeleton and its position. It's tracked in real time and gets updated every frame. And, finally it combines the skeleton to a given mesh automatically and give you the complete character. Now, let's go to the code to animate a character quickly, and you'll see how easy it is. You just have to follow three steps.

The first step is to load a character.

To automatically load a tracked human, asynchronously, you just need to call entity.loadBodyTrackedAsync function.

And, you can use .sync to either catch errors in the received completion block, or if everything works fine, you can get your character in the received value block. And, this character is of the type bodyTrackedEntity.

We have a file called robot.usdz in our sample code bundle, and if you provided the file bot to this file, it will automatically add the skeleton to the robot mesh, and provide you with a character.

So, moving on, the second step is to get the location where you would like to put your character on.

For example, let's say, if you would like to put the character right on top of the person that you are tracking, you can get the location by using AnchorEntity with the argument .body. And, please note that this is just an example, and it can be any other location as well, be it on the floor, on a tabletop, or anywhere else. You just have to provide an anchor containing that location. And, the character will still mimic the person. And, finally, just add your character to the location, and voila. You can drive your character. It's that simple.

So, you might be wondering that, how can you replace this robot with any other custom character that you like? Like I said before, we have a file called robot.usdz. And, this usdz file has an entire structure. And, if your custom model follows the same structure, then you can use it. And, just to let you know that the underlying skeleton that we provide is a very high-fidelity skeleton consisting of 91 joints. And, for your information, here are all of them. It's a lot, right? And, these are just regular joints, contained in arms, legs, and so on. And, if your character follows this naming scheme, you can use it directly in RealityKit.

That was a quick and easy way to load a tracked person and drive the character. Now, let's move on to low-level APIs for advanced access.

Here we will provide you with access to each and every element of the skeleton. And, we interface it through a very easy-to-use API. You can enable all those advanced use cases that we discussed earlier, which requires either using the skeleton data for analysis, or as an input to your models.

And, finally the skeleton that we provide is contained in a new type of anchor that we're introducing, called ARBodyAnchor.

And, ARBodyAnchor is the starting point of the entire data structure that we provide.

And, this is what the data structure looks like. We have ARBodyAnchor on the top, and it contains all the skeletal elements.

So, let's just walk through this structure, and start at the top. ARBodyAnchor is just a regular anchor. It contains a geometry. And, in this case, the geometry itself is the skeleton. And, this is what the skeleton looks like. It consists of nodes and edges, just like any other geometry.

It also contains a transform. And, this transform is just the location of the anchor in rotation and translation matrix form.

Accessing the skeleton is what we're interested in here, mainly. So, let's just get right into it. What is this skeleton that we are showing you? It's a geometry, composed of nodes, which represent the joints, the green points and the yellow points that you see here. And, it contains edges, which represent the bones, the white lines that you see here. They show you how the joints are connected. And, once all the joints are connected, you form this entire geometry.

We call this skeleton as ARSkeleton, and you can simply access it by using skeleton property of ARBodyAnchor.

The root node of this skeleton, the topmost point in this geometry in the geometry hierarchy, is the hip joint, which you can see here.

So, let's move on and have a look at its structural definition.

Definition, what we call here, is just a property of the skeleton, containing two components. The names of all the joints present in the skeleton, and the connections between them, which show you how to connect those joints together. So, let's just have a look at both of these properties.

Here, we are labeling some of the joints in the skeleton, and as you can see, that these joints have semantically meaningful names, like left shoulder, right shoulder, left hand, right hand, and so on. Similar to people.

Here I would like to point out that the green points are the ones that we control and are estimated from the person that you're looking at, and the yellow ones are not tracked. They just follow the motion of the closest green parent.

Zooming in, focus on the right arm, and consider these three joints. Right hand, right elbow and right shoulder. They follow a parent-child relationship. So, your hand is a child of elbow. And elbow is a child of shoulder. And, this continues for rest of the skeleton as well, thus giving you the complete hierarchy.

We know now what all the joints are called, and how to connect them. But, how do we get their locations? We've provided two ways to access the locations of all the joints.

The first one is relative to its parent. If you want the location of your right hand relative to the elbow, you can have that transform by calling localTransform function, and provide it with the argument .rightHand. And, if you want to transform relative to the root, which in our case is the hip joint, you can call modelTransform function, and provide, again, same argument, .rightHand.

Now, if you don't want to access the transforms of all the joints individually, but instead you want a list containing transforms of all the joints, you can also do that by simply using localTransforms property and modelTransforms property. This will give you a list of transforms of all the joints. And, if you want that relative to the parent, you can use localTransforms, and if you want relative to the root, you can use modelTransforms. So, now that we had a good look over the skeleton, let's go to the code and learn how to use each and every element. You start by iterating over all the anchors in the scene. And, just look for the bodyAnchor.

Once you have the bodyAnchor, you might want to know where that bodyAnchor is located in the world.

And, you can use the transform property of the bodyAnchor to get that. And, since in our geometry, hip is the root, so the bodyAnchor.transform will give you the world position of the hip joint.

Once you have the transform of the anchor, you might need to access the geometry of the anchor, and you can do that by using skeleton property of the anchor. Once you have this geometry, you need all the joints. You need all the nodes. And, to get a list of transforms of all the joints, that is the list of locations of all the joints, you can simply use jointModelTransforms property of the skeleton.

Once you have this list, you can iterate over this list and access transform of every element. Or, every joint, in this case.

So, iterating over all the joints, you can access the parentIndex of each joint by using the parentIndices property in the definition. And, just check if the parent is not the root. Because the root is the topmost point of the hierarchy, so it doesn't have a parent. So, if the parent is not the root, you can access the transform of the parent by using the same jointTransforms list, but just index it with the parentIndex. And, that's it. This gives you every child pair in the entire hierarchy, and once you have every child-parent pair in the hierarchy, you have the entire hierarchy of the skeleton. And, you can now use it however you want. So, let's just run this code. Let's just take this, and simply draw the skeleton, and see what it looks like.

This is what it looks like. All we did was, we took the entire hierarchy. We took all parent-child points, and we just drew the skeleton, that's it. And, it starts to mimic the person automatically. And, this is the most basic thing that you can do, because once you have this entire skeleton hierarchy, you can use it for many other use cases that we discussed earlier.

This technology is at your disposal, wherever your imagination takes you.

So far, we've only talked about 3D objects in world space. But, in case you want 2D versions of the skeleton in image space, we have provided an API for that as well.

Here, we provide you with detailed access to each and every element in 2D space. We also provide all the skeleton joints as normalized image coordinates.

We have a very easy to use API for this as well. And, you can use it for semantic image analysis, or for building editing tools both for images and videos. And, finally this entire structure is interfaced through an object called ARBody2D. And, this is what ARBody2D object looks like when visualized. ARBody2D object contains the entire skeletal structure.

And, this is what the structure looks like. So, you have the object itself on the top. And then, again, similar to its 3D counterpart, all the skeletal elements under that object.

So, let's walk through this structure, and just learn about these elements quickly. Starting with the ARBody2D object on top, there are two ways that you can access this object.

If you're already working in 2D space, the default way is to access it via ARFrame. And, you can simply do that by using detectedBody property of the ARFrame.

And, here the person is an instance of ARBody2D object.

For your convenience, if you're already working in 3D space, if you're already working with a 3D skeleton, and for some reason, you want the corresponding 2D skeleton in image space as well, we have provided a direct way to access it by simply using referenceBody property of ARBodyAnchor.

And, this gives you the ARBody2D object as well. After accessing the ARBody2D object, we can extract skeleton from it by simply using skeleton property, and this is the visualization of that skeleton.

So, like I said, this skeleton is present in normalized image space. So, if this is your image grid, and the top left is 0,0 and bottom right is 1,1, all the points on this diagram are in the range of 0 and 1. Both in x and y direction.

The green points that you see here are called landmarks. Note that here we don't call them joints, although they do represent joints. We just call them landmarks, because they are pixel locations on image. Similar to the 3D version, it contains an object called definition, describing what the landmarks are called in the skeleton, and how to connect those landmarks. In this skeleton there are 16 joints, and similar to 3D, they have semantically meaningful names like left shoulder, right shoulder, left hand, right hand, and so on. The root node is still the hip joint here. Again, similar to the 3D. So, focusing on the right arm, we can see that the hand is a child of right elbow, and elbow is a child of right shoulder. And, this is, again, similar parent-child relationship that you saw in the 3D version as well. And, the similar hierarchy is formed here. So, having all that information with us, let's quickly walk through this structure via code now.

We start with accessing ARBody2D object.

So, once you have your ARFRame, you can simply use detectedBody property to get ARBody2D object. And now, once you have ARBody2D object, you can access the entire skeletal structure from underneath it. You can extract the geometry by using person.skeleton. In this case, person refers to the ARBody2D object. And, the definition of the skeleton, which again, comprises of the names of all the joints, and the information of how to connect those joints, is present in the definition, which you can access it by using definition property of the skeleton. Once you have this information, you might need to know where those landmarks are located. And, similar to the 3D version, we have a property called jointLandmarks, which gives you a list of locations of all those green points that you see here.

But, please note that, in this case, the green points are in 2D, so they're in image space. They're normalized pixel coordinates. And, once you have that list, you can iterate over all these landmarks. And, for each landmark, you can access its parent by calling parentIndices property in the definition. And, again, just check if the parent is not the root, because the root is the topmost point of the hierarchy. And, if the parent is not the root, you can access its transform by using jointLandmarks list, and indexing it with the parentIndex.

And, again, in this way, you have the transforms of every child-parent pair in the skeletal hierarchy. And, you can, again, use it however you want, and you can continue from here and build your own ideas, which brings us to the conclusion. We've introduced motion capture in AR this year. We've provided access to tracked person in real time. We have provided both 3D and 2D skeletons for you guys to use it, and that is how we interface the pose of the person. We've enabled character animation out of the box. And, it runs seamlessly in RealityKit. We have a RealityKit API that we discussed earlier for quickly animating a character, and like I mentioned earlier as well, you can use your own custom characters as well, as long as it's based on the structure of our provided example. And, we've provided an ARKit API for all the advanced use cases that you might think of, like recognition tasks, or analysis tasks.

And, that brings us to the end of the session. We presented two new features in this session, person occlusion, and motion capture. And, we've provided APIs for both of these features.

For more information from today's session, please visit our website, and please feel free to download the sample code and use it.

We will be in the lab tomorrow, so come visit us to get all your questions answered.

Thank you. [ Applause ]

-