-

What's new in Vision

Learn about the latest updates to Vision APIs that help your apps recognize text, detect faces and face landmarks, and implement optical flow. We'll take you through the capabilities of optical flow for video-based apps, show you how to update your apps with revisions to the machine learning models that drive these APIs, and explore how you can visualize your Vision tasks with Quick Look Preview support in Xcode.

To get the most out of this session, we recommend watching “Detect people, faces, and poses using Vision” from WWDC21.Resources

Related Videos

Tech Talks

WWDC22

WWDC21

-

Search this video…

♪ ♪ Hi, my name is Brett Keating, and it's my pleasure to be introducing you to what is new in the Vision framework. You may be new to Vision. Perhaps this is the first session you've seen about the Vision framework. If so, welcome. For your benefit, let's briefly recap some highlights about the Vision framework. Some Vision framework facts for you. Vision was first introduced in 2017, and since then, many thousands of great apps have been developed with the technology Vision provides. Vision is a collection of computer vision algorithms that continues to grow over time and includes such things as face detection, image classification, and contour detection to name a few. Each of these algorithms is made available through an easy-to-use, consistent API. If you know how to run one algorithm in the Vision framework, you know how to run them all. And Vision takes full advantage of Apple Silicon on all of the platforms it supports, to power the machine learning at the core of many of Vision's algorithms. Vision is available on tvOS, iOS, and macOS; and will fully leverage Apple Silicon on the Mac.

Some recent additions to the Vision framework include Person segmentation, shown here.

Also hand pose estimation, shown in this demo.

And here is our Action and Vision sample app, which uses body pose estimation and trajectory analysis.

Our agenda today begins with an overview of some new revisions, which are updates to existing requests that may provide increased functionality, improve performance, or improve accuracy.

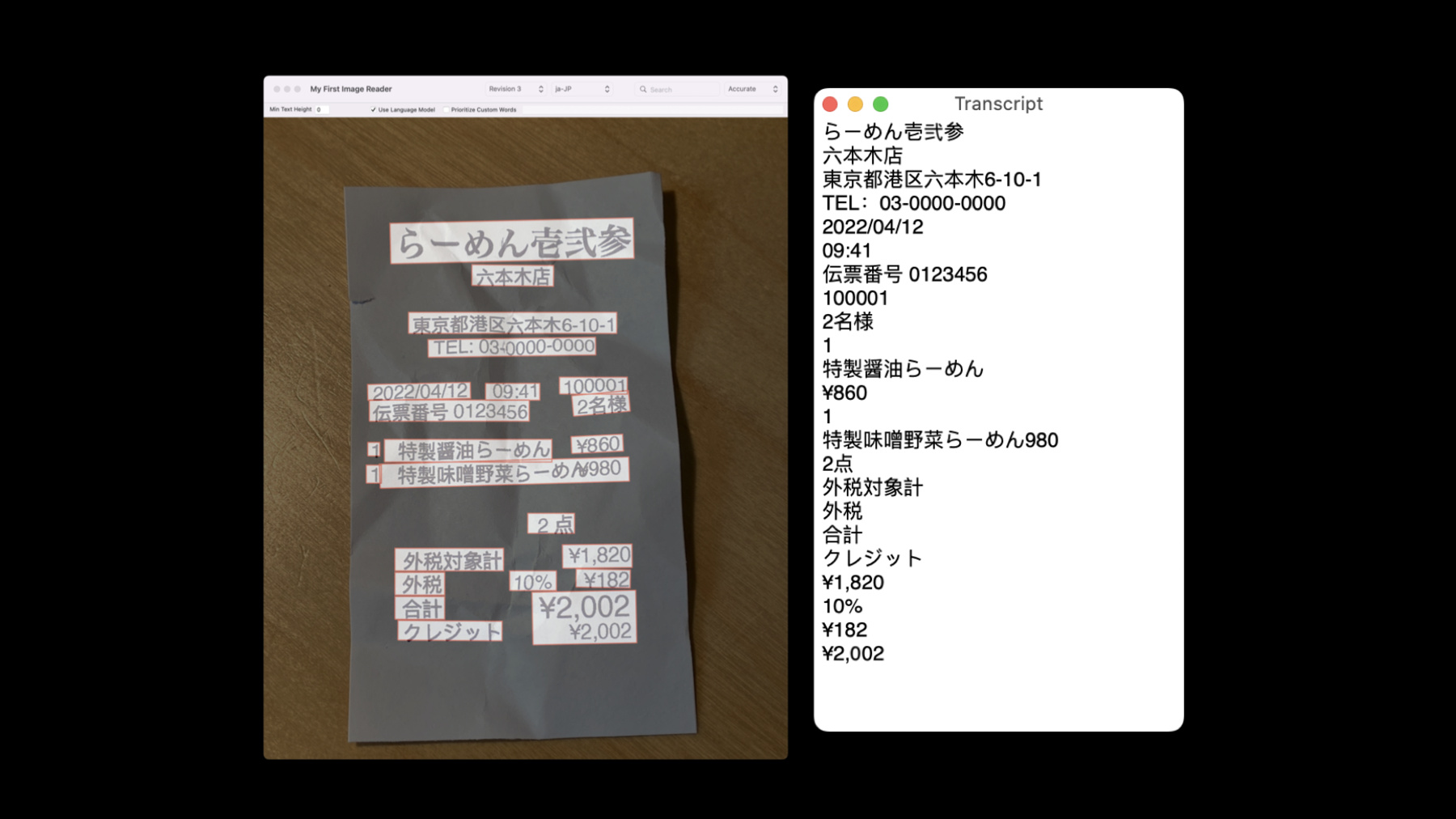

First, we have a new revision for text recognition. This is the third revision, given by VNRecognizeTextRequestRevision3. This is the text recognizer that powers the amazing Live Text feature. The text recognizer supports several languages, and you may discover which languages are supported by calling supportedRecognitionLanguages. We have now added a few new languages, and I'll show you a couple examples. We are now supporting the Korean language in Vision. Here is an example of Vision at work transcribing a Korean receipt. And here is a corresponding example for Japanese, also showing the results of Vision's text recognition on this now-supported language. For text recognition, we have a new automatic language identification. You may still specify the recognition languages to use using the recognitionLanguages property. But suppose you don't know ahead of time which languages your app user might be trying to recognize. Now, but only for accurate recognition mode, you may ask the text recognizer to automatically detect the language by setting automaticallyDetectsLanguage to true.

It's best to use this just for such a situation where you don't know which language to recognize, because the language detection can occasionally get this wrong. If you have the prior knowledge about which language to recognize, it's still best to specify these languages to Vision and leave automaticallyDetectsLanguage turned off.

Next, we have a new third revision for our barcode detection, called VNDetectBarcodesRequestRevision3. This revision leverages modern machine learning under the hood, which is a departure from prior revisions. Barcodes come in a variety of symbologies, from barcodes often seen on products in stores, to QR codes, to specialty codes used in healthcare applications. In order to know which symbologies Vision supports, you may call supportedSymbologies.

Let's talk about performance. Partly because we are using ML, we are detecting multiple codes in one shot rather than one at a time, so the request will be faster for images containing multiple codes. Also, more codes are detected in a given image containing many codes, due to increased accuracy. And furthermore, there are few, if any, duplicate detections. The bounding boxes are improved for some codes, particularly linear codes such as ean13, for which a line was formerly returned. Now, the bounding box surrounds the entire visible code.

Finally, the ML model is more able to ignore such things as curved surfaces, reflections, and other artifacts that have hindered detection accuracy in the past.

Both of these new revisions, for text recognition and for barcode detection, form the technological foundations for the VisionKit Data Scanner API, which is a drop-in UI element that sets up the camera stream to scan and return barcodes and text. It's really a fantastic addition to our SDK, and I highly recommend you check out the session about it to learn more. The final new revision I'll tell you about today is a new revision for our optical flow request called VNGenerateOpticalFlowRequestRevision2. Like the barcode detector, this new revision also uses modern machine learning under the hood.

Although optical flow is one of the longest studied computer vision problems, you might not be aware of what it does, compared to detection of things which form part of all of our daily lives, like text and barcodes.

Optical flow analyzes two consecutive images, typically frames from a video. Depending on your use case, you might look at motion between two adjacent frames, or skip a few frames in between, but in any case, the two images should be in chronological order.

The analysis provides an estimate of the direction and magnitude of the motion, or by how much parts of the first image need to "move," so to speak, to be positioned correctly in the second image. A VNPixelBufferObservation is the result, which represents this motion at all places in the image. It is a two-channel image. One channel contains the X magnitude, and the other contains the Y magnitude. Together, these form 2D vectors at each pixel arranged in this 2D image so that their locations map to corresponding locations in the images that were provided as input. Let's have a look at this visually. Suppose you have an incoming video and several frames are coming in, but let's look at these two images in particular. Here we have a dog running on the beach. From the left image to the right image, it appears the dog has moved a bit to the left. How would you estimate and represent this motion? Well, you would run optical flow and arrive at something akin to the image below. The darker areas are where motion has been found, and notice that it does indeed look just like the shape of the dog. That's because only the dog is really moving in this scene. We are showing the motion vectors in this image by using "false color," which maps the x,y from the vectors into a color palette. In this false color representation, "red" hues happen to indicate movement primarily to the left. Now that you've seen an example from one frame, let's see how it looks for a whole video clip. Here we compute optical flow for a short clip of this dog fetching a water bottle on a beach. On the left is the result from revision 1. On the right is the result from our new ML-based revision 2. Hopefully some of the improvements in revision 2 are clear to see. For one thing, perhaps most obviously, the water bottle's motion is captured much more accurately. You might also notice improvements in some of the estimated motion of the dog. I notice improvements in the tail most clearly but also can see the motion of his ears flapping in the new revision. The first revision also contains a bit of background noise motions, while the second revision more coherently represents the backgrounds as not moving. Hopefully that example gave you a good idea what this technology does. Now let's dive in a bit on how you might use it in your app. Clearly the primary use case is to discover local motion in a video. This feeds directly into security video use cases, where it's most important to identify and localize motions that deviate from the background, and it should be mentioned that optical flow does work best for stationary cameras, such as most security cameras. You might want to use Vision's object tracker to track objects that are moving in a video, but need to know where to initialize a tracker. Optical flow can help you there as well. If you have some computer vision or image processing savvy of your own, you might leverage our optical flow results to enable further video processing. Video interpolation, or video action analysis, can greatly benefit from the information optical flow provides. Let's now dig into some important additional differences between revision 1 and revision 2.

Revision 1 always returns optical flow fields that have the same resolution as the input. Revision 2 will also do this by default. However, there is a tiny wrinkle: partially due to the fact that revision 2 is ML-based, the output of the underlying model is relatively low resolution compared to most input image resolutions. Therefore, to match revision 1 default behavior, some upsampling must be done, and we are using bilinear upsampling to do this. Here is a visual example explaining what upsampling does. On the left, we have a zoomed-in portion of the network output, which is low resolution and therefore appears pixelated. The overall flow field might have an aspect ratio of 7:5. On the right, we have a similar region taken from the same field, upsampled to the original image resolution. Perhaps that image also has a different aspect ratio, let's say 16:9. You will notice that the edges of the flow field are smoothed out by the bilinear upsampling. Due to the potential for the aspect ratios to differ, keep in mind that as part of the upsampling process, the flow image will be stretched in order to properly correspond the flow field to what is happening in the image. When working with the network output directly, you should account for resolution and aspect ratio in a similar fashion when mapping flow results to the original images.

You have the option to skip the upsampling by turning on keepNetworkOutput on the request. This will give you the raw model output. There are four computationAccuracy settings you may apply to the request in order to choose an available output resolution. You can see the resolutions for each accuracy setting in this table, but be sure to always check the width and height of the pixel buffer contained in the observation.

When should you use network output, and when should you allow Vision to upsample? The default behavior is best if you already are using optical flow and want the behavior to remain backward compatible. It's also a good option if you want upsampled output, and bilinear is acceptable to you and worth the additional memory and latency. Network output is best if you don't need full resolution and can form correspondences on the fly or just want to initialize a tracker. Network output may also be the right choice if you do need a full resolution flow, but would prefer to use your own upsampling methods. That covers the new algorithm revisions for this session. Let's move on to discuss some spring cleaning we are doing in the Vision framework and how it might impact you. We first introduced face detection and face landmarks when Vision was initially released five years ago, as "revision 1" for each algorithm. Since that time we've released two newer revisions, which use more efficient and more accurate technologies. Therefore, we are removing the first revisions of these algorithms from Vision framework, while keeping the second and third revisions only. However, if you use revision 1, never fear. We will continue to support code that specifies revision 1 or code that has been compiled against SDKs which only contained revision 1. How is that possible, you may ask? Revision 1 executes an algorithm under the hood that I have called "the revision 1 detector" in this diagram. In the same way, revision 2 uses the revision 2 detector. What we have done for this release of Vision is to satisfy revision 1 requests with the output of the revision 2 detector. Additionally, the revision 1 request will be marked as deprecated. This allows us to remove the old revision 1 detector completely, allowing the Vision framework to remain streamlined. This has several benefits, not the least of which is to save space on disk, which makes our OS releases and SDKs less expensive to download and install. All you Vision experts out there might be saying to yourselves, "But wait a minute, "revision 2 returns upside down faces while revision 1 does not. Couldn't this behavior difference have an impact on some apps?" It certainly would, except we will be taking precautions to preserve revision 1 behavior. We will not be returning upside-down faces from the revision 2 detector. Similarly, the revision 2 landmark detector will return results that match the revision 1 landmark constellation. The execution time is on par, and you ought to experience a boost in accuracy. In any case, this change will not require any apps to make any modifications to their code, and things will continue to work.

Still, we have a call to action for you. You shouldn't be satisfied with using revision 1 when we have much better options available. We always recommend using the latest revisions, and for these requests, that would be revision 3.

Of course the main reason for this recommendation is to use the latest technology, which provides the highest level of accuracy and performance available, and who doesn't want that? Furthermore, we have established and communicated several times, and we reiterate again here, the best practice of always explicitly specifying your revisions, rather than relying upon default behaviors. And that's what we've done for our spring cleaning. Now let's talk about how we've made it easier to debug apps that use the Vision framework. We've added Quick Look Preview support to Vision. What does this mean for Vision in particular? Well, now you can mouse over VNObservations in the debugger, and with one click, you can visualize the result on your input image. We've also made this available in Xcode Playgrounds. I think the only way to really explain how this can benefit your debugging is to show you. Let's move to an Xcode demo.

Here we have a simple routine that will detect face landmarks and return the face observations. First, we set up a face landmarks request. Then, if we have an image ready to go in our class, we display it. Then, we declare an array to hold our results.

Inside the autoreleasepool, we instantiate a request handler with that image, and then perform our request. Assuming all went well, we can retrieve the results from the request.

I will run it and get to a breakpoint after we retrieve the results. So now I'm in the debugger. When I mouse over the results, the overlay shows I've detected three faces. That's great. I do have three faces in my input image. But how do I know which observation is which face? That's where the Quick Look Preview support comes in. As I go into this request, I can click on each "eye" icon in order to visualize the result. The image appears with overlays drawn for the landmarks constellation and for the face bounding box.

Now you know where the first observation is in the image.

I can click on the next one to draw overlays for the second observation and for the third observation.

Continuing to the next breakpoint, we run some code that prints the face observations to the debug console. As you can imagine, here in the debug console where the face information is printed, it's pretty hard to immediately visualize in your mind which face is which or whether the results look correct just from these printed coordinates.

But there is one more thing to point out here. Notice that I've somewhat artificially forced the request handler out of scope by introducing an autoreleasepool. Now that the request handler is out of scope, let's use the Quick Look Preview support again on the results. Well, what do you know, the overlays are still drawn, but the image is not available.

This is something to keep in mind: the image request handler that was used to generate the observations must still be in scope somewhere in order for Quick Look Preview support to use the original image for display. That is because the image request handler is where your input image resides. Things will continue to work, but the image will not be available. This Quick Look preview support can be especially useful in an Xcode Playgrounds session, while doing quick experiments to see how things work. Let's have a look at that now. Here we have a simple Playground set up to analyze images for barcodes. Rather than go through this code, let's just make a couple modifications and check out how it impacts the results. We'll start off by using revision 2 on an image with two barcodes of different symbologies. All the results at once are displayed if we ask for all the results, and just the first result is also displayed at the end.

Notice that revision 2 has a couple issues. First, it missed the first barcode. Also, it detected the second barcode twice. And it gives you a line through the barcode rather than a complete bounding box.

What happens if we change to revision 3 now, instead of revision 2? First of all, we detect both barcodes. And, instead of a line, we are given complete bounding boxes.

What is great about this Quick Look Preview support is that we've removed the need for you to write a variety of utility functions to visualize the results. They can be overlaid directly on your images in the debugger or in an Xcode Playground.

So that is Quick Look Preview support in Vision. Now you can more easily know which observation is which. Just be sure to keep the image request handler in scope in order to use it with your input image, and hopefully the Xcode Playground support will make live tuning of your Vision framework code much easier. We've covered some important updates to Vision today. To quickly review, we've added some great new revisions to text recognition, barcode detection, and optical flow.

As we continue to add updated revisions, we will also be removing older ones, so keep your revisions up-to-date and use the latest and greatest technology. We've also made debugging Vision applications much easier this year with Quick Look Preview support. I hope you enjoyed this session, and have a wonderful WWDC. ♪ ♪

-