
Take ScreenCaptureKit to the next level

Discover how you can support complex screen capture experiences for people using your app with ScreenCaptureKit. We'll explore many of the advanced options you can incorporate including fine tuning content filters, frame metadata interpretation, window pickers, and more. We'll also show you how you can configure your stream for optimal performance.

Resources

Related Videos

WWDC23

WWDC22

-

Search this video…

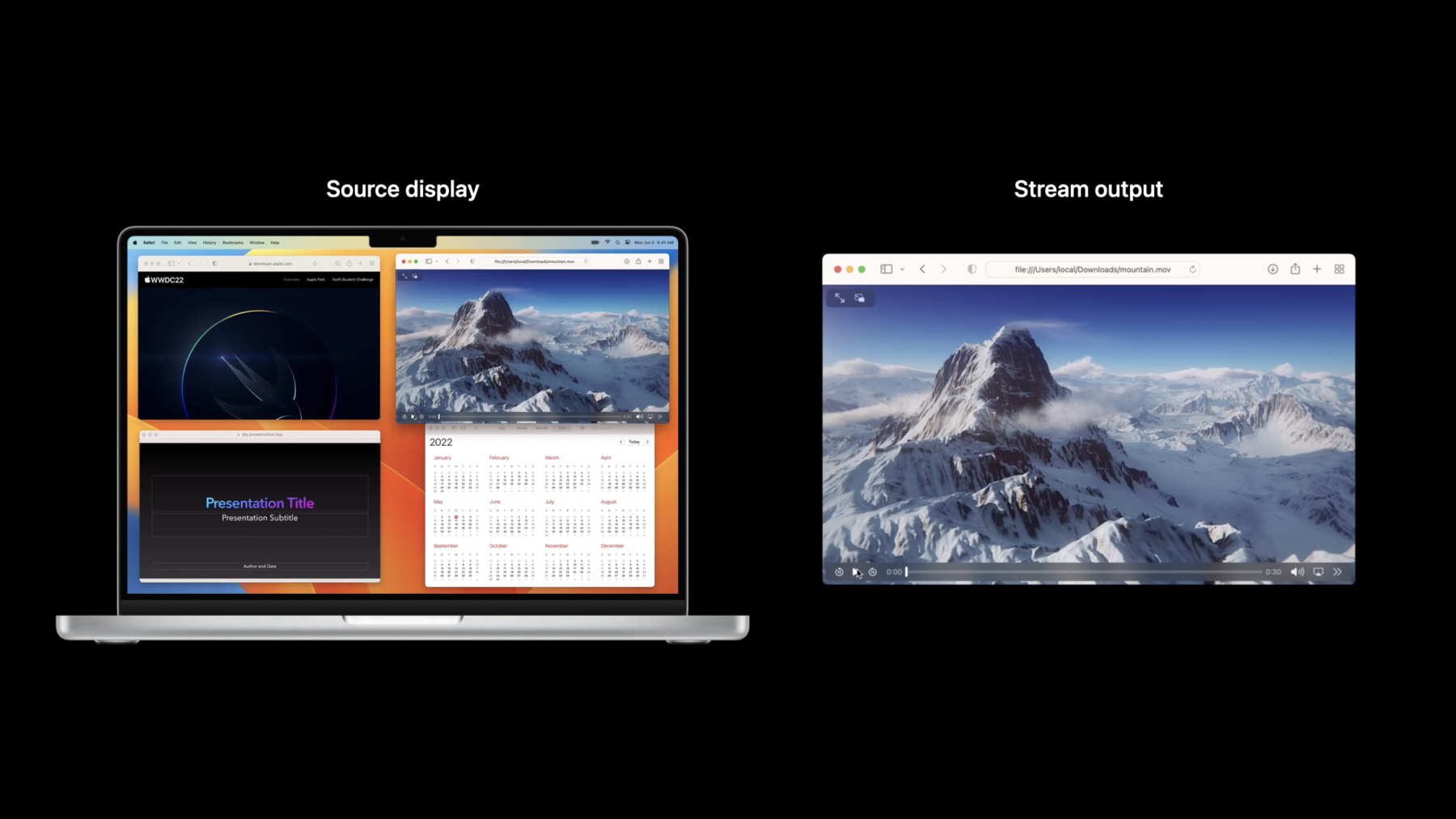

♪ Mellow instrumental hip-hop music ♪ ♪ Meng Yang: Hi, my name is Meng Yang, an engineer from GPU Software here at Apple. Today I am going to cover a few advanced topics about ScreenCaptureKit and how it can take your app's screen sharing experience to the next level. Later, my colleague Drew will demonstrate this exciting new API in action. Screen capture is at the heart of screen sharing applications such as Zoom, Google Meet, and SharePlay, and even popular game streaming services like Twitch. These have become the new norm for how we work, study, collaborate, and socialize over the past few years. ScreenCaptureKit is a brand-new, high-performance screen capture framework built from the ground up with a powerful feature set. The rich set of features includes: highly customizable content control that lets you easily pick any combination of windows, applications, and displays to capture; the ability to capture at up to the screen content's native resolution and frame rate; dynamic stream property controls, such as resolution, frame rate, and pixel format, which can be modified on the fly without recreating the stream; capture buffers backed by GPU memory to reduce memory copies; hardware-accelerated content capture, scaling, and pixel and color format conversion, to achieve high-performance capture with reduced CPU usage; and last but not least, support for both video and audio capture. This talk assumes you are already familiar with the basic concepts, building blocks, and workflow of the framework. Please visit the intro session "Meet ScreenCaptureKit" to learn more. In this session, I am going to talk about how to capture and display a single window, how to add screen content to a full display capture, and how to remove content from a display capture. I will then show you a few ways to configure the stream for different use cases. 
And last, you will see a demo of how ScreenCaptureKit transformed the screen and audio capture experience of OBS Studio, a popular open source screen capture app. Now, let's start with the first example, and probably the most common use case: capturing a single window. This example covers how to set up a single window filter, and what to expect from the stream output when the captured window is resized, occluded, moved off-screen, or minimized. You will also learn how to use per-frame metadata and how to properly display the captured window. Let's dive in. To capture a single window regardless of which display it's on, start by using a single window filter and initialize the filter with just one window. In the example here, the filter is configured to include a single Safari window. The video output includes just that window and nothing else. No child, pop-up, or other windows from Safari will be included. ScreenCaptureKit's audio capture policy, on the other hand, always works at the app level. When a single window filter is used, all the audio content from the application that owns the window will be captured, even from windows that are not present in the video output. Now let's take a look at the code sample. To create a stream with a single window, start by getting all available content to share via SCShareableContent. Next, get the window you want to share from SCShareableContent by matching the windowID. Then, create an SCContentFilter of type desktopIndependentWindow with the specified SCWindow. You can further configure the stream to include audio as part of the stream output. Now you are ready to create a stream with contentFilter and streamConfig. You can then add a stream output and start the stream. Let's take a look at the stream output next. In the example here, the source display is on the left and the stream output is on the right. The stream filter includes a single Safari window. 
Now I am going to start scrolling the Safari window that's being captured. The stream output includes the live content from the single Safari window and updates at the same cadence as the source window, up to the source display's native frame rate. For example, when the source window is constantly updating on a 120Hz display, the stream output can also reach up to 120 fps. You might wonder what happens when the window resizes. Keep in mind that frequently changing the stream's output dimensions can lead to additional memory allocations and is therefore not recommended. The stream's output dimensions are mostly fixed and do not resize with the source window. Now let me start to resize the source window and see what happens to the stream's output. ScreenCaptureKit always performs hardware scaling on the captured window so that it never exceeds the frame output as the source window resizes. What about windows that are covered by other windows? When the source window is fully or partially occluded, the stream output still includes the window's full content. This also applies when the window is completely off-screen or moved to another display. When the source window is minimized, the stream output is paused, and it resumes when the window is no longer minimized. Next, let's move to audio output. In this example, there are two Safari windows with audio tracks, and the window on the left is being captured. The video output includes just the first window, while the audio tracks from both Safari windows are included in the audio output. Let's take a look and listen. ♪ Electronic dance music ♪ Chef: And I wrote down my favorite guacamole recipe. It calls for four avocados. Meng: With the stream up and running, your app receives a frame update whenever a new frame is available. The frame output includes an IOSurface representing the captured frame, along with per-frame metadata. 
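ScreenCaptureKit does this scaling itself, in hardware. To make the relationship between a fixed output size, the content rect, and the content scale concrete, here is a minimal sketch of an aspect-fit calculation. It assumes the content is anchored at the top-left corner of the output (as the recap later in this section says); the assumption that content is only scaled down and never up is ours, and `fitWindow` is a hypothetical helper, not a ScreenCaptureKit API.

```swift
import Foundation

/// Hypothetical helper (not a ScreenCaptureKit API): fits a window's backing surface,
/// in pixels, into a fixed-size stream output while preserving its aspect ratio.
/// Assumptions: content is anchored at the top-left corner and is never scaled up.
func fitWindow(sourceSize: CGSize, outputSize: CGSize) -> (contentRect: CGRect, contentScale: CGFloat) {
    // Largest uniform scale that keeps both dimensions inside the output, capped at 1.
    let scale = min(outputSize.width / sourceSize.width,
                    outputSize.height / sourceSize.height,
                    1)
    // Top-left anchored region that the scaled window occupies in the output.
    let rect = CGRect(x: 0, y: 0,
                      width: sourceSize.width * scale,
                      height: sourceSize.height * scale)
    return (rect, scale)
}

// A 2400 x 1200 pixel window captured into a fixed 1920 x 1080 output:
// width is the limiting side, so the window is scaled by 0.8 to 1920 x 960.
let fitted = fitWindow(sourceSize: CGSize(width: 2400, height: 1200),
                       outputSize: CGSize(width: 1920, height: 1080))
print(fitted.contentRect, fitted.contentScale)
```

As the window keeps resizing, only `contentRect` and `contentScale` change; the output buffer size stays the same, which is why the session recommends not changing output dimensions frequently.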
I'd like to spend some time talking about metadata. I am going to show you examples of metadata that can be quite useful for your app: dirty rects, content rect, content scale, and scale factor. Let's start with dirty rects. Dirty rects indicate which regions contain new content since the previous frame. In the example here, the dirty rects are highlighted to illustrate the regions of frame updates. Instead of always encoding the entire frame, or calculating the delta between two frames in the encoder, you can simply use dirty rects to encode and transmit only the regions with new updates, and then copy those updates onto the previous frame on the receiver side to generate the new frame. Dirty rects can be retrieved from the output CMSampleBuffer's metadata dictionary using the matching key. Now let's move to content rect and content scale. The source window being captured is on the left and the stream output is on the right. Since a window can be resized, the source window's native backing surface size often doesn't match the stream output's dimensions. In the example here, the captured window has a different aspect ratio from the frame output and is bigger, so it is scaled down to fit into the output. The content rect, highlighted in green here, indicates the region of interest of the captured content on the stream output, and the content scale indicates how much the content was scaled to fit. Here the captured Safari window is scaled down by 0.77 to fit inside the frame. Now you can use this metadata to display the captured window as close to its native appearance as possible. First, crop the content from the output using the content rect. Next, scale the content back up by dividing by the content scale. Now the captured content matches the source window one-to-one in pixel size. But how is the captured window going to look on the target display? 
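The receiver-side step (copying only the changed regions onto the previous frame) can be sketched with a toy single-channel frame. Everything here is hypothetical: `Frame`, `extractPatch`, and `applyPatch` are illustrative helpers, and the dirty rects are assumed to be integral, pixel-aligned rects in output pixel coordinates. Converting the real `dirtyRects` attachment into `CGRect` values, and actually encoding the patches, are left out.

```swift
import Foundation

/// Hypothetical single-channel frame, used only for illustration.
struct Frame: Equatable {
    let width: Int
    let height: Int
    var pixels: [UInt8]  // row-major, width * height values

    init(width: Int, height: Int, fill: UInt8 = 0) {
        self.width = width
        self.height = height
        self.pixels = Array(repeating: fill, count: width * height)
    }
}

/// Sender side: copy out only the pixels inside one dirty rect, row by row.
func extractPatch(from frame: Frame, rect: CGRect) -> [UInt8] {
    var patch: [UInt8] = []
    for y in Int(rect.minY)..<Int(rect.maxY) {
        let rowStart = y * frame.width
        patch.append(contentsOf: frame.pixels[(rowStart + Int(rect.minX))..<(rowStart + Int(rect.maxX))])
    }
    return patch
}

/// Receiver side: write a patch over the previous frame to rebuild the new frame.
func applyPatch(_ patch: [UInt8], rect: CGRect, to frame: inout Frame) {
    let patchWidth = Int(rect.width)
    for (row, y) in (Int(rect.minY)..<Int(rect.maxY)).enumerated() {
        let destination = y * frame.width + Int(rect.minX)
        let source = row * patchWidth
        frame.pixels.replaceSubrange(destination..<(destination + patchWidth),
                                     with: patch[source..<(source + patchWidth)])
    }
}

// Both sides start from the same previous frame (all zeros).
let previous = Frame(width: 4, height: 4)

// The new frame differs only in a 2 x 2 square: that square is the dirty rect.
var current = previous
let dirtyRect = CGRect(x: 1, y: 1, width: 2, height: 2)
for y in 1..<3 {
    for x in 1..<3 { current.pixels[y * current.width + x] = 255 }
}

// Only the dirty region is "transmitted" and applied on the receiver.
let patch = extractPatch(from: current, rect: dirtyRect)
var reconstructed = previous
applyPatch(patch, rect: dirtyRect, to: &reconstructed)
```

Here only 4 of the 16 pixels travel to the receiver, yet the reconstructed frame equals the new frame.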
To answer that question, I would like to start by describing how scale factor works. A display's scale factor is the ratio between a display or window's logical point size and its backing surface's pixel size. A scale factor of 2, or 2x mode, means every point onscreen corresponds to four pixels (two by two) on the backing surface. A window can be moved from a Retina display with scale factor 2, as in the example here, to a non-Retina display with scale factor 1 while being captured. With scale factor 1, each logical point onscreen corresponds to one pixel on the backing surface. In addition, the source display might have a different scale factor from the target display where the captured content will be shown. In this example, a window is being captured from a Retina display on the left with scale factor 2 and will be displayed on a non-Retina display on the right. If the captured window is displayed as-is on the target non-Retina display, with one pixel mapped to one point, the window will look four times as big (twice as wide and twice as tall). To fix this, always check the scale factor from the frame's metadata against the scale factor of the target display. When there's a mismatch, scale the captured content by the ratio of the two scale factors (here, dividing by 2) before displaying it. After scaling, the captured window on the target display appears the same size as its source window. Now let's take a look at the code; it's quite simple. Content rect, content scale, and scale factor can also be retrieved from the output CMSampleBuffer's metadata attachments. You can then use this metadata to crop and scale the captured content so that it displays correctly. To recap, a single window filter always includes the full window content, even when the source window is off-screen or occluded. It's display and space independent. The output is always anchored at the top-left corner. Pop-up and child windows are not included. Consider using the metadata to best display the content. 
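The three metadata values can be combined into a single size calculation. Below is a minimal sketch using a hypothetical helper, `displaySize`. It assumes the content rect is in output pixels, that the content scale is the factor the window was shrunk by, and that the scale factors are each display's pixels-per-point ratio. Actually cropping the IOSurface and drawing it are left out.

```swift
import Foundation

/// Hypothetical helper (not a ScreenCaptureKit API): returns the size, in target-display
/// pixels, at which to draw a captured window so it matches its on-screen size at the source.
/// Assumes contentRect is in output pixels and contentScale is the factor the window was
/// shrunk by to fit the output.
func displaySize(contentRect: CGRect,
                 contentScale: CGFloat,
                 sourceScaleFactor: CGFloat,
                 targetScaleFactor: CGFloat) -> CGSize {
    // Crop to contentRect, then divide by contentScale: the window's native pixel size.
    let nativeWidth = contentRect.width / contentScale
    let nativeHeight = contentRect.height / contentScale
    // Pixels -> points on the source display, then points -> pixels on the target display.
    let ratio = targetScaleFactor / sourceScaleFactor
    return CGSize(width: nativeWidth * ratio, height: nativeHeight * ratio)
}

// A window captured on a 2x Retina display, to be shown on a 1x display.
let onNonRetina = displaySize(contentRect: CGRect(x: 0, y: 0, width: 1000, height: 600),
                              contentScale: 0.5,
                              sourceScaleFactor: 2,
                              targetScaleFactor: 1)
// 2000 x 1200 native pixels = 1000 x 600 points, drawn as 1000 x 600 pixels at 1x.
```

When the two scale factors match, the ratio is 1 and only the crop and the division by the content scale matter.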
And the audio includes tracks from the entire containing app. Now that you've learned how to capture and display a single window, let me move on to the next class: display-based content filters. In this next example, you will learn to create a display-based filter with windows or apps, and I will demonstrate some differences between the video- and audio-filtering rules. A display-based inclusion filter specifies which display you want to capture content from. By default, no windows are captured; you choose the content you want to capture window by window. In the example here, a Safari window and a Keynote window are added to the display filter. The video output includes just these two windows, placed in display space, and the audio output includes all the soundtracks from the Keynote and Safari apps. This code sample demonstrates how to create display-based filters with included windows. Start by creating a list of SCWindows using SCShareableContent and window IDs. Then, create a display-based SCContentFilter with a given display and a list of included windows. You can then create a stream using the filter and configuration, in the same way as for a desktop independent window, and start the stream. With the stream up and running, let's take a look at the stream's output. The filter is configured to include two Safari windows, the menu bar, and wallpaper windows.

If a window is moved off-screen, it is removed from the stream output. When a new Safari window is created, it doesn't show up in the stream output because the new window is not in the filter. The same rule applies to child and pop-up windows, which do not show up in the stream's output either. If you want child windows to be included automatically in your stream output, you can use a display-based filter with included apps. In this example, adding the Safari and Keynote apps to the filter ensures that the video and audio from all windows of these two apps are included in the output. Window exception filters are a powerful way to exclude specific windows from your output when the filter is specified as a display with included apps. For example, here a single Safari window is removed from the output. Because ScreenCaptureKit captures audio at the app level, excluding a single Safari window is equivalent to removing the audio tracks for the entire Safari app. Although the stream's video output still includes a Safari window, all the soundtracks from Safari are removed, and the audio output includes just the soundtrack from Keynote. In the code example here, we change the SCContentFilter to include a list of SCRunningApplications instead of SCWindows. If there are individual windows you want to further exclude, build a list of SCWindows, then create an SCContentFilter using the list of SCRunningApplications along with the list of excepting windows to exclude. Let's take a look at what the stream output looks like when new or child windows are created while using included apps. This time, the Safari app and system windows are added to the filter. A new Safari window is now automatically included in the stream output, and the same rule applies to child and pop-up windows. This can be quite useful when you are doing a tutorial and want to demonstrate the full action, including invoking pop-up or new windows. 
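The video and audio rules for a display filter with included apps and excepting windows differ, and a small model makes the difference visible. This is a hypothetical sketch, not a ScreenCaptureKit API: `Window` and `includedAppsOutput` are illustrative, and the audio rule (excepting any window of an app drops that app's audio) is taken from the Safari and Keynote example above.

```swift
/// Hypothetical model of an on-screen window and its owning app (illustration only).
struct Window {
    let id: Int
    let bundleID: String
}

/// Sketch of the rules described above for a display filter with included apps and
/// excepting windows. This models behavior; it is not a ScreenCaptureKit API.
func includedAppsOutput(windows: [Window],
                        includedApps: Set<String>,
                        exceptingWindowIDs: Set<Int>) -> (video: [Int], audioApps: Set<String>) {
    // Video: every window of an included app (new, child, and pop-up windows too),
    // minus the individually excepted windows.
    let video = windows
        .filter { includedApps.contains($0.bundleID) && !exceptingWindowIDs.contains($0.id) }
        .map(\.id)
    // Audio is captured per app, so excepting any window of an app drops that app's audio.
    let mutedApps = Set(windows.filter { exceptingWindowIDs.contains($0.id) }.map(\.bundleID))
    return (video, includedApps.subtracting(mutedApps))
}

let windows = [
    Window(id: 1, bundleID: "com.apple.Safari"),
    Window(id: 2, bundleID: "com.apple.Safari"),
    Window(id: 3, bundleID: "com.apple.iWork.Keynote"),
    Window(id: 4, bundleID: "com.apple.mail"),
]
// Include Safari and Keynote, but except Safari window 2.
let output = includedAppsOutput(windows: windows,
                                includedApps: ["com.apple.Safari", "com.apple.iWork.Keynote"],
                                exceptingWindowIDs: [2])
```

As in the talk, Safari window 1 still appears in the video, but only Keynote remains in the audio.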
I have just demonstrated a few different ways to add content to the stream output. My next example will show you how to remove content from the stream output. This example includes a test app that emulates a video conferencing app containing a preview of the display being shared. Because the test app recursively shows itself in the preview, it creates the so-called mirror hall effect. Even during full display sharing, it's common for screen sharing applications to remove their own windows, such as the capture preview and participant camera views, to avoid the mirror hall effect, as well as system UI such as notification windows. ScreenCaptureKit provides a set of exclusion-based filters that let you quickly remove content from display capture. An exclusion-based display filter captures all the windows from the given display by default. You can then remove individual windows or apps by adding them to the exclusion filter. For example, you can add the content capture test app and Notification Center to the list of excluded applications. To create a display-based filter excluding a list of applications, start by retrieving the SCRunningApplications to exclude by matching bundle ID. If there are individual windows you'd like to cherry-pick back into the stream output, you can also build an optional list of excepting SCWindows. Then use the given display, the list of applications to exclude, and the list of excepting windows to create the content filter. Let's take a look at the result. The content capture test app that caused the mirror hall problem and the notification windows are both removed from the stream output. New or child windows from these apps are automatically removed as well. If these removed apps produce any audio, their audio is removed from the audio output too. We've just seen how to capture a single window and how to add and remove windows with a display filter. Let's move on to stream configuration. 
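The video rule for an exclusion-based display filter can be sketched the same way. This is a hypothetical model: `DisplayWindow` and `exclusionFilterVideo` are illustrative, the conferencing app's bundle ID is made up, and audio is deliberately left out because the session does not say how cherry-picked windows interact with app-level audio.

```swift
/// Hypothetical model of a window on the captured display (illustration only).
struct DisplayWindow {
    let id: Int
    let bundleID: String
}

/// Video rule for a display filter with excluded apps and excepting windows:
/// every window on the display is captured unless its app is excluded, and
/// excepting windows are cherry-picked back even though their app is excluded.
func exclusionFilterVideo(windows: [DisplayWindow],
                          excludingApps: Set<String>,
                          exceptingWindowIDs: Set<Int>) -> [Int] {
    windows
        .filter { !excludingApps.contains($0.bundleID) || exceptingWindowIDs.contains($0.id) }
        .map(\.id)
}

let conferencing = "com.example.ConferencingApp"  // hypothetical bundle ID
let onDisplay = [
    DisplayWindow(id: 1, bundleID: conferencing),                     // self-preview (mirror hall)
    DisplayWindow(id: 2, bundleID: conferencing),                     // window the user wants to share
    DisplayWindow(id: 3, bundleID: "com.apple.notificationcenterui"), // notification banner
    DisplayWindow(id: 4, bundleID: "com.apple.Safari"),
    DisplayWindow(id: 5, bundleID: conferencing),                     // pop-up opened later
]
let visible = exclusionFilterVideo(windows: onDisplay,
                                   excludingApps: [conferencing, "com.apple.notificationcenterui"],
                                   exceptingWindowIDs: [2])
```

The later pop-up (window 5) is dropped automatically because its app is excluded. Only the explicitly excepted window 2 comes back.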
In the next few examples, you will learn about the different stream properties you can configure, how to set up the stream for screen capture and streaming, and how to build a window picker with live preview. Let's start with configuration properties. These are some of the common stream properties you can configure: stream output dimensions, source and destination rects, color space, color matrix and pixel format, whether to include the cursor, and frame rate control. We will take a look at each property in detail. Let's start with output dimensions, which are specified as width and height in pixels. The source display's dimensions and aspect ratio don't always match the output dimensions. When this mismatch happens while capturing a full display, the stream output will be pillarboxed or letterboxed. You can also specify a source rect that defines the region to capture; the result is rendered and scaled to the destination rect on the frame output. ScreenCaptureKit supports hardware-accelerated color space, color matrix, and pixel format conversion. Common BGRA and YUV formats are supported; please visit our developer page for the full list. When show cursor is enabled, the stream output includes a cursor prerendered into the frame. This applies to all system cursors, even custom cursors like the camera-shaped one here. You can use the minimum frame interval to control the desired output frame rate. For example, when requesting 60 fps, set the minimum interval to 1/60. You will then receive frame updates no faster than 60 fps, and no faster than the content's native frame rate. Queue depth determines the number of surfaces in the server-side surface pool. More surfaces in the pool can lead to better frame rate and performance, but at the cost of higher system memory usage and potentially higher latency, which I will discuss in more detail later. 
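The frame-rate rule above can be written as plain arithmetic. The sketch below uses a hypothetical `FrameInterval` struct that mirrors CMTime's value/timescale shape so it runs without CoreMedia. In real code you would set `minimumFrameInterval = CMTime(value: 1, timescale: CMTimeScale(fps))`, as in the configuration samples below. `maxDeliveredFPS` is likewise illustrative: it captures the upper bound described here, not a guaranteed delivery rate.

```swift
/// Hypothetical stand-in for CMTime (value / timescale seconds), so the sketch runs
/// without CoreMedia. Real code: CMTime(value: 1, timescale: CMTimeScale(fps)).
struct FrameInterval {
    let value: Int64
    let timescale: Int32
    var seconds: Double { Double(value) / Double(timescale) }
}

/// Minimum frame interval for a requested frame rate, i.e. 1/fps seconds.
func minimumFrameInterval(fps: Int32) -> FrameInterval {
    FrameInterval(value: 1, timescale: fps)
}

/// Upper bound on the delivered rate: capped by the requested interval and by how
/// often the captured content actually updates.
func maxDeliveredFPS(interval: FrameInterval, contentFPS: Double) -> Double {
    min(1 / interval.seconds, contentFPS)
}

let sixty = minimumFrameInterval(fps: 60)
let onFastDisplay = maxDeliveredFPS(interval: sixty, contentFPS: 120)  // capped by the request
let slowContent = maxDeliveredFPS(interval: sixty, contentFPS: 24)     // capped by the content
```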
ScreenCaptureKit accepts a queue depth between three and eight, with a default of three. In this example, the surface pool is configured with four surfaces available for ScreenCaptureKit to render to. The currently active surface is surface 1, and ScreenCaptureKit is rendering the next frame to it. Once surface 1 is complete, ScreenCaptureKit sends it to your app. Your app is processing and holding surface 1 while ScreenCaptureKit renders to surface 2. Surface 1 is now marked as unavailable in the pool, since your app is still using it. When surface 2 is complete, it's sent to your app, and ScreenCaptureKit now renders to surface 3. But if your app is still processing surface 1, it will start to fall behind, because frames are now provided faster than they can be processed. If the surface pool contains a large number of surfaces, new surfaces will start to pile up, and you might need to consider dropping frames to keep up. In this case, more surfaces in the pool can lead to higher latency. The number of surfaces left in the pool for ScreenCaptureKit to use equals the queue depth minus the number of surfaces held by your app. In the example here, surfaces 1 and 2 are both still held by your app, so there are 2 surfaces left in the pool. After surface 3 is complete and sent to your app, the only available surface left in the pool is surface 4. If your app continues to hold on to surfaces 1, 2, and 3, ScreenCaptureKit will soon run out of surfaces to render to, and you will start to see frame loss and glitches. To avoid frame loss, your app needs to finish with and release surface 1 before ScreenCaptureKit starts to render the frame after surface 4. Now your app releases surface 1, and it's available for ScreenCaptureKit to use again. To recap: there are two rules your app needs to follow to avoid frame latency and frame loss. 
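The walkthrough above can be modeled with a small simulation. This is a deliberately simplified, hypothetical model (`SurfacePool` is not a ScreenCaptureKit type). Rendering is treated as instantaneous, surfaces are reused round-robin, and the app releases surfaces oldest first. It only illustrates the rule that the surfaces available to ScreenCaptureKit equal the queue depth minus the surfaces your app is holding.

```swift
/// Hypothetical model of the server-side surface pool (not a ScreenCaptureKit type).
/// Surfaces are numbered 1...queueDepth; rendering is treated as instantaneous.
struct SurfacePool {
    let queueDepth: Int
    private(set) var heldByApp: [Int] = []  // delivered to the app, not yet released (oldest first)
    private(set) var droppedFrames = 0
    private var nextSurface = 1

    init(queueDepth: Int) {
        precondition((3...8).contains(queueDepth), "queue depth must be between 3 and 8")
        self.queueDepth = queueDepth
    }

    /// Surfaces ScreenCaptureKit can still render into: queue depth minus surfaces held.
    var available: Int { queueDepth - heldByApp.count }

    /// Render the next frame into a free surface and deliver it to the app,
    /// or count a dropped frame when the app is holding every surface.
    mutating func renderAndDeliver() {
        guard available > 0 else { droppedFrames += 1; return }
        var candidate = nextSurface
        while heldByApp.contains(candidate) {        // skip surfaces the app still holds
            candidate = candidate % queueDepth + 1
        }
        heldByApp.append(candidate)
        nextSurface = candidate % queueDepth + 1
    }

    /// The app finishes with its oldest surface and returns it to the pool.
    mutating func releaseOldest() {
        if !heldByApp.isEmpty { heldByApp.removeFirst() }
    }
}

var pool = SurfacePool(queueDepth: 4)
pool.renderAndDeliver()  // app holds surface 1
pool.renderAndDeliver()  // app holds 1 and 2; two surfaces left
pool.renderAndDeliver()  // app holds 1, 2, 3; only surface 4 left
pool.renderAndDeliver()  // app holds all four surfaces
pool.renderAndDeliver()  // no free surface: this frame is dropped
pool.releaseOldest()     // app releases surface 1
pool.renderAndDeliver()  // surface 1 is reused
```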
To avoid delayed frames, your app needs to process each frame within the MinimumFrameInterval. To avoid frame loss, the time it takes your app to release each surface back to the pool must be less than MinimumFrameInterval times (QueueDepth minus 1). Beyond that, ScreenCaptureKit runs out of surfaces to use, stalls, and starts to miss new frames. Now that you've seen the various properties you can configure, let's dive into some examples of configuring the stream for screen capture and streaming. Some screen content, such as videos, games, or animations, updates constantly and requires a higher frame rate. Other content, such as the mostly static text in the Keynote window, benefits more from higher resolution than from frame rate. You can live-adjust the stream's configuration based on the content being shared and the network conditions. In this code example, you are going to see how to configure the capture to stream a 4K, 60-fps game. Start by setting the stream output dimensions to 4K in pixels. Then, set the output frame rate to 60 fps by setting the minimum frame interval to 1/60. Next, use the YUV420 pixel format for encoding and streaming. Set the optional source rect to capture just a portion of the screen. Next, change the background fill color to black, and then include the cursor in the frame output. Set the surface queue depth to five for optimal frame rate and performance. Last, enable audio on the output stream. All the stream configurations you've just seen can be changed dynamically on the fly without recreating the stream. For example, you can live-adjust properties such as output dimensions, dynamically change the frame rate, and update stream filters. Here's an example that switches the output dimensions from 4K down to 720p and downgrades the frame rate from 60 fps to 15 fps. You can then simply call updateConfiguration to apply the new settings on the fly without interrupting the stream. 
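The two rules can be turned into concrete time budgets. Here is a minimal sketch with a hypothetical `FrameBudget` type, using the 60 fps, queue-depth-5 configuration from the 4K example. The processing budget is 1/60 s (about 16.7 ms), and the release budget is 4/60 s (about 66.7 ms). Measuring your app's actual processing and release times is outside this sketch.

```swift
/// Hypothetical helper (not a ScreenCaptureKit type) expressing the two rules in seconds.
struct FrameBudget {
    let minimumFrameInterval: Double  // seconds, e.g. 1.0 / 60
    let queueDepth: Int               // 3...8

    /// Rule 1: process each frame within one minimum frame interval to avoid delayed frames.
    var processingBudget: Double { minimumFrameInterval }

    /// Rule 2: release each surface within interval x (queueDepth - 1) to avoid frame loss.
    var releaseBudget: Double { minimumFrameInterval * Double(queueDepth - 1) }

    func avoidsDelay(processingTime: Double) -> Bool { processingTime < processingBudget }
    func avoidsFrameLoss(releaseTime: Double) -> Bool { releaseTime < releaseBudget }
}

// The 4K streaming configuration: 60 fps with a queue depth of 5.
let budget = FrameBudget(minimumFrameInterval: 1.0 / 60, queueDepth: 5)
print(budget.processingBudget * 1000, budget.releaseBudget * 1000)  // milliseconds
```

A deeper queue loosens the release budget, but as the surface-pool walkthrough shows, it can also let frames pile up and add latency.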
In the last example, I'd like to walk you through building a window picker with live preview. Here is an example of what a typical window picker looks like. It's common for web conferencing and screen sharing apps to offer users an option to choose the exact window to share. ScreenCaptureKit provides an efficient, high-performance solution for creating a large number of thumbnail-sized streams with live content updates, and it's simple to implement. Let's break down what it takes to build a window picker like this using ScreenCaptureKit. To set up the picker, start by creating one single window filter, with desktop independent window as the filter type, for each eligible window that your app allows the user to pick. Next, set up a stream configuration that's thumbnail-sized, 5 fps, with the BGRA pixel format for onscreen display, the default queue depth, and no cursor or audio. Use each single window filter and this stream configuration to create one stream per window. To do this in code, start by getting the SCShareableContent, excluding desktop and system windows. Next, create a content filter of type desktop independent window for each eligible window. Then, move on to the stream configuration. Choose an appropriate thumbnail size (in this example, 284 by 182) and set the minimum frame interval to one over five. Use BGRA as the pixel format for onscreen display, and disable audio and cursor since we don't need them in the preview. Set the queue depth to three, because we don't expect frequent updates. With the content filters and the configuration created, you are now ready to create the streams. Create one stream for each window, add a stream output for each stream, start the stream, and finally append it to the stream list. This is the window picker with live preview created using the sample code we saw earlier. Each thumbnail updates live and is backed by an individual stream with a single window filter. 
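Comparing raw pixel throughput gives a rough idea of why many preview streams stay lightweight. This back-of-the-envelope sketch counts only pixels per second at each configured size and maximum frame rate. It ignores per-stream overhead, how often each window actually changes, and the encoding or display work your app does. Treat it as an illustration, not a performance model.

```swift
/// Pixels per second a stream produces at its configured size and maximum frame rate.
func pixelsPerSecond(width: Int, height: Int, fps: Int) -> Int {
    width * height * fps
}

let thumbnailStream = pixelsPerSecond(width: 284, height: 182, fps: 5)  // one picker thumbnail
let gameStream = pixelsPerSecond(width: 3840, height: 2160, fps: 60)    // the 4K, 60 fps stream

// How many thumbnail streams fit in the pixel rate of one 4K, 60 fps stream.
let thumbnailsPer4KStream = gameStream / thumbnailStream
print(thumbnailStream, gameStream, thumbnailsPer4KStream)
```

By this measure, a picker showing 20 windows produces about 5.2 million pixels per second, roughly 1% of a single 4K, 60 fps stream.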
With ScreenCaptureKit, you can easily build a live preview picker like this one, which captures many live streams of screen content simultaneously without overburdening the system. Now let me hand it over to my colleague, Drew, who's going to give you an exciting demo of OBS's adoption of ScreenCaptureKit. Drew Mills: Thanks, Meng. Hi, my name is Drew, and I'm a Partner Engineer here at Apple. OBS Studio is an open source application that allows users to manage recording and streaming content from their computer. It contains a ScreenCaptureKit implementation that we worked with the project to integrate this spring. ScreenCaptureKit was easy to adopt because the integration could reuse code similar to OBS's existing CGDisplayStream-based capture. The ScreenCaptureKit implementation demonstrates many of the features discussed in the "Meet ScreenCaptureKit" session, including capturing an entire desktop, all of the windows of an application, or just one specific window. ScreenCaptureKit has lower overhead than OBS's CGWindowListCreateImage-based capture. This means that when capturing a portion of your screen, you are left with more resources for producing your content. Let's dive into a demo to see what we've been discussing in action. On the left, there is a worst-case example of OBS's Window Capture. This capture uses the CGWindowListCreateImage API and has significant stuttering; in our testing, we saw frame rates dip as low as 7 fps. Meanwhile, the ScreenCaptureKit implementation on the right produces an output video with significantly smoother motion, in this case delivering 60 fps. All the while, OBS uses up to 15 percent less RAM than with Window Capture, and OBS's CPU utilization is cut by up to half when using ScreenCaptureKit instead of Window Capture. Let's look at the other improvements that ScreenCaptureKit has to offer OBS users. 
I'm still trying to track down all of the Gold Ranks in Sayonara Wild Hearts. I want to show off my best run, so I've been recording my gameplay. Thanks to ScreenCaptureKit, I can now capture audio directly from the game, so when I get a notification on my Mac, it won't ruin my recording's audio or video. And this is possible without having to install any additional audio routing software. ♪ Now, using all of the enhancements provided by ScreenCaptureKit on Apple silicon, I can stream games like Taiko no Tatsujin Pop Tap Beat from my Mac to popular streaming services. A new constant bitrate option for Apple silicon's hardware encoder means that I can encode my content for services that require constant bitrate without significantly impacting my game's performance. Now, thanks to ScreenCaptureKit's lower resource usage and encoding offloading, I have even more performance available for the content that matters. Back to you, Meng. Meng: Thank you, Drew. Through the demos and examples, you learned about advanced screen content filters, several ways to configure the stream for different use cases, how to use per-frame metadata to correctly display the captured content, and some best practices to help you achieve the best performance. And finally, Drew showcased the significant capability and performance improvements ScreenCaptureKit brought to OBS. I can't wait to see how you redefine your app's screen sharing, streaming, and collaboration experience using ScreenCaptureKit. Thank you for watching! ♪


4:36 - Create a single window filter

import ScreenCaptureKit
// Get all available content to share via SCShareableContent
let shareableContent = try await SCShareableContent.excludingDesktopWindows(false, onScreenWindowsOnly: false)
// Get the window you want to share from SCShareableContent
guard let window = shareableContent.windows.first(where: { $0.windowID == windowID }) else { return }
// Create SCContentFilter for an independent window
let contentFilter = SCContentFilter(desktopIndependentWindow: window)
// Create SCStreamConfiguration object and enable audio capture
let streamConfig = SCStreamConfiguration()
streamConfig.capturesAudio = true
// Create stream with config and filter
stream = SCStream(filter: contentFilter, configuration: streamConfig, delegate: self)
// Video frames arrive on the .screen output; captured audio arrives on the .audio output
try stream.addStreamOutput(self, type: .screen, sampleHandlerQueue: serialQueue)
try stream.addStreamOutput(self, type: .audio, sampleHandlerQueue: serialQueue)
try await stream.startCapture()

9:38 - Get dirty rects

import CoreMedia
import ScreenCaptureKit
// Get dirty rects from the CMSampleBuffer's attachments dictionary
func streamUpdateHandler(_ stream: SCStream, sampleBuffer: CMSampleBuffer) {
    guard let attachmentsArray = CMSampleBufferGetSampleAttachmentsArray(sampleBuffer, createIfNecessary: false) as? [[SCStreamFrameInfo: Any]],
          let attachments = attachmentsArray.first else { return }
    let dirtyRects = attachments[.dirtyRects]
    // Only encode and transmit the content within dirty rects
}

13:34 - Get content rect, content scale and scale factor

import CoreMedia
import ScreenCaptureKit
/* Get and use contentRect, contentScale and scaleFactor (pixel density)
   to convert the captured window back to its native size and pixel density */
func streamUpdateHandler(_ stream: SCStream, sampleBuffer: CMSampleBuffer) {
    guard let attachmentsArray = CMSampleBufferGetSampleAttachmentsArray(sampleBuffer, createIfNecessary: false) as? [[SCStreamFrameInfo: Any]],
          let attachments = attachmentsArray.first,
          // contentRect is stored as a CGRect dictionary representation
          let contentRectDict = attachments[.contentRect],
          let contentRect = CGRect(dictionaryRepresentation: contentRectDict as! CFDictionary),
          let contentScale = attachments[.contentScale] as? CGFloat,
          let scaleFactor = attachments[.scaleFactor] as? CGFloat else { return }
    /* Use contentRect to crop the frame, and then contentScale and scaleFactor to scale it */
}

15:37 - Create display filter with included windows

import ScreenCaptureKit
// Get all available content to share via SCShareableContent
let shareableContent = try await SCShareableContent.excludingDesktopWindows(false, onScreenWindowsOnly: false)
// Create SCWindow list using SCShareableContent and the window IDs to capture
let includingWindows = shareableContent.windows.filter { windowIDs.contains($0.windowID) }
// Create SCContentFilter for Full Display Including Windows
let contentFilter = SCContentFilter(display: display, including: includingWindows)
// Create SCStreamConfiguration object and enable audio
let streamConfig = SCStreamConfiguration()
streamConfig.capturesAudio = true
// Create stream
stream = SCStream(filter: contentFilter, configuration: streamConfig, delegate: self)
// Video frames arrive on the .screen output; captured audio arrives on the .audio output
try stream.addStreamOutput(self, type: .screen, sampleHandlerQueue: serialQueue)
try stream.addStreamOutput(self, type: .audio, sampleHandlerQueue: serialQueue)
try await stream.startCapture()

18:13 - Create display filter with included apps

import ScreenCaptureKit
// Get all available content to share via SCShareableContent
let shareableContent = try await SCShareableContent.excludingDesktopWindows(false, onScreenWindowsOnly: false)
/* Create list of SCRunningApplications using SCShareableContent and the bundle IDs of the apps you’d like to capture */
let includingApplications = shareableContent.applications.filter { appBundleIDs.contains($0.bundleIdentifier) }
// Create SCWindow list using SCShareableContent and the window IDs to except
let exceptingWindows = shareableContent.windows.filter { windowIDs.contains($0.windowID) }
// Create SCContentFilter for Full Display Including Apps, Excepting Windows
let contentFilter = SCContentFilter(display: display, including: includingApplications, exceptingWindows: exceptingWindows)

20:46 - Create display filter with excluded apps

import ScreenCaptureKit
// Get all available content to share via SCShareableContent
let shareableContent = try await SCShareableContent.excludingDesktopWindows(false, onScreenWindowsOnly: false)
/* Create list of SCRunningApplications using SCShareableContent and the bundle IDs of the apps you’d like to exclude */
let excludingApplications = shareableContent.applications.filter { appBundleIDs.contains($0.bundleIdentifier) }
// Create SCWindow list using SCShareableContent and the window IDs to except (cherry-pick back)
let exceptingWindows = shareableContent.windows.filter { windowIDs.contains($0.windowID) }
// Create SCContentFilter for Full Display Excluding Apps, Excepting Windows
let contentFilter = SCContentFilter(display: display, excludingApplications: excludingApplications, exceptingWindows: exceptingWindows)

28:46 - Configure 4k 60FPS capture for streaming

import ScreenCaptureKit
let streamConfiguration = SCStreamConfiguration()
// 4K output size
streamConfiguration.width = 3840
streamConfiguration.height = 2160
// 60 FPS
streamConfiguration.minimumFrameInterval = CMTime(value: 1, timescale: CMTimeScale(60))
// 420v output pixel format for encoding
streamConfiguration.pixelFormat = kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange
// Source rect (optional)
streamConfiguration.sourceRect = CGRect(x: 100, y: 200, width: 3940, height: 2360)
// Set background fill color to black
streamConfiguration.backgroundColor = CGColor.black
// Include cursor in capture
streamConfiguration.showsCursor = true
// Valid queue depth is between 3 and 8
streamConfiguration.queueDepth = 5
// Include audio in capture
streamConfiguration.capturesAudio = true

30:08 - Live downgrade 4k 60FPS to 720p 15FPS

// Update output dimension down to 720p
streamConfiguration.width = 1280
streamConfiguration.height = 720
// 15 FPS
streamConfiguration.minimumFrameInterval = CMTime(value: 1, timescale: CMTimeScale(15))
// Update the configuration
try await stream.updateConfiguration(streamConfiguration)

31:57 - Build a window picker with live preview

import ScreenCaptureKit
// Get all available content to share via SCShareableContent, excluding desktop windows
let shareableContent = try await SCShareableContent.excludingDesktopWindows(true, onScreenWindowsOnly: true)
// Create an SCContentFilter for each shareable SCWindow
let contentFilters = shareableContent.windows.map { SCContentFilter(desktopIndependentWindow: $0) }
// Stream configuration
let streamConfiguration = SCStreamConfiguration()
// 284x182 frame output
streamConfiguration.width = 284
streamConfiguration.height = 182
// 5 FPS
streamConfiguration.minimumFrameInterval = CMTime(value: 1, timescale: CMTimeScale(5))
// BGRA pixel format for on-screen display
streamConfiguration.pixelFormat = kCVPixelFormatType_32BGRA
// No audio
streamConfiguration.capturesAudio = false
// Does not include cursor in capture
streamConfiguration.showsCursor = false
// Valid queue depth is between 3 and 8; the default of 3 suits infrequent updates
streamConfiguration.queueDepth = 3
// Create an SCStream with each SCContentFilter
var streams: [SCStream] = []
for contentFilter in contentFilters {
    let stream = SCStream(filter: contentFilter, configuration: streamConfiguration, delegate: self)
    try stream.addStreamOutput(self, type: .screen, sampleHandlerQueue: serialQueue)
    try await stream.startCapture()
    streams.append(stream)
}
