
Create a great video playback experience

Find out how you can use the latest iOS and iPadOS system media players to build amazing media apps. We'll share how we designed the updated player and give you best practices and tips to help you design media experiences of your own. We'll also explore Live Text for video and show you how to integrate interstitials and playback speed controls into your apps.

WWDC22


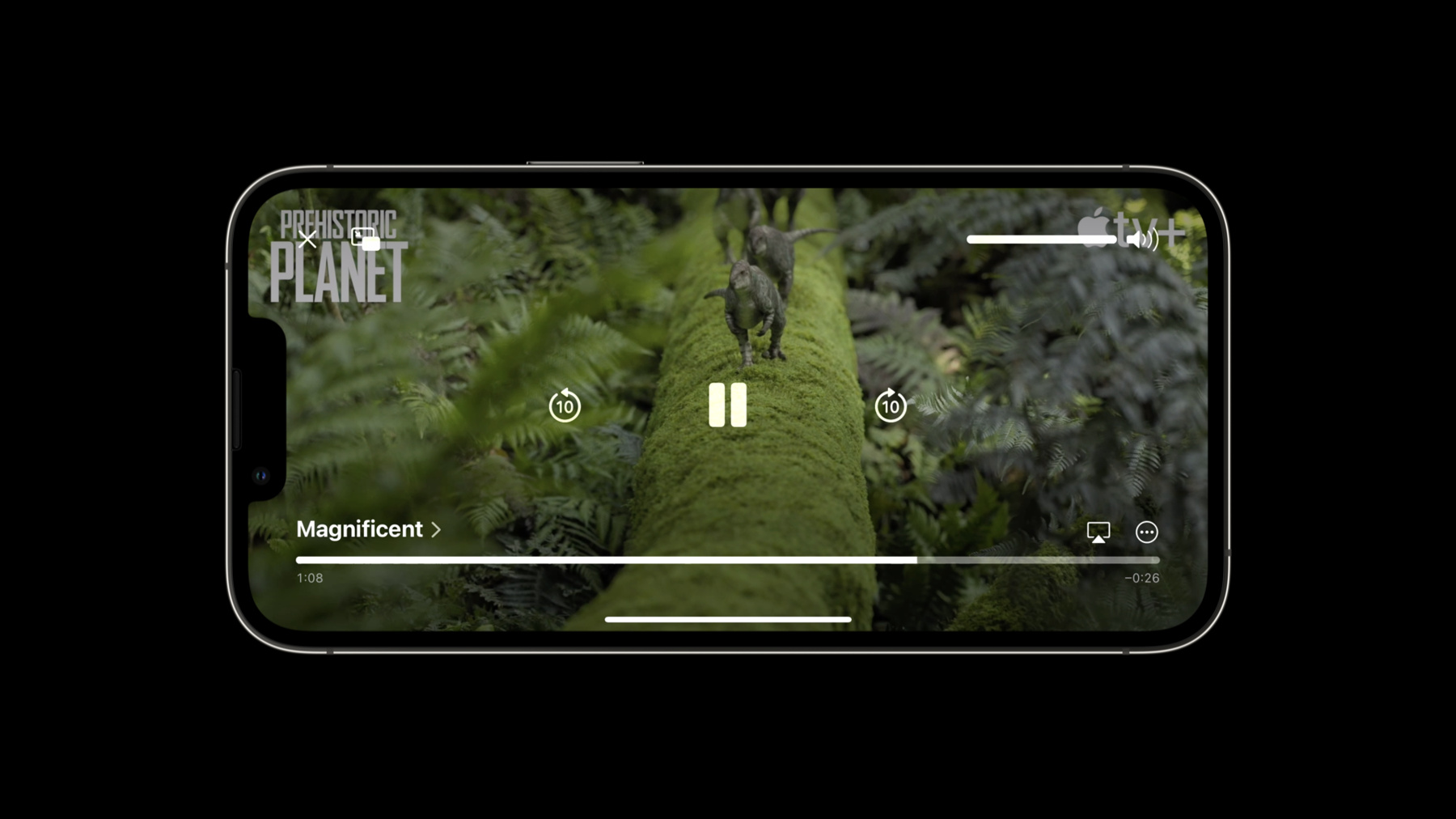

♪ ♪ Jake: Hi, my name's Jake. I'm an engineer on the AVKit team, and welcome to Creating a Great Video Playback Experience. In iOS and iPadOS 16, we've built a completely new media player from the ground up, bringing a whole new look and feel, designed to keep the focus on the content and fit within a broader spectrum of apps. We've also built in many novel interaction models that make using this new media player feel even more intuitive and seamless, and we think you're gonna love it.

In this session, we'll take a deep dive into the new system media player. We'll learn how to design amazing playback experiences. We'll see some exciting new visual intelligence features coming to macOS and iOS. I'll introduce an all-new interstitials experience coming with the new media player and go over some new APIs we're bringing over from tvOS. And lastly, we'll go over a new feature in AVKit: selectable playback speeds.

For tvOS 15.0 we redesigned the system player, bringing a whole new look and feel, as well as a host of new features and usability improvements to the system player. Well, we heard your requests. I'm happy to say, we've revamped the iOS system player as well. We've completely redesigned the native media player, adopting the look and feel of the tvOS player, but reimagined for a touch-first device. We've removed the chrome across the board, allowing the interface to feel native within a broader spectrum of apps and bringing a more modern feel to the player.

Let's dig deeper into some of the changes we've made. First, we've brought the play/pause and skip controls front and center to make them even easier to interact with. We've also adjusted the skip interval from 15 seconds down to 10, making it easier to track how far you've jumped with consecutive skips. Next, we made some significant improvements to the usability of the timeline as well. We're removing the slider knob marking the timeline's current position. Instead, the timeline can now be interacted with from anywhere along the slider. Drags no longer need to begin at the current time marker. This makes it even easier to find exactly where you want to go. We've also replaced the video aspect control with a more intuitive pinch-to-zoom gesture, which I'll show in a moment. And of course, the new UI looks great for portrait content as well.

On iPadOS, the player integrates seamlessly into the system with full support for keyboards, trackpads, mice, game controllers, and much more! We also added a number of new ways to interact with the controls that make navigating the content and some common interactions even easier and more intuitive. Let's take a look at these. First, we've added a new way to change the video's fill aspect. You can now use a pinch gesture to move through the available zoom levels. Pinching in will bring the video within the safe area of the display. Pinching out will zoom the video in to fully fill the display. Next, we streamlined one of the most common interactions, play/pause. You can now tap the center of the display, even while the controls are hidden, to play and pause the video. And lastly, we've added a new way to navigate through the media timeline. You can now scroll through the timeline from anywhere over the video using the same interactions we all know and love in scroll views. As you begin to scroll through the video frames, the interface drops away, leaving only the most relevant UI, allowing the focus to stay on the content.

We've also brought over some new features from the tvOS player. AVPlayerViewController now supports displaying a content title, subtitle, and description natively from within the fullscreen UI.

You can provide strings for each of these by passing AVMetadataItems to an existing AVKit API. Let's see how this is done. By default, the title, subtitle, and content description will be pulled from the media's metadata. However, the values in the media can be overridden if needed through the externalMetadata API on AVPlayerItem.

A title can be added by creating an AVMetadataItem with the identifier .commonIdentifierTitle and adding it to the player item's externalMetadata property. Titles should be short and descriptive to avoid cluttering the UI. Similarly, here we've added a subtitle by creating an AVMetadataItem with the identifier .iTunesMetadataTrackSubTitle. The subtitle will be displayed above the content title and should be a few words describing the content.

Lastly, a description can be added with the identifier .commonIdentifierDescription. This will display a chevron to the right of the title and subtitle. Selecting the title will display the info panel containing the content description. The description should be a few sentences with more info about the content. Tapping anywhere will dismiss the info panel.

So to wrap up, we've revamped the iOS system player with an all new look and feel, with new streamlined touch first interaction models as well as a host of other improvements. Using AVPlayerViewController in your app, you'll have the full support of the system player– support for Picture in Picture, SharePlay, Visual Analysis, Native Catalyst Support, New hardware and feature support, and much, much more. And of course, all of this you get with just a few lines of code. Now, let's talk about how you can design amazing playback experiences in your apps. When we set out to redesign the system media player, we took a step back from what we had built in the past and what we had built for other platforms and we asked ourselves, "What makes for a good user experience?" We wanted to share this process with you; how we've gone about designing the new players, why we designed them the way we did, and what we think defines an amazing media experience. We think there are three things that make a media experience great. The experience should be intuitive. It should feel easy, familiar, natural, even if you've never used it. It should be tightly integrated, both within your app and with the system. And lastly, it should be content forward. At the end of the day, people are there to experience the media and your apps and designs should reflect that. Now let's dig deeper into these three, starting with making your experience intuitive. Oftentimes, when an app feels intuitive it can be hard to put your finger on why. You just know it when you see it. So what actually makes an interface or an experience feel intuitive? And how can you design for it? We think it starts with familiarity. When you can draw on your past experiences to help understand something new, that's intuitive. When you don't need an explanation of how it works or even need to think about how it works– it just works exactly as you expect.

Every one of us builds experiences daily interacting with technology and the real world. Both of these are great sources of experiential familiarity and are often where we started when designing the new system media players. There are many types of experiences to draw intuition from, but I want to focus on two, the two that we most often relied on when designing the system media players: platform paradigms and the real world.

The first comes from our experiences using technology every day. Years of using TV remotes tells you that the arrow keys move focus left and right. Similarly, tapping a volume button on a touch-first device will mute the audio. These interactions feel intuitive because they're familiar. You can use these types of familiar interactions in your media experiences to help make your apps feel more intuitive, engaging, and even natural to use. Conversely, finding unfamiliar or unexpected interactions can be confusing and sometimes even frustrating.

Let's look at some examples where we drew on this type of platform familiarity in the system players. A great example of this is the presentation and dismissal model of the iOS system player. The player animates its presentation in from the bottom, giving a subtle hint that the player can be dismissed by pushing it back down. We see this model used extensively across our touch-first devices. For example, the Now Playing UI in Music presents from the mini bar at the bottom and can be dismissed with an interactive swipe downwards. In some cases though, we may draw on experiences not from our understanding of technology, but from everyday life.

These types of experiences come from the real world. Millions of years of evolution have helped us develop a deep instinctual understanding of natural processes. You can tap into this understanding to help build familiar and intuitive experiences in your software. A great example of this is our new scrolling gesture in the iOS player. Similar to rolling a toy car across a table, each swipe over the video has momentum, continuing the movement of the timeline past the direct interaction until the timeline slowly comes to a stop. The momentum here alone builds an association with real-world moving objects. This association helps you discover the subtle depths of the interaction. Just like the toy car, I can make it go faster by pushing it harder, or pushing it several times in a row. And if I grab it, it stops. It feels natural because it is natural. And the best part about this is, if you use the system players, your app will feel intuitive. All the natural interactions we've built, the inherent intuition and familiarity people have with the system player, and all of the design paradigms optimized in a way that tvOS, iOS, and macOS users will understand natively. All of this you get with just a few lines of code.

Building an intuitive design is one aspect of making your media experience great, but without all the features and integration points people expect, your app can inadvertently pull focus away from the content. This leads us to the second crucial aspect of an amazing media experience– tight integration. When an experience is tightly integrated, all the functionality, features, and devices people expect to work just work. And importantly, they work in a way that's consistent with their expectations. As people use their devices, they become accustomed to relying on the features of the platform. For example, pulling down the Control Center and seeing your content populated in the Now Playing media controls, or responding to a notification while watching a TV show, and having the video continue smoothly into Picture in Picture. Building this tight system integration into your app is key to making your experience feel seamless. Your app should feel like a native part of the system and we work hard to provide you with the tools needed to make that possible. This includes things like CoreSpotlight integration to make your content searchable, Now Playing info so your content can appear in the system media UIs, MediaRemote commands allowing your app to respond to things like the play button being pressed on a keyboard or TV remote. We even provide the ability to integrate your media directly into the TV app, delivering your content to an even wider audience. In addition to making your apps feel native, it's important to provide all the features that people love. Features like AirPlay, SharePlay, and Picture in Picture. We think people will expect these features and providing them enhances the experience in using your app. People will use your app across many devices and even more input formats. Providing support for all these is crucial in ensuring your experience is accessible to everyone. 
This is particularly important on tvOS, where supporting all available remotes is critical to ensuring everyone can use your app. This is one reason we always recommend using the system media player on tvOS. Your apps should ensure a fluid experience with all TV remotes, keyboards, trackpads, game controllers, and headphone controls. Additionally, you should ensure your app's UI elements are drawn within the screen's safe area to avoid collision with rounded corners or notches in the display. We recognize building support for all of these integration points, features, and hardware configurations can be daunting. This is why we built AVPlayerViewController, so with just a few lines of code, anyone using your app can have an amazing media experience.

And all of this leads us, lastly, to the most important aspect in designing a media experience: making it content forward. This should be the primary goal in your design, and is what we consider the defining aspect of a great media experience. When your app feels intuitive, when all of the integration points and all features people expect just work, you bring your content into focus, and everything else fades into the background.

There are a few things you should keep in mind, though, specific to designing your content. Make sure you provide all of the appropriate metadata, both in your interface and to the system. Providing this info helps give context to your media and allows the system to provide better experiences in Control Center and the Lock Screen. This includes things like a title and subtitle, a description, thumbnail, season and episode information, or start and end dates for live streams. Always keep your media in its original aspect ratio. This allows the system to position your videos on screen in the correct location. Letterboxing your content can lead to experiences like this or this. Make sure to include support for the latest media standards where possible, for example, HDR and Dolby Atmos. And lastly, make sure to include audio and subtitle tracks for multiple languages so your media is accessible to as many people as possible.

If there is one thing to take away from this section of the talk, it's that you should keep the focus on your content. We've built the system media players, provided through AVPlayerViewController, to make that goal as easy as possible for you as a developer.

Now, let's go over some new features we've added to AVPlayerViewController, starting with the new visual intelligence features. In this example, we have a video paused on a frame with a code snippet in it. Long pressing the code snippet will select it. You can then copy and paste it directly into playgrounds to try it out. This works great for macOS as well. Hovering your cursor over the same code will show the I-beam indicating the text is selectable. You can then use your cursor to highlight it or use CMD+A to Select All.

We're introducing a new API to go along with this functionality. Available in AVPlayerViewController on iOS and AVPlayerView on macOS, allowsVideoFrameAnalysis will toggle this feature. This will be enabled by default for all apps linking against the new SDKs. While allowsVideoFrameAnalysis is set to true, and once the media is paused, AVKit will begin analyzing the current video frame after a set period. Note that we will not analyze frames while scrolling, for performance reasons, or for FairPlay protected content. In general, we think people will expect this functionality in most situations. However, there are some instances where you may want to disable visual analysis as is appropriate in your application. For example, in performance-critical applications, such as a collection view of videos, or in cases where interaction with the video is not expected, like splash screens. For more info on how to integrate the visual intelligence feature set into your apps, see our related talks on VisionKit.

Next, let's take a look at some improvements we made to interstitials. Up until now, interstitials were only supported in AVPlayerViewController on tvOS.
Well, I'm happy to announce we're bringing the same level of support to iOS as well. Interstitials, either in the stream or defined locally through AVPlayerInterstitialEvents, will now be marked along the timeline. When the timeline hits a marker, we'll begin playing the interstitial. If your interstitials are already fully defined within your HLS playlist, you'll get this behavior automatically, with no adoption required. If not, or if your app requires some more custom behavior, we're introducing some new API as well. AVInterstitialTimeRange is being brought over from tvOS to iOS. These will be populated automatically into the AVPlayerItem property, interstitialTimeRanges, which is also being brought over from tvOS. When using an HLS stream, an AVInterstitialTimeRange will be synthesized for each interstitial in the stream. When creating interstitial events locally through the AVFoundation API, an AVInterstitialTimeRange will be synthesized for each AVPlayerInterstitialEvent. Unlike on tvOS however, interstitialTimeRanges is a read-only property. Interstitials will either need to be defined within the HLS stream or through AVPlayerInterstitialEvents. For those migrating their support from their tvOS apps, this is in essence equivalent to setting translatesPlayerInterstitialEvents to true.
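
As a rough illustration of reading the synthesized ranges described above, the sketch below iterates over a player item's interstitialTimeRanges and prints where each one falls. The function name `logInterstitialRanges` is hypothetical, and the sketch assumes the item's interstitials were already defined in the HLS stream or through AVPlayerInterstitialEvents, since the property cannot be set on iOS.

```swift
import AVKit

/// Hypothetical helper (not from the session): prints each interstitial
/// time range that AVKit has synthesized for the given player item.
@available(iOS 16.0, *)
func logInterstitialRanges(for item: AVPlayerItem) {
    // interstitialTimeRanges is read-only on iOS; it is populated from the
    // HLS stream or from locally created AVPlayerInterstitialEvents.
    for range in item.interstitialTimeRanges {
        let start = range.timeRange.start.seconds
        let duration = range.timeRange.duration.seconds
        print("Interstitial starts at \(start)s and lasts \(duration)s")
    }
}
```

The list may be empty until the item's interstitials have been resolved, so a call like this is probably best made once playback is ready rather than immediately after creating the item.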

We're also bringing over two delegate methods from tvOS as well. These can be used to know when an interstitial has begun or ended playback. Let's see how we can use these APIs to add a skip button for a pre-roll ad on iOS. First, we'll create an AVPlayerInterstitialEventController for the primary media's player. Next, we'll create an interstitial event. We'll define some restrictions for it. These restrictions prevent seeking within the interstitial and prevent skipping past the interstitial. Then we'll add the interstitial to the event controller. And lastly, we can implement the new willPresentInterstitial delegate callback to bring up an ad skip button after a set interval. And once the button is pressed, we'll cancel the interstitial. It's that easy. Note that when adding any custom UI elements to an AVPlayerViewController, such as this ad skip button, always make sure to add them as subviews of the contentOverlayView. To learn more about how you can integrate your interstitials right into your HLS playlists or how you can use the AVFoundation interstitials API, check out our related talks on exploring HLS dynamic pre-rolls and mid-rolls.

Now we'll go over a new feature we've added this year across all our platforms: native support for playback speed control. Both AVPlayerView and AVPlayerViewController can now optionally show a playback speed menu using some new API we've added.

We're making this available on macOS, iOS, and tvOS. Let's take a look at what this looks like. On tvOS, you'll see a new control in the transport bar. Selecting the control will display a list of the available playback speeds to choose from. On iOS, this menu will appear in the transport control overflow menu. And similarly, on macOS, the control will appear in the overflow menu. All apps linking against the new iOS, macOS, and tvOS SDKs will get this functionality automatically with no additional changes required. However, depending on your use case, some applications may want to modify the list of speeds, programmatically select a speed, or disable the menu entirely. To accommodate these use cases, we've added some new APIs to AVPlayerView and AVPlayerViewController. Let's take a look at these.

First, we've added a new class in AVKit: AVPlaybackSpeed. AVPlaybackSpeeds represent user-selectable speed options in a playback UI and they have three properties. A rate value, defined at initialization, which will be set on the player when the playback speed is selected. A localized name, used to represent the playback speed within the accessibility system. For example, a speed of 2.5 might use a localizedName of "Two and a half times speed." And a localized numeric name. This value is synthesized from the rate property and will be the String displayed in the playback speed menu. If your app requires a custom playback speed menu external to the player, use this string to represent the speed.

Lastly, AVPlaybackSpeed defines a list of system default speeds that should be used whenever possible. You can use AVPlaybackSpeed in conjunction with some new API on AVPlayerView and AVPlayerViewController to adapt this feature to fit within your app. The speeds property allows defining a custom list of playback speeds. By default this property will be set to the AVPlaybackSpeed systemDefaultSpeeds list. Setting this to an empty list will hide the menu. The selectedSpeed property will return the speed that's currently active. And lastly, the selectSpeed function allows programmatic selection of the current speed. Note that you should only use this function in response to explicit selection of the speed outside of the player UI. Never implicitly override the chosen playback speed.

Let's take a look at an example. Here we're creating an AVPlayerViewController and presenting it. By default this will use the system-provided list of playback speeds. You can add a new speed to the menu by creating an AVPlaybackSpeed and appending it to the list of speeds in AVPlayerViewController. We can also disable the menu by setting an empty list of speeds. It's as easy as that. Note though, you should always call AVPlayer play() to begin playback. Never start playback by calling setRate:1.0, as the selected rate might not be 1.0.

And with that, I'd like to wrap up the session. We saw the new redesigned iOS system player. We heard how you can design amazing playback experiences of your own. We saw some cool new visual intelligence features, and went over our new interstitials and playback speed APIs. I hope you enjoyed the session and look forward to seeing these features in your apps. Enjoy the rest of the conference.


4:08 - Setting content external metadata

    // Setting content external metadata
    import AVKit

    let titleItem = AVMutableMetadataItem()
    titleItem.identifier = .commonIdentifierTitle
    titleItem.value = "Title" as NSString // Placeholder: title string

    let subtitleItem = AVMutableMetadataItem()
    subtitleItem.identifier = .iTunesMetadataTrackSubTitle
    subtitleItem.value = "Subtitle" as NSString // Placeholder: subtitle string

    let infoItem = AVMutableMetadataItem()
    infoItem.identifier = .commonIdentifierDescription
    infoItem.value = "Description" as NSString // Placeholder: descriptive info paragraph

    // playerItem is the AVPlayerItem being played
    playerItem.externalMetadata = [titleItem, subtitleItem, infoItem]

19:03 - Creating a skip button for a preroll ad

    // Creating a skip button for a preroll ad
    let eventController = AVPlayerInterstitialEventController(primaryPlayer: mediaPlayer)

    let event = AVPlayerInterstitialEvent(primaryItem: interstitialItem, time: .zero)
    // Prevent seeking within the interstitial and skipping past it
    event.restrictions = [
        .requiresPlaybackAtPreferredRateForAdvancement,
        .constrainsSeekingForwardInPrimaryContent
    ]

    eventController.events.append(event)

    // AVPlayerViewControllerDelegate callback, invoked when an interstitial is about to play
    func playerViewController(_ playerViewController: AVPlayerViewController,
                              willPresent interstitial: AVInterstitialTimeRange) {
        showSkipButton(afterTime: 5.0, onPress: {
            eventController.cancelCurrentEvent(withResumptionOffset: CMTime.zero)
        })
    }
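
The `showSkipButton` helper used in the snippet above is not defined in the session. One possible shape for it is sketched below, following the session's advice to add custom UI as a subview of contentOverlayView. The extra `in:` parameter, the button title, and the layout constants are all assumptions made for illustration, not part of AVKit.

```swift
import AVKit
import UIKit

/// Hypothetical helper (not an AVKit API): adds a hidden "Skip Ad" button to
/// the player's contentOverlayView and reveals it after `delay` seconds.
/// Call this from the main thread.
func showSkipButton(in playerViewController: AVPlayerViewController,
                    afterTime delay: TimeInterval,
                    onPress: @escaping () -> Void) {
    guard let overlay = playerViewController.contentOverlayView else { return }

    let button = UIButton(type: .system)
    button.setTitle("Skip Ad", for: .normal) // Assumed title
    button.translatesAutoresizingMaskIntoConstraints = false
    button.isHidden = true
    button.addAction(UIAction(handler: { [weak button] _ in
        // Remove the button, then let the caller cancel the interstitial.
        button?.removeFromSuperview()
        onPress()
    }), for: .touchUpInside)

    overlay.addSubview(button)
    // Keep the button inside the safe area, away from corners and notches.
    NSLayoutConstraint.activate([
        button.trailingAnchor.constraint(
            equalTo: overlay.safeAreaLayoutGuide.trailingAnchor, constant: -16),
        button.bottomAnchor.constraint(
            equalTo: overlay.safeAreaLayoutGuide.bottomAnchor, constant: -16)
    ])

    // Reveal the button once the delay has elapsed.
    DispatchQueue.main.asyncAfter(deadline: .now() + delay) { [weak button] in
        button?.isHidden = false
    }
}
```

With this variant, the delegate callback would pass its `playerViewController` argument as the first parameter. A fuller implementation would likely also remove the button when the interstitial finishes on its own, for example from the corresponding didPresent delegate callback.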

21:14 - Setting custom playback speeds

    // Setting custom playback speeds
    let player = AVPlayerViewController()
    player.player = AVPlayer() // Placeholder: some AVPlayer
    present(player, animated: true)

    let newSpeed = AVPlaybackSpeed(rate: 2.5, localizedName: "Two and a half times speed")
    player.speeds.append(newSpeed)

23:02 - Hiding the playback speed menu

    // Hiding the playback speed menu
    let player = AVPlayerViewController()
    player.player = AVPlayer() // Placeholder: some AVPlayer
    present(player, animated: true)

    // An empty list of speeds hides the menu
    player.speeds = []
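
The session also describes selectedSpeed, selectSpeed, and localizedNumericName for apps that need a speed menu outside the player UI, but shows no snippet for them. A minimal sketch of such a menu follows. The function name `makePlaybackSpeedMenu` and the menu title are hypothetical, and the checkmark logic assumes that comparing rates is enough to identify the active speed.

```swift
import AVKit
import UIKit

/// Hypothetical helper (not from the session): builds a UIMenu that mirrors
/// the player's list of speeds for use outside the player UI.
@available(iOS 16.0, *)
func makePlaybackSpeedMenu(for playerViewController: AVPlayerViewController) -> UIMenu {
    let currentRate = playerViewController.selectedSpeed?.rate

    let actions: [UIAction] = playerViewController.speeds.map { speed in
        UIAction(
            // localizedNumericName is the string the system menu displays.
            title: speed.localizedNumericName,
            state: speed.rate == currentRate ? .on : .off
        ) { _ in
            // Only called in response to an explicit user selection.
            playerViewController.selectSpeed(speed)
        }
    }
    return UIMenu(title: "Playback Speed", children: actions) // Assumed title
}
```

Because the menu is built from a snapshot of selectedSpeed, it would need to be rebuilt after each selection to keep the checkmark current. Playback itself should still be started with play(), as the session notes, so the selected rate is respected.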
