I will try to answer your questions to the best of my ability.

Q: What is a Shared Space, and how does it work?

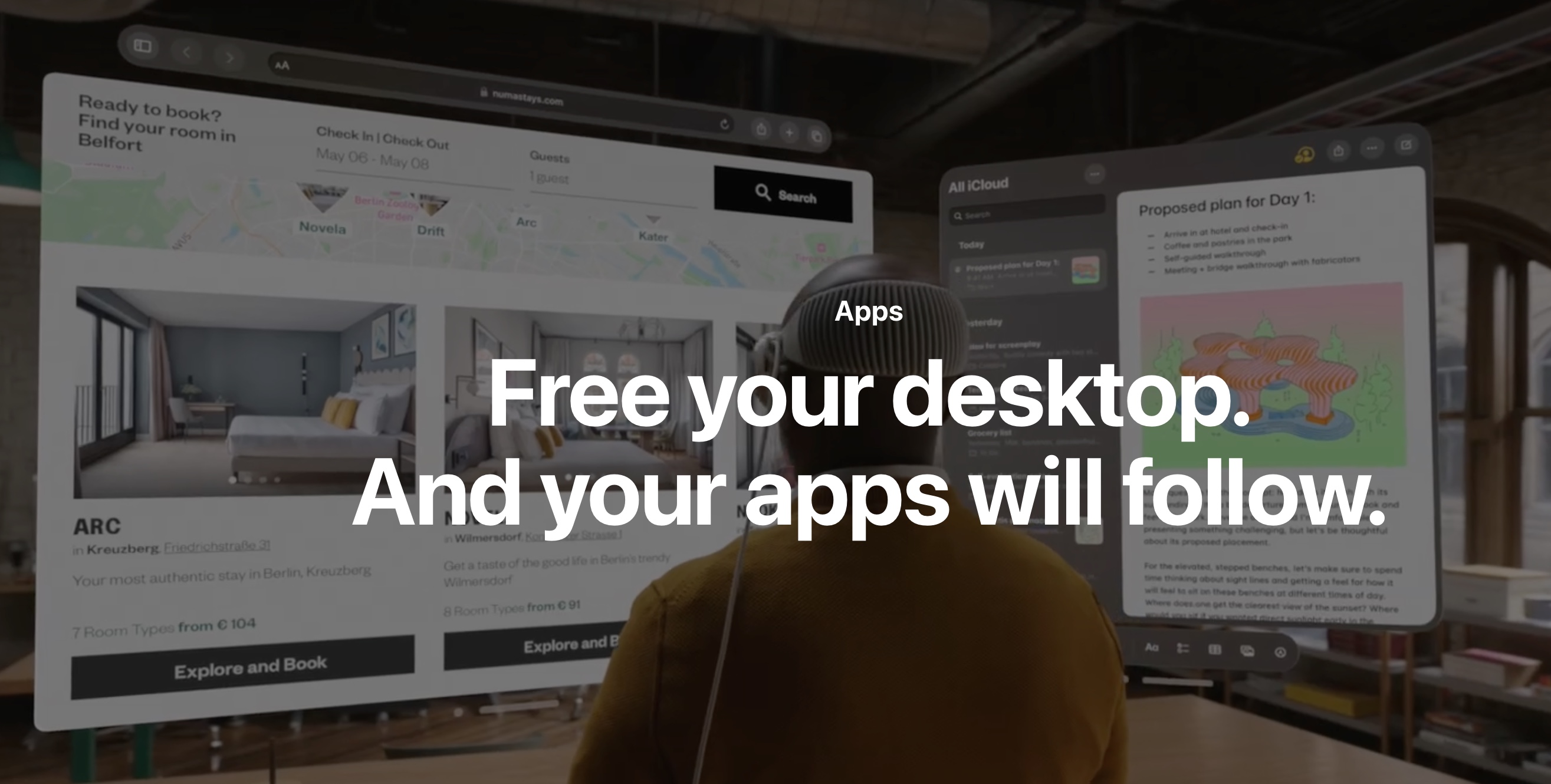

A: The Shared Space is where apps appear when you open them. In a Shared Space, multiple apps "share" the same "space" (hence the name). For example, you could open Keynote at 12 o'clock, Notes at 11 o'clock, and Safari at 1 o'clock, with the clock positions describing where each app sits around you. When Apple says it is the default way apps launch, they mean that if you already have Pages open at 12 o'clock and you open Messages, Messages will open alongside it, for example at 11 o'clock, instead of replacing it. Obviously, if you don't like where an app opens, you can always move it. Here is what the Developer website says about Shared Spaces: "By default, apps launch into the Shared Space, where they exist side-by-side — much like multiple apps on a Mac desktop. Apps can use windows and volumes to show content, and the user can reposition these elements wherever they like."

Q: What is the difference between the app types?

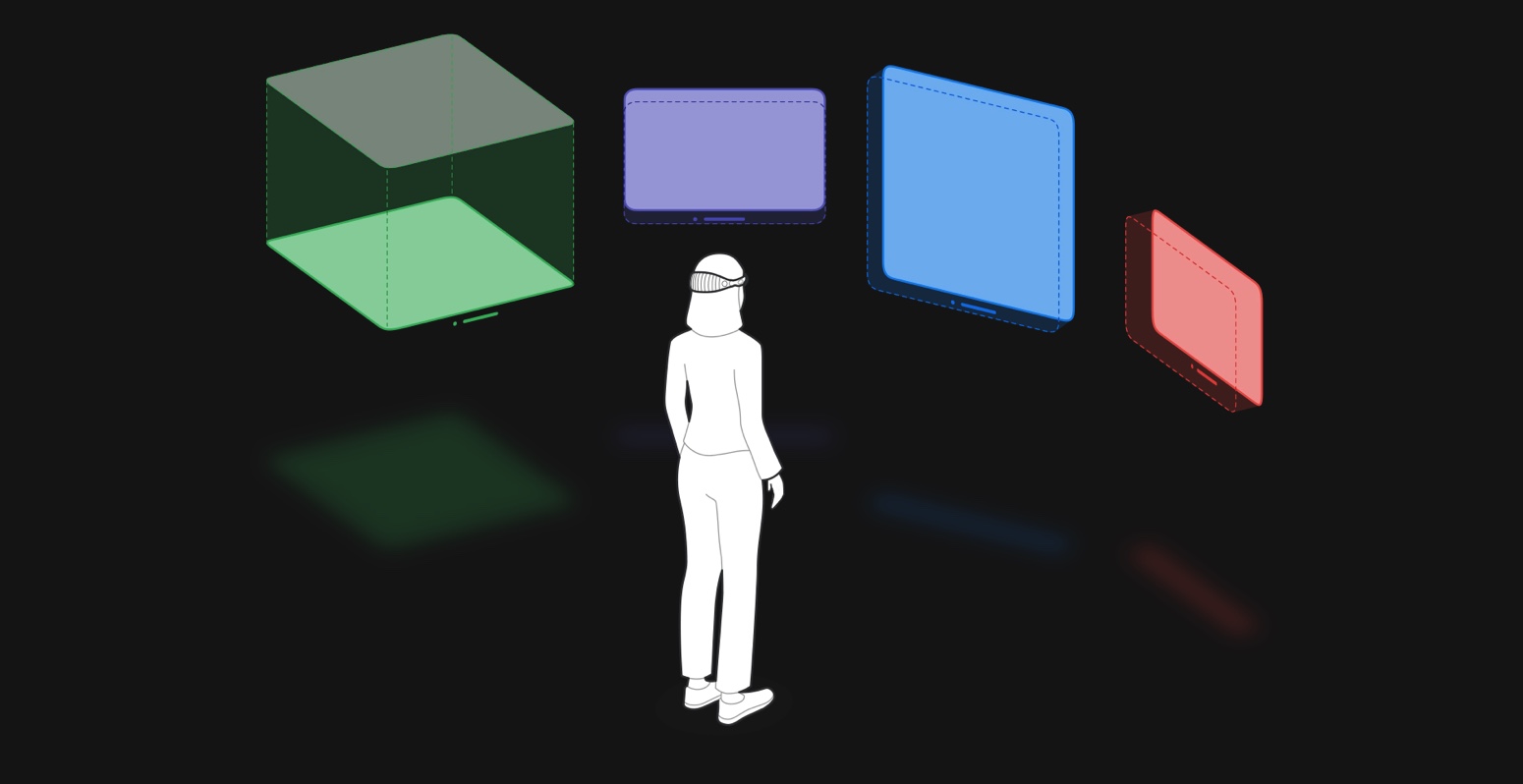

A: There are three different ways an app can show its content. I'll run through each one.

1. Windows: A window is how most apps will work. For example, Safari works in a window. It is a 2D plane floating in the air, with some 3D touches such as buttons and shadows. Here is what the Developer website says about Windows: "You can create one or more windows in your visionOS app. They’re built with SwiftUI and contain traditional views and controls, and you can add depth to your experience by adding 3D content."
2. Volumes: A volume is another app type. This one is designed for 3D objects, but not for 3D surroundings. For example, a fish tank app would open in a volume. It has depth: you can move it around, place it on the table in front of you, and walk around it, but you don't go inside it. Another example is opening a USDZ file: if someone texted you a 3D file and you opened it, it would open in a volume. Volumes differ from windows in size, but they can still share the Shared Space with window apps. So you could be reading an article about the heart while looking at a 3D model of the heart. Here is what the Developer website says about Volumes: "Add depth to your app with a 3D volume. Volumes are SwiftUI scenes that can showcase 3D content using RealityKit or Unity, creating experiences that are viewable from any angle in the Shared Space or an app’s Full Space."
3. Full Spaces: A Full Space takes priority over all other apps. For example, if I used the SkyGuide app to see the stars and planets around me, it would be a Full Space. While you are in a Full Space, no other apps are shown; that one app takes up the "Full Space". Here is what the Developer website says about Full Spaces: "For a more immersive experience, an app can open a dedicated Full Space where only that app’s content will appear. Inside a Full Space, an app can use windows and volumes, create unbounded 3D content, open a portal to a different world, or even fully immerse someone in an environment."
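For anyone curious about the developer side, here is a minimal sketch of how one app might declare all three types in SwiftUI on visionOS. The app name, the window IDs ("article", "heart-model", "sky"), the `ArticleView` view, and the "Heart" 3D asset are all hypothetical; `WindowGroup`, `.windowStyle(.volumetric)`, `ImmersiveSpace`, `Model3D`, and `RealityView` are the visionOS SDK pieces. It needs Xcode with the visionOS SDK to build, so treat it as an illustration rather than a finished app.

```swift
import SwiftUI
import RealityKit

// Hypothetical app illustrating the three app types described above.
@main
struct HeartExplorerApp: App {
    var body: some Scene {
        // 1. Window: a flat SwiftUI window. As the first scene, it is what
        //    opens in the Shared Space when the app launches.
        WindowGroup(id: "article") {
            ArticleView()
        }

        // 2. Volume: a WindowGroup with the volumetric style, sized in three
        //    dimensions so the model can be viewed from any angle.
        WindowGroup(id: "heart-model") {
            // Assumes a "Heart" USDZ asset is bundled with the app (hypothetical).
            Model3D(named: "Heart")
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.5, height: 0.5, depth: 0.5, in: .meters)

        // 3. Full Space: an ImmersiveSpace; while it is open, only this
        //    app's content appears.
        ImmersiveSpace(id: "sky") {
            RealityView { content in
                // Add unbounded 3D entities to `content` here.
            }
        }
    }
}

// Hypothetical view: an article window with buttons that open the other scenes.
struct ArticleView: View {
    @Environment(\.openWindow) private var openWindow
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace

    var body: some View {
        VStack(spacing: 16) {
            Text("Reading about the heart")
            // Opens the volume next to this window in the Shared Space.
            Button("Show 3D heart") {
                openWindow(id: "heart-model")
            }
            // Opening an immersive space is asynchronous.
            Button("Enter Full Space") {
                Task { _ = await openImmersiveSpace(id: "sky") }
            }
        }
        .padding()
    }
}
```

In this sketch, the article window and the 3D heart can sit side by side in the Shared Space, which matches the heart example above, while the "sky" scene only appears when the user explicitly enters the Full Space.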

Q: What is passthrough?

A: Passthrough is a fancy tech word meaning, "We use cameras to capture what you would see, and then show it to you on a screen." The Apple Vision Pro's front glass is not actually see-through. The cameras and sensors on the outside of the headset take in the outside world and then display it on the screens inside the headset, one for each eye. Basically, what you see is a regurgitation of what the outside cameras see. When Apple says you "remain connected," they mean you can still see your surroundings, so you can walk around with the headset on, or make dinner with the recipe floating in front of you. Passthrough is what makes the headset usable in everyday life.

I have attached some photos to make it clearer.

Shared Space

Volume beside Window

Full Space

Each Type