
What’s new in privacy

At Apple, we believe that privacy is a fundamental human right. Learn about new technologies on Apple platforms that make it easier for you to implement essential privacy patterns that build customer trust in your app. Discover privacy improvements for Apple's platforms, as well as a study of how privacy shaped the software architecture and design for the input model on visionOS.

Chapters

- 1:01 - Privacy pillars

- 3:03 - Photos picker

- 5:44 - Screen capture picker

- 7:46 - Calendar access

- 10:29 - Oblivious HTTP (OHTTP)

- 14:22 - Communication Safety

- 17:46 - App sandbox

- 21:57 - Advanced Data Protection

- 23:41 - Safari Private Browsing

- 26:40 - Safari app extensions

- 27:22 - Spatial input model

Resources

Related Videos

WWDC23

- Discover Calendar and EventKit

- Elevate your windowed app for spatial computing

- Embed the Photos Picker in your app

- Ready, set, relay: Protect app traffic with network relays

- What’s new in App Store Connect

- What’s new in Safari extensions

- What’s new in ScreenCaptureKit



Hi there! I'm Michael from Privacy Engineering, and welcome to "What's new in privacy." At Apple, we believe privacy is a fundamental human right.

To respect and protect it is all of our shared responsibility.

For this reason, privacy is a central component to how we approach designing new and improved features at Apple.

Many products and services have become a central mainstay in all our lives, and so you have become a vital partner in protecting people.

Providing a great privacy experience in your app, where people understand and control what data is being accessed and for what purpose, helps them trust your app, especially in sensitive parts of their lives.

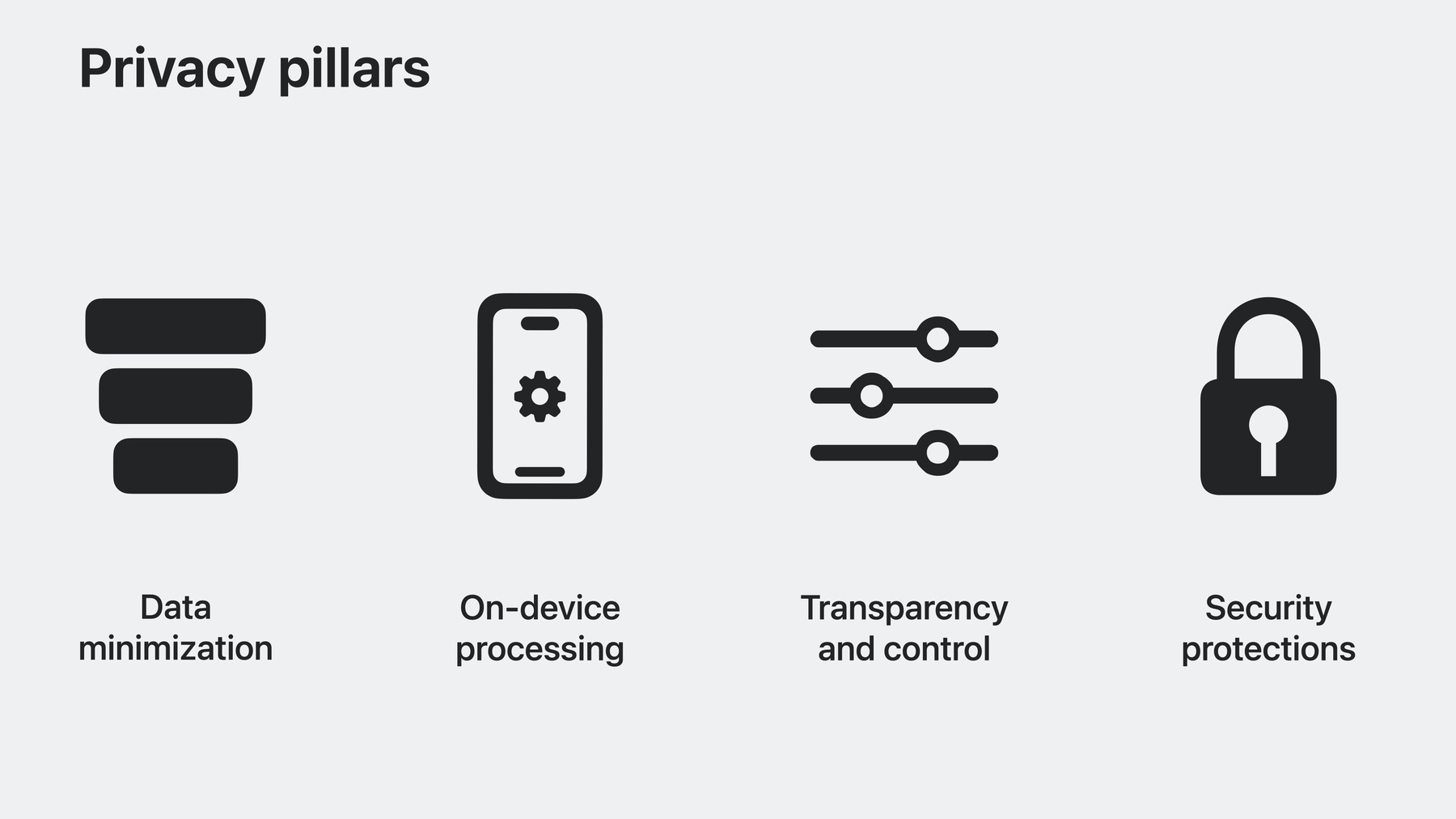

The privacy pillars are a great guide for you when building privacy into your app.

You might have heard us talk about them before, as these are four central ideas we use at Apple to think about the privacy of our products and services.

The first pillar is data minimization: use only the data needed to build a feature.

This applies throughout your app's architecture, from the amount of data that features access, to data shared with application servers, to data that might be shared with third parties.

Second is on-device processing: tap into the power of the device to process data locally and avoid sharing it with any servers.

Third, transparency and control is about making sure people understand the what, why, when, and where of data access and processing, and giving them adequate controls before that happens.

It also means enabling them to change their mind later.

The last pillar is security protections: apply strong technical mitigations to enforce the other pillars, such as end-to-end encryption.

To help you implement the privacy pillars in your app, I will tell you about new tools with privacy-centric design that you can adopt, give the latest updates on important platform changes for privacy, and finally, I will talk about how the spatial input model on Apple's newest platform was designed to protect privacy.

I will start with new privacy-enhancing technologies coming to Apple's platforms that make it easier for you to build great apps with great privacy.

This includes a set of new APIs that allow your app to obtain access to content seamlessly, while giving users fine-grain control over sharing, as well as new APIs to better protect communication with servers and people.

I will begin with improvements to the Photos picker, designed to provide low-friction access to photos.

Over the years, our photo libraries have grown massively as more and more of our precious moments are captured there, starting with embarrassing childhood photos, to the last hiking trip.

For this reason, a fundamental way to build up trust in your app is to empower people to make fine-grain decisions about which data they share with your app and when they share it.

So if someone wants to use your app to share the most scenic photos from their last trip, they can do so without granting your app access to all photos.

This is what the Photos picker allows you to do.

This API gives your app access to selected photos or videos, without requiring permission to access the entire photo library.

And in iOS 17 and macOS Sonoma, you can fully embed the picker into your app for a completely seamless experience.

Even though the photos look like they are part of your app, they are rendered by the system and only shared when selected, so the user's photos always remain in their control.

With the new customization options, you can choose whether you want to show the picker without any chrome, like this, or as a minimal, single row of photos that can be scrolled horizontally, or as an inline version of the full picker, with a new Options menu, providing controls about sharing photo metadata, such as captions or location information.
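As a rough illustration of the embedded picker, here is a minimal SwiftUI sketch, assuming iOS 17 and the PhotosUI framework. The view name `TripPhotoPicker`, the selection limit of 10, and the 320-point height are placeholders chosen for illustration, not values from the session.

```swift
import SwiftUI
import PhotosUI

// Hypothetical view: embeds the system Photos picker inline in the app's UI.
struct TripPhotoPicker: View {
    // Only the items the user selects are handed to the app.
    @State private var selection: [PhotosPickerItem] = []

    var body: some View {
        VStack {
            PhotosPicker(
                selection: $selection,
                maxSelectionCount: 10,           // illustrative limit
                selectionBehavior: .continuous,  // update the binding as items are picked
                matching: .images
            ) {
                Text("Select photos")
            }
            .photosPickerStyle(.inline)                             // embed rather than present a sheet
            .photosPickerAccessoryVisibility(.hidden, edges: .all)  // hide the picker chrome
            .frame(height: 320)

            Text("\(selection.count) selected")
        }
    }
}
```

Swapping `.inline` for `.compact` should give the single-row variant described above.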

The Photos picker is a great and fast way to obtain photos, as you don't need to worry about obtaining permission for accessing the entire library, or designing and implementing a photo-picking flow.

Consider adopting the picker to individually access photos instead of requesting full access.

For an in-depth explanation of the picker's new capabilities, watch the video "Embed the Photos picker in your app."

And in case you are developing an app that has a strong requirement for accessing the full photo library, iOS 17 has a redesigned permission dialog that includes the number of photos and a sample of what will be shared.

This helps people make the decision that is right for them about what to share.

Because the preferences might change over time, the system also periodically reminds people if your app has full access to the photo library.

Next up is the Screen Capture picker, a new API in ScreenCaptureKit for macOS that enables people to share only the windows or screen your app needs when screen sharing, while providing a better experience.

Prior to macOS Sonoma, when a user wants to screen share their presentation in a virtual video conference, they need to grant the conferencing app permission to record the full screen via the Settings app, resulting in a poor experience and risk of oversharing.

With the new SCContentSharingPicker API, macOS Sonoma shows a window picker on your behalf where people can pick the screen content that they want to share.

macOS makes sure to only share the selected windows or screen as soon as they are picked.

Because of the explicit action to record the screen, your app is permitted to record selected content for the duration of the screen capture session.

This means your app is not required to obtain separate permission to capture the screen or build its own screen content picker -- this is all handled by SCContentSharingPicker.

To make sure that people are always aware, macOS Sonoma also includes a new screen sharing menu bar item serving as a reminder that your app is recording the screen.

Keep this in mind and only record screen content when it is expected.

When clicked, the menu expands to provide a preview of the shared content.

This also allows quickly adding or removing screen content to the capture session or ending it altogether.

SCContentSharingPicker also provides options for customization in order to adjust it based on your app's needs, such as preferred selection modes or applications.
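A minimal sketch of presenting the system picker and receiving the selection might look like the following, assuming a macOS 14 deployment target. The class name `ScreenShareController` and the chosen picker modes are illustrative assumptions; starting the actual `SCStream` with the returned filter is left out.

```swift
import ScreenCaptureKit

// Hypothetical controller that asks the system picker which content to share.
final class ScreenShareController: NSObject, SCContentSharingPickerObserver {
    private let picker = SCContentSharingPicker.shared
    // The content the user picked, if any.
    private(set) var selectedFilter: SCContentFilter?

    func presentPicker() {
        // Customize the picker: here, only single windows or single displays.
        var configuration = SCContentSharingPickerConfiguration()
        configuration.allowedPickerModes = [.singleWindow, .singleDisplay]
        picker.defaultConfiguration = configuration

        picker.add(self)        // receive selection callbacks
        picker.isActive = true  // the picker must be active before presenting
        picker.present()
    }

    // Called when the user picks or changes the shared content.
    func contentSharingPicker(_ picker: SCContentSharingPicker,
                              didUpdateWith filter: SCContentFilter,
                              for stream: SCStream?) {
        selectedFilter = filter // use this filter to create or update an SCStream
    }

    // Called when the user dismisses the picker without choosing.
    func contentSharingPicker(_ picker: SCContentSharingPicker,
                              didCancelFor stream: SCStream?) {
        selectedFilter = nil
    }

    func contentSharingPickerStartDidFailWithError(_ error: Error) {
        print("Picker failed to start: \(error)")
    }
}
```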

For more details, watch "What's new in ScreenCaptureKit."

Calendar is another area that now enables seamless experiences in your app, especially for apps that only create new events.

The calendar provides a detailed view into people's lives, such as doctor appointments or flight information, which is why people may be surprised or even say no when your app asks for access.

For apps that are only seeking access to write calendar events, this can result in a failure to add events, frustration, and maybe even people missing a concert or a good friend's birthday party.

In order to avoid this issue, there are two important changes to Apple's platforms for Calendar access.

First, if your app only creates new calendar events, I have great news for you: with EventKitUI, your app does not need any permission.

This is made possible by rendering EventKitUI view controllers outside of your app, without any changes to the APIs or functionality.
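For example, a sketch of the permission-free path on iOS could present `EKEventEditViewController` with a prefilled event. The class name `ConcertViewController`, the event title, and the dates are hypothetical.

```swift
import UIKit
import EventKit
import EventKitUI

// Hypothetical screen that offers "Add to Calendar" without requesting access.
final class ConcertViewController: UIViewController, EKEventEditViewDelegate {
    private let store = EKEventStore()

    func addConcertToCalendar() {
        // Prefill an event; the system UI saves it only if the user confirms.
        let start = Date().addingTimeInterval(24 * 60 * 60) // illustrative: tomorrow
        let event = EKEvent(eventStore: store)
        event.title = "Concert"
        event.startDate = start
        event.endDate = start.addingTimeInterval(2 * 60 * 60)

        let editor = EKEventEditViewController()
        editor.eventStore = store
        editor.event = event
        editor.editViewDelegate = self
        present(editor, animated: true)
    }

    // Dismiss the editor whether the user saved or canceled.
    func eventEditViewController(_ controller: EKEventEditViewController,
                                 didCompleteWith action: EKEventEditViewAction) {
        controller.dismiss(animated: true)
    }
}
```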

Second, if you prefer to provide your own UI for creating events, there is a new add-only calendar permission, allowing your app to add events without access to other events on the calendar.

This is another great way to integrate your app's events into the user's day, without them having to worry whether your app might be fetching content of their calendar.

And, should your app need full Calendar access later, you can ask once to upgrade.

It is best to make this request when linked to user intent.

When asked at an unexpected time or for an unexpected reason, it might be rejected and can degrade the experience in your app.

Help people understand why the access is needed, by defining a meaningful purpose string.
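If you build your own event-creation UI, the add-only flow can be sketched roughly as follows. The helper name `addEvent(titled:start:duration:)` is hypothetical, and the sketch assumes the app declares an `NSCalendarsWriteOnlyAccessUsageDescription` purpose string in its Info.plist.

```swift
import EventKit

// Hypothetical helper: adds one event using the add-only (write-only) permission.
// Returns false if the user declines access.
func addEvent(titled title: String, start: Date, duration: TimeInterval) async throws -> Bool {
    let store = EKEventStore()

    // Asks for add-only access; the app cannot read existing events with it.
    guard try await store.requestWriteOnlyAccessToEvents() else { return false }

    let event = EKEvent(eventStore: store)
    event.title = title
    event.startDate = start
    event.endDate = start.addingTimeInterval(duration)
    event.calendar = store.defaultCalendarForNewEvents
    try store.save(event, span: .thisEvent)
    return true
}
```

If the app later needs to read events, `requestFullAccessToEvents()` is the corresponding upgrade request, best made at a moment tied to clear user intent.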

In order to provide the benefits of write-only access to all apps, there are two important things to remember.

If your app has been granted Calendar access previously, it defaults to write-only permission on upgrade to iOS 17 or macOS Sonoma.

Similarly, if you are linking against an older version of EventKit and your app asks for Calendar access, the system only prompts for write-only access.

In this case, when your app attempts to fetch Calendar events, the system automatically asks to upgrade your app to full access.

For more information about how to integrate EventKit and EventKitUI into your app, watch "Discover Calendar and EventKit."

Next is the new Oblivious HTTP API, which helps you to hide client IP addresses from your servers and potentially sensitive app usage patterns from network operators.

Knowing when and which apps people use on a daily basis can provide deep insight into their lives.

Because cellular and Wi-Fi network operators can observe what servers someone connects to, they can observe their personal app usage and life patterns.

And some network operators may be interested in using their position to learn about how people use your app.

This could be especially sensitive for some kinds of apps, such as dating apps or apps for specific health conditions.

IP addresses are an essential element for communication on the internet; however, the IP address can be abused to determine someone's location or their identity.

Exposure to IP addresses can result in additional challenges for you if you want to implement features with anonymity guarantees in your app, such as client analytics.

Apple platforms now support Oblivious HTTP, or OHTTP for short, in order to help you protect people's app usage and IP address by separating the who from what.

OHTTP is a standardized internet protocol that is designed to be lightweight, proxying encrypted messages at the application layer to allow for fast transactional server interactions.

With OHTTP, the network operator can only observe a connection to the relay provider, instead of your application server.

The cornerstone of this architecture is the relay, which knows the client's IP address and the destination server name, but none of the encrypted content.

It is assumed that the relay always sees connections to your application server, so the only meaningful information the relay gains is the client IP.

The final connection to your application server is made by the relay.

When the relay is operated by a third party, no single party has full visibility of the source IP, destination IP, and the content.

This also enables you to add technical guarantees to features where you don't want to be able to identify or track users, such as anonymous analytics.

With OHTTP support, you have the chance to build stronger internet privacy protections that impact people meaningfully.

Services like iCloud Private Relay already make use of OHTTP for its great performance and strong privacy protections.

For example, Private Relay uses it to protect all DNS queries.

To learn more about how you can adopt OHTTP, watch the network relay video.
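As a starting point, configuring a `URLSession` to use an OHTTP relay might look like the sketch below. It assumes the Network framework's `ProxyConfiguration` API on iOS 17 and macOS Sonoma; the helper name and all parameter values are hypothetical, and the initializer labels are worth checking against the current documentation. The gateway key configuration must come from your own gateway.

```swift
import Foundation
import Network

// Hypothetical helper: builds a URLSession whose requests travel through an OHTTP relay.
func makeObliviousSession(relayURL: URL,
                          relayResourcePath: String,
                          gatewayKeyConfiguration: Data) -> URLSession {
    // The relay learns the client IP but cannot read the encrypted request.
    let relay = ProxyConfiguration.RelayHop(
        http3RelayEndpoint: .url(relayURL),
        tlsOptions: NWProtocolTLS.Options()
    )

    // The gateway key configuration is used to encrypt messages for the gateway.
    let oblivious = ProxyConfiguration(
        obliviousHTTPRelay: relay,
        relayResourcePath: relayResourcePath,
        gatewayKeyConfiguration: gatewayKeyConfiguration
    )

    let configuration = URLSessionConfiguration.default
    configuration.proxyConfigurations = [oblivious]
    return URLSession(configuration: configuration)
}
```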

Adopting OHTTP also means you will need to think about how to replace IP addresses in your system architecture -- for example, to perform real-user detection.

Refer to "Replace CAPTCHAs with Private Access Tokens" from WWDC22 to learn how to replace IP reputation systems with a privacy-preserving alternative.

Encrypting DNS queries is another essential part of protecting app usage from networks.

To learn how, watch "Enable Encrypted DNS" from WWDC20.

The last new tool is Communication Safety and the new Sensitive Content Analysis framework, which utilizes on-device processing to help you protect children in your app.

Apple platforms and the apps you build have become an important part of many families, as children use our products and services to explore the digital world and communicate with family and friends.

Communication Safety helps families to keep children safe, by warning children and providing helpful resources if they receive or attempt to share photos that likely contain nudity.

And it is important that these protections are applied throughout Apple's platforms, as well as in your app.

To this end, Communication Safety expands beyond Messages to also detect sensitive content when sharing via AirDrop, when leaving a message on FaceTime, when sharing contact posters in the Phone app, and in the Photos picker.

We also make these features available to everyone, no matter their age, with Sensitive Content Warning.

And now, with the new Sensitive Content Analysis framework, you can detect sensitive content in your app too.

It taps into the same on-device technology with system-provided ML models, so you don't have to share any content with any servers.

And with this framework, you get this without having to deal with the complexity of training large ML models and packing them into your app.

Integration into your app is possible with just a few lines of code.

To get started, create an instance of SCSensitivityAnalyzer.

You can check the analysisPolicy attribute to decide whether analysis is needed, and what kind of intervention should be shown if the image or video contains nudity.

Then, call the analyzeImage method with the URL of the photo, or the CGImage, that you want to analyze.

For analyzing a video, call the videoAnalysis method instead.

This returns a handler, so you can track progress and cancel the analysis if needed.

To obtain the analysis result, call hasSensitiveContent on the handler.

If isSensitive is true, the image or video likely contains nudity.

In this case, your app should provide its own intervention -- which can consist of blurring or otherwise obfuscating the image or video -- and an option to view the content.

Also, check the analysis policy to tailor the intervention depending on whether Communication Safety or Sensitive Content Warning is enabled.
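One way to tailor the intervention to the policy is sketched below. The `Intervention` enum and the function name are hypothetical app-side names. The mapping assumes that `.simpleInterventions` corresponds to Sensitive Content Warning and `.descriptiveInterventions` to Communication Safety, which should be confirmed against the framework documentation.

```swift
import SensitiveContentAnalysis

// Hypothetical app-side description of what the UI should do.
enum Intervention {
    case none              // show the content normally
    case blurWithReveal    // blur, with an option to view
    case blurWithGuidance  // blur, with an option to view plus helpful resources
}

// Picks an intervention from the analysis result and the current policy.
func intervention(isSensitive: Bool, policy: SCSensitivityAnalysisPolicy) -> Intervention {
    guard isSensitive else { return .none }
    switch policy {
    case .disabled:
        return .none
    case .simpleInterventions:       // assumed: Sensitive Content Warning is enabled
        return .blurWithReveal
    case .descriptiveInterventions:  // assumed: Communication Safety is enabled
        return .blurWithGuidance
    @unknown default:
        return .blurWithReveal       // conservative fallback for future policies
    }
}
```

In practice, `isSensitive` would come from the `isSensitive` property of the analysis result and `policy` from the analyzer's `analysisPolicy`.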

You can find more detailed design guidelines for interventions in the Apple Developer documentation.

Those are the new APIs to adopt to offer great privacy in your app.

In addition to that, there are a few privacy changes to existing features on Apple platforms.

This includes new ways you can protect data in your app as well as privacy improvements for Safari and Safari app extensions.

First are new privacy protections for macOS, designed to help you protect data in your app from other apps on the same device.

Locations on disk like the Desktop, Documents, and Downloads folder have a system-managed permission.

This ensures people are in control over when apps have access to their private data.

This model works well for files that people directly interact with, like a project presentation or a spreadsheet with a budget.

Some applications might store private data in different locations, such as a messaging app that has a database of sent and received messages, or a notes app with vacation plans.

These files are often stored in locations like the Library folder, or for App Sandboxed apps, the app's data container.

macOS Sonoma gives users additional control over who can access their data.

Specifically, macOS ensures that they give permission before an app can access data in an application data container from a different developer.

This can impact your application in two ways.

First, if your application stores data outside of system-managed data stores, adopt App Sandbox in order to extend this new protection to data of your users.

Then, all files created by your app will be protected.

Apps that are already using App Sandbox get this new protection automatically.

Second, if your app accesses data from other apps, there are a few ways that you can ask for permission.

Without any changes on your side, macOS Sonoma will ask for permission when your app accesses a file in another app's data container.

The permission is valid for as long as your app remains open, and once the app is quit, the permission resets.

You should only attempt to read other apps' files when it is expected.

If the timing of the prompt is surprising, or the purpose is unclear, your app's access might be denied.

A meaningful purpose string will help people understand why your app is requesting access.

There are a few alternative ways you can get explicit permission to access files from other apps.

For seamless access to individual files and folders, use NSOpenPanel.

This shows the macOS file picker outside of your process, and your app can read or write selected resources once the user confirms the selection.

NSOpenPanel also allows you to specify the path that is shown by default in the picker to make the selection even easier.
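A minimal sketch of this flow, assuming an AppKit app, is shown here. The function name `chooseFileToImport(startingAt:)` and the prompt text are hypothetical.

```swift
import AppKit

// Hypothetical helper: lets the user pick a single file to import.
// The picker runs outside the app's process; the app only gets the chosen URL.
@MainActor
func chooseFileToImport(startingAt directory: URL?) -> URL? {
    let panel = NSOpenPanel()
    panel.canChooseFiles = true
    panel.canChooseDirectories = false
    panel.allowsMultipleSelection = false
    panel.directoryURL = directory            // folder shown by default
    panel.message = "Choose a file to import"

    // Returns the selected URL, or nil if the user cancels.
    return panel.runModal() == .OK ? panel.url : nil
}
```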

For backup utilities or disk-management tools that have already been granted Full Disk Access, no additional prompt will be shown at time of access, since people have already chosen to allow these apps access to all files.

In addition, all apps signed with your Team ID can access data in your other apps' containers by default, without a permission prompt.

So if you release a new app that imports data from a previous version of your app, this will work seamlessly.

However, there may be instances where you want to define a more restrictive policy.

For example, if you build a code interpreter such as an editor, browser, or a shell, you might want macOS to ask for permission when this app accesses data from a messaging app that you also developed.

To do so, you can specify an NSDataAccessSecurityPolicy in your app's Info.plist, to replace the default same-team policy with an explicit AllowList.

When specified, listed processes and installer packages are permitted to access your app's data without additional consent while other apps require permission.

Advanced Data Protection is another opportunity for you to protect data of your users.

Advanced Data Protection was added in 2022, providing a way for people to enable end-to-end encryption for the vast majority of their data stored in iCloud.

By adopting CloudKit, you can end-to-end encrypt data stored in CloudKit by your app whenever someone enables Advanced Data Protection.

And this is possible without any work on your end to manage encryption keys, encryption operations, or complex and risky recovery flows.

To extend the great privacy benefits of Advanced Data Protection to your users, you only need to follow a few steps.

First, make sure to use encrypted data types for all fields in your CloudKit schema.

This includes CKAsset fields by default, and for most data types in CloudKit, there is an encrypted variant, such as EncryptedString.

Then, you can use the encryptedValues API, to retrieve or store data on your CloudKit records.

All encryption and decryption operations are abstracted away by this API for your convenience.
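For instance, storing and reading an encrypted string field could look roughly like this. The record type `Note` and field name `body` are hypothetical and would need matching encrypted fields in your CloudKit schema.

```swift
import CloudKit

// Hypothetical helper: creates a record with an encrypted string field.
func makeNote(body: String) -> CKRecord {
    let record = CKRecord(recordType: "Note")
    // Values set through encryptedValues are encrypted before they are saved.
    record.encryptedValues["body"] = body
    return record
}

// Hypothetical helper: reads the field back as plain text.
func noteBody(of record: CKRecord) -> String? {
    record.encryptedValues["body"] as? String
}
```

Saving and fetching the record goes through the usual `CKDatabase` calls, which are omitted here.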

As a result, your app's data receives the full benefit of security breach and privacy protection that is available from Advanced Data Protection, whenever someone enables this feature.

For an explanation of how you can adopt CloudKit in your app, including code samples, watch "What's New in CloudKit" from WWDC21.

Next up are new fingerprinting and tracking protections in Safari Private Browsing mode.

Safari is designed with privacy at its core.

Private Browsing Mode enables additional privacy protections in Safari, such as making sure that when a tab is closed, Safari doesn't remember the pages you visited, search history, or AutoFill information.

Private Browsing mode in Safari 17 adds advanced tracking and fingerprinting protection, which includes two new protections for preventing tracking across websites.

First, Safari prevents known tracking and fingerprinting resources from being loaded.

If you are a website developer, make sure to test your website's functionality in Private Browsing mode: focus testing on login flows, cross-site navigation from your website, and use of browser APIs related to screen, audio, and graphics.

You can reload without advanced tracking and fingerprinting protections, to confirm if a change in behavior of your website is due to the new protections.

Do this by right-clicking on the reload button on macOS, via the page settings button on iOS, or by testing in Safari in normal browsing mode.

You can also open the Web Inspector to examine any output to the JavaScript console.

Network requests that were blocked as a result of contacting known trackers show up as a message beginning with "Blocked connection to known tracker."

Another common method for cross-website tracking is unique identifiers embedded into URLs, for example, in query parameters.

To give people control over where they can be tracked, another new protection is removal of tracking parameters as part of browser navigation, and when copying a link.

When a tracking parameter is detected, Safari strips the identifying components of the URL, while leaving nonidentifiable parts intact.
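To make the idea concrete, here is a small conceptual sketch of stripping identifying query parameters from a URL. It is not Safari's implementation, and the parameter names are made up, since the session does not list the ones Safari detects.

```swift
import Foundation

// Hypothetical parameter names; the list Safari actually uses is not given here.
let hypotheticalTrackingParameters: Set<String> = ["click_id", "tracker_id"]

// Removes the listed query parameters and leaves the rest of the URL intact.
func strippingTrackingParameters(from url: URL) -> URL {
    guard var components = URLComponents(url: url, resolvingAgainstBaseURL: false),
          let items = components.queryItems else {
        return url // no query string, nothing to strip
    }
    let kept = items.filter { !hypotheticalTrackingParameters.contains($0.name) }
    // Use nil rather than an empty array to avoid leaving a trailing "?".
    components.queryItems = kept.isEmpty ? nil : kept
    return components.url ?? url
}

let original = URL(string: "https://example.com/article?id=42&click_id=abc123&lang=en")!
print(strippingTrackingParameters(from: original).absoluteString)
// prints "https://example.com/article?id=42&lang=en"
```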

Remember that ad attribution can be done without identifying individuals across websites.

For example, Private Click Measurement is a privacy-preserving alternative to tracking parameters for advertising attribution.

And it is now also available in Private Browsing mode for direct-response advertising, where no data is written to disk and attribution is limited to a single browsing context, based on a single tab.

This follows Safari's existing strict model of ephemeral browsing and separation of tabs in Private Browsing.

For more information, check out the video "Meet privacy-preserving ad attribution" from WWDC21.

The last platform change is the new permission model for app extensions in Safari.

With Safari 17, the permission model Apple pioneered for web extensions is coming to app extensions as well.

This means that people are able to choose which webpages your extension is able to access on a per-site basis.

We're also giving them control over which extensions can run in Private Browsing mode.

To learn more about these changes to extensions and how permissions for your extensions can be granted per-site, you can watch the video "What's new in Safari extensions."

The privacy principles behind new tools for developers and our platform changes extend across all of the features we build at Apple, including the input model on our new spatial computing platform.

The system is really simple to use: just look around, decide what you want to interact with, and tap.

There are no new permissions, no extra work for every app developer, and no worrying about apps tracking where you look.

The results are a great new product with great privacy.

Let's go through the privacy engineering approach to the development of eye and hand control.

First, there are high-level goals the input model needs to achieve.

The input experience should be fast and fluid, enabling natural interaction with UI elements.

It should give real-time feedback about what people are looking at in order to provide confidence in interacting with UI elements of all kinds and sizes.

In addition, existing iPhone and iPad applications should work out of the box.

And last, it should not require new app permissions just for apps to receive basic inputs.

Next are the privacy goals for the input model.

To prevent apps from learning sensitive details about your eyes, including medical conditions, only relevant system components should be able to access the eye cameras.

To enable permissionless access, apps should be able to work without learning details about people's eyes or hands.

And what people look at can reveal what they're thinking, so it is important that apps work without learning what people look at and only learn what they interact with.

Let's go over the system Apple engineered to achieve all of these goals.

The internal and external camera data that measures eyes and hands for the input system is processed in an isolated system process.

This delivers a measurement of where the eyes and hands are located to the eye and pinch detection systems, and abstracts away complex camera processing from all other system components, including your app.

The hover feedback system combines what is shown onscreen with the eye position to determine what the user is looking at.

If they are looking at a UI element, the system adds a highlighting layer as it is rendered.

This is done by the rendering engine outside of the app's process and only visible to the person using the device, so they understand what they are looking at without revealing any information to apps.

And as soon as a pinch gesture is detected, the system delivers a normal tap event for the highlighted UI element to your app.

With this system architecture, the complexity of translating camera data into input events is handled by the operating system.

This means, no changes are required to receive inputs on this new platform.

In addition to the default system behavior, UIKit, SwiftUI, and RealityKit make it easy to tune these effects to match your app's design, with the same privacy protections as system-provided UI elements.

You can change the type, shape, and what elements the hover effects apply to.
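In SwiftUI, tuning the hover effect might be sketched like this. The view name `PlayTile`, the capsule shape, and the tap handler are illustrative assumptions.

```swift
import SwiftUI

// Hypothetical tappable tile with a customized hover effect.
struct PlayTile: View {
    var body: some View {
        Text("Play")
            .padding()
            .background(.regularMaterial, in: Capsule())
            .contentShape(.hoverEffect, Capsule()) // shape the system highlights
            .hoverEffect(.highlight)               // type of effect
            .onTapGesture {
                // The app learns only that a tap happened, not where the user looked.
                print("Play tapped")
            }
    }
}
```

The highlight itself is drawn by the system, so the view never receives gaze data.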

To learn more about how to adopt these effects, watch "Meet SwiftUI for spatial computing" as well as "Elevate your windowed app for spatial computing."

This spatial input model is made possible by implementing the privacy pillars.

The system minimizes the amount of data each component of the system needs access to.

All of the processing takes place on the device.

People have control, since only intentional interactions are shared with apps.

And where you look is protected using process isolation enforced by the operating system's kernel.

By making privacy a core design goal, it is possible to deliver both great features and great privacy.

We hope that learning about the new privacy changes across Apple platforms has helped inspire you, as they make it easier for you to build trust through great privacy.

Minimize data accessed by your app with content-picking APIs that provide seamless user control.

Protect children in your app by adopting the Sensitive Content Analysis framework with on-device processing.

And for protecting user data in your app, adopt security protections such as App Sandbox on macOS, and make sure to encrypt data in CloudKit.

Thank you for watching this video.

We can't wait to see what you build.


16:00 - Detect sensitive content

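```swift
import SensitiveContentAnalysis

// Analyzing photos
let analyzer = SCSensitivityAnalyzer()
let policy = analyzer.analysisPolicy

// Use one of the two calls below, depending on what you have:
let result = try await analyzer.analyzeImage(at: url)          // from a file URL
let result = try await analyzer.analyzeImage(image.cgImage!)   // from a CGImage

// Analyzing videos
let handler = analyzer.videoAnalysis(forFileAt: url)
let result = try await handler.hasSensitiveContent()

// intervene(_:) stands for your app's own intervention UI
if result.isSensitive {
    intervene(policy)
}
```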
