
Integrate with motorized iPhone stands using DockKit

Discover how you can create incredible photo and video experiences in your camera app when integrating with DockKit-compatible motorized stands. We'll show how your app can automatically track subjects in live video across a 360-degree field of view, take direct control of the stand to customize framing, directly control the motors, and provide your own inference model for tracking other objects. Finally, we'll demonstrate how to create a sense of emotion through dynamic device animations.

To learn more techniques for image tracking, check out "Detect animal poses in Vision" from WWDC23 and "Classify hand poses and actions with Create ML" from WWDC21.


♪ ♪ Onur Tackin: Hi. My name is Onur Tackin. I'm an Engineering Manager, and today, I will be introducing you to integration with motorized iPhone stands using DockKit. In this video, I'll talk about what DockKit is, then dive into how it works out of the box, walk through how you can customize your apps using DockKit, and then finally go into motion as an affordance using device animations.

So, what is DockKit? DockKit is the framework that allows the iPhone to act as the central compute for motorized camera stands.

DockKit stands extend the field of view of any iPhone camera to 360 degrees of pan and 90 degrees of tilt by supporting a Pitch and Yaw motion model and an automatic system tracker. This lets users focus on their content in any camera app without worrying about staying in frame. These stands may include simple buttons for power and for disabling tracking, and an LED indicator that shows whether tracking is active and you are in frame. You pair the phone with the stand, and then it is good to go. All the magic happens in the application and system services on the iPhone itself. Because motor control and subject tracking are handled at the system level, any app that uses the iOS camera APIs can take advantage of DockKit. That creates opportunities for better experiences and new features in apps for Video Capture, Live Streaming, Video Conferencing, Fitness, Enterprise, Education, Healthcare, and more.

Instead of just talking about DockKit, let me demonstrate it to you.

In front of me, I have a prototype DockKit stand. I already paired it with the iPhone. When on the stand, the phone will communicate with the paired dock through DockKit. Now, let's give it a try. I'm going to launch the built-in Camera app and when I press this button here, it's gonna start tracking.

As I move around the table, the dock tracks me. Notice the green LED blinking to denote I'm in frame.

Now, let me switch to the back camera.

Notice how the dock responds by rotating 180 degrees to get me into the field of view. Super cool. But this doesn't work only with the built-in Camera app. Any app that uses the camera APIs can be used with the dock.

For instance, let's try FILMICPRO. This is the same FILMICPRO that you can download from the App Store today.

When I launch the app, it defaults to the rear-facing camera. And all of this works out of the box. With DockKit stands, you can interact with the space and objects around you. For instance, I can go to my stack of books here and talk about them, or I can rearrange my space, interacting with the objects in it.

See, DockKit applications allow the storyteller to focus on the story instead of worrying about field of view.

With iPhone's built-in image intelligence and the smooth motors, these stands truly come alive. Let's dive into how this works. The DockKit system tracker runs inside the camera processing pipeline: it analyzes camera frames through built-in inference, decides which subject to track, estimates the subject's trajectory, and frames the subject appropriately by driving the motors. Much like a cinematographer observes real-world events and adjusts the camera's perspective, DockKit infers the subject's position from camera frames and adjusts the motors by sending actuation commands to the dock.
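To make the "driving the motors" step concrete, here is a minimal, framework-independent sketch of a proportional controller. It turns the subject's offset from a target point in the frame into pitch and yaw velocity commands. This is not DockKit's tracker, whose internals are not public. The type and function names, the field-of-view values, the gain, the speed limit, and the sign convention (positive yaw pans right, positive pitch tilts up) are all assumptions for illustration.

```swift
/// Hypothetical angular-velocity command in radians per second.
/// Assumed sign convention: positive yaw pans right, positive pitch tilts up.
struct VelocityCommand {
    var pitch: Double
    var yaw: Double
}

/// Proportional controller: command speed grows with the subject's angular error.
/// `subjectCenter` and `target` are normalized image coordinates with a
/// lower-left origin, so (0.5, 0.5) is the center of the frame.
func velocityCommand(
    subjectCenter: (x: Double, y: Double),
    target: (x: Double, y: Double) = (x: 0.5, y: 0.5),
    horizontalFOV: Double = 1.2,  // assumed horizontal field of view, radians
    verticalFOV: Double = 0.9,    // assumed vertical field of view, radians
    gain: Double = 1.5,           // assumed proportional gain, 1/s
    maxSpeed: Double = 1.0        // assumed motor speed limit, rad/s
) -> VelocityCommand {
    // Approximate the angular error by scaling the normalized offset by the FOV.
    let yawError = (subjectCenter.x - target.x) * horizontalFOV
    let pitchError = (subjectCenter.y - target.y) * verticalFOV
    // Scale by the gain, then clamp to the speed limit.
    func clamp(_ value: Double) -> Double { min(max(value, -maxSpeed), maxSpeed) }
    return VelocityCommand(pitch: clamp(gain * pitchError), yaw: clamp(gain * yawError))
}

// Subject a quarter of the frame to the right of center: pan right, no tilt.
let command = velocityCommand(subjectCenter: (x: 0.75, y: 0.5))
```

In a closed loop, this command would be recomputed on every frame as new detections and motor feedback arrive, so the error shrinks as the stand turns toward the subject.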

Motor control is handled by the DockKit daemon, which manages and communicates with the DockKit stand. Actuation commands are sent to the stand via the DockKit protocol, and sensor feedback from the stand is used to close the loop on motor control. For tracking, camera frames are analyzed via ISP inference and passed to DockKit at 30 frames per second. A Visual Understanding Framework under the hood helps with multi-person scenarios. Face and body bounding boxes are generated and fed to a multi-model, system-level tracker. The tracker generates a track for each person or object it follows and runs a statistical filter, an extended Kalman filter (EKF), to smooth over gaps and errors in the inference results. After all, real-world signals are not always perfect. The tracker's estimates for the subject are combined with the motors' position and velocity feedback, as well as the phone's IMU data, to arrive at a final trajectory and actuator commands. Often, multiple subjects are present in a frame. By default, DockKit tracks the primary subject with center framing. If the primary subject engages a second person or an object, DockKit also tries to frame that person or object.
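The smoothing idea can be illustrated with a much simpler filter. The sketch below is a linear Kalman filter with a constant-velocity model on a single coordinate, such as the x position of a face box. It is not the multi-object EKF that DockKit runs, and the noise parameters are assumed values. When inference misses the subject for a frame, the filter predicts forward from its velocity estimate. This is how a track can coast through short occlusions.

```swift
/// Constant-velocity Kalman filter for one normalized image coordinate.
/// A simplified, linear stand-in for the EKF described above.
struct ConstantVelocityFilter {
    private(set) var position: Double
    private(set) var velocity = 0.0
    // 2x2 state covariance [[p00, p01], [p10, p11]], starting uncertain.
    private var p00 = 1.0, p01 = 0.0, p10 = 0.0, p11 = 1.0
    let processNoise: Double      // assumed acceleration noise intensity
    let measurementNoise: Double  // assumed detector noise variance

    init(initialPosition: Double, processNoise: Double = 0.01, measurementNoise: Double = 0.05) {
        position = initialPosition
        self.processNoise = processNoise
        self.measurementNoise = measurementNoise
    }

    /// Predict step: x = F x, P = F P F^T + Q, with F = [[1, dt], [0, 1]].
    private mutating func predict(dt: Double) {
        position += velocity * dt
        let q = processNoise
        let n00 = p00 + dt * (p01 + p10) + dt * dt * p11 + q * dt * dt * dt / 3
        let n01 = p01 + dt * p11 + q * dt * dt / 2
        let n10 = p10 + dt * p11 + q * dt * dt / 2
        let n11 = p11 + q * dt
        (p00, p01, p10, p11) = (n00, n01, n10, n11)
    }

    /// Update step with a position measurement (H = [1, 0]).
    private mutating func update(measurement z: Double) {
        let s = p00 + measurementNoise      // innovation variance
        let (k0, k1) = (p00 / s, p10 / s)   // Kalman gain
        let innovation = z - position
        position += k0 * innovation
        velocity += k1 * innovation
        // P = (I - K H) P
        (p00, p01, p10, p11) = ((1 - k0) * p00, (1 - k0) * p01, p10 - k1 * p00, p11 - k1 * p01)
    }

    /// Processes one frame; pass `nil` when inference missed the subject.
    mutating func step(measurement: Double?, dt: Double) -> Double {
        predict(dt: dt)
        if let z = measurement { update(measurement: z) }
        return position
    }
}

// Per frame: pass the detector's x position, or nil when it missed the subject.
var tracker = ConstantVelocityFilter(initialPosition: 0.5)
let smoothedX = tracker.step(measurement: 0.52, dt: 1.0 / 30.0)
```

The 30 fps step matches the frame rate mentioned above. A full tracker would run one such filter per coordinate and per track.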

For example, here we have Mahmut, Steve, Dhruv, and Vamsi. Mahmut is the primary subject denoted by the green bounding box. Notice that there are bounding boxes for the body as well as the detected face. Even with other team members obstructing Mahmut and crossing paths, the tracker continues to track the primary subject.

When the recognition or the inference gives incorrect results due to obstruction, the statistical tracker corrects the error and is able to continue to track Mahmut. That's how DockKit works without you having to add any additional code to your app. However, things get really exciting when you integrate with DockKit to deliver new features your customers will love. Let's explore how you can take control of the DockKit accessory.

This involves obtaining a reference to the dock. From there, you can choose to change the framing, specify what is being tracked, or, after stopping system tracking, control the motors directly. First, you will want to register for dock accessory state changes. A docking or undocking notification occurs when a person places the iPhone on, or removes it from, a compatible dock accessory. Receiving this notification is a prerequisite for modifying the tracking behavior.

You can query the state of the dock through DockAccessoryManager state events. The only relevant states are Docked and Undocked; connectivity is managed by DockKit itself. The Docked state also means that the iPhone is connected to the dock via the DockKit protocol. Through state events, your application can also get the state of the tracking button. Once you know that the iPhone is docked, you can take control of other aspects of the accessory. One of the most useful is letting your app manage the way in which the video is cropped.
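The docked/undocked bookkeeping can be sketched without the framework. In a real app, the events would arrive asynchronously through DockAccessoryManager's state events. Here they are plain values so the logic can run anywhere. The `DockState`, `DockStateEvent`, and `DockSession` types and their properties are hypothetical stand-ins, not DockKit declarations.

```swift
/// Hypothetical mirror of the two states the session calls relevant.
enum DockState { case docked, undocked }

/// Hypothetical state event: which accessory, its state, and the tracking button.
struct DockStateEvent {
    let accessoryID: String
    let state: DockState
    let trackingButtonEnabled: Bool
}

/// Keeps track of the currently docked accessory and gates customization on it.
final class DockSession {
    private(set) var currentAccessoryID: String?
    private(set) var trackingButtonEnabled = false

    /// Customizing framing, tracking, or motors only makes sense while docked.
    var canCustomizeTracking: Bool { currentAccessoryID != nil }

    func handle(_ event: DockStateEvent) {
        switch event.state {
        case .docked:
            currentAccessoryID = event.accessoryID
            trackingButtonEnabled = event.trackingButtonEnabled
        case .undocked:
            // Ignore undock events for an accessory we are not holding.
            guard currentAccessoryID == event.accessoryID else { return }
            currentAccessoryID = nil
            trackingButtonEnabled = false
        }
    }
}

// Simulated event stream; a real app would receive these asynchronously.
let session = DockSession()
for event in [
    DockStateEvent(accessoryID: "dock-1", state: .docked, trackingButtonEnabled: true),
    DockStateEvent(accessoryID: "dock-1", state: .undocked, trackingButtonEnabled: false),
] {
    session.handle(event)
    print(event.state, session.canCustomizeTracking)
}
```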

There are two ways you can control the cropping of the camera's field of view.

You can either select left, center, or right alignment for the automatic framing, or specify a region of interest. Let's go through cases where you may want to choose one or the other.

By default, DockKit will keep your subject centered in frame. This works well for simple things like video streaming, but there are use cases where this may not be ideal.

For example, what if your app injects custom graphic overlays, like this logo, into the video frame? In this case, you'd want to make sure that your subject isn't obscured by the artwork.

You can correct this by simply changing the framing mode. Here, I'm specifying "right" to balance against the graphic, which is aligned to the left third of the frame. With that simple bit of code, the composition is perfect for the opening sequence of this video. Another way to control cropping is to specify a region of interest in the video frame.
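Returning to the logo example, simple arithmetic can show the benefit of a non-center framing mode. This sketch assumes each framing mode places the subject's center on a rule-of-thirds line: 1/3, 1/2, or 2/3 of the frame width. DockKit's actual placement is not specified in this session. The `FramingMode` enum, the `Span` type, and the helper functions are hypothetical.

```swift
/// Hypothetical framing modes mirroring the left/center/right options.
enum FramingMode { case left, center, right }

/// Horizontal extent in normalized coordinates (0 = left edge, 1 = right edge).
struct Span {
    let minX: Double
    let maxX: Double
    func overlaps(_ other: Span) -> Bool { minX < other.maxX && other.minX < maxX }
}

/// Assumed target for the subject's center under each mode.
func targetCenterX(for mode: FramingMode) -> Double {
    switch mode {
    case .left: return 1.0 / 3.0
    case .center: return 0.5
    case .right: return 2.0 / 3.0
    }
}

/// Where a subject of the given normalized width would end up.
func subjectSpan(width: Double, mode: FramingMode) -> Span {
    let center = targetCenterX(for: mode)
    return Span(minX: center - width / 2, maxX: center + width / 2)
}

// A logo covering the left part of the frame, and a subject 30% of the frame wide.
let logo = Span(minX: 0.05, maxX: 0.40)
for mode in [FramingMode.center, .right] {
    print(mode, subjectSpan(width: 0.3, mode: mode).overlaps(logo))
}
```

Under the assumed thirds placement, a centered subject spans 0.35 to 0.65 and collides with the logo. A right-framed subject starts at about 0.52 and clears it.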

Take this video conferencing app, for example. All video frames are intended to be cropped to a square aspect ratio. However, the default framing of DockKit could cause someone's face to be cut off.

You can fix this by setting a region of interest on the DockKit accessory.

The upper-left corner of the iPhone's display is considered the point of origin, and regions of interest are defined in normalized coordinates. In this example, I'm telling the DockKit accessory that the region of interest is a centered square. With this adjustment to the region of interest, the subject is perfectly cropped within the frame. With DockKit, you can also do custom motor control in your app, either for utility or as an affordance. This opens up lots of new feature opportunities.
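Before moving on to motor control, here is a small sketch that computes a centered region of interest in normalized coordinates from the frame size and a target aspect ratio. The square in the video conferencing example is one such region. Because the region is centered, the result is the same whether the origin is the upper-left or the lower-left corner. The `NormalizedRect` type and the function name are hypothetical. In an app, you would convert the result to a CGRect before setting it on the accessory.

```swift
/// Hypothetical normalized rectangle (all values in 0...1).
struct NormalizedRect: Equatable {
    var x, y, width, height: Double
}

/// Largest centered crop with the given aspect ratio (width / height),
/// expressed in normalized coordinates of a frame of the given pixel size.
func centeredRegion(aspectRatio: Double, frameWidth: Double, frameHeight: Double) -> NormalizedRect {
    let frameAspect = frameWidth / frameHeight
    var width = 1.0, height = 1.0
    if frameAspect > aspectRatio {
        // Frame is wider than the crop: keep full height, trim the sides.
        width = (frameHeight * aspectRatio) / frameWidth
    } else {
        // Frame is taller than the crop: keep full width, trim top and bottom.
        height = (frameWidth / aspectRatio) / frameHeight
    }
    return NormalizedRect(x: (1 - width) / 2, y: (1 - height) / 2, width: width, height: height)
}

// Square region of interest for a 1920 x 1080 landscape frame.
let square = centeredRegion(aspectRatio: 1, frameWidth: 1920, frameHeight: 1080)
```

For a 1920 x 1080 frame, the square region is x = 0.21875 and width = 0.5625, at full height.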

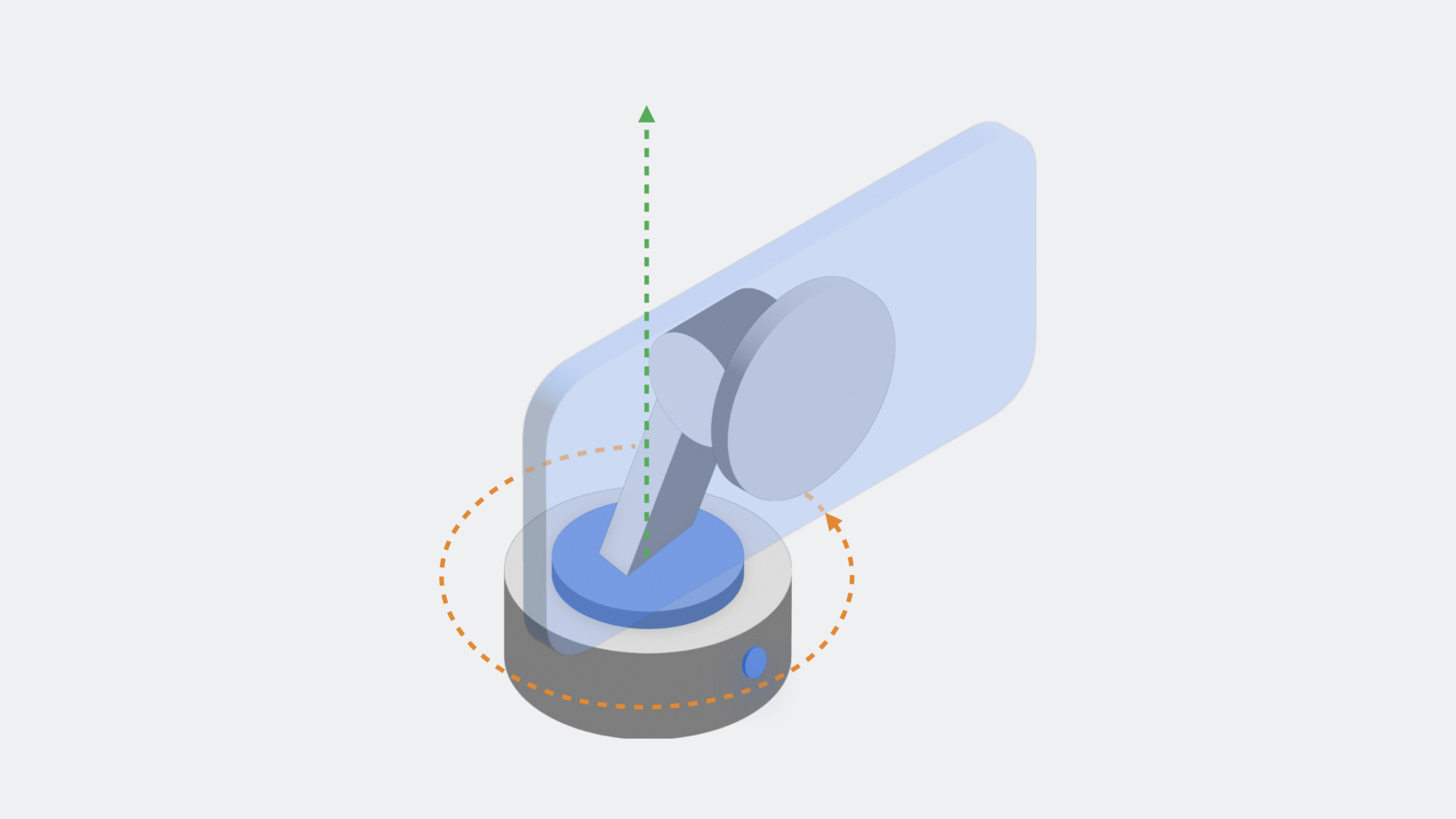

Since DockKit enables system tracking by default, you need to disable system tracking (set it to false) before taking control of the motors or performing your own custom tracking. DockKit stands operate on two axes of rotation: X and Y. Tilt, or specifically Pitch, is rotation around the X axis, aligned with the motor behind the magnetic dock point. Pan, known as Yaw, is rotation around the Y axis, aligned with the motor at the base of the stand. Let's look at an example of controlling this in code.
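The pitch and yaw axes can be illustrated without hardware. The sketch below integrates angular-velocity commands over time. It replays a sequence of 0.2 rad/s of yaw to the right with 0.1 rad/s of pitch down for 2 seconds, then the reverse, which brings the simulated stand back to where it started. Several details are assumptions: the types, the sign convention (positive yaw = right, negative pitch = down), and the ±45° pitch limit, which is one reading of the 90 degrees of tilt mentioned earlier. On a real stand, each velocity would be sent to the dock accessory and held for its duration, for example with `Task.sleep`.

```swift
/// Angular velocity in radians per second (assumed: +yaw pans right, -pitch tilts down).
struct AngularVelocity {
    var pitch: Double  // rotation about the X axis
    var yaw: Double    // rotation about the Y axis
}

/// A velocity held for a duration in seconds, like sending a vector and sleeping.
struct MotorSegment {
    let velocity: AngularVelocity
    let duration: Double
}

/// Hypothetical stand model that integrates velocity commands into angles.
struct SimulatedStand {
    private(set) var pitch = 0.0      // radians
    private(set) var yaw = 0.0        // radians, wrapped to [-pi, pi]
    let pitchLimit = Double.pi / 4    // assumed +/-45 degree tilt range

    mutating func run(_ segment: MotorSegment) {
        // Pan is continuous through 360 degrees, so wrap yaw instead of clamping it.
        yaw = (yaw + segment.velocity.yaw * segment.duration).remainder(dividingBy: 2 * Double.pi)
        // Tilt has hard stops, so clamp pitch to the assumed range.
        let newPitch = pitch + segment.velocity.pitch * segment.duration
        pitch = min(max(newPitch, -pitchLimit), pitchLimit)
    }
}

var stand = SimulatedStand()
// Move right at 0.2 rad/s while pitching down at 0.1 rad/s for 2 seconds...
stand.run(MotorSegment(velocity: AngularVelocity(pitch: -0.1, yaw: 0.2), duration: 2))
// ...then reverse: left at 0.2 rad/s, pitch up at 0.1 rad/s.
stand.run(MotorSegment(velocity: AngularVelocity(pitch: 0.1, yaw: -0.2), duration: 2))
```

After the first segment, the simulated stand sits at 0.4 rad of yaw and -0.2 rad of pitch. The reversed segment returns both to zero.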

Say you want to move the motor at a low speed of 0.2 radians per second to the right while pitching down at 0.1 radians per second. First, you define the initial velocity vector, then send that vector to the dock. You can keep the motion going for 2 seconds by sleeping the task. Then reverse direction with a different vector to move left at 0.2 rad/s and pitch up at 0.1 rad/s.

Now, that's not all that's possible when your app integrates with DockKit. In addition to controlling the motors directly, you can also take control of the inference. You can use the Vision framework, your own custom ML model, or whichever perception algorithm your application needs. From the custom inference output, you construct observations to feed into DockKit to track the object. So, what is an observation? An observation is any rectangular bounding box that represents a subject of interest in the camera frame.

That is, what you want to track. It could be a face, or an animal, or even a hand.

Honestly, any object or point that you can observe over time.

The bounding boxes are defined in normalized coordinates with the origin at the lower-left corner. For instance, to create an observation for this detected face, I'd use a bounding box whose origin is roughly (0.25, 0.5), with its width and height expressed as fractions of the overall image frame.
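Your detector may report boxes in pixel coordinates with a top-left origin, as UIKit and many image-processing libraries do. Such boxes must be normalized and flipped vertically before they describe the same region in the lower-left-origin convention used for observations. This is a minimal sketch; the `PixelRect` and `NormalizedRect` types and the function name are hypothetical, and an app would use CGRect instead.

```swift
/// Hypothetical rectangle types; use CGRect in an app.
struct PixelRect { var x, y, width, height: Double }                   // top-left origin, pixels
struct NormalizedRect: Equatable { var x, y, width, height: Double }   // lower-left origin, 0...1

/// Converts a top-left-origin pixel box into a lower-left-origin normalized box.
func normalizedLowerLeft(_ box: PixelRect, imageWidth: Double, imageHeight: Double) -> NormalizedRect {
    NormalizedRect(
        x: box.x / imageWidth,
        // The box's bottom edge (y + height, measured from the top) becomes the new origin.
        y: (imageHeight - (box.y + box.height)) / imageHeight,
        width: box.width / imageWidth,
        height: box.height / imageHeight
    )
}

// A 200 x 200 face box whose top-left corner is at (250, 300) in a 1000 x 1000 image.
let face = normalizedLowerLeft(PixelRect(x: 250, y: 300, width: 200, height: 200),
                               imageWidth: 1000, imageHeight: 1000)
```

The result is x = 0.25, y = 0.5, with width and height of 0.2. This lands at the roughly (0.25, 0.5) origin described above.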

To create an observation, you construct a CGRect in normalized coordinates and create an Observation from it. When you do so, you specify whether the observation type is "humanFace" or "object".

By using the "humanFace" option, you can ensure that the system-level multi-person tracking and framing optimizations are still in effect.
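Before observations are handed to the tracker, it can help to clip each box to the unit square and drop boxes that fall entirely outside the frame. The sketch keeps the face-versus-object distinction so that faces can still benefit from the system's multi-person optimizations. It is framework-independent: `ObservationKind`, `TrackingObservation`, and the index-based identifiers are hypothetical stand-ins for DockKit's Observation and its "humanFace"/"object" types. This session does not say whether DockKit clips boxes itself.

```swift
/// Hypothetical stand-ins for the observation types named in the session.
enum ObservationKind { case humanFace, object }

struct NormalizedRect { var x, y, width, height: Double }  // lower-left origin, 0...1

struct TrackingObservation {
    let identifier: Int
    let kind: ObservationKind
    let rect: NormalizedRect
}

/// Clips a rect to the unit square, returning nil if nothing visible remains.
func clippedToFrame(_ r: NormalizedRect) -> NormalizedRect? {
    let minX = max(r.x, 0), minY = max(r.y, 0)
    let maxX = min(r.x + r.width, 1), maxY = min(r.y + r.height, 1)
    guard maxX > minX, maxY > minY else { return nil }
    return NormalizedRect(x: minX, y: minY, width: maxX - minX, height: maxY - minY)
}

/// Builds observations from raw detections, skipping boxes outside the frame.
/// Identifiers here are just detection indices (a simplification).
func makeObservations(_ detections: [(kind: ObservationKind, rect: NormalizedRect)]) -> [TrackingObservation] {
    var observations: [TrackingObservation] = []
    for (index, detection) in detections.enumerated() {
        if let rect = clippedToFrame(detection.rect) {
            observations.append(TrackingObservation(identifier: index, kind: detection.kind, rect: rect))
        }
    }
    return observations
}
```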

Once the observations are created, they can be fed to the tracker. You start by getting the current camera information. In this case, I'm specifying that the orientation is "corrected" to let DockKit know the coordinates are relative to the bottom-left corner of the screen and that no transformation is necessary. Then you pass the observations array and the camera information to the dock accessory. Now, the good news is that for many of the things you may want to observe and track, you don't have to calculate the bounding boxes completely by hand.

The Vision framework is great for custom inference, as it already includes many built-in requests that return bounding boxes, which can easily be converted into trackable observations. These include Body Pose Detection, Animal Body Pose Detection, and even Barcode Recognition. The coordinate system for Vision is the same as DockKit's, so you can pass those results in directly without any conversion. You just have to let DockKit know what the device orientation is. Now, let's walk through implementing custom observations in code.

In this case, I want to replace default face and body tracking with hand tracking using the Hand Pose Detection request.

Start by creating the Vision request (for this use case, VNDetectHumanHandPoseRequest) and the request handler, then perform the request. You can construct an Observation based on the recognized points. For simplicity, I'm focusing on the thumb tip, but I could use any other finger joint or the whole hand to construct the observation. Finally, you pass the observations to DockKit to track.
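Turning a single recognized point, such as the thumb tip, into an observation requires choosing a box around it. Vision reports recognized points in normalized coordinates with a lower-left origin, each with a confidence value, so the sketch below works directly in that space. It ignores low-confidence points and centers a fixed-size box on the point while keeping the box inside the frame. It can also box a whole set of joints instead. The box size and confidence threshold are assumed values, and `RecognizedPoint` and `NormalizedRect` are hypothetical stand-ins for Vision's recognized point and CGRect.

```swift
/// Hypothetical stand-ins: a recognized point (normalized, lower-left origin) and a box.
struct RecognizedPoint { let x: Double; let y: Double; let confidence: Double }
struct NormalizedRect { var x, y, width, height: Double }

/// Centers a square of side `side` on the point, shifted as needed to stay inside
/// the frame. Returns nil for low-confidence points.
func box(around point: RecognizedPoint, side: Double = 0.1, minConfidence: Double = 0.3) -> NormalizedRect? {
    guard point.confidence >= minConfidence else { return nil }
    let x = min(max(point.x - side / 2, 0), 1 - side)
    let y = min(max(point.y - side / 2, 0), 1 - side)
    return NormalizedRect(x: x, y: y, width: side, height: side)
}

/// Bounding box of all sufficiently confident joints, such as a whole hand.
func boundingBox(of points: [RecognizedPoint], minConfidence: Double = 0.3) -> NormalizedRect? {
    let usable = points.filter { $0.confidence >= minConfidence }
    guard let minX = usable.map(\.x).min(), let maxX = usable.map(\.x).max(),
          let minY = usable.map(\.y).min(), let maxY = usable.map(\.y).max() else { return nil }
    return NormalizedRect(x: minX, y: minY, width: maxX - minX, height: maxY - minY)
}
```

A fixed-size box around one joint keeps the tracked target stable. A whole-hand box follows the hand's overall position, but its size changes as the fingers move.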

With that, let's see it in action! I'll launch my custom camera app and start tracking.

As I move my hand to the left, note the stand panning left to follow. And then, as I move my hand to the right, the stand follows in the opposite direction.

If I sweep my hand up, the stand tilts to keep my hand in frame, and it follows again as I sweep my hand back down. Perfect.

With the ability to control the motors directly through the DockKit APIs, you can bring the device to life through animations! The motion of the dock can be used as an affordance for confirmation or as a way to convey emotion. You can create your own custom animations through direct motor control to emulate physical interaction with the phone, like a push or a pull, or you can leverage one of the built-in animations.
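As one way to build a custom animation from direct motor control, the sketch below generates a yaw-velocity profile for a "push" that decays like a damped pendulum. Each sample would be sent to the stand as an angular velocity at a fixed rate. The function name, amplitude, frequency, damping, and update rate are illustrative assumptions, not values from DockKit or its built-in animations.

```swift
import Foundation

/// Yaw velocities (rad/s) for a damped oscillation: v(t) = A * e^(-d*t) * cos(2*pi*f*t).
/// Hypothetical helper; the parameters are illustrative, not tuned for real hardware.
func dampedSwingProfile(
    amplitude: Double = 0.8,   // initial peak speed, rad/s
    frequency: Double = 1.5,   // oscillations per second
    damping: Double = 2.0,     // decay rate, 1/s
    duration: Double = 2.0,    // seconds
    rate: Double = 30.0        // velocity updates per second
) -> [Double] {
    let count = Int((duration * rate).rounded())
    return (0..<count).map { i -> Double in
        let t = Double(i) / rate
        return amplitude * exp(-damping * t) * cos(2 * Double.pi * frequency * t)
    }
}

let profile = dampedSwingProfile()
```

The exponential envelope keeps each swing smaller than the last, so the motion settles instead of oscillating indefinitely.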

The built-in animations are Yes, No, Wakeup, and Kapow. You can see the Wakeup animation in action every time the device starts up. Going back to the custom hand tracking app I just demonstrated, you could trigger a built-in animation any time a specific hand gesture occurs. To do this, you first need to train a custom Hand Action Classification model, which is easily done using the Create ML app. For more information on creating one of these models, be sure to watch "Classify hand poses and actions with Create ML" from WWDC21.

Any time the custom model predicts that the hand gesture occurred, you can trigger the animation. Begin by disabling system tracking, then initiate the animation (in this case, Kapow). The animation starts from the stand's current position and executes asynchronously. Once the animation completes, you will want to re-enable system tracking. Now, back to the demo to see it in action.
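The trigger sequence described above can be sketched independently of the framework: disable system tracking, play Kapow, then re-enable tracking. The `DockControlling` protocol, its method names, the "push" label, and the 0.8 confidence threshold are hypothetical. The methods are synchronous here for brevity, whereas the session describes the animation as executing asynchronously, so `play` is assumed to return only after the animation finishes. A `defer` makes sure system tracking is re-enabled even if the animation fails.

```swift
/// The built-in animations named in the session, as a hypothetical enum.
enum DockAnimation { case yes, no, wakeup, kapow }

enum DockError: Error { case animationFailed }

/// Hypothetical control surface for the stand (synchronous for brevity).
protocol DockControlling {
    func setSystemTrackingEnabled(_ enabled: Bool) throws
    func play(_ animation: DockAnimation) throws  // assumed to return when the animation ends
}

/// Triggers Kapow when the gesture classifier reports the trigger label confidently.
/// Returns true if the animation was played.
@discardableResult
func handleGesture(label: String, confidence: Double, dock: any DockControlling,
                   triggerLabel: String = "push", threshold: Double = 0.8) throws -> Bool {
    guard label == triggerLabel, confidence >= threshold else { return false }
    try dock.setSystemTrackingEnabled(false)
    // Runs on both normal exit and when play(_:) throws.
    defer { try? dock.setSystemTrackingEnabled(true) }
    try dock.play(.kapow)
    return true
}

/// Test double that records the calls it receives.
final class RecordingDock: DockControlling {
    var log: [String] = []
    var failAnimation = false
    func setSystemTrackingEnabled(_ enabled: Bool) throws { log.append("tracking=\(enabled)") }
    func play(_ animation: DockAnimation) throws {
        log.append("play \(animation)")
        if failAnimation { throw DockError.animationFailed }
    }
}
```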

The app will continue to track my hand as I step back a bit. If I make a gesture as if I'm pushing the camera, kapow! The camera swings back and forth in a pendulum motion. Let's do that one more time, because it is so much fun. And kapow! You can use animations like these to end an experience or to express something, anything. I encourage you to experiment with this, and by all means, consider creating your own animations using custom motor control.

DockKit introduces the ability to track objects using motorized stands, giving applications a 360-degree field of view. Objects can be tracked at the system level or by applications using custom inference. And you can use mechanical motion in your applications as an affordance for conveying emotion or utility. To learn even more about ways you could use the Vision framework for custom tracking in DockKit, be sure to check out the video on detecting animal poses. I can't wait for you to get your hands on these amazing accessories and to see the experiences you'll bring to your app when using one.
