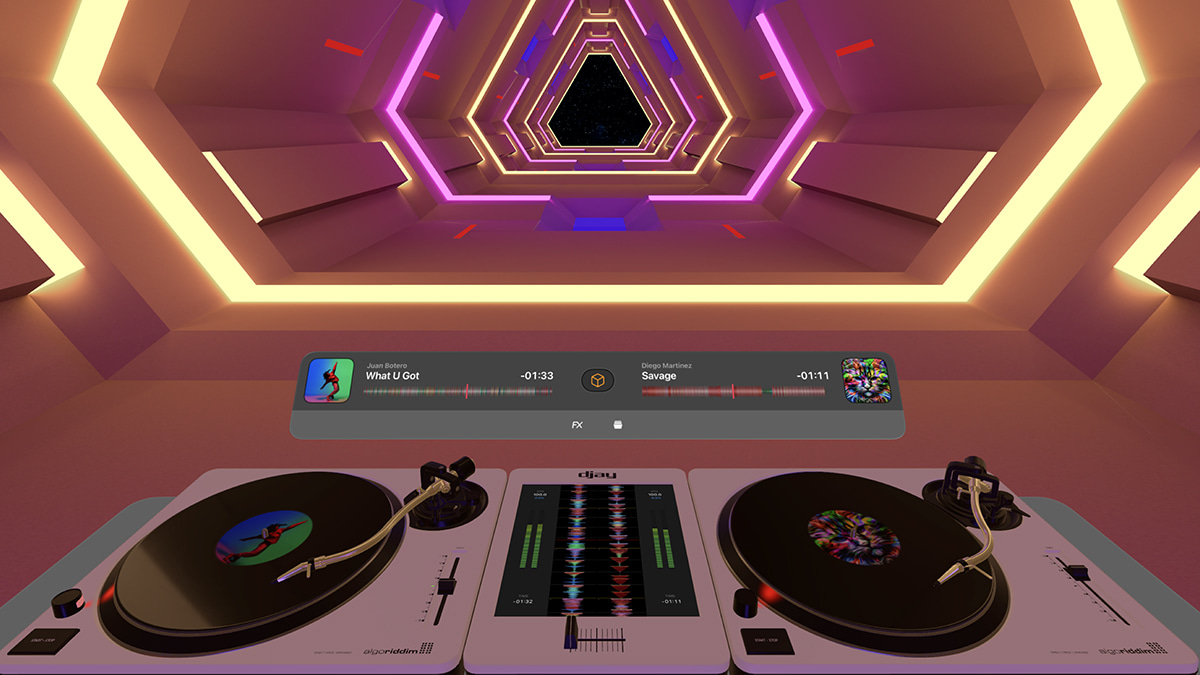

visionOS enables you to create ground-breaking spatial computing experiences that uniquely blend digital content with the physical world. People can interact with your app while staying connected to their surroundings, or immerse themselves completely in a world of your creation. And your experiences can be fluid: start in a window, bring in 3D content, transition to a fully immersive scene, and come right back.

The choice is yours, and it all starts with the building blocks of spatial computing in visionOS.

Windows

You can create one or more windows in your visionOS app. They’re built with SwiftUI and contain traditional views and controls, and you can give them depth by including 3D content.
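As a rough illustration of the paragraph above, the following sketch declares two SwiftUI windows and places a 3D model alongside ordinary controls. The app and view names, the window identifiers, and the "Globe" asset are all hypothetical; the model is assumed to be a USDZ file in the app bundle.

```swift
import SwiftUI
import RealityKit

@main
struct WindowSketchApp: App {
    var body: some Scene {
        // Primary window, shown at launch.
        WindowGroup(id: "main") {
            MainView()
        }

        // A second window that is opened on request.
        WindowGroup(id: "details") {
            Text("Details")
                .padding()
        }
    }
}

struct MainView: View {
    // SwiftUI environment action for opening another window by identifier.
    @Environment(\.openWindow) private var openWindow

    var body: some View {
        VStack(spacing: 24) {
            Text("Hello, visionOS")
                .font(.largeTitle)

            // "Globe" is a hypothetical asset name; the model loads
            // asynchronously and a spinner is shown until it is ready.
            Model3D(named: "Globe") { model in
                model
                    .resizable()
                    .aspectRatio(contentMode: .fit)
            } placeholder: {
                ProgressView()
            }

            Button("Show Details") {
                openWindow(id: "details")
            }
        }
        .padding()
    }
}
```

Giving each `WindowGroup` an identifier is one way to let a view open a specific window later; an app with a single window can omit it.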

Volumes

Add depth to your app with a 3D volume. Volumes are SwiftUI scenes that can showcase 3D content using RealityKit or Unity, allowing you to create experiences that are viewable from any angle in the Shared Space or in an app’s Full Space.
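One possible way to declare a volume with SwiftUI and RealityKit is sketched below, as a standalone app. The identifier, the "Globe" asset name, and the 0.6 m dimensions are assumptions chosen for illustration, not values from the text above.

```swift
import SwiftUI
import RealityKit

@main
struct VolumeSketchApp: App {
    var body: some Scene {
        WindowGroup(id: "globe-volume") {
            // "Globe" is a hypothetical USDZ asset in the app bundle.
            Model3D(named: "Globe") { model in
                model
                    .resizable()
                    .aspectRatio(contentMode: .fit)
            } placeholder: {
                ProgressView()
            }
        }
        // The volumetric style turns the scene into a 3D volume
        // that people can view from any angle.
        .windowStyle(.volumetric)
        // Assumed size: a 0.6 m cube, expressed in physical units.
        .defaultSize(width: 0.6, height: 0.6, depth: 0.6, in: .meters)
    }
}
```

Sizing the volume in meters rather than points keeps the content at a predictable physical scale; whether that suits a given app depends on the content.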

Spaces

By default, apps launch into the Shared Space, where they exist side by side, much like multiple apps on a Mac desktop. Apps can use windows and volumes to show content, and people can reposition these elements wherever they like. For a more immersive experience, an app can open a dedicated Full Space where only that app’s content appears. Inside a Full Space, an app can use windows and volumes, create unbounded 3D content, open a portal to a different world, or even fully immerse people in an environment.
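The move from the Shared Space into a Full Space and back could look roughly like the following standalone sketch. It starts in a window and opens an `ImmersiveSpace` on request. The identifier "hypothetical-space", the type names, and the sphere placed in front of the viewer are illustrative assumptions.

```swift
import SwiftUI
import RealityKit

@main
struct SpaceSketchApp: App {
    // Current immersion style; .mixed keeps the surroundings visible.
    @State private var immersionStyle: ImmersionStyle = .mixed

    var body: some Scene {
        // The app launches into the Shared Space with this window.
        WindowGroup {
            LauncherView()
        }

        // A Full Space where only this app's content appears.
        ImmersiveSpace(id: "hypothetical-space") {
            RealityView { content in
                // Unbounded 3D content: a small sphere placed about
                // 1.5 m up and 1 m in front of the space's origin.
                let sphere = ModelEntity(
                    mesh: .generateSphere(radius: 0.1),
                    materials: [SimpleMaterial(color: .blue, isMetallic: false)]
                )
                sphere.position = [0, 1.5, -1]
                content.add(sphere)
            }
        }
        // Allow both a mixed and a fully immersive presentation.
        .immersionStyle(selection: $immersionStyle, in: .mixed, .full)
    }
}

struct LauncherView: View {
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace
    @Environment(\.dismissImmersiveSpace) private var dismissImmersiveSpace
    @State private var isSpaceOpen = false

    var body: some View {
        Button(isSpaceOpen ? "Leave Full Space" : "Enter Full Space") {
            Task {
                if isSpaceOpen {
                    await dismissImmersiveSpace()
                    isSpaceOpen = false
                } else {
                    // Opening can fail or be cancelled, so check the result.
                    let result = await openImmersiveSpace(id: "hypothetical-space")
                    if case .opened = result {
                        isSpaceOpen = true
                    }
                }
            }
        }
        .padding()
    }
}
```

Tracking the open state in the window's view is a simplification; a larger app would more likely keep it in a shared model so other scenes can react to it.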