-

What's new in Nearby Interaction

Discover how the Nearby Interaction framework can help you easily integrate Ultra Wideband (UWB) into your apps and hardware accessories. Learn how you can combine the visual-spatial power of ARKit with the radio sensitivity of the U1 chip to locate nearby stationary objects with precision. We'll also show you how you can create background interactions using UWB accessories paired via Bluetooth.


♪ instrumental hip hop music ♪ ♪ Hi, I'm Jon Schoenberg, and I'm an engineer on the Location Technologies team at Apple.

In this session, I'll cover the new features we've brought to Nearby Interaction, that are going to enable you to build richer and more diverse experiences with spatial awareness.

The Nearby Interaction framework makes it simple to take advantage of the capabilities of U1 -- Apple's chip for Ultra Wideband technology -- and enables creating precise and spatially aware interactions between nearby Apple devices or accessories compatible with Apple's U1 chip for Ultra Wideband.

Let's get started with a quick review of what's been available to you over the last two years.

When Nearby Interaction was introduced at WWDC 2020, the functionality focused on creating and running a session between two iPhones with U1.

At WWDC 2021, the functionality was extended to support running sessions with Apple Watch and third-party Ultra Wideband-compatible accessories.

If you're interested in a deep dive into the Nearby Interaction framework's APIs, please review the WWDC20 session "Meet Nearby Interaction" and the WWDC21 session "Explore Nearby Interaction with third-party accessories."

We've been blown away by the community's response to Nearby Interaction, and in this session, I'm excited to unveil the new capabilities and improvements for you.

I will focus on two main topics: enhancing Nearby Interaction with ARKit, and accessory background sessions.

Along the way, I'll share some improvements that make it easier to use the Nearby Interaction framework and I'll conclude with an update on third-party hardware support that was announced last year.

We're excited about what you will do with the new capabilities, so let's dive right into the details.

I'll start with an exciting new capability that tightly integrates ARKit with Nearby Interaction.

This new capability enhances Nearby Interaction by leveraging the device trajectory computed from ARKit.

ARKit-enhanced Nearby Interaction leverages the same underlying technology that powers Precision Finding with AirTag, and we're making it available to you via Nearby Interaction.

The best use cases are experiences that guide a user to a specific nearby object such as a misplaced item, object of interest, or object that the user wants to interact with.

By integrating ARKit and Nearby Interaction, distance and direction information is available more consistently than with Nearby Interaction alone, effectively broadening the Ultra Wideband field of view.

Finally, this new capability is best used for interacting with stationary devices.

Let's jump right into a demonstration of the possibilities this new integration of ARKit and Nearby Interaction enables in your application.

I've got an application here for my Jetpack Museum that has Ultra Wideband accessories to help guide users to the exhibits.

Let's go find the next jetpack.

As the user selects to go to the next exhibit, the application discovers the Ultra Wideband accessory and performs the necessary exchanges to start using Nearby Interaction.

The application then instructs the user to move the phone side to side while it begins to determine the physical location of the next exhibit, using the enhanced Nearby Interaction mode with ARKit.

Now that the application understands which direction corresponds to the next exhibit, a simple arrow icon appears, telling the user which way to head to check it out.

This rich, spatially-aware information utilizing the combination of ARKit and Nearby Interaction can even indicate when the exhibit is behind the user and the user is heading in a direction away from the exhibit.

Finally, the application can display, in the AR world, an overlay of the next exhibit's location, and the application prompts the user to move the iPhone up and down slightly to resolve where the exhibit is in the AR world.

Once the AR content is placed in the scene, the powerful combination of Nearby Interaction -- with its Ultra Wideband measurements -- and ARKit, allows the user to easily head over to check out the next jetpack.

I may not have found a jetpack, but I found a queen.

Let's turn now to how you can enable this enhanced Nearby Interaction mode.

With iOS 15, you probably have a method in your application that accepts the NIDiscoveryToken from a nearby peer, creates a session configuration, and runs the NISession.

Enabling the enhanced mode with ARKit is easy for both new and existing uses of Nearby Interaction, thanks to a new isCameraAssistanceEnabled property on the NIConfiguration subclasses.

Setting the isCameraAssistanceEnabled property is all that's required to leverage the enhanced mode with ARKit.
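As a minimal sketch, assuming an iOS 16 deployment target, opting an existing peer-to-peer flow into the enhanced mode might look like this. `runCameraAssistedSession(_:peerToken:)` is a hypothetical app method, and the discovery-token exchange over your own data channel is not shown.

```swift
#if os(iOS) && canImport(NearbyInteraction)
import NearbyInteraction

/// Hypothetical app method: runs a camera-assisted session once the peer's
/// discovery token has arrived over the app's own data channel.
func runCameraAssistedSession(_ session: NISession, peerToken: NIDiscoveryToken) {
    let configuration = NINearbyPeerConfiguration(peerToken: peerToken)
    // New in iOS 16: opt into the ARKit-enhanced mode.
    configuration.isCameraAssistanceEnabled = true
    session.run(configuration)
}
#endif
```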

Camera assistance is available when interacting between two Apple devices, and between an Apple device and a third-party Ultra Wideband accessory.

Let's look at the details of what happens when an NISession is run with camera assistance enabled.

When camera assistance is enabled, an ARSession is automatically created within the Nearby Interaction framework.

You are not responsible for creating this ARSession.

Running an NISession with camera assistance enabled will also run the ARSession that was automatically created within the Nearby Interaction framework.

The ARSession is running within the application process.

As a result, the application must provide a camera usage description (the NSCameraUsageDescription key) in its Info.plist.

Be sure to make this a useful string to inform your users why the camera is necessary to provide a good experience.

Only a single ARSession can be running for a given application.

This means that if you already have an ARKit experience in your app, it is necessary to share the ARSession you create with the NISession.

To share the ARSession with the NISession, a new setARSession method is available on the NISession class.

When you call setARSession on the NISession before calling run, the Nearby Interaction framework does not automatically create an ARSession when the session runs.

This ensures the application's ARKit experience runs concurrently with camera assistance in Nearby Interaction.

In this SwiftUI example, as part of the makeUIView function, the underlying ARSession within the ARView is shared with the NISession via the new setARSession method.
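A rough reconstruction of that idea, not the exact code from the session: a `UIViewRepresentable` (hypothetical name `NearbyARViewContainer`) that hands the `ARView`'s session to an `NISession` the app already owns. It assumes iOS 16 and that the app calls `niSession.run(_:)` only after `makeUIView` has executed.

```swift
#if os(iOS) && canImport(RealityKit) && canImport(NearbyInteraction)
import NearbyInteraction
import RealityKit
import SwiftUI

/// Hypothetical SwiftUI wrapper that shares its ARSession with an NISession.
struct NearbyARViewContainer: UIViewRepresentable {
    let niSession: NISession

    func makeUIView(context: Context) -> ARView {
        let arView = ARView(frame: .zero)
        // Share the ARView's ARSession before the NISession runs, so the
        // framework does not create a second ARSession of its own.
        niSession.setARSession(arView.session)
        return arView
    }

    func updateUIView(_ uiView: ARView, context: Context) {}
}
#endif
```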

If you are using an ARSession directly, it is necessary to call run on the ARSession with an ARWorldTrackingConfiguration.

In addition, several properties must be configured in a specific manner within this ARConfiguration to ensure high-quality performance from camera assistance.

Set worldAlignment to gravity, disable collaboration and user face tracking, leave initialWorldMap nil, and assign a delegate whose sessionShouldAttemptRelocalization method returns false.
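The requirements above can be expressed as a configuration factory plus a small delegate. This is a sketch assuming iOS 16; the function and class names are hypothetical, and it sets only the properties the session lists.

```swift
#if os(iOS) && canImport(ARKit)
import ARKit

/// Hypothetical factory for a world-tracking configuration that meets the
/// camera-assistance requirements.
func makeCameraAssistanceCompatibleConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.worldAlignment = .gravity
    configuration.isCollaborationEnabled = false
    configuration.userFaceTrackingEnabled = false
    configuration.initialWorldMap = nil
    return configuration
}

/// Hypothetical delegate that declines relocalization, as required.
final class SharedARSessionDelegate: NSObject, ARSessionDelegate {
    func sessionShouldAttemptRelocalization(_ session: ARSession) -> Bool {
        false
    }
}

// Usage sketch (keep a strong reference to the delegate; ARSession.delegate is weak):
//   arSession.delegate = sharedDelegate
//   niSession.setARSession(arSession)
//   arSession.run(makeCameraAssistanceCompatibleConfiguration())
#endif
```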

Let's turn to some best practices when sharing an ARSession you created.

In your NISessionDelegate's session(_:didInvalidateWith:) method, always inspect the error code.

If the ARConfiguration used to run the shared ARSession does not conform to the outlined properties, the NISession will be invalidated.

A new NIError code invalidARConfiguration will be returned.
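A sketch of that check in a delegate (hypothetical class name), assuming iOS 16. `invalidARConfiguration` is the new code described above; the other branches are illustrative.

```swift
#if os(iOS) && canImport(NearbyInteraction)
import NearbyInteraction

/// Hypothetical delegate that reports why a session was invalidated.
final class InvalidationHandler: NSObject, NISessionDelegate {
    func session(_ session: NISession, didInvalidateWith error: Error) {
        guard let niError = error as? NIError else { return }
        switch niError.code {
        case .invalidARConfiguration:
            // The shared ARSession's configuration doesn't meet the
            // camera-assistance requirements; fix it before running again.
            print("Invalid ARConfiguration: \(niError.localizedDescription)")
        case .userDidNotAllow:
            print("The user did not allow Nearby Interaction.")
        default:
            print("Session invalidated: \(niError.localizedDescription)")
        }
    }
}
#endif
```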

To receive nearby object updates in your app, continue to use the didUpdateNearbyObjects method in your NISessionDelegate.

In your didUpdateNearbyObjects method, you probably check the nearby objects for your desired peer and update the UI based on the NINearbyObject's distance and direction properties when they are available, always remembering that they can be nil.
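For reference, that existing pattern might look roughly like this. `PeerTracker` and its `peerToken` property are hypothetical; how the token gets there is up to your app's discovery-token exchange.

```swift
#if canImport(NearbyInteraction)
import NearbyInteraction

/// Hypothetical delegate that follows a single peer.
final class PeerTracker: NSObject, NISessionDelegate {
    /// Token received from the peer during the app's own token exchange.
    var peerToken: NIDiscoveryToken?

    func session(_ session: NISession, didUpdate nearbyObjects: [NINearbyObject]) {
        guard let peerToken = peerToken,
              let peer = nearbyObjects.first(where: { $0.discoveryToken == peerToken })
        else { return }

        // Either property can be nil at any moment; update only what is known.
        if let distance = peer.distance {
            print("Distance: \(distance) m")
        }
        if let direction = peer.direction {
            print("Direction: \(direction)")
        }
    }
}
#endif
```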

When camera assistance is enabled, two new properties are available within the NINearbyObject.

The first is horizontalAngle.

This is the 1D angle in radians indicating the azimuthal direction to the nearby object.

When unavailable, this value will be nil.

Second, verticalDirectionEstimate is the positional relationship to the nearby object in the vertical dimension.

This is a new VerticalDirectionEstimate type.

Distance and direction represent the key spatial relationship between the user's device and a nearby object.

Distance is measured in meters and direction is a 3D vector from your device to the nearby object.

Horizontal angle is defined as the angle between the device running the NISession and the nearby object within a local horizontal plane.

This accounts for any vertical displacement offset between the two devices and any horizontal rotation of the device itself.

While direction is 3D, horizontal angle is a 1D representation of the heading between the two devices.

This horizontal angle property is complementary to the direction property, and if the direction cannot be resolved, the horizontal angle may still be available to help you guide your user to a nearby object.
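One way to express this fallback is a small, hypothetical `NearbyGuidance` model: use the 3D direction when present, otherwise the horizontal angle, otherwise distance alone. The decision logic is plain Swift so it can be tested off-device; the `NINearbyObject` bridge compiles only on iOS and assumes iOS 16 or later.

```swift
#if os(iOS) && canImport(NearbyInteraction)
import NearbyInteraction
#endif

/// Hypothetical app-level model of what the guidance UI can show right now.
enum NearbyGuidance: Equatable {
    case pointAlong(SIMD3<Float>)     // full 3D direction is available
    case turn(radians: Float)         // only the 1D horizontal angle is available
    case distanceOnly(meters: Float)  // no directional information yet
    case unavailable
}

extension NearbyGuidance {
    /// Prefers the 3D direction, falls back to the horizontal angle, then to
    /// distance alone. The inputs mirror NINearbyObject's optional properties.
    init(distance: Float?, direction: SIMD3<Float>?, horizontalAngle: Float?) {
        if let direction = direction {
            self = .pointAlong(direction)
        } else if let angle = horizontalAngle {
            self = .turn(radians: angle)
        } else if let distance = distance {
            self = .distanceOnly(meters: distance)
        } else {
            self = .unavailable
        }
    }
}

#if os(iOS) && canImport(NearbyInteraction)
@available(iOS 16.0, *)
extension NINearbyObject {
    /// Convenience bridge from a live nearby-object update.
    var guidance: NearbyGuidance {
        NearbyGuidance(distance: distance, direction: direction, horizontalAngle: horizontalAngle)
    }
}
#endif
```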

Vertical direction estimate is a qualitative assessment of the vertical position information.

You should use it to guide the user between floor levels.

Let's look now at the new VerticalDirectionEstimate type.

VerticalDirectionEstimate is a nested enum within the NINearbyObject and represents a qualitative assessment of the vertical relationship to the nearby object.

Be sure to check if the VerticalDirectionEstimate is unknown before using the property.

The vertical relationship can be same, above, below, or a special aboveOrBelow value indicating that the nearby object is not on the same level but is not clearly above or below the device.
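As a sketch, the estimate could drive a floor-level hint. `VerticalRelation` and `floorHint(for:)` are hypothetical app types that mirror `NINearbyObject.VerticalDirectionEstimate` so the mapping can be tested without a device; the hint strings are placeholders.

```swift
#if os(iOS) && canImport(NearbyInteraction)
import NearbyInteraction
#endif

/// Hypothetical app-level mirror of NINearbyObject.VerticalDirectionEstimate.
enum VerticalRelation {
    case unknown, same, above, below, aboveOrBelow
}

/// Returns a floor-level hint, or nil when the estimate is unknown.
func floorHint(for relation: VerticalRelation) -> String? {
    switch relation {
    case .unknown:      return nil  // check for unknown before guiding the user
    case .same:         return "It's on this floor."
    case .above:        return "Head upstairs."
    case .below:        return "Head downstairs."
    case .aboveOrBelow: return "It's on a different floor."
    }
}

#if os(iOS) && canImport(NearbyInteraction)
@available(iOS 16.0, *)
extension VerticalRelation {
    /// Maps the framework's estimate onto the app-level mirror.
    init(_ estimate: NINearbyObject.VerticalDirectionEstimate) {
        switch estimate {
        case .same:         self = .same
        case .above:        self = .above
        case .below:        self = .below
        case .aboveOrBelow: self = .aboveOrBelow
        case .unknown:      self = .unknown
        @unknown default:   self = .unknown
        }
    }
}
#endif
```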

The Ultra Wideband measurements are subject to field of view and obstructions.

The field of view for direction information corresponds to a cone projecting from the rear of the device.

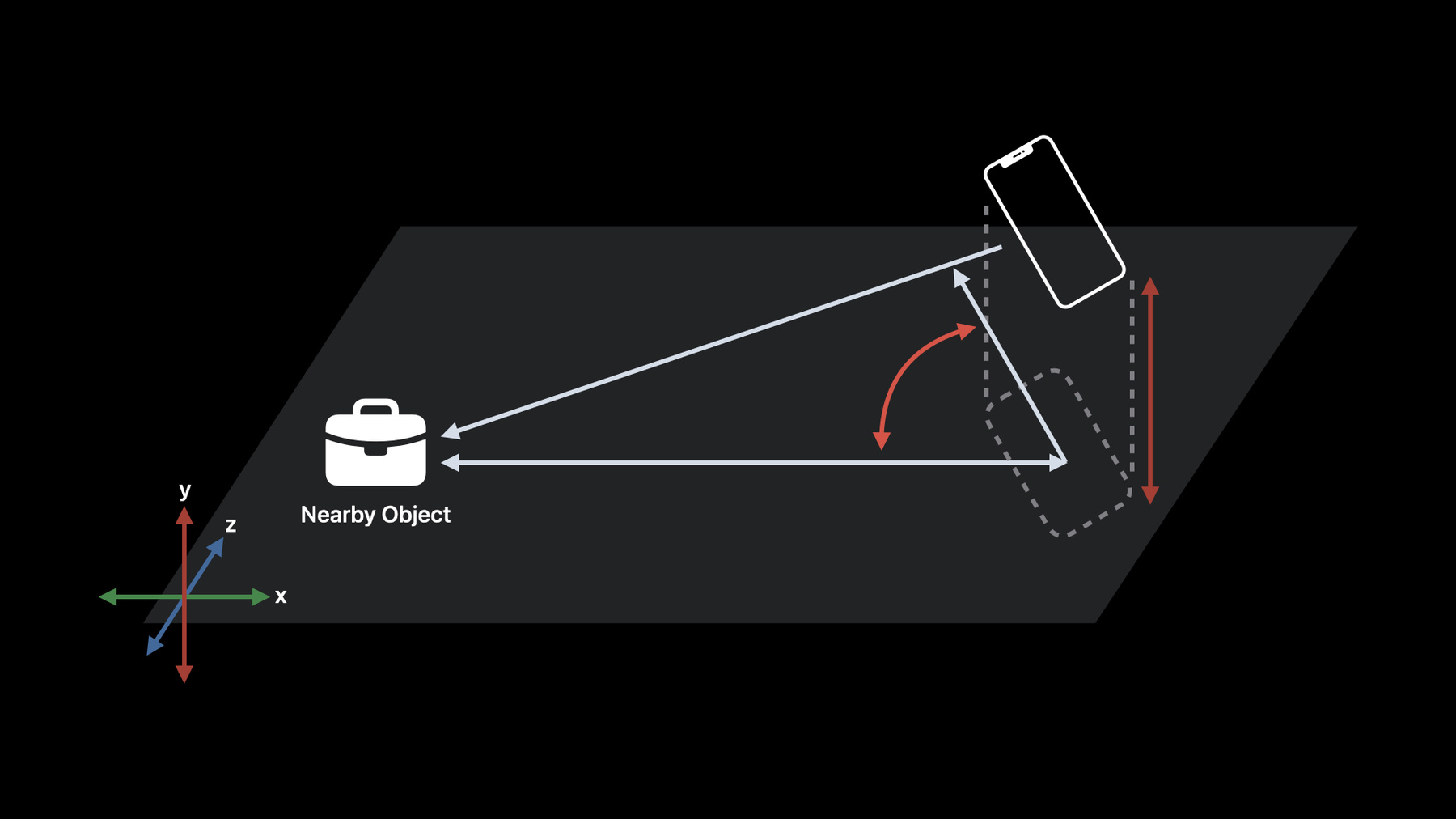

The device trajectory computed from ARKit when camera assistance is enabled allows the distance, direction, horizontal angle, and vertical direction estimate to be available in more scenarios, effectively expanding the Ultra Wideband sensor field of view.

Let's turn now to leveraging this integration of ARKit and Nearby Interaction to place AR objects in your scene.

To make it easier for you to overlay 3D virtual content that represents the nearby object onto a camera feed visualization, we've added a helper method: worldTransform on NISession.

This method returns a worldTransform in ARKit's coordinate space that represents the given nearby object's position in the physical environment when it's available.

When not available, this method returns nil.
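A minimal sketch of anchoring content with this method, assuming iOS 16 and access to the `ARSession` (for example, one you shared via `setARSession`). `placeAnchor(for:niSession:arSession:)` and the anchor name are hypothetical.

```swift
#if os(iOS) && canImport(ARKit) && canImport(NearbyInteraction)
import ARKit
import NearbyInteraction

/// Hypothetical helper: adds an ARAnchor at the nearby object's position once
/// camera assistance has resolved it. Call it once per object, or keep and
/// update a single anchor, rather than adding a new anchor on every update.
func placeAnchor(for object: NINearbyObject,
                 niSession: NISession,
                 arSession: ARSession) -> ARAnchor? {
    // nil until the user has moved the device enough for convergence.
    guard let transform = niSession.worldTransform(for: object) else { return nil }
    let anchor = ARAnchor(name: "nearbyObject", transform: transform)
    arSession.add(anchor: anchor)
    return anchor
}
#endif
```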

We used this method in the demonstration to place the floating spheres above the next exhibit.

We want to make it as easy as possible for you to leverage Nearby Interaction positional output to manipulate content in the AR world in your app.

Two powerful systems in iOS, combined.

Your users must sweep the device sufficiently in vertical and horizontal directions to allow the camera assistance to adequately compute the world transform.

This method can return nil when the user motion is insufficient to allow the camera assistance to fully converge to an ARKit world transform.

When this transform is important to your app experience, coach the user to take the actions needed to generate it.

Let's look now at some additions we've made to the NISessionDelegate to make it possible for you to guide the user similar to what you saw in the demonstration.

To aid in guiding the user towards your object, an NISessionDelegate callback provides information about the Nearby Interaction algorithm convergence via the new didUpdateAlgorithmConvergence delegate method.

Algorithm convergence can help you understand why horizontal angle, vertical direction estimate, and worldTransform are unavailable and what actions the user can take to resolve those properties.

The delegate method provides a new NIAlgorithmConvergence object and an optional NINearbyObject.

This delegate method is only called when you have enabled camera assistance in your NIConfiguration.

Let's look at the new NIAlgorithmConvergence type.

NIAlgorithmConvergence has a single status property of type NIAlgorithmConvergenceStatus.

The NIAlgorithmConvergenceStatus type is an enum that represents whether the algorithm is converged or not.

If the algorithm is not converged, the status carries an associated array of NIAlgorithmConvergenceStatus.Reason values.

Returning to the new delegate method, say you want to show the user the status of camera assistance: you can switch on the convergence status and, if it is unknown or converged, display that information to the user.

Be sure to inspect the NINearbyObject.

When the object is nil, the NIAlgorithmConvergence state applies to the session itself, rather than a specific NINearbyObject.

When the status is notConverged, it also includes an associated value that describes the reasons the algorithm is not converged.

A localized description is available for this reason to help you communicate better with your users.
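Putting these pieces together, a delegate implementation might look like this sketch (hypothetical class name, iOS 16 or later). It prints instead of updating UI, and it relies on the `localizedDescription` that each reason carries.

```swift
#if os(iOS) && canImport(NearbyInteraction)
import NearbyInteraction

/// Hypothetical delegate that reports camera-assistance convergence.
final class ConvergenceObserver: NSObject, NISessionDelegate {
    func session(_ session: NISession,
                 didUpdateAlgorithmConvergence convergence: NIAlgorithmConvergence,
                 for object: NINearbyObject?) {
        // A nil object means the status applies to the session itself.
        let scope = object == nil ? "Session" : "Nearby object"
        switch convergence.status {
        case .unknown:
            print("\(scope): convergence unknown")
        case .converged:
            print("\(scope): converged")
        case .notConverged(let reasons):
            let text = reasons.map { $0.localizedDescription }.joined(separator: "; ")
            print("\(scope): not converged (\(text))")
        @unknown default:
            break
        }
    }
}
#endif
```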

Let's look next at how to use these values.

By inspecting the notConverged case and its associated reasons more closely, you can guide the user to take actions that help produce the desired information about a nearby object.

The associated value is an array of NIAlgorithmConvergenceStatus.Reason values.

A reason can indicate insufficient total motion, insufficient horizontal or vertical sweep, or insufficient lighting.

Be mindful that multiple reasons may exist at the same time; guide the user through each action in turn, based on which is most important for your application.
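One way to guide the user sequentially is to pick the highest-priority reason present. The generic helper below is hypothetical and testable on its own; the priority order and coaching strings in `coachingMessage(for:)` are app-specific assumptions, with the demo's side-to-side sweep placed before the up-and-down sweep.

```swift
#if os(iOS) && canImport(NearbyInteraction)
import NearbyInteraction
#endif

/// Returns the first entry of `priority` that appears in `reasons`, so the app
/// can coach the user through one action at a time.
func nextReasonToAddress<Reason: Equatable>(present reasons: [Reason],
                                            priority: [Reason]) -> Reason? {
    priority.first(where: { reasons.contains($0) })
}

#if os(iOS) && canImport(NearbyInteraction)
@available(iOS 16.0, *)
func coachingMessage(for status: NIAlgorithmConvergenceStatus) -> String? {
    // Example ordering: an app-specific choice, not prescribed by the framework.
    let priority: [NIAlgorithmConvergenceStatus.Reason] = [
        .insufficientLighting, .insufficientMovement,
        .insufficientHorizontalSweep, .insufficientVerticalSweep,
    ]
    guard case .notConverged(let reasons) = status,
          let reason = nextReasonToAddress(present: reasons, priority: priority)
    else { return nil }

    switch reason {
    case .insufficientLighting:        return "Move to a brighter area."
    case .insufficientHorizontalSweep: return "Slowly move your iPhone side to side."
    case .insufficientVerticalSweep:   return "Slowly move your iPhone up and down."
    default:                           return reason.localizedDescription
    }
}
#endif
```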

Recall how I moved the phone in the demonstration and needed to sweep in both the horizontal and vertical directions to resolve the world transform.

Those are the most important bits of the enhanced Nearby Interaction mode with camera assistance.

We've made some additional changes to help you better leverage this mode.

Previously, a single isSupported class variable on the NISession was all that was necessary to check if Nearby Interaction was supported on a given device.

This is now deprecated.

With the addition of camera assistance, we've made the device capabilities supported by Nearby Interaction more descriptive with a new deviceCapabilities class member on the NISession that returns a new NIDeviceCapability object.

At a minimum, checking the supportsPreciseDistanceMeasurement property is the equivalent of the now deprecated isSupported class variable.

Once you've established that the device supports the precise distance measurement, you should use NIDeviceCapability to fully understand the capabilities available from Nearby Interaction on the device running your application.

It is recommended you tailor your app experience to the capabilities of the device by checking the additional supportsDirectionMeasurement and supportsCameraAssistance properties of the NIDeviceCapability object.

Not all devices support direction measurements or camera assistance, so be sure to include experiences tailored to the capabilities of each device.

In particular, be mindful to include distance-only experiences in order to best support Apple Watch.
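A sketch of tailoring the experience to the device, using a hypothetical `NearbyExperience` tier enum. The tier logic is plain Swift; only `bestExperienceOnThisDevice()` touches `NISession.deviceCapabilities`, and its availability annotation is an assumption to check against your SDK.

```swift
#if canImport(NearbyInteraction)
import NearbyInteraction
#endif

/// Hypothetical experience tiers an app might offer.
enum NearbyExperience: Equatable {
    case unsupported, distanceOnly, distanceAndDirection, cameraAssisted
}

/// Chooses the richest tier the reported capabilities allow.
func bestExperience(preciseDistance: Bool, direction: Bool, cameraAssistance: Bool) -> NearbyExperience {
    // supportsPreciseDistanceMeasurement is the equivalent of the deprecated isSupported.
    guard preciseDistance else { return .unsupported }
    if cameraAssistance { return .cameraAssisted }
    if direction { return .distanceAndDirection }
    return .distanceOnly  // for example, Apple Watch
}

#if canImport(NearbyInteraction)
@available(iOS 16.0, watchOS 9.0, *)
func bestExperienceOnThisDevice() -> NearbyExperience {
    let capabilities = NISession.deviceCapabilities
    return bestExperience(
        preciseDistance: capabilities.supportsPreciseDistanceMeasurement,
        direction: capabilities.supportsDirectionMeasurement,
        cameraAssistance: capabilities.supportsCameraAssistance)
}
#endif
```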

That's all for camera assistance as a way to enhance Nearby Interaction with ARKit. So let's turn our attention now to accessory background sessions.

Today, you can use Nearby Interaction in your app to allow users to point to other devices, find friends, and show controls or other UI based on distance and direction to an accessory.

However, when your app transitions to the background or when the user locks the screen on iOS and watchOS, any running NISessions are suspended until the application returns to the foreground.

This meant you needed to focus on hands-on user experiences when interacting with your accessory.

Starting in iOS 16, Nearby Interaction has gone hands-free.

You're now able to use Nearby Interaction to start playing music when you walk into a room with a smart speaker, turn on your eBike when you get on it, or trigger other hands-free actions on an accessory.

You can do this even when the user isn't actively using your app via accessory background sessions.

Let's look at how you can accomplish this exciting new capability.

Let's spend just a minute reviewing the sequence for how to configure and run an NISession with an accessory.

You might recognize this sequence from last year's WWDC presentation.

The accessory sends its Ultra Wideband accessory configuration data over to your application via a data channel, and you create an NINearbyAccessoryConfiguration from this data.

You create an NISession and set an NISessionDelegate to receive Ultra Wideband measurements from the accessory.

You run the NISession with your configuration, and the session returns shareable configuration data to set up the accessory to interoperate with your application.

After sending this shareable configuration data back to the accessory, you are able to receive Ultra Wideband measurements in your application and at the accessory.

For all the details on configuring and running Nearby Interaction with third-party accessories, please review last year's WWDC session.

Let's look now at how you set up the new background sessions.

The previous sequence diagram showed data flowing between your application and the accessory.

It is very common to have the communication channel between an accessory and your application use Bluetooth LE.

When paired to the accessory using Bluetooth LE, you can enable Nearby Interaction to start and continue sessions in the background.

Let's look closely at how this is possible.

Today, you can configure your app to use Core Bluetooth to discover, connect to, and exchange data with Bluetooth LE accessories while your app is in the background.

Check out the existing Core Bluetooth Programming Guide or the WWDC session from 2017 for more details.

By taking advantage of Core Bluetooth's powerful background operations to efficiently discover the accessory and run your application in the background, your application can start an NISession in the background with a Bluetooth LE accessory that also supports Ultra Wideband.

Let's look now at how the sequence diagram updates to reflect this new mode.

To interact with this accessory, first, ensure that it is Bluetooth LE-paired.

Then, connect to the accessory.

When the accessory generates its accessory Ultra Wideband configuration data, it should both send it to your application and populate the Nearby Interaction GATT service; more on this next.

Finally, when your application receives the accessory's configuration data, construct an NINearbyAccessoryConfiguration object using a new initializer providing both your accessory's UWB configuration data and its Bluetooth peer identifier.

Run your NISession with this configuration, and complete the setup by receiving the shareable configuration in your NISessionDelegate and sending it to the accessory.
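A sketch of that last step: the delegate forwards the shareable configuration data to the accessory. `AccessorySetupDelegate` and its `sendToAccessory` hook are hypothetical; the transport is whatever your accessory protocol uses.

```swift
#if canImport(NearbyInteraction)
import Foundation
import NearbyInteraction

/// Hypothetical delegate for the app side of accessory setup.
final class AccessorySetupDelegate: NSObject, NISessionDelegate {
    /// Hypothetical hook that writes bytes to the accessory over your data channel.
    var sendToAccessory: (Data) -> Void = { _ in }

    func session(_ session: NISession,
                 didGenerateShareableConfigurationData shareableConfigurationData: Data,
                 for object: NINearbyObject) {
        // The accessory needs this data before it can start ranging with the phone.
        sendToAccessory(shareableConfigurationData)
    }
}
#endif
```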

In order for your accessory to create a relationship between its Bluetooth identifier and the Ultra Wideband configuration, it must implement the new Nearby Interaction GATT service.

The Nearby Interaction service contains a single encrypted characteristic called Accessory Configuration Data.

It contains the same UWB configuration data used to initialize the NINearbyAccessoryConfiguration object.

iOS uses this characteristic to verify the association between your Bluetooth peer identifier and your NISession.

Your app cannot read from this characteristic directly.

You can find out more about the details of this new Nearby Interaction GATT service on developer.apple.com/nearby-interaction.

If your accessory supports multiple NISessions in parallel, create multiple instances of Accessory Configuration Data, each with a different NISession's UWB configuration.

That's what's necessary on the accessory.

Let's turn to what you need to implement in your application by diving into some code! Accessory background sessions require that the accessory is LE-paired to the user's iPhone.

Your app is responsible for triggering this process.

To do this, implement methods to scan for your accessory, connect to it, and discover its services and characteristics.

Then, implement a method to read one of your accessory's encrypted characteristics.

You only need to do this once.

It will show the user a prompt to accept pairing.
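A minimal Core Bluetooth sketch of the pairing trigger. It assumes scanning, connecting, and service discovery have already happened, and that your accessory exposes a characteristic requiring encryption; that characteristic's UUID is accessory-specific, so it is passed in rather than hard-coded. `PairingHelper` is a hypothetical name.

```swift
#if canImport(CoreBluetooth)
import CoreBluetooth

/// Hypothetical peripheral delegate that triggers LE pairing.
final class PairingHelper: NSObject, CBPeripheralDelegate {
    /// UUID of a characteristic your accessory protects with encryption.
    let encryptedCharacteristicUUID: CBUUID

    init(encryptedCharacteristicUUID: CBUUID) {
        self.encryptedCharacteristicUUID = encryptedCharacteristicUUID
        super.init()
    }

    func peripheral(_ peripheral: CBPeripheral,
                    didDiscoverCharacteristicsFor service: CBService,
                    error: Error?) {
        guard error == nil,
              let characteristic = service.characteristics?
                .first(where: { $0.uuid == encryptedCharacteristicUUID })
        else { return }
        // Reading an encrypted characteristic on an unpaired accessory makes
        // iOS show the pairing prompt; once paired, the read simply succeeds.
        peripheral.readValue(for: characteristic)
    }
}
#endif
```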

Accessory background sessions also require a Bluetooth connection to your accessory.

Your app must be able to form this connection even when it's backgrounded.

To do this, implement a method to initiate a connection attempt to your accessory.

You should do this even if the accessory is not within Bluetooth range.

Then, implement CBCentralManagerDelegate methods to restore state after Core Bluetooth relaunches your app and to handle the connection being established.
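A sketch of the connection side, assuming the peripheral identifier was saved after pairing and the app opts into Core Bluetooth state restoration. The class name, restore identifier string, and `onConnected` hook are hypothetical. Core Bluetooth connection requests do not time out, which is why the request can be issued while the accessory is out of range.

```swift
#if os(iOS) && canImport(CoreBluetooth)
import CoreBluetooth

/// Hypothetical central that keeps a pending connection to a paired accessory.
final class AccessoryConnector: NSObject, CBCentralManagerDelegate {
    private var central: CBCentralManager!
    private var accessory: CBPeripheral?
    private let accessoryID: UUID
    /// Hypothetical hook: continue with service discovery and the UWB exchange.
    var onConnected: (CBPeripheral) -> Void = { _ in }

    init(accessoryID: UUID) {
        self.accessoryID = accessoryID
        super.init()
        // A restore identifier (hypothetical value) opts into state restoration.
        central = CBCentralManager(
            delegate: self, queue: nil,
            options: [CBCentralManagerOptionRestoreIdentifierKey: "com.example.accessory-central"])
    }

    // Called before centralManagerDidUpdateState when the app is relaunched.
    func centralManager(_ central: CBCentralManager, willRestoreState dict: [String: Any]) {
        let restored = dict[CBCentralManagerRestoredStatePeripheralsKey] as? [CBPeripheral] ?? []
        accessory = restored.first { $0.identifier == accessoryID }
    }

    func centralManagerDidUpdateState(_ central: CBCentralManager) {
        guard central.state == .poweredOn,
              let peripheral = accessory
                ?? central.retrievePeripherals(withIdentifiers: [accessoryID]).first
        else { return }
        accessory = peripheral
        // Issue the request even if the accessory is currently out of range.
        if peripheral.state == .disconnected {
            central.connect(peripheral, options: nil)
        }
    }

    func centralManager(_ central: CBCentralManager, didConnect peripheral: CBPeripheral) {
        onConnected(peripheral)
    }
}
#endif
```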

Now you're ready to run an accessory background session.

Create an NINearbyAccessoryConfiguration object by providing both the accessory's UWB configuration data and its Bluetooth peer identifier from the CBPeripheral identifier.

Run an NISession with that configuration and it will run while your app is backgrounded.
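A minimal sketch of that step, assuming iOS 16 and a connected, paired `CBPeripheral`. `runBackgroundSession(_:accessoryData:peripheral:)` is a hypothetical app function; `NINearbyAccessoryConfiguration(accessoryData:bluetoothPeerIdentifier:)` is the new initializer described above.

```swift
#if os(iOS) && canImport(NearbyInteraction) && canImport(CoreBluetooth)
import CoreBluetooth
import Foundation
import NearbyInteraction

/// Hypothetical app function: call it once the accessory has sent its UWB
/// configuration data over Bluetooth LE.
func runBackgroundSession(_ session: NISession,
                          accessoryData: Data,
                          peripheral: CBPeripheral) throws {
    // Ties the UWB configuration to the Bluetooth peer identifier.
    let configuration = try NINearbyAccessoryConfiguration(
        accessoryData: accessoryData,
        bluetoothPeerIdentifier: peripheral.identifier)
    // With the background mode enabled, the session keeps running while the
    // app is backgrounded; the accessory consumes the measurements.
    session.run(configuration)
}
#endif
```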

That's it! Well, there is one more setting you need to update for your app in Xcode.

This background mode requires the nearby-interaction value in the UIBackgroundModes array of your app's Info.plist.

You can also use the Xcode capabilities editor to add this background mode.

You will also want to ensure you have "Uses Bluetooth LE accessories" enabled to ensure your app can connect with the accessory in the background.

One important note about this new accessory background session.

When your application is in the background, the NISession will continue to run and will not be suspended, so Ultra Wideband measurements are available on the accessory.

You must consume and act on the Ultra Wideband measurements on the accessory.

Your application will not receive runtime, and it will not receive didUpdateNearbyObjects delegate callbacks until it returns to the foreground.

When using this new background mode, let's review the following best practices.

Triggering LE pairing with your accessory will show the user a prompt to accept the pairing.

Do this at a moment when it is clear to the user why they would want to pair the accessory.

This could be in the setup flow that creates the relationship with the accessory, or when the user clearly indicates their desire to interact with the accessory.

While your app is backgrounded, your NISession will not be suspended, but it will not receive didUpdateNearbyObjects delegate callbacks.

However, your accessory will receive Ultra Wideband measurements.

Process these measurements directly on your accessory to determine what action should happen for the user.

Finally, manage battery usage by only sending data from your accessory to your app during a significant user interaction; for example, to show a notification to the user.

That's all you need to know about background sessions, which leads me to the last topic: third-party hardware support.

Today, I'm happy to announce that the previously available beta U1-compatible development kits are now out of beta and available for wider use.

Please visit developer.apple.com/nearby-interaction to find out more about compatible Ultra Wideband development kits.

We've also updated the specification for accessory manufacturers to support the new accessory background sessions, including the Nearby Interaction GATT service, and it is available on the same website.

So, let's summarize what we've discussed in this session.

Nearby Interaction now includes a new camera-assisted mode that tightly integrates ARKit and Nearby Interaction to provide a seamless experience for you to create spatially aware experiences that guide users to a nearby object.

The accessory background sessions enable you to initiate and extend sessions into the background for you to build a more hands-off experience for your users.

We've announced exciting updates to the third-party compatible Ultra Wideband hardware support.

That's it for the Nearby Interaction updates this year.

Download the demos, reach out with feedback on the updated capabilities, review the updated third-party specification, and go build amazing apps with spatial experiences.

Thank you.

♪

-