Modernizing Grand Central Dispatch Usage

macOS 10.13 and iOS 11 have reinvented how Grand Central Dispatch and the Darwin kernel collaborate, enabling your applications to run concurrent workloads more efficiently. Learn how to modernize your code to take advantage of these improvements and make optimal use of hardware resources.

Good morning, and welcome to the Modernizing Grand Central Dispatch Usage session. I'm Daniel Chimene from the Core Darwin team, and my colleagues and I are here today to show you how you can take advantage of Grand Central Dispatch to get the best performance in your application.

As app developers, you spend hundreds or thousands of hours building amazing experiences for your users, taking advantage of our powerful devices.

You want your users to be able to have a great experience. Not just on one device, but across all the variety of devices that Apple makes. GCD is designed to help you dynamically scale your application code, from the single-core Apple Watch all the way up to a many-core Mac.

You don't want to have to worry too much about what kind of hardware your users are running.

But there are problematic patterns that can affect the scalability and the efficiency of your code, both on the low end and on the high end.

That's what we're here to talk about today.

We want to help you ensure that all the work you're putting into your app to make it a great experience for your users translates across all of these devices.

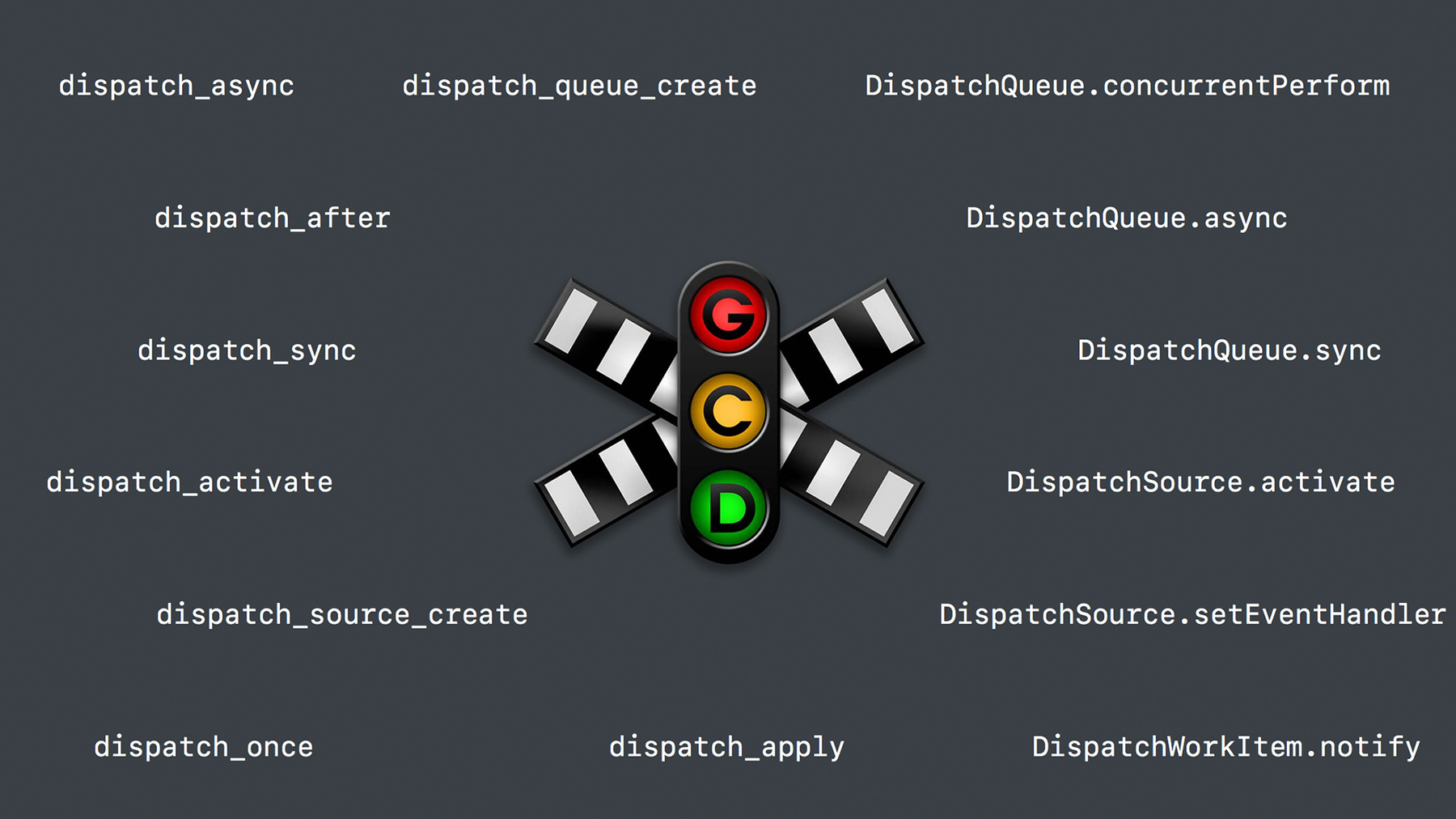

You may have been using GCD APIs like dispatch async and others to create queues and dispatch work to the system.

These are only some of the interfaces to the concurrency technology that we call Grand Central Dispatch.

Today, we're going to take a peek under the covers of GCD. This is an advanced session packed full of information. So, let's get started right away, by looking at our hardware.

The amazing chips in our devices have been getting faster and faster over time. However, much of the speed is not just because the chips themselves are getting faster, but because they're getting smarter, and smarter about running your code. And they're learning from what your code does over time to operate more efficiently. However, if your code goes off core, before it's completed its task, then it may no longer be able to take advantage of the history that that core has built up. And you might leave performance on the table when you come back on core.

We've even seen examples of this on our own frameworks, when we applied some of the optimization techniques that we're going to discuss today, we saw large speed ups from simple changes to avoid these problematic patterns.

So, using these techniques lets you bring high performance apps to more users with less work.

Today, we're going to give you some insight into what our system is doing under the covers with your code. So, you can tune your code to take the best advantage of what GCD has to offer.

We're going to discuss a few things today.

First, we're going to discuss how you can best express parallelism and concurrency, and how you can choose the best way to express concurrency to Grand Central Dispatch.

We're going to introduce Unified Queue Identity, which is a major under the hood improvement to GCD that we're publishing this year.

And we're finally going to show you how you can find problem spots in your code, with instruments.

So, let's start by discussing parallelism and concurrency.

So, for the purpose of this talk, we're talking about parallelism which is about how your code executes in parallel, simultaneously across many different cores. Concurrency is about how you compose the independent components of your application to run concurrently. The easy way to separate these two concepts in your mind, is to realize that parallelism is something that usually requires multiple cores and you want to use them all at the same time. And concurrency is something that you can do even on a single core system. It's about how you interpose the different tasks that are part of your application. So, let's start by talking about parallelism and how you might use it when you're writing an app.

So, let's imagine you make an app and it processes huge images. And you want to be able to take advantage of the many cores on a Mac Pro to be able to process those images faster.

What you do is break up that image into chunks.

And have each core process those chunks in parallel.

This gives you a speed up, because the cores are simultaneously working on different parts of the image.

So, how do you implement this? Well first, you should stop and consider whether or not you could take advantage of our system frameworks. For example, Accelerate has built-in support for parallel execution of advanced image algorithms. Metal and Core Image can take advantage of the powerful GPU.

Well, let's say you've decided to implement this yourself, GCD gives you a tool that lets you easily express this pattern.

The way you express parallelism in GCD is with the API called concurrentPerform.

This lets the framework optimize the parallel case because it knows that you're trying to do a parallel computation across all the cores.

concurrentPerform is a parallel for loop that automatically load balances your computation across all the cores in the system. When you use this with Swift, it automatically chooses the correct context to run all your computation in. This year, we've brought that same power to the Objective-C interface dispatch_apply with the DISPATCH_APPLY_AUTO keyword.

This replaces the queue argument allowing the system to choose the right context to run your code in automatically.
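As a rough sketch of that parallel for loop in Swift, the example below inverts a grayscale image with one iteration per row. The `GrayImage` type and the `inverted` function are hypothetical stand-ins invented for illustration; only `DispatchQueue.concurrentPerform(iterations:execute:)` is the GCD API discussed here.

```swift
import Dispatch

// Hypothetical stand-in for a real image: a flat buffer of 8-bit gray pixels.
struct GrayImage {
    let width: Int
    let height: Int
    var pixels: [UInt8]   // row-major, width * height entries
}

// Inverts every pixel, processing rows in parallel.
func inverted(_ image: GrayImage) -> GrayImage {
    precondition(image.pixels.count == image.width * image.height)
    var result = image
    let width = image.width
    let height = image.height
    result.pixels.withUnsafeMutableBufferPointer { buffer in
        // Copy the pointer into a constant so the closure captures a plain value.
        let pixels = buffer
        // Each iteration touches a disjoint row, so no locking is needed.
        DispatchQueue.concurrentPerform(iterations: height) { row in
            for column in 0..<width {
                let index = row * width + column
                pixels[index] = 255 - pixels[index]
            }
        }
    }
    return result
}

let sample = GrayImage(width: 2, height: 2, pixels: [0, 10, 200, 255])
let flipped = inverted(sample)
```

The call returns only after every iteration has finished, so `flipped` is complete as soon as `inverted` returns.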

So, now let's take a look at this other parameter, which is the iteration count. This is how many times your block is called in parallel across the system.

How do you choose a good value here? You might imagine that a good value would be the number of cores. Let's imagine that we're executing our workload on a three-core system.

Here, you can see the ideal case, where three blocks run in parallel on all three cores. The real world isn't necessarily this perfect.

What might happen if the third core is taken up for a while with UI rendering? Well, the load balancer has to move that third block over to the first core in order to execute it, because the third core is taken up. And we get a bubble of idle CPU. We could have taken advantage of this time to do more parallel work. Instead, our workload took longer.

How can we fix that? Well, we can increase the iteration count and give the load balancer more flexibility.

It looks good. That hole is gone. There's actually another hole over here, on the third core. We could take advantage of that time as well.

So, as Tim said on Monday, let's turn the iteration count up to 11. There. We filled the hole, and we have efficient execution. We're using all of the available resources on the system until we finish. This is still a very simplistic example. To deal with the real-world complexity, you want to use an order of magnitude more, say 1000.

You can use a large enough iteration count so the load balancer has the flexibility to fill the gaps in the system and take the maximum advantage of the available resources of the system.

However, you should be sure to balance the overhead of the load balancer versus the useful work that each block in your parallel for loop does.
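To make the trade-off concrete, here is a minimal sketch in which the iteration count is a tunable parameter. The function `sumOfSquares` and its `chunkCount` parameter are hypothetical names; the idea is only that a larger count gives the load balancer more pieces to move around, while each piece still has to do enough work to justify its overhead.

```swift
import Dispatch

// Sums the squares of `values` using `chunkCount` parallel iterations.
// `chunkCount` is the iteration count handed to concurrentPerform.
func sumOfSquares(_ values: [Double], chunkCount: Int) -> Double {
    precondition(chunkCount > 0, "need at least one iteration")
    // Round up so every element lands in some chunk.
    let chunkSize = (values.count + chunkCount - 1) / chunkCount
    var partialSums = [Double](repeating: 0, count: chunkCount)

    values.withUnsafeBufferPointer { input in
        partialSums.withUnsafeMutableBufferPointer { buffer in
            let sums = buffer
            DispatchQueue.concurrentPerform(iterations: chunkCount) { chunk in
                let start = chunk * chunkSize
                let end = min(start + chunkSize, input.count)
                guard start < end else { return }   // trailing chunks may be empty
                var sum = 0.0
                for index in start..<end {
                    sum += input[index] * input[index]
                }
                sums[chunk] = sum   // each iteration owns exactly one slot
            }
        }
    }
    return partialSums.reduce(0, +)
}

let data = (1...10_000).map { Double($0) }
let coarse = sumOfSquares(data, chunkCount: 4)       // roughly one block per core
let fine = sumOfSquares(data, chunkCount: 1_000)     // leaves room for load balancing
```

Which count works best depends on the workload, so it is worth measuring rather than assuming; with work this small per element, very large counts would mostly measure load balancer overhead.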

Remember that not every CPU is available to you all the time. There are many tasks running concurrently on the system. And additionally, not every worker thread will make equal progress.

So, to recap, if you have a parallel problem, make sure to leverage the system frameworks that are available to you. You can use their power to solve your problem.

Additionally, make sure to take advantage of the automatic load balancing inside concurrentPerform. Give it the flexibility to do what it does best.

So, that's the discussion about parallelism. Now, let's switch to the main topic for today, which is concurrency.

So, concurrency.

Let's imagine you're writing a simple news app.

How would you structure it? Well, you start by breaking it up into the independent subsystems that make up the app.

Thinking about how you might break up a news app into its independent subsystems, you might have a UI component that renders the UI, that's the main thread. You might also have a database that stores those articles. And you might have a networking subsystem that fetches those articles from the network. To give a better picture of how this app works and how breaking it up into subsystems gives you an advantage, let's visualize how it executes concurrently on a modern system.

So, let's say here's a timeline that shows the CPU track at the top. Let's imagine that we only have one CPU remaining. The other CPUs are busy for some reason.

We only have one core available. At any time only one of these threads can run on that CPU.

So, what happens when a user clicks the button and refreshes the article list in the news app? Well, the user interface renders the response to that button and then sends an asynchronous request to the database.

And then the database decides it needs to refresh the articles, which issues another command to the networking subsystem.

However, at this point, the user touches the app again.

And because the database is run off the main thread of the application, the OS can immediately switch the CPU to working on the UI thread, and it can respond immediately to the user without having to wait for the database thread to complete.

This is the advantage of moving work off the main thread.

When the user interface is done responding, the CPU can then switch back to the database thread, and then finish the networking task as well.

So, taking advantage of concurrency like this lets you build responsive apps. The main thread can always respond to the user's action without having to wait for other parts of your application to complete. So, let's take a look at what that looks like to the CPU.

These white lines above here show the context switches between the subsystems.

A context switch is when the CPU switches between these different subsystems or threads that make up your application.

If you want to visualize what this looks like in your application, you can use Instruments System Trace, which shows you what the CPUs and the threads are doing when they're running your application. If you want to learn more about this, you can watch the "System Trace In-Depth" talk from last year, where the Instruments team described how to use System Trace.

So, this concept of context switching is where the power of concurrency comes from. Let's look at when these context switches might happen and what causes them.

Well, they can start when a high priority thread needs the CPU as we saw earlier, with the UI thread pre-empting the database thread.

It can also happen when a thread finishes its current work, when it's waiting to acquire a resource, or when it's waiting for an asynchronous request to complete.

However, with this great power of concurrency comes great responsibility as well. You can have too much of a good thing.

Let's say you're switching between the network and database threads on your CPU.

A few context switches are fine. That's the power of concurrency: you're switching between different tasks.

However, if you're doing this thousands of times in really rapid succession, you run into trouble. You're starting to lose performance because each white bar here is a context switch, and the overhead of a context switch adds up. It's not just the time we spend executing the context switch, it's also the history that the core has built up. It has to regain that history after every context switch.

There are also other effects you might experience. For example, there might be others ahead of you in line for access to the CPU.

You have to wait each time you context switch for the rest of the queue to drain out and so you may be delayed by somebody else ahead of you in line.

So, let's look at what might cause excessive context switching.

So, there's three main causes we're going to talk about today. First, repeatedly waiting for exclusive access to contended resources. Repeatedly switching between independent operations, and repeatedly bouncing an operation between threads.

You'll note that I repeated the word repeatedly several times.

That's intentional.

Context switching a few times is okay, that's how concurrency works, that's the power that we're giving you. However, when you repeat it too many times, the costs start to add up.

So, let's start by looking at the first case, which is exclusive access to contended resources.

When can this happen? Well, the primary case in which this happens is when you have a lock and a bunch of threads are all trying to acquire that lock.

So, how can you tell this is occurring in your application? Well, we can go back to System Trace. We can visualize what it looks like in Instruments.

So, let's say this shows us that we have many threads running for a very short time and they're all handing off to each other in a little cascade.

Let's focus on the first thread and see what Instruments is telling us.

We have this blue track, which shows when a thread is on CPU.

And the red track shows when it's making a syscall. In this case, it's making the mutex_wait syscall. This shows that most of its time is spent waiting for the mutex to become available. And the on-core time is very short, at only 10 microseconds.

And there are a lot of context switches going on on the system, which it shows you on the context switches track at the top.

So, what's causing this? Let's go back to look at our simple timeline and see how excess contention could be playing out.

So, you see this sort of staircase pattern in time. Where each thread is running for a short time, and then giving up the CPU to the next thread, rinse and repeat, for a long time.

You want your work to look more like this.

Where the CPU can focus on one thing at a time, get it done, and then work on the next task.

So, what's going on here that causes that staircase? Let's zoom in to one of these stair steps.

So, here we're focusing on two threads, the green thread and the blue thread. And we have a CPU on top.

We've added a new lock track here that shows the state of the lock and what thread owns it. In this case, the blue thread owns the lock, and the green thread is waiting.

So, when the blue thread unlocks, the ownership of that lock is transferred to the green thread, because it's next in line.

However, when the blue thread turns around and grabs the lock again, it can't because the lock is reserved for the green thread.

It forces a context switch because we now have to do something else. And we switch to the green thread, and the CPU can then finish the lock and we can repeat.

Sometimes this is useful. You want every thread that's waiting on the lock to get a chance to acquire the resource. However, what if you had a lock that works a different way?

Let's start again by looking at what an unfair lock does.

So, this time when blue thread unlocks, the lock isn't reserved. The ownership of the lock is up for grabs.

Blue can take the lock again, and it can immediately reacquire and stay on CPU without forcing a context switch.

This might make it difficult for the green thread to actually get a chance at the lock, but it reduces the number of context switches the blue thread has to have in order to reacquire the lock.

So, to recap, when we're talking about lock contention, you actually want to make sure to measure your application in System Trace and see if you have an issue. If you do, often the unfair lock works best for objects, properties, and global state in your application that may have the lock taken and dropped many, many times.
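As an illustration of that kind of frequently taken lock, here is a minimal sketch of a counter guarded by `os_unfair_lock`. The `Counter` class is hypothetical, and the sketch assumes an Apple platform, where `import Foundation` exposes both the lock and Dispatch. The lock is heap-allocated so that it keeps a single stable address, which is the usual precaution when using this C primitive from Swift.

```swift
import Foundation   // on Apple platforms this exposes os_unfair_lock and Dispatch

// Hypothetical counter whose lock is taken and dropped many times.
final class Counter: @unchecked Sendable {
    // Heap-allocated so the lock keeps one stable address for its lifetime.
    private let lock: UnsafeMutablePointer<os_unfair_lock>
    private var value = 0   // only touched while holding `lock`

    init() {
        lock = UnsafeMutablePointer<os_unfair_lock>.allocate(capacity: 1)
        lock.initialize(to: os_unfair_lock())
    }

    deinit {
        lock.deinitialize(count: 1)
        lock.deallocate()
    }

    func increment() {
        os_unfair_lock_lock(lock)
        defer { os_unfair_lock_unlock(lock) }
        value += 1
    }

    var current: Int {
        os_unfair_lock_lock(lock)
        defer { os_unfair_lock_unlock(lock) }
        return value
    }
}

let counter = Counter()
// Many short critical sections from several threads at once.
DispatchQueue.concurrentPerform(iterations: 10_000) { _ in
    counter.increment()
}
```

Whether an unfair lock actually helps is something to confirm in System Trace for the specific workload, as the recap above says.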

There's one other thing I want to talk about when we're mentioning locks, and that is lock ownership.

So, remember the lock track we had earlier, the runtime knows which thread will unlock the lock next.

We can take advantage of that power to automatically resolve priority inversions in your app between the waiters and the owners of the lock. And even enable other optimizations, like directed CPU handoff to the owning thread. Pierre is going to discuss this later in our talk, when talking about dispatch sync.

We often get the question, which primitives have this power and which ones don't.

Let's take a look at which low-level primitives do this today.

So, primitives with a single known owner have this power. Things like serial queues and os_unfair_lock.

However, asymmetric primitives, like dispatch semaphore and dispatch group, don't have this power, because the runtime doesn't know which thread will signal the primitive.

Finally, for primitives with multiple owners, like private concurrent queues and reader-writer locks, the system doesn't take advantage of that today, because there isn't a single owner. When you're picking a primitive, consider whether or not your use case involves threads of different priorities interacting, like a high-priority UI thread with a lower-priority background thread.

If so, you might want to take advantage of a primitive with ownership that ensures that your UI thread doesn't get delayed by waiting on a lower-priority background thread. So, in summary, these inefficient behaviors are often emergent properties of your application. It's not easy to find these problems just by looking at your code. You should observe it in Instruments System Trace to visualize your app's true, real behavior, so you can use the right lock for the job.

So, I've just discussed the first cause on our context switching list, which is exclusive access.

To discuss some other ways your apps can experience excessive context switching, I'm going to bring out my colleague Daniel Steffen to talk to you about how you can organize your concurrency with GCD to avoid these pitfalls.

All right. Thank you, Daniel.

So, we've got a lot to cover today, so I won't be able to go into too many details on the fundamentals of GCD. If you're new to the technology, or need a bit of a refresher, here are some of the sessions at previous WWDC conferences that covered GCD and the enhancements that we've made to it over the years. So, I encourage you to go and see those on video.

We do need a few of the basic concepts of GCD today however, starting with the serial dispatch queue.

This is really our fundamental synchronization primitive in GCD. It provides you with mutual exclusion as well as FIFO ordering. This is one of these ordered and fair primitives that Daniel just mentioned.

And it has a concurrent atomic enqueue operation, so it's fine for multiple threads to enqueue operations into the queue at the same time, as well as a single dequeue thread that the system provides to execute asynchronous work out of the queue.

So, let's look at an example of this in action. Here we're creating a serial queue by calling the dispatch queue constructor and that will give you a piece of memory that as long as you haven't used it yet, it's just inert in your application.

Now, imagine there are two threads that come along and call the queue.async method to submit some asynchronous work into this queue. As mentioned, it's fine for multiple threads to do this, and the items will just get enqueued in the order that they appeared.

And because this is the asynchronous method, this method returns and the threads can go on their way, so maybe this first thread eventually calls queue.sync. This is the way you interact synchronously with the queue. And because this is an ordered primitive here, what this does is it will enqueue a placeholder into the queue so that the thread can wait until it is its turn.

And now, there's an asynchronous worker thread that will come along to execute the asynchronous work items, until you get to that placeholder at which point the ownership of the queue will transfer to the thread waiting in queue.sync so that it can execute its block.
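A minimal sketch of that sequence in Swift follows. The `EventLog` class and its method names are hypothetical; the GCD calls are `DispatchQueue(label:)`, `async`, and `sync`. The class is marked `@unchecked Sendable` on the assumption that its state is only ever touched on its own queue.

```swift
import Dispatch

// Hypothetical event log; `entries` is only touched on `queue`.
final class EventLog: @unchecked Sendable {
    // A queue created this way is serial by default.
    private let queue = DispatchQueue(label: "com.example.event-log")
    private var entries: [String] = []

    // Asynchronous: enqueues the work item and returns immediately.
    func record(_ entry: String) {
        queue.async { self.entries.append(entry) }
    }

    // Synchronous: waits its turn behind everything already enqueued.
    func snapshot() -> [String] {
        queue.sync { entries }
    }
}

let log = EventLog()
log.record("first")
log.record("second")
let seen = log.snapshot()
```

Because the queue is FIFO and the same thread enqueued both items before calling `snapshot()`, the synchronous block runs only after both asynchronous items have executed.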

So, the next concept that we'll need is the dispatch source. This is our event monitoring primitive in GCD. Here we are setting one up to monitor a file descriptor for readability with the makeReadSource constructor. You pass it a queue, which is the target queue of the source, which is where we execute the event handler of the source, which here just reads from the file descriptor.

This target queue is also where you might put other work that should be serialized with this operation, such as processing the data that was read.

Then, we set the cancel handler for the source, which is how sources implement the invalidation pattern.

And finally, when everything is set up, we call source.activate to start monitoring. So, it's worth noting that sources are really just an instance of a more general pattern throughout the OS, where you have objects that deliver events to you on a target queue that you specify. So, if you're familiar with XPC, XPC connections would be another example of that.

And it's worth noting that everything we're telling you today about sources really applies to all such objects in general.
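The source setup described above can be sketched roughly as follows. The `DescriptorReader` class is hypothetical, a pipe stands in for whatever descriptor you would really monitor, and the semaphore is only there so the demonstration can wait for the first event.

```swift
import Foundation

// Hypothetical reader that accumulates bytes from a file descriptor.
final class DescriptorReader: @unchecked Sendable {
    private let queue = DispatchQueue(label: "com.example.descriptor-reader")
    private let source: DispatchSourceRead
    private var received: [UInt8] = []   // only touched on `queue`

    init(fileDescriptor: Int32, onData: @escaping @Sendable () -> Void) {
        source = DispatchSource.makeReadSource(fileDescriptor: fileDescriptor, queue: queue)
        // Event handler: runs on the target queue whenever data is readable.
        source.setEventHandler { [weak self] in
            var buffer = [UInt8](repeating: 0, count: 64)
            let count = read(fileDescriptor, &buffer, buffer.count)
            guard count > 0 else { return }
            self?.received.append(contentsOf: buffer[0..<count])
            onData()
        }
        // Cancel handler: the point at which it is safe to close the descriptor.
        source.setCancelHandler { _ = close(fileDescriptor) }
        source.activate()
    }

    func cancel() { source.cancel() }

    // Other work serialized with the handler goes on the same queue.
    func bytes() -> [UInt8] { queue.sync { received } }
}

// A pipe stands in for a real connection in this sketch.
var descriptors: [Int32] = [0, 0]
precondition(pipe(&descriptors) == 0, "pipe() failed")
let dataArrived = DispatchSemaphore(value: 0)   // only used to make the demo wait
let reader = DescriptorReader(fileDescriptor: descriptors[0]) { dataArrived.signal() }

let message = Array("hi".utf8)
_ = write(descriptors[1], message, message.count)
let waitResult = dataArrived.wait(timeout: .now() + 5)
let receivedBytes = reader.bytes()
reader.cancel()
_ = close(descriptors[1])
```

Reading the accumulated bytes through `queue.sync` keeps that access serialized with the event handler, which is the role the target queue plays in the description above.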

So, putting these two concepts together, we get what we call the target queue hierarchy.

So, here we have two sources, S1 and S2, with their associated target queues, Q1 and Q2. And we can form a little tree out of this situation by adding yet another serial queue to the mix, a mutual exclusion queue, EQ, at the bottom. The way we do this is simply by passing the optional target argument into the dispatch queue constructor. So, this gives you a single shared mutual exclusion context for this whole tree. Only one of the sources or one item in one of the queues can execute at one time.

But it preserves the independent individual queue order for queue 1 and queue 2. So, let's look at what I mean by that.

Here I have the two queues, queue 1 and queue 2, with some items enqueued in a specific order.

And because we have this extra serial queue at the bottom, when these execute, they will execute in EQ, and there will be a single worker thread executing these items, giving you that mutual exclusion property, only one item executing at one time. But as you can see, the items from both queues can execute interleaved while preserving the individual order that they had in their original queues. So, the last concept that we'll need today is the notion of quality of service.
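Before moving on, the target queue hierarchy just described can be sketched in a few lines. The `Recorder` class and the queue labels are hypothetical; the hierarchy itself is built with the `target:` argument of the `DispatchQueue` initializer.

```swift
import Dispatch

// Hypothetical recorder; `items` is only touched under the EQ exclusion context.
final class Recorder: @unchecked Sendable {
    var items: [String] = []
}

let recorder = Recorder()
// EQ: the mutual exclusion queue at the bottom of the tree.
let exclusionQueue = DispatchQueue(label: "com.example.EQ")
// Q1 and Q2 keep their own order but share EQ's exclusion context.
let queue1 = DispatchQueue(label: "com.example.Q1", target: exclusionQueue)
let queue2 = DispatchQueue(label: "com.example.Q2", target: exclusionQueue)

for step in 1...3 {
    queue1.async { recorder.items.append("Q1-\(step)") }
    queue2.async { recorder.items.append("Q2-\(step)") }
}

// Drain both queues, then read the result under the same exclusion context.
queue1.sync {}
queue2.sync {}
let executed = exclusionQueue.sync { recorder.items }
let fromQueue1 = executed.filter { $0.hasPrefix("Q1") }
let fromQueue2 = executed.filter { $0.hasPrefix("Q2") }
```

How the items from the two queues interleave in `executed` can vary from run to run, but the items from each individual queue keep their original order.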

This is a fairly deep concept that was talked about in some detail in the past. In particular, in the 'Power Performance and Diagnostics' session in 2014. So, if this is new to you, I would encourage you to go and watch that.

But what we'll need today from this is really mostly its abstract notion of priority.

And we'll use the terms QoS and priority somewhat interchangeably in the rest of the session.

We have four quality of service classes on the system, from the highest, user interactive (UI), to user initiated (IN), utility (UT), and background (BG), the lowest priority.

So, let's look at how we would combine this concept of quality of service with the target queue hierarchy that we just looked at.

In this hierarchy, every node in the tree can actually have a quality of service label associated with it.

So, for instance the source 2 might be relevant to the user interface. It might be monitored for an event where we should update the UI as soon as the event triggers. So, it could be that we want to put the UI label onto the source.

Another common use case would be to put a label on the mutual exclusion queue to provide a floor of execution so that nothing in this tree can execute below this level, so UT in this example. And now if anything else in this tree fires, for instance source 1, we will be using this floor for the tree if it doesn't have its own quality of service associated.

And a source firing is really just an async that executes from the kernel. And the same as before happens, we enqueue the source handler eventually into the mutual exclusion queue for execution.

For asyncs from user space, your quality of service is usually determined from the thread that called queue.async. So we have a user initiated thread that submits an item at IN into the queue and for execution into EQ eventually. And now, maybe we have the source 2 that fires with this very high priority UI relevant event that executes its event handler, and enqueues its event handler into EQ.

So, now you'll notice that we have a priority inversion situation. We have three items enqueued with a very high priority item at the end preceded by some low priority items. And these have to execute in order.

The system resolves this inversion for you by bringing up a worker thread at the highest priority of anything that is currently enqueued. And it's worth keeping this little tree on the right hand side here in mind because it comes up again later in the session.
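A rough sketch of attaching quality of service labels to that tree is shown below. The `Trace` class and the labels are hypothetical, and as a simplification a queue with a user interactive label stands in for the UI-relevant source S2.

```swift
import Dispatch

// Hypothetical trace; `events` is only touched under the EQ exclusion context.
final class Trace: @unchecked Sendable {
    var events: [String] = []
}

let trace = Trace()
// A QoS label on the mutual exclusion queue acts as a floor for the whole tree.
let exclusionQueue = DispatchQueue(label: "com.example.EQ", qos: .utility)
// No label of its own: nothing on it executes below the floor set by EQ.
let queue1 = DispatchQueue(label: "com.example.Q1", target: exclusionQueue)
// Stands in for the UI-relevant source S2 in this sketch.
let queue2 = DispatchQueue(label: "com.example.Q2", qos: .userInteractive,
                           target: exclusionQueue)

queue1.async { trace.events.append("Q1 item") }
// An async from user space can also carry an explicit QoS for that one item.
queue1.async(qos: .userInitiated) { trace.events.append("Q1 user-initiated item") }
queue2.async { trace.events.append("Q2 item") }

// Drain both queues, then read the result under the same exclusion context.
queue1.sync {}
queue2.sync {}
let events = exclusionQueue.sync { trace.events }
let fromQueue1 = events.filter { $0.hasPrefix("Q1") }
```

The labels change the priority at which the worker thread runs, not the order of execution: items on a serial queue still run in the order they were enqueued.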

And with that, let's move on to the main topic of this section, which is how to use what we just learned to express good granularity of concurrency to GCD. Let's go back to the news application that Daniel introduced earlier and focus on the networking subsystem for a little bit. In a networking subsystem, you'll have to monitor some network connections in the kernel. And with GCD you'll do that with a dispatch source and a dispatch queue, like you just saw. But of course, in any networking subsystem you usually don't have just one network connection, you'll have many of them, and they will all replicate the same setup.

So, let's focus on the right hand side on the three connections here and see how they execute.

If the first connection triggers, the same thing we just saw happens: we enqueue the event handler for that source onto its target queue. Of course, if the other two connections fire at the same time, the same thing is replicated, and you'll end up with three queues, each with an event handler enqueued.

And because you have these three independent serial queues at the bottom, you've really asked the system to provide you with three independent concurrency contexts. If all these become active at once, the system will oblige and create three threads for you to execute these event handlers.

Now, this may be what you wanted, and maybe exactly what you were after, but it is quite common for these event handlers to be small and only read some data from the network and enqueue it into a common data structure.

Additionally, as we saw before, you don't have just three connections, you may have many, many of them if you have a number of network connections in your subsystem.

So, this can lead to a situation where you have this kind of context switching pattern, and excessive context switching that Daniel talked about where you execute a small amount of work, context switch to another thread and do that again, and again, and again. So, how can we improve on this situation in this example here? We can apply the single mutual exclusion context idea that we just talked about by simply putting in an additional serial queue at the bottom and forming a hierarchy, you can get a single mutual exclusion context for all of these network connections.

And if they fire at the same time, the same thing as before will happen, the event handlers will get enqueued onto the target queues, but because there's an additional serial queue at the bottom here, it's a single thread that will come and execute them in order instead of the multiple threads that we had before.
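As a sketch of that change, the hypothetical `NetworkSubsystem` below gives every connection its own queue but targets all of them at one serial queue. The per-connection handlers are simulated with plain `async` calls rather than real sources, and the `inFlight` bookkeeping exists only to show that handlers never overlap.

```swift
import Dispatch

// Hypothetical networking subsystem with one serial queue at the bottom.
final class NetworkSubsystem: @unchecked Sendable {
    // Single mutual exclusion context for every connection.
    let queue = DispatchQueue(label: "com.example.network")

    // State below is only touched under `queue`'s exclusion context.
    private(set) var payloads: [String] = []
    private(set) var maxInFlight = 0
    private var inFlight = 0

    // Each connection keeps its own queue, targeted at the shared one.
    func makeConnectionQueue(id: Int) -> DispatchQueue {
        DispatchQueue(label: "com.example.network.connection-\(id)", target: queue)
    }

    // Small event handler: record the payload in a common data structure.
    func handle(_ payload: String) {
        inFlight += 1
        maxInFlight = max(maxInFlight, inFlight)
        payloads.append(payload)
        inFlight -= 1
    }
}

let network = NetworkSubsystem()
let connectionQueues = (0..<8).map { network.makeConnectionQueue(id: $0) }
let group = DispatchGroup()   // only used to make the demo wait

for (id, connectionQueue) in connectionQueues.enumerated() {
    for event in 0..<50 {
        connectionQueue.async(group: group) {
            network.handle("connection \(id), event \(event)")
        }
    }
}

group.wait()
let summary = network.queue.sync { (network.payloads.count, network.maxInFlight) }
```

With the shared queue at the bottom, the handlers run one at a time, so the unsynchronized bookkeeping in `handle` is safe. Without the `target:` argument the eight queues would be independent, and the same code would race.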

So, this seems like a really simple change, but it is exactly the type of change that led to the 1.3x performance improvement in some of our own code that Daniel talked about earlier in the session.

So, this is one example of how we can avoid the problematic pattern of repeatedly switching between independent operations.

But it really comes under the general heading of avoiding unwanted and unbounded concurrency in your application.

One way you can get that is by having many queues becoming active all at once. And one example of this is that independent per client source pattern that we just saw. You can also get this if you have independent per object queues. If many objects in your application have their own serial queues and you submit asynchronous work into them at the same time you can get exactly the same phenomenon.

You can also see this if you have many work items submitted to the global concurrent queue at the same time.

In particular if those work items block. The way the global concurrent queue works is that it creates more threads when existing threads block to give you a continuing good level of concurrency in your application. But if those threads then block again, you can get something that we call the thread explosion.

This is a topic that we went into in some detail in the "Building Responsive and Efficient Apps with GCD" session in 2015. So, if this sounds new to you, I'd encourage you to go and watch that session.

So, how do you choose the right amount of concurrency in your application to avoid these problematic patterns? One idea that we've recommended to you in the past is to use one queue per subsystem.

So, here back in our news application, we already have one queue for the user interface, the main queue and we could choose one serial queue for the networking and one serial queue for the database subsystem in addition.

But what we've learned today a more general way to think of this is really to use one queue hierarchy per subsystem.

This gives you a mutual exclusion context for the subsystem, where you can leave the rest of the queue and source structure of your system alone and just target the network queue or database queue that sits at the bottom of your queue hierarchies.

But, that may well be a bit too simplistic a pattern for a complex application or a complex subsystem. The main thing that is important here is to have a fixed number of serial queue hierarchies in your application. So, it may make sense to have additional queue hierarchies for a complicated subsystem, say a secondary one for slower work, or larger work items, so that the first one, the primary one, can keep the subsystem responsive to requests coming in from outside.

Another thing that's important to think about in this context is the granularity of the work submitted to those subsystems.

You want to use fairly large work items when you move between subsystems to get a picture like what we saw earlier in the session, where the CPU is able to execute your subsystem for long enough to reach an efficient performance state.

Once you're inside the subsystem, say the networking subsystem here. It may make sense to subdivide into smaller work items and have a finer granularity to improve the responsiveness of that subsystem. For instance, you can do that by splitting up your work and re-asyncing to another queue in your queue hierarchy. And that doesn't introduce a context switch because you're already in that one subsystem. So, in summary, what have we looked at in this section? We saw how we can organize queues and sources into serial queue hierarchies. How to use a fixed number of the serial queue hierarchies to give GCD a good granularity of concurrency. And how to size your work items appropriately earlier in the section for parallel work and here for concurrent work inside your subsystem as well as between subsystems. And with this, I'll hand it over to Pierre to dive into how we have improved GCD to always execute the queue hierarchy on a single thread and how you can modernize your code to take advantage of this.
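The re-async pattern mentioned in this section can be sketched as follows. The `Database` class, its method names, and the slice size are hypothetical. One large request crosses into the subsystem, and inside it the work is split into slices that are re-enqueued on the subsystem's own queue.

```swift
import Dispatch

// Hypothetical database subsystem with a single serial queue at the bottom.
final class Database: @unchecked Sendable {
    private let queue = DispatchQueue(label: "com.example.database")
    private var importedCount = 0   // only touched on `queue`

    // Entry point from another subsystem: one large work item crosses the boundary.
    func importRecords(_ records: [Int], sliceSize: Int,
                       completion: @escaping @Sendable () -> Void) {
        precondition(sliceSize > 0, "slice size must be positive")
        queue.async {
            self.importSlice(of: records[...], sliceSize: sliceSize, completion: completion)
        }
    }

    // Inside the subsystem: handle one slice, then re-async the remainder so
    // that other requests enqueued in the meantime get a turn.
    private func importSlice(of remaining: ArraySlice<Int>, sliceSize: Int,
                             completion: @escaping @Sendable () -> Void) {
        guard !remaining.isEmpty else {
            completion()
            return
        }
        importedCount += remaining.prefix(sliceSize).count
        let rest = remaining.dropFirst(sliceSize)
        queue.async {
            self.importSlice(of: rest, sliceSize: sliceSize, completion: completion)
        }
    }

    func count() -> Int { queue.sync { importedCount } }
}

let database = Database()
let finished = DispatchSemaphore(value: 0)   // only used to make the demo wait
database.importRecords(Array(0..<1_000), sliceSize: 100) { finished.signal() }
let midImport = database.count()             // may observe a partially completed import
let waitResult = finished.wait(timeout: .now() + 5)
let total = database.count()
```

The call to `count()` issued while the import is running can be served between slices, which is the responsiveness benefit of the finer granularity inside the subsystem.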

Thank you Daniel.

So, indeed we have completely reinvented the internals of GCD this year to eliminate some unwanted context switches and execute single queue hierarchies, like the ones that Daniel showed, on a single thread.

To do so we have created a new kind of concept that we call Unified Queue Identity that lets us do that. And we will walk you through how it works.

So, really this part of the talk will focus on a single queue hierarchy, like the ones Daniel showed earlier. However, we'll work on simplified ones with the sources at the top, and your mutual exclusion context at the bottom. The internal GCD nodes are not quite relevant for that part of the talk. So, when you create a new execution context, you use the dispatch queue constructor, and that creates just a piece of memory in your application that is a node. And one of the first things that you may do is to dispatch asynchronous work items to it. So, you will have a thread in your application that will here enqueue a background work item on the queue. When that happened before, we used to request a thread anonymously from the system. And the resolution of what the thread was meant to do happened late, inside your application.

In this release, we changed that, and what we do is that we create a counterpart object, the Unified Queue Identity, that is strongly tied to your queue and is exactly meant to represent your queue in the kernel.

We can tie that object with the priority required to execute your work, which here is background (BG). And that causes the system to ask for a thread.

The thread request, that dotted line on the slide, may not be fulfilled for some time, because here that's a background thread, and maybe the system is loaded enough that it's not even worth giving you a thread for it. Later on, some other part of your application may actually try to enqueue more work. Here, a utility (UT) work item that is slightly higher priority. We can use the queue identity, the unified identity in the kernel, to notice and resolve the priority inversion, and elevate the priority of that thread request. And maybe that is the small nudge that the system needed to actually give you a thread here to execute your work. But this thread is in the scheduler queues, not yet on core, not executing. And the reason why is because there is another thread in your application that is interacting with that queue and working synchronously at an even higher priority, user initiated (IN). Now that we have that Unified Queue Identity, since that thread has to block to enqueue the placeholder that Daniel told you about a bit earlier, we can block the synchronous execution of that thread on the Unified Queue Identity. The same one that we use for asynchronous work, with all the priority inversion handling. But now that we unified the asynchronous and the synchronous part of the queue in a single identity, we can apply an optimization and directly switch to the thread that's blocking you, bypassing the scheduler queue and avoiding the queue delays that Daniel introduced while talking about the scheduler very early on.

So, that's how the unified queue identity is used for asynchronous and synchronous work items.
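From the application side, the three operations in that walkthrough can be sketched in Swift as follows. This is only an illustration under assumptions: the queue label and the `Log` class are hypothetical, and the kernel-side behavior (thread requests, priority elevation, direct handoff) is not observable from this code. What it does show is that asynchronous items at different QoS and a synchronous caller all go through the same serial queue, in submission order.

```swift
import Dispatch

// Hypothetical serial queue standing in for the queue in the walkthrough.
let queue = DispatchQueue(label: "com.example.eq")

// Only mutated on `queue`.
final class Log { var entries: [String] = [] }
let log = Log()

// 1. A background work item is enqueued first.
queue.async(qos: .background) { log.entries.append("BG") }

// 2. A utility work item, slightly higher priority, is enqueued behind it.
queue.async(qos: .utility) { log.entries.append("UT") }

// 3. A synchronous caller blocks on the same queue until the earlier
//    items have run, then runs its own block.
queue.sync { log.entries.append("sync") }

print(log.entries)   // prints ["BG", "UT", "sync"]
```

The serial queue preserves submission order, so the higher priority callers wait behind the background item; that is the situation in which the runtime has a priority inversion to resolve.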

Now, how would we use that for events? Why is it useful? So, that is the small tree that we've been using so far, let's look at the creation of these sources.

When you create the source using the makeReadSource factory method, you set a bunch of event handlers and properties. But what is really interesting is what happens when you activate the object.

It is actually at that moment that we will notice that utility is the QoS at which the handler for your source will always execute, because it's inherited from your queue hierarchy. We will also know now, with the new system, that the handler will eventually execute in that EQ mutual exclusion context.

And we will now register the source up front with the same unified identity that I just talked about a bit earlier. If we look at the higher UI QoS source that we have on the tree, the way we treat it is very similar to the first one, except that when you're setting the event handler here, you're specifying the QoS that you actually want.

And again, what's interesting is what happens at activate time. That is when we take the snapshot and unlike before when we got the utility QOS from your hierarchy, here we get it from your hint.

We still record the fact that both sources will execute on the same execution context. And we will register that second source up front again, with the same unified identity in the kernel. So, really what we're trying to solve with that quite complex identity is a problem that we had in previous releases of the OS, where related operations would actually bounce off to other threads. Let's look at how it used to work.
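The two sources can be sketched in Swift like this. To keep the example self-contained it swaps in user-data sources, fired by hand with `add(data:)`, in place of the file descriptor sources from the talk; the queue labels and the `Log` class are hypothetical. The first handler is set without a QoS and so runs at whatever the hierarchy provides, while the second passes an explicit QoS as a hint.

```swift
import Dispatch

// Bottom of the hierarchy: the mutual exclusion context, at utility QoS.
let eq = DispatchQueue(label: "com.example.eq", qos: .utility)
let q1 = DispatchQueue(label: "com.example.q1", target: eq)
let q2 = DispatchQueue(label: "com.example.q2", target: eq)

// Only mutated on the EQ hierarchy.
final class Log { var entries: [String] = [] }
let log = Log()
let fired = DispatchSemaphore(value: 0)

// Source 1: no QoS on the handler, so it uses the hierarchy's QoS.
let s1 = DispatchSource.makeUserDataAddSource(queue: q1)
s1.setEventHandler {
    log.entries.append("S1")
    fired.signal()
}

// Source 2: the handler carries an explicit QoS hint.
let s2 = DispatchSource.makeUserDataAddSource(queue: q2)
s2.setEventHandler(qos: .userInteractive) {
    log.entries.append("S2")
    fired.signal()
}

// Activation is the point at which the configuration is snapshotted.
s1.activate()
s2.activate()

// Fire both sources by merging data into them, then wait for both handlers.
s1.add(data: 1)
s2.add(data: 1)
fired.wait()
fired.wait()

// The relative order of the two handlers is not asserted here, so sort.
print(log.entries.sorted())   // prints ["S1", "S2"]
```

Both handlers end up on the same serial queue at the bottom, which is why appending to the shared log from either one is safe.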

So, remember that's our queue hierarchy, and let's bring up the timeline that you've seen a bunch of times now in our talk. At the top, the CPU, but now there is a new track, the exclusion queue track, that will show you what is executing at any given moment on that queue. So, that's really how the runtime used to work before this release, in macOS Sierra and iOS 10. So, let's look at what happens if the first source fires. Before, like I said, thread requests were anonymous. We would ask for an anonymous thread, deliver the event on the thread and then we would look at the event.

And when we look at the event inside your application, it is only then that we realize that this event is meant to run on a queue. We would then enqueue the event handler. But since the queue is unclaimed, the thread could actually become that queue and start executing the event handler for your source.

And we do so. Now, the interesting thing is what happens when the second source, which is higher priority, fires. The same, actually. Since it's a higher QoS here, higher priority than what you're executing right now.

We would bring up a new anonymous thread and deliver that higher priority event on the thread.

And look at what that event means. And only then will we notice that it is for exactly the same queue hierarchy. And enqueue the handler after the one we just preempted.

As you see, we caused our first context switch because of that higher priority event. But we cannot make forward progress, because unlike the first time, that second thread cannot take over the queue: it is already associated with a thread. We cannot take it over. So, the thread is done. Which, as Daniel explained, is one reason why you will context switch again. And that's what we do: we context switch back to the first thread, the one that can actually make progress. We execute the rest of the first handler and finally move to the second one.

So, as you can see, we use two threads and two context switches that you really didn't want for a single execution context. We fixed that using Unified Identity in macOS High Sierra and iOS 11.

We got rid of that thread.

And we also, of course got rid of the two context switches that we had, that were unwanted.

And of course, it's important, because unlike what happened when Daniel showed you the preemption with that UI touch event, where we could take advantage of the fact that we actually had two independent threads to be more responsive for your application, here we didn't benefit from any of these context switches, because these event handlers, S1 and S2, had to execute in order anyways. So, knowing about that event early was not useful. And if you look at what the flow actually is today, it looks more like this.

What happened here? The most important thing on that flow is that now if you look at the thread, it's called EQ, because that's the point of the unified identity, the thread and the EQ are basically the same object. And the kernel knows that it's really executing a queue, which is reflected on the CPU track. You don't see the events anymore, it's just running your queue.

However, you might ask, how did we manage to deliver the event, that second event without requiring a helper. That is actually a good question. When the event fires, now we know where it will execute, where you will handle it. We just mark the thread.

No helper needed.

And at the first possible time, we will notice that the thread was marked with 'you have pending events'. And we will dequeue the events when it's the right time, right after the first handler finishes. We can grab the events from the kernel, look at them, and enqueue their handlers on your hierarchy. So, why did we go through that quite complex explanation? That's so that you can understand how to best take advantage of the runtime behavior.

Because clearly, the runtime uses every possible hint you're giving us to optimize behavior in your application.

And it's important to know how to hint and when to hint the runtime correctly so that we make the right decisions. Which brings me to what you should do to existing code bases to take advantage of all that core technology that we rebuilt. There are actually two steps to follow to take full advantage of that technology. The first one is no mutation after activation.

And the second one is paying extra attention to your target queue hierarchies.

So, what does that mean? No mutation past activation really means that when you have any kind of property on a dispatch object, you can set it, but as soon as you activate the object, you should stop mutating it. Take, for example, our source that we've seen quite a few times already in the talk, which monitors a file descriptor for readability. You're setting a bunch of properties and handlers: the event handler, the cancel handler. You may have registration handlers.

You can even change them a few times, that's fine, you can change your mind. And then you activate the source.

The contract here is that you should stop mutating your objects.

It's very tempting to, after the fact, for example, change the target queue of your source. That will cause problems. And the reason why is exactly what I showed a bit earlier: at activate time we take a snapshot of the properties of your objects, and we will take decisions in the future based on that snapshot. And if you change the target queue hierarchy after the fact, it will render that snapshot stale, and that will defeat a bunch of very important optimizations, such as the priority inversion avoidance algorithm in GCD, the direct handoff that we have for dispatch sync that I presented earlier, or all the event delivery optimizations that we just went through.
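A minimal Swift sketch of the configure-then-activate order, assuming a pipe as a stand-in for the socket or file being monitored. The queue label, the `Received` class, and the buffer size are hypothetical choices for illustration.

```swift
import Foundation

// Hypothetical serial queue that the source targets.
let eq = DispatchQueue(label: "com.example.eq")

// A pipe stands in for the file descriptor being monitored.
let pipe = Pipe()
let fd = pipe.fileHandleForReading.fileDescriptor

// Only mutated on `eq`.
final class Received { var bytes = 0 }
let received = Received()
let gotData = DispatchSemaphore(value: 0)

let source = DispatchSource.makeReadSource(fileDescriptor: fd, queue: eq)

// Configure everything first. Changing your mind at this stage is fine.
source.setEventHandler {
    var buffer = [UInt8](repeating: 0, count: 256)
    let n = read(fd, &buffer, buffer.count)
    if n > 0 {
        received.bytes += n
        gotData.signal()
    }
}
source.setCancelHandler {
    // Release any resources tied to the source here.
}

// Activate last. From this point on, treat the handlers and the
// target queue as frozen.
source.activate()

// Make the descriptor readable so the handler fires.
pipe.fileHandleForWriting.write(Data("hello".utf8))
gotData.wait()
source.cancel()
print(received.bytes)   // prints 5
```

Nothing after `activate()` touches the source's handlers or target queue; the only later call is `cancel()`.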

And I insist on the points that Daniel made early on, which is that many of you probably never had to create a dispatch source in your application. And this is fine, this is really how it's supposed to work.

You probably actually use a lot of them through system frameworks. Any time you have a framework that you have to vend a dispatch queue to, because it is asyncing some notifications on that queue on your behalf, behind the scenes it has one of these sources. So, if you're changing the assumptions of the system, you will actually break all of these optimizations as well.

So, I hope I made the point really clear that your target queue hierarchy is essential and you have to protect it.

What does that mean? And how do you do that? The first way, which is very simple advice, is that when you're building one, start from the bottom and build it toward the top. With the hierarchy on the slide, as you see, these white arrows are your target queue relationships. None of them have to be mutated if you build it in that order. However, when you have a large application, or you're writing frameworks and you're vending one of these queues to another part of your engineering company, you may want to have stronger guarantees than that. You may want to lock down these relationships, so that really no one can mutate them after the fact. This is actually something that you can do with the technology that we call a 'static queue hierarchy'. We introduced it last year, and actually, if you are using Swift 3, then you can stop listening to me, because you're already in that form and that is the only world you're living in.

However, if you have an existing code base, or you use older versions of Swift, you need to do some extra steps. So, let's focus on the relationship between Q1 and EQ here.

When you created that with Objective-C, you probably wrote code that looks like this. You create your queue and then, in a second step, you set the target queue of Q1 to EQ. That is not protecting your queue hierarchy. Anyone can come along and call dispatch_set_target_queue again and break all your assumptions. That's not totally great.

There is a simple step to fix that code in a way that is safe, which is to adopt a new API we introduced last year, dispatch_queue_create_with_target, which in a single atomic step will create the queue, set the queue hierarchy right, and protect it. And that's it. These were the two steps to follow for you to really work with the runtime well. They are really easy. However, a bit like the mutex case that Daniel walked you through early on, finding when you're doing one of these things wrong is fairly challenging, especially on a large code base. Finding that in an existing code base through code inspection is hard.
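In Swift, which the talk describes as already living in the static queue hierarchy world, the same bottom-up construction can be sketched with the `DispatchQueue(label:qos:target:)` initializer. The labels and the values returned by the blocks are hypothetical.

```swift
import Dispatch

// Start from the bottom: the mutual exclusion context comes first.
let eq = DispatchQueue(label: "com.example.eq", qos: .utility)

// Each queue above it names its target at creation time, in one step,
// so no later setTarget(queue:) call is needed.
let q1 = DispatchQueue(label: "com.example.q1", target: eq)
let q2 = DispatchQueue(label: "com.example.q2", target: eq)

// Work submitted to either queue ends up on the same serial context.
let value = q1.sync { 21 } + q2.sync { 21 }
print(value)   // prints 42
```

Building in this order means every target queue relationship is fixed before any work is submitted.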

This is why we created a new GCD performance instrument to find problem spots in an existing application. And I will call Daniel back to the stage to demo it for you.

Thank you, Pierre. All right, to start out with, please note that this GCD performance instrument that we'll see is not yet present in the version of Xcode 9 that you have, but it will be available in an upcoming seed of Xcode 9. So, for this demo, let's analyze the execution of our sample news application in some detail.

So, what happens here if you click this connect button at the bottom, is that this app creates a number of network connections to a server, to read lists of URLs from, which are then displayed in the WebViews whenever the refresh button is hit.

So, let's jump into Xcode to see how we are setting up those network connections.

So, here we are in Xcode in the createConnections method, which does just that. It's very simple. We have a for loop, where we just create some sockets and connect them to our server.

And we monitor that socket for readability with one of these dispatch read sources that we've seen so many times already in this session. And here this is just the C API.

We then set up the event handler block for that dispatch source here. And when the socket becomes readable, we just read from it with the read system call until there is no more data available.

Once we have the data, we pass it to our database subsystem in the application with this process 0 method.

So, let's build and run, and take a system trace of this application and see how it executes. So here we are in Instruments, in system trace, and in addition to the usual tracks in system trace, we've added this new GCD performance instrument. When we click on there, we see a number of performance events that have been reported for performance problems. One of these is this 'mutation after activation' event, that we can also see when we go and mouse over the timeline. We can also click on one of the other events here, such as this, 're-target after activation' event. And the list will take us directly there. If you want more details on this, we can disclose the backtrace on the right hand side of Instruments which will show us where exactly this event occurred in your application. So, here for instance it is in our createConnections method.

If we double click on this frame, Instruments will show us directly the line of code where the problem occurred.

This is actually a set target queue call here that indeed occurs after activate. This is the pattern that Pierre just told you about. To go and fix that, we can jump directly into Xcode with the Open File in Xcode button in Instruments. So, here we are at that dispatch_set_target_queue line, and indeed it, as well as the dispatch_source_set_event_handler setup, happens after activate. So, here in this example, it's really easy to fix. We just move these two lines down below. And we have fixed the problem. We have activate after we set up the source, and not before. So, let's jump back into Instruments and see what we can see in the system trace now. It looks the same as before, except when you click on the GCD performance track, you will see there are no more significant performance problems detected. And that's what you ought to see if you use this instrument. So, of course this was very simple in this application. You may have to do some work.

So, let's focus on the points track in the application. This shows us a number of network event handlers. And these are the source event handlers in our application. How did we manage to make these show up in Instruments? That's actually really interesting to understand, because it's something you can apply to your own code to understand how it executes in Instruments.

Well, going back to Xcode in our createConnections method, when we set up our source and its source event handler, we are interested in the execution of that event handler, and try to understand its timing. To see that in Instruments, we've added the kdebug_signpost_start function at the beginning of the handler, and the kdebug_signpost_end function at the end. And that is all it takes for the section of code to appear highlighted in the points track in Instruments system trace. So, if you switch back to Instruments, that is these red dots at the top in the points track, and we can see in the backtrace that it matches our event handler for one of these events.
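A hedged Swift sketch of bracketing a handler body with signposts. The `withSignpost` helper, the signpost code value `1`, and the queue label are hypothetical. The sketch assumes `kdebug_signpost_start` and `kdebug_signpost_end` are visible through `import Foundation` on Apple platforms; the calls are compiled out elsewhere, and later SDKs deprecate them in favor of `os_signpost`.

```swift
import Foundation

// Hypothetical signpost code identifying the network event handler.
let networkEventCode: UInt32 = 1

// Runs `body` bracketed by a signpost start/end pair on Apple platforms.
// On other platforms the signpost calls are compiled out and only
// `body` runs.
func withSignpost<T>(_ code: UInt32, _ body: () -> T) -> T {
    #if canImport(Darwin)
    _ = kdebug_signpost_start(code, 0, 0, 0, 0)
    #endif
    let result = body()
    #if canImport(Darwin)
    _ = kdebug_signpost_end(code, 0, 0, 0, 0)
    #endif
    return result
}

// Hypothetical queue standing in for the source's target queue.
let queue = DispatchQueue(label: "com.example.network")

// Stand-in for the event handler body: pretend one read returned 97 bytes.
let bytes: Int = queue.sync {
    withSignpost(networkEventCode) {
        return 97
    }
}
print(bytes)   // prints 97
```

Keeping the start and end calls in one helper makes it hard to emit an unmatched signpost.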

If you zoom in on one of these interesting looking areas in the points track, here, you can see that there are a number of event handlers that are occurring very close together. And by mousing over we can actually see that they execute for very short amounts of time. The pop-up will tell us the amount of time it has executed, and we can even see that sometimes we have overlapping event handlers that are all executing concurrently. So, this is one of the symptoms of potentially unwanted concurrency in our application, where something that didn't look like it would cause concurrency in your code actually does run in a concurrent way on multiple threads and causes potentially extra context switches.

So, to understand this better, let's bring up the threads in the Instruments system trace that are executing this code.

So, here I've highlighted the three worker threads that are executing these event handlers. And we can see, as before, when they were executing on core and the time they were running. But here we can see they were, again, running for a very short amount of time in this area. And we can verify that they are making these read system calls that we saw earlier in the event handler.

And we can get some more detail by looking at the back trace again, and seeing, yes it is us that is calling that read system call and here it reads 97 bytes from our socket.

And looking at the other threads, the same pattern repeats. You can see it's the same read system calls occurring there, more or less in the same timeframe, on the second thread here and on the first thread. They're really all doing the same thing, and overlapping.

It would be much better for our program if these things executed on a single thread. Here we don't really get any benefit from the concurrency because we are executing such short pieces of code. And we are probably getting more harm than good from adding these extra context switches.

So, let's apply the patterns that we saw earlier to fix this problem in this sample application. Jumping back into Xcode, let's see how we set up the target queue for this source that we have.

So, that's where we create this queue, at the top of this function, and as you can see, we do it simply by calling dispatch_queue_create.

And that creates an independent serial queue that isn't connected to anything else in our application. This is exactly like the case we had earlier in my example of the networking subsystem.

So, let's fix that by adding a mutual exclusion context at the bottom of all of these queues for all of these connections. And we do that by switching to the dispatch_queue_create_with_target function that Pierre introduced to you earlier. So, here we add dispatch_queue_create_with_target. And we use a single mutual exclusion queue as the target queue for all of these. And this is a serial queue that we created somewhere else.

And with that, we build and run again and look at the system trace again.

And now it looks very different. Here we have still the same points track and we still see the same network events that occur, but as you can see, there are no more overlapping events in that track, and there's a single worker thread that executes this code. And if we zoom in on one of these clusters, we can see this is actually many instances of that event handler executing in rapid succession, which is exactly what we expected. And when you zoom in more on one particular event, you can see it's still executing for a fairly short amount of time, and making those same read system calls. But now that is much less problematic because it's all happening on a single thread.
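The effect of that change can be sketched in Swift. The demo itself uses the C API; here four hypothetical per-connection queues all target one serial queue, and a small lock-protected `Stats` class (also hypothetical) records how many handlers ever run at the same time. The `usleep` call is a stand-in for a short read.

```swift
import Foundation

// A single serial queue acts as the mutual exclusion context.
let exclusion = DispatchQueue(label: "com.example.connections")

// One queue per connection, all targeting the shared serial queue.
let connectionQueues = (0..<4).map { index in
    DispatchQueue(label: "com.example.connection.\(index)", target: exclusion)
}

// Tracks how many handlers are running at once.
final class Stats {
    private let lock = NSLock()
    private var running = 0
    private(set) var maxRunning = 0

    func enter() {
        lock.lock()
        running += 1
        maxRunning = max(maxRunning, running)
        lock.unlock()
    }

    func leave() {
        lock.lock()
        running -= 1
        lock.unlock()
    }
}

let stats = Stats()
let group = DispatchGroup()

// Submit many short handlers to every connection queue.
for queue in connectionQueues {
    for _ in 0..<25 {
        queue.async(group: group) {
            stats.enter()
            usleep(100)   // stand-in for a short read system call
            stats.leave()
        }
    }
}

group.wait()
print(stats.maxRunning)   // prints 1
```

With independent serial queues instead (no `target:`), the same loop may report a value above 1, which is the overlapping pattern seen in the first trace.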

So, this may seem like a very simple and trivial change, but it's worth pointing out that it's exactly this type of small tweak that led to the 1.3X performance improvement in some of our own framework code that Daniel pointed out at the beginning of the session. So, very small changes like this can make a significant difference.

All right, so let's look back at what we've covered today. Daniel, at the beginning, went over with you the details of how not going off core unnecessarily is ever more important for modern CPUs, so that they can reach the most efficient performance state.

We looked at the importance of sizing the work for parallel workloads and for work moving between subsystems in your application as well as inside those subsystems.

We talked about how to choose good granularity of concurrency with GCD by using a fixed number of serial queue hierarchies in your application. And Pierre walked you through how to modernize your GCD usage to take full advantage of improvements in the OS, and in our hardware.

And finally, we saw how we can use Instruments to find problem spots in our application and how to fix them. For more information on this session, I will direct you to this URL, where the documentation links for GCD are, as well as the movie for the session, and we have some related sessions this week that might be worthwhile going to. Introducing Core ML has already happened; the other two are going to help you with parallel and compute-intensive tasks in your application, like we talked about at the beginning. And the last two are going to help you with more performance analysis and improvements of different aspects of your app. And with that, I'd like to thank you for coming. If you have any questions, please come and see us at the labs.

[ Applause ]
