Face Tracking with ARKit

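ARKit and iPhone X bring revolutionary capabilities to AR apps through robust face tracking. See how your app can detect the position, topology, and expression of a face with high accuracy and in real time. Also learn how to apply live selfie effects and how to drive a 3D character.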

iOS 11 introduced ARKit: a new framework for creating augmented reality apps for iPhone and iPad.

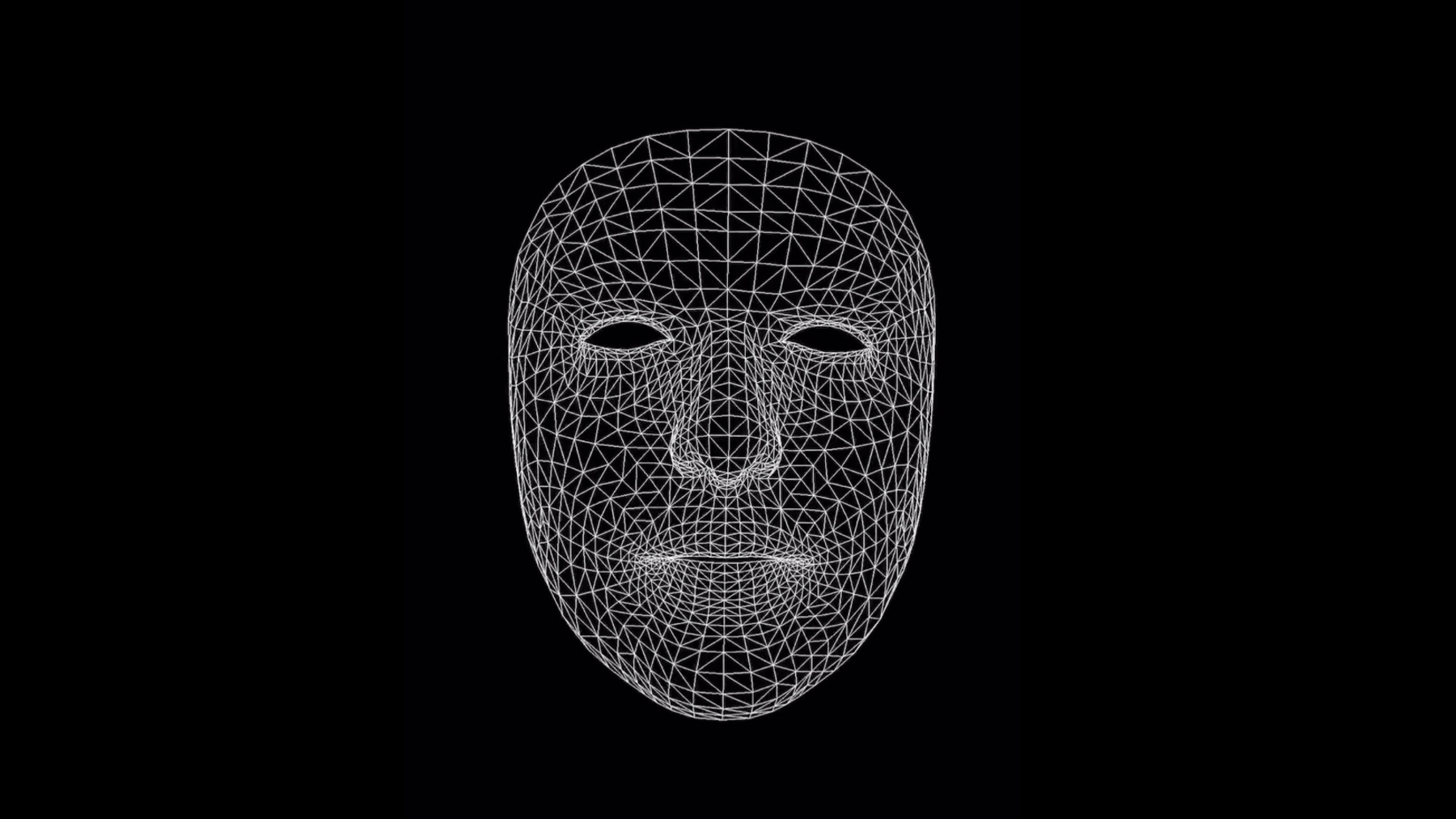

ARKit takes apps beyond the screen by placing digital objects into the environment around you, enabling you to interact with the real world in entirely new ways. At WWDC we introduced three primary capabilities for ARKit. Positional tracking detects the pose of your device, letting you use your iPhone or iPad as a window into a digital world all around you. Scene understanding detects horizontal surfaces like tabletops, finds stable anchor points, and provides an estimate of ambient lighting conditions. And ARKit integrates with rendering technologies like SpriteKit, SceneKit, and Metal, as well as with popular game engines such as Unity and Unreal.

Now with iPhone X, ARKit turns its focus to you, providing face tracking using the front-facing camera. This new ability enables robust face detection and positional tracking in six degrees of freedom. Facial expressions are also tracked in real time, and your apps are provided with a fitted triangle mesh and weighted parameters representing over 50 specific muscle movements of the detected face. For AR, we provide the front-facing color image from the camera, as well as a front-depth image. And ARKit uses your face as a light probe to estimate lighting conditions, and generates spherical harmonics coefficients that you can apply to your rendering. And as I mentioned, all of this is exclusively supported on iPhone X.

There are some really fun things that you can do with face tracking. The first is selfie effects, where you're rendering a semitransparent texture onto the face mesh for effects like a virtual tattoo, or face paint, or to apply makeup, growing a beard or a mustache, or overlaying the mesh with jewelry, masks, hats, and glasses. The second is face capture, where you are capturing the facial expression in real time and using that as rigging to project expressions onto an avatar, or for a character in a game.

So let's dive into the details and see how to get started with face tracking.

The first thing you'll need to do is to create an ARSession. ARSession is the object that handles all the processing done for ARKit, everything from configuring the device to running different AR techniques. To run a session, we first need to describe what kind of tracking we want for this app. So to do this, you'll create a particular ARConfiguration for face tracking and set it up. Now to begin processing, you simply call the "run" method on the session and provide the configuration you want to run. Internally, ARKit will configure an AVCaptureSession and CMMotionManager to begin receiving camera images and the sensor data. And after processing, results will be output as ARFrames. Each ARFrame is a snapshot in time, providing camera images, tracking data, and anchor points: basically everything that's needed to render your scene.

Now let's take a closer look at the ARConfiguration for face tracking. We've added a new subclass called ARFaceTrackingConfiguration. This is a simple configuration subclass that tells the ARSession to enable face tracking through the front-facing camera. There are a few basic properties to check for the availability of face tracking on your device, and whether or not to enable lighting estimation. Then once you call "run," you'll start the tracking and begin receiving ARFrames.

Once a face is detected, the session will generate an ARFaceAnchor. This represents the primary face: the single biggest, closest face in view of the camera. An ARFaceAnchor provides you with the face pose in world coordinates, through the transform property of its superclass. It also provides the 3D topology and parameters of the current facial expression. And as you can see, it's all tracked, and the mesh and parameters are updated, in real time, 60 times per second.

Now, focusing in on the topology, ARKit provides you with a detailed 3D mesh of the face fitted in real time to the dimensions, the shape, and matching the facial expression of the user.
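
The setup steps described here can be sketched in Swift roughly as follows. This is a minimal sketch, not the sample code that accompanies the session: the class name `FaceTrackingController` and its `start()` method are hypothetical, while `ARSession`, `ARFaceTrackingConfiguration`, and `ARFaceAnchor` are the ARKit types named in the talk. It assumes the app's Info.plist already contains a camera usage description.

```swift
import ARKit

// Hypothetical wrapper class, for illustration only.
final class FaceTrackingController: NSObject, ARSessionDelegate {
    let session = ARSession()

    func start() {
        // Face tracking needs the front-facing depth camera, so check
        // availability before running the session.
        guard ARFaceTrackingConfiguration.isSupported else {
            print("Face tracking is not supported on this device")
            return
        }

        let configuration = ARFaceTrackingConfiguration()
        // Lighting estimation is one of the basic properties on the configuration.
        configuration.isLightEstimationEnabled = true

        session.delegate = self
        session.run(configuration, options: [.resetTracking, .removeExistingAnchors])
    }

    // Called when ARKit adds anchors; a face shows up as an ARFaceAnchor.
    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        for case let faceAnchor as ARFaceAnchor in anchors {
            print("Face detected with transform \(faceAnchor.transform)")
        }
    }

    // Called as the tracked face moves or changes expression.
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let faceAnchor as ARFaceAnchor in anchors {
            // The last column of the transform holds the face position
            // in world coordinates.
            let position = faceAnchor.transform.columns.3
            print("Face position: \(position.x), \(position.y), \(position.z)")
        }
    }
}
```

In an app you would keep a strong reference to the controller (for example as a property of a view controller) and call `start()` once the view appears.
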
This mesh data is available in a couple of different forms. The first is the ARFaceGeometry class. This is essentially a triangle mesh, so an array of vertices, triangle indices, and texture coordinates, which you can take to visualize in your renderer. ARKit also provides an easy way to visualize the mesh in SceneKit through the ARSCNFaceGeometry class, which defines a geometry object that can be attached to any SceneKit node.

Now aside from the geometry mesh, we also have something that we call blend shapes. Blend shapes provide a high-level model of the current facial expression. They're a dictionary of named coefficients representing the pose of specific features (your eyelids, eyebrows, jaw, nose, et cetera), all relative to their neutral position. They're expressed as floating point values from zero to one, and they're all updated live. So you can use these blend shape coefficients to animate, or rig, a 2D or 3D character in a way that directly mirrors the user's facial movements. Just to give you an idea of what's available, here's the list of blend shape coefficients. So each of these is tracked and updated independently: the right and left eyebrows, the position of your eyes, your jaw, the shape of your smile, et cetera.
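
As a rough illustration of the face mesh and blend shapes, the sketch below attaches an `ARSCNFaceGeometry` to the node SceneKit creates for the face anchor, keeps it fitted on every update, and reads two blend shape coefficients. The class name `FaceMeshRenderer` is hypothetical, and the choice of `.jawOpen` and `.eyeBlinkLeft` is only an example; the sketch assumes an `ARSCNView` whose delegate is set to an instance of this class.

```swift
import ARKit
import SceneKit

// Hypothetical ARSCNView delegate, for illustration only.
final class FaceMeshRenderer: NSObject, ARSCNViewDelegate {

    // Provide a node carrying the face mesh when a face anchor is added.
    func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {
        guard anchor is ARFaceAnchor,
              let device = renderer.device,
              let faceGeometry = ARSCNFaceGeometry(device: device) else {
            return nil
        }
        // Draw the mesh as a wireframe so the topology is visible.
        faceGeometry.firstMaterial?.fillMode = .lines
        return SCNNode(geometry: faceGeometry)
    }

    // Refit the mesh and read the expression each time the anchor updates.
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let faceAnchor = anchor as? ARFaceAnchor,
              let faceGeometry = node.geometry as? ARSCNFaceGeometry else {
            return
        }
        // faceAnchor.geometry is the ARFaceGeometry triangle mesh.
        faceGeometry.update(from: faceAnchor.geometry)

        // Blend shapes are a dictionary of coefficients in the range 0...1.
        let jawOpen = faceAnchor.blendShapes[.jawOpen]?.floatValue ?? 0
        let blinkLeft = faceAnchor.blendShapes[.eyeBlinkLeft]?.floatValue ?? 0
        print("jawOpen: \(jawOpen), eyeBlinkLeft: \(blinkLeft)")
    }
}
```

To drive a character instead of a mesh, the same coefficients could be copied onto matching morph targets of your own model; how those targets are named is up to the model and is not something ARKit defines.
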

Something that goes hand-in-hand with rendering the face geometry or animating a 3D character is realistic lighting. And by using your face as a light probe, an ARSession that's running face detection can provide you with a directional light estimate, representing the light intensity and its direction in world space. For most apps, this lighting vector and intensity are more than enough. But ARKit also provides second-degree spherical harmonics coefficients, representing the intensity of light detected in the scene. So for apps with more advanced requirements, you can take advantage of this as well.

And a couple more features to mention. In addition to the front-facing camera image with color data, ARKit can also provide your app with the front-facing depth image as well. And I'm showing this here as a greyscale image. The data itself is provided as an AVDepthData object, along with a timestamp. But it's important to note, this is being captured at 15 Hz, which is a lower frequency than the color image, which ARKit captures at 60 Hz.
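
The lighting and depth data can be read from each frame along these lines. This is a sketch under assumptions: the function name `inspect(frame:)` is hypothetical, and it assumes the session is running a face tracking configuration with light estimation enabled. Because depth arrives less often than color, the depth property is expected to be nil on many frames.

```swift
import ARKit
import AVFoundation

// Hypothetical helper, for illustration only. Call it with frames
// delivered by a session that is running face tracking.
func inspect(frame: ARFrame) {
    // With face tracking, the light estimate is a directional estimate.
    if let light = frame.lightEstimate as? ARDirectionalLightEstimate {
        let direction = light.primaryLightDirection   // direction in world space
        let intensity = light.primaryLightIntensity   // intensity of that light
        print("Light direction: \(direction), intensity: \(intensity)")

        // The spherical harmonics coefficients are packed as raw Float values.
        // Second-degree harmonics have 9 coefficients per color channel.
        let coefficientCount = light.sphericalHarmonicsCoefficients.count
            / MemoryLayout<Float>.size
        print("Spherical harmonics coefficients: \(coefficientCount)")
    }

    // Depth is captured at a lower rate than color, so skip frames without it.
    if let depth = frame.capturedDepthData {
        let depthMap = depth.depthDataMap
        let width = CVPixelBufferGetWidth(depthMap)
        let height = CVPixelBufferGetHeight(depthMap)
        print("Depth \(width)x\(height) at \(frame.capturedDepthDataTimestamp)")
    }
}
```

A natural place to call this is the `session(_:didUpdate:)` delegate method that receives each new `ARFrame`.
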

And finally, a feature that can be used with any ARKit session, but is particularly interesting with face tracking, is audio capture. Now it's disabled by default, but if enabled, then while your ARSession is running, it will capture audio samples from the microphone, and deliver a sequence of CMSampleBuffers to your app. So this is useful if you want to capture the user's face and their voice at the same time.
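
Enabling audio capture might look like the sketch below. The names `makeAudioEnabledConfiguration()` and `AudioCaptureDelegate` are hypothetical; the sketch assumes the app's Info.plist contains a microphone usage description, since the session records from the microphone.

```swift
import ARKit
import CoreMedia

// Hypothetical helper: a face tracking configuration with audio turned on.
func makeAudioEnabledConfiguration() -> ARFaceTrackingConfiguration {
    let configuration = ARFaceTrackingConfiguration()
    // Audio capture is off by default and has to be enabled explicitly.
    configuration.providesAudioData = true
    return configuration
}

// Hypothetical session delegate that receives the audio sample buffers.
final class AudioCaptureDelegate: NSObject, ARSessionDelegate {
    func session(_ session: ARSession,
                 didOutputAudioSampleBuffer audioSampleBuffer: CMSampleBuffer) {
        // Each buffer carries a batch of audio samples from the microphone.
        let sampleCount = CMSampleBufferGetNumSamples(audioSampleBuffer)
        print("Received \(sampleCount) audio samples")
    }
}
```

From here the buffers could be handed to whatever writes your recording, so that the captured voice stays aligned with the captured face.
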

For more information about face tracking, and links to the sample code, please visit our Developer website at developer.apple.com/arkit. Thank you for watching!
